Abstract

Anterior cingulate cortex (ACC) response during attentional control in the context of task-irrelevant emotional faces is a promising biomarker of cognitive behavioral therapy (CBT) outcome in patients with social anxiety disorder (SAD). However, it is unclear whether this biomarker extends to major depressive disorder (MDD) and is specific to CBT outcome. In the current study, 72 unmedicated patients with SAD (n = 39) or MDD (n = 33) completed a validated emotional interference paradigm during functional magnetic resonance imaging before treatment. Participants viewed letter strings superimposed on task-irrelevant threat and neutral faces under low perceptual load (high interference) and high perceptual load (low interference). Biomarkers comprised anatomy-based rostral ACC (rACC) and dorsal ACC (dACC) response to task-irrelevant threat (>neutral) faces under low and high perceptual load. Patients were randomly assigned to 12 weeks of CBT or supportive therapy (ST) (ClinicalTrials.gov identifier: NCT03175068). Clinician-administered measures of social anxiety and depression severity were obtained at baseline and every 2 weeks throughout treatment (7 assessments total) by an assessor blinded to the treatment arm. A composite symptom severity score was submitted to latent growth curve models. Results showed more baseline rACC activity to task-irrelevant threat>neutral faces under low, but not high, perceptual load predicted steeper trajectories of symptom improvement throughout CBT or ST. Post-hoc analyses indicated this effect was driven by subgenual ACC (sgACC) activation. Findings indicate ACC activity during attentional control may be a transdiagnostic neural predictor of general psychotherapy outcome.

Introduction

Social anxiety disorder (SAD) and major depressive disorder (MDD) are prevalent, impairing internalizing disorders [1]. Effective treatment can reduce this burden and cognitive behavioral therapy (CBT) is considered the ‘gold standard’ psychotherapy for internalizing disorders [2]. Yet, CBT outcome varies and many remain clinically symptomatic following treatment [3,4,5], thereby highlighting the need to delineate markers that prospectively identify who is most likely to benefit from CBT.

An important construct in understanding heterogeneity in CBT outcomes is attentional control. Attentional control facilitates goal-directed behavior by inhibiting task-irrelevant information that competes with goals and is considered a form of implicit emotion regulation [6]. Patients with SAD and MDD demonstrate deficiencies in attentional control over negative information, resulting in attentional bias for negative stimuli [7, 8]. CBT utilizes an amalgam of techniques that draw on executive functions including attentional control [9] and is hypothesized to strengthen top-down regulatory processes [10]. Thus, transdiagnostic baseline individual differences in attentional control mechanisms in the context of task-irrelevant negative stimuli (i.e., emotional interference) may interact with CBT-specific techniques to predict symptom improvement.

The anterior cingulate cortex (ACC) plays a key role in attentional control (i.e., resolving emotional interference) [11,12,13] and subregion specialization constitutes the dorsal ACC (dACC), which is involved in conflict monitoring and the deployment of cognitive control, and rostral ACC (rACC), which is involved in emotional conflict resolution and the assessment of motivationally relevant information [11,12,13]. Separate lines of research indicate ACC is a promising biomarker of CBT outcomes. In SAD, more baseline dACC activity in response to task-irrelevant (>task-relevant) emotional faces [14] and task-irrelevant threat (>neutral) faces [15] has been shown to correspond with greater symptom improvement following CBT. Also, more baseline dACC activity in response to task-irrelevant numbers during a cognitive interference paradigm predicts greater reduction in social anxiety severity following CBT with or without pharmacotherapy [16].

We are not aware of emotional interference studies that have evaluated ACC as a predictor of CBT outcome in MDD. However, meta-analytic studies suggest pre-treatment rACC engagement is a general marker of treatment response in MDD [17, 18]. For example, more rACC activity at rest foretells greater symptom improvement following pharmacological [19], sleep deprivation [20], and neuromodulation [21] interventions. Findings regarding ACC as a predictor of CBT response in MDD, however, are mixed. One study found less baseline dACC response to sad faces [22] corresponded with more symptom improvement following CBT. Other studies report similar relations, though in subgenual ACC (sgACC), an rACC subregion [23]. Specifically, less sgACC activity during rest [24, 25] or in response to self-referential stimuli [26, 27] predicts more symptom improvement. The ACC is involved in various functions beyond attentional control (e.g., self-referential processing [28], emotion perception [29]), which likely contribute to mixed results along with other methodological differences between studies. Even so, findings suggest ACC serves as a treatment outcome marker in MDD. An important next step is determining whether ACC as it relates to attentional control is a transdiagnostic marker of CBT outcome.

When evaluating attentional control, it is important to consider task difficulty as attention is a limited capacity system [30, 31]. According to load theory, when perceptual load is low (e.g., task is easy to perform), emotional interference is high as residual attentional resources are ‘left over’ to process task-irrelevant information. Conversely, when perceptual load is high (e.g., task is difficult to perform), emotional interference is low as resources are restricted [32]. Therefore, when evaluating attentional control in the context of negative ‘distractors’, individual differences are likely to be observed when perceptual load is low. In support, associations have been observed between rACC or dACC activity to threat (>neutral) face distractors, and self-reported trait anxiety, behavioral inhibition, and subjective attentional control in healthy participants or patients with internalizing disorders under low, but not high, perceptual load [33,34,35]. This said, when evaluating CBT outcome in SAD, more baseline dACC activity to threat (>neutral) face distractors under high, but not low, load predicted greater pre-to-post CBT decrease in social anxiety severity [15]. Findings suggest variance in ACC to threat distractors under low load is sensitive to individual differences in constructs that factor into psychopathology, though variance in the more cognitively effortful high load condition may also interact with CBT.

The current study sought to extend previous findings by examining whether ACC as a predictor of symptom improvement was transdiagnostic and unique to CBT versus psychotherapy in general. Specifically, CBT involves a range of strategies to reduce maladaptive cognitions and behaviors in addition to common factors shared across psychotherapies such as rapport and treatment adherence [36]. Therefore, supportive therapy (ST) was included as a ‘common factors’ comparator treatment arm to examine whether ACC interacted with CBT-specific techniques or common factors. Also, we examined whether baseline dACC or rACC activity to threat (>neutral) face distractors predicted trajectory of symptom change over the course of treatment as opposed to one endpoint (i.e., post-treatment symptom severity), which does not allow for the characterization of patterns of change over time (e.g., [37, 38]). This may be particularly important when comparing two psychotherapies, as the shape of symptom trajectories may differ even if post-treatment symptom severity is the same.

Based on prior CBT-related findings in SAD, we hypothesized that greater baseline dACC activity in response to threat distractors under high perceptual load would correspond with steeper trajectories of symptom improvement. In addition, we hypothesized that the relation between baseline ACC and symptom trajectories would not differ between patients with SAD and MDD. Finally, as CBT explicitly targets regulatory processes [9], we expected ACC would predict symptom improvement for patients randomized to CBT, but not ST.

Materials and methods

Participants

Seventy-two treatment-seeking patients with a principal diagnosis of SAD or MDD were recruited as part of an ongoing parallel-group randomized controlled trial (1:1 schedule) examining neural predictors and mechanisms of CBT and ST treatment response (ClinicalTrials.gov Identifier: NCT03175068). All participants were required to be between 18 and 65 years old and have a diagnosis of SAD (n = 39) or MDD (n = 33) based on DSM-5 diagnostic criteria. Patients were also required to either surpass a symptom severity threshold (i.e., a Liebowitz Social Anxiety Scale (LSAS; [39]) score ≥60 for SAD patients; a Hamilton Depression Rating Scale (HAMD; [40]) score ≥17 or a Beck Depression Inventory-II [41] score ≥16 for MDD patients) or report clinically significant levels of impairment (based on diagnostic interview). Regarding demographic characteristics, the average age for SAD patients was 25.51 (SD = 7.50), and 61.5% identified as women. For MDD patients, the average age was 29.82 (SD = 10.33) and 69.7% identified as women.

Although comorbidity was allowed, patients with MDD were not permitted to have comorbid SAD and vice-versa. See Supplementary Materials for exclusion criteria. All participants tested negative for substance use and pregnancy before the MRI scan.

Participants were recruited between September 2017 and December 2020 from a local outpatient clinic and community. After obtaining informed consent, a psychiatric interview was conducted consisting of the Structured Clinical Interview for DSM-5 [42], LSAS, and HAMD. A Best-Estimate/Consensus Panel of at least three study staff members determined study eligibility and participants were informed of assignment to CBT or ST by a non-treating clinician. All study and treatment procedures were conducted at the University of Illinois at Chicago and were approved by the university Institutional Review Board and complied with the Helsinki Declaration. All participants were compensated for their time.

Treatment procedures

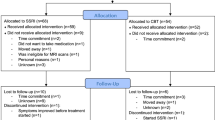

Patients were randomized to receive either 12 weeks of CBT or 12 weeks of ST using a covariate adaptive randomization (i.e., minimization) approach. See Fig. 1 for information regarding study flow and patient retention. As seen, 37 CBT patients and 35 ST patients completed treatment and all baseline and follow-up measures for analyses.

At the time of interim analysis, the majority of the proposed final patient sample (n = 140) had completed treatment before COVID-19, thereby reducing possible confounds. Also, the sample size for each treatment arm exceeds the sample sizes of previous studies that either observed an effect of ACC activation during attentional control on CBT treatment outcome in patients with SAD (ns: 21, 32; [14, 15]) or observed treatment differences (i.e., CBT with versus without SSRIs) in the relation between ACC activity and SAD symptom improvement (ns per arm: 23, 24; [16]).

Patients received 12 weekly 60-min psychotherapy sessions from trained PhD-level licensed clinical psychologists. Participants randomized to CBT received manualized treatment for SAD or MDD (e.g., [43,44,45]); the core content of sessions involved psychoeducation, cognitive restructuring, behavioral activation (for MDD), in vivo exposures (for SAD), and relapse prevention. ST resembled client-centered therapy (e.g., [46, 47]) and emphasized reflective listening and elicitation of affect as appropriate. See Supplementary Materials for information regarding treatment fidelity and competency.

Clinical symptoms

The LSAS and HAMD served as primary outcome measures and were administered to patients by a trained clinician blinded to the treatment arm before treatment, as well as at Sessions 2, 4, 6, 8, 10, and 12 (i.e., 7 time points) to assess social anxiety and depression severity, respectively. As expected, patients with SAD exhibited higher social anxiety symptoms, t(70) = 10.92, p < 0.001, and patients with MDD exhibited higher depression symptoms, t(70) = 3.45, p = 0.001, at baseline. Therefore, to examine clinical change across treatment, we utilized a composite score of patients’ symptoms by summing the LSAS and HAMD total scores to create an outcome measure that was sensitive to clinical change for both diagnostic groups. Standardized z scores are not recommended in longitudinal studies, as standardization at each time point prevents examination of mean-level changes [48]. Therefore, consistent with other treatment-outcome studies combining different symptom measures [49, 50], we utilized the proportion of maximum scaling method [51] to transform LSAS and HAMD before creating composite scores for each assessment.
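The proportion of maximum scaling step described above can be sketched as follows. This is an illustration only: the function names are hypothetical, the LSAS range of 0 to 144 follows from its 24 items rated 0 to 3 for both fear and avoidance, and the HAMD range of 0 to 52 assumes the 17-item version, which the text does not specify.

```python
def poms(score: float, scale_min: float, scale_max: float) -> float:
    """Proportion of maximum scaling: rescale a raw score to the 0-1 range."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must exceed scale_min")
    return (score - scale_min) / (scale_max - scale_min)


# Possible score ranges (the HAMD range is an assumption: 17-item version).
LSAS_RANGE = (0, 144)
HAMD_RANGE = (0, 52)


def composite_score(lsas_total: float, hamd_total: float) -> float:
    """Sum of the two rescaled totals, so the composite ranges from 0 to 2."""
    return poms(lsas_total, *LSAS_RANGE) + poms(hamd_total, *HAMD_RANGE)


if __name__ == "__main__":
    # Hypothetical patient with LSAS = 72 and HAMD = 13 at one assessment.
    print(composite_score(72, 13))
```

Because each measure is rescaled against its fixed possible range rather than against the sample distribution at each assessment, mean-level change over time is preserved, which is the property that z scores would lose.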

Emotional faces interference task

The emotional faces interference task (EFIT) has been used in prior studies [15, 33, 35]. In brief, participants were presented with a string of six letters superimposed on a task-irrelevant face distractor and instructed to identify target letters (N or X) via button press as quickly and accurately as possible. Task-irrelevant faces consisted of fearful, angry, and neutral expressions from eight different individuals from a standardized stimulus set [52]. In low perceptual load trials, the string was comprised entirely of target letters whereas under high perceptual load, the string included a single target letter and five non-target consonants (e.g., HKMWZ) in randomized order. There were two image acquisition runs, each comprising 12 blocks of 5 trials. A mixed block/event-related design was employed whereby perceptual load (low vs. high) varied across blocks and the facial expressions varied within blocks on a trial-by-trial basis. Images were presented for 200 ms followed by a fixation cross presented for 1800 ms. Within blocks, the intertrial interval varied randomly between 2 and 6 s; trials between blocks were separated by 4–8 s.
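The load manipulation and trial counts described above can be sketched in a few lines. This is a hedged illustration, not the task code used in the study: the function and constant names are hypothetical, and it assumes that a low-load string repeats a single target letter six times and that the five non-target consonants are exactly those given in the example (H, K, M, W, Z).

```python
import random

TARGETS = ("N", "X")
NON_TARGETS = ("H", "K", "M", "W", "Z")  # assumption: taken from the example in the text

RUNS = 2
BLOCKS_PER_RUN = 12
TRIALS_PER_BLOCK = 5
TOTAL_TRIALS = RUNS * BLOCKS_PER_RUN * TRIALS_PER_BLOCK  # 2 * 12 * 5 = 120


def make_letter_string(load: str, target: str, rng: random.Random) -> str:
    """Build the six-letter string shown over the face distractor."""
    if target not in TARGETS:
        raise ValueError("target must be 'N' or 'X'")
    if load == "low":
        # Low perceptual load: the string consists entirely of the target letter.
        return target * 6
    if load == "high":
        # High perceptual load: one target among five non-targets, random order.
        letters = [target, *NON_TARGETS]
        rng.shuffle(letters)
        return "".join(letters)
    raise ValueError("load must be 'low' or 'high'")


if __name__ == "__main__":
    rng = random.Random(0)
    print(make_letter_string("low", "N", rng))
    print(make_letter_string("high", "X", rng))
    print(TOTAL_TRIALS)
```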

fMRI data acquisition, preprocessing, and analysis

Scanning during the EFIT task was conducted on a 3.0 Tesla MR 750 scanner (General Electric Healthcare; Waukesha, WI) using a standard radiofrequency coil. Blood oxygen-level dependent (BOLD) functional images were acquired using a gradient-echo echo-planar imaging sequence with the following parameters: repetition time (TR) = 2 s, echo time (TE) = 25 ms, flip angle = 90°, field of view = 22 × 22 cm, acquisition matrix 64 × 64; 44 axial, 3-mm-thick slices with no gap. The first 4 volumes from each run were discarded to allow for T1 equilibration effects. For anatomical localization, a high-resolution, T1-weighted volumetric anatomical scan was acquired.

Conventional preprocessing steps were performed using the Statistical Parametric Mapping (SPM12) software package (Wellcome Trust Centre for Neuroimaging, London; www.fil.ion.ucl.ac.uk/spm). Images were temporally corrected to account for differences in slice time collection, spatially realigned to the first image of the first run, coregistered to the anatomical image, normalized to Montreal Neurological Institute (MNI) space using warping based on the anatomical image, resampled to 2 × 2 × 2 mm voxels, and smoothed with an 8 mm isotropic Gaussian kernel.

A general linear model identifying the low and high perceptual load blocks for angry, fearful, and neutral faces was applied to the time series, convolved with the canonical hemodynamic response function and with a 128 s high-pass filter. Nuisance regressors comprising six motion parameters were included to correct for motion artifacts. To maximize the signal across threat content, angry and fearful faces were collapsed. Contrasts of interest were task-irrelevant threat (i.e., fearful/angry) faces>neutral faces under low (i.e., Threat Low>Neutral Low) and high (i.e., Threat High>Neutral High) perceptual load conditions. A priori regions of interest (ROI) were used to test hypotheses. The Automatic Anatomical Labeling (AAL) atlas 3 [53] was used to generate masks (Supplementary Fig. 1) such that rACC comprised sgACC and pregenual ACC (pgACC) (search volume = 21,704 mm³). Supracallosal ACC and AAL 3 median cingulate anterior to y = 0 delineated dACC (search volume = 19,256 mm³). MarsBaR [54] was used to extract activation (β weights, arbitrary units [a.u.]) from all ROIs, which were submitted to tests in Statistical Package for the Social Sciences (Chicago, IL; version 26) and Amos (version 27).

Analytic plan

Analyses regarding task performance (i.e., EFIT accuracy and reaction time) are presented in Supplementary Materials.

To evaluate whether contrasts of interest probed ACC in general, whole-brain analyses were conducted. See Supplementary Materials for the analytic plan.

Hypotheses were tested using a series of latent growth curve models in treatment completers. First, in our base model we examined if internalizing composite symptom scores changed over the course of treatment and whether the treatment arm (CBT vs. ST) predicted symptom trajectory. To determine whether symptom improvement followed linear or non-linear trajectories of improvement, linear, quadratic, and cubic symptom trajectories were examined. Log-linear trajectories were also examined to account for trajectories indicating sudden gains. Second, we examined whether pre-treatment rACC and/or dACC activity to threat (>neutral) face distractors under low and high perceptual load predicted the intercept (i.e., baseline symptom severity) and slope (i.e., trajectories of symptom change) over the course of treatment (see Supplementary Fig. 2). Third, we examined whether the treatment arm moderated the impact of baseline rACC and/or dACC response on symptom intercept and slope. Finally, to examine ACC as a transdiagnostic predictor, we examined whether diagnosis (SAD vs. MDD) moderated the impact of baseline ACC response on symptom intercept and slope (see Supplementary Fig. 3 for model). A Bonferroni correction (adjusted p = 0.0125) was implemented for all primary analyses to control for the number of examined ROIs (i.e., rACC and dACC under both high and low perceptual load).
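For readers less familiar with latent growth curve models, the linear model with a baseline ACC predictor described above might be written as follows. This is a sketch only: the symbols, the time coding λ_t = 0, …, 6 across the seven assessments, and the placement of treatment arm and age as covariates in both equations are illustrative assumptions rather than the reported model specification.

```latex
\begin{aligned}
y_{it} &= \eta_{0i} + \lambda_t\,\eta_{1i} + \varepsilon_{it}, \qquad \lambda_t = 0, 1, \dots, 6 \\
\eta_{0i} &= \alpha_0 + \gamma_{01}\,\mathrm{ACC}_i + \gamma_{02}\,\mathrm{Arm}_i + \gamma_{03}\,\mathrm{Age}_i + \zeta_{0i} \\
\eta_{1i} &= \alpha_1 + \gamma_{11}\,\mathrm{ACC}_i + \gamma_{12}\,\mathrm{Arm}_i + \gamma_{13}\,\mathrm{Age}_i + \zeta_{1i}
\end{aligned}
```

Here y_{it} is the composite symptom score for patient i at assessment t, η_{0i} is the latent intercept (baseline severity), and η_{1i} is the latent slope (rate of change). Under this coding, a negative γ_{11} means that more baseline ACC activity goes with a steeper symptom decrease. The moderation models would add a product term such as ACC_i × Arm_i or ACC_i × Diagnosis_i to each of the last two equations.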

Given evidence baseline sgACC activity predicts CBT outcome in MDD [24,25,26,27], post hoc analysis was performed for significant rACC results to evaluate whether a specific subregion explained findings. Specifically, both sgACC and pgACC were simultaneously examined as predictors of the intercept and slope of symptom change within the same model to examine the unique influences of rACC subdivisions. Finally, as a test of robustness, post hoc analysis was conducted for significant findings to see if relations between ACC response and symptom trajectories were maintained when statistically controlling for baseline symptom composite scores.

Results

Task performance

Accuracy was greater and reaction time faster across participants when detecting task-relevant target letters under low relative to high perceptual load. Results are in Supplementary Materials.

Intent to treat analysis

Analyses revealed racial identity and baseline symptom differences between treatment completers and non-completers, such that non-completers were more likely to be Asian and to have lower depression symptoms at baseline. No other demographic or clinical differences were observed. See Supplementary Materials and Supplementary Table 1 for details.

Participant characteristics

Patient demographic and clinical characteristics are presented in Table 1. As seen, patients randomly assigned to CBT or ST did not differ in any demographic characteristics, principal diagnosis, or symptom severity across any of the assessments. Regarding diagnostic differences, SAD patients were younger than MDD patients, t(70) = −2.04, p = 0.045, but did not differ from MDD patients on any other demographic characteristics (ps ≥ 0.13). Therefore, patient age was entered as a covariate for all analyses. Compared to MDD patients, SAD patients demonstrated higher symptom severity (i.e., composite score) across all assessments, ts = 3.08–6.56, all p’s ≤ 0.003.

Whole-brain analyses

One-sample t-tests for Threat Low>Neutral Low and Threat High>Neutral High were performed to determine whether contrasts of interest probed ACC. See full results in Supplementary Materials, Supplementary Table 2, and Supplementary Fig. 4.

Preliminary analyses

Linear, quadratic, cubic, and log-linear growth models of symptom change were fit to the data. The linear growth model was determined to be the best fit and was, therefore, used in all subsequent analyses (see Supplementary Materials, Supplementary Table 3, and Supplementary Fig. 5 for model comparison). Results indicated that means for both symptom intercept, M = 0.77, SE = 0.11, p < 0.001, and slope, M = −0.05, SE = 0.017, p = 0.003, were significant, suggesting symptoms linearly decreased throughout treatment. Treatment arm was not associated with symptom intercept, β = −0.02, p = 0.87, or slope, β = 0.19, p = 0.16, suggesting patients in both arms demonstrated similar levels of baseline symptoms and similar symptom trajectories (see Fig. 2). Patient age was not associated with symptom intercept, β = −0.19, p = 0.13, or slope, β = −0.04, p = 0.79, suggesting there was not an effect of age.

Baseline neural activity and symptom change

Next, a series of analyses examining baseline ACC as a predictor of symptom change was conducted. See Table 2 for the result summary. Results indicated that greater baseline rACC response under low load was negatively associated with symptom slope, such that greater rACC activation predicted a steeper trajectory of symptom decrease, β = −0.39, p = 0.002. Post-hoc analysis indicated that this main effect of rACC response on symptom slope was maintained when statistically controlling for baseline symptoms, β = −0.31, p = 0.027. No other main effects of neural activation on symptom slope (all p’s ≥ 0.02) survived Bonferroni correction.
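The Bonferroni threshold applied to the primary analyses can be checked with a short calculation. The sketch below uses hypothetical function names; the only inputs taken from the text are the four tests (rACC and dACC under low and high load), the reported p = 0.002 for rACC under low load, and the smallest reported p value among the remaining effects (0.02). A family-wise alpha of 0.05 is assumed, which is consistent with the stated adjusted p of 0.0125.

```python
ALPHA = 0.05   # assumed family-wise alpha
N_TESTS = 4    # rACC and dACC, each under low and high perceptual load


def bonferroni_threshold(alpha: float = ALPHA, n_tests: int = N_TESTS) -> float:
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / n_tests


def survives_correction(p_value: float) -> bool:
    """True if a p value falls below the corrected threshold."""
    return p_value < bonferroni_threshold()


if __name__ == "__main__":
    print(bonferroni_threshold())      # corrected threshold
    print(survives_correction(0.002))  # rACC under low load
    print(survives_correction(0.02))   # smallest p among the other effects
```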

Post hoc analyses were conducted to examine whether rACC subdivisions (i.e., subgenual, pregenual) under low load were unique predictors of symptom slope. Results indicated greater sgACC activation, β = −0.39, p = 0.009, but not pgACC activation, β = −0.15, p = 0.32, was uniquely associated with steeper slope of symptom change. Post-hoc findings showed the sgACC effect was maintained when controlling for baseline symptom severity, β = −0.38, p = 0.02 (see Fig. 3).

A Anatomic mask for sgACC used as region of interest. B Figure depicting projected trajectories of symptom change (across Sessions 2–12) as a function of patient baseline sgACC activation to threat (>neutral) emotional face distractors under low perceptual load while statistically controlling for covariates (i.e., treatment arm, patient age, pregenual anterior cingulate cortex activation, and baseline symptom severity). Simple slopes are depicted for low (−1 SD), average, and high (+1 SD) levels of sgACC activation.

Moderation by treatment arm

Primary analyses were re-conducted with the interaction between neural activation and arm included to test whether the treatment arm moderated the impact of baseline ACC on symptom improvement. None of the ROI × arm interactions significantly predicted symptom slope (all p’s ≥ 0.28).

Moderation by diagnosis

Finally, primary analyses were re-conducted with patient diagnosis and the ROI × diagnosis interaction included to examine whether the relation between baseline ACC and symptom improvement was disorder specific. None of the ROI × diagnosis interactions predicted symptom slope (all p’s ≥ 0.31).

Discussion

The primary aim of the current study was to examine whether more baseline ACC activity during attentional control predicted symptom improvement over the course of CBT or ST for patients with SAD or MDD. Behavioral findings showed the manipulation of task difficulty was effective as accuracy was higher and reaction times faster for low perceptual load (i.e., high emotional interference) relative to high perceptual load (i.e., low emotional interference) conditions. In addition, results revealed greater baseline ACC activation to threat (>neutral) face distractors under low, but not high, perceptual load was associated with a steeper trajectory of symptom improvement (i.e., rate of change) regardless of principal diagnosis or treatment modality. Therefore, findings partially support hypotheses.

Our hypothesis that more baseline ACC activity to threat distractors would predict greater symptom improvement over the course of psychotherapy was supported. Findings are consistent with previous SAD studies [14,15,16]. Evidence that ACC as a predictor of psychotherapy outcome extends to MDD highlights the possibility that ACC activity during attentional control may be a transdiagnostic biomarker of treatment response. Findings build upon an increasing body of literature that focuses on neural correlates of treatment response across disorders, rather than within categorically defined disorders [55, 56]. It will be important for future studies to determine if our preliminary results replicate in more diagnostically heterogeneous samples.

While the direction of the effect was as expected, our hypotheses regarding the specific ACC subregion and perceptual load condition were not supported, as a greater rate of symptom improvement was predicted by more rACC, but not dACC, activity to threat distractors under low, but not high, perceptual load. This may in part be due to methodological differences, as prior studies examined dACC response in the context of task-irrelevant numbers [16] or used a whole-brain regression approach to identify regions during emotional interference that foretold pre-to-post symptom improvement [14, 15]. In the current study, we focused on a priori ROIs, examined trajectories of symptom change, included ST for comparison, and included patients with MDD. That said, current findings are not entirely unexpected, as links between rACC response to threat distractors and participant characteristics (e.g., trait anxiety, behavioral inhibition, self-reported attentional control) have been observed under low, but not high, perceptual load [33,34,35], suggesting the high emotional interference condition may be sensitive to individual differences. Furthermore, the specificity of findings to rACC, rather than dACC, under low load is not surprising, given rACC’s role in resolving emotional conflict [11,12,13]. Larger, well-powered studies are needed to replicate and confirm the reliability of findings.

Post-hoc analysis suggests the observed rACC effect was driven by sgACC. The sgACC is strongly connected with regions important for emotion processing (e.g., amygdala) and is known to engage during emotional conflict [11, 12, 57]. Subgenual ACC engagement may reflect top-down inhibition of amygdala response to task-irrelevant emotional stimuli or bottom-up amygdala habituation to task-irrelevant stimuli leading to sgACC activation (i.e., ‘disinhibited’ sgACC response; [57]). Although further study is needed to clarify the underpinnings of sgACC as a predictor of symptom change, evidence of sgACC as a biomarker is consistent with studies showing sgACC activation during rest [24, 25] or self-referential emotion processing [26, 27] corresponds with CBT response in depressed patients. Methodological differences across studies do not permit comparison with the current study and likely contribute to differences in the directionality of sgACC findings. Even so, our results indicate sgACC activity during emotional interference may be a transdiagnostic marker of treatment outcome.

We expected ACC activity would predict CBT, but not ST, outcome. However, there was no effect of treatment arm on symptom trajectories and no interaction with baseline ACC activity. Current findings raise questions regarding mechanisms of change for CBT. CBT-specific strategies aim to improve patients’ emotion regulation strategies [9] and CBT is hypothesized to exert its effect via strengthening top-down regulation [10]. However, meta-analyses show post-treatment symptom improvement in CBT is comparable to ST [58], though CBT exhibits superior long-term efficacy [59, 60], highlighting the possibility that CBT may contribute to therapeutic gains via both common factors shared across psychotherapies (e.g., therapeutic alliance, treatment adherence) and CBT-specific techniques (e.g., emotion regulation skills) [61]. Accordingly, our findings also suggest that CBT may, at least in part, contribute to symptom improvement via common factors as ACC appears to interact with common factors shared across psychotherapies to predict individual differences in treatment response. This said, the current study focused on ACC as a predictor of treatment change. Future research is needed to examine whether other baseline neural markers interact with CBT-specific strategies to predict symptom improvement.

The current study has limitations that should be acknowledged. One limitation is the utilization of neutral faces as a ‘baseline’ comparison, given increasing evidence that emotionally ambiguous faces are not truly ‘neutral’, but elicit aberrant ACC activation in patients [62]. Also, the lack of a waitlist control group or pharmacotherapy arm precludes firm conclusions from being drawn regarding the extent to which the relation between pre-treatment ACC activation and symptom improvement can be specifically ascribed to psychotherapy response. Therefore, conclusions should remain tentative pending replication in studies utilizing such controls. Findings may not generalize to patients with other internalizing conditions. Patients with MDD were older than those with SAD; although age was covaried in analysis and there was no evidence of an age effect, we cannot rule out the possibility that age impacted findings. Depression severity was mild overall; therefore, results may not generalize to a severely depressed population. Also, treatment non-completers had less baseline depression than completers, suggesting low depression levels may have factored into attrition. Furthermore, task-irrelevant positive faces were not included, which may have reduced our ability to detect diagnostic-specific effects. In addition, the study focused on ACC; it is possible that baseline activity in other brain regions predicts treatment outcome. Lastly, the lack of a healthy comparator group in the current study precludes conclusions from being drawn regarding whether baseline ACC activation connotes aberrant response.

Despite important limitations, preliminary findings indicate more rACC/sgACC engagement during threat interference under low, but not high, perceptual load predicts symptom improvement over the course of psychotherapy in SAD and MDD, pointing to a transdiagnostic neural marker of treatment outcome. Though current results suggest this pattern of activation may predict positive response to psychotherapy in general, future studies with larger sample sizes and control groups are needed to confirm the predictive utility of this biomarker as a general versus modality-specific marker of treatment response. Finally, the objective of the current study was to contribute to theory regarding transdiagnostic neural predictors of psychotherapy outcome in light of gaps in the literature and previous work that points to the relevance of ACC as a predictor of treatment outcome. However, it will be important that future studies take a data-driven approach (e.g., machine learning) in large samples to determine if ACC predicts treatment outcome at the single-subject level.

References

Wittchen HU, Jacobi F, Rehm J, Gustavsson A, Svensson M, Jönsson B, et al. The size and burden of mental disorders and other disorders of the brain in Europe 2010. Eur Neuropsychopharmacol. 2011;21:655–79.

David D, Cristea I, Hofmann SG. Why cognitive behavioral therapy is the current gold standard of psychotherapy. Front Psychiatry. 2018;9:4.

Springer KS, Levy HC, Tolin DF. Remission in CBT for adult anxiety disorders: A meta-analysis. Clin Psychol Rev. 2018;61:1–8.

Hollon SD, Thase ME, Markowitz JC. Treatment and prevention of depression. Psychol Sci Public Interest. 2002;3:39–77.

Cuijpers P, Cristea IA, Karyotaki E, Reijnders M, Huibers MJH. How effective are cognitive behavior therapies for major depression and anxiety disorders? A meta-analytic update of the evidence. World Psychiatry. 2016;15:245–58.

Koole SL, Rothermund K. ‘I feel better but I don’t know why’: The psychology of implicit emotion regulation. Cogn Emot. 2011;25:389–99.

Peckham AD, McHugh RK, Otto MW. A meta-analysis of the magnitude of biased attention in depression. Depress Anxiety. 2010;27:1135–42.

Bögels SM, Mansell W. Attention processes in the maintenance and treatment of social phobia: Hypervigilance, avoidance and self-focused attention. Clin Psychol Rev. 2004;24:827–56.

Arch JJ, Craske MG. First-line treatment: A critical appraisal of cognitive behavioral therapy developments and alternatives. Psychiatr Clin North Am. 2009;32:525–47.

DeRubeis RJ, Siegle GJ, Hollon SD. Cognitive therapy versus medication for depression: Treatment outcomes and neural mechanisms. Nat Rev Neurosci. 2008;9:788–96.

Etkin A, Egner T, Kalisch R. Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn Sci. 2011;15:85–93.

Stevens FL, Hurley RA, Taber KH. Anterior cingulate cortex: Unique role in cognition and emotion. J Neuropsychiatry Clin Neurosci. 2011;23:121–5.

Kanske P, Kotz SA. Emotion speeds up conflict resolution: A new role for the ventral anterior cingulate cortex? Cereb Cortex. 2011;21:911–9.

Klumpp H, Fitzgerald DA, Angstadt M, Post D, Phan KL. Neural response during attentional control and emotion processing predicts improvement after cognitive behavioral therapy in generalized social anxiety disorder. Psychol Med. 2014;44:3109–21.

Klumpp H, Fitzgerald DA, Piejko K, Roberts J, Kennedy AE, Phan KL. Prefrontal control and predictors of cognitive behavioral therapy response in social anxiety disorder. Soc Cogn Affect Neurosci. 2016;11:630–40.

Frick A, Engman J, Alaie I, Björkstrand J, Gingnell M, Larsson EM, et al. Neuroimaging, genetic, clinical, and demographic predictors of treatment response in patients with social anxiety disorder. J Affect Disord. 2020;261:230–7.

Pizzagalli DA. Frontocingulate dysfunction in depression: Toward biomarkers of treatment response. Neuropsychopharmacology. 2011;36:183–206.

Fu CHY, Steiner H, Costafreda SG. Predictive neural biomarkers of clinical response in depression: A meta-analysis of functional and structural neuroimaging studies of pharmacological and psychological therapies. Neurobiol Dis. 2013;52:75–83.

Mayberg HS, Brannan SK, Mahurin RK, Jerabek PA, Brickman JS, Tekell JL, et al. Cingulate function in depression: A potential predictor of treatment response. Neuroreport. 1997;8:1057–61.

Ebert D, Feistel H, Barocka A, Kaschka W. Increased limbic blood flow and total sleep deprivation in major depression with melancholia. Psychiatry Res Neuroimaging. 1994;55:101–9.

Langguth B, Wiegand R, Kharraz A, Landgrebe M, Marienhagen J, Frick U, et al. Pre-treatment anterior cingulate activity as a predictor of antidepressant response to repetitive transcranial magnetic stimulation (rTMS). Neuroendocrinol Lett. 2007;28:633–8.

Fu CHY, Williams SCR, Cleare AJ, Scott J, Mitterschiffthaler MT, Walsh ND, et al. Neural responses to sad facial expressions in major depression following cognitive behavioral therapy. Biol Psychiatry. 2008;64:505–12.

Palomero-Gallagher N, Mohlberg H, Zilles K, Vogt B. Cytology and receptor architecture of human anterior cingulate cortex. J Comp Neurol. 2008;508:906–26.

McGrath CL, Kelley ME, Dunlop BW, Holtzheimer PEH, Craighead WE, Mayberg HS. Pretreatment brain states identify likely nonresponse to standard treatments for depression. Biol Psychiatry. 2014;76:527–35.

Konarski JZ, Kennedy SH, Segal ZV, Lau MA, Bieling PJ, McIntyre RS, et al. Predictors of nonresponse to cognitive behavioural therapy or venlafaxine using glucose metabolism in major depressive disorder. J Psychiatry Neurosci. 2009;34:175.

Siegle GJ, Carter CS, Thase ME. Use of fMRI to predict recovery from unipolar depression with cognitive behavior therapy. Am J Psychiatry. 2006;163:735–8.

Siegle GJ, Thompson WK, Collier A, Berman SR, Feldmiller J, Thase ME, et al. Toward clinically useful neuroimaging in depression treatment: Prognostic utility of subgenual cingulate activity for determining depression outcome in cognitive therapy across studies, scanners, and patient characteristics. Arch Gen Psychiatry. 2012;69:913–24.

Northoff G, Heinzel A, de Greck M, Bermpohl F, Dobrowolny H, Panksepp J. Self-referential processing in our brain—A meta-analysis of imaging studies on the self. Neuroimage. 2006;31:440–57.

Phillips ML, Drevets WC, Rauch SL, Lane R. Neurobiology of emotion perception I: The neural basis of normal emotion perception. Biol Psychiatry. 2003;54:504–14.

Broadbent DE. Perception and communication. New York: Pergamon Press; 1958.

Pashler H. Dual-task interference in simple tasks: Data and theory. Psychol Bull. 1994;116:220–44.

Lavie N, Hirst A, De Fockert JW, Viding E. Load theory of selective attention and cognitive control. J Exp Psychol Gen. 2004;133:339–54.

Bishop SJ, Jenkins R, Lawrence AD. Neural processing of fearful faces: Effects of anxiety are gated by perceptual capacity limitations. Cereb Cortex. 2007;17:1595–603.

Bunford N, Roberts J, Kennedy AE, Klumpp H. Neurofunctional correlates of behavioral inhibition system sensitivity during attentional control are modulated by perceptual load. Biol Psychol. 2017;127:10–7.

Klumpp H, Kinney KL, Kennedy AE, Shankman SA, Langenecker SA, Kumar A, et al. Trait attentional control modulates neurofunctional response to threat distractors in anxiety and depression. J Psychiatr Res. 2018;102:87–95.

Wampold BE. How important are the common factors in psychotherapy? An update. World Psychiatry. 2015;14:270–7.

Chu BC, Skriner LC, Zandberg LJ. Shape of change in cognitive behavioral therapy for youth anxiety: Symptom trajectory and predictors of change. J Consult Clin Psychol. 2013;81:573–87.

Andrews LA, Hayes AM, Abel A, Kuyken W. Sudden gains and patterns of symptom change in cognitive-behavioral therapy for treatment-resistant depression. J Consult Clin Psychol. 2020;88:106–18.

Liebowitz MR. Social phobia. Mod Probl Pharmacopsychiatry. 1987;22:141–73.

Hamilton M. A rating scale for depression. J Neurol Neurosurg Psychiatry. 1960;23:56–62.

Beck AT, Steer RA, Brown GK. Manual for the Beck depression inventory-II. San Antonio, TX: Psychological Corporation; 1996.

First MB, Williams JBW, Karg RS, Spitzer RL. Structured clinical interview for DSM-5—Research version (SCID-5 for DSM-5, research version; SCID-5-RV). Arlington, VA: American Psychiatric Association; 2015.

Beck AT, Rush AJ, Shaw BF, Emery G. Cognitive therapy of depression. New York: Guilford Press; 1979.

Hope DA, Heimberg RG, Turk CL. Managing social anxiety: A cognitive-behavioral therapy approach. New York: Oxford University Press; 2006.

Martell CR, Dimidjian S, Herman-Dunn R. Behavioral activation for depression: A clinician’s guide. New York: Guilford Press; 2010.

Markowitz JC, Manber R, Rosen P. Therapists’ responses to training in brief supportive psychotherapy. Am J Psychother. 2008;62:67–81.

Rogers CR. Significant aspects of client-centered therapy. Am Psychol. 1946;1:415–22.

Moeller J. A word on standardization in longitudinal studies: Don’t. Front Psychol. 2015;6:1389.

Kuckertz JM, Najmi S, Baer K, Amir N. Refining the analysis of mechanism–outcome relationships for anxiety treatment: a preliminary investigation using mixed models. Behav Modif. April. https://doi.org/10.1177/0145445519841055.

Asnaani A, Benhamou K, Kaczkurkin AN, Turk-Karan E, Foa EB. Beyond the constraints of an RCT: Naturalistic treatment outcomes for anxiety-related disorders. Behav Ther. 2020;51:434–46.

Little TD. Longitudinal structural equation modeling. New York, NY: Guilford Press; 2013.

Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consult Psychol Press; 1976.

Rolls ET, Huang CC, Lin CP, Feng J, Joliot M. Automated anatomical labelling atlas 3. Neuroimage. 2020;206:116189.

Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox. Present. 8th Int. Conference Funct. Mapp. Hum. Brain, 2002.

Gorka SM, Young CB, Klumpp H, Kennedy AE, Francis J, Ajilore O, et al. Emotion-based brain mechanisms and predictors for SSRI and CBT treatment of anxiety and depression: A randomized trial. Neuropsychopharmacology. 2019;44:1639–48.

Yang Z, Oathes D, Linn KA, Bruce SE, Satterthwaite TD, Cook PA, et al. Cognitive behavioral therapy is associated with enhanced cognitive control network activity in major depression and posttraumatic stress disorder. Biol Psychiatry Cogn Neurosci Neuroimaging. 2018;3:311–9.

Etkin A, Egner T, Peraza DM, Kandel ER, Hirsch J. Resolving emotional conflict: A role for the rostral anterior cingulate cortex in modulating activity in the amygdala. Neuron. 2006;51:871–82.

Tolin DF. Is cognitive-behavioral therapy more effective than other therapies? A meta-analytic review. Clin Psychol Rev. 2010;30:710–20.

Van Dis EAM, Van Veen SC, Hagenaars MA, Batelaan NM, Bockting CLH, Van Den Heuvel RM, et al. Long-term outcomes of cognitive behavioral therapy for anxiety-related disorders: A systematic review and meta-analysis. JAMA Psychiatry. 2020;77:265–73.

Karyotaki E, Smit Y, De Beurs DP, Henningsen KH, Robays J, Huibers MJH, et al. The long-term efficacy of acute-phase psychotherapy for depression: A meta-analysis of randomized trials. Depress Anxiety. 2016;33:370–83.

Cuijpers P, Reijnders M, Huibers MJH. The role of common factors in psychotherapy outcomes. Annu Rev Clin Psychol. 2019;15:207–31.

Filkowski MM, Haas BW. Rethinking the use of neutral faces as a baseline in fMRI neuroimaging studies of axis-I psychiatric disorders. J Neuroimaging. 2017;27:281–91.

Funding

This work was supported by NIH/NIMH R01-MH112705 (HK), NIH/NIMH T32-MH067631 (CF), and the Center for Clinical and Translational Research (CCTS) UL1RR029879.

Author information

Contributions

CF contributed to data analysis, manuscript writing, and interpretation of results. JJ contributed to the collection and analysis of neuroimaging data. RB assisted with the analytic approach. JD performed psychotherapy and contributed to manuscript preparation. GRM performed psychotherapy and contributed to manuscript preparation. OA reviewed the medical history of patients and contributed to manuscript preparation. SAS contributed to the interpretation of findings and manuscript preparation. SAL contributed to the interpretation of findings and manuscript preparation. MGC oversaw treatment fidelity, adherence, and manuscript preparation. KLP contributed to the interpretation of findings and manuscript preparation. HK contributed to study conception, manuscript writing, and interpretation of results. All authors contributed to and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Cite this article

Feurer, C., Jimmy, J., Bhaumik, R. et al. Anterior cingulate cortex activation during attentional control as a transdiagnostic marker of psychotherapy response: a randomized clinical trial. Neuropsychopharmacol. 47, 1350–1357 (2022). https://doi.org/10.1038/s41386-021-01211-2
