Google uses automated methods that rank search results on the basis of quality measures. But there could be additional approaches to preventing people falling into data voids of misinformation and disinformation. Credit: Aytac Unal/Anadolu Agency/Getty

This year, countries with a combined population of 4 billion — around half the world’s people — are holding elections, in what is being described as the biggest election year in recorded history. Some researchers are concerned that 2024 could also be one of the biggest years for the spreading of misinformation and disinformation. Both refer to misleading content, but disinformation is deliberately generated.

Vigorous debate and argument ahead of elections is foundational to democratic societies. Political parties have long competed for voter approval and subjected their differing policies to public scrutiny. But the difference now is that online search and social media enable claims and counterclaims to be made almost endlessly.

A study in Nature1 last month highlights a previously underappreciated aspect of this phenomenon: the existence of data voids, information spaces that lack evidence, into which people searching to check the accuracy of controversial topics can easily fall. The paper suggests that media-literacy campaigns that emphasize ‘just searching’ for information online need to become smarter. It might no longer be enough for search providers to combat misinformation and disinformation by just using automated systems to deprioritize these sources. Indeed, genuine, lasting solutions to a problem that could be existential for democracies need to be a partnership between search-engine providers and sources of evidence-based knowledge.

The mechanics of how misinformation and disinformation spread has long been an active area of research. According to the ‘illusory truth effect’, people perceive something to be true the more they are exposed to it, regardless of its veracity. This phenomenon pre-dates2,3 the digital age and now manifests itself through search engines and social media.

Read the paper: Online searches to evaluate misinformation can increase its perceived veracity

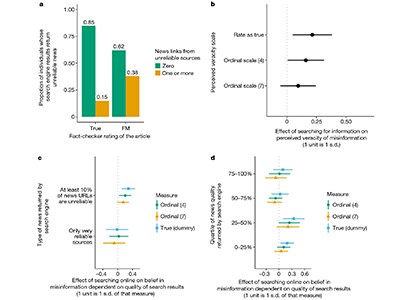

In their recent study1, Kevin Aslett, a political scientist at the University of Central Florida in Orlando, and his colleagues found that people who used Google Search to evaluate the accuracy of news stories — stories that the authors but not the participants knew to be inaccurate — ended up trusting those stories more. This is because their attempts to search for such news made them more likely to be shown sources that corroborated an inaccurate story.

In one experiment, participants used the search engine to verify claims that the US government engineered a famine by locking down during the COVID-19 pandemic. When they entered terms used in inaccurate news stories, such as 'engineered famine', to get information, they were more likely to find sources uncritically reporting an engineered famine. The results also held when participants used search terms to describe other unsubstantiated claims about SARS-CoV-2: for example, that it rarely spreads between asymptomatic people, or that it surges among people even after they are vaccinated.

Nature reached out to Google to discuss the findings, and to ask what more could be done to make the search engine recommend higher-quality information in its search results. Google’s algorithms rank news items by taking into account various measures of quality, such as how much a piece of content aligns with the consensus of expert sources on a topic. In this way, the search engine deprioritizes unsubstantiated news, as well as news sources carrying it, in its results. Furthermore, its search results carry content warnings. For example, ‘breaking news’ indicates that a story is likely to change and that readers should come back later when more sources are available. There is also an ‘about this result’ tab, which explains more about a news source, although users have to click on a different icon to access it.

Clearly, copying terms from inaccurate news stories into a search engine reinforces misinformation, making it a poor method for verifying accuracy. So, what more could be done to route people to better sources? Google does not manually remove content, or de-rank a search result; nor does it moderate or edit content, in the way that social-media sites and publishers do. Google is sticking to the view that, when it comes to ensuring quality results, the future is automated methods that rank results on the basis of quality measures. But there can be additional approaches to preventing people falling into data voids of misinformation and disinformation, as Google itself acknowledges and as Aslett and colleagues show.

The disinformation sleuths: a key role for scientists in impending elections

Some type of human input, for example, might enhance internal fact-checking systems, especially on topics on which there might be a void of reliable information. How this can be done sensitively is an important research topic, not least because the end result should be not about censorship, but about protecting people from harm.

There’s also a body of literature on improving media literacy, including suggestions on more, or better, education on discriminating between different sources in search results. Mike Caulfield, who studies media literacy and online verification skills at the University of Washington in Seattle, says that there is value in exposing a wider population to some of the skills taught in research methods. He recommends starting with influential people, giving them opportunities to improve their own media literacy, as a way to then influence others in their networks.

One point raised by Paul Crawshaw, a social scientist at Teesside University in Middlesbrough, UK, is that research-methods teaching on its own does not always have the desired impact. Students benefit more when they are learning about research methods while carrying out research projects. He also suggests that lessons could be learnt by studying the conduct and impact of health-literacy campaigns. In some cases, these can be less effective for people on lower incomes4, compared with those on higher incomes. Understanding that different population groups have different needs will also need to be factored into media-literacy campaigns, he argues. Research journals, such as Nature, also have a part to play in bridging data voids; it cannot just be the responsibility of search-engine providers. In other words, any response to misinformation and disinformation needs to be a partnership.

Clearly, there’s work to do. The need is urgent, because it’s possible that generative artificial intelligence and large language models will propel misinformation to much greater heights. The often-mentioned phrase ‘search it online’ could end up increasing the prominence of inaccurate news instead of reducing it. In this super election year, people need to have the confidence to know that, if a piece of news comes from an untrustworthy source, the best choice might be to simply ignore it.
