The sculpture of Alan Turning at Bletchley Park, UK. It is 73 years since Turing proposed an ‘imitation’ game as a test of computers’ thinking capabilities.Credit: Lenscap/Alamy

“I propose to consider the question, ‘Can machines think?” So began a seminal 1950 paper by British computing and mathematics luminary Alan Turing (A. M. Turing Mind LIX, 433–460; 1950).

But as an alternative to the thorny task of defining what it means to think, Turing proposed a scenario that he called the “imitation game”. A person, called the interrogator, has text-based conversations with other people and a computer. Turing wondered whether the interrogator could reliably detect the computer — and implied that if they could not, then the computer could be presumed to be thinking. The game captured the public’s imagination and became known as the Turing test.

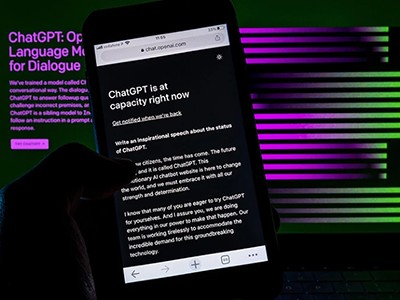

Although an enduring idea, the test has largely been considered too vague — and too focused on deception, rather than genuinely intelligent behaviour — to be a serious research tool or goal for artificial intelligence (AI). But the question of what part language can play in evaluating and creating intelligence is more relevant today than ever. That’s thanks to the explosion in the capabilities of AI systems known as large language models (LLMs), which are behind the ChatGPT chatbot, made by the firm OpenAI in San Francisco, California, and other advanced bots, such as Microsoft’s Bing Chat and Google’s Bard. As the name ‘large language model’ suggests, these tools are based purely on language.

ChatGPT broke the Turing test — the race is on for new ways to assess AI

With an eerily human, sometimes delightful knack for conversation — as well as a litany of other capabilities, including essay and poem writing, coding, passing tough exams and text summarization — these bots have triggered both excitement and fear about AI and what its rise means for humanity. But underlying these impressive achievements is a burning question: how do LLMs work? As with other neural networks, many of the behaviours of LLMs emerge from a training process, rather than being specified by programmers. As a result, in many cases the precise reasons why LLMs behave the way they do, as well as the mechanisms that underpin their behaviour, are not known — even to their own creators.

As Nature reports in a Feature, scientists are piecing together both LLMs’ true capabilities and the underlying mechanisms that drive them. Michael Frank, a cognitive scientist at Stanford University in California, describes the task as similar to investigating an “alien intelligence”.

Revealing this is both urgent and important, as researchers have pointed out (S. Bubeck et al. Preprint at https://arxiv.org/abs/2303.12712; 2023). For LLMs to solve problems and increase productivity in fields such as medicine and law, people need to better understand both the successes and failures of these tools. This will require new tests that offer a more systematic assessment than those that exist today.

Breezing through exams

LLMs ingest enormous reams of text, which they use to learn to predict the next word in a sentence or conversation. The models adjust their outputs through trial and error, and these can be further refined by feedback from human trainers. This seemingly simple process can have powerful results. Unlike previous AI systems, which were specialized to perform one task or have one capability, LLMs breeze through exams and questions with a breadth that would have seemed unthinkable for a single system just a few years ago.

ChatGPT: five priorities for research

But as researchers are increasingly documenting, LLMs’ capabilities can be brittle. Although GPT-4, the most advanced version of the LLM behind ChatGPT, has aced some academic and professional exam questions, even small perturbations to the way a question is phrased can throw the models off. This lack of robustness signals a lack of reliability in the real world.

Scientists are now debating what is going on under the hood of LLMs, given this mixed performance. On one side are researchers who see glimmers of reasoning and understanding when the models succeed at some tests. On the other are those who see their unreliability as a sign that the model is not as smart as it seems.

AI approvals

More systematic tests of LLMs’ capabilities would help to settle the debate. These would provide a more robust understanding of the models’ strengths and weaknesses. Similar to the processes that medicines go through to attain approval as treatments and to uncover possible side effects, assessments of AI systems could allow them to be deemed safe for certain applications and could enable the ways they might fail to be declared to users.

In May, a team of researchers led by Melanie Mitchell, a computer scientist at the Santa Fe Institute in New Mexico, reported the creation of ConceptARC (A. Moskvichev et al. Preprint at https://arxiv.org/abs/2305.07141; 2023): a series of visual puzzles to test AI systems’ ability to reason about abstract concepts. Crucially, the puzzles systematically test whether a system has truly grasped 16 underlying concepts by testing each one in 10 ways (spoiler alert: GPT-4 performs poorly). But ConceptARC addresses just one facet of reasoning and generalization; more tests are needed.

What ChatGPT and generative AI mean for science

Confidence in a medicine doesn’t just come from observed safety and efficacy in clinical trials, however. Understanding the mechanism that causes its behaviour is also important, allowing researchers to predict how it will function in different contexts. For similar reasons, unravelling the mechanisms that give rise to LLMs’ behaviours — which can be thought of as the underlying ‘neuroscience’ of the models — is also necessary.

Researchers want to understand the inner workings of LLMs, but they have a long road to travel. Another hurdle is a lack of transparency — for example, in revealing what data models were trained on — from the firms that build LLMs. However, scrutiny of AI companies from regulatory bodies is increasing, and could force more such data to be disclosed in future.

Seventy-three years after Turing first proposed the imitation game, it’s hard to imagine a more important challenge for the field of AI than understanding the strengths and weaknesses of LLMs, and the mechanisms that drive them.

ChatGPT broke the Turing test — the race is on for new ways to assess AI

ChatGPT broke the Turing test — the race is on for new ways to assess AI

What ChatGPT and generative AI mean for science

What ChatGPT and generative AI mean for science

ChatGPT: five priorities for research

ChatGPT: five priorities for research

How Nature readers are using ChatGPT

How Nature readers are using ChatGPT

ChatGPT gives an extra productivity boost to weaker writers

ChatGPT gives an extra productivity boost to weaker writers