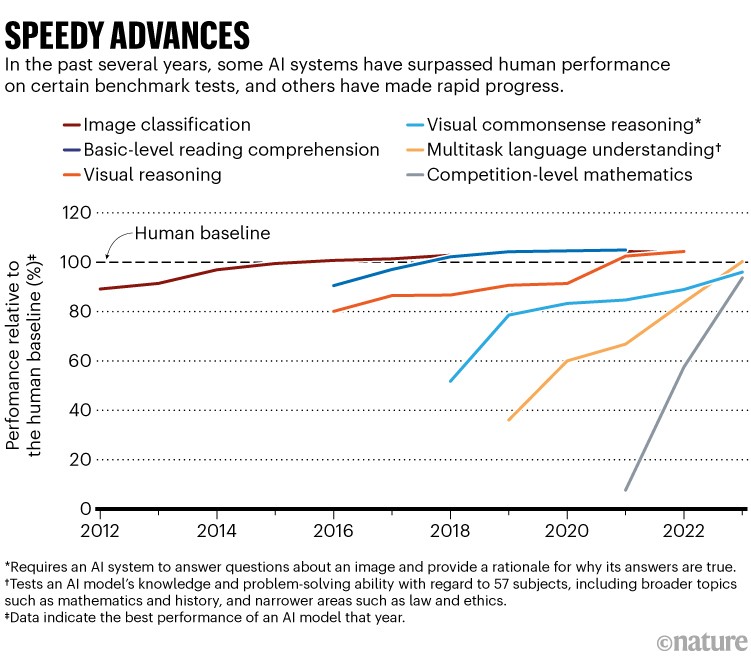

Artificial intelligence (AI) systems, such as the chatbot ChatGPT, have become so advanced that they now very nearly match or exceed human performance in tasks including reading comprehension, image classification and competition-level mathematics, according to a new report (see ‘Speedy advances’). Rapid progress in the development of these systems also means that many common benchmarks and tests for assessing them are quickly becoming obsolete.

These are just a few of the top-line findings from the Artificial Intelligence Index Report 2024, which was published on 15 April by the Institute for Human-Centered Artificial Intelligence at Stanford University in California. The report charts the meteoric progress in machine-learning systems over the past decade.

In particular, the report says, new ways of assessing AI systems (for example, evaluating their performance on complex tasks, such as abstraction and reasoning) are increasingly necessary. “A decade ago, benchmarks would serve the community for 5–10 years”, whereas now they often become irrelevant in just a few years, says Nestor Maslej, a social scientist at Stanford and editor-in-chief of the AI Index. “The pace of gain has been startlingly rapid.”

Stanford’s annual AI Index, first published in 2017, is compiled by a group of academic and industry specialists to assess the field’s technical capabilities, costs, ethics and more — with an eye towards informing researchers, policymakers and the public. This year’s report, which is more than 400 pages long and was copy-edited and tightened with the aid of AI tools, notes that AI-related regulation in the United States is sharply rising. But the lack of standardized assessments for responsible use of AI makes it difficult to compare systems in terms of the risks that they pose.

The rising use of AI in science is also highlighted in this year’s edition: for the first time, it dedicates an entire chapter to science applications, highlighting projects including Graph Networks for Materials Exploration (GNoME), a project from Google DeepMind that aims to help chemists discover materials, and GraphCast, another DeepMind tool, which does rapid weather forecasting.

Growing up

The current AI boom — built on neural networks and machine-learning algorithms — dates back to the early 2010s. The field has since rapidly expanded. For example, the number of AI coding projects on GitHub, a common platform for sharing code, increased from about 800 in 2011 to 1.8 million last year. And journal publications about AI roughly tripled over this period, the report says.

Much of the cutting-edge work on AI is being done in industry: that sector produced 51 notable machine-learning systems last year, whereas academic researchers contributed 15. “Academic work is shifting to analysing the models coming out of companies — doing a deeper dive into their weaknesses,” says Raymond Mooney, director of the AI Lab at the University of Texas at Austin, who wasn’t involved in the report.

That includes developing tougher tests to assess the visual, mathematical and even moral-reasoning capabilities of large language models (LLMs), which power chatbots. One of the latest tests is the Graduate-Level Google-Proof Q&A Benchmark (GPQA)¹, developed last year by a team including machine-learning researcher David Rein at New York University.

The GPQA, consisting of more than 400 multiple-choice questions, is tough: PhD-level scholars could correctly answer questions in their field 65% of the time. The same scholars, when attempting to answer questions outside their field, scored only 34%, despite having access to the Internet during the test (randomly selecting answers would yield a score of 25%). As of last year, AI systems scored about 30–40%. This year, Rein says, Claude 3 — the latest chatbot released by AI company Anthropic, based in San Francisco, California — scored about 60%. “The rate of progress is pretty shocking to a lot of people, me included,” Rein adds. “It’s quite difficult to make a benchmark that survives for more than a few years.”

Cost of business

As performance is skyrocketing, so are costs. GPT-4, the LLM that powers ChatGPT and that was released in March 2023 by San Francisco-based firm OpenAI, reportedly cost US$78 million to train. Google’s chatbot Gemini Ultra, launched in December, cost $191 million. Many people are concerned about the energy use of these systems, as well as the amount of water needed to cool the data centres that help to run them². “These systems are impressive, but they’re also very inefficient,” Maslej says.

Costs and energy use for AI models are high in large part because one of the main ways to make current systems better is to make them bigger. This means training them on ever-larger stocks of text and images. The AI Index notes that some researchers now worry about running out of training data. Last year, according to the report, the non-profit research institute Epoch projected that we might exhaust supplies of high-quality language data as soon as this year. (However, the institute’s most recent analysis suggests that 2028 is a better estimate.)

Ethical concerns about how AI is built and used are also mounting. “People are way more nervous about AI than ever before, both in the United States and across the globe,” says Maslej, who sees signs of a growing international divide. “There are now some countries very excited about AI, and others that are very pessimistic.”

In the United States, the report notes a steep rise in regulatory interest. In 2016, there was just one US regulation that mentioned AI; last year, there were 25. “After 2022, there’s a massive spike in the number of AI-related bills that have been proposed” by policymakers, Maslej says.

Regulatory action is increasingly focused on promoting responsible AI use. Although benchmarks are emerging that can score metrics such as an AI tool’s truthfulness, bias and even likability, not everyone is using the same models, Maslej says, which makes cross-comparisons hard. “This is a really important topic,” he says. “We need to bring the community together on this.”
