Abstract

Input-driven magnetization dynamics in nanomagnets is a new research topic in spintronics related to the operating principles of brain-inspired computing. In this paper, the magnetization dynamics in a vortex spin-torque oscillator driven by a series of random magnetic-field pulses are studied through numerical simulation of the Thiele equation. We find that input-driven synchronization occurs in the weak-perturbation limit, as reported recently. In addition, we newly find that chaotic behavior appears in the vortex-core dynamics over a wide range of parameters, where the synchronized behavior is disrupted by intermittency. The ordered and chaotic dynamical phases are examined by evaluating the Lyapunov exponent. The relation between the dynamical phase and the computational capability of physical reservoir computing is also studied.


The ability to excite chaotic dynamics in nanomagnets1,2,3,4,5,6,7,8,9 would potentially have practical applications, such as encoding and random number generators10. So far, however, this has proven difficult to achieve. Chaos is a non-periodic, sustained motion of a physical system maintained by energy injected into a nonlinear system, whereas the magnetization dynamics induced by applying a magnetic field and/or current mainly take the form of switching or periodic oscillation11,12,13,14,15,16.

Several techniques have recently been proposed and experimentally demonstrated with the goal of exciting and identifying chaos. These include ones using a delayed-feedback circuit5,7,9 and ones that add another ferromagnet2,3,4,5,6,8. Another possible stage on which to demonstrate chaotic dynamics is an input-driven dynamical system17, where a series of time-dependent stimuli drives the nonlinear dynamics of a physical system. An example that utilizes such input-driven dynamics is human-voice recognition by a vortex spin-torque oscillator (STO)18, which is based on the algorithm of physical reservoir computing19,20,21. There, a human voice is converted into an electric-voltage input signal that modulates the dynamical amplitude of the vortex core. The input signal can be recognized when a one-to-one correspondence between it and the vortex dynamics is obtained through machine learning. Such a correspondence can be regarded as synchronization between the input signal and the STO response. 

A critical difference separates this sort of synchronization from the conventional synchronization studied previously in STOs, such as forced synchronization22,23. Here the input signal is generally non-periodic, whereas the input in the conventional cases usually has a certain periodicity. Moreover, in the conventional cases the STO dynamics become identical to the input signal except for a phase difference. In this case, the STO dynamics are generally not identical to the input signal but are reproducible: the STO response is the same whenever the same input signal is injected. This is thus a wider concept of synchronization that encompasses the conventional ideas and can be called generalized synchronization24, a kind of input-driven dynamics first examined in the 1990s25,26,27. Another example of input-driven dynamics is chaos28,29, near which the computational capability of physical reservoir computing is sometimes enhanced30,31.
As can be seen from these examples, input-driven dynamics is of great interest to researchers studying brain-inspired computing, because its complexity can be used for information processing32,33. However, there are only a few reports29,34,35,36 on input-driven dynamics, in particular chaos, in spintronic devices.

In this work, we study input-driven magnetization dynamics in a vortex STO by performing a numerical simulation of the Thiele equation. A uniformly distributed random magnetic field is used as a series of input signals; such input signals have often been used in studies of input-driven dynamics37. We find that when the input is weak, input-driven synchronization occurs38: the output of the STO is determined by the input signal and is independent of the initial state. On the other hand, in the strong-input limit, input-driven chaotic dynamics appear in the form of intermittency in the synchronized behavior, so the temporal dynamics depend on the initial state. We distinguish these ordered and chaotic dynamical phases by evaluating the largest conditional Lyapunov exponent, a measure of the sensitivity of the dynamics to the initial state of the STO. We also study the relation between the dynamical phase and the computational capability of the STO by relating the Lyapunov exponent to the short-term memory capacity.

Model

Thiele equation

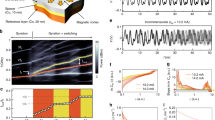

The system we consider is shown schematically in Fig. 1a, where the vortex STO consists of a ferromagnetic/nonmagnetic/ferromagnetic trilayer. The free layer has a magnetic vortex, while the reference layer is uniformly magnetized. The z axis is normal to the film plane, and the x axis is parallel to the projection of the reference-layer magnetization onto the film plane. Throughout this paper, the unit vector pointing in the direction of the k-axis (\(k=x,y,z\)) is denoted as \({\textbf{e}}_{k}\). It has been shown that the experimental and numerical results on the magnetization dynamics in a vortex STO are well described by the Thiele equation,39,40,41,42,43,44,45,46

where \({\textbf{X}}=(X,Y,0)\) is the position vector of the vortex core. The coefficients \(G=2\pi pML/\gamma\) and \(D=-(2\pi \alpha ML/\gamma )[1-(1/2)\ln (R_{0}/R)]\) depend on the saturation magnetization M, the gyromagnetic ratio \(\gamma\), the Gilbert damping constant \(\alpha\), the thickness L, the disk radius R, and the core radius \(R_{0}\) of the free layer. The polarity p and chirality c are each assumed to be \(+1\) for convenience. The normalized distance of the vortex core from the disk center is \(s=|{\textbf{X}}|/R=\sqrt{(X/R)^{2}+(Y/R)^{2}}\). The dimensionless parameter \(\xi\) quantifies the nonlinear damping in a highly excited state45. The parameters \(\kappa\) and \(\zeta\) relate to the magnetic potential energy W via

and \(\kappa =(10/9)4\pi M^{2}L^{2}/R\)45. The dimensionless parameter \(\zeta\) provides the linear dependence of the oscillation frequency on the current45. As shown below, the oscillation amplitude of the vortex core is determined by the current magnitude. For convenience, we therefore call \(\zeta\) the amplitude-frequency coupling parameter. The spin-transfer torque strength with spin polarization P is \(a_{J}=\pi \hslash P/(2e)\)43,44. The electric current density J is positive when the current flows from the reference layer to the free layer. The current is denoted as \(I=\pi R^{2}J\). The unit vector pointing in the magnetization direction of the reference layer is \({\textbf{p}}=(p_{x},0,p_{z})\). The external magnetic field is denoted as \({\textbf{H}}\), while \(\mu ^{*}=\pi MLR\). The material parameters are summarized in the “Methods” section. Note that the current is an experimentally controllable variable; typically, it is on the order of 1 mA (\(I < 10\) mA47). Moreover, the value of \(\zeta\) depends on the material and is on the order of 0.1–1.0 (Ref.46). We therefore study the dynamical behavior of the vortex core as a function of J (or equivalently, I) and \(\zeta\); see also the “Methods” section.

In the absence of a current and magnetic field, the vortex core is located near the disk center. When a direct current is applied, spin-transfer torque moves the vortex core away from the disk center. This excites an auto-oscillation with an approximately constant amplitude if the current density exceeds a critical value48,

The oscillation amplitude and frequency are \(s_{0}=\sqrt{[(J/J_{\textrm{c}})-1]/(\xi +\zeta )}\) and \(f_{0}=(\kappa /G)(1+\zeta s_{0}^{2})/(2\pi )\), respectively. The vortex core must stay inside the ferromagnetic disk, i.e., \(|{\textbf{X}}|<R\) or equivalently \(s < 1\). This implies that the Thiele equation is applicable only when the current density is below another threshold value. Substituting \(s_{0}<1\) into the expression for \(s_{0}\) gives \(J<(1+\xi +\zeta )J_{\textrm{c}}\),

Figure 1b shows an example of such an auto-oscillation, where \(I=5.0\) mA and \(\zeta =7.52\). The vortex core moves away from the disk center (\(s=0\)) and oscillates around the center with an approximately constant radius \(s_{0}\). The small-amplitude oscillation of s is due to the fifth term in Eq. (1). This term originates from the in-plane component of \({\textbf{p}}\) and breaks the axial symmetry of the Thiele equation around the z axis.
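The closed-form expressions for \(s_{0}\) and \(f_{0}\) can be evaluated directly, together with the upper bound \(J<(1+\xi +\zeta )J_{\textrm{c}}\) implied by \(s_{0}<1\). The Python sketch below takes the ratio \(J/J_{\textrm{c}}\) and the ratio \(\kappa /G\) (in rad/s) as inputs rather than computing \(J_{\textrm{c}}\), whose explicit expression is not reproduced in this text. The function names are hypothetical.

```python
import math


def upper_current_ratio(xi: float, zeta: float) -> float:
    """Largest J/J_c for which s0 < 1, from (J/J_c - 1)/(xi + zeta) < 1."""
    return 1.0 + xi + zeta


def auto_oscillation(j_ratio: float, xi: float, zeta: float,
                     kappa_over_g: float) -> tuple:
    """Return (s0, f0) of the auto-oscillation for a given J/J_c.

    kappa_over_g is kappa/G in rad/s. The formulas are valid only for
    1 < J/J_c < 1 + xi + zeta, i.e. 0 < s0 < 1.
    """
    if not 1.0 < j_ratio < upper_current_ratio(xi, zeta):
        raise ValueError("J/J_c outside the range where 0 < s0 < 1")
    s0 = math.sqrt((j_ratio - 1.0) / (xi + zeta))
    # The amplitude-frequency coupling zeta shifts the frequency by zeta * s0^2.
    f0 = kappa_over_g * (1.0 + zeta * s0**2) / (2.0 * math.pi)
    return s0, f0
```

For instance, with \(\xi =2\) and \(\zeta =7.52\), the oscillation fills the disk (\(s_{0}\rightarrow 1\)) as \(J/J_{\textrm{c}}\) approaches 10.52.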

The input-driven dynamics appear when a series of time-dependent signals is injected into this auto-oscillation state. The input signal in this study is a random-pulse magnetic field, as in an experiment on physical reservoir computing49,

\[{\textbf{H}}(t)=h_{x}r(t){\textbf{e}}_{x}, \tag{5}\]

where \(h_{x}\) is the maximum value of the input magnetic field. The quantity r(t) is a uniformly distributed random number in the range \(-1 \le r(t) \le 1\), held constant over each pulse of width \(t_{\textrm{p}}\). Although the pulse input signal in experiments has a finite rise time, the rise time can be shortened by preprocessing50. For simplicity, we assume a step-function pulse in this work.
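A step-function realization of this random-pulse input can be generated as follows. This is a minimal NumPy sketch in which the sampling step dt, the function name, and the returned tuple are illustrative choices. Times share the unit of \(t_{\textrm{p}}\) (e.g., ns) and the field has the unit of \(h_{x}\) (e.g., Oe). NumPy's `uniform` samples the half-open interval [-1, 1), which makes no practical difference here.

```python
import numpy as np


def random_pulse_field(h_x, t_p, n_pulses, dt, rng=None):
    """Sampled step-function input h_x * r(t) along x.

    One uniform random number r is drawn per pulse and held for the pulse
    width t_p (zero rise time). Returns (t, field, r).
    """
    rng = np.random.default_rng() if rng is None else rng
    r = rng.uniform(-1.0, 1.0, size=n_pulses)   # one random number per pulse
    steps_per_pulse = int(round(t_p / dt))      # t_p should be a multiple of dt
    field = h_x * np.repeat(r, steps_per_pulse)
    t = np.arange(field.size) * dt
    return t, field, r


# Example: h_x = 4.0 Oe, t_p = 3.0 ns, sampled every 0.01 ns.
t, field, r = random_pulse_field(4.0, 3.0, 10, 0.01, np.random.default_rng(0))
```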

(a) Schematic illustration of a vortex spin-torque oscillator and of the vortex-core dynamics induced by a direct current (DC) and a random magnetic field. The DC induces an oscillation of the vortex core around the disk center, whereas the random magnetic field modulates the oscillation amplitude. (b) Evolution of the normalized amplitude s in the presence of the current \(I=5\) mA. The inset shows the dynamics of the x and y components of the vortex-core position normalized by the disk radius R.

Results

Temporal dynamics

The input magnetic field given by Eq. (5) causes two kinds of dynamics: synchronized and chaotic. Figure 2a shows two solutions of Eq. (1) with slightly different initial conditions before the input signal is injected, where \(I=5.0\) mA and \(\zeta =7.52\). For convenience, let us denote the two solutions as \({\textbf{X}}_{1}\) and \({\textbf{X}}_{2}\). The solutions oscillate with the same frequency but different phases. When a weak input signal is injected from \(t=200\) ns, they overlap in the limit \(t\rightarrow \infty\); i.e., synchronization occurs. See Fig. 2b, where \(h_{x}=4.0\) Oe and \(t_{\textrm{p}}=3.0\) ns. The difference between the two solutions, \(|{\textbf{X}}_{1}-{\textbf{X}}_{2}|/R\), is shown in Fig. 2c. The difference decreases over time, which also indicates that phase synchronization is achieved. Recall that the input signal has no periodicity; the synchronization of the two solutions therefore differs from the forced synchronization found in, for example, Refs.22,23. The synchronization here is input-driven51,52,53,54,55: the temporal dynamics are determined by the input signal, as was recently found in a vortex STO38. It occurs when the input signal is relatively weak.

Chaotic dynamics also appear as the input-signal strength increases. Figure 2d shows an example with \(h_{x}=16.0\) Oe, where the two solutions of Eq. (1) were obtained from slightly different initial conditions. The solutions never overlap completely, even after a long time. The difference between the two solutions often becomes small; i.e., synchronized behavior appears. However, before the difference vanishes completely, intermittency appears and the two solutions separate from each other; see also Fig. 2e. This result indicates that the dynamical behavior of the vortex core is not determined solely by the input signal but is also sensitive to its initial state. This sensitivity implies chaos. We emphasize that the chaotic behavior is caused by the input signal. Without the input signal, the system described by the Thiele equation is a two-dimensional autonomous system, in which chaos is prohibited by the Poincaré–Bendixson theorem56. The behavior of the vortex core must therefore be input-driven chaos.

So far, chaos has been inferred from the temporal dynamics alone. To identify it more systematically, we evaluate the Lyapunov exponent.

(a) Temporal dynamics of X/R for two solutions, denoted as \(X_{1}/R\) and \(X_{2}/R\), with slightly different initial conditions, before the random magnetic field is injected. (b) Temporal dynamics of \(X_{1}/R\) and \(X_{2}/R\) after injection of a relatively weak input signal (\(h_{x}=4\) Oe). (c) Difference between the two solutions, \(|{\textbf{X}}_{1}-{\textbf{X}}_{2}|/R\), for the weak input. (d) Temporal dynamics of \(X_{1}/R\) and \(X_{2}/R\) after injection of a relatively strong input signal (\(h_{x}=16\) Oe). (e) Difference between the two solutions, \(|{\textbf{X}}_{1}-{\textbf{X}}_{2}|/R\), for the strong input.

Lyapunov exponent

The Lyapunov exponent is the expansion rate of the difference between two solutions of an equation of motion with slightly different initial conditions. In the present case, we define the difference between two solutions, \({\textbf{X}}_{1}\) and \({\textbf{X}}_{2}\), as \(\delta x = |{\textbf{X}}_{1}-{\textbf{X}}_{2}|/R\). When \({\textbf{X}}_{1}\simeq {\textbf{X}}_{2}\) at a certain time \(t_{0}\), the time development of \(\delta x\) can be described by a linear equation, \(\delta {\dot{x}}\simeq \lambda \delta x\). Its solution is \(\delta x(t)\simeq \delta x(t_{0}) e^{\lambda (t-t_{0})}\), and \(\lambda\) is the Lyapunov exponent. Accordingly, the two solutions approach each other when the Lyapunov exponent is negative. Examples of dynamics with a negative Lyapunov exponent are relaxation to a fixed point and phase synchronization. When the Lyapunov exponent is positive, the difference between the two solutions grows, indicating sensitivity of the dynamics to the initial state; a positive exponent implies the appearance of chaos. When the exponent is zero, the initial difference is kept constant; an example of such dynamics is a limit-cycle oscillation. The method of evaluating the Lyapunov exponent is summarized in the “Methods” section.
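As a rough illustration of this definition, \(\lambda\) can be read off as the slope of \(\ln \delta x\) versus t while \(\delta x\) is still small. The sketch below fits that slope to a recorded separation. It is only a direct fit, not the renormalization method used in the “Methods” section, and it breaks down once \(\delta x\) saturates at the system size, as it does in the chaotic case. The function name is hypothetical.

```python
import numpy as np


def lyapunov_from_separation(t, delta_x):
    """Least-squares slope of ln(delta_x) versus t.

    Assumes delta_x(t) ~ delta_x(t0) * exp(lambda * (t - t0)), i.e. the two
    solutions are still in the linear (small-difference) regime.
    """
    t = np.asarray(t, dtype=float)
    log_dx = np.log(np.asarray(delta_x, dtype=float))
    slope, _intercept = np.polyfit(t, log_dx, 1)
    return slope
```

A decaying separation yields a negative slope (synchronization), while a growing separation yields a positive one.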

Figure 3 summarizes the dependence of the Lyapunov exponent on the input magnetic field strength \(h_{x}\) and amplitude-frequency coupling parameter \(\zeta\) for various current values I; (a) 2.5, (b) 4.0, and (c) 5.0 mA. The pulse width of the input signal is \(t_{\textrm{p}}=3.0\) ns. The figure provides a broad perspective on the relation between the parameter values and the dynamical phases, although the detailed structure depends on the current value (see also Supplementary Information, which has the other data supporting the discussion below, obtained for various currents and pulse widths).

Dependence of Lyapunov exponent (\(\lambda\)) on the strength of the input signal (\(h_{x}\)) and amplitude–frequency coupling parameter (\(\zeta\)) for currents of (a) \(I=2.5\) mA, (b) \(I=4.0\) mA, and (c) \(I=5.0\) mA. The pulse width is 3.0 ns. The white dots represent the zero-exponent regions.

First, let us focus on the parameter regions where the values of \(h_{x}\) and \(\zeta\) are relatively small. Here, the Lyapunov exponent becomes increasingly negative as \(h_{x}\) or \(\zeta\) increases. This tendency is clear in, for example, Fig. 3b, from \(h_{x}\simeq 0\) to \(h_{x}\simeq 4\) Oe. It is less clear for \(I=2.5\) mA in Fig. 3a. However, a closer look at the regions of small \(h_{x}\) and \(\zeta\) reveals the same negative enhancement of the exponent; see also Supplementary Information. 

This result implies that input-driven synchronization is realized rapidly. The magnitude of a negative Lyapunov exponent is the inverse of the time scale over which the difference between the initial states decays, so a large negative exponent means rapid synchronization. When the input strength \(h_{x}\) is large, the input signal substantially modifies the vortex-core position and contributes to a fast alignment of \({\textbf{X}}_{1}\) and \({\textbf{X}}_{2}\). Similarly, when \(\zeta\) is large, the oscillation frequency of the vortex core depends strongly on its oscillation amplitude s. Vortex cores with different amplitudes therefore oscillate with different frequencies, which allows their phase difference to decrease. Accordingly, synchronization occurs more easily as \(h_{x}\) and \(\zeta\) increase, resulting in a large negative Lyapunov exponent.

Increasing these parameters further, however, makes it more difficult to fine-tune the vortex-core position, because even a single input signal causes a large change in position. It then becomes hard to align the vortex-core positions by injecting an input signal. Instead, a small difference between the initial states leads to a large difference in position. As a result, chaotic dynamics appear, as confirmed by the positive Lyapunov exponent. Note also that the dependence of the Lyapunov exponent on the parameters is sometimes non-monotonic. For example, for \(I=5.0\) mA in Fig. 3c, at \(h_{x}\gtrsim 5.0\) Oe, the Lyapunov exponent becomes negative or positive depending on the value of \(\zeta\) around \(\zeta =3.0\). Such a parameter dependence has frequently been observed in nonlinear dynamical systems showing chaos10. See also Supplementary Information, which shows the dependence of the Lyapunov exponent on \(\zeta\) and the bifurcation diagram.

In summary, increasing the values of the system parameters makes input-driven synchronization more efficient, as quantified by an increasingly large negative Lyapunov exponent. Increasing the parameters further, however, results in a transition of the dynamical phase from synchronized to chaotic, because a large modulation of the vortex-core position expands the difference between the initial states. Chaotic dynamics appear when the Lyapunov exponent is positive.

Finally, we briefly comment on the experimental verification of chaos. In experiments, the initial state of the vortex core cannot be controlled. It is therefore difficult to evaluate the Lyapunov exponent experimentally by a method similar to that used here, although statistical methods exist for estimating it from experimental data57,58,59. In addition, the stochastic torque due to thermal fluctuation60 makes the vortex-core dynamics complex, which also makes the Lyapunov exponent difficult to evaluate. Other methods can identify chaos from experimental data. For example, the noise limit61,62 can serve as a figure of merit for identifying chaos; in fact, it has been used in recent experiments on vortex STOs2,9. However, the noise limit has mainly been evaluated in autonomous systems, whereas the present system is nonautonomous. Extending it to nonautonomous systems may be necessary and is left for future work.

Discussion

We have found that chaotic dynamics in a vortex STO can be driven by injection of random magnetic-field pulses. In a related study, Ref.29 found that a pulse-current-driven bifurcation from ordered to chaotic dynamics occurs in a macrospin STO. Both results were obtained through numerical simulations, but our findings, which are based on a magnetic field, might be more suitable for experimental verification. Exciting chaos often requires a large input signal. A large current can be used to generate a magnetic field, whereas the current that can be applied directly to an STO is restricted because of its high resistance; see also the “Methods” section.

As mentioned in the Introduction, an interesting application of input-driven dynamics is information processing. Let us therefore evaluate the computational capability of the STO and investigate its relation to the dynamical phase. As a figure of merit, we use the short-term memory capacity50 of physical reservoir computing. Roughly speaking, this corresponds to the number of past random input signals that a physical reservoir can recognize; see also the “Methods” section describing the evaluation of the memory capacity. A large memory capacity corresponds to high computational capability. Figure 4 plots the short-term memory capacity for various currents I for a pulse width of 3.0 ns. Fig. 4a–c show the dependence of the short-term memory capacity, as well as that of the Lyapunov exponent, on the input strength \(h_{x}\) for a fixed \(\zeta\). Fig. 4d–f show the dependence of the short-term memory capacity on \(h_{x}\) and \(\zeta\); see also Supplementary Information for the short-term memory capacity obtained for different pulse widths. 

The short-term memory capacity is large when the current magnitude is large. This is attributed to the current dependence of the relaxation time. The short-term memory capacity becomes large when the reservoir recognizes the input data well, and the STO recognizes the input data through changes in the oscillation amplitude. When the current is small, the relaxation time is long, i.e., the vortex core responds slowly48. The input data are then not well recognized by the STO, leading to a small short-term memory capacity. When the current is large, the vortex core responds to the input data immediately, so the STO recognizes the injected input data, leading to a large short-term memory capacity.
We also note that the relative magnitude of the relaxation time and the pulse width affects the computational capability, as studied in Ref.63.

Dependence of the short-term memory capacity (\(C_{\textrm{STM}}\)) and the Lyapunov exponent \(\lambda\) on the input strength \(h_{x}\) for currents I of (a) 2.5, (b) 4.0, and (c) 5.0 mA; here \(\zeta =7.52\). Dependence of \(C_{\textrm{STM}}\) on \(h_{x}\) and \(\zeta\) for currents of (d) 2.5, (e) 4.0, and (f) 5.0 mA.

Comparing the results in Fig. 4 with those in Fig. 3 leads to the following conclusions. The short-term memory capacity is large in the parameter regions where the Lyapunov exponent is negative, whereas it becomes almost zero when the exponent is positive. These results can be interpreted as follows. 

A recognition task is performed by learning the response of the STO. Imagine that we inject identical input signals into the STO at different times. In general, the initial states of the STO differ between these trials. For the STO to recognize that the input signals are the same, its output signal should therefore converge to a state that is independent of the initial ones. In other words, the temporal response of the STO should be determined solely by the input signal. A negative Lyapunov exponent corresponds to exactly such dynamics, which converge to a state independent of the initial state. The dynamical phase with a negative Lyapunov exponent is therefore suitable for a recognition task. On the other hand, when the dynamics are sensitive to the initial state, the STO outputs different signals for the same input signal, and the success rate of a recognition task will be low. Chaos is a typical example of dynamics that are sensitive to the initial state. This is why the short-term memory capacity becomes zero when the Lyapunov exponent is positive. 

At the same time, although chaotic dynamics are not useful for computing, their dynamical neighborhood can be. In some cases33, the boundary of the dynamical phase is useful for particular tasks. 
For example, the short-term memory capacity is maximized near the edge of chaos in some physical reservoirs30,31, although this is not the present case; see also Supplementary Information, where the Lyapunov exponent and the short-term memory capacity for various parameters are summarized. Therefore, performing computation even in a chaotic region would help to deepen our understanding of the computational capability of STOs.

In summary, we showed through numerical simulation of the Thiele equation that input-driven chaotic magnetization dynamics can be excited in a vortex STO. Chaos was identified from the temporal dynamics of solutions of the equation with slightly different initial conditions and from an analysis of the Lyapunov exponent. We also investigated the correspondence between the dynamical phase of the input-driven STO and the short-term memory capacity of physical reservoir computing. The results showed that the performance of brain-inspired computing is related to the dynamical phase of the input-driven dynamical system, so control of the phase is necessary for practical applications. The present work indicates that the dynamical phase of an STO can be tuned between input-driven synchronized and chaotic states by the system parameters. We chose physical reservoir computing as an example, but chaotic dynamics might have other applications in brain-inspired computing, because chaotic dynamics have been found in artificial neural networks emulating brain activity64. The investigation of such applications is left for future work.

Methods

Material parameters

The material parameters were taken from typical experiments and simulations45,46,47 as \(M=1300\) emu/cm\({}^{3}\), \(\gamma =1.764\times 10^{7}\) rad/(Oe s), \(\alpha =0.01\), \(L=5\) nm, \(R=187.5\) nm, \(R_{0}=10\) nm, \(P=0.7\), \(\xi =2\), and \({\textbf{p}}=(\sin 60^{\circ },0,\cos 60^{\circ })\). A current I of 1 mA corresponds to a current density J of 0.9 MA/cm\({}^{2}\). The maximum current used in, for example, the experiment in Ref.47 is 8 mA. In Ref.46, the value of \(\zeta\) in the absence of a current is defined as \(\zeta =\kappa _{\textrm{ms}}^{\prime }/\kappa _{\textrm{ms}}\). Here \(\kappa _{\textrm{ms}}\) and \(\kappa _{\textrm{ms}}^{\prime }\) relate to the magnetostatic energy, and their values are determined by fitting experiments and/or numerical analysis of the Thiele equation. The values of \(\zeta\) reported in Ref.46 vary widely between experimental (about \(\zeta \sim 5\)) and theoretical (about 0.2) analyses.
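The coefficients defined in the “Thiele equation” subsection can be evaluated from these parameters. The Python sketch below does so in CGS units, an assumption consistent with the units quoted above. The constant names are illustrative. The sketch reproduces the conversion of 1 mA to about 0.9 MA/cm\({}^{2}\) and gives a small-amplitude frequency \(\kappa /(2\pi G)\) of about 216 MHz. It does not compute \(J_{\textrm{c}}\), whose explicit expression is not reproduced in this text.

```python
import math

# Material parameters from the "Methods" section, in CGS units.
M = 1300.0           # saturation magnetization [emu/cm^3]
GAMMA = 1.764e7      # gyromagnetic ratio [rad/(Oe s)]
ALPHA = 0.01         # Gilbert damping constant
L = 5e-7             # free-layer thickness [cm] (5 nm)
R = 187.5e-7         # disk radius [cm] (187.5 nm)
R0 = 10e-7           # vortex-core radius [cm] (10 nm)
P_POL = 1            # vortex polarity p (taken as +1)

# Coefficients as defined in the "Thiele equation" subsection.
G = 2 * math.pi * P_POL * M * L / GAMMA
D = -(2 * math.pi * ALPHA * M * L / GAMMA) * (1 - 0.5 * math.log(R0 / R))
KAPPA = (10 / 9) * 4 * math.pi * M**2 * L**2 / R


def current_density(current_a):
    """J = I / (pi R^2) in A/cm^2 for a current I given in amperes."""
    return current_a / (math.pi * R**2)


# Small-amplitude (s -> 0) oscillation frequency kappa / (2 pi G), in Hz.
F_LINEAR = KAPPA / (2 * math.pi * G)

print(f"G = {G:.3e}, D = {D:.3e}, kappa = {KAPPA:.3e}")
print(f"J(1 mA) = {current_density(1e-3) / 1e6:.3f} MA/cm^2")
print(f"kappa/(2 pi G) = {F_LINEAR / 1e6:.0f} MHz")
```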

A typical magnitude of the input magnetic field used in the present work is on the order of 1–10 Oe. In experiments, a pulse magnetic field can be generated by applying a pulse electric current to a metal line. For a thin metal line, Ampère's law, \(H=I/(2\pi {\mathscr {R}})\) with current I and distance \({\mathscr {R}}\), gives a field of roughly 200 Oe for a current of 1 mA flowing at a distance of 10 nm above an STO. This is well above 1–10 Oe. In reality, the metal line has a finite width, and the magnetic field is smaller. For example, in Ref.65, a metal line is placed within 20 nm of a nanomagnet, and a current of 20 mA generates a magnetic field of 80 Oe. The magnetic field could be further enhanced by applying a larger current, which is possible because the current flows in a metal line. On the other hand, Ref.29 reported a bifurcation from order to chaos by injecting electric current pulses into a macrospin STO, where the current magnitude was on the order of 1–10 mA. However, a large current, such as 10 mA, can lead to electrostatic breakdown of the STO, which includes an oxide barrier such as MgO.

Method of evaluating the Lyapunov exponent

The Shimada–Nagashima method66 was used to evaluate the Lyapunov exponent, as in previous studies on STOs6,7,29,36,67. Recall that the Lyapunov exponent is the expansion rate of the difference between two solutions of an equation of motion with slightly different initial conditions. In this method, at a certain time \(t_{0}\), we add a small perturbation to a solution \({\textbf{X}}(t_{0})\) of the Thiele equation as \({\textbf{X}}^{(1)}(t_{0})={\textbf{X}}(t_{0})+\varepsilon R {\textbf{n}}_{0}\). Here \({\textbf{n}}_{0}\) is a unit vector in the xy plane pointing in an arbitrary direction, and the meaning of the superscript “(1)” will be clarified below. The dimensionless parameter \(\varepsilon\) corresponds to the magnitude of the perturbation.

We solve the Thiele equation with the initial states \({\textbf{X}}(t_{0})\) and \({\textbf{X}}^{(1)}(t_{0})\) and obtain \({\textbf{X}}(t_{0}+\Delta t)\) and \({\textbf{X}}^{(1)}(t_{0}+\Delta t)\), where \(\Delta t\) is the time increment used to integrate the Thiele equation. From the difference between \({\textbf{X}}(t_{0}+\Delta t)\) and \({\textbf{X}}^{(1)}(t_{0}+\Delta t)\), we then evaluate the temporal Lyapunov exponent at \(t_{1}=t_{0}+\Delta t\) as \(\lambda ^{(1)}=(1/\Delta t)\ln [\varepsilon ^{(1)}/\varepsilon ]\), where \(\varepsilon ^{(1)}= |{\textbf{X}}^{(1)}(t_{1})-{\textbf{X}}(t_{1})|/R\).

Next, we introduce \({\textbf{X}}^{(2)}(t_{0}+\Delta t)\), which is obtained by moving \({\textbf{X}}(t_{0}+\Delta t)\) in the direction of \({\textbf{X}}^{(1)}(t_{0}+\Delta t)\) over a distance \(\varepsilon R\). Solving the Thiele equation with the initial states \({\textbf{X}}(t_{0}+\Delta t)\) and \({\textbf{X}}^{(2)}(t_{0}+\Delta t)\) yields \({\textbf{X}}(t_{0}+2 \Delta t)\) and \({\textbf{X}}^{(2)}(t_{0}+2\Delta t)\). The temporal Lyapunov exponent at \(t_{2}=t_{0}+2\Delta t\) is then obtained as \(\lambda ^{(2)}=(1/\Delta t)\ln [\varepsilon ^{(2)}/\varepsilon ]\) with \(\varepsilon ^{(2)}=|{\textbf{X}}^{(2)}(t_{2})-{\textbf{X}}(t_{2})|/R\).

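We now repeat the above procedure. First, we introduce \({\textbf{X}}^{(n+1)}(t_{0}+n \Delta t)\) by moving \({\textbf{X}}(t_{0}+n \Delta t)\) in the direction of \({\textbf{X}}^{(n)}(t_{0}+n\Delta t)\) over a fixed distance \(\varepsilon R\). Second, by solving the Thiele equation, we obtain \({\textbf{X}}^{(n+1)}[t_{0}+(n+1)\Delta t]\) and \({\textbf{X}}[t_{0}+(n+1)\Delta t]\). Third, the temporal Lyapunov exponent at \(t_{n+1}=t_{0}+(n+1)\Delta t\) is obtained as

\[\lambda ^{(n+1)}=\frac{1}{\Delta t}\ln \frac{\varepsilon ^{(n+1)}}{\varepsilon }, \tag{6}\]

where

\[\varepsilon ^{(n+1)}=\frac{|{\textbf{X}}^{(n+1)}(t_{n+1})-{\textbf{X}}(t_{n+1})|}{R}. \tag{7}\]

The Lyapunov exponent is the average of the temporal Lyapunov exponents, defined as

\[\lambda =\lim _{N\rightarrow \infty }\frac{1}{N}\sum _{n=1}^{N}\lambda ^{(n)}. \tag{8}\]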

Strictly speaking, the Lyapunov exponent obtained by this procedure is the maximum (or largest) Lyapunov exponent, i.e., the largest expansion rate of the difference between two solutions with slightly different initial conditions. The method assumes that the perturbed solution naturally aligns with the most expanding direction, even though the initial perturbation is given in an arbitrary direction (\({\textbf{n}}_{0}\) mentioned above). In addition, the Lyapunov exponent evaluated here is a conditional one. The system is nonautonomous because time-dependent input signals are injected, yet only the expansion rate of \({\textbf{X}}\) is evaluated, with the same input signal applied to both solutions. This is justified because the input signal is constant during each pulse, whose width is longer than the time increment29.
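The renormalization procedure above can be sketched generically as follows. Because the Thiele equation itself is not reproduced in this text, the sketch takes an arbitrary right-hand side `f(t, x)` for a state x that is assumed to be already normalized by R. The time-dependent input enters only through t, so both trajectories see the same input, as for the conditional exponent. The fourth-order Runge–Kutta integrator, the random choice of \({\textbf{n}}_{0}\), and the default `eps` are assumptions, not the authors' settings.

```python
import numpy as np


def rk4_step(f, t, x, dt):
    """One classical fourth-order Runge-Kutta step for dx/dt = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + dt / 2, x + dt / 2 * k1)
    k3 = f(t + dt / 2, x + dt / 2 * k2)
    k4 = f(t + dt, x + dt * k3)
    return x + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def largest_lyapunov(f, x0, dt, n_steps, eps=1e-8, n_transient=0, seed=0):
    """Largest (conditional) Lyapunov exponent by per-step renormalization."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    t = 0.0
    for _ in range(n_transient):              # discard the transient
        x = rk4_step(f, t, x, dt)
        t += dt
    n0 = rng.normal(size=x.shape)             # arbitrary initial direction n0
    y = x + eps * n0 / np.linalg.norm(n0)     # perturbed state X^(1)(t0)
    lam_sum = 0.0
    for _ in range(n_steps):
        x_next = rk4_step(f, t, x, dt)
        y_next = rk4_step(f, t, y, dt)        # same t, hence the same input
        t += dt
        diff = y_next - x_next
        eps_n = np.linalg.norm(diff)          # epsilon^(n)
        lam_sum += np.log(eps_n / eps) / dt   # temporal exponent lambda^(n)
        x = x_next
        y = x + eps * diff / eps_n            # move x by eps toward y
    return lam_sum / n_steps                  # average of lambda^(n)
```

As a sanity check, consider a linear test system \({\dot{x}}=Ax+u(t)\) with A = [[-0.5, -1], [1, -0.5]]. The perturbation norm decays as \(e^{-0.5t}\) regardless of its direction and of the common input u(t), so the estimate should be close to -0.5.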

Method of evaluating short-term memory capacity

The memory capacity is a figure of merit quantifying the computational capability of physical reservoir computing68. As shown below, the memory capacity is, roughly speaking, the number of past input signals that can be reproduced from the output signal of the physical system. Let us assume that the input signals are pulse-shaped random numbers \(q_{k}\), where the subscript \(k=1,2,\ldots\) denotes the order of the input signals. In the present work, a binary input \(b_{k}=0,1\) is used to evaluate the short-term memory capacity, in accordance with Refs.48,49,50. A uniformly distributed random input \(r_{k}\) (\(-1 \le r_{k} \le 1\)) has also been used in some reports29. An example of the target data is

where an integer D, called a delay, characterizes the order of the past input data. There are other kinds of target data, which, in general, include nonlinear transformation of input data37. A memory capacity is defined for each kind of target data. The short-term memory capacity is for target data given by Eq. (9). The following procedure for evaluating of the short-term memory capacity is applicable to general target data. Therefore, for generality, let us denote the target data as \(y_{k,D}\), for a while.
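As a concrete illustration of the short-term memory target in Eq. (9), the sketch below draws a hypothetical binary input sequence and builds the targets for a given delay. It uses 0-based NumPy indexing, so the paper's \(b_{k}\) with \(k=1,2,\ldots\) is stored in `b[k-1]`; the helper names are illustrative only.

```python
import numpy as np


def binary_inputs(n, seed=0):
    """Random binary inputs b_k in {0, 1}, stored 0-based."""
    return np.random.default_rng(seed).integers(0, 2, size=n)


def stm_targets(b, delay):
    """Targets y_{k,D} = b_{k-D} for delay D.

    Only inputs with k > D have a target, so the result has len(b) - D entries:
    entry j is the target paired with input b[delay + j] and equals b[j].
    """
    b = np.asarray(b)
    return b[: b.size - delay]


b = binary_inputs(10)
y2 = stm_targets(b, 2)  # y2[j] is paired with the input b[j + 2]
```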

The procedure is as follows. In physical reservoir computing, the physical system consists of many bodies, which play the role of neurons. The output signal from the ith neuron in response to the kth input signal \(q_{k}\) is denoted as \(u_{k,i}\). Since the present system consists of a single STO, we apply the time-multiplexing method33 to introduce virtual neurons. The output signal from a vortex STO is proportional to the y component of the vortex-core position through the magnetoresistance effect. In experiments18,47,49, the output signal is decomposed into an amplitude s and a phase through the Hilbert transform. The amplitude is used as the dynamical response in Refs.18,47, while the phase is used in Ref.49. In this work, we use the amplitude as the output signal. The virtual neurons \(u_{k,i}\) are then defined as \(u_{k,i}= s[t_{0} + (k-1 + i/N_{\textrm{node}})t_{\textrm{p}}]\), where \(t_{0}\) is the time at which the first input signal is injected and \(N_{\textrm{node}}\) is the number of virtual neurons. We inject the input signals \(q_{k}\) (\(k=1,2,\ldots ,N\)) into the STO and evaluate \(u_{k,i}\), where N is the number of input signals. We then introduce weights \(w_{D,i}\) and determine their values by minimizing the error,

\[ E = \sum_{k=1}^{N} \left( y_{k,D} - \sum_{i=1}^{N_{\textrm{node}}+1} w_{D,i} u_{k,i} \right)^{2}, \tag{10} \]

where \(u_{k,N_{\textrm{node}}+1}=1\) is a bias term. The process of determining the weights is called learning. Note that a separate set of weights is introduced for each kind of target data and each delay.
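The time-multiplexing and learning steps can be sketched as below, assuming the STO output has already been sampled on a uniform time grid with spacing `dt` starting at time zero. The amplitude is taken from the analytic signal, computed with the same FFT construction that `scipy.signal.hilbert` uses; each virtual neuron takes the sample nearest to its time; and plain least squares without regularization (`np.linalg.lstsq`) is assumed for learning. The function names are hypothetical.

```python
import numpy as np


def envelope(signal):
    """Amplitude |x + i H[x]| of the analytic signal, via the FFT construction."""
    x = np.asarray(signal, dtype=float)
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0                   # keep the DC component
    if n % 2 == 0:
        h[n // 2] = 1.0          # keep the Nyquist component
        h[1:n // 2] = 2.0        # double the positive frequencies
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))


def virtual_nodes(s, dt, t0, t_p, n_inputs, n_node):
    """u[k-1, i-1] = s[t0 + (k - 1 + i / n_node) * t_p], plus a bias column of ones."""
    k = np.arange(1, n_inputs + 1)[:, None]
    i = np.arange(1, n_node + 1)[None, :]
    t = t0 + (k - 1 + i / n_node) * t_p
    idx = np.rint(t / dt).astype(int)  # nearest sample on the time grid
    u = np.asarray(s, dtype=float)[idx]
    return np.hstack([u, np.ones((n_inputs, 1))])  # u_{k, N_node + 1} = 1


def learn_weights(u, y):
    """Weights w_{D,i} minimizing sum_k (y_k - sum_i w_{D,i} u_{k,i})^2."""
    w, *_ = np.linalg.lstsq(u, y, rcond=None)
    return w
```

In use, `envelope` is applied to the simulated or measured STO output, `virtual_nodes` samples it once per virtual neuron, and `learn_weights` is called once per delay with the matching targets.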

After the weights are determined, we inject a different series of input data \(q_{n}^{\prime }\) (\(n=1,2,\ldots ,N^{\prime }\)), where \(N^{\prime }\) is the number of these input data and need not equal N. The response from the ith neuron to the nth input signal \(q_{n}^{\prime }\) is denoted as \(u_{n,i}^{\prime }\). Next, we introduce the system output, defined as

\[ v_{n,D}^{\prime } = \sum_{i=1}^{N_{\textrm{node}}+1} w_{D,i} u_{n,i}^{\prime }. \tag{11} \]

The target data defined from \(q_{n}^{\prime }\) is denoted as \(y_{n,D}^{\prime }\).

If learning is successful, the system output \(v_{n,D}^{\prime }\) closely reproduces the target data \(y_{n,D}^{\prime }\). The reproducibility is quantified by the correlation coefficient,

\[ \textrm{Cor}(D) = \frac{\langle y_{n,D}^{\prime } v_{n,D}^{\prime } \rangle - \langle y_{n,D}^{\prime } \rangle \langle v_{n,D}^{\prime } \rangle}{\sqrt{\left( \langle y_{n,D}^{\prime 2} \rangle - \langle y_{n,D}^{\prime } \rangle^{2} \right) \left( \langle v_{n,D}^{\prime 2} \rangle - \langle v_{n,D}^{\prime } \rangle^{2} \right)}}, \tag{12} \]

where \(\langle \cdots \rangle\) denotes the average over n. When the system output \(v_{n,D}^{\prime }\) completely reproduces the target data \(y_{n,D}^{\prime }\), \(|\textrm{Cor}(D)|=1\). On the other hand, when \(v_{n,D}^{\prime }\) is uncorrelated with \(y_{n,D}^{\prime }\), \(\textrm{Cor}(D)=0\). Therefore, \(\textrm{Cor}(D)\) quantifies how well the input signal injected D steps before the present one can be reproduced.
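Assuming \(\textrm{Cor}(D)\) is the standard Pearson correlation coefficient written with averages over the test data, the system output and the correlation can be computed as in the following sketch. Here `u_test` is assumed to already contain the bias column of ones, and the function names are illustrative.

```python
import numpy as np


def system_output(u_test, w):
    """v'_{n,D} = sum_i w_{D,i} u'_{n,i}, with the bias column included in u_test."""
    return np.asarray(u_test, dtype=float) @ np.asarray(w, dtype=float)


def correlation(y, v):
    """(<y v> - <y><v>) / sqrt((<y^2> - <y>^2)(<v^2> - <v>^2)), averages over n."""
    y = np.asarray(y, dtype=float)
    v = np.asarray(v, dtype=float)
    cov = np.mean(y * v) - y.mean() * v.mean()
    var_y = np.mean(y ** 2) - y.mean() ** 2
    var_v = np.mean(v ** 2) - v.mean() ** 2
    return cov / np.sqrt(var_y * var_v)
```

A constant system output makes the denominator vanish, so a degenerate readout is worth checking for separately before the correlation is used.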

As mentioned above, the short-term memory capacity is the memory capacity for the target data \(y_{k,D}\) given by Eq. (9). It is defined as

\[ C_{\textrm{STM}} = \sum_{D=1}^{D_{\textrm{max}}} \left[ \textrm{Cor}(D) \right]^{2}. \tag{13} \]

The maximum delay \(D_{\textrm{max}}\) is set to 20; the dependence of \(\textrm{Cor}(D)\) on D is shown in the Supplementary Information. Note that, in this work, the short-term memory capacity is defined from \(\textrm{Cor}(D)\) starting at \(D=1\), as can be seen in Eq. (13), whereas in other work29 the correlation coefficient for \(D=0\) is also included in the definition.
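The whole short-term-memory evaluation can be exercised end to end on a toy problem. In the sketch below, the physical reservoir is replaced by a hypothetical ideal shift register whose nodes hold the last `n_node` binary inputs; the first `d_max` inputs are discarded so that every delay uses the same rows; ordinary least squares is assumed for learning; and the capacity is taken as the sum of squared correlations from D = 1 to `d_max`. Because such a register stores \(b_{k}, \ldots, b_{k-4}\) exactly when `n_node` is 5, Cor(D) should be close to 1 for D = 1 to 4 and close to 0 beyond, giving a capacity close to 4.

```python
import numpy as np


def pearson(y, v):
    """Correlation coefficient written with averages, as for Cor(D)."""
    cov = np.mean(y * v) - y.mean() * v.mean()
    var_y = np.mean(y ** 2) - y.mean() ** 2
    var_v = np.mean(v ** 2) - v.mean() ** 2
    return cov / np.sqrt(var_y * var_v)


def shift_register_nodes(b, n_node):
    """Hypothetical ideal reservoir: node i holds b_{k-i}; the last column is the bias."""
    n = b.size
    u = np.zeros((n, n_node + 1))
    for i in range(n_node):
        u[i:, i] = b[: n - i]
    u[:, -1] = 1.0
    return u


def stm_capacity(nodes_fn, n_train, n_test, d_max=20, seed=0):
    """Sum of Cor(D)^2 over D = 1..d_max, with separate training and test inputs."""
    rng = np.random.default_rng(seed)
    b_train = rng.integers(0, 2, size=n_train).astype(float)
    b_test = rng.integers(0, 2, size=n_test).astype(float)
    u_train, u_test = nodes_fn(b_train), nodes_fn(b_test)
    # Discard the first d_max steps so that b_{k-D} exists for every delay.
    k_train = np.arange(d_max, n_train)
    k_test = np.arange(d_max, n_test)
    cor = np.empty(d_max)
    for d in range(1, d_max + 1):
        w, *_ = np.linalg.lstsq(u_train[k_train], b_train[k_train - d], rcond=None)
        v = u_test[k_test] @ w  # system output for the test inputs
        cor[d - 1] = pearson(b_test[k_test - d], v)
    return float(np.sum(cor ** 2)), cor


capacity, cor = stm_capacity(lambda b: shift_register_nodes(b, 5), n_train=2000, n_test=2000)
```

Replacing `nodes_fn` with virtual-neuron responses from a simulated or measured STO would give the quantity discussed in the text; the shift register only serves as a check with a known answer.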

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Petit-Watelot, S. et al. Commensurability and chaos in magnetic vortex oscillations. Nat. Phys. 8, 682–687 (2012).

Devolder, T. et al. Chaos in magnetic nanocontact vortex oscillators. Phys. Rev. Lett. 123, 147701 (2019).

Bondarenko, A. V., Holmgren, E., Li, Z. W., Ivanov, B. A. & Korenivski, V. Chaotic dynamics in spin-vortex pairs. Phys. Rev. B 99, 054402 (2019).

Montoya, E. A. et al. Magnetization reversal driven by low dimensional chaos in a nanoscale ferromagnet. Nat. Commun. 10, 543 (2019).

Williame, J., Accioly, A. D., Rontani, D., Sciamanna, M. & Kim, J.-V. Chaotic dynamics in a macrospin spin-torque nano-oscillator with delayed feedback. Appl. Phys. Lett. 114, 232405 (2019).

Yamaguchi, T. et al. Synchronization and chaos in a spin-torque oscillator with a perpendicularly magnetized free layer. Phys. Rev. B 100, 224422 (2019).

Taniguchi, T. et al. Chaos in nanomagnet via feedback current. Phys. Rev. B 100, 174425 (2019).

Taniguchi, T. Synchronized, periodic, and chaotic dynamics in spin torque oscillator with two free layers. J. Magn. Magn. Mater. 483, 281–292 (2019).

Kamimaki, A. et al. Chaos in spin-torque oscillator with feedback circuit. Phys. Rev. Res. 3, 043216 (2021).

Strogatz, S. H. Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering 1st edn. (Westview Press, Boulder, 2001).

Katine, J. A., Albert, F. J., Buhrman, R. A., Myers, E. B. & Ralph, D. C. Current-driven magnetization reversal and spin-wave excitations in Co/Cu/Co pillars. Phys. Rev. Lett. 84, 3149 (2000).

Kiselev, S. I. et al. Microwave oscillations of a nanomagnet driven by a spin-polarized current. Nature 425, 380 (2003).

Kubota, H. et al. Evaluation of spin-transfer switching in CoFeB/MgO/CoFeB magnetic tunnel junctions. Jpn. J. Appl. Phys. 44, L1237 (2005).

Tulapurkar, A. A. et al. Spin-torque diode effect in magnetic tunnel junctions. Nature 438, 339 (2005).

Kubota, H. et al. Quantitative measurement of voltage dependence of spin-transfer-torque in MgO-based magnetic tunnel junctions. Nat. Phys. 4, 37 (2008).

Sankey, J. C. et al. Measurement of the spin-transfer-torque vector in magnetic tunnel junctions. Nat. Phys. 4, 67 (2008).

Manjunath, G., Tiňo, P. & Jaeger, H. Theory of input driven dynamical systems. In ESANN 2012 proceedings (2012).

Torrejon, J. et al. Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428 (2017).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531 (2002).

Jaeger, H. & Haas, H. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science 304, 78 (2004).

Verstraeten, D., Schrauwen, B., D’Haene, M. & Stroobandt, D. An experimental unification of reservoir computing methods. Neural Netw. 20, 391 (2007).

Quinsat, M. et al. Injection locking of tunnel junction oscillators to a microwave current. Appl. Phys. Lett. 98, 182503 (2011).

Rippard, W., Pufall, M. & Kos, A. Time required to injection-lock spin torque nanoscale oscillators. Appl. Phys. Lett. 103, 182403 (2013).

Pikovsky, A., Rosenblum, M. & Kurths, J. Synchronization: A Universal Concept in Nonlinear Sciences 1st edn. (Cambridge University Press, Cambridge, 2003).

Pecora, L. M. & Carroll, T. L. Synchronization in chaotic systems. Phys. Rev. Lett. 64, 821 (1990).

Maritan, A. & Banavar, J. R. Chaos, noise, and synchronization. Phys. Rev. Lett. 72, 1451 (1994).

Rulkov, N., Sushchik, M. M., Tsimring, L. S. & Abarbanel, H. D. I. Generalized synchronization of chaos in directionally coupled chaotic systems. Phys. Rev. E 51, 903 (1995).

Crutchfield, J. P., Farmer, J. D. & Huberman, B. A. Fluctuations and simple chaotic dynamics. Phys. Rep. 92, 45 (1982).

Akashi, N. et al. Input-driven bifurcations and information processing capacity in spintronics reservoirs. Phys. Rev. Res. 2, 043303 (2020).

Bertschinger, N. & Natschläger, T. Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 16, 1413 (2004).

Nakayama, J., Kanno, K. & Uchida, A. Laser dynamical reservoir computing with consistency: An approach of a chaos mask signal. Opt. Express 24, 8679–8692 (2016).

Nakajima, K. Physical reservoir computing—An introductory perspective. Jpn. J. Appl. Phys. 59, 060501 (2020).

Nakajima, K. & Fischer, I. (eds) Reservoir Computing: Theory, Physical Implementations, and Applications (Springer, Singapore, 2021).

Yang, Z., Zhang, S. & Li, Y. C. Chaotic dynamics of spin-valve oscillators. Phys. Rev. Lett. 99, 134101 (2007).

Akashi, N. et al. A coupled spintronics neuromorphic approach for high-performance reservoir computing. Adv. Intell. Syst. 4, 2200123 (2022).

Taniguchi, T., Ogihara, A., Utsumi, Y. & Tsunegi, S. Spintronic reservoir computing without driving current or magnetic field. Sci. Rep. 12, 10627 (2022).

Kubota, T., Takahashi, H. & Nakajima, K. Unifying framework for information processing in stochastically driven dynamical systems. Phys. Rev. Res. 3, 043135 (2021).

Imai, Y., Tsunegi, S., Nakajima, K. & Taniguchi, T. Noise-induced synchronization of spin-torque oscillators. Phys. Rev. B 105, 224407 (2022).

Thiele, A. A. Steady-state motion of magnetic domains. Phys. Rev. Lett. 30, 230 (1973).

Guslienko, K. Y., Han, X. F., Keavney, D. J., Divan, R. & Bader, S. D. Magnetic vortex core dynamics in cylindrical ferromagnetic dots. Phys. Rev. Lett. 96, 067205 (2006).

Guslienko, K. Y. Low-frequency vortex dynamic susceptibility and relaxation in mesoscopic ferromagnetic dots. Appl. Phys. Lett. 89, 022510 (2006).

Ivanov, B. A. & Zaspel, C. E. Excitation of spin dynamics by spin-polarized current in vortex state magnetic disks. Phys. Rev. Lett. 99, 247208 (2007).

Khvalkovskiy, A. V., Grollier, J., Dussaux, A., Zvezdin, K. A. & Cros, V. Vortex oscillations induced by spin-polarized current in a magnetic nanopillar: Analytical versus micromagnetic calculations. Phys. Rev. B 80, 140401(R) (2009).

Guslienko, K. Y., Aranda, G. R. & Gonzalez, J. Spin torque and critical currents for magnetic vortex nano-oscillator in nanopillars. J. Phys. Conf. Ser. 292, 012006 (2011).

Dussaux, A. et al. Field dependence of spin-transfer-induced vortex dynamics in the nonlinear regime. Phys. Rev. B 86, 014402 (2012).

Grimaldi, E. et al. Response to noise of a vortex based spin transfer nano-oscillator. Phys. Rev. B 89, 104404 (2014).

Tsunegi, S. et al. Control of the stochastic response of magnetization dynamics in spin-torque oscillator through radio-frequency magnetic fields. Sci. Rep. 11, 16285 (2021).

Yamaguchi, T. et al. Periodic structure of memory function in spintronics reservoir with feedback current. Phys. Rev. Res. 2, 023389 (2020).

Tsunegi, S. et al. Physical reservoir computing based on spin torque oscillator with forced synchronization. Appl. Phys. Lett. 114, 164101 (2019).

Tsunegi, S. et al. Evaluation of memory capacity of spin torque oscillator for recurrent neural networks. Jpn. J. Appl. Phys. 57, 120307 (2018).

Mainen, Z. F. & Sejnowski, T. J. Reliability of spike timing in neocortical neurons. Science 268, 1503 (1995).

Toral, R., Mirasso, C. R., Hernández-García, E. & Piro, O. Analytical and numerical studies of noise-induced synchronization of chaotic systems. Chaos 11, 655 (2001).

Teramae, J. N. & Tanaka, D. Robustness of the noise-induced phase synchronization in a general class of limit cycle oscillators. Phys. Rev. Lett. 93, 204103 (2004).

Goldobin, D. S. & Pikovsky, A. Synchronization and desynchronization of self-sustained oscillators by common noise. Phys. Rev. E 71, 045201(R) (2005).

Nakao, H., Arai, K. & Kawamura, Y. Noise-induced synchronization and clustering in ensembles of uncoupled limit-cycle oscillators. Phys. Rev. Lett. 98, 184101 (2007).

Alligood, K. T., Sauer, T. D. & Yorke, J. A. Chaos: An Introduction to Dynamical Systems (Springer, New York, 1997).

Sano, M. & Sawada, Y. Measurement of the Lyapunov spectrum from a chaotic time series. Phys. Rev. Lett. 55, 1082 (1985).

Rosenstein, M. T., Collins, J. J. & De Luca, C. J. A practical method for calculating largest Lyapunov exponents from small data sets. Physica D 65, 117 (1993).

Kantz, H. A robust method to estimate the maximal Lyapunov exponent of a time series. Phys. Lett. A 185, 77 (1994).

Brown, W. F. Jr. Thermal fluctuations of a single-domain particle. Phys. Rev. 130, 1677 (1963).

Barahona, M. & Poon, C.-S. Detection of nonlinear dynamics in short, noisy time series. Nature 381, 215–217 (1996).

Poon, C.-S. & Barahona, M. Titration of chaos with added noise. Proc. Natl. Acad. Sci. U.S.A. 98, 7107 (2001).

Yamaguchi, T. et al. Step-like dependence of memory function on pulse width in spintronics reservoir computing. Sci. Rep. 10, 19536 (2020).

Aihara, K., Takabe, T. & Toyoda, M. Chaotic neural networks. Phys. Lett. A 144, 333 (1990).

Suto, H. et al. Subnanosecond microwave-assisted magnetization switching in a circularly polarized microwave magnetic field. Appl. Phys. Lett. 110, 262403 (2017).

Shimada, I. & Nagashima, T. A numerical approach to ergodic problem of dissipative dynamical systems. Prog. Theor. Phys. 61, 1605 (1979).

Taniguchi, T. Synchronization and chaos in spin torque oscillator with two free layers. AIP Adv. 10, 015112 (2020).

Dambre, J., Verstraeten, D., Schrauwen, B. & Massar, S. Information processing capacity of dynamical systems. Sci. Rep. 2, 514 (2012).

Acknowledgements

Y.I. and T.T. are grateful to Shingo Tamaru for his valuable discussion on the energy efficiency of pulse-signal injection. This paper is based on results obtained in a project (Innovative AI Chips and Next-Generation Computing Technology Development/(2) Development of next-generation computing technologies/Exploration of Neuromorphic Dynamics towards Future Symbiotic Society) commissioned by NEDO. The work is also supported by JSPS KAKENHI Grant Number 20H05655.

Author information

Contributions

Y.I. and T.T. designed the project with help from K.N. and S.T. K.N. supported the statistical analysis of Y.I. and T.T. Y.I. and T.T. developed the codes, performed the simulations, wrote the manuscript and prepared the figures. All authors contributed to discussing the results.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Imai, Y., Nakajima, K., Tsunegi, S. et al. Input-driven chaotic dynamics in vortex spin-torque oscillator. Sci Rep 12, 21651 (2022). https://doi.org/10.1038/s41598-022-26018-z

DOI: https://doi.org/10.1038/s41598-022-26018-z