Abstract

Damage mechanism identification has scientific and practical ramifications for the structural health monitoring, design, and application of composite systems. Recent advances in machine learning uncover pathways to identify the waveform-damage mechanism relationship in higher-dimensional spaces for a comprehensive understanding of damage evolution. This review evaluates the state of the field, beginning with a physics-based understanding of acoustic emission waveform feature extraction, followed by a detailed overview of waveform clustering, labeling, and error analysis strategies. Fundamental requirements for damage mechanism identification in any machine learning framework, including those currently in use, under development, and yet to be explored, are discussed.

Introduction

Thousands of years predating the wheel and written language, potters across the world, from the Fertile Crescent to eastern Asia, listened for sounds emanating from ceramics during cooling as a prognosis of structural integrity1,2. At the start of the Bronze Age in 3000 BC, smelters in Asia Minor documented the “tin cry,” a characteristic crackling produced during forming, to guide tin mining and alloy processing3. By the eighth century, the Persian alchemist Jabir ibn Hayyan reported on the sounds emitted by Venus (copper) and Mars (iron) as part of a treatise on seven metals; his work contributed to alchemic theories about metallic composition that were widely accepted for a millennium4,5.

We now call these phenomena acoustic emission (AE): the release of accumulated strain energy from material displacement in the form of elastic waves6. While most historical documents report damage activity audible to humans, AE has been captured from damage sources down to the order of nanometers in size. For example, AE has been used to indicate the formation of polar nanoregions in relaxor ferroelectrics7. In practice, the AE technique is used to triangulate damage source locations6, identify areas of concern in the material bulk8, and evaluate the severity of incurred damage9.

An outpouring of AE research emerged in the 1980s10,11,12, but it was limited by the experimental hardware and data storage of the time. The narrow frequency response of resonant transducers forced waves to be recorded as damped sine waves, losing detailed frequency (and therefore time-domain) resolution. Moreover, storage and memory constraints mandated that waves be preserved in terms of time-domain parameters such as first peak amplitude, energy, and duration (Table 1), which resulted in further information loss6. The development of broadband transducers combined with advances in computer hardware during the 1990s enabled researchers to record the full frequency spectrum of a signal and digitally store the waveform. This method, known as modal AE, is now standard practice6,13,14.

Armed with improved waveform resolution in the time and frequency domains, researchers could discern previously obfuscated differences between waveforms. A new era of AE studies began, aimed at evaluating the chronology of damage progression by leveraging a range of specimen geometries14,15,16, architectural and processing choices17, and loading conditions18.

These efforts laid the foundation for a hypothesis that we are still wrestling with today: that there are features encoded in AE signatures that can be used to discriminate between damage modes. The ability to discriminate damage modes from AE information has the potential to revolutionize non-destructive structural health monitoring tools for scientific investigations and practical applications19. Resolving the local damage chronology would shed light on how processing and microstructural landscapes are tied to the failure response of structural materials. A detailed understanding of the damage accumulation and failure envelope would significantly broaden the potential application space for structural materials of more complex geometries20,21,22,23,24. As such, high-fidelity damage mode identification of AE signals has become a core objective of modern AE investigations.

Initial efforts in damage mode identification used only one or a few features of the waveform to describe the emitting damage mode25,26,27. While these low-dimensional representations are useful for indicating broader trends in damage mode shifts, they obstruct the high-fidelity characterization of signals. It is well known that certain waveform features, such as peak frequency, are affected by propagation pathways28,29,30 and difficult-to-control experimental factors31. These effects are embedded in the received waveforms and propagate into subsequent analyses; thus, they lower the confidence with which a damage mode can be assigned to any one waveform. In general, many features can be used to describe a waveform (Table 1); such features are complex and often interdependent. Furthermore, while one waveform feature may be an obvious discriminator between some mechanisms, this may not be the case for all mechanisms9,27. Therefore, multivariate (high-dimensional) analyses are necessary to explore relationships between features and tie them to the related damage mode.

Historically, the AE community has used the well-established k-means algorithm for such multivariate analyses32,33,34,35. Although k-means is in general a robust and repeatable algorithm with many applications, it is not necessarily well-suited to AE data. K-means finds isotropic clusters, and so it is best suited for data that has this structure36; it is unclear if AE data abide by this constraint, as up to this point there has been limited analysis of the validity of partitions of AE data by k-means.

The recent, rapid development of machine learning (ML) tools, also termed pattern recognition tools, has greatly increased the range of data structures that can be analyzed36,37. With pre-packaged ML algorithms38 now readily available, the AE community has easy access to analytical pathways for high-dimensional AE representations in the identification of waveform-damage mode relationships. Despite the growing abundance of available statistical tools, the majority of analyses are still limited to specific, well-understood techniques. Therefore, a discussion of canonical and emerging approaches, their strengths and weaknesses, and their applicability to the outstanding question of damage source identification via AE is needed.

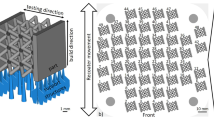

In this review, we discuss current ML efforts to identify damage modes based on their AE signatures. We restrict our discussion to the analysis of composites as a model material system, as their multi-phase structures host distinct damage mechanisms well suited for study by AE (Fig. 1a). We begin with a description of wave propagation physics in solids as it relates to ML. We follow with an overview of how waveforms are represented in feature space (an example of which is shown in Fig. 1b) and subsequently relate feature choice to the current understanding of wave propagation. Then, we highlight clustering algorithms that have been employed to distinguish source mechanisms (the outcome of which is shown schematically in Fig. 1c) and provide guidelines for effective use. Finally, we review prior efforts that have utilized the aforementioned tools and propose avenues for future work.

Fig. 1. a When damage occurs in a composite, accumulated strain energy from various damage mechanisms is released as AE into the surrounding medium until it is recorded by broadband sensors. b The recorded waveforms are described with features hypothesized to encode information about the source damage mechanism. c Machine learning uses these features to partition waveforms, which can then be labeled according to their source damage mechanism.

Wave propagation in solid materials

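Historically, researchers have used Lamb’s homogeneous equations (a special case of the wave equation) to understand AE phenomena13,39, where it is assumed that acoustic waves are confined to a thin plate of thickness h. This model has been useful for engineering applications as many modern manufactured composites typically follow this constraint40. When waves are modeled this way, the predicted out-of-plane flexural (w) and in-plane extensional (u0, v0) displacements are governed by:

\[ D\,\nabla^{4} w + \rho h \frac{\partial^{2} w}{\partial t^{2}} = 0 \]

\[ A\left(\frac{\partial^{2} u_{0}}{\partial x^{2}} + \frac{1-\nu}{2}\frac{\partial^{2} u_{0}}{\partial y^{2}} + \frac{1+\nu}{2}\frac{\partial^{2} v_{0}}{\partial x\,\partial y}\right) = \rho h \frac{\partial^{2} u_{0}}{\partial t^{2}} \]

\[ A\left(\frac{\partial^{2} v_{0}}{\partial y^{2}} + \frac{1-\nu}{2}\frac{\partial^{2} v_{0}}{\partial x^{2}} + \frac{1+\nu}{2}\frac{\partial^{2} u_{0}}{\partial x\,\partial y}\right) = \rho h \frac{\partial^{2} v_{0}}{\partial t^{2}} \]

with constants A and D (the bending stiffness):

\[ A = \frac{E h}{1-\nu^{2}}, \qquad D = \frac{E h^{3}}{12\left(1-\nu^{2}\right)} \]

where ρ is the density, E is the elastic modulus, ν is the Poisson ratio, x and y are the in-plane coordinates, and t is time.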

While this model is a powerful tool for predicting the basic properties of elastic waves in solids, the assumptions of elastic isotropy and material homogeneity in this model are often violated, which prevents its use for damage mode discrimination. The microstructure (e.g., pores, grain boundaries, and architectural heterogeneities) affects density and introduces scattering sites14,15,41,42,43.

Current efforts model AE through a process known as forward modeling, wherein dynamic equilibrium equations are solved via finite element method (FEM) calculations44,45,46,47,48,49,50. In these studies, a wave (or a damage event that emits a wave) is simulated and modeled as it propagates through the material. The wave is then passed through a set of filters to mimic the sensor response to the received wave.

Forward modeling allows researchers to explore the effects of microstructural heterogeneities and determine potentially useful waveform characteristics, without experimental evidence. Sause and Horn demonstrated differences between damage modes in the time-frequency domain with an FEM model of a carbon fiber-reinforced composite48. Their model produced frequency spectra in good agreement with their concurrent experiments and supported the claim that changes to power carried by a frequency band are minimal in the near field48,51. This suggests that partial power (Table 1) is a salient feature.

Other researchers have employed forward modeling to evaluate the efficacy of commonly used AE features in the identification of damage modes52,53,54,55. Gall et al. showed that in the absence of defect structures, rise time, amplitude, and energy scatter are dependent on source-to-sensor distance, while partial power is not53. Similarly, Aggelis et al. used numerical simulations to show that RA values (Table 1) increase with increasing source-to-sensor distance54 and provide evidence that the density of scattering interfaces distorts the waveform in the time-domain.

In general, forward modeling studies guide us to two complementary conclusions: (i) if there is information encoded in AE that enables damage mode discrimination, it likely exists within the frequency domain; and (ii) the high degree of perturbation imposed on time-domain features by experimental factors likely renders them inadequate for differentiating source mechanisms. These deductions highlight the necessity for determining salient features for ML applications, where the accuracy of the output is directly dependent on the quality of feature choice.

Machine learning

ML describes algorithms that learn from input data56. Broadly speaking, ML tasks are categorized as clustering (the grouping of similar objects), regression (model selection), or forecasting (prediction of future events). An ML algorithm accomplishes these tasks in either an unsupervised or a supervised manner, depending on the input information. In unsupervised algorithms, raw data are supplied, and a model is generated57,58,59. In contrast, supervised algorithms build a model based on a ground-truth data set and subsequently interpret new data according to this model36,37,60. As ground-truth data sets rarely exist (exceptions are detailed in the section “Toward improved AE-ML frameworks”), researchers are constrained to unsupervised clustering methods for categorizing AE data, whose workflow is as follows37:

1. Experimentation: a number, n, of observations are collected
2. Feature extraction: observations are vectorized by extracting d pertinent features (i.e., each waveform is represented by a d-dimensional vector)
3. Metrics and transformations: a metric or similarity function is chosen and the set of feature vectors undergoes an optional transformation
4. Machine learning algorithm: an algorithm is selected and waveforms are clustered
5. Error analysis: post-clustering analysis is performed to assess the validity of results

The following discussion focuses on steps 2–4. A general overview of how AE data are obtained (step 1) is provided in ref. 6 and a description of error quantification (step 5) is given in the section “Toward improved AE-ML frameworks.”

The authors note that clustering tasks are also referred to as pattern recognition tasks60,61; the terms are equivalent, and here we use the term clustering for consistency.

Feature extraction

The accuracy of a clustering algorithm is foremost contingent on feature choice (i.e., objects must be represented by features which are salient in order to find meaningful partitions). To illustrate this, consider a researcher who aims to differentiate a set of ducks and cats (Fig. 2a). The researcher could represent each animal by color, resulting in the partition in Fig. 2b, or by their ability to fly (Fig. 2c). While both partitions are valid, only Fig. 2c is a useful result if the researcher aimed to differentiate species.

This example is formalized by Watanabe’s ugly duckling theorem, which states that any two objects are equally similar unless a bias is applied when clustered37,62. A corollary of this theorem is that, when described by improper features, it is possible to obtain compact and well-separated clusters that do not represent desired categories. Without salient feature representation, researchers will incur a substantial loss in discriminating power.

Historically, AE waveforms have been represented by parameters in the time domain (Fig. 3a), the frequency domain, or by composite values comprised of two or more base values13,28,63,64,65,66,67,68,69,70,71,72,73,74. Table 1 lists common time domain, frequency, and composite features, where x(t) is the measured signal and F[*] is the Fourier transform operator. The predominance of time domain features appears to be for historical reasons, as AE was originally stored in terms of these parameters; today, these parameters are readily extractable either through commercial AE software or in-house programs.
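As a rough illustration of how parameter features can be pulled from a digitized waveform, the sketch below computes a few time-domain quantities with NumPy. The threshold-crossing definitions, the function name `time_domain_features`, and the synthetic burst are assumptions made for illustration; they follow common AE conventions and may differ in detail from the definitions in Table 1 or from those used by commercial software.

```python
import numpy as np

def time_domain_features(x, fs, threshold):
    """Threshold-based AE parameters for one waveform (illustrative definitions)."""
    x = np.asarray(x, dtype=float)
    above = np.flatnonzero(np.abs(x) >= threshold)
    if above.size == 0:
        raise ValueError("signal never crosses the threshold")
    first, last = above[0], above[-1]          # first and last threshold crossings
    peak_idx = int(np.argmax(np.abs(x)))
    peak_amplitude = float(np.abs(x[peak_idx]))
    rise_time = (peak_idx - first) / fs        # first crossing to peak, in seconds
    duration = (last - first) / fs             # first to last crossing, in seconds
    energy = float(np.sum(x[first:last + 1] ** 2) / fs)
    return {
        "peak_amplitude": peak_amplitude,
        "rise_time": rise_time,
        "duration": duration,
        "energy": energy,
        "ra_value": rise_time / peak_amplitude,  # composite feature: rise time / amplitude
    }

# Synthetic burst (assumed shape): a 150 kHz tone under a rising-then-decaying envelope.
fs = 1.0e6
t = np.arange(2000) / fs
t0, tau = 200e-6, 100e-6
shifted = np.clip(t - t0, 0.0, None)
burst = shifted * np.exp(-shifted / tau) * np.sin(2 * np.pi * 150e3 * shifted)
burst /= np.max(np.abs(burst))

features = time_domain_features(burst, fs, threshold=0.1)
```

Note that rise time, duration, and the RA value all depend on where the threshold is first crossed, which is why scatter in the time-of-arrival carries over directly into these features.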

Fig. 3. a Parameter features are readily extracted by means of commercial or in-house software. b The continuous wavelet transform is applied to the signal using Eq. (6). These CWT coefficients are then used as input to the feature vector. c Wavelet packet decomposition takes the original signal and down-samples it into \(2^{j}\) subsignals, each containing frequency content according to Eq. (7). The energy contained in each of these subsignals is then used as features. d Features from the marginal Hilbert spectrum are extracted. The frequency centroid of each IMF is used in this example. Panel b is adapted from ref. 79 with permission from Elsevier. Panel c is adapted from ref. 83 with permission from Elsevier. Panel d is adapted from ref. 89 with permission from Elsevier.

Despite their prevalence, the efficacy of certain traditional features is unclear. For example, many features in the time domain are dependent on the signal time-of-arrival (ToA) (e.g., signal duration and RA value). If parameters derived from ToAs have large scatter for the same damage source, then this will negatively impact the ability to discriminate between damage modes. This is evidenced by forward modeling studies, which cast doubt on the salience of time domain features and instead advocate for the use of frequency features.

While there is agreement that frequency features should be used as inputs to ML, AE waveforms are non-stationary, meaning that their frequency content changes in time, and the optimal way to extract frequency information remains unclear. The fast Fourier transform (FFT) is typically used to extract frequency information, but systematically misrepresents frequency content for non-stationary waves75. For this reason, researchers have espoused time-frequency representations45,76,77,78,79,80.
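For reference, a minimal sketch of FFT-based feature extraction is given below. It computes peak frequency, frequency centroid, and partial power (the fraction of spectral power inside a band) from the power spectrum. The function name, the band limits, and the two-tone test signal are assumptions for illustration, and because the FFT treats the whole record as stationary, the caveat above applies to every value it returns.

```python
import numpy as np

def frequency_features(x, fs, band):
    """Peak frequency, frequency centroid and partial power from the FFT power spectrum."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    total = power.sum()
    peak_frequency = float(freqs[np.argmax(power)])
    centroid = float(np.sum(freqs * power) / total)
    low, high = band
    in_band = (freqs >= low) & (freqs < high)
    partial_power = float(power[in_band].sum() / total)
    return peak_frequency, centroid, partial_power

# Two stationary tones (assumed test signal): 100 kHz at unit amplitude, 300 kHz at half amplitude.
fs = 1.0e6
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 100e3 * t) + 0.5 * np.sin(2 * np.pi * 300e3 * t)

peak, centroid, partial_power = frequency_features(x, fs, band=(50e3, 150e3))
```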

The continuous wavelet transform (CWT) is a popular time-frequency representation for inspecting non-stationary signals, whose frequency content changes in time (Fig. 3b). It can be used for either qualitative AE analyses45,76,81 or quantitative characterization79,82. The CWT is described by:

\[ \mathrm{CWT}(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} x(t)\, \phi^{*}\!\left(\frac{t-b}{a}\right) dt \qquad (6) \]

where ϕ* is the complex conjugate of the mother wavelet ϕ (a function satisfying certain criteria79), a is the scale parameter corresponding to a pseudo-frequency, and b is the translation parameter. Common choices for ϕ include the Daubechies wavelet and the Morlet wavelet79,83.

Eq. (6) is a convolution calculation; however, when a and b are considered fixed, Eq. (6) is the inner product of the time series with the mother wavelet. This leads to an intuitive interpretation; high values of the CWT at time b indicate a large frequency content corresponding to a. The authors are aware of only one method to extract features with the CWT: calculate all desired values of CWT(a, b) and represent them as a column vector79,84. For example, if an AE signal has 1000 time domain samples, CWT(a, b) can be calculated for N values of frequency, resulting in a feature vector whose length is 1000 × N.
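The inner-product reading of the CWT can be made concrete with a short NumPy sketch. It assumes a complex Morlet mother wavelet with dimensionless centre frequency 6 and the usual 1/√a normalization; the helper names, the scale-to-frequency conversion, and the test tone are illustrative choices, and a wavelet library would normally replace the explicit loops.

```python
import numpy as np

OMEGA0 = 6.0  # assumed dimensionless centre frequency of the Morlet wavelet

def morlet(t):
    """Complex Morlet mother wavelet (assumed choice of mother wavelet)."""
    return np.pi ** -0.25 * np.exp(1j * OMEGA0 * t) * np.exp(-0.5 * t ** 2)

def cwt_coefficient(x, t, a, b):
    """CWT(a, b): inner product of x with the scaled, shifted, conjugated wavelet."""
    dt = t[1] - t[0]
    return np.sum(x * np.conj(morlet((t - b) / a))) * dt / np.sqrt(a)

def cwt_feature_vector(x, fs, frequencies):
    """Stack |CWT(a, b)| for every scale and every time sample into one vector."""
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size) / fs
    scales = OMEGA0 / (2.0 * np.pi * np.asarray(frequencies, dtype=float))
    coeffs = np.array([[cwt_coefficient(x, t, a, b) for b in t] for a in scales])
    return np.abs(coeffs).ravel()

fs = 1.0e6
t = np.arange(1000) / fs                 # 1000 time-domain samples, as in the text
x = np.sin(2 * np.pi * 100e3 * t)        # assumed test tone at 100 kHz
frequencies = [50e3, 100e3, 200e3]       # N = 3 pseudo-frequencies
features = cwt_feature_vector(x, fs, frequencies)
```

The resulting vector has 1000 × N entries, which illustrates how quickly this representation grows with the number of scales.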

An alternate method for interrogating non-stationary signals is the wavelet packet transform (WPT)83,85,86,87. The WPT is a set of decompositions of the original signal, x(0, 0). At each decomposition iteration, two down-sampled signals containing the original’s low-frequency (approximation) and high-frequency (detail) components are retrieved. The decomposition process continues on both subsignals until a specified decomposition level is reached. This is shown schematically in Fig. 3c.

A signal decomposed to level j will produce \(2^{j}\) waveforms, x(j, i), i ∈ [1, 2, . . . , \(2^{j}\)], with non-overlapping frequencies in each sub-signal86,88. The relative energies (the portion of the original signal’s energy contained within each down-sampled signal at the final decomposition level) are then extracted and used as features83,86,88.
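The decomposition and the relative-energy features can be sketched in a few lines. The example below uses the Haar filter pair (the simplest Daubechies wavelet) purely because it is easy to write out by hand; the choice of wavelet, the function names, and the test signals are assumptions, and the sub-signals are returned in filter-bank order rather than sorted by frequency.

```python
import numpy as np

def haar_split(x):
    """One decomposition step: low-pass (approximation) and high-pass (detail) halves."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)

def wpt_relative_energies(x, level):
    """Relative energy in each of the 2**level sub-signals of a Haar wavelet packet tree."""
    x = np.asarray(x, dtype=float)
    if x.size % (2 ** level) != 0:
        raise ValueError("signal length must be divisible by 2**level")
    nodes = [x]
    for _ in range(level):
        # Unlike the ordinary wavelet transform, both halves are split again.
        nodes = [child for node in nodes for child in haar_split(node)]
    energies = np.array([np.sum(node ** 2) for node in nodes])
    return energies / energies.sum()

rng = np.random.default_rng(0)
noise_energies = wpt_relative_energies(rng.normal(size=1024), level=3)
constant_energies = wpt_relative_energies(np.ones(64), level=3)
```

Because the Haar pair is orthonormal, the sub-signal energies sum to the energy of the original signal, so the relative energies form a feature vector of length \(2^{j}\) that sums to one.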

While the CWT and WPT are useful for inspection of non-stationary signals, these approaches decompose signals with a fixed set of basis functions that may not be representative of the underlying process. The Hilbert–Huang transform (HHT) addresses this issue by extracting the instantaneous frequencies and amplitudes using basis functions defined by the signal itself75,77,89. An HHT analysis consists of two steps: (i) empirical mode decomposition (EMD) of a signal into a set of intrinsic mode functions (IMFs), and (ii) a Hilbert transformation of the IMFs.

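In a well-behaved time series x(t) (symmetric about 0 with no superimposed waves), the Hilbert transform y(t) is defined as75,77:

\[ y(t) = \frac{1}{\pi}\,\mathrm{PV}\int_{-\infty}^{\infty} \frac{x(\tau)}{t-\tau}\, d\tau \]

where PV is the Cauchy principal value of the improper integral. From this, the analytic function z(t) is defined:

\[ z(t) = x(t) + i\,y(t) \]

This has a natural phasor representation:

\[ z(t) = a(t)\,e^{i\theta(t)}, \qquad a(t) = \sqrt{x(t)^{2} + y(t)^{2}}, \qquad \theta(t) = \arctan\!\left(\frac{y(t)}{x(t)}\right) \]

where a(t) is the instantaneous amplitude of x(t), and θ(t) is the instantaneous phase of x(t).

The instantaneous frequency ω(t) is then calculated:

\[ \omega(t) = \frac{d\theta(t)}{dt} \]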

In practice, x(t) is unlikely to be well behaved; however, EMD ensures that each IMF, ci, is. By EMD, the frequency content of the first IMF is the highest, and decreases monotonically with index i. For AE data, the lowest order IMFs isolate frequency information from the signal, thereby expelling statistical noise. An analogous approach is ensemble empirical mode decomposition (EEMD), which provides a superior level of robustness to EMD and should be used when computationally affordable75. The readers are referred to Huang et al.75 for details of executing E/EMD; in addition, there are available MATLAB88 and Python90,91 packages that readily perform both E/EMD and the Hilbert transform.
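For a signal that is already well behaved (a single IMF, for instance), the Hilbert-transform step can be sketched with NumPy alone. The analytic signal is built by zeroing the negative-frequency half of the FFT, which is the standard discrete construction; the function names and the 50 kHz test tone are assumptions, and the EMD step itself is not shown.

```python
import numpy as np

def analytic_signal(x):
    """Discrete analytic signal z = x + i*y, with y the Hilbert transform of x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0                       # keep the DC term once
    if n % 2 == 0:
        weights[n // 2] = 1.0              # keep the Nyquist term once
        weights[1:n // 2] = 2.0            # double the positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * weights)

def instantaneous_amplitude_frequency(x, fs):
    """Instantaneous amplitude a(t) and frequency (in Hz) from the analytic signal."""
    z = analytic_signal(x)
    amplitude = np.abs(z)
    phase = np.unwrap(np.angle(z))
    frequency = np.diff(phase) * fs / (2.0 * np.pi)   # finite-difference d(theta)/dt
    return amplitude, frequency

fs = 1.0e6
t = np.arange(1000) / fs
tone = np.cos(2 * np.pi * 50e3 * t)        # assumed stand-in for a single IMF
amplitude, frequency = instantaneous_amplitude_frequency(tone, fs)
```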

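After EMD, the Hilbert transform is applied to each IMF. For a given IMF, we can define:

\[ H_{i}(\omega, t) = \begin{cases} a_{i}(t), & \omega = \omega_{i}(t) \\ 0, & \text{otherwise} \end{cases} \]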

which assigns a time value and its related instantaneous frequency to the instantaneous amplitude of ci. The sum of all Hi is the Hilbert spectrum, which represents the extracted instantaneous frequency and amplitude from all ci77.

An alternative visualization of the Hilbert spectrum is the marginal Hilbert spectrum, defined as:

\[ h(\omega) = \int_{0}^{T} H(\omega, t)\, dt, \qquad H(\omega, t) = \sum_{i} H_{i}(\omega, t) \]

where T is the length of signal x(t) (Fig. 3d). The marginal Hilbert spectrum is the probability of finding an instantaneous frequency at any given point in the signal, which Huang et al. differentiated from the FFT, which represents the total energy persisting from a frequency throughout the signal77.

While the HHT has the potential to reveal hidden insights in the data, its applicability for feature extraction is uncertain89,92,93,94. Though previous work by Hamdi et al. suggested that HHT features could be used for unsupervised ML, their findings are inconclusive89. Their input data included labeled data from other studies95,96 that employed ML techniques unverified with experiments, as a basis for labeling their own data sets. In general, HHT properties are not as well characterized as the FFT, CWT, or WPT. For this reason, it has not been as widely adopted.

Metrics and transformations

Once features are extracted, a metric, or similarity function, d(*, *), can be used to define the degree of similarity between observations. In general, d must satisfy:

1. d(x, y) ≥ 0
2. d(x, y) = 0 ⇔ x = y
3. d(x, y) = d(y, x)
4. d(x, y) ≤ d(x, z) + d(z, y)

In some cases (e.g., an algorithm working on local neighborhoods), requirement 4 can be relaxed97.
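The four requirements can be checked numerically on a handful of sample points, which is a quick way to see why a candidate similarity function may not be a true metric. The sketch below is illustrative only: the function names are hypothetical, and passing on a finite sample does not prove that a function is a metric, whereas a single failure does disprove it.

```python
import itertools
import numpy as np

def failed_metric_requirements(dist, points, tol=1e-12):
    """Names of the metric requirements that fail on the given sample points."""
    failed = set()
    for x, y in itertools.product(points, repeat=2):
        dxy = dist(x, y)
        if dxy < -tol:
            failed.add("non-negativity")
        if (dxy <= tol) != bool(np.allclose(x, y)):
            failed.add("identity")
        if abs(dxy - dist(y, x)) > tol:
            failed.add("symmetry")
    for x, y, z in itertools.product(points, repeat=3):
        if dist(x, y) > dist(x, z) + dist(z, y) + tol:
            failed.add("triangle inequality")
    return failed

def euclidean(x, y):
    return float(np.linalg.norm(x - y))

def squared_euclidean(x, y):
    return float(np.sum((x - y) ** 2))

points = [np.array([0.0, 0.0]), np.array([1.0, 0.0]), np.array([2.0, 0.0])]
euclidean_failures = failed_metric_requirements(euclidean, points)
squared_failures = failed_metric_requirements(squared_euclidean, points)
```

Here the squared Euclidean distance satisfies the first three requirements but violates the triangle inequality on three collinear points, so it is a similarity function of the relaxed kind rather than a metric.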

The efficacy of a given metric is tied to the geometry of the input data, which manifests itself in the quality of the resulting partition37. For example, neighborhood-based distances are better suited for grouping nested circles than Euclidean distance97. While there is flexibility in choosing a metric, an alternative strategy is to pre-process feature vectors by a transformation36,37,60.

Principal component analysis (PCA) is a transformation used for decorrelation, a requirement for k-means, and for dimension reduction34,61,79,98. PCA is a linear and orthogonal change of basis calculation whereby the new coordinate system describes the directions of maximum variance. It transforms the ordered basis (e1, e2, . . . , ed) to a new basis, \(({{\bf{e}}}_{{\bf{1}}}^{\prime},{{\bf{e}}}_{{\bf{2}}}^{\prime},...,{{\bf{e}}}_{{\bf{d}}}^{\prime})\) with the property that \({{\bf{e}}}_{{\bf{1}}}^{\prime}\) is oriented along the direction of greatest variance, \({{\bf{e}}}_{{\bf{2}}}^{\prime}\) is oriented along the direction of second greatest variance, and so on, with a diagonal covariance matrix (i.e., no correlation).

In addition, when the feature vector \({\bf{v}}=\mathop{\sum }\nolimits_{i = 1}^{d}{a}_{i}{{\bf{e}}}_{{\bf{i}}}^{\prime}\) is projected to the q-dimensional subspace by dropping the last d − q basis vectors, the reconstruction error is minimized, allowing for data to be efficiently represented in a lower dimensional space.

It is worth noting that dimension reduction via PCA aims to decrease the computational load. However, the intrinsic computational load for AE data is often light, with few observations (≾10,000) and low-dimensionality (≾20), and runtimes for common ML algorithms tend not to exceed a few minutes. As such, the dimensionality reduction described above is not recommended, as information is lost.

However, PCA is still a useful transformation. Features with large variance tend to dominate clustering results37; it may therefore be desirable to perform PCA whitening, or sphering37,79,99. The whitening transformation is a re-scaling of the PCA axes such that the diagonal covariance matrix obtained by PCA is the identity matrix. Major programming languages offer packages to execute both PCA and whitening88,91,100. The reader is referred to MacGregor et al.101 for further details.
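A minimal NumPy sketch of PCA followed by whitening is shown below, assuming the features are stored as rows of a matrix. The function name, the small `eps` guard, and the synthetic correlated data are illustrative; library implementations expose the same operation through their own interfaces.

```python
import numpy as np

def pca_whiten(X, eps=1e-12):
    """Return PCA scores (decorrelated) and whitened scores (unit variance per axis)."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigh: covariance is symmetric
    order = np.argsort(eigvals)[::-1]            # sort axes by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    scores = centered @ eigvecs                  # change of basis: diagonal covariance
    whitened = scores / np.sqrt(eigvals + eps)   # re-scale each axis to unit variance
    return scores, whitened

# Synthetic correlated features with very different variances (assumed data).
rng = np.random.default_rng(0)
mixing = np.array([[3.0, 0.0, 0.0],
                   [1.0, 0.5, 0.0],
                   [0.2, 0.1, 0.05]])
X = rng.normal(size=(500, 3)) @ mixing
scores, whitened = pca_whiten(X)
```

No dimensions are dropped in this sketch, so the transformation only rotates and re-scales the data and does not discard information.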

In general, many transformations are available, but the business of choosing an appropriate one is tricky. Transformations alter the geometry of the input data so that clustering can be executed. However, a complete understanding of the transformation’s effect on the input data is contingent on a priori knowledge of the starting geometry. This is problematic, as AE data live in a high-dimensional space and can never be viewed in their natural setting. Modern projection techniques, described further in the section “Data visualization,” allow for estimation of the high-dimensional geometry.

Machine learning algorithms

After pre-processing, we arrive at the matter of choosing a clustering algorithm, which must complement the new geometry of the processed data. The authors stress that if an informed decision is not made at this juncture, an accurate partition cannot be achieved, even if the initial data are properly represented.

K-means is among the earliest clustering techniques and as such, it has positioned itself as the primary clustering method for AE applications. The k-means algorithm finds spherical clusters and is therefore best suited for clustering convex data36. However, it remains unknown if AE data obey this constraint, and thus whether k-means should be the default choice. To this end, a deeper understanding of the k-means algorithm is needed to properly leverage its strengths and interpret the efficacy of previous AE studies that have utilized it.

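The k-means algorithm seeks to partition a set of observations into k clusters by finding the minimum of the L2 loss function:

\[ L_{2} = \sum_{j=1}^{k} \; \sum_{\mathbf{x}_{i} \in \text{cluster } j} \left\lVert \mathbf{x}_{i} - \boldsymbol{\mu}_{j} \right\rVert^{2} \]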

where μj is the center of cluster j ∈ {1, 2, . . . , k} and xi are all data points associated with cluster j36,37,60. The process is outlined in Fig. 4a and works as follows:

1. k centroids are initialized
2. Feature vectors are labeled according to the closest centroid
3. The center of the labeled data is calculated, and the respective centroids are moved
4. Steps 2–3 are repeated until a stopping criterion is reached
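The four steps above can be sketched directly in NumPy. This is a bare-bones version for illustration: the random initialization from the data, the stopping rule (centroids no longer move), and the two-blob test data are assumptions, and production code would normally rely on a library implementation.

```python
import numpy as np

def kmeans(X, k, rng, max_iter=100):
    """Plain k-means; returns labels, centroids and the L2 loss of the final partition."""
    X = np.asarray(X, dtype=float)
    # Step 1: initialise k centroids at randomly chosen observations.
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        # Step 2: label each feature vector by its closest centroid.
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Step 3: move each centroid to the mean of its labelled points
        # (an empty cluster keeps its previous centroid).
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # Step 4: stop once the centroids no longer move.
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    loss = float(np.sum((X - centers[labels]) ** 2))
    return labels, centers, loss

# Two well-separated isotropic blobs (assumed test data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(50, 2)),
               rng.normal(10.0, 1.0, size=(50, 2))])
labels, centers, loss = kmeans(X, k=2, rng=rng)
```

The returned loss is the quantity to compare when the function is called repeatedly with different seeds.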

Fig. 4. a When clustering data with k-means, seed points are selected to represent the initial data, which are subsequently labeled (or colored) according to the closest seed point. The seeds are then moved to the centroid of the labeled data, and points are relabeled. This process is repeated until a user-defined stopping criterion is reached. b K-means often falls into local minima, resulting in sub-optimal partitions. An initialization with 15 centroids is shown, where two centroids have fallen into local minima (denoted with minuses) and are unable to move to their optimal position (denoted with pluses). c Although widely used, k-means is unsuited for clustering certain types of data. The performance of k-means, GMM, and spectral clustering is demonstrated on a Gaussian data set (row 1), an anisotropic data set (row 2), and a concentric circle data set (row 3). The performance of an ML algorithm is dependent on the data scale and geometry. Panel a is adapted from ref. 36 with permission from Elsevier. Panel b is adapted from ref. 59 under creative commons license. Panel c is generated from the scikit-learn toolbox38.

Because L2 is minimized iteratively from the starting centroids, with each step only ever decreasing the loss, final clusters are dependent on where centroids are initialized. For this reason, k-means tends to fall into local minima102, and for any given run of k-means there is likely a better partition to be found (Fig. 4b).

To avoid falling into such local minima, variants of k-means modify the initialization scheme65,95,103. Among those, the maxmin and k-means++ variants are the most robust59,91,102, as both initialize well-dispersed seeds. Maxmin selects a point for the first cluster center, and at each subsequent step, the next centroid is chosen as the point farthest from its nearest existing centroid. K-means++ selects subsequent seeds from a statistical distribution with farther distances being preferred102. Sibil et al. developed an approach using a genetic algorithm, where multiple runs of k-means are initialized, and the best results are cross-bred according to a set of user-defined rules103. While this genetic algorithm allows k-means to find an optimal solution with fewer restarts, appreciable gains in runtime or accuracy are not achieved by its use.
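Both seeding schemes are short enough to sketch in NumPy. The versions below are illustrative: the function names and the toy one-dimensional data are assumptions, maxmin is started from a user-chosen first point, and k-means++ is written in its usual form where the sampling probability is proportional to the squared distance to the nearest seed already chosen.

```python
import numpy as np

def maxmin_seeds(X, k, first=0):
    """Maxmin: each new seed is the point farthest from its nearest existing seed."""
    X = np.asarray(X, dtype=float)
    chosen = [first]
    nearest = np.linalg.norm(X - X[first], axis=1)
    for _ in range(k - 1):
        nxt = int(np.argmax(nearest))
        chosen.append(nxt)
        nearest = np.minimum(nearest, np.linalg.norm(X - X[nxt], axis=1))
    return X[chosen]

def kmeanspp_seeds(X, k, rng):
    """k-means++: sample new seeds with probability proportional to squared distance."""
    X = np.asarray(X, dtype=float)
    chosen = [int(rng.integers(len(X)))]
    nearest_sq = np.sum((X - X[chosen[0]]) ** 2, axis=1)
    for _ in range(k - 1):
        probs = nearest_sq / nearest_sq.sum()
        nxt = int(rng.choice(len(X), p=probs))
        chosen.append(nxt)
        nearest_sq = np.minimum(nearest_sq, np.sum((X - X[nxt]) ** 2, axis=1))
    return X[chosen]

X = np.array([[0.0], [1.0], [2.0], [10.0]])   # toy data for illustration
maxmin = maxmin_seeds(X, k=3, first=0)
plusplus = kmeanspp_seeds(X, k=3, rng=np.random.default_rng(0))
```

Maxmin is deterministic once the first point is fixed, whereas k-means++ remains random and therefore still benefits from restarts.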

An alternative to modifying the initialization scheme is to alter how centroids move after initialization. One such approach is fuzzy c-means (FCM), in which a feature vector (xj) is assigned a membership grade (ui) to cluster i such that ui(xj) ∈ [0, 1]. FCM updates centroids according to weighted (rather than absolute) membership, which reduces the tendency to fall into local minima37. Despite these benefits, it is unclear if FCM is superior to k-means96,104,105,106,107,108 due to difficulties in verifying cluster results.

Ultimately, the user can opt for another strategy altogether: restarting k-means multiple times and selecting the best run. When the number of clusters is low and data are poorly separated, as is often the case with AE data, this strategy yields acceptable results provided that the number of restarts is sufficiently large (>100)59. Given that clustering via k-means tends to have short runtimes, this is a feasible strategy.

However, none of the aforementioned techniques address the issue of data geometry. Therefore, alternative algorithms must be considered that are suited for larger ranges of data geometries at similar computational costs.

One such viable alternative to k-means found in the literature is the Gaussian mixture model (GMM)74. In contrast to k-means, GMMs find Gaussian distributions that model the input data and thus are well suited to clustering data sampled from Gaussian distributions. Moreover, they provide greater flexibility for clustering non-convex geometries and data with different scales (Fig. 4c).

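GMMs model input data as belonging to a set of d-dimensional Gaussian distributions and search for the set of related parameters58,109. The probability of finding feature vector xj ∈ X, where X is the set of feature vectors sampled, is:

\[ p(\mathbf{x}_{j} \mid \lambda) = \sum_{i=1}^{M} w_{i}\, g(\mathbf{x}_{j} \mid \boldsymbol{\mu}_{i}, \boldsymbol{\Sigma}_{i}) \]

where M is the number of component Gaussians, and wi is the weight associated with the multivariate Gaussian distribution:

\[ g(\mathbf{x}_{j} \mid \boldsymbol{\mu}_{i}, \boldsymbol{\Sigma}_{i}) = \frac{1}{(2\pi)^{d/2}\, \lvert \boldsymbol{\Sigma}_{i} \rvert^{1/2}} \exp\!\left[ -\frac{1}{2} (\mathbf{x}_{j} - \boldsymbol{\mu}_{i})^{\mathsf{T}} \boldsymbol{\Sigma}_{i}^{-1} (\mathbf{x}_{j} - \boldsymbol{\mu}_{i}) \right] \]

parameterized by covariance matrix Σi and mean μi. The weights are normalized such that their sum is unity. The Gaussian mixture is then completely described by the set of parameters:

\[ \lambda = \{ w_{i}, \boldsymbol{\mu}_{i}, \boldsymbol{\Sigma}_{i} \}, \qquad i = 1, \ldots, M \]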

The GMM searches for λ, which maximizes the likelihood of sampling X by way of the Expectation-Maximization (EM) algorithm110. Once EM reaches some stopping criterion, feature vectors are labeled according to the Gaussian that provides the highest likelihood of sampling it. Final GMM partitions are also sensitive to the initialization, and therefore multiple restarts are recommended. For an in-depth discussion of GMMs, the reader is referred to Reynolds et al.58.
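A compact NumPy sketch of EM for a full-covariance Gaussian mixture is given below. Several choices are assumptions made for illustration rather than part of the method as described: seeds are picked by a farthest-point rule and used for a hard initial assignment, a small `reg` term is added to each covariance for numerical stability, iteration stops when the log-likelihood gain falls below `tol`, and points are labeled by the weighted component density (the posterior responsibility).

```python
import numpy as np

def log_gaussian(X, mean, cov):
    """Log density of a d-dimensional Gaussian evaluated at each row of X."""
    d = X.shape[1]
    diff = X - mean
    mahalanobis = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + mahalanobis)

def farthest_point_seeds(X, M, rng):
    """Assumed initialisation: random first seed, then farthest-point selection."""
    idx = [int(rng.integers(len(X)))]
    nearest = np.linalg.norm(X - X[idx[0]], axis=1)
    for _ in range(M - 1):
        idx.append(int(np.argmax(nearest)))
        nearest = np.minimum(nearest, np.linalg.norm(X - X[idx[-1]], axis=1))
    return X[idx]

def fit_gmm(X, M, rng, n_iter=200, tol=1e-6, reg=1e-6):
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    seeds = farthest_point_seeds(X, M, rng)
    hard = np.linalg.norm(X[:, None, :] - seeds[None, :, :], axis=2).argmin(axis=1)
    resp = np.eye(M)[hard]                       # one-hot initial responsibilities
    prev = -np.inf
    for _ in range(n_iter):
        # M-step: weights, means and covariances from the current responsibilities.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / nk.sum()
        means = (resp.T @ X) / nk[:, None]
        covs = np.empty((M, d, d))
        for i in range(M):
            diff = X - means[i]
            covs[i] = (resp[:, i, None] * diff).T @ diff / nk[i] + reg * np.eye(d)
        # E-step: posterior probability of each component for each feature vector.
        log_p = np.stack([np.log(weights[i]) + log_gaussian(X, means[i], covs[i])
                          for i in range(M)], axis=1)
        log_norm = np.logaddexp.reduce(log_p, axis=1)
        resp = np.exp(log_p - log_norm[:, None])
        log_likelihood = float(log_norm.sum())
        if log_likelihood - prev < tol:
            break
        prev = log_likelihood
    return resp.argmax(axis=1), weights, means, covs, log_likelihood

# Two anisotropic clusters with different orientations (assumed test data).
rng = np.random.default_rng(0)
A = rng.normal(size=(150, 2)) * np.array([1.0, 0.3])
B = rng.normal(size=(150, 2)) * np.array([0.3, 1.0]) + np.array([10.0, 10.0])
labels, weights, means, covs, log_likelihood = fit_gmm(np.vstack([A, B]), M=2, rng=rng)
```

The returned log-likelihood is the natural score for choosing among restarts with different seeds.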

Hyperparameter selection

Once a suitable clustering algorithm has been chosen, a set of user input parameters known as hyperparameters must be specified. These control how the ML algorithm operates on the data and influence the final partition.

In unsupervised learning, a common hyperparameter is the number of clusters within the natural structure of data, which in practice, is uncertain. In AE literature this is analogous to identifying the number of unique damage modes that are discernible. We have previously described two hypotheses developed by the AE community: (i) any AE event is generated by only one damage mode and (ii) waveforms have distinct features corresponding to that damage mode. It then follows that the number of clusters should correspond with the number of detectable damage modes. However, if two or more damage modes have similar characteristics, experimental noise may mask the true source, in which case, the number of distinct clusters will be between one and the number of unique damage modes.

The above assumes that each AE signal is the result of only one damage mode, yet in practice, the AE-damage relationship is more nuanced. It has been experimentally shown in situ that more than one damage mechanism can be active within the timeframe of a single AE event9, such that in the case of a fiber-bridged matrix crack, the damage mechanisms of transverse matrix fracture, fiber debonding, and interfacial sliding all occur within a short period of time and are captured by a single AE signal. It is infeasible to deconvolute the contribution of each mechanism to the signal. Yet the critical features of that signal are the sum of the contributing features, and thus are dependent on the activity of each contributing mechanism. Future work must address whether multi-mode AE events fall into the cluster of the dominant damage mode, or into their own multi-mode cluster(s).

Ultimately, due to multi-mode damage mechanisms and noise masking, the true number of clusters is unknown. In addition, data projection methods are unsuitable for determining cluster number due to inherent information loss101,111,112. As a solution, researchers apply heuristic functions to measure intercluster/intracluster spread. All such functions operate on the premise that the optimal number of clusters yields the highest intercluster separation and lowest intracluster variance113,114,115,116,117,118,119, and the true number of clusters is estimated where the function is extremized.

The silhouette value (SV) is one of the most popular heuristic functions115. It has become a staple in unsupervised clustering because of its ease of use and natural graphical representation. For a feature vector xk, the SV is defined as:

\[ SV(x_k) = \frac{b(x_k) - a(x_k)}{\max\{ a(x_k), b(x_k) \}} \]

where a(xk) is the average distance of xk to all other objects in its own cluster, and b(xk) is the minimum, taken over all other clusters, of the average distance from xk to the objects in that cluster. While defined for a single feature vector, the SV of a partition is the average SV over all feature vectors; this is the value used to estimate the number of clusters. The SV is bounded such that SV ∈ [−1, 1], with 1 corresponding to perfectly compact clusters and negative values indicating feature vectors that lie closer to a neighboring cluster than to their own.
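The definition above can be sketched directly in NumPy. The function below is an illustrative implementation that assumes Euclidean distances and at least two clusters; the function name, the toy data, and the convention of assigning zero to singleton clusters are assumptions made for this example.

```python
import numpy as np

def silhouette_values(X, labels):
    """Per-vector silhouette values (Euclidean distance, >= 2 clusters)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Full pairwise distance matrix; adequate for small data sets.
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    clusters = np.unique(labels)
    sv = np.zeros(len(X))
    for k in range(len(X)):
        own = labels == labels[k]
        n_own = own.sum()
        if n_own == 1:
            continue  # assumed convention: a singleton cluster gets SV = 0
        # a: mean distance to the other members of the same cluster
        # (D[k, k] = 0, so dividing by n_own - 1 excludes the point itself).
        a = D[k, own].sum() / (n_own - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(D[k, labels == c].mean() for c in clusters if c != labels[k])
        sv[k] = (b - a) / max(a, b)
    return sv

# Toy example: two compact blobs, scored with a sensible and a poor partition.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.1, size=(20, 2)),
               rng.normal(5.0, 0.1, size=(20, 2))])
good = np.array([0] * 20 + [1] * 20)
poor = np.array([0, 1] * 20)

sv_good = silhouette_values(X, good).mean()
sv_poor = silhouette_values(X, poor).mean()
print(f"partition SV, good labels: {sv_good:.3f}")
print(f"partition SV, poor labels: {sv_poor:.3f}")
```

In practice the partition-level value would be computed for each candidate number of clusters and compared across candidates.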

The SV is applicable to evaluate clusters independent of their geometry; however, it is best suited for convex and spherical data. When non-convex data are clustered, other heuristic functions should be considered. In GMMs, both the Akaike information criterion (AIC) and Bayesian information criterion (BIC) are established model selection techniques91. It has been shown that AIC generally overestimates the true number of mixture components, whereas there is considerable evidence that BIC consistently selects the correct number120.
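A hedged sketch of model selection with these criteria is given below. It assumes scikit-learn's GaussianMixture, whose `bic` and `aic` methods return values for which lower is better; the three-blob synthetic data and the candidate range of one to six components are illustrative only.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Synthetic feature vectors drawn from three well-separated Gaussians.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
X = np.vstack([rng.normal(c, 1.0, size=(200, 2)) for c in centers])

candidates = range(1, 7)
bic, aic = [], []
for m in candidates:
    gmm = GaussianMixture(n_components=m, n_init=3, random_state=0).fit(X)
    bic.append(gmm.bic(X))  # lower is better
    aic.append(gmm.aic(X))  # lower is better

best_bic = candidates[int(np.argmin(bic))]
best_aic = candidates[int(np.argmin(aic))]
print("components selected by BIC:", best_bic)
print("components selected by AIC:", best_aic)
```

Because BIC penalizes each additional parameter more heavily than AIC for any reasonably sized data set, the number of components selected by BIC cannot exceed the number selected by AIC.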

The authors stress that heuristic functions are estimates. When possible, multiple heuristic functions should be used to corroborate one another. Finally, researchers cannot rely on heuristic functions to evaluate clustering success, as it is possible to obtain compact and well-separated clusters whose membership does not reflect damage modes.

Data visualization

In addition to quantitative assessments of partition quality, such as those described in the section “Hyperparameter selection,” it is often useful to visualize data to inspect the partition quality. The self-organizing map (SOM) is a type of neural network used for the visual assessment of partition quality through dimension reduction111. SOMs maintain their topology, meaning that nearby points in the reduced representation correspond to similar feature vectors. Unlike PCA, SOMs are non-linear and thereby better suited to visualize a wider variety of data geometries.

The SOM consists of a grid G of l × l nodes, hij. The grid architecture describes node connections and dictates which nodes move when the SOM is learning the structure of the input data. Each node also has an associated weight vector, wij, which can be considered the node’s position within the high-dimensional feature space. The SOM learns the structure of the input data as follows:

1. Weights wij are randomly initialized.

2. A random observation, xk, is selected (presented) and its distance from the high-dimensional embedding of node ij is calculated as:

\[ d_{ij} = \left\| x_k - w_{ij} \right\| \]

3. Step 2 is repeated for all nodes, and the closest node is identified by:

\[ h_{ij}^{*} = \underset{ij}{\arg\min} \; d_{ij} \]

4. The weight of node \({h}_{ij}^{* }\) is updated according to:

\[ w_{ij}(t+1) = w_{ij}(t) + \eta(t) \left[ x_k - w_{ij}(t) \right] \]

where t is the time step and η is the learning gain factor that starts at 1 and monotonically decreases with t.

5. The weights of nodes in the neighborhood of h* are updated according to Step 4, and t is incremented.

6. Steps 2–5 are repeated until all data points have been presented at least once.

Once completed, the high-dimensional data can be visualized by assigning a gray value to the 2D grid of the SOM according to the maximum distance of a node from its neighbors (Fig. 5a)121. Thus, the SOM effectively has two representations, a high-dimensional representation and a 3D representation (2D grid + 1D gray values). The strength of the SOM is rooted in the fact that the high-dimensional representation adapts to the structure of the feature vectors, while maintaining a meaningful 3D representation111.
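The training loop and the gray-value map described above can be sketched in NumPy as follows. This is an illustrative implementation under stated assumptions, not the procedure of any cited study: the Gaussian neighborhood function, the linear decay of the gain and neighborhood radius, the four-connected grid, and all names and default values are choices made for this example.

```python
import numpy as np

def train_som(X, grid=6, n_epochs=10, sigma0=2.0, seed=0):
    """Train an l x l SOM; returns weights of shape (grid, grid, d)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Step 1: random initial weights within the range of the data.
    W = rng.uniform(X.min(axis=0), X.max(axis=0), size=(grid, grid, d))
    rows, cols = np.indices((grid, grid))
    n_steps, t = n_epochs * n, 0
    for _ in range(n_epochs):
        # Step 6: every observation is presented once per epoch, in random order.
        for k in rng.permutation(n):
            frac = t / n_steps
            eta = 1.0 - frac                     # gain starts at 1 and decreases with t
            sigma = sigma0 * (1.0 - frac) + 0.5  # assumed shrinking neighborhood radius
            # Steps 2-3: distance from x_k to every node, then the closest node.
            dist = np.linalg.norm(W - X[k], axis=2)
            bi, bj = np.unravel_index(np.argmin(dist), dist.shape)
            # Steps 4-5: move the winner and its grid neighbors toward x_k,
            # weighted by an assumed Gaussian function of grid distance.
            g = np.exp(-((rows - bi) ** 2 + (cols - bj) ** 2) / (2.0 * sigma ** 2))
            W += eta * g[:, :, None] * (X[k] - W)
            t += 1
    return W

def gray_values(W):
    """Maximum feature-space distance of each node from its 4-connected neighbors."""
    g = W.shape[0]
    U = np.zeros((g, g))
    for i in range(g):
        for j in range(g):
            nbrs = [(i + di, j + dj) for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1))
                    if 0 <= i + di < g and 0 <= j + dj < g]
            U[i, j] = max(np.linalg.norm(W[i, j] - W[a, b]) for a, b in nbrs)
    return U

# Toy data: two blobs in a three-dimensional feature space.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, size=(100, 3)),
               rng.normal(5.0, 0.3, size=(100, 3))])
W = train_som(X)
U = gray_values(W)
# Mean distance from each observation to its closest node (quantization error).
qe = np.mean(np.min(
    np.linalg.norm(X[:, None, None, :] - W[None], axis=3).reshape(len(X), -1), axis=1))
print("quantization error:", round(float(qe), 3))
```

Large entries in `U` mark grid locations where neighboring nodes are far apart in feature space, which is where boundaries between clusters would be expected to appear in the gray-value image.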

Fig. 5. a The weight vectors of the SOM are initialized and then adapt to the structure of the input data in the high-dimensional feature space. The weight vectors are then represented by a grid in 2D, where each point on the grid is either assigned a gray value or a label. b The same set of 6000 handwritten digits is visualized using four common manifold learning techniques: t-SNE, Sammon mapping, Isomap, and local linear embedding. Only t-SNE maintains distinct clusters in the plane, thereby outperforming the other data visualization techniques. Panel a is adapted from ref. 95 with permission from Elsevier and from ref. 153 with permission from the Journal of Acoustic Emission154. Panel b is adapted from ref. 112 with permission from the authors.

Previously, AE studies have used SOMs to pre-process data by associating input data to the closest wij and clustering these weight vectors with k-means95,122,123. However, approximating points by their closest node can only reduce the amount of available information, thereby degrading the fidelity of k-means. For this reason, the authors recommend that data be clustered and subsequently visualized with an SOM, rather than clustering SOM nodes directly.

Another method for visualizing high-dimensional data is t-distributed stochastic neighbor embedding (t-SNE), which is a manifold learning algorithm. While the SOM projection loses geometric structure in the low-dimensional representation, the t-SNE projection retains a sense of the high-dimensional geometry112.

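At the highest level, t-SNE aims to maintain pairwise distances between feature vectors when mapping them from their high-dimensional representation, xi, to their low-dimensional representation, yi112. The fundamental assumption in t-SNE is that for a given feature vector, xi, all other points follow a Gaussian distribution with standard deviation σi centered at xi. The conditional probability of finding another feature vector xj is then:

\[ p_{j \mid i} = \frac{\exp\left( -\left\| x_i - x_j \right\|^{2} / 2\sigma_i^{2} \right)}{\sum_{k \neq i} \exp\left( -\left\| x_i - x_k \right\|^{2} / 2\sigma_i^{2} \right)} \]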

There does not exist an optimal value for σi that describes all data points. Instead, this is estimated with a hyperparameter termed perplexity, which is a measure of how much of the local structure is retained in the final low-dimensional map. As perplexity increases, local structure information is exchanged for global structure information112.
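To make the link between perplexity and σi concrete, the sketch below computes the Gaussian conditional probabilities for one feature vector and searches for the σi that yields a requested perplexity. It assumes the definition used by van der Maaten and Hinton, in which perplexity is 2 raised to the Shannon entropy (in bits) of the conditional distribution; the bisection bounds, tolerance, and function names are illustrative.

```python
import numpy as np

def conditional_probs(sq_dists, sigma):
    """Gaussian conditional probabilities for one point, given squared
    distances to all other points and a bandwidth sigma."""
    logits = -sq_dists / (2.0 * sigma ** 2)
    logits = logits - logits.max()  # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum()

def perplexity_of(p):
    """Perplexity = 2 ** (Shannon entropy in bits)."""
    nz = p[p > 0]
    return 2.0 ** (-np.sum(nz * np.log2(nz)))

def sigma_for_perplexity(sq_dists, target, tol=1e-4, max_iter=200):
    """Bisection on a log scale; perplexity grows monotonically with sigma."""
    lo, hi = 1e-10, 1e10
    mid = 1.0
    for _ in range(max_iter):
        mid = np.sqrt(lo * hi)
        perp = perplexity_of(conditional_probs(sq_dists, mid))
        if abs(perp - target) < tol:
            break
        if perp > target:
            hi = mid  # distribution too flat: shrink sigma
        else:
            lo = mid  # distribution too peaked: grow sigma
    return mid

# Toy example: bandwidth for the first of 50 random feature vectors.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
sq = np.sum((X[1:] - X[0]) ** 2, axis=1)
sigma_0 = sigma_for_perplexity(sq, target=10.0)
achieved = perplexity_of(conditional_probs(sq, sigma_0))
print(f"sigma = {sigma_0:.4f}, perplexity = {achieved:.4f}")
```

A larger requested perplexity yields a larger σi, so each point effectively weighs more neighbors, which is the trade of local for global structure described above.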

Pairwise probabilities in the high dimension are translated to similar pairwise probabilities in the low dimension, qj∣i. If the low-dimensional representation has correctly maintained the same high dimension pairwise distances, then pj∣i = qj∣i for all pairs i, j.

In contrast to the high-dimensional representation, where similarities are calculated according to a Gaussian distribution, pairwise similarities in the low dimension are calculated according to a Student t-distribution with a single degree of freedom. This alleviates the crowding problem in traditional SNE while permitting tractable runtimes.

Like PCA, t-SNE axes have no intrinsic meaning; only relative distances between points are valuable. Intercluster separation and cluster size also have no intrinsic meaning. Despite this, t-SNE is exceptionally powerful, as few user inputs are needed and it outperforms other manifold learning techniques such as Sammon mapping, Isomap, and local linear embedding124,125,126 (Fig. 5b). t-SNE is described in greater detail in van der Maaten and Hinton112 and a practical guide is provided by Wattenberg et al.127.
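A brief, hedged sketch of how such an embedding might be produced is shown below. It assumes scikit-learn's TSNE implementation; the synthetic three-cluster data and the two perplexity values are illustrative, and in practice several perplexities would be compared before drawing conclusions from any single map.

```python
import numpy as np
from sklearn.manifold import TSNE

# Synthetic stand-in for AE feature vectors: three clusters in ten dimensions.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(c, 0.3, size=(40, 10)) for c in (0.0, 3.0, 6.0)])
labels = np.repeat([0, 1, 2], 40)  # known here only because the data are synthetic

embeddings = {}
for perplexity in (5, 30):
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=0)
    embeddings[perplexity] = tsne.fit_transform(X)
    print(perplexity, embeddings[perplexity].shape)
```

The resulting coordinates would typically be plotted and colored by cluster label; only the relative arrangement of points in each map carries information.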

Toward improved AE-ML frameworks

Experimental, numerical, and statistical tools have all been leveraged to infer damage modes from AE. While the first two have provided valuable insights, there are still unknowns regarding experimental factors (e.g., effect of sensor coupling) and microstructural details (e.g., wave scattering in a complex microstructural landscape). This mandates the use of statistical tools provided by ML to relate what is known about AE to explore the waveform-mechanism relationship. In this section, we discuss advantages and limitations of previous studies and highlight issues that must be addressed moving forward.

While methodologies and findings in AE/ML studies differ within composites, there is consensus on the following: (i) damage modes are distinguishable by their AE signatures (Fig. 1), (ii) clusters can be discerned with unique activities, and (iii) robust methods for verifying results are needed.

The details of what features are deemed salient vary between investigations, but there is overwhelming agreement that they exist and are frequency based. Forward models of carbon/glass fiber-reinforced polymers show explicit differences in frequency characteristics of measured waveforms48,128, lending support to experimental efforts suggesting that damage modes can be distinguished by frequency17,18,26,27,33,129.

Clusters can be identified with reasonable degrees of separation based on heuristic functions34,65,68,70,130. This suggests that ML can efficiently partition AE signatures, although what the partitions represent remains uncertain. Despite variations in experimental configurations, material systems, and representation schemes, the number of clusters reported is consistently between three and six67,69,84,95,131. This agrees with the number of expected damage modes, suggesting that partitions are meaningful; few studies report more clusters than the feasible number of damage modes66.

The verification of ML results is a closed loop problem; the optimal feature representation and ML algorithm is not known a priori, motivating the need for manual verification that requires a priori knowledge of distinguishing features. As a result, strategies that rely on domain knowledge, such as the activity of damage in the stress domain, have been employed. The simplest verification method is correlation of average cluster frequency values (peak or centroid) to damage modes, or correlation of cluster activity to damage modes35,66,67,79,80,89,96,108,130. Some researchers have gone a step further and incorporated high-resolution postmortem techniques (namely, microscopy) to further justify cluster labels65,69,123,132,133,134,135.

More complex solutions involve the creation of AE libraries to serve as ground truth data sets32,68,136,137. This is a particularly powerful approach because once ground truth sets have been established, there is no difficulty in the interpretation of final results and refinement of the learning procedure becomes possible. One such method is to isolate one to two damage modes by using specialized sample geometries, and then leverage these cluster characteristics to label the damage modes in a more relevant geometry. For example, Godin et al. used AE from pure epoxy resin and single-fiber microcomposites to label the unsupervised results from unidirectional composites32. Another method of library creation is to test full-scale samples in ways that promote one damage mechanism. Gutkin et al. tested a variety of composite geometries under monotonic tension, compact tension, compact compression, double cantilever beam, and four-point end notch flexure tests68. Finally, libraries have been created by testing specimens with known damage modes and using secondary high-resolution in situ methods to assign labels to clusters. Tat et al. collected AE from open-hole tension samples in conjunction with x-ray radiography and DIC to characterize damage modes137. While such studies should elucidate the nature of the AE-mechanism relationship, there are caveats that limit their usefulness, as discussed below.

Despite progress toward the development of an AE/ML framework, there are outstanding issues in: (i) verification procedures, (ii) error quantification, and (iii) feature selection and algorithm choice, which obstruct continued advances.

Verification procedures are each accompanied by certain deficiencies. When cluster verification is limited to correlation of frequency values, there is often large experimental variance. We now know that singleton frequency values (e.g., peak, average, or centroid) are influenced by factors such as source-to-sensor distance and the state of damage accumulation; both affect waveform attenuation and the number of scattering sites29,30,54,61. When cluster activity is considered, there is often considerable overlap in ways that are not physically expected. For example, Lyu et al. concluded that all modes of damage in 0/90 woven Cf/SiC CMCs, including fiber bundle failure, are active from the beginning of a creep test. It is unclear if this is a real phenomenon, or if fewer damage modes are active and are instead being incorrectly distributed across all clusters138. Few studies show distinct differences in chronological cluster activation84,130,139,140. More complex fiber architectures often show simultaneous cluster activation; however, these findings have not been verified experimentally34,66,141,142. Furthermore, previously applied postmortem methods are insufficient for informing on damage chronology and can only provide information on what labels are available to be assigned. Finally, the library methods discussed train models with flawed data sets. They use unsupervised clustering results whose complete membership is unknown, rather than using reference data to directly train models. Preliminary results by Godin et al. suggest that the accuracy of such methods is low (≈55%)32.

There exists a natural marriage between error quantification and cluster verification. Robust procedures for error quantification exist and have been adapted for AE61. However, the ability to broadly implement them hinges on knowledge of ground truth. Although libraries have been developed to serve as ground truth data sets, they cannot be used to assess error because they lack one-to-one correlation between events and damage modes. Moreover, of the studies that employ these libraries32,84,87,103,136,143, there is no overlap between material systems or experimental configurations, and no framework currently exists to address these differences. This prevents meaningful comparison of the efficacy of different representation schemes and choice of ML algorithm.

The authors are only aware of one library, from Tat et al.137, that is a candidate for assessing error. However, Tat et al. opted to capture a single tomographic scan in the period of interest, limiting temporal resolution as well as the library’s utility. In addition, this study used an open-hole dogbone specimen geometry, which alters waveform characteristics144, preventing its extension to other geometries. More generally, a library built for a specific specimen geometry can only be used to classify waveforms from the same geometry. Moving forward, meaningful error quantification relies on the use of spatially and temporally resolved bulk damage observations in situ, which can then be used to assign labels to AE events. While this is experimentally challenging, it is the key to confirming that an ML framework is effective and for quantifying its accuracy.

Related to feature selection, significant experimental evidence17,18,26,33,145 and modeling efforts48,49,53,98,128 indicate that damage mechanisms exhibit distinguishable differences in frequency content. It then follows that waveforms should be represented by methods that encode frequency information, such as frequency parameters, CWT coefficients, and HHT spectra. However, the salient frequency features remain unknown because the nature of experimental frequency variations is in dispute29,30,54.
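As a simple illustration of frequency parameters, the sketch below extracts a peak frequency and a frequency centroid from a sampled waveform. It assumes a magnitude-weighted centroid computed from the one-sided FFT; the function name, the 5 MHz sampling rate, and the 200 kHz test tone are hypothetical values chosen for the example, and other studies may define these parameters differently (for example, using the power spectrum).

```python
import numpy as np

def frequency_features(waveform, fs):
    """Return (peak frequency, frequency centroid) in Hz."""
    x = np.asarray(waveform, dtype=float)
    x = x - x.mean()                       # remove any DC offset
    mag = np.abs(np.fft.rfft(x))           # one-sided magnitude spectrum
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    peak = freqs[np.argmax(mag)]
    centroid = np.sum(freqs * mag) / np.sum(mag)
    return peak, centroid

# Hypothetical test signal: a 200 kHz tone sampled at 5 MHz for 1000 points.
fs = 5.0e6
t = np.arange(1000) / fs
tone = np.sin(2.0 * np.pi * 200.0e3 * t)
peak_hz, centroid_hz = frequency_features(tone, fs)
print(f"peak = {peak_hz / 1e3:.1f} kHz, centroid = {centroid_hz / 1e3:.1f} kHz")
```

For a real AE waveform the two values generally differ, and both shift with propagation distance and accumulated damage, which is why single-valued frequency parameters alone are a fragile basis for labeling.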

Moreover, such features depend on the experimental configuration. Changes to the sample geometry affect waveform features, even when the dominant damage mode has not changed46,49,72,146. Ospitia et al. demonstrated that plate geometries exhibit lower frequencies for the same artificial excitations as compared to beam geometries, with similar trends observed in actual mechanical tests146. This difference was attributed to the unrestricted crack extension in the beam geometry compared with incremental extensions in the plate geometry. In addition, variations in propagation pathways distort acoustic waveforms resulting from a single event recorded at different sensors46,49,72,146. Hamstad showed, via FEA calculations, that excitations will produce lower frequencies corresponding to the flexural wave mode when the excitation is farther away from the midplane of the plate46; this has been experimentally verified29. Maillet et al. experimentally demonstrated that path lengths shift frequency centroids to lower values for larger source-to-sensor distances72. Thus, an event not emitted from the center of a specimen will have different frequency content depending on which sensor is used to analyze the wave. Finally, the sensor contact is also known to influence the frequency spectra of a signal147,148,149. Theobald et al. showed that the acoustic impedance matching between a couplant and the sensor significantly influences the recorded frequency spectra148. It is the authors’ opinion that future AE studies aiming to study point-by-point AE information across specimens must standardize methods for ensuring experimentally consistent sensor contact.

Because waveform features are tied to the experimental configuration (and for other historical reasons6), large numbers of features, including time domain features, are commonly employed (Table 1), with the expectation that the correct features are included. This leads to the use of many features whose physical basis is unclear. This practice has been observed to degrade the fidelity of clustering32,66,98,150.

Data geometry and spread are affected by the feature extraction process. Many common parameter features are highly correlated65,67, which implies that many composite features are also correlated. In some cases, the method of feature extraction introduces artificial variance, thereby increasing the intrinsic cluster spread. For example, signal onset time is often determined by hand or by use of a floating threshold66,68,87,143. While hand-selected signal onset time introduces less variance than threshold determined onset time72, both are artificial in the sense that there is no mathematical rigor involved. In contrast, the AIC method offers a mathematically consistent procedure, rooted in statistical theory, for extraction of signal onset time72,151. Although manual measurements are considered to be more accurate than AIC, it is time consuming to extract first peak times for large quantities of signals (>1000), which can introduce inconsistencies in analysis that are mitigated by the AIC approach. Moving forward, it should also be the case that other features are extracted in similarly consistent manners.
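A minimal sketch of an AIC-based onset picker is given below. It assumes a common variance-based formulation in which the waveform is split at each candidate index k and the function AIC(k) = k log(var(x[0:k])) + (N − k − 1) log(var(x[k:N])) is minimized; this may differ in detail from the implementations used in the cited studies, and the synthetic signal, noise level, and names are illustrative.

```python
import numpy as np

def aic_onset(signal):
    """Return (onset index, AIC curve) for a 1D waveform."""
    x = np.asarray(signal, dtype=float)
    n = x.size
    eps = np.finfo(float).tiny        # guards against log(0)
    aic = np.full(n, np.inf)
    for k in range(2, n - 2):
        v_before = np.var(x[:k])      # variance of the segment before the split
        v_after = np.var(x[k:])       # variance of the segment after the split
        aic[k] = k * np.log(v_before + eps) + (n - k - 1) * np.log(v_after + eps)
    return int(np.argmin(aic)), aic

# Synthetic waveform: low-level noise, then a decaying burst starting at sample 300.
rng = np.random.default_rng(0)
n, true_onset = 1000, 300
x = rng.normal(0.0, 0.01, size=n)
tt = np.arange(n - true_onset)
x[true_onset:] += np.exp(-tt / 150.0) * np.sin(2.0 * np.pi * 0.05 * tt)

onset, aic_curve = aic_onset(x)
print("picked onset index:", onset)
```

Because the pick is the minimum of a deterministic function of the waveform, repeated analyses of the same signal give the same onset, which is the consistency argued for above.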

Finally, while most investigators agree that broader categories of damage mechanisms can be distinguished32,48,130, it is unclear if more precise labels can be assigned34,67,138,152. For example, Moevus et al. claimed to discriminate three types of matrix cracking in woven composites67 based on cluster activity. More recently, Lyu et al. claimed to discriminate between single-fiber failures and fiber bundle failures but did not differentiate types of matrix cracks138. Meanwhile, Li et al. made no distinction between delamination and fiber failure34 in woven glass fiber composites. Furthermore, the lack of consensus when assigning more precise labels to damage modes is exacerbated by the uncertainty in whether feature differences are phenomenologically expected for these labels.

Conclusions

Recent advances in ML techniques have created an opportunity for high-fidelity labeling of single AE signals. Such a breakthrough would be seminal to our understanding of the structure-damage mode relationship at the length scale on which damage initiates. This clarity would enable an understanding of the local structural drivers on the damage response of composites. Ultimately, high-fidelity characterization would shed light on the critical microstructural features that, under thermo-mechanical loading, determine when and how the material will fail.

In this review, we summarize and discuss ML techniques that have been leveraged for damage mode identification in composites from their AE. We present an overview of the AE phenomenon and the full implementation of a general ML framework. We detail a variety of commonly used features and their extraction in practice. Specific considerations at each step of building an ML framework are identified such that the user maximizes the possibility of accurately clustering a set of AE waveforms. We end with an overview of the state of ML as it relates to AE in composite materials, discussing current agreements within the community and challenges that must be addressed before an AE-ML framework can be used to relate damage modes to microstructural parameters.

In reviewing AE, composites, and ML literature we draw the following conclusions:

1. There are encoded characteristics within the frequency domain of waveforms that relate AE to damage modes. This comes with the stipulation that singular frequency values should not be used alone, owing to their dependence on propagation pathways from damage sources.

2. It is often the case that features are used with no physical basis, and in some cases, features are extracted by arbitrary methods. This practice degrades the quality of partitions. Only physically meaningful features should be used, and their extraction should be mathematically consistent.

3. Previous efforts in AE-ML have explored different feature sets, yet have largely relied on k-means clustering. Future work should move beyond k-means, as it is only suited for a narrow set of data geometries.

4. There is limited work in verifying fidelity of cluster labels and membership. Progress in this field hinges on quantifying error, which is contingent on spatially and temporally resolved in situ observations of bulk damage accumulation.

Data availability

Data sharing not applicable to this article as no data sets were generated or analyzed during the current study.

References

Miller, R. K. Nondestructive Testing Handbook, 2nd edn: Vol. 6, Acoustic Emission Testing (American Society for Nondestructive Testing, 1987).

Rice, P. M. Pottery Analysis: A Sourcebook (University of Chicago Press, 2015).

Maddin, R., Wheeler, T. S. & Muhly, J. D. Tin in the ancient near east. Expedition 19, 35–47 (1977).

Haq, S. N. Names, Natures and Things: The Alchemist Jābir ibn Hayyān and his Kitāb al-Ahjār (Book of Stones) Vol. 158 (Springer Science & Business Media, 2012).

Norris, J. A. The mineral exhalation theory of metallogenesis in pre-modern mineral science. Ambix 53, 43–65 (2006).

Morscher, G. N. & Godin, N. Use of acoustic emission for ceramic matrix composites. In: Ceramic Matrix Composites: Materials, Modeling and Technology 1st edn (eds Bansal, N. P. & Lamon, J), 571–590 (John Wiley & Sons, Inc., 2015).

Roth, M., Mojaev, E., Dul’kin, E., Gemeiner, P. & Dkhil, B. Phase transition at a nanometer scale detected by acoustic emission within the cubic phase Pb(Zn1/3Nb2/3)O3-xPbTiO3 relaxor ferroelectrics. Phys. Rev. Lett. 98, 1–4 (2007).

Whitlow, T., Jones, E. & Przybyla, C. In-situ damage monitoring of a SiC/SiC ceramic matrix composite using acoustic emission and digital image correlation. Composite Struct. 158, 245–251 (2016).

Swaminathan, B. et al. Microscale characterization of damage accumulation in CMCs. J. Eur. Ceram. Soc. 41, 3082–3093 (2021).

Park, J. M. & Kim, H. C. The effects of attenuation and dispersion on the waveform analysis of acoustic emission. J. Phys. D: Appl. Phys. 22, 617–622 (1989).

Wadley, H. N., Scruby, C. B. & Speake, J. H. Acoustic emission for physical examination of metals. Int. Met. Rev. 25, 41–62 (1980).

Williams, J. H., Nayeb-Hashemi, H. & Lee, S. S. Ultrasonic attenuation and velocity in AS/3501-6 graphite fiber composite. J. Nondestructive Evaluation 1, 137–148 (1980).

Gorman, M. R. & Prosser, W. H. AE source orientation by plate wave analysis. J. Acoust. Emiss. 9, 283–288 (1991).

Prosser, W. H., Dorighi, J. & Gorman, M. R. Extensional and flexural waves in a thin-walled graphite/epoxy tube. J. Composite Mater. 26, 2016–2027 (1992).

Morscher, G. N., Martinez-Fernandez, J. & Purdy, M. J. Determination of interfacial properties using a single-fiber microcomposite test. J. Am. Ceram. Soc. 79, 1083–1091 (1996).

Ni, Q.-Q., Kurashiki, K. & Iwamoto, M. AE technique for identification of micro failure modes in CFRP composites. Mater. Sci. Res. Int. 7, 67–71 (2001).

Gorman, M. R. & Ziola, S. M. Plate waves produced by transverse matrix cracking. Ultrasonics 29, 245–251 (1991).

Surgeon, M. & Wevers, M. Modal analysis of acoustic emission signals from CFRP laminates. NDT E Int. 32, 311–322 (1999).

Evans, A. G. & Linzer, M. Failure prediction in structural ceramics using acoustic emission. J. Am. Ceram. Soc. 56, 575–581 (1973).

Brewer, D. HSR/EPM combustor materials development program. Mater. Sci. Eng. A 261, 284–291 (1999).

Morscher, G. N., Hurst, J. & Brewer, D. Intermediate-temperature stress rupture of a woven Hi-Nicalon, BN-interphase, SiC-matrix composite in air. J. Am. Ceram. Soc. 83, 1441–1449 (2000).

Glass, D. E. Ceramic matrix composite (CMC) thermal protection systems (TPS) and hot structures for hypersonic vehicles. In 15th AIAA International Space Planes and Hypersonic Systems and Technologies Conference, March 2007, 1–36 (2008).

Lee, W. E., Gilbert, M., Murphy, S. T. & Grimes, R. W. Opportunities for advanced ceramics and composites in the nuclear sector. J. Am. Ceram. Soc. 96, 2005–2030 (2013).

Zok, F. W. Ceramic-matrix composites enable revolutionary gains in turbine engine efficiency. J. Am. Ceram. Soc. 95, 22–28 (2016).

Barr, S. & Benzeggagh, M. L. On the use of acoustic emission to investigate damage mechanisms in glass-fibre-reinforced polypropylene. Compos. Sci. Technol. 52, 369–376 (1994).

de Groot, P. J., Wijnen, P. A. & Janssen, R. B. Real-time frequency determination of acoustic emission for different fracture mechanisms in carbon/epoxy composites. Compos. Sci. Technol. 55, 405–412 (1995).

Morscher, G. N. Modal acoustic emission of damage accumulation in a woven SiC/SiC composite. Compos. Sci. Technol. 59, 687–697 (1999).

Alchakra, W., Allaf, K. & Ville, J. M. Acoustical emission technique applied to the characterisation of brittle materials. Appl. Acoust. 52, 53–69 (1997).

Maillet, E., Baker, C., Morscher, G. N., Pujar, V. V. & Lemanski, J. R. Feasibility and limitations of damage identification in composite materials using acoustic emission. Compos. Part A: Appl. Sci. Manuf. 75, 77–83 (2015).

Oz, F. E., Ersoy, N. & Lomov, S. V. Do high frequency acoustic emission events always represent fibre failure in CFRP laminates? Compos. Part A: Appl. Sci. Manuf. 103, 230–235 (2017).

Maillet, E. et al. Analysis of acoustic emission energy release during static fatigue tests at intermediate temperatures on ceramic matrix composites: towards rupture time prediction. Compos. Sci. Technol. 72, 1001–1007 (2012).

Godin, N., Huguet, S., Gaertner, R. & Salmon, L. Clustering of acoustic emission signals collected during tensile tests on unidirectional glass/polyester composite using supervised and unsupervised classifiers. NDT E Int. 37, 253–264 (2004).

Ramirez-Jimenez, C. R. et al. Identification of failure modes in glass/polypropylene composites by means of the primary frequency content of the acoustic emission event. Compos. Sci. Technol. 64, 1819–1827 (2004).

Li, L., Lomov, S. V., Yan, X. & Carvelli, V. Cluster analysis of acoustic emission signals for 2D and 3D woven glass/epoxy composites. Composite Struct. 116, 286–299 (2014).

Xu, D., Liu, P. F., Li, J. G. & Chen, Z. P. Damage mode identification of adhesive composite joints under hygrothermal environment using acoustic emission and machine learning. Composite Struct. 211, 351–363 (2019).

Jain, A. K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 31, 651–666 (2010).

Jain, A. K., Murty, M. P. & Flynn, P. J. Data clustering: a review. ACM Comput. Surv. 31, 264–323 (1999).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Graff, K. F. Wave Motion in Elastic Solids (Ohio State University Press, 1975).

Halbig, M. C., Jaskowiak, M. H., Kiser, J. D. & Zhu, D. Evaluation of ceramic matrix composite technology for aircraft turbine engine applications. 51st AIAA Aerospace Sciences Meeting including the New Horizons Forum and Aerospace Exposition 2013 (2013).

Huang, W., Rokhlin, S. I. & Wang, Y. J. Effect of fibre-matrix interphase on wave propagation along, and scattering from, multilayered fibres in composites. Transfer matrix approach. Ultrasonics 33, 365–375 (1995).

Morscher, G. N. & Gyekenyesi, A. L. The velocity and attenuation of acoustic emission waves in SiC/SiC composites loaded in tension. Compos. Sci. Technol. 62, 1171–1180 (2002).

Biwa, S., Watanabe, Y. & Ohno, N. Analysis of wave attenuation in unidirectional viscoelastic composites by a differential scheme. Compos. Sci. Technol. 63, 237–247 (2003).

Scruby, C. B., Wadley, H. N. & Hill, J. J. Dynamic elastic displacements at the surface of an elastic half-space due to defect sources. J. Phys. D: Appl. Phys. 16, 1069–1083 (1983).

Hamstad, M. A. & Gary, J. A wavelet transform applied to acoustic emission signals: Part 1: source identification. J. Acoust. Emiss. 20, 39–61 (2002).

Hamstad, M. A. Acoustic emission signals generated by monopole (pencil lead break) versus dipole sources: finite element modeling and experiments. J. Acoust. Emiss. 25, 92–106 (2007).

Wilcox, P. D. et al. Progress towards a forward model of the complete acoustic emission process. Adv. Mater. Res. 13-14, 69–76 (2006).

Sause, M. G. & Horn, S. Simulation of acoustic emission in planar carbon fiber reinforced plastic specimens. J. Nondestructive Evaluation 29, 123–142 (2010).

Sause, M. G. R. & Horn, S. R. Influence of specimen geometry on acoustic emission signals in fiber. 29th European Conference on Acoustic Emission Testing 1–8 (2010).

Bhuiyan, M. Y., Bao, J., Poddar, B. & Giurgiutiu, V. Toward identifying crack-length-related resonances in acoustic emission waveforms for structural health monitoring applications. Struct. Health Monit. 17, 577–585 (2018).

Prosser, W. Advanced AE techniques in composite materials research. J. Acoust. Emiss. 14 (1996).

Zelenyak, A. M., Hamstad, M. A. & Sause, M. G. Modeling of acoustic emission signal propagation in waveguides. Sensors 15, 11805–11822 (2015).

Gall, T. L., Monnier, T., Fusco, C., Godin, N. & Hebaz, S. E. Towards quantitative acoustic emission by finite element modelling: Contribution of modal analysis and identification of pertinent descriptors. Appl. Sci. 8, 2557 (2018).

Aggelis, D. G., Shiotani, T., Papacharalampopoulos, A. & Polyzos, D. The influence of propagation path on elastic waves as measured by acoustic emission parameters. Struct. Health Monit. 11, 359–366 (2012).

Tabrizi, I. E., Kefal, A., Zanjani, J. S. M., Akalin, C. & Yildiz, M. Experimental and numerical investigation on fracture behavior of glass/carbon fiber hybrid composites using acoustic emission method and refined zigzag theory. Compos. Struct. 223, 110971 (2019).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016). http://www.deeplearningbook.org

Ng, A., Jordan, M. & Weiss, Y. On spectral clustering: analysis and an algorithm. Adv. Neural Inf. Process. Syst. 14 (2001).

Reynolds, D. Gaussian mixture models. Encycl. Biometrics 741, 1–5 (2009).

Fränti, P. & Sieranoja, S. How much can k-means be improved by using better initialization and repeats? Pattern Recognit. 93, 95–112 (2019).

Jain, A. K., Duin, R. & Mao, J. Statistical pattern recognition: a review. IEEE Trans. Pattern Anal. Mach. Intell. 22, 4–37 (2000).

Sause, M. G. & Horn, S. Quantification of the uncertainty of pattern recognition approaches applied to acoustic emission signals. J. Nondestructive Evaluation 32, 242–255 (2013).

Watanabe, S. Pattern Recognition: Human and Mechanical (John Wiley and Sons, 1985).

Elsley, R. K. & Graham, L. J. Pattern recognition in acoustic emission experiments. In Pattern Recognition and Acoustical Imaging, Vol. 0768, 285 (1987).

Johnson, M. Waveform based clustering and classification of AE transients in composite laminates using principal component analysis. NDT E Int. 35, 367–376 (2002).

Kostopoulos, V., Loutas, T. H., Kontsos, A., Sotiriadis, G. & Pappas, Y. Z. On the identification of the failure mechanisms in oxide/oxide composites using acoustic emission. NDT E Int. 36, 571–580 (2003).

de Oliveira, R. & Marques, A. T. Health monitoring of FRP using acoustic emission and artificial neural networks. Computers Struct. 86, 367–373 (2008).

Moevus, M. et al. Analysis of damage mechanisms and associated acoustic emission in two SiCf/[Si-B-C] composites exhibiting different tensile behaviours. Part II: unsupervised acoustic emission data clustering. Compos. Sci. Technol. 68, 1258–1265 (2008).

Gutkin, R. et al. On acoustic emission for failure investigation in CFRP: Pattern recognition and peak frequency analyses. Mech. Syst. Signal Process. 25, 1393–1407 (2011).

Momon, S., Godin, N., Reynaud, P., R’Mili, M. & Fantozzi, G. Unsupervised and supervised classification of AE data collected during fatigue test on CMC at high temperature. Compos. Part A: Appl. Sci. Manuf. 43, 254–260 (2012).

Sause, M. G., Gribov, A., Unwin, A. R. & Horn, S. Pattern recognition approach to identify natural clusters of acoustic emission signals. Pattern Recognit. Lett. 33, 17–23 (2012).

Kempf, M., Skrabala, O. & Altstädt, V. Reprint of: acoustic emission analysis for characterisation of damage mechanisms in fibre reinforced thermosetting polyurethane and epoxy. Compos. Part B: Eng. 65, 117–123 (2014).

Maillet, E. & Morscher, G. N. Waveform-based selection of acoustic emission events generated by damage in composite materials. Mech. Syst. Signal Process. 52, 217–227 (2015).

Li, L., Swolfs, Y., Straumit, I., Yan, X. & Lomov, S. V. Cluster analysis of acoustic emission signals for 2D and 3D woven carbon fiber/epoxy composites. J. Composite Mater. 50, 1921–1935 (2016).

Das, A. K., Suthar, D. & Leung, C. K. Machine learning based crack mode classification from unlabeled acoustic emission waveform features. Cem. Concr. Res. 121, 42–57 (2019).

Huang, N. E. & Wu, Z. A review on Hilbert-Huang transform: method and its applications to geophysical studies. Rev. Geophysics 46, 1–23 (2008).

Suzuki, H. et al. Wavelet transform of acoustic emission signals. J. Acoust. Emiss. 14, 69–84 (1996).

Huang, N. E., Shen, Z. & Long, S. R. A new view of nonlinear water waves: the Hilbert spectrum. Annu. Rev. Fluid Mech. 31, 417–457 (1999).

Qi, G. Wavelet-based AE characterization of composite materials. NDT E Int. 33, 133–144 (2000).

Baccar, D. & Söffker, D. Identification and classification of failure modes in laminated composites by using a multivariate statistical analysis of wavelet coefficients. Mech. Syst. Signal Process. 96, 77–87 (2017).

Wirtz, S. F., Beganovic, N. & Söffker, D. Investigation of damage detectability in composites using frequency-based classification of acoustic emission measurements. Struct. Health Monit. 18, 1207–1218 (2019).

Bak, K. M., Kalaichelvan, K., Vijayaraghavan, G. K. & Sridhar, B. Acoustic emission wavelet transform on adhesively bonded single-lap joints of composite laminate during tensile test. J. Reinforced Plast. Compos. 32, 87–95 (2013).

Arakawa, K. & Matsuo, T. Acoustic emission pattern recognition method utilizing elastic wave simulation. Mater. Trans. 58, 1411–1417 (2017).

Maillet, E. et al. Damage monitoring and identification in SiC/SiC minicomposites using combined acousto-ultrasonics and acoustic emission. Compos. Part A: Appl. Sci. Manuf. 57, 8–15 (2014).

Satour, A., Montrésor, S., Bentahar, M. & Boubenider, F. Wavelet based clustering of acoustic emission hits to characterize damage mechanisms in composites. J. Nondestructive Evaluation 39, 1–11 (2020).

Daubechies, I. The wavelet transform, time-frequency localization and signal analysis. IEEE Trans. Inf. Theory 36, 961–1005 (1990).

Fotouhi, M., Saeedifar, M., Sadeghi, S., Ahmadi Najafabadi, M. & Minak, G. Investigation of the damage mechanisms for mode I delamination growth in foam core sandwich composites using acoustic emission. Struct. Health Monit. 14, 265–280 (2015).

Morizet, N. et al. Classification of acoustic emission signals using wavelets and random forests: application to localized corrosion. Mech. Syst. Signal Process. 70-71, 1026–1037 (2016).

The Mathworks, Inc., Natick, Massachusetts. MATLAB version 9.9.0.1462360 (R2020b) (2020).

Hamdi, S. E. et al. Acoustic emission pattern recognition approach based on Hilbert-Huang transform for structural health monitoring in polymer-composite materials. Appl. Acoust. 74, 746–757 (2013).

Laszuk, D. Python implementation of empirical mode decomposition algorithm. https://github.com/laszukdawid/PyEMD (2017).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Yang, Z., Yu, Z., Xie, C. & Huang, Y. Application of Hilbert-Huang transform to acoustic emission signal for burn feature extraction in surface grinding process. Meas.: J. Int. Meas. Confederation 47, 14–21 (2014).

Han, W. Q., Luo, Y., Gu, A. J. & Yuan, F. G. Damage modes recognition and Hilbert-Huang transform analyses of CFRP laminates utilizing acoustic emission technique. Appl. Composite Mater. 23, 155–178 (2016).

Xu, J., Wang, W., Han, Q. & Liu, X. Damage pattern recognition and damage evolution analysis of unidirectional CFRP tendons under tensile loading using acoustic emission technology. Compos. Struct. 238, 111948 (2020).

Godin, N., Huguet, S. & Gaertner, R. Integration of the Kohonen’s self-organising map and k-means algorithm for the segmentation of the AE data collected during tensile tests on cross-ply composites. NDT E Int. 38, 299–309 (2005).

Marec, A., Thomas, J. H. & El Guerjouma, R. Damage characterization of polymer-based composite materials: multivariable analysis and wavelet transform for clustering acoustic emission data. Mech. Syst. Signal Process. 22, 1441–1464 (2008).

Chidananda Gowda, K. & Krishna, G. Agglomerative clustering using the concept of mutual nearest neighbourhood. Pattern Recognit. 10, 105–112 (1978).

Rajendra, D., Knighton, T., Esterline, A. & Sundaresan, M. J. Physics-based classification of acoustic emission waveforms. In Nondestructive Characterization for Composite Materials, Aerospace Engineering, Civil Infrastructure, and Homeland Security 2011, Vol. 7983 (2011).

Moevus, M. et al. Analysis of damage mechanisms and associated acoustic emission in two SiC/[Si-B-C] composites exhibiting different tensile behaviours. Part I: damage patterns and acoustic emission activity. Compos. Sci. Technol. 68, 1250–1257 (2008).

R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria (2017).

MacGregor, J. F. & Kourti, T. Statistical process control of multivariate processes. Control Eng. Pract. 3, 403–414 (1995).

Celebi, M. E., Kingravi, H. A. & Vela, P. A. A comparative study of efficient initialization methods for the k-means clustering algorithm. Expert Syst. Appl. 40, 200–210 (2013).

Sibil, A., Godin, N., R’Mili, M., Maillet, E. & Fantozzi, G. Optimization of acoustic emission data clustering by a genetic algorithm method. J. Nondestructive Evaluation 31, 169–180 (2012).

Fotouhi, M., Heidary, H., Ahmadi, M. & Pashmforoush, F. Characterization of composite materials damage under quasi-static three-point bending test using wavelet and fuzzy C-means clustering. J. Composite Mater. 46, 1795–1808 (2012).

Mi, Y., Zhu, C., Li, X. & Wu, D. Acoustic emission study of effect of fiber weaving on properties of fiber-resin composite materials. Compos. Struct. 237, 111906 (2020).

Mohammadi, R., Najafabadi, M. A., Saeedifar, M., Yousefi, J. & Minak, G. Correlation of acoustic emission with finite element predicted damages in open-hole tensile laminated composites. Compos. Part B: Eng. 108, 427–435 (2017).

Shateri, M., Ghaib, M., Svecova, D. & Thomson, D. On acoustic emission for damage detection and failure prediction in fiber reinforced polymer rods using pattern recognition analysis. Smart Mater. Struct. 26, 065023 (2017).

Refahi Oskouei, A., Heidary, H., Ahmadi, M. & Farajpur, M. Unsupervised acoustic emission data clustering for the analysis of damage mechanisms in glass/polyester composites. Mater. Des. 37, 416–422 (2012).

Dempster, A. P., Laird, N. M. & Rubin, D. B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc.: Ser. B (Methodol.) 39, 1–22 (1977).

Do, C. B. & Batzoglou, S. What is the expectation maximization algorithm? Nat. Biotechnol. 26, 897–899 (2008).

Kohonen, T. The self-organizing map. Neurocomputing 21, 1–6 (1998).

van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008).

Tou, J. T. Dynoc-A dynamic optimal cluster-seeking technique. Int. J. Computer Inf. Sci. 8, 541–547 (1979).

Davies, D. L. & Bouldin, D. W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1, 224–227 (1979).

Rousseeuw, P. J. Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J. Computational Appl. Math. 20, 53–65 (1987).

Hubert, L. & Arabie, P. Comparing partitions. J. Classification 2, 193–218 (1985).

Rand, W. M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66, 846–850 (1971).

Santos, J. M. & Embrechts, M. On the use of the adjusted rand index as a metric for evaluating supervised classification. In Artificial Neural Networks – ICANN 2009 (eds Alippi, C., Polycarpou, M., Panayiotou, C. & Ellinas, G.) 175–184 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2009).

Gates, A. J. & Ahn, Y. Y. The impact of random models on clustering similarity. J. Mach. Learn. Res. 18, 1–28 (2017).

McLachlan, G. J. & Rathnayake, S. On the number of components in a Gaussian mixture model. Wiley Interdiscip. Rev.: Data Min. Knowl. Discov. 4, 341–355 (2014).

Jain, A. K. Artificial neural networks for feature extraction and multivariate data projection. IEEE Trans. Neural Netw. 6, 296–317 (1995).

McCrory, J. P. et al. Damage classification in carbon fibre composites using acoustic emission: a comparison of three techniques. Compos. Part B: Eng. 68, 424–430 (2015).

Kim, J. T., Sakong, J., Woo, S. C., Kim, J. Y. & Kim, T. W. Determination of the damage mechanisms in armor structural materials via self-organizing map analysis. J. Mech. Sci. Technol. 32, 129–138 (2018).

Roweis, S. T. & Saul, L. K. Nonlinear dimensionality reduction by locally linear embedding. Science 290, 2323–2326 (2000).

Tenenbaum, J. B., De Silva, V. & Langford, J. C. A global geometric framework for nonlinear dimensionality reduction. Science 290, 2319–2323 (2000).

Sammon, J. W. A nonlinear mapping for data structure analysis. IEEE Trans. Computers C-18, 401–409 (1969).

Wattenberg, M., Viégas, F. & Johnson, I. How to use t-SNE effectively. Distill 1 (2016).

Scholey, J. J., Wilcox, P. D., Wisnom, M. R. & Friswell, M. I. Quantitative experimental measurements of matrix cracking and delamination using acoustic emission. Compos. Part A: Appl. Sci. Manuf. 41, 612–623 (2010).

Wang, Z., Ning, J. & Ren, H. Frequency characteristics of the released stress wave by propagating cracks in brittle materials. Theor. Appl. Fract. Mech. 96, 72–82 (2018).

Kostopoulos, V., Loutas, T. & Dassios, K. Fracture behavior and damage mechanisms identification of SiC/glass ceramic composites using AE monitoring. Compos. Sci. Technol. 67, 1740–1746 (2007).

Sause, M. G., Müller, T., Horoschenkoff, A. & Horn, S. Quantification of failure mechanisms in mode-I loading of fiber reinforced plastics utilizing acoustic emission analysis. Compos. Sci. Technol. 72, 167–174 (2012).

Anastassopoulos, A. & Philippidis, T. Clustering methodology for the evaluation of acoustic emission from composites. J. Acoust. Emiss. 13, 11–22 (1995).

Bussiba, A., Kupiec, M., Ifergane, S., Piat, R. & Böhlke, T. Damage evolution and fracture events sequence in various composites by acoustic emission technique. Compos. Sci. Technol. 68, 1144–1155 (2008).

Zhang, P. F., Zhou, W., Yin, H. F. & Shang, Y. J. Progressive damage analysis of three-dimensional braided composites under flexural load by micro-CT and acoustic emission. Compos. Struct. 226, 111196 (2019).

Zhou, W., Qin, R., Han, K. N., Wei, Z. Y. & Ma, L. H. Progressive damage visualization and tensile failure analysis of three-dimensional braided composites by acoustic emission and micro-CT. Polym. Test. 93, 106881 (2021).

Farhidzadeh, A., Mpalaskas, A. C., Matikas, T. E., Farhidzadeh, H. & Aggelis, D. G. Fracture mode identification in cementitious materials using supervised pattern recognition of acoustic emission features. Constr. Build. Mater. 67, 129–138 (2014).