Abstract

We demonstrate a low-profile holographic imaging system at millimeter wavelengths based on an aperture composed of frequency-diverse metasurfaces. Using measurements of spatially diverse field patterns, we reconstruct diffraction-limited images of human-sized subjects. The system is driven by a single microwave source swept over a band of frequencies (17.5–26.5 GHz) and switched between a collection of transmit and receive metasurface panels. High-fidelity image reconstruction requires a precise model for each field pattern generated by the aperture, as well as for the manner in which the field scatters from objects in the scene. This constraint makes scaling computational imaging systems inherently challenging for electrically large, coherent apertures. To meet these demanding requirements, we introduce computational methods and calibration approaches that enable rapid and accurate imaging performance.


Introduction

Microwave and millimeter wave (mmW) radio frequency signals (1–300 GHz) can penetrate many optically opaque materials, allowing visualization of hidden objects. Economical radio hardware in this regime supports coherent measurements from a large number of sub-apertures to realize three-dimensional (3D) image reconstruction. However, it is non-trivial to realize spatially coherent measurements across the large aperture needed to achieve the resolution required for applications such as security screening, through-wall imaging, automotive radar, machine vision, and medical diagnosis1,2,3,4,5,6,7,8. As demand for mmW imaging increases, low-cost and robust approaches that can be deployed in large volume are being sought.

Archetypical approaches to mmW imaging have relied on either (a) sampling an aperture with a dense array of sources for beam forming, as in active electronically scanned arrays (AESAs)9, or (b) mechanically scanning a transceiver over the aperture, as in synthetic aperture radar (SAR) systems10,11. The former approach yields fast acquisition but requires expensive hardware, while the latter is inherently slow. Both approaches sample the aperture at roughly the Nyquist limit (half the free-space wavelength), which either makes beam forming possible or allows fast Fourier transform (FFT) techniques to be applied for image reconstruction.

The limitations associated with conventional approaches can be circumvented by adopting a more abstract perspective of the measurement process. As we abandon conventional antenna elements and Nyquist sampling of the full aperture, the computationally simple relationships between measured data and image reconstruction no longer exist. Fortunately, modern computational imaging (CI) schemes12 provide the mathematical foundation for advanced imaging systems that can take advantage of arbitrary measurement modalities. Leveraging continual advances in computing power, CI approaches have become increasingly viable, relaxing hardware constraints and enabling alternative aperture architectures to be explored.

Across the electromagnetic spectrum, from microwave to x-ray, and even in the acoustic regime, demonstrations of CI approaches are numerous in the literature13,14,15,16,17,18,19. A particularly relevant example, the coded aperture, has enabled single-pixel imaging systems at infrared, terahertz (THz) and x-ray wavelengths20,21,22, where detector arrays may be prohibitively expensive. In these systems, light reflected from a scene is passed through a set of masks, each with a spatially varying transparency, and is then focused onto a detector. Scene information is thus multiplexed across many non-orthogonal measurements, and the image is reconstructed using more general CI algorithms. The variable mask plus detector can be thought of as a large aperture antenna that either measures or produces complex radiation patterns, or measurement modes. Coded aperture systems thus trade complex modes and additional processing for a reduced need for expensive detectors.

The CI concept has also been used to take advantage of frequency-diversity, with several demonstrations of metasurface apertures that produce distinct mode patterns as a function of frequency. Such apertures can acquire scene information using only a frequency sweep from a single source, without moving parts23.

Metasurfaces, a more practical outgrowth of metamaterials research24,25,26, are appealing for imaging applications for several reasons. Propagating waves through a single metamaterial layer circumvents many of the problematic aspects associated with volumetric metamaterials. Moreover, the enormous flexibility associated with metasurface designs provides tremendous opportunities for the development of apertures that optimally leverage CI algorithms. The frequency-diverse metasurface aperture is attractive because it can be fabricated at mmW wavelengths using standard printed circuit manufacturing, leading to an extremely low-cost and low-profile form factor.

Results

Millimeter wave imaging system

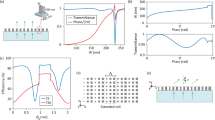

The imaging system demonstrated here is based on an array of metasurface panels, as indicated in Fig. 1. Each metasurface panel is constructed of low-loss, copper-clad printed circuit substrate (Rogers 3003, Rogers Corporation). The top and bottom bounding copper layers, plus a via fence at the periphery of the board, define an irregularly shaped 2D dielectric cavity, as illustrated in Fig. 1(B). A back-mounted coaxial connector feeds a cylindrical coplanar waveguide mode into the cavity at an off-center point. Subwavelength irises, etched into the top copper surface, sample the waveguide mode and transmit or receive radiation. The spatially varying waveguide modes within the irregular circuit board cavity feed the irises, which in turn produce distinct radiation patterns that vary as a function of the driving frequency27,28,29,30.

Figure 1. (A) Frequency-swept measurements are acquired from each combination of the 24 transmit (blue) and 72 receive (green) metasurface panels in the imaging system. The measurements probe the scene with complex radiation patterns, shown here projected on a mannequin target at 1 m. RF radiation passes through low-permittivity dielectric materials, such as clothing, but reflects off the body, metallic objects, and high-permittivity dielectrics. The frequency-diverse response of the PCB-based metasurfaces, shown in (B), provides a large set of distinct radiation patterns for image reconstruction.

Because each panel can support only a limited number of frequency-diverse measurements, the composite imaging system consists of 24 transmit (Tx) panels and 72 receive (Rx) panels, each measuring 12 cm × 12 cm. The metasurface panels are distributed over a 2.1 m × 2.1 m aperture, as shown in Fig. 1. For mechanical convenience, the sub-aperture panels are grouped into modules, which in turn are distributed on an irregular grid; removing periodicity avoids aliasing in the reconstructed images. In this configuration, a given Tx panel is repeatedly excited by a frequency sweep, with measurements taken sequentially on all of the Rx panels. The total number of measurements available from the system is 24 × 72 × Nf, where Nf is the number of frequencies measured. Measurements are taken from each Tx/Rx panel pair using a switch-based signal distribution network. The system is driven by a single custom homodyne RF transceiver (radio) that sweeps across the K-band (17.5–26.5 GHz) in discrete frequency steps. The received in-phase and quadrature-phase data are sampled by the radio hardware and sent to a host PC for image reconstruction. The average noise floor of the radio is −100 dB, allowing the measurement of weak return signals from small objects or from dielectrics with permittivity close to that of air.
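The bookkeeping behind the 24 × 72 × Nf measurement count can be sketched as a flat index into the measurement vector g (and the rows of H). This is a minimal illustration, not the system's software: the ordering (Tx outermost, then Rx, then frequency) is our assumption based on the acquisition sequence described above, and the helper names `measurement_row` and `measurement_triple` are hypothetical.

```python
import numpy as np

N_TX, N_RX, N_F = 24, 72, 100        # Tx panels, Rx panels, frequency points (Nf = 100 in the experiments)
SHAPE = (N_TX, N_RX, N_F)
M = N_TX * N_RX * N_F                 # total number of measurements = rows of H

def measurement_row(tx: int, rx: int, fi: int) -> int:
    """Row of g (and of H) holding the sample for Tx panel tx, Rx panel rx, frequency index fi.
    Assumed ordering: Tx outermost, frequency innermost."""
    return int(np.ravel_multi_index((tx, rx, fi), SHAPE))

def measurement_triple(row: int) -> tuple:
    """Inverse mapping from a row of g back to (tx, rx, fi)."""
    return tuple(int(i) for i in np.unravel_index(row, SHAPE))

# Acquisition order for the first two Tx and Rx panels and three frequencies:
# each Rx panel is read across the sweep before switching to the next one.
order = [measurement_row(t, r, k) for t in range(2) for r in range(2) for k in range(3)]
print(M, order)
```

With Nf = 100 this gives 172,800 measurements; any other consistent ordering works as long as H is assembled with the same convention.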

Maximizing the number of useful frequency measurements, Nf, implies maximizing the diversity of the radiated fields, which in turn requires optimizing the geometry of the metasurface. To assess the imaging capacity of the panels, we perform a plane-wave decomposition of the radiative modes and map their spatial frequencies (k-space), as described in the Supporting Online Material (SOM). For the frequency-diverse panels, the distribution of the irises determines the accessible Fourier space, while the variability of the cavity modes across the frequency band determines the specific Fourier components that are sampled at each measurement mode31.
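One way to picture how the iris distribution sets the accessible Fourier space is the sum co-array: in a simplified far-field picture, each Tx/Rx iris pair contributes a virtual sample at the sum of the two positions. The sketch below (a simplification we introduce, with hypothetical iris positions on an integer grid) shows that a single row of Tx irises combined with a single column of Rx irises already fills a complete square grid of virtual positions, which is the principle behind the cross-shaped layout described next.

```python
from itertools import product

def sum_coarray(tx_positions, rx_positions):
    """Virtual (effective) aperture positions t + r for every Tx/Rx element pair.

    Positions are integer grid coordinates (x, y) in units of the iris pitch.
    """
    return {(tx[0] + rx[0], tx[1] + rx[1]) for tx, rx in product(tx_positions, rx_positions)}

n = 8
tx_row = [(x, 0) for x in range(n)]   # hypothetical horizontal row of Tx irises
rx_col = [(0, y) for y in range(n)]   # hypothetical vertical column of Rx irises

virtual = sum_coarray(tx_row, rx_col)
filled = {(x, y) for x in range(n) for y in range(n)}
print(f"{len(tx_row) + len(rx_col)} physical irises -> {len(virtual)} virtual positions")
```

Here 2n physical elements yield n² distinct virtual positions, whereas a dense layout would need on the order of n² physical elements for comparable coverage.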

Investigation of the k-space support led to the Mills Cross design32. The irises are slot-shaped and are oriented horizontally at the top and bottom of the transmitter metasurface panels, and vertically at the left and right of the receiver metasurface panels, as shown in Fig. 1(B). This sparse distribution provides the same k-space support as a densely spaced iris layout. Minimally redundant antenna distributions, such as Golay patterns, are well established in radio astronomy, where they reduce hardware requirements33. Here, by minimizing the number of radiation channels, we statistically increase the phase accumulation along the different scattering paths inside the cavity, which in turn manifests as increased mode diversity between frequency steps (widening the impulse response). The loaded quality factor, Q, of a panel encapsulates this mode diversity and is straightforwardly measured34. A higher Q statistically ensures mode diversity and maximizes the number of distinct measurement modes available for imaging. The number of useful frequency measurements for a set of frequency-diverse panels can be estimated as Nf = QB/f0, where B is the bandwidth and f0 is the center frequency of the band31. The number of distinct frequency measurements, Nf, sets the maximum dimension of the measurement space, and hence the number of spatial components that can be observed. For a Tx/Rx metasurface panel pair, ideally Nf ≈ Niris², where Niris is the number of radiating irises on each Tx and Rx metasurface panel. Designing the metasurface panels requires balancing material loss, size, the number of radiating irises, and backend hardware requirements to approach this equality. The panels were designed for an optimal Q ≈ 330, giving approximately Nf = 135 useful frequency measurements for the 10 cm × 10 cm cavity embedded in each panel (Nf = 100 was used in experiments). 
A distribution of Niris = 16 radiating irises per panel (slightly above the predicted value) was found to be a compromise between maximizing spatial frequency sampling and maintaining the necessary signal-to-noise ratio in the system32. The metasurface panels have an average radiation efficiency of η ≈ 30%.
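The design arithmetic above can be checked in a few lines. This sketch only evaluates the two estimates quoted in the text, Nf ≈ QB/f0 and Nf ≈ Niris², for the K-band sweep and Q ≈ 330; the function name `useful_frequencies` is ours.

```python
import math

def useful_frequencies(q: float, bandwidth_hz: float, f_center_hz: float) -> float:
    """Estimated number of distinct frequency measurements, Nf ≈ Q·B/f0."""
    return q * bandwidth_hz / f_center_hz

f_lo, f_hi = 17.5e9, 26.5e9          # K-band sweep limits from the text
B = f_hi - f_lo                      # 9 GHz bandwidth
f0 = 0.5 * (f_lo + f_hi)             # 22 GHz center frequency

nf = useful_frequencies(330, B, f0)  # design value Q ≈ 330
n_iris = math.sqrt(nf)               # from the ideal relation Nf ≈ Niris²
print(f"Nf ≈ {nf:.0f}, ideal Niris ≈ {n_iris:.1f}")
```

This reproduces Nf ≈ 135 and an ideal iris count of roughly 12 per panel, the value that the chosen Niris = 16 is compared against.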

Experimental imaging

In Fig. 2, we present experimental images of a full human-sized mannequin at three different locations in the scene (with varying perspectives to demonstrate the 3D nature of the images). The mannequin is painted with conductive nickel paint to approximate the high reflectivity of human skin at mmW. Fine features of the mannequin (at the diffraction limit) are clearly observable in these fully 3D images. We note that conductive targets at mmW often exhibit strong specularity because of their high conductivity and low surface roughness relative to the illumination wavelength, regardless of the imaging approach used. Specularity limits the range of viewing angles over which a given surface patch returns energy to the aperture, in contrast to the diffuse scattering more commonly observed at optical wavelengths.
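A rough feel for how specularity limits the viewing angle comes from mirror geometry. The sketch below is a simplified 2D estimate under assumptions we introduce (a flat facet on the aperture axis, illuminated from the aperture center, with the 2.1 m aperture giving a 1.05 m half-width): a facet tilted by θ reflects the ray at 2θ, so its return misses the aperture once D·tan(2θ) exceeds the half-width.

```python
import math

def max_specular_tilt_deg(aperture_half_width_m: float, distance_m: float) -> float:
    """Largest facet tilt (from the aperture normal) whose mirror reflection still hits the aperture.

    Simplified 2D geometry (an assumption): the facet sits on the aperture axis and is
    illuminated from the aperture centre. A facet tilted by theta reflects the ray at 2*theta,
    so the return lands distance*tan(2*theta) off axis; it is seen only if that offset
    does not exceed the aperture half-width.
    """
    return 0.5 * math.degrees(math.atan(aperture_half_width_m / distance_m))

for d in (1.0, 2.0):
    tilt = max_specular_tilt_deg(1.05, d)
    print(f"D = {d} m: facets tilted beyond ~{tilt:.0f} deg are not seen")
```

Real bistatic geometry with many Tx/Rx pairs is more forgiving than this single-ray picture, but the trend (a narrower visible range of surface orientations at larger distances) is the same.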

To reconstruct the images shown in Fig. 2, a complete characterization of the spatial field distribution of each Tx and Rx panel is necessary, as is an accurate model for object scattering. The importance of this model cannot be overstated; model accuracy dictates image fidelity in a CI system. For the scattering model, as is typical in mmW imaging, we apply the first Born approximation, which assumes that the electric field reflected by a volume element is directly proportional to the incident field, $E_{ref}(\mathbf{r}) = f(\mathbf{r})E_{inc}(\mathbf{r})$. This approximation neglects material dispersion and assumes isotropy, both good approximations at mmW bands. With the scene divided into discrete volume elements, or voxels, the relationship between the field measurements $g_i$ and the reflectivity values $f_j$ takes the form of a matrix equation $\mathbf{g} = \mathbf{H}\mathbf{f} + \mathbf{n}$, where $H_{ij} \propto E^{Tx}_i(\mathbf{r}_j)\,E^{Rx}_i(\mathbf{r}_j)$ are the elements of an $M \times N$ measurement matrix, $i$ indexes the measurement (Tx panel, Rx panel, and frequency), $j$ indexes the voxel, and $\mathbf{n}$ is the noise in the system. That is, each element of the measurement matrix $\mathbf{H}$ is proportional to the field from the Tx panel at a given point in space, $E^{Tx}$, multiplied by the field from the Rx panel at the same point, $E^{Rx}$. In the absence of noise and with a complete set of orthogonal measurement modes, the scene reflectivity could be found by simple matrix inversion. However, for modes that exhibit correlation, as in our system, and with the number of measurements either under- or over-sampling the scene, $\mathbf{H}$ cannot be inverted directly. The strategy for image reconstruction, then, is to estimate the image by solving

$$\hat{\mathbf{f}} = \arg\min_{\mathbf{f}} \|\mathbf{g} - \mathbf{H}\mathbf{f}\|_2^2 + \alpha R(\mathbf{f})$$

for the reflectivity vector $\mathbf{f}$ that minimizes the expression, where $R(\mathbf{f})$ is a regularizer and $\alpha > 0$ its weight. We use a least squares (LS) algorithm here with an appropriate regularizer35.
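A minimal sketch of the forward model and a regularized least-squares solve follows. Several choices are assumptions, not the authors' implementation: the fields are random complex placeholders for sampled panel fields, the sizes are toy values, and the regularizer is the Tikhonov (ℓ2) penalty R(f) = ‖f‖², one of the options discussed in ref. 35.

```python
import numpy as np

def measurement_matrix(e_tx: np.ndarray, e_rx: np.ndarray) -> np.ndarray:
    """H[i, j] ∝ E_tx[i, j] * E_rx[i, j]: Tx field times Rx field at voxel j, for measurement i."""
    return e_tx * e_rx

def tikhonov_ls(H: np.ndarray, g: np.ndarray, alpha: float) -> np.ndarray:
    """Minimise ||g - H f||_2^2 + alpha * ||f||_2^2 via the regularised normal equations."""
    HhH = H.conj().T @ H
    return np.linalg.solve(HhH + alpha * np.eye(H.shape[1]), H.conj().T @ g)

rng = np.random.default_rng(0)
M, N = 400, 100                      # toy sizes: measurements x voxels

def random_field(shape):
    """Hypothetical stand-in for sampled panel fields (complex Gaussian)."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

H = measurement_matrix(random_field((M, N)), random_field((M, N)))
f_true = np.zeros(N)
f_true[[10, 45, 80]] = 0.8           # three point-like reflectors
noise = 0.01 * (rng.standard_normal(M) + 1j * rng.standard_normal(M))
g = H @ f_true + noise               # g = H f + n

f_hat = tikhonov_ls(H, g, alpha=1e-2)
rel_err = np.linalg.norm(f_hat - f_true) / np.linalg.norm(f_true)
print(f"relative reconstruction error: {rel_err:.2e}")
```

Forming HᴴH is only practical at toy sizes; at the scale of 10⁵ voxels an iterative solver that needs only products with H and Hᴴ would be the natural choice.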

Image resolution and field of view

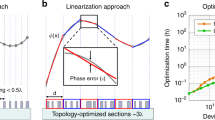

The resolution of the metasurface imager can be understood in the general context of SAR, for which the range resolution is set by the operational bandwidth, δr = c/(2B), while the cross-range resolution is set by the aperture size, δc = λminD/L, where λmin is the shortest wavelength in the band, D is the distance to the target, and L is the aperture width10. For D = 1 m and L = 2.1 m, these estimates give resolution limits of δc ≈ 5.4 mm and δr ≈ 16.7 mm. The achieved resolution of the metasurface imager, however, depends on the actual sampling of k-space, as discussed above. Critically, even if the aperture is sampled sparsely, reconstructions over a subset of the volume can still achieve diffraction-limited resolution. For a specific imaging configuration, the singular value decomposition (SVD) of H provides a useful means of assessing the system's imaging capacity. A nearly flat singular value spectrum signifies greater orthogonality of the measurement modes, while a decaying spectrum signifies redundancy among them.
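The two SAR resolution estimates can be evaluated directly. This short sketch plugs in the K-band sweep, a target distance of D = 1 m and an aperture width of L = 2.1 m; the function names are ours.

```python
C0 = 299_792_458.0  # speed of light in vacuum (m/s)

def range_resolution(bandwidth_hz: float) -> float:
    """SAR range resolution, delta_r = c / (2 B)."""
    return C0 / (2.0 * bandwidth_hz)

def cross_range_resolution(f_max_hz: float, distance_m: float, aperture_m: float) -> float:
    """SAR cross-range resolution, delta_c = lambda_min * D / L."""
    lambda_min = C0 / f_max_hz
    return lambda_min * distance_m / aperture_m

dr = range_resolution(26.5e9 - 17.5e9)          # K-band sweep, B = 9 GHz
dc = cross_range_resolution(26.5e9, 1.0, 2.1)   # D = 1 m, L = 2.1 m
print(f"range {dr * 1e3:.1f} mm, cross-range {dc * 1e3:.1f} mm")
```

The printed values, 16.7 mm and 5.4 mm, match the limits quoted above.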

We use the SVD of the measurement matrix to quantitatively assess the cross-range measurement capacity of the system, considering a 2 m² cross-range slice of the scene at a distance of 1 m. This area exceeds the estimated average cross-section of a person36, roughly 0.75 m². Figure 3(A) shows the singular value spectrum of the frequency-diverse metasurface system prototyped here and, for comparison, that of a similarly sized monostatic SAR system. The SAR system uses two frequencies (17.5 GHz and 26.5 GHz) with aperture sampling at the midband Nyquist limit (7 mm), so that it has nearly the same number of measurement modes as the metasurface system. The measurement matrix for the SAR system has a nearly flat singular value spectrum, as shown in Fig. 3(A). For the metasurface system, the non-orthogonality of the measurement modes results in a decaying spectrum; however, with sufficient signal-to-noise ratio and appropriate algorithms, it is possible to acquire most of the information available in the scene. This is confirmed by an analysis of the system point spread function (PSF)37, shown inset in Fig. 3(A). For this analysis the simple adjoint operator is used, i.e. f = H†g (where H† is the conjugate transpose of H). In this manner we assess the PSF independently of the noise level. We obtain a cross-range resolution that approaches the diffraction limit: 6.1 mm for the metasurface system and for the SAR system. We have confirmed the resolution limits of the metasurface system experimentally using resolution targets, as shown in Fig. 3(B). The critical point of comparison is that both systems exhibit similar imaging resolution for a similar number of measurement modes. Leveraging frequency diversity thus eliminates the need for mechanical scanning or a vast number of switched elements to reach the ~80,000 Nyquist-sampled measurements across the entire aperture that SAR imaging would require. 
There are only 96 switching channels for the metasurface system, for which the swept frequency measurements can be made extremely fast using current continuous wave radio hardware. This suggests a technical approach that can achieve fast imaging rates to rival electronic SAR systems but at much lower component costs comparable to mechanically scanned SAR systems.
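The contrast between a flat and a decaying singular value spectrum, and its effect on the adjoint (matched-filter) PSF, can be illustrated with toy matrices. Both matrices below are hypothetical stand-ins rather than models of either system: a unitary DFT matrix plays the role of perfectly orthogonal modes, and random rows blurred along the voxel axis play the role of correlated modes.

```python
import numpy as np

def normalized_singular_values(H: np.ndarray) -> np.ndarray:
    """Singular values of H in descending order, divided by the largest one."""
    s = np.linalg.svd(H, compute_uv=False)
    return s / s[0]

def adjoint_psf(H: np.ndarray, j: int) -> np.ndarray:
    """Matched-filter PSF |H^H g| for the measurements g = H[:, j] of a unit scatterer at voxel j."""
    return np.abs(H.conj().T @ H[:, j])

N = 64  # voxels in a toy one-dimensional cross-range slice

# Orthogonal modes: the unitary DFT matrix has a perfectly flat spectrum.
H_orth = np.fft.fft(np.eye(N)) / np.sqrt(N)

# Correlated modes (hypothetical stand-in): random rows blurred along the voxel axis.
rng = np.random.default_rng(1)
R = rng.standard_normal((4 * N, N)) + 1j * rng.standard_normal((4 * N, N))
offsets = np.arange(-6, 7)
taps = np.exp(-0.5 * (offsets / 2.0) ** 2)              # Gaussian blur, sigma = 2 voxels
K = sum(w * np.eye(N, k=int(o)) for o, w in zip(offsets, taps))
H_corr = R @ K

s_orth = normalized_singular_values(H_orth)   # all equal to 1
s_corr = normalized_singular_values(H_corr)   # decays steadily
psf_orth = adjoint_psf(H_orth, N // 2)        # a single sharp peak
psf_corr = adjoint_psf(H_corr, N // 2)        # a broadened peak
print(np.round(s_corr[[0, N // 4, N // 2, N - 1]], 4))
```

In this toy, the decaying spectrum shows up as a wider adjoint PSF; regularized LS reconstruction, as used for the experimental images, can recover much of that resolution when the signal-to-noise ratio allows.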

Figure 3. (A) The simulated singular value spectrum over a 2 m² cross-range scene slice with Nf = 100 frequency sampling points over the K-band (17.5–26.5 GHz), compared with a SAR system having a comparable number of measurement modes and operating at two frequencies (17.5 GHz, 26.5 GHz), as described in the text. The simulated PSF response (matched filter) for both systems is also shown. (B) Experimental images (LS reconstruction) of 7 mm, 10 mm, and 15 mm resolution targets, showing that the targets are clearly resolved at the diffraction limit.

For potential 3D imaging applications, such as personnel screening, the imaging volume can be physically large. A human can fill a rectangular volume of ~2.5 million voxels when sampled at the diffraction limit. Fortunately, natural images often conform to simplifying priors, such as scene sparsity. This knowledge is utilized in compressive algorithms to extract an image from an underdetermined measurement set38,39. Here, a simple physical prior is appropriate and computationally inexpensive. The system is augmented with an optical structured light sensor (Kinect, Microsoft) to identify the target and reduce the extent of the reconstruction region. As an initial step in the imaging sequence, information about the target received from the Kinect sensor is used to build a voxel cage over the surface of the target; the reflectivity is then reconstructed at these voxel locations only, significantly reducing the computational effort.
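The voxel-cage step can be sketched as a distance test between candidate voxels and the surface points returned by the depth sensor. This is an illustrative assumption rather than the system's code: the surface is a hypothetical flat patch, distances are brute force, and the ±4 cm envelope quoted in the Methods is applied on a 1 cm grid with a 4.5 cm threshold so that the ±4 cm layers are kept without floating-point ties.

```python
import numpy as np

def voxel_cage(surface_pts: np.ndarray, grid_pts: np.ndarray, thickness: float) -> np.ndarray:
    """Return the grid voxels lying within `thickness` of any surface sample.

    surface_pts: (S, 3) points from a depth sensor; grid_pts: (G, 3) candidate voxel centres.
    Brute-force distances are fine for a sketch; a spatial index would be used at full scale.
    """
    d2 = ((grid_pts[:, None, :] - surface_pts[None, :, :]) ** 2).sum(axis=-1)
    return grid_pts[d2.min(axis=1) <= thickness ** 2]

# Hypothetical toy scene: a flat 10 cm x 10 cm surface patch at z = 1 m.
xy = np.linspace(0.0, 0.1, 11)
X, Y = np.meshgrid(xy, xy, indexing="ij")
surface = np.column_stack([X.ravel(), Y.ravel(), np.full(X.size, 1.0)])

# Candidate voxels on a 1 cm grid spanning z = 0.9 ... 1.1 m.
zs = np.linspace(0.9, 1.1, 21)
GX, GY, GZ = np.meshgrid(xy, xy, zs, indexing="ij")
grid = np.column_stack([GX.ravel(), GY.ravel(), GZ.ravel()])

cage = voxel_cage(surface, grid, thickness=0.045)   # keeps the ±4 cm layers
print(f"{len(cage)} of {len(grid)} voxels kept")
```

Only the kept voxels become columns of H, which is where the computational saving comes from.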

Because the Kinect sensor can locate the target dynamically, the imaging region is not tightly constrained; rather, the target can be positioned anywhere within the field of view of the system. If data acquisition and reconstruction are suitably fast, targets can be imaged while in motion, allowing multiple images with non-redundant information to be collected. Such images can be stitched together to enhance resolution, or to resolve image artifacts due to specularity and model error. In Fig. 4, we demonstrate the prospects for enhanced imaging by forming a composite image from a sequence of reconstructed images of a mannequin rotated in 5-degree increments (details and a rotating movie of the stitching can be found in the SOM). The stitched image exposes nearly all of the mannequin's surface detail, in contrast to any of the single images shown in Fig. 2.

Figure 4. Multiple experimental images can be stitched together to reveal the full detail of the mannequin and thereby overcome the limited specular view of any single pose. This approach enhances the detection of threat objects, such as the gun phantom (right side of the body, white dashed line) and knife phantom (left armpit, green dashed line) shown here. The composite is formed from images of the mannequin taken as it was rotated in 5-degree increments.

To assess the system performance against its theoretical capacity, we first image the mannequin target using the Kinect sensor, creating a stereolithography (STL) file that can be imported into software simulation tools we have developed. A voxel cage is then built around the STL model exactly as in the experiment, and the imported fields from the panels are used to illuminate the digitized mannequin. The mannequin voxels are assigned reflectivity values corresponding to the actual painted mannequin (ftarget ≈ 0.8), and the image is reconstructed from the virtual measurements. Figure 5 shows the excellent agreement obtained between the simulated and measured mannequin target.

Because the simulated measurements implicitly assume perfect alignment between the fields and the virtual panels, the simulated reconstruction represents the best achievable image reconstruction fidelity. The experimental reconstructions are obtained by taking the actual measurements from the system and incorporating the alignment procedure described in the Methods section. If alignment is not achieved to within a fairly tight tolerance, the reconstructed image degrades rapidly. The agreement between the simulated and measured mannequin target confirms that alignment errors have been minimized.
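Why the tolerance is tight can be seen from a back-of-the-envelope phase estimate. Under the simplifying assumption (ours) that a panel displaced by δ along the propagation direction shifts the phase of its modeled field by 2πδ/λ, the sketch below evaluates that error at the top of the K-band sweep; rotations and lateral offsets are ignored.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum (m/s)

def misplacement_phase_deg(displacement_m: float, freq_hz: float) -> float:
    """One-way phase error (degrees) from shifting a panel by `displacement_m` along the propagation path."""
    wavelength = C0 / freq_hz
    return math.degrees(2.0 * math.pi * displacement_m / wavelength)

for shift_mm in (0.1, 0.5, 1.0):
    err = misplacement_phase_deg(shift_mm * 1e-3, 26.5e9)  # top of the K-band sweep
    print(f"{shift_mm:.1f} mm -> {err:.1f} deg")
```

A 1 mm error already corresponds to roughly 32° at 26.5 GHz, enough to decorrelate the modeled and measured modes across many panel pairs.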

The imported STL model can also be used to compare the reconstruction capabilities of the metasurface system with those of the comparable SAR system described in Fig. 3. As shown in Fig. 6, the metasurface reconstruction is of similar quality to the SAR reconstruction, despite the sparse spatial sampling of the aperture. We note that imperfections in the imported model (due to limitations of the structured light sensor) limit the absolute quality of either simulated reconstruction. Nonetheless, for a similar number of allotted measurements, the study confirms that the metasurface approach can achieve image quality comparable to that of traditional SAR approaches.

Figure 6. Simulated reconstruction of the mannequin using (A) the metasurface system and (B) the comparable SAR system, with the parameters described in Fig. 3. (C) The 3D STL model, captured using a structured light measurement of the actual mannequin shown in (D).

Discussion

Frequency-diverse metasurfaces provide a uniquely flexible platform for exploiting CI capabilities. This approach can readily be scaled to higher frequencies, where the benefits in minimizing active components and their associated costs are even more compelling. Rather than relying strictly on frequency-diversity and switching networks, dynamically reconfigurable metasurfaces can be implemented that would enable inexpensive imaging systems with reduced frequency bandwidth requirements40,41,42,43,44. Whether static or dynamic, metasurface architectures are poised to revolutionize RF aperture design, and represent an exciting paradigm for imaging sensors in the mmW and THz regimes.

Methods

Characterization of the measurement modes

The image quality observed in Figs 2–6 demands accurate knowledge of the fields in the scene. In practice, it is difficult to obtain field patterns for the metasurface apertures from either analytical models or full-wave simulations at the precision required for an accurate measurement matrix. We therefore characterize the fields using near-field scanning (NFS), in which a probe antenna measures the radiated field over a plane close to the aperture. Because of manufacturing tolerances, the fields from each of the 96 panels must be measured and stored separately45,46. From these near-field scans, the fields can be numerically propagated everywhere over the field of view (FOV) by applying the dyadic Green's function propagator47, giving a complete characterization of the fields over the imaging volume and hence of the measurement matrix.
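The idea of numerically propagating a scanned near field can be sketched with a simpler, closely related technique. Note the substitution: the text uses a dyadic Green's function propagator on vector fields, whereas this sketch propagates a single scalar field component between parallel planes with the angular spectrum (plane-wave) method. The scan data, pitch, and frequency are hypothetical.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)

def angular_spectrum_propagate(field: np.ndarray, dx: float, freq_hz: float, z: float) -> np.ndarray:
    """Propagate one scalar field component sampled on a plane (pitch dx) by a distance z.

    Uses the plane-wave (angular spectrum) transfer function exp(i kz z); evanescent
    components (kx^2 + ky^2 > k^2) are dropped. Time convention exp(-i w t) is assumed.
    """
    k = 2.0 * np.pi * freq_hz / C0
    ny, nx = field.shape
    kx = 2.0 * np.pi * np.fft.fftfreq(nx, d=dx)
    ky = 2.0 * np.pi * np.fft.fftfreq(ny, d=dx)
    KX, KY = np.meshgrid(kx, ky)                      # shapes (ny, nx), matching fft2 output
    kz_sq = k**2 - KX**2 - KY**2
    propagating = kz_sq > 0.0
    kz = np.sqrt(np.where(propagating, kz_sq, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical near-field scan: 64 x 64 samples at 5 mm pitch, 22 GHz.
dx, f = 5e-3, 22e9
x = (np.arange(64) - 32) * dx
X, Y = np.meshgrid(x, x)
scan = np.exp(-(X**2 + Y**2) / (2 * 0.02**2)).astype(complex)   # Gaussian-tapered aperture field
at_20cm = angular_spectrum_propagate(scan, dx, f, 0.2)
```

The FFT implies periodic wrap-around, so in practice the scan would be zero-padded; propagating to arbitrary voxel positions, rather than to parallel planes, is where a Green's function formulation becomes more convenient.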

System alignment

Once the field values are determined by near-field scans, they must be brought into coincidence with the actual physical locations of the panels within the final layout. To facilitate alignment across the full 2.1 m × 2.1 m aperture, we incorporate four additional radiating irises into the metasurface panels, in isolated locations, to act as alignment fiducials, as shown in Fig. 1(B). Their physical locations relative to the near-field scans are determined by field propagation and search methods. Optical fiducials are also integrated onto the surfaces of the panels. Once the panels are bolted into the frame, their locations and orientations are determined by stereophotogrammetry (3D MAXShot, CREAform). Finally, the appropriate transformations from the NFS to the imaging coordinate system are determined, allowing the measured radiation patterns of the metasurfaces to be oriented exactly, regardless of panel placement and orientation.
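The final step, finding the transformation from the NFS frame to the imaging frame, can be posed as a rigid registration of corresponding fiducial positions. The text does not name the estimator; this sketch assumes the Kabsch (SVD-based least-squares) algorithm and uses hypothetical fiducial coordinates and a synthetic pose.

```python
import numpy as np

def rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t (Kabsch algorithm).

    src, dst: (n, 3) arrays of corresponding fiducial positions (n >= 3, not collinear).
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    cov = (src - src_c).T @ (dst - dst_c)             # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(Vt.T @ U.T))            # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def rotation(axis: str, angle: float) -> np.ndarray:
    """Rotation matrix about the x or z axis (helper for the synthetic test pose)."""
    c, s = np.cos(angle), np.sin(angle)
    if axis == "x":
        return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical fiducials: four isolated irises on a panel, in the NFS frame (metres).
fid_nfs = np.array([[-0.05, -0.04, 0.0], [0.05, -0.04, 0.0], [0.05, 0.04, 0.0], [-0.045, 0.05, 0.0]])
R_true = rotation("z", 0.3) @ rotation("x", -0.2)
t_true = np.array([0.7, -0.4, 0.02])
fid_frame = fid_nfs @ R_true.T + t_true               # the same fiducials located in the frame

R_est, t_est = rigid_transform(fid_nfs, fid_frame)
# Field sample positions from the scan map into the imaging frame as: pts @ R_est.T + t_est
```

With measured (noisy) fiducials the same routine returns the least-squares best fit, and the residuals give a direct check on alignment quality.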

Image reconstruction

Once the metasurface panels have been aligned and characterized, the measurement matrix must be determined throughout the scene. Even with the optical sensor constraining reconstruction to an envelope of voxels within ±4 cm of the front surface of the mannequin, the number of voxels required for diffraction-limited resolution is still large, on the order of 100,000–200,000. To keep reconstruction tractable, we exploit the fact that each Tx/Rx panel pair has a predominant k-vector, and use the analytical form of the propagator to partition the full measurement matrix into a set of smaller sub-matrices48. The computation of these sub-matrices can be further parallelized on graphics processing units (GPUs), so that the required measurement matrix operations can be performed in minutes or even seconds. We use this computational approach as a simulation tool to design, evaluate, and optimize the metasurface panels as well as the overall multi-panel aperture. We also use it for image reconstruction, importing the near-field scan data and scene measurement data.
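The benefit of partitioning can be sketched with a generic row-block decomposition, in which each block holds the Nf rows of one Tx/Rx pair. This is not the k-vector-based partitioning of ref. 48; it only shows, with hypothetical random sub-matrices, that the products an iterative solver needs (Hf and Hᴴg) can be assembled block by block without ever forming the full H, which is what allows blocks to be generated on the fly and distributed across GPUs.

```python
import numpy as np

def forward_blockwise(blocks, f):
    """g = H f, computed one Tx/Rx-pair block at a time and concatenated."""
    return np.concatenate([Hp @ f for Hp in blocks])

def adjoint_blockwise(blocks, g_blocks):
    """H^H g = sum over blocks of Hp^H gp; the stacked H is never formed."""
    return sum(Hp.conj().T @ gp for Hp, gp in zip(blocks, g_blocks))

rng = np.random.default_rng(2)
n_pairs, n_freq, n_vox = 6, 10, 50           # toy sizes
blocks = [rng.standard_normal((n_freq, n_vox)) + 1j * rng.standard_normal((n_freq, n_vox))
          for _ in range(n_pairs)]            # hypothetical per-pair sub-matrices

f = rng.standard_normal(n_vox)
g = forward_blockwise(blocks, f)
g_blocks = np.split(g, n_pairs)               # one segment of g per Tx/Rx pair
back = adjoint_blockwise(blocks, g_blocks)

H_full = np.vstack(blocks)                    # formed here only to check the sketch
```

In a real pipeline each block would be computed, used, and discarded, so peak memory scales with one block rather than with the whole matrix.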

Phase calibration of the feeding network

In addition to the precise alignment of the physical panels with respect to their computed field distributions, it is also necessary to obtain an accurate calibration of the phase advance through the feeding network to each metasurface panel49. Measuring the phase offset for each Tx/Rx path directly, however, is futile due to the perturbative effects that mechanically connecting the paths together would have on the measurements. Instead, we apply an in-scene calibration approach, in which two wideband dipole antennas within the scene transmit directly to all Tx/Rx metasurface panels. The positions of these dipole antennas are localized using the stereophotogrammetric approach, such that the free-space path lengths between all the metasurface panels and the antennas are determined. The phase advance along each path is then accurately modeled in software and subtracted from the actual experimental measurement, giving the desired calibration correction. This approach can be performed periodically to counter any drift in the feeding network and radio.
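The in-scene calibration can be sketched as dividing out a modeled free-space phase and keeping the residual as the feed-network phase. The model here is an assumption that keeps only the point-to-point path length (measured = a·exp(−jkd)·exp(jφfeed)) and ignores the panel radiation patterns. The data are synthetic, and a single calibration source stands in for the two dipoles.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)

def feed_phase(measured: np.ndarray, freqs_hz: np.ndarray, path_len_m: np.ndarray) -> np.ndarray:
    """Residual (feed-network) phase after removing the modelled free-space phase.

    measured: (n_paths, n_freq) complex responses from the in-scene calibration antenna;
    path_len_m: (n_paths,) free-space path lengths from photogrammetry.
    Model (an assumption): measured = a * exp(-1j * k * d) * exp(1j * phi_feed).
    """
    k = 2.0 * np.pi * freqs_hz / C0
    free_space = np.exp(-1j * np.outer(path_len_m, k))
    return np.angle(measured * free_space.conj())

rng = np.random.default_rng(3)
freqs = np.linspace(17.5e9, 26.5e9, 100)
paths = rng.uniform(1.0, 2.0, size=5)                     # hypothetical path lengths (m)
phi_true = rng.uniform(-3.0, 3.0, size=(5, freqs.size))   # hypothetical feed phases (rad)
k = 2.0 * np.pi * freqs / C0
measured = 0.3 * np.exp(-1j * np.outer(paths, k)) * np.exp(1j * phi_true)

phi_est = feed_phase(measured, freqs, paths)
correction = np.exp(-1j * phi_est)   # multiply scene data for each path and frequency by this
```

Because the correction is stored per path and per frequency, rerunning the same procedure periodically tracks drift without touching the hardware.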

Additional Information

How to cite this article: Gollub, J. N. et al. Large Metasurface Aperture for Millimeter Wave Computational Imaging at the Human-Scale. Sci. Rep. 7, 42650; doi: 10.1038/srep42650 (2017).


References

Sheen, D. M., McMakin, D. L. & Hall, T. E. Three-dimensional millimeter-wave imaging for concealed weapon detection. IEEE T Microw Theory 49, 1581–1592, doi: 10.1109/22.942570 (2001).

Charvat, G. L., Goodwin, J., Tobias, M., Pozderac, J. & Peabody, J. Detection Algorithm Implementation and Measured Results for A Real-Time, Through-Wall Radar System using a TDM MIMO Antenna Array. IEEE Rad Conf (2012).

Nikolova, N. K. Microwave Imaging for Breast Cancer. IEEE Microw Mag 12, 78–94, doi: 10.1109/Mmm.2011.942702 (2011).

Ahmed, S. S., Schiessl, A. & Schmidt, L. P. A Novel Fully Electronic Active Real-Time Imager Based on a Planar Multistatic Sparse Array. IEEE T Microw Theory 59, 3567–3576, doi: 10.1109/Tmtt.2011.2172812 (2011).

Bond, E. J., Li, X., Hagness, S. C. & Van Veen, B. D. Microwave imaging via space-time beamforming for early detection of breast cancer. IEEE T Antenn Propag 51, 1690–1705 (2003).

Amin, M. G. Through-the-wall radar imaging (CRC press, 2016).

Dehmollaian, M. & Sarabandi, K. Refocusing through building walls using synthetic aperture radar. IEEE Transactions on Geoscience and Remote Sensing 46, 1589–1599 (2008).

Russell, M. E. et al. Millimeter-wave radar sensor for automotive intelligent cruise control (ICC). IEEE T Microw Theory 45, 2444–2453, doi: 10.1109/22.643858 (1997).

Fenn, A. J., Temme, D. H., Delaney, W. P. & Courtney, W. E. The development of phased-array radar technology. Lincoln Laboratory Journal 12, 321–340 (2000).

Skolnik, M. I. Radar handbook 3rd edn (McGraw-Hill, 2008).

Brown, W. M. Synthetic Aperture Radar. IEEE T Aero Elec Sys AES-3, 217 (1967).

Brady, D. J. Optical imaging and spectroscopy (Wiley, 2009).

Xie, Y. B. et al. Single-sensor multispeaker listening with acoustic metamaterials. P Natl Acad Sci USA 112, 10595–10598, doi: 10.1073/pnas.1502276112 (2015).

Liutkus, A. et al. Imaging With Nature: Compressive Imaging Using a Multiply Scattering Medium. Sci Rep 4, doi: 10.1038/srep05552 (2014).

Fergus, R., Torralba, A. & Freeman, W. T. Random lens imaging. (2006).

Wang, L. B., Li, L. L., Li, Y. B., Zhang, H. C. & Cui, T. J. Single-shot and single-sensor high/super-resolution microwave imaging based on metasurface. Sci Rep 6, 26959, doi: 10.1038/srep26959 (2016).

Venkatesh, S., Viswanathan, N. & Schurig, D. W-band sparse synthetic aperture for computational imaging. Opt. Express 24, 8317–8331, doi: 10.1364/Oe.24.008317 (2016).

Montaldo, G., Palacio, D., Tanter, M. & Fink, M. Building three-dimensional images using a time-reversal chaotic cavity. IEEE T Ultrason Ferr 52, 1489–1497, doi: 10.1109/Tuffc.2005.1516021 (2005).

Furxhi, O., Marks, D. L. & Brady, D. J. Echelle crossed grating millimeter wave beam scanner. Opt. Express 22, 16393–16407, doi: 10.1364/Oe.22.016393 (2014).

Chan, W. L. et al. A single-pixel terahertz imaging system based on compressed sensing. Appl Phys Lett 93, doi: 10.1063/1.2989126 (2008).

Takhar, D. et al. A new compressive imaging camera architecture using optical-domain compression. P Soc Photo-Opt Ins 6065, 606509, doi: 10.1117/12.659602 (2006).

Watts, C. M. et al. Terahertz compressive imaging with metamaterial spatial light modulators. Nat Photonics 8, 605–609, doi: 10.1038/Nphoton.2014.139 (2014).

Hunt, J. et al. Metamaterial Apertures for Computational Imaging. Science 339, 310–313, doi: 10.1126/science.1230054 (2013).

Holloway, C. L. et al. An Overview of the Theory and Applications of Metasurfaces: The Two-Dimensional Equivalents of Metamaterials. IEEE Antenn Propag M 54, 10–35, doi: 10.1109/Map.2012.6230714 (2012).

Engheta, N. & Ziolkowski, R. W. Metamaterials: physics and engineering explorations (Wiley-Interscience, 2006).

Ding, X. M. et al. Ultrathin Pancharatnam-Berry Metasurface with Maximal Cross-Polarization Efficiency. Adv Mater 27, 1195–1200, doi: 10.1002/adma.201405047 (2015).

Fromenteze, T. et al. Computational imaging using a mode-mixing cavity at microwave frequencies. Appl Phys Lett 106, doi: 10.1063/1.4921081 (2015).

Yurduseven, O., Gowda, V., Gollub, J. & Smith, D. Multistatic Microwave Imaging with Arrays of Planar Cavities. IET Microwaves, Antennas & Propagation 10, 1174–1181 (2016).

Yurduseven, O., Gowda, V. R., Gollub, J. N. & Smith, D. R. Printed Aperiodic Cavity for Computational and Microwave Imaging. IEEE Microw Wirel Co 26, 367–369 (2016).

Imani, M. F., Sleasman, T., Gollub, J. N. & Smith, D. R. Analytical modeling of printed metasurface cavities for computational imaging. Journal of Applied Physics 120, 144903, doi: 10.1063/1.4964336 (2016).

Marks, D. L., Gollub, J. & Smith, D. R. Spatially resolving antenna arrays using frequency diversity. JOSA A 33, 899–912 (2016).

Yurduseven, O., Gollub, J. N., Marks, D. L. & Smith, D. R. Frequency-diverse microwave imaging using planar Mills-Cross cavity apertures. Opt. Express 24, 8907–8925, doi: 10.1364/OE.24.008907 (2016).

Golay, M. J. E. Point Arrays Having Compact, Nonredundant Autocorrelations. J Opt Soc Am 61, 272–273, doi: 10.1364/Josa.61.000272 (1971).

Siebert, W. M. Circuits, Signals, and Systems (MIT Press, 1986).

Liu, C., Wang, Y. M. & Heng, P. A. A comparison of truncated total least squares with Tikhonov regularization in imaging by ultrasound inverse scattering. Phys Med Biol 48, 2437–2451, doi: 10.1088/0031-9155/48/15/313 (2003).

Swearingen, J. J. & McFadden, E. B. Vol. 63 (ed. Civil Aeromedical Research Institute) 1–9 (U. S. Government Printing Office, Oklahoma City, OK, 1964).

Yurduseven, O. et al. Resolution of the Frequency Diverse Metamaterial Aperture Imager. Prog Electromagn Res 150, 97–107, doi: 10.2528/Pier14113002 (2015).

Candes, E. J., Romberg, J. K. & Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun Pur Appl Math 59, 1207–1223, doi: 10.1002/cpa.20124 (2006).

Donoho, D. L. Compressed sensing. IEEE T Inform Theory 52, 1289–1306, doi: 10.1109/Tit.2006.871582 (2006).

Sleasman, T., Imani, M. F., Gollub, J. N. & Smith, D. R. Dynamic metamaterial aperture for microwave imaging. Appl Phys Lett 107, doi: 10.1063/1.4935941 (2015).

Sleasman, T., Boyarsky, M., Imani, M. F., Gollub, J. N. & Smith, D. R. Design considerations for a dynamic metamaterial aperture for computational imaging at microwave frequencies. J Opt Soc Am B 33, 1098–1111, doi: 10.1364/JOSAB.33.001098 (2016).

Yang, H. H. et al. A programmable metasurface with dynamic polarization, scattering and focusing control. Sci Rep 6, 35692, doi: 10.1038/srep35692 (2016).

Sleasman, T., Imani, M. F., Gollub, J. N. & Smith, D. R. Microwave Imaging Using a Disordered Cavity with a Dynamically Tunable Impedance Surface. Physical Review Applied 6, doi: 10.1103/PhysRevApplied.6.054019 (2016).

Yaghjian, A. An overview of near-field antenna measurements. IEEE T Antenn Propag 34, 30–45 (1986).

Gregson, S. F., McCormick, J. & Parini, C. Principles of Planar Near-Field Antenna Measurements (Institution of Engineering and Technology, 2007).

Joy, E. B. & Paris, D. T. Spatial Sampling and Filtering in near-Field Measurements. IEEE T Antenn Propag 20, 253–261, doi: 10.1109/Tap.1972.1140193 (1972).

Lipworth, G. et al. Metamaterial apertures for coherent computational imaging on the physical layer. J Opt Soc Am A 30, 1603–1612, doi: 10.1364/Josaa.30.001603 (2013).

Boag, A. A fast multilevel domain decomposition algorithm for radar imaging. IEEE T Antenn Propag 49, 666–671 (2001).

Yurduseven, O. et al. Software Calibration of a Frequency-Diverse, Multistatic, Computational Imaging System. IEEE Access 4, 2488–2497 (2016).

Acknowledgements

This work was supported by the Department of Homeland Security, Science and Technology Directorate (Contract No. HSHQDC-12-C-00049). The published material represents the position of the author(s) and not necessarily that of the DHS.

Author information

Contributions

O.Y., D.L.M., J.N.G., M.F.I., T.S., H.O., T.Z., M.B., and G.L. designed, optimized, fabricated, and characterized the metasurface panels and, with the additional help of K.P.T., developed the full system calibration routines. D.A., A.P., and M.R. designed and built the custom RF radio. A.R. developed the software used for system simulation and experimental image reconstruction. D.L.M. and D.B. provided theoretical insight into the optimization of frequency-diverse systems. K.P.T. developed the control code for operating the experimental system. M.B. designed the custom structural components and packaging of the system. J.N.G., M.R., and D.R.S. supervised the project. All authors contributed to writing the manuscript.

Ethics declarations

Competing interests

D.R.S., D.A.B., and M.R. are all co-founders of and have financial interests in Evolv Technology, a company that was formed to commercialize computational imaging concepts for security screening applications.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Gollub, J., Yurduseven, O., Trofatter, K. et al. Large Metasurface Aperture for Millimeter Wave Computational Imaging at the Human-Scale. Sci Rep 7, 42650 (2017). https://doi.org/10.1038/srep42650

DOI: https://doi.org/10.1038/srep42650
