Abstract

Genome-wide association studies present computational challenges for missing data imputation, while the advances of genotype technologies are generating datasets of large sample sizes with sample sets genotyped on multiple SNP chips. We present a new framework SparRec (Sparse Recovery) for imputation, with the following properties: (1) The optimization models of SparRec, based on low-rank and low number of co-clusters of matrices, are different from current statistics methods. While our low-rank matrix completion (LRMC) model is similar to Mendel-Impute, our matrix co-clustering factorization (MCCF) model is completely new. (2) SparRec, as other matrix completion methods, is flexible to be applied to missing data imputation for large meta-analysis with different cohorts genotyped on different sets of SNPs, even when there is no reference panel. This kind of meta-analysis is very challenging for current statistics based methods. (3) SparRec has consistent performance and achieves high recovery accuracy even when the missing data rate is as high as 90%. Compared with Mendel-Impute, our low-rank based method achieves similar accuracy and efficiency, while the co-clustering based method has advantages in running time. The testing results show that SparRec has significant advantages and competitive performance over other state-of-the-art existing statistics methods including Beagle and fastPhase.

Similar content being viewed by others

Introduction

Genome-wide association studies (GWAS) are promising to contribute to uncovering the genetic variations for many complex human diseases, with the many initiatives including the International HapMap Project and the 1000 Genomes Project. Genotype imputation represents the computational challenge of predicting the genotypes at the SNPs that are not directly genotyped in the study sample. Genotype imputation could increase the power of the GWAS study through fine-mapping to increase the chance of a causal SNP being identified, meta-analysis when combining different cohorts using different genotyping chips, and imputation of untyped variations which are not typed in the reference panel or the study sample1. Genotype imputation methods have been widely used to boost genome-wide association studies across the genome or at a focused region.

With the advances of genotyping technologies, large amounts of genetic datasets are being continuously and rapidly generated around the world. As is pointed in ref. 2, for “next-generation” association data studies in the near future, we will be handling datasets with (1) larger sample sizes, (2) unphased and incomplete genotypes, and (3) multiple reference panels and more diverse study datasets from different platforms with different sets of SNPs. This brings great computational challenges to the genotype imputation problem, which calls for not only the improvement of the current approaches, but also new mathematical models, which are supposed to exploit the structure of the large datasets and model the imputation problem in ways different from traditional approaches.

Many current imputation methods are based on statistics models, such as the Hidden Markov Model (HMM) and the Expectation-Maximization (EM) algorithm1,3. The way they work is to use haplotype patterns in reference panels to predict untyped genotypes in study panels. They depend on the availability of the reference panels, thus their performances are in a way limited by the quality of the chosen reference panels. Due to the nature of the models, current statistics methods are not flexible in handling datasets with multiple reference panels and diverse study panels for imputation tasks with various studies. Current statistics methods often require a cumbersome process to prepare the formatted input files, as well as a process for interpreting the output results from the computational methods, which makes the approaches less amenable to biological and biomedical researchers imputing their study samples.

Chi et al.4 developed a low-rank matrix completion based method Mendel-Impute for GWAS imputation. The HMM or EM based statistics methods and the low-rank matrix completion based method Mendel-Impute all rely on the identification of shared haplotypes (or the low-rank structure) in local blocks arising from linkage disequilibrium (LD). For the computational models proposed in this paper, our idea originated from the observation that there are often some sort of sparsity structures, such as low-rank and/or low numbers of co-clusters, in large genomic data matrices. Though similar sparsity structure has been used in imaging processing in the context of compressive sensing, only until recently we applied it in analyzing cancer patient gene expression profiling data matrices5. In this paper we propose to use the sparsity structure to meet the challenge of missing data imputation for GWAS.

In this paper we present a new framework, SparRec (Sparse Recovery), for imputing missing genetic data in genome-wide association studies. The models of SparRec are designed based on the sparse properties of low-rank and low numbers of co-clusters of the large, noisy, genetic datasets of matrices with missing data. We would like to point out that the low-rank matrix completion (LRMC) model is similar to Mendel-Impute, but the matrix co-clustering factorization (MCCF) model is completely new. We will illustrate how our approach is able to effectively find patterns for imputation within study data, both with and without reference panels, and even with data missing rate as high as 90%. We will compare the performance of our approach with several other mainstream approaches for genotype imputation, including statistics methods fastPHASE6 and Beagle7, and the low-rank matrix completion based method Mendel-Impute4. SparRec is easy to use for metadata analysis, and it requires very simple, easy-to-process input file format and easy-to-interpret output result files. It has better or comparable performance compared to current state-of-the-art methods, especially for handling large sample size data with very different sets of SNPs and no reference panels.

Methods

The problem of genotype imputation

Current approaches for haplotype inference and missing data imputation usually process the genotype data, and each sample is phased and the haplotypes are modeled as mosaic of those haplotypes of the reference panel1,3. Our approach could conduct imputation for both haplotype and genotype data matrices from different cohorts using different genotyping chips.

Given the matrices of the genotype data with untyped SNPs or the phased haplotype data with missing values, the imputation problem is to impute the missing data entries of the data matrices. The reader is referred to the illustrative formats of the data matrices in Figs 1 and 2 for the diploid genotype data and the corresponding phased haplotype data. For the data matrices, each row corresponds to one individual sample and each column corresponds to one SNP. In the genotype data matrices, 0, 1, and 2, which correspond to the number of minor alleles that an individual carries, are used to represent the possible genotypes such as, BB, Bb, bb, with B and b being A, T, C or G. In the haplotype data matrices, 0 and 1 are used to represent the major allele and the minor allele.

We present a natural and flexible modelling framework, which utilizes information across multiple reference panels and study panels, and which achieves high recovery accuracy even when the data matrices have high percentages of missing entries. Our approach combines the multiple chosen reference panels and the different study panels together as a large whole data matrix with missing entries (refer to Figs 1 and 2). The idea of our approach is based on the following observation: although the large genotype data matrix, which usually has missing entries and which may be contaminated by noises and errors from experimental samples and sequencing technologies, appear to be very complex, the underlying structure of the data matrix contains essential “sparse” information. By “sparse”, we mean the data matrix has the mathematical property of low-rank or low number of co-clusters. We test the sparse property using large genotype data matrices and the testing results show the matrices are usually low-ranked. In the following we present the technical details of our approach, and test the effects of different matrix numerical representations to the ranks of data matrices.

Imputation Based on Low-Rank Matrix Completion

The low-rank matrix completion (LRMC) model aims to fill in missing data values of a matrix based on the priori information that the matrix under consideration is of low rank. The low-rank matrix completion model can be formulated as the following optimization problem:

where rank(X) denotes the rank of matrix X, and Ω denotes the index set of the known entries of M. That is, we are given a set of known entries of M, and we want to fill in the missing entries such that the completed matrix is of low rank. In the genotype missing data imputation problem, each row of the matrix M represents a patient sample, and each column of the matrix M corresponds to a SNP. That is, Mij represents the j-th allele of the i-th patient sample. It is usually believed that patients can be classified into different categories and patients in the same category should have similar genetic patterns. Therefore, we believe that the matrix M is low-rank, or at least numerically low-rank.

The LRMC model has been widely used in online recommendation, collaborative filtering, computer vision and so on. Recently, it has been shown in refs 8, 9, 10, that under certain randomness hypothesis, the model (1) is equivalent to the following convex optimization problem with high probability:

where ||X||* is called the nuclear norm of matrix X and is defined as the sum of singular values of X. The nuclear norm minimization problem (NNM) is numerically easier to solve than the LRMC model because it is a convex problem. Many efficient numerical algorithms have been suggested to solve the NNM model, for example11,12,13, to name just a few. In this paper, we use the fixed point continuation method (FPCA) proposed in ref. 11 to solve the model (2).

A closely related work to (LRMC) is the Mendel-Impute method introduced in ref. 4. The Mendel-Impute method implements Nesterov’s accelerated proximal gradient method (APG) to solve (2), while FPCA proposed in ref. 11 can be seen as the ordinary version of proximal gradient method for solving (2). Theoretically, APG is faster than FPCA for solving LRMC, because the former attains an  -optimal solution in

-optimal solution in  iterations, while the latter one attains an

iterations, while the latter one attains an  -optimal solution in

-optimal solution in  iterations. Mendel-Impute also implements two important techniques to further accelerate the speed of APG: the sliding window scheme to better balance the trade-offs between accuracy and running time, and the line search technique to find an appropriate step size for the proximal gradient step. From our experiments, we found that the sliding window scheme is quite helpful for missing data imputation. Thus, we incorporated the sliding window scheme to LRMC, denoted as LRMC-s. We observed from our numerical tests that the performance of LRMC-s is comparable to Mendel-Impute.

iterations. Mendel-Impute also implements two important techniques to further accelerate the speed of APG: the sliding window scheme to better balance the trade-offs between accuracy and running time, and the line search technique to find an appropriate step size for the proximal gradient step. From our experiments, we found that the sliding window scheme is quite helpful for missing data imputation. Thus, we incorporated the sliding window scheme to LRMC, denoted as LRMC-s. We observed from our numerical tests that the performance of LRMC-s is comparable to Mendel-Impute.

Imputation Based on Matrix Co-clustering Factorization

We now propose a new approach for imputation that is based on matrix co-clustering factorization (MCCF). Ma et al. in ref. 5 developed a co-clustering model for two-dimensional and higher-dimensional matrix co-clustering, which is based on a tensor optimization model and an optimization method termed Maximum Block Improvement (MBI)14,15. Inspired by the idea of matrix co-clustering for imputation, we develop a basic model as follows.

where

In (3), the Frobenius norm of a matrix X is defined as  Our imputation approach, based on the matrix co-clustering factorization, aims to complete matrix M by using a low-rank matrix factorization model. In our framework, A is the data matrix with missing entries; Y1 and Y2 are the artificial row assignment matrix and the artificial column assignment matrix, respectively, and X is the artificial central-point matrix. Note that A is also an unknown decision variable in (3), because only a subset of its entires is known. Moreover, note that (3) requires the input of k1 and k2, which are closely related to the rank of the matrix to be completed. Therefore, in practice, if we have a good estimation to the rank of the matrix, then (3) is a better model to use than (2), because it also provides us the clustering information of individual samples and SNPs.

Our imputation approach, based on the matrix co-clustering factorization, aims to complete matrix M by using a low-rank matrix factorization model. In our framework, A is the data matrix with missing entries; Y1 and Y2 are the artificial row assignment matrix and the artificial column assignment matrix, respectively, and X is the artificial central-point matrix. Note that A is also an unknown decision variable in (3), because only a subset of its entires is known. Moreover, note that (3) requires the input of k1 and k2, which are closely related to the rank of the matrix to be completed. Therefore, in practice, if we have a good estimation to the rank of the matrix, then (3) is a better model to use than (2), because it also provides us the clustering information of individual samples and SNPs.

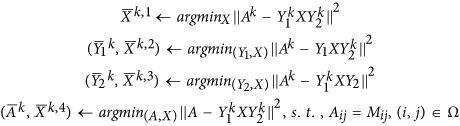

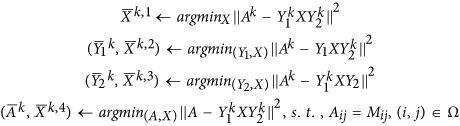

Although the MCCF model is non-convex, it has some natural block-structure that can be utilized to adopt an efficient solution method. We propose to solve the model (3) using a block coordinate update (BCU) procedure. There are four block variables in the model (3), namely A, X, Y1 and Y2. The basic idea of BCU is, at each iteration, to minimize the function f with respect to one block variable while the other three blocks are fixed at the current known values. This idea is effective because we observed that minimizing f for only one block variable among A, X, Y1 and Y2 is always relatively easy. A naive implementation of the BCU idea is to minimize f in the order of A → Y1 → X → Y2, and in each step only one block variable is updated with the other three blocks being fixed. The flow chart of this BCU algorithm is shown in Fig. 3. In our MCCF model, the matrix X actually plays a more important role than the other three blocks. As a result, it is beneficial if we can update the X block more frequently than the other three blocks. Therefore, we implemented the following four different algorithms based on the BCU idea.

-

1

“BCU-1”: BCU with Block Loop in the following order (A − X) → (Y1 − X) → (Y2 − X). That is, we take A and X together as one (bigger) block. Similarly Y1 and X is one block and Y2 and X is one block. For these three blocks, we use BCU to update them one by one. In each block, for example, (A − X), since we need to minimize f with respect to A and X simultaneously, we use the alternating minimization procedure that minimizes f with respect to A and X alternatingly, until the function value ceases to change. The other two blocks (Y1 − X) and (Y2 − X) are dealt with in the same way.

-

2

“BCU-2”: BCU with the following order of block variables: A → X → Y1 → X → Y2. That is, we update X twice in each sweep of the block variables.

-

3

“BCU-3”: BCU with the following order of block variables: A → X → Y1 → X → Y2 → X. That is, we update X three times in each sweep of the block variables. This variant of BCU has been considered by Xu16 for tensor decomposition problems.

-

4

“MBI-BL”: This is a variant of the MBI algorithm proposed by Chen et al.15. MBI-BL applies MBI algorithm in ref. 15 to minimize f with four blocks variables:

and (A − X). In each block, for example, (A − X), we use alternating block minimization scheme to minimize f with respect to A and X alternatingly, until the function value ceases to change. After having attempted all four block variables, we update the block variable with maximum improvement.

and (A − X). In each block, for example, (A − X), we use alternating block minimization scheme to minimize f with respect to A and X alternatingly, until the function value ceases to change. After having attempted all four block variables, we update the block variable with maximum improvement.

The flow chart of the imputation algorithm based on the idea of matrix co-clustering factorization (MCCF).

A is the data matrix with missing entries; Y_1 and Y_2 are the artificial row assignment matrix and the artificial column assignment matrix, respectively, and X is the artificial central-point matrix.

All the algorithms are terminated when the objective value in the (k + 1)-th iteration does not decrease significantly from that in the k-th iteration. We give the detailed description of BCU-1, BCU-2, BCU-3 and MBI-BL as Algorithms 1, 2, 3 and 4, respectively (Refer to the steps of each of the four algorithms as follows).

Algorithm 1 (BCU-1)

Given initial iterates  and initial values v0 = 0, v1 = 1:

and initial values v0 = 0, v1 = 1:

For k = 0, 1, …, run the following until

1) (Update A and X). For  run the following updates I and II for A and X until the objective value does not change much; Xk,0 = Xk

run the following updates I and II for A and X until the objective value does not change much; Xk,0 = Xk

I.

II.

2) Set  ,

,

3) (Update Y1 and X). For  run the following updates I and II for Y1 and X until the objective value does not change much:

run the following updates I and II for Y1 and X until the objective value does not change much:

I.

II.

4) Set  ,

,

5) (Update Y2 and X). For  run the following updates I and II for Y2 and X until the objective value does not change much:

run the following updates I and II for Y2 and X until the objective value does not change much:

I.

II.

6) Set

7) Compute

Algorithm 2 (BCU-2)

Given initial iterates  and initial values v0 = 0, v1 = 1:

and initial values v0 = 0, v1 = 1:

For k = 0, 1, …, run the following until

-

1

Update A:

-

2

Update X:

-

3

Update Y1:

-

4

Update X:

-

5

Update Y2:

-

6

Compute

Algorithm 3 (BCU-3)

Given initial iterates  and initial values v0 = 0, v1 = 1:

and initial values v0 = 0, v1 = 1:

For k = 0, 1, …, run the following until

-

1

Update A:

-

2

Update X:

-

3

Update Y1:

-

4

Update X:

-

5

Update Y2:

-

6

Update X:

-

7

Compute

Algorithm 4 (MBI-BL)

Given initial iterates  , and initial values v0 = 0, v1 = 1.

, and initial values v0 = 0, v1 = 1.

For k = 0, 1,…, run the following until

-

1

Block Improvement:

-

2

Compute the corresponding objective values:

-

3

Maximum Improvement: Compare w1, w2, w3, w4, pick up the smallest value to update the corresponding block variables:

-

I

If w1 is the smallest, then

, vk+1 ← w1

, vk+1 ← w1 -

II

If w2 is the smallest, then

, vk+1 ← w2

, vk+1 ← w2 -

III

If w3 is the smallest, then

-

IV

If w4 is the smallest, then

-

I

The effects of different matrix numerical representations to the matrix ranks

Here we show that the way we represented the entries of the matrix with different numbers seems to generate approximate low-rank matrices in practice. These observations justified that our (LRMC-s) and (MCCF) models indeed capture the underlying structures of the data. We used two data sets referred to as “data-00-ATCG” and “data-15-ATCG” in our experiment: Both data sets are of size (10,000 × 3,000), with each data set including 10,000 alleles and 1,500 sample diplotypes from the British Birth Cohort. We computed the singular values of the data matrix obtained by assigning A = 0, T = 1, C = 0, G = 1 in data-00-ATCG, and the top ten leading singular values are 1e + 3*[3.3236, 0.6248, 0.0687, 0.0660, 0.0635, 0.0599, 0.0592, 0.0582, 0.0578, 0.0574]. We can see that the largest singular value is about 48 times bigger than the third largest singular value, the second largest singular value is about 9 times bigger than the third largest singular value. However, starting from the third singular value, there is not much difference among the rest singular values. As a result, we can conclude that this data matrix is approximately a rank-2 matrix. To help the readers better visualize this observation, we plot the 100 leading singular values of this data matrix in Fig. 4a, from which we can clearly see that the first two singular values are significantly larger than other singular values and the matrix can be viewed as an approximately rank-2 matrix. Similarly, Fig. 4b shows the 100 leading singular values of the data matrix obtained by assigning A = 1, T = 2, C = 3, G = 4 in data-00-ATCG, whose 10 leading singular values are 1e + 4*[1.5100, 0.1621, 0.0159, 0.0143, 0.0142, 0.0139, 0.0136, 0.0135, 0.0135, 0.0133], which again indicates that the resulting matrix can be regarded as an approximate rank-2 matrix. Figure 4c shows the 100 leading singular values of the matrix obtained by assigning A = −2, T = −1, C = 1, G = 2 in data-15-ATCG, whose 10 leading singular values are 1e + 3*[6.7762, 2.3994, 0.2154, 0.1906, 0.1879, 0.1866, 0.1855, 0.1842, 0.1830, 0.1819], which again indicates that the resulting matrix is approximately rank-2. Figure 4d shows the 100 leading singular values of the matrix obtained by assigning A = 1, T = 0, C = 1, G = 0 in data-15-ATCG, whose leading 10 singular values are 1e + 3*[3.5305, 0.6383, 0.0746, 0.0630, 0.0601, 0.0596, 0.0585, 0.0580, 0.0578, 0.0575], which again indicates that the resulting matrix is approximately rank-2. Moreover, we also computed the leading singular values of datasets Chr22, Chr22_3841, HapMap3 and 1KG_chr22 are the results are shown in Fig. 5. From Fig. 5 we can see that these datasets are approximately low-rank as well. All these observations suggest that the resulting matrices are usually low-rank, and thus can be completed by solving our models (LRMC-s) and (MCCF) using the proposed methods.

The effects of different numerical representations to the matrix ranks, with the matrix of size (10,000 × 3,000).

Part a (top left): the distribution of the 100 leading singular values of the data matrix obtained by assigning A = 0, T = 1, C = 0, G = 1 in data-00-ATCG. Part b (top right): the distribution of the 100 leading singular values of the data matrix obtained by assigning A = 1, T = 2, C = 3, G = 4 in data-00-ATCG. Part c (bottom left): the distribution of the 100 leading singular values of the data matrix obtained by assigning A = −2, T = −1, C = 1, G = 2 in data-15-ATCG. Part d (bottom right): the distribution of the 100 leading singular values of the data matrix obtained by assigning A = 1, T = 0, C = 1, G = 0 in data-15-ATCG.

For LRMC-s and MCCF, they are two different models for the same target: matrix completion. Usually if we have a good estimation of the rank of the matrix, then we can decide the size of matrices Y1, X, Y2, and thus MCCF is preferred because it also provides the clustering information of individual samples and SNPs. Otherwise, we should use LRMC-s, which does not need the rank information.

Data availability

The framework of SparRec (Sparse Recovery) is implemented in MATLAB and the source code is available from: https://sourceforge.net/projects/sparrec/files/?source=navbar Or, http://bioinformatics. astate.edu/code2/ and also available on GitHub at: https://github.com/astate-bioinformatics/SparRec.

Testing Results

In this section, we shall test the recovering capabilities of all of our proposed methods and the three currently mainstream imputation methods (Beagle, fastPHASE and Mendel-Impute) for several real datasets with or without reference information. In Tables 1, 2, 3, 4, 5, 6, we report the error rates of fastPHASE, Beagle, Mendel-Impute, LRMC-s, and four MCCF algorithms: BCU-1, BCU-2, BCU-3 and MBI-BL, where we chose k1 = 100 and k2 is the one that gives the best error rate from the list {2, 3, 5, 10, 20}, and in most cases k2 = 20. Moreover, in Tables 7 and 8, we report the comparison of these algorithms for various datasets with 1% masked genotypes, which are commonly encountered in GWAS study. Overall, we shall see that Mendel-Impute and LRMC-s are quite comparable, and outperform other methods. In Tables 9, 10, 11, 12, 13, we test the robustness of our four MCCF algorithms with different combinations of k1 and k2, and our results show that the MCCF algorithms are not sensitive to k1, and larger k2 usually gives better error rates.

Comparison of our LRMC-s and MCCF algorithms with Mendel-Impute, Beagle and fastPHASE

To justify the performance of our proposed methods, we compare the recovery accuracy of our algorithms with that of some popular statistics imputation method such as Beagle7 (version 3.3.2), fastPHASE6 (version 1.4.0). Both of those methods belong to haplotype-inference methods. In particular, fastPHASE is based on a haplotype-clustering model with a fixed number of clusters, while Beagle describes the correlation between markers as a localized haplotype-cluster model, which can in turn be viewed as a hidden Markov model (HMM). The merit of HMM is that it can be used to sample or find the most likely haplotype pair for each individual. Different from other approaches (such as IMPUTE v22), Beagle and fastPHASE still work even in the absence of reference panels, making them an ideal candidate for comparison. We also compare our approaches with another low-rank matrix completion based method Mendel-Impute4 in the experiment.

Criteria for Evaluation

For each data set, we first masked 5%, 10%, 25%, 50%, 60%, 70%, 80% and 90% of the genotypes respectively. Then we run fastPHASE, Beagle (default setting with R = 4) and our methods proposed above together with some rounding procedure if necessary to recover the missing data. The raw output of our methods consists of numbers in the range 0–2. These are converted into genotypes by rounding and an estimate of uncertainty could be obtained by means of a similar post-processing step to that used in ref. 4.

The so-called allelic-imputation error rate is referred to as the proportion of missing alleles that are incorrectly imputed. It has been widely adopted in the literature to measure the capability of various imputation approaches. In this paper, we calculated this error rate of the tested methods and summarize the results in the following tables.

In Tables 1, 2, 3, 4, 5, 6, we reported the comparison results of Mendel-Impute, LRMC-s and MCCF on various datasets. We shall see that the performance of Mendel-Impute and LRMC-s is quite comparable.

Comparison without Reference Information

The first three datasets used for comparison are tested without using a reference panel, and are provided by the Wellcome Trust Case Control Consortium (WTCCC). Two of these data sets contain genetic information from chromosome 22. Of these, the smaller dataset referred to as “Chr22” contains 500 markers, while the larger referred to as “Chr22_3841” contains 3841 markers. Both studies include the genetic information of 1093 sample genotypes. The latter set is the same set that is used in ref. 6 for the study of ImputeV2.

Tables 1 and 2 give allelic-imputation error rate of Chr22 and Chr22_3841 respectively for each haplotype-inference method. Those results suggest that both LRMC-s and MCCF algorithms consistently outperform Beagle for both data sets regardless whether or not the missing proportion is low or high. For the approaches based on the matrix co-clustering factorization, BCU-2 and BCU-3 usually achieve the least error rate.

The third set, which is referred to as “WTCCC” in the study, includes one thousand and five hundred samples from the British Birth Cohort with 10,000 alleles for each. These 10,000 markers were taken from the middle of the entire genome-wide association study.

The error rates of various imputation approaches are summarized in Table 3. Similar to the results of previous tests, the best-performing approaches are BCU-2 and BCU-3. The low-rank matrix completion method performs better than Beagle but slightly worse than the best two co-clustering factorization algorithms.

Comparison in the Presence of Reference Panel

In the previous tests, we did not provide any reference information when performing imputation, which however is not the case in many imputation studies. To further justify the capability of our approaches, we run our test on a dataset that includes reference information. This dataset is from the HapMap3, and itself consists of 165 sampled individuals with 19711 alleles per sample. For the purpose of imputation, 41 of the samples were separated to form a reference panel, and we had the remaining 124 samples gradually masked (from 5% to 90%) as the testing data.

The error rates of the imputation methods discussed in this paper are presented in Table 4.

It appears in this case that both LRMC-s and MCCF algorithms significantly outperforms other methods. In the presence of reference panels, Beagle is superior to all of our matrix co-clustering factorization based approaches. However, we notice that the dataset under test is not balanced in its two dimensions. In other words, we are dealing with a very unbalanced matrix with almost 20000 markers and only hundreds of samples.

Therefore, to further investigate the impact of reference information on our approaches, we keep the number of samples unchanged while selecting only the first 3000 markers, even further only the first 300 markers, and run similar tests under new settings respectively. The error rates of each imputation algorithm are reported in Tables 5 and 6. From those tables, we can see that the performances of our matrix co-clustering factorization based algorithms steadily improve as the number of markers decreases. When considering only 300 markers, all of our proposed algorithms enjoy a lower error rate than that of Beagle.

Comparison under some more practical scenarios

In this section, we generated a few new data sets to further compare the error rates given by Mendel-Impute, LRMC-s and MCCF, and these results are reported in Table 7; and the CPU time consumed is given in Table 8. Specifically, we generated 1% missing rate data for chr22, chr22_3841, WTCCC and HapMap3 respectively. In addition, we generated 1KG_chr22, which consists 60000 SNPs and 1092 individuals. We took the first half of individuals as reference panel and masked about 85% the second half individuals as study panel. Then we tested this data set either with reference or without reference panel. It is worth mentioning that the resulting matrices by masking components through these two ways are more likely to be encountered in practice.

The dataset of 1KG_chr22 setup information is detailed as follows. The dataset 1KG_chr22 was prepared to represent imputing SNPs from a reference panel typed on a different chip from the study sample, as described in ref. 4. We assumed that the data were generated by the Illumina “Infinium Omni2.5-8 BeadChip”. We created a study and reference panel derived from the 1000 Genomes Project haplotypes from the March 2012 release of Phase 1, obtained from the IMPUTE2 website2. This dataset consists of haplotypes for 1092 individuals, which we split into a study and reference panel by assigning half of the individuals to each such that the distribution of ethnicities was preserved across both groups. For our study panel we chose 60,000 SNPs from a randomly selected region on chromosome 22 and masked genotypes of all SNPs that were not present in Illumina’s manifest file for the Omni2.5-8 Beadchip. The resulting study panel included 8,808 SNPs for which genotypes were present, and 51,192 SNPs with masked genotypes. Thus, the study panel consists of 85.3% systematically missing data for 546 individuals.

For MCCF algorithms, we used k1 = 100 and k2 = 20. The error rates of different algorithms are summarized in Table 7. All the experiments in this subsection were done on a multicore computer with eight 2.8 GHz Intel Core E3 processors and 16 GB of RAM.

From Table 7 we see that LRMC-s and Mendel achieve comparable error rates, both outperforming Beagle and fastPHASE in all scenarios. Moreover, for the biggest data set 1KG_chr22 with reference panel (a matrix of size 60000 × 2184), the error rate of LRMC-s is slightly lower than that of Mendel. Since there are markers with all samples missing in the study panel of 1KG_chr22, both fastPHASE and Beagle failed to return a valid phased file when they are not provided with reference panel.

Since it is reported in ref. 4 that Mendel is very efficient in terms of running time (much faster than Beagle, especially when the data set is large), we also compared the CPU times of Mendel-Impute and our algorithms. The running times are recorded in seconds using the tic/toc functions of MATLAB.

Table 8 suggests that BCU-2 and BCU-3 are the fastest algorithms. Combining with Table 7, we note that their yielded error rates are not as good as that of Mendel-Impute and LRMC-s but the differences are relatively small. Moreover, their error rates are better than Beagle’s in most cases. In addition, Mendel is usually faster than LRMC-s. However, for the 1KG_chr22 data set without reference, LRMC-s is faster than Mendel.

For general memory usage, we tested our algorithms with the dataset 1KG_chr22 with reference panel (which is a matrix of 60000*2100 entries). It shows that the peak memory usage of our algorithms is about 1 GB, which matches the size of the input matrix representation with MATLAB.

Robustness of our MCCF algorithms with different values of k1 and k2

Tables 9, 10, 11, 12, 13 show the error rates of the four MCCF algorithms with different combinations of k1 and k2 for datasets Chr22, Chr22_3841, WTCCC and HapMap3 with 1% missing entries, and 1KG_chr22.

From Tables 9, 10, 11, 12, 13 we can observe that in terms of error rate, MCCF is not sensitive to k1, while larger k2 usually produces smaller error rate (the only exception is MBI_BL for 1KG_chr22 where k2 = 2 gives better error rate than larger ones, but the difference is not very significant). Moreover, it is intuitive that larger k2 usually results in longer CPU time, because larger linear systems are needed to be solved in the MCCF algorithms. Therefore, to balance the tradeoff of error rate and running time, in practice we may choose BCU-2 and BCU-3 with k1 = 50 or 100, and k2 = 5.

Summary and Discussion

In this study, we present an observation regarding the underlying structural property of the large, noisy GWAS genetic data matrices with missing data: these data matrices may contain the sparse information of low-rank and low number of co-clusters. Chi et al.4 have developed Mendel-Impute as a low-rank matrix completion model for GWAS imputation. For the next-generation genotype imputation, we believe that further mathematical or statistics model design and new method development should make use of this sparsity structure.

With this paper, we present SparRec: a new framework for genotype imputation based on our study of the sparse properties of genetic data matrices. The computational models of SparRec are based on low-rank and low number of co-clusters of GWAS data matrices, and the performance is better than or comparable to the current state-of-the-art methods for GWAS imputation. As with other matrix completion based methods, such as Mendel-Impute, SparRec does not require the information of reference panels, and it can naturally handle large and complex missing data imputation tasks, which may contain multiple reference panels and diverse study panels from different platforms with different sets of SNPs. While IMPUTEv2 tried to handle datasets with unphased and incomplete genotypes from different platforms (refer to scenario 1 and scenario 2 of IMPUTEv22), its model may not be able to accommodate scenarios other than the two scenarios considered in ref. 2, and it is not convenient to prepare for the required input file formats for IMPUTEv2. Also note that current imputation methods, such as Beagle, can work without a reference panel; however it does not work with two or more haplotype or genotype reference panels used in the same run. Compared with the state-of-the-art approaches, SparRec is user-friendly and there is no limit on the number of references or study panels for the metadata analysis. To further enhance GWAS capabilities and facilitate downstream analysis, SparRec offers a viable alternative for biomedical researchers to perform various imputation tasks.

From a methodological point of view, current popular statistics imputation methods, such as IMPUTE v117, IMPUTE v2.22, MACH18, fastPHASE6 and Beagle7, are based on using “local” structure information of the reference panels, and the genotype imputation is modeled as a “mosaic” of patterns in relation to the haplotype reference panels. For this reason, current statistics methods usually handle only one study dataset at a time with only one or two reference panels1,3. In contrast, Mendel-Impute4 and our newly proposed LRMC-s method use information based on low-rank structure of the data. From that point of view, Mendel-Impute and our method could naturally utilize all available information of the reference panels and the study panels to impute the missing data entries. Moreover, our MCCF method takes advantage of the low co-cluster number property, which is also the structural information of the dataset. Interestingly, even with missing data rates as high as 90%, our methods could still reliably achieve high level of imputation accuracy because the data matrix does possess a clear sparse/low-rank structure.

Additional Information

How to cite this article: Jiang, B. et al. SparRec: An effective matrix completion framework of missing data imputation for GWAS. Sci. Rep. 6, 35534; doi: 10.1038/srep35534 (2016).

Change history

21 November 2016

A correction has been published and is appended to both the HTML and PDF versions of this paper. The error has been fixed in the paper.

References

Marchini, J. & Howie, B. Genotype imputation for genome-wide association studies. Nature Reviews Genetics 11, 499–511 (2010).

Howie, B., Donnelly, P. & Marchini J. A Flexible and Accurate Genotype Imputation Method for the Next Generation of Genome-Wide Association Studies. Plos Genetics 5, e1000529 (2009).

Browning, S. R. Missing data imputation and haplotype phase inference for genome-wide association studies. Hum Genet. 124, 439–450, doi: 10.1007/s00439-008-0568-7 (2008).

Chi, E. C., Zhou, H., Chen, G. K., Ortega Del Vecchyo, D. & Lange, K. Genotype imputation via matrix completion. Genome Research 23, 509–518 (2013).

Ma, S. et al. SPARCoC: a new framework for molecular pattern discovery and cancer gene identification, PLoS One 10, e0117135, doi: 10.1371/journal.pone.0117135 (2015).

Scheet, P. & Stephens, M. A fast and flexible statistical model for large-scale population genotype data: applications to inferring missing genotypes and haplotypic phase. The American Journal of Human Genetics 78, 629–644 (2006).

Browning, S. R. & Browning, B. L. Rapid and Accurate Haplotype Phasing and Missing-Data Inference for Whole-Genome Association Studies by Use of Localized Haplotype Clustering. The American Journal of Human Genetics 81, 1084–1097 (2007).

Recht, B., Fazel, M. & Parrilo, P. A. Guaranteed Minimum Rank Solutions to Linear Matrix Equations via Nuclear Norm Minimization. SIAM Review 52, 471–501 (2010).

Candès, E. J. & Recht, B. Exact Matrix Completion via Convex Optimization. Foundations of Computational Mathematics 9, 717–772 (2009).

Candès, E. J. & Tao, T. The power of convex relaxation: Near-optimal matrix completion. IEEE Trans. Inform. Theory 56, 2053–2080 (2010).

Ma, S., Goldfarb, D. & Chen, L. Fixed Point and Bregman Iterative Methods for Matrix Rank Minimization. Mathematical Programming Series A 128, 321–353 (2011).

Cai, J., Candès, E. J. & Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optimization 20, 1956–1982 (2010).

Toh, K. C. & Yun, S. W. An accelerated proximal gradient algorithm for nuclear norm regularized least squares problems. Pacific J. Optimization 6, 615–640 (2010).

Chen, B., He, S., Li, Z. & Zhang, S. Maximum block improvement and polynomial optimization. SIAM J. Optimization 27, 87–107 (2012).

Zhang, S., Wang, K., Chen, B. & Huang, X. A new framework for co-clustering of gene expression data. Lecture Notes in Bioinformatics 7036, 1–12 (2011).

Xu, Y. Alternating proximal gradient method for sparse nonnegative Tucker decomposition. Mathematical Programming Computation 7, 39–70 (2015).

Marchini, J. A new multipoint method for genome-wide association studies via imputation of genotypes. Nature Genetics 39, 906–913 (2007).

Li, Y. MaCH: using sequence and genotype data to estimate haplotypes and unobserved genotypes. Genet Epidemiol 34, 816–834 (2010).

Acknowledgements

The authors would like to thank the anonymous reviewers for useful comments. B.J. was partially supported by the National Natural Science Foundation of China with grant number 11401364. S.M. was partially supported by the Hong Kong Research Grants Council General Research Fund with grant number 14205314. S.Z. was partially supported by National Science Foundation with grant number 1462408. X.H. was partially supported by National Science Foundation with grant number 1553680 and 1452211. This work was also partially supported by the National Institute of Health grants from the National Center for Research Resources (P20RR016460) and the National Institute of General Medical Sciences (P20GM103429).

Author information

Authors and Affiliations

Contributions

X.H. and S.Z. conceived and designed the experiments. B.J., S.M., J.C. and M.P.H. performed the experiments. X.H., S.Z., B.J., S.M., J.C. and M.P.H. analyzed the data. B.J., S.M., M.P.H., L.Q., J.C., I.B. and D.J. worked on numerical testing in this work. X.H., S.Z., B.J. and S.M. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Jiang, B., Ma, S., Causey, J. et al. SparRec: An effective matrix completion framework of missing data imputation for GWAS. Sci Rep 6, 35534 (2016). https://doi.org/10.1038/srep35534

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep35534

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.

and (A − X). In each block, for example, (A − X), we use alternating block minimization scheme to minimize f with respect to A and X alternatingly, until the function value ceases to change. After having attempted all four block variables, we update the block variable with maximum improvement.

and (A − X). In each block, for example, (A − X), we use alternating block minimization scheme to minimize f with respect to A and X alternatingly, until the function value ceases to change. After having attempted all four block variables, we update the block variable with maximum improvement.

, vk+1 ← w1

, vk+1 ← w1 , vk+1 ← w2

, vk+1 ← w2