Abstract

Assessment of hypnotic susceptibility is usually obtained through the application of psychological instruments. A satisfactory classification obtained through quantitative measures is still missing, although it would be very useful for both diagnostic and clinical purposes. Aiming at investigating the relationship between cortical brain activity and the hypnotic susceptibility level, we propose the combined use of two methodologies - Recurrence Quantification Analysis and Detrended Fluctuation Analysis - both inherited from nonlinear dynamics. Indicators obtained through the application of these techniques to EEG signals of individuals in their ordinary state of consciousness allowed us to obtain a clear discrimination between subjects with high and low susceptibility to hypnosis. Finally, a neural network approach was used to perform classification analysis.

Introduction

Classifying individuals according to their level of susceptibility to hypnosis has become necessary within the perspective of experimental hypnosis in order to predict individual responses to suggestions. The differences in susceptibility are particularly important because they influence individuals' everyday life in their ordinary state of consciousness. In fact, out of hypnosis, individuals can also differ in various aspects of sensorimotor integration1,2,3,4 and cardiovascular control5.

Several scales6 can be used to characterize subjects as high (highs), medium (mediums) and low (lows) hypnotizables. Nonetheless, administering these scales requires time and experience; moreover, most hypnotizability scales measure suggestibility after hypnotic induction rather than hypnotizability proper, i.e. suggestibility in the absence of hypnotic induction7. Suggestibility while awake may change after hypnotic induction8, and its assessment is important because hypnotizability predicts an individual's response to suggestions out of hypnosis and makes it easier, for instance, to manage suggestions such as analgesia and anaesthesia in clinical contexts.

The few attempts made to extract information on susceptibility levels from electroencephalograms (EEGs) were performed after hypnotic induction. Many techniques inherited from nonlinear time series analysis and nonlinear dynamics have been applied to EEG signals9,10, showing the existence of a significant relationship between hypnotizability and a few indices extracted from the EEG. At present and to the best of our knowledge, only one study shows that it is possible to classify highs and lows using EEGs recorded in the ordinary state of consciousness during relaxation11. The difference between highs and lows, however, was present only in the earliest minutes of relaxation, in line with earlier reports indicating that highs and lows process the relaxation request through different cognitive strategies leading to the same perceived relaxation12. In Madeo et al.11 the EEG dynamics of individuals with different levels of hypnotizability were characterized through two quantitative indicators called Determinism and Entropy - related to the determinism and the complexity of the EEG signal, respectively - in the framework of nonlinear time series analysis based on recurrences13. Indeed, Determinism and Entropy were recognized as the most appropriate parameters for discriminating highs from lows on the basis of a minimal set of quantitative indicators11.

Our present objective is more refined: we aim to develop an optimal classification tool for clinical purposes that is as simple and user-friendly as possible and that avoids a large number of indicators, which would increase the complexity of the classification algorithm. As a consequence, we propose the combined use of Recurrence Quantification Analysis (RQA) and Detrended Fluctuation Analysis (DFA). Specifically, in order to obtain a more detailed and broad-spectrum investigation of the EEG recordings, we integrate determinism with the fluctuation exponent as a complementary indicator related to the stochastic part of the signal.

RQA is a nonlinear technique that can be traced back to the work by Poincaré14 and Ruelle and Takens15,16. It quantifies the small-scale structures of recurrence plots, which represent the number and duration of recurrences of a dynamical system in a reconstructed phase space. The main advantage of this kind of analysis is that it provides useful information even for nonstationary data, where other methods fail. DFA is a method designed to investigate long-range correlations in nonstationary series17,18,19 through the estimate of a scaling exponent, obtained from the slope of the so-called fluctuation function F(s) as a function of lag s in a log-log plot. The value of this exponent can discriminate between (short or long-range) correlated and uncorrelated time series. The observed signals characterizing a complex system often exhibit long-range correlations, and quantifying them is crucial for a deep understanding of the dynamics of the underlying system. The DFA technique has been extensively used on EEG databases to investigate the relationship between hypnotizability and brain activity20, but it has typically been applied to hypnotized subjects only and never to datasets obtained from basal EEG out of hypnosis.

Like many other physiological phenomena, the hypnotizability of a subject emerges from a complex mechanism involving many cerebral and cognitive activities, such as memory retrieval, self-reflection, mental imagery and stream-of-consciousness processing21. As a consequence, the dynamics of the underlying system must be explored at many different time scales. This goal can be achieved through the integration of the proposed techniques, strengthening the results provided by the separate application of the two methodologies. On the one hand, RQA is a powerful discriminatory tool which can provide information regarding the degree of determinism characterizing the system, as well as the degree of complexity or randomness of the signals. On the other hand, DFA is used to quantify the fractal-like scaling properties of the same signals. Once combined with the other indicator estimated in the framework of RQA, the power-law exponent of DFA can be used to classify hypnotic susceptibility, providing a quantitative discrimination between highs and lows.

The aim of this study is to show that the use of RQA coupled with scaling analysis can discriminate highs from lows on the basis of EEG recordings obtained from non-hypnotized participants during 15 minutes of relaxation11. In order to achieve a systematic integration of the two methodologies, several neural networks were developed based on the time-varying RQA and DFA measures evaluated on a dataset including the EEG recordings of 8 highs and 8 lows. On the basis of previous findings11 four channels - out of 32 - were used in the neural network. The neural network approach, unlike typical classification or clustering techniques such as linear separation, allowed us to take into account the intrinsic nonlinearities present in the signals.

Results

This section reports the preliminary steps performed for data acquisition and the main results obtained through the combined use of RQA, DFA and Neural Networks.

Experimental Setup and Data Preprocessing

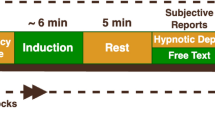

The experiments were performed on 16 healthy males (age: 19–30 yrs), 8 highs (score > 8/12) and 8 lows (score < 3/12), selected according to the Stanford Hypnotic Susceptibility Scale22,23. Subjects were asked to relax as best they could, remain silent and avoid movements for a time period of 15 minutes. The EEG signals were acquired through a Neuroscan device (40 channels) with a sampling frequency of 1 kHz during the whole experimental session. After acquisition, the data were preprocessed in order to remove artifacts and measurement errors. Among the 40 recorded channels, CZ, TP7, P3 and PO1 carried the best information to discriminate between highs and lows on the basis of nonlinear Recurrence Quantification Analysis11. It is important to emphasize that the selected channels are located either on the left hemisphere or along the midline of the scalp. The midline sites in which we have observed hypnotizability-related differences are closely linked to the default mode circuit, which includes the posterior cingulate cortex and precuneus, medial prefrontal and pregenual cingulate cortices, temporo-parietal regions and medial temporal lobes (for a review see Hoeft et al.24). This circuit is implicated in episodic memory retrieval, self-reflection, mental imagery and stream-of-consciousness processing, which are cognitive activities potentially associated with long-lasting relaxation. The crucial role of these brain regions justified the selection of the four channels CZ, TP7, P3 and PO1 used in our analysis. The particular choice of electrodes was therefore motivated by a compromise between the significance of the results and the simplicity of the overall procedure.

Regarding signal acquisition and preprocessing, we emphasize that standard electroencephalographic recordings were performed according to the International 10–20 System by Ag/AgCl electrodes embedded in an elastic cap. The signal was sampled at 1 kHz. A band-pass digital filter (0.5–100 Hz) was applied to the EEG signals to remove DC bias and non-significant high-frequency contributions; a notch filter was used to reduce the 50 Hz power-line noise. The FASTER algorithm25 was used to remove the ECG and EOG contaminations. It is based on the well-known Independent Component Analysis (ICA) and removes undesired components by comparing the autocorrelation of the ECG and EOG signals to the corresponding independent ones.
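The filtering stage described above can be sketched as follows. This is only an illustrative sketch using SciPy: the text specifies the pass band (0.5–100 Hz), the 50 Hz notch and the 1 kHz sampling rate, whereas the filter family (Butterworth), the order, the notch quality factor and the zero-phase filtering are assumptions made here. The function name `preprocess` is hypothetical, and the ICA-based FASTER step is not reproduced.

```python
import numpy as np
from scipy import signal

FS = 1000.0  # sampling frequency in Hz (from the text)


def preprocess(eeg, fs=FS, band=(0.5, 100.0), notch_hz=50.0, order=4, q=30.0):
    """Zero-phase band-pass plus notch filtering of a single EEG channel.

    `order` and `q` are illustrative assumptions, not values from the paper.
    """
    # Band-pass: removes the DC bias and high-frequency content.
    sos = signal.butter(order, band, btype="bandpass", fs=fs, output="sos")
    filtered = signal.sosfiltfilt(sos, eeg)
    # Notch: attenuates the power-line component.
    b, a = signal.iirnotch(notch_hz, q, fs=fs)
    return signal.filtfilt(b, a, filtered)


# Synthetic check: DC offset + 10 Hz "signal" + 50 Hz "line noise".
t = np.arange(10_000) / FS
raw = 2.0 + np.sin(2 * np.pi * 10.0 * t) + 0.5 * np.sin(2 * np.pi * 50.0 * t)
clean = preprocess(raw)
```

On the synthetic example the offset and the 50 Hz component are strongly attenuated while the 10 Hz component is essentially preserved.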

The EEG time series were obtained by applying a basic filtering procedure to the raw signal. We kept the manipulation of the data minimal for two reasons: first, to avoid nonlinear operations, such as those used to obtain absolute values and/or amplitudes, which could produce artificial time series; second, as we are predominantly interested in pre-clinical studies, our main task was to produce less elaborate and highly accessible procedures. Indeed, by applying the RQA and DFA techniques in combination to our filtered time series, we obtained a useful biomarker to classify and distinguish lows from highs, which was the principal aim of our work.

Combining recurrence and fluctuation analysis

In order to obtain a powerful discriminating procedure, we applied the DFA and RQA methods to EEG recordings relative to channels CZ, TP7, P3 and PO1. The analysis was performed by using non-overlapping time windows of 10 seconds, which implies having analyzed subsets of 10000 points of the main signal. For each channel and each individual we obtained a time series of fluctuation exponents α with a sampling rate of 10 seconds. We then set up a combined procedure to discriminate between EEG signals of highs and lows based on fluctuation exponent α and determinism D. In this way we obtained a dataset for each individual consisting of values of two measures calculated every 10 seconds for each of the four aforementioned channels.
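As a minimal sketch of the windowing step, the following splits one channel into non-overlapping 10-second windows of 10000 samples each at 1 kHz. The function name `split_into_windows` and the placeholder recording are hypothetical; dropping incomplete trailing samples is an assumption.

```python
import numpy as np

FS = 1000      # sampling frequency in Hz (from the text)
WINDOW_S = 10  # window length in seconds (from the text)


def split_into_windows(x, fs=FS, window_s=WINDOW_S):
    """Reshape a 1-D signal into non-overlapping windows (one per row)."""
    n = fs * window_s              # samples per window: 10000
    n_windows = len(x) // n        # incomplete trailing samples are dropped
    return np.reshape(x[: n_windows * n], (n_windows, n))


# Placeholder for one 15-minute channel; real EEG samples would go here.
recording = np.zeros(15 * 60 * FS)
windows = split_into_windows(recording)
# Each row of `windows` would then yield one value of alpha and one of D.
```

A 15-minute recording gives 90 windows per channel, so each indicator becomes a 90-point time series.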

Fig. 1 and Fig. 2 report the resulting dataset for D and α, respectively, for each of the channels CZ, TP7, P3 and PO1. Each graph has been obtained by evaluating the average value of the D and α indicators for highs and lows. In order to obtain a clearer discrimination of the two classes we also considered a smoothed (moving average) version of the measures. On the left-hand sides of the same Figures we also included some examples of Recurrence Plots and log-log plots for the estimate of the indicators D and α. Moreover, Table 1 shows the mean and standard deviation of both indicators evaluated on channel CZ.
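The moving-average smoothing mentioned above might look like the sketch below. The length of the averaging window is not stated in the text, so the value of `k` and the sample values are purely illustrative assumptions, as is the function name `moving_average`.

```python
import numpy as np


def moving_average(values, k=5):
    """Simple moving average; `k` is an assumed window length."""
    kernel = np.ones(k) / k
    # mode="valid" keeps only positions where the window fits entirely.
    return np.convolve(values, kernel, mode="valid")


# Hypothetical series of fluctuation exponents, one per 10-second window.
alpha_series = np.array([1.20, 1.25, 1.15, 1.30, 1.10, 1.20, 1.25])
smoothed = moving_average(alpha_series, k=3)
```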

The overall range of values of α runs from 1.1 to 1.3, proving nontrivial autocorrelation properties in EEG signals. In fact, the range of values of the scaling exponent is crucial to the attempt to characterize EEG signals through their correlation properties. It is expected that these values depend on the state of participants, whether they are resting or involved in some cognitive tasks. Further, the same values depend on the kind of statistical analysis that is performed on the data. Our values are partly in agreement with other approaches. See Watters26, Linkenkaer-Hansen et al.27 and Buiatti et al.28 for similar results.

All values of D are high, showing significant levels of determinism in EEG signals. In general, D ranges from 0 to 1 and accounts for the distribution of the diagonal lines composed by recurrent points in the RP (see Figure 1) with respect to the total number of recurrent points. Noisy signals have D close to 0, while periodic signals have D close to 1. Intermediate values may indicate time series produced by more complex systems, such as chaotic ones.

Once the estimates for α exponents are added to the information extracted from measures of the deterministic part of the same signals we obtain complementary descriptions of the dataset concerning both the deterministic and the stochastic components. The couples of values (D, α) taken together provide a discriminating tool to distinguish different levels of hypnotic susceptibility.

Neural networks

The above results show strong cross-evidence of a discrimination between highs and lows in the first seven minutes of analysis. In order to confirm this discrimination we employed Neural Networks, a very commonly used method for classification problems29. In this paper, the Neural Networks have been used to classify highs and lows on the basis of nonlinear measures, namely the fluctuation exponent and the determinism described above. For this purpose a two-layer feed-forward network, with sigmoidal hidden and output neurons, was trained with the Levenberg-Marquardt algorithm. We created a dataset of 576 vectors, each consisting of 8 features (4 values of α and 4 values of D) relating to one individual, low or high, examined in a 10-second window during an overall 6-minute time span, excluding the first transient minute. Thus the overall number of vectors is obtained from 36 temporal records times 16 subjects. We used 70% of the whole dataset for training the network, 15% for validation and 15% for testing. We trained each network 1000 times, on both smoothed and non-smoothed data, for a number of neurons equal to 5, 10, 15 and 20, respectively. All the networks have been trained on the dataset related to each 10-second window and then, having fixed the number of neurons and the type of dataset (smoothed or non-smoothed), we computed the average performance of the network as a function of time.
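The dataset dimensions and the 70/15/15 partition can be checked with a short sketch. The feature values below are random placeholders rather than measured data, and the ordering of subjects (highs first) and the random per-vector assignment to the three subsets are assumptions; the text does not say how the partition was drawn.

```python
import numpy as np

N_SUBJECTS = 16                  # 8 highs + 8 lows (from the text)
N_CHANNELS = 4                   # CZ, TP7, P3, PO1
WINDOW_S = 10
SPAN_S = 6 * 60                  # 6-minute span after the first minute
N_WINDOWS = SPAN_S // WINDOW_S   # 36 temporal records per subject

rng = np.random.default_rng(0)
# Placeholder indicators with shape [subject, window, channel].
alpha = rng.uniform(1.1, 1.3, size=(N_SUBJECTS, N_WINDOWS, N_CHANNELS))
det = rng.uniform(0.0, 1.0, size=(N_SUBJECTS, N_WINDOWS, N_CHANNELS))

# One 8-feature vector (4 alpha + 4 D) per subject and window.
features = np.concatenate([alpha, det], axis=2).reshape(-1, 2 * N_CHANNELS)
# Assumed ordering: the first 8 subjects are highs (label 1).
labels = np.repeat((np.arange(N_SUBJECTS) < 8).astype(int), N_WINDOWS)

# Random 70/15/15 split of the vectors (assumed splitting scheme).
order = rng.permutation(len(features))
n_train = int(0.70 * len(features))
n_val = int(0.15 * len(features))
train_idx, val_idx, test_idx = np.split(order, [n_train, n_train + n_val])
```

With 576 vectors this gives 403 training, 86 validation and 87 test vectors.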

The results obtained through these neural networks training are shown in Fig. 3, including both smoothed and non-smoothed signals. The time range used for the setup of the training dataset and consequently the time interval shown in the graph, has been limited to a window of six minutes starting from the end of the first minute until the end of the seventh minute of our analysis.

In Fig. 4 the performances of the neural networks are highlighted through the evaluation of the error distribution. As one can see, the neural network with 10 neurons is the best choice: its errors are significantly lower than those obtained by the neural network with 5 neurons. Furthermore, in both cases, the networks evaluated on smoothed data show better results.

In order to additionally validate the outcomes of our experiment we included in Fig. 3 the results obtained from the neural network procedure applied to each indicator separately. It is clear that the combined use of the two indicators demonstrated much better performance compared to the neural network procedure based on each indicator separately.

Reliability and significance of results

Additional information on the method's performance can be extracted from Fig. 3, reporting the time course of the average errors for each group of neural networks. We find that for all groups the errors in the first minutes of the recordings are higher. Furthermore, initially the errors decrease and reach a flat region. This region was observed for all threshold settings and can be identified in the time interval ranging from second 170 to second 250 (see the green strip of Fig. 5). After this the errors increase again until the final part of the experiment (see also Fig. 3).

Table 2 provides additional details of the above analysis, where the performances of the groups only focusing on the flat region (170–250 seconds) are calculated. Specifically, the threshold in the first column and the number of selected networks falling below each threshold in the second column are reported. The third and fourth columns indicate the averages and the standard deviations of classification errors on the whole dataset and on the time interval 170–250 seconds, respectively.

These results indicate that the identified neural networks are robust with respect to the percentage errors. Indeed, the number of neural networks belonging to each group increases as the threshold is raised, and the same happens for the averages and standard deviations. Moreover, the number of networks in each group increases nonlinearly with the threshold: 25% of the networks show an error lower than 2.5% (first row of Table 2), while 90% of the networks have an error lower than 20% (second-to-last row of Table 2). A sort of saturation in the number of networks is also evident for thresholds between 10% and 20%. Note also that performances improve significantly if we consider only the data in the time window 170–250 seconds.

Discussion

In this paper the problem of assessing hypnotic susceptibility has been approached using nonlinear dynamics through the combined use of Recurrence Quantification Analysis and Detrended Fluctuation Analysis techniques, supplemented by a neural network classification. By using the time course of two significant indicators, cross-evidence for hypnotic susceptibility was obtained starting from the basal EEG monitored on subjects in their ordinary state of consciousness.

The main outcome of this paper is twofold: on one hand we obtained a clear indication on the EEG minimum recording time required to classify subjects as highs or lows in the absence of hypnotic induction; on the other hand, the neural network procedure turned out to provide successful and robust classification of subjects, letting us take into account the intrinsic nonlinearities present in the signals. These results can be used by operators to design suitable experimental sessions related to hypnotic assessment and implement user-friendly devices, allowing quick hypnotic assessment in both experimental and clinical contexts.

Thus, the combined use of the two techniques, RQA and DFA, inherited from nonlinear dynamics is a successful discriminating procedure and a hypnotic susceptibility classification tool, providing reliable predictions of hypnotizability levels. The strength of our approach relies on the use of two complementary features - one related to the deterministic part and the other to the stochastic part of the signals - which makes it possible to capture two different aspects of the underlying complex nonlinear dynamics. To the best of our knowledge, the integration of the two methods, although employed in different fields30,31, has not been sufficiently used in EEG analysis. Some recent papers that combine different classification methods based on different biomarkers measured from EEG signals can be found in the literature on early diagnosis of Alzheimer's disease (AD). In particular, Lehmann et al.32 used linear and non-linear classification algorithms - but not DFA - to discriminate between the electroencephalograms of patients with varying degrees of AD and those of age-matched control subjects. Moreover, Poil et al.33 combined DFA with other biomarkers in order to obtain a global diagnostic classification index for predicting the transition from normal aging to Alzheimer's disease. Both approaches are crucial for effective application of early diagnostics and subsequent treatment strategies, but in neither case did the authors combine RQA and DFA as indicators, nor was their aim to classify hypnotic susceptibility. In this respect our paper is quite innovative in the framework of hypnotic classification.

With respect to the previous work carried out by some of the authors11, whose target was to perform a statistical analysis in order to obtain an initial understanding of the possible discrimination between highs and lows, the present paper is not a mere validation of previous results. In that paper, discriminant analysis was applied after Bonferroni-corrected MANOVA, showing significant differences between the determinism of highs and lows for the channels analysed in the present study. Here, by contrast, our main objective was to develop a clustering tool based on extended nonlinear indicators and on neural network models, which is reliable and robust enough to be used for clinical purposes. “Clustering tool” means that classification is automatic and does not require any additional analysis. Once the tool is ready to use, it becomes more and more reliable each time the EEG of a new subject is recorded and included in the system, owing to the growth of the training and validation data sets of the neural network.

Methods

In this section the nonlinear methods of Detrended Fluctuation Analysis, Recurrence Quantification Analysis and Neural Networks are presented.

Detrended Fluctuation Analysis

Detrended Fluctuation Analysis (DFA) can be traced back to the work by Hurst34, the so-called Rescaled Range (R/S) Analysis, introduced with the aim of quantifying fluctuations in time series, in order to distinguish random from fractal time series and to recognize the existence of long-range correlations.

Roughly speaking, the R/S analysis gives a measure of displacement that the system undergoes - on average and rescaled by the local standard deviation - over the considered time. In particular, the procedure gives an estimate of the exponent $H$ in the scaling law for the mean quadratic displacement $\langle (\Delta x)^2 \rangle \sim (\Delta t)^{2H}$, by splitting a time series into adjacent non-overlapping windows and evaluating the range $R$ of the standardized cumulative series rescaled by the standard deviation $S$. This scaling law is a generalization of the characteristic behavior in a Brownian motion, corresponding to $H = 0.5$, and it is related to fractional Brownian motion and anomalous diffusion.

The original procedure by Hurst has been widely improved and generalized. Its most used extension is DFA, originally introduced to address the existence of power laws in DNA sequences35. Through the specification of a proper detrending operation, DFA has proved to be well suited to obtaining a correct estimate of power-law scaling and specifically adapted to the analysis of nonstationary time series17,36.

To illustrate the DFA method, we consider a noisy time series $\{x(i)\}$, $i = 1, \ldots, N$. We integrate the original time series and obtain the profile of cumulative sums $\{y(j)\}$:

$$y(j) = \sum_{i=1}^{j} \left[ x(i) - \langle x \rangle \right], \qquad j = 1, \ldots, N,$$

where $\langle x \rangle$ denotes the mean of the series.

We divide the profile into $N_s = \lfloor N/s \rfloor$ boxes of equal size $s$. Since the length of the series is generally not an integer multiple of the time scale $s$, a small portion of data at the end of the profile cannot be included in any interval. In order not to neglect these data we repeat the entire procedure starting from the end of the series, obtaining $2N_s$ segments. Then, in each box we fit the integrated time series by using a discretized polynomial function $y_{\mathrm{fit}}$, the local trend. We calculate the local trend for each interval of width $s$ via a linear fit of the data. Indicating the fit in the $\nu$-th box with $y_{\mathrm{fit}}^{\nu}(i)$, we define the detrended profile as follows:

$$y_s(i) = y(i) - y_{\mathrm{fit}}^{\nu}(i).$$

For each of the $2N_s$ intervals we calculate the mean square deviation from the local trend

$$F_s^2(\nu) = \frac{1}{s} \sum_{i=1}^{s} y_s^2\left[ (\nu - 1)s + i \right]$$

for $\nu = 1, \ldots, N_s$, with the analogous expression for the segments counted from the end of the series.

Finally, we calculate the mean on all segments to obtain the fluctuation function

$$F(s) = \left[ \frac{1}{2N_s} \sum_{\nu=1}^{2N_s} F_s^2(\nu) \right]^{1/2}.$$

The above computation is repeated for each box size (different scales) to provide a relationship between $F(s)$ and $s$. The existence of a power law $F(s) \propto s^{\alpha}$ can be checked through the measure of the parameter $\alpha$, called the scaling exponent, or correlation exponent - directly related to the Hurst exponent $H$ if the time series is stationary. It gives a measure of the correlation properties of the signal: if $\alpha = 0.5$ the signal is uncorrelated (white noise); if $\alpha < 0.5$, the signal is anticorrelated; if $\alpha > 0.5$, there are positive correlations in the signal34,36,37.
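The DFA procedure described in this section can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `dfa_fluctuation` and `dfa_exponent`, the choice of scales and the synthetic test signals are all hypothetical, and the mean of the series is subtracted before integration, as is customary.

```python
import numpy as np


def dfa_fluctuation(x, scales, order=1):
    """Fluctuation function F(s) for each box size s (linear detrending)."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())   # integrated series y(j)
    n = len(profile)
    fluct = []
    for s in scales:
        n_s = n // s
        # Boxes counted from the start and from the end: 2 * N_s segments.
        starts = list(range(0, n_s * s, s)) + list(range(n - n_s * s, n, s))
        idx = np.arange(s)
        variances = []
        for start in starts:
            segment = profile[start:start + s]
            trend = np.polyval(np.polyfit(idx, segment, order), idx)
            variances.append(np.mean((segment - trend) ** 2))
        fluct.append(np.sqrt(np.mean(variances)))
    return np.array(fluct)


def dfa_exponent(x, scales):
    """Scaling exponent: slope of log F(s) versus log s."""
    f = dfa_fluctuation(x, scales)
    slope, _intercept = np.polyfit(np.log(scales), np.log(f), 1)
    return slope


# Synthetic check on one 10000-sample window (the window length used above).
rng = np.random.default_rng(42)
scales = [16, 32, 64, 128, 256, 512]    # assumed box sizes
white = rng.standard_normal(10_000)
alpha_white = dfa_exponent(white, scales)             # expected near 0.5
alpha_walk = dfa_exponent(np.cumsum(white), scales)   # expected near 1.5
```

White noise should give an exponent close to 0.5 and its running sum (a random walk) a value close to 1.5, which brackets the range 1.1 to 1.3 reported for the EEG windows.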

Recurrence Quantification Analysis

In this section we provide basic notions on recurrence methods for nonlinear time series data (for a review the reader is referred to Marwan et al.13).

Starting from a time series $[s_1, \ldots, s_N]$, where $s_i = s(i\Delta t)$ and $\Delta t$ is the sampling time, the system dynamics can be reconstructed using Takens' embedding theorem16. The reconstructed trajectory $x$ is expressed as a matrix in which each row is a phase space vector

$$x_i = \left[ s_i, s_{i+\tau}, \ldots, s_{i+(D_E-1)\tau} \right],$$

where $i = 1, \ldots, N - (D_E - 1)\tau$. The matrix is characterized by two key parameters: the embedding dimension $D_E$ and the delay time $\tau$. $D_E$ is the minimum dimension at which the reconstructed attractor can be considered completely unfolded and there is no overlapping of the reconstructed trajectories. The delay time $\tau$ represents a measure of the correlation existing between two consecutive components of the $D_E$-dimensional vectors used in trajectory reconstruction38.
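The time-delay embedding can be illustrated with a short sketch. The function name `delay_embed` and the toy series are hypothetical; the values of the embedding dimension and delay below are chosen only to keep the example readable.

```python
import numpy as np


def delay_embed(s, dim, tau):
    """Time-delay embedding: row i is [s_i, s_{i+tau}, ..., s_{i+(dim-1)tau}]."""
    s = np.asarray(s, dtype=float)
    n_vectors = len(s) - (dim - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for this dimension and delay")
    # Column k holds the series shifted by k * tau samples.
    return np.column_stack(
        [s[k * tau: k * tau + n_vectors] for k in range(dim)]
    )


series = np.arange(10.0)                 # toy series 0, 1, ..., 9
trajectory = delay_embed(series, dim=3, tau=2)
# First row: [0., 2., 4.]; last row: [5., 7., 9.]; 10 - (3 - 1) * 2 = 6 rows.
```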

The Recurrence Plot (RP) is defined on the embedded trajectory $x$ as follows:

$$R_{i,j} = \Theta\left( \varepsilon - \lVert x_i - x_j \rVert \right),$$

where $i$ and $j$ are the time instant labels of the associated phase-space vectors, $\Theta$ is the Heaviside step function, $\varepsilon$ is a distance threshold and $\lVert \cdot \rVert$ is a norm in the phase space13. The RP is a two-dimensional object accounting for recurrences between the state vectors at different times. The recurrences are represented by black dots in the RP. Since any point is recurrent with itself, the RP always includes the diagonal line $R_{i,j} = 1$, $\forall\, i = j$, called the Line of Identity (LOI). Thus the RP provides information on the dynamical properties of the reconstructed state space, with emphasis on the presence of periodicities of any length.

Recurrence Quantification Analysis (RQA) provides a set of measures that quantify the characteristics of RPs, which are otherwise difficult to read by visual inspection alone. In particular, RQA offers a set of indicators computed on the structures of the RP, based on the length l of the diagonal lines parallel to the LOI and their distribution P(l). This distribution provides valuable information regarding the structure of the RP and the unknown dynamics of the system under investigation13. Among the available indicators, Determinism D evaluates the proportion of recurrent points forming diagonal line structures in the RP and is related to the predictability of the time series39.

In the present paper the RP for each electrode and each subject has been constructed and the recurrence indicator Determinism has been quantified. In particular, the embedded state space has been reconstructed by using space dimension $D_E$ = 3 and time lag τ = 120040 and $D$ evaluated from its definition:

$$D = \frac{\sum_{l=l_{\min}}^{N} l\,P(l)}{\sum_{l=1}^{N} l\,P(l)}.$$

$D$ accounts for the fraction of recurrent points forming diagonal structures with a minimum length $l_{\min}$ with respect to all recurrences.
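A compact sketch of how a recurrence matrix and the determinism indicator can be computed is given below. It is illustrative only: the Euclidean norm, the threshold value, the minimum line length of 2 and the exclusion of the Line of Identity from the count are assumptions, as are the function names and the synthetic signals.

```python
import numpy as np


def recurrence_matrix(traj, eps):
    """R[i, j] = 1 when the distance between states i and j is at most eps."""
    diff = traj[:, None, :] - traj[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))   # Euclidean norm (assumed)
    return (dist <= eps).astype(int)


def diagonal_line_histogram(rp):
    """hist[l] = number of diagonal lines of length l, LOI excluded (assumed)."""
    n = rp.shape[0]
    hist = np.zeros(n + 1, dtype=int)
    for k in range(-(n - 1), n):
        if k == 0:
            continue                          # skip the Line of Identity
        run = 0
        for value in np.diagonal(rp, offset=k):
            if value:
                run += 1
            else:
                if run:
                    hist[run] += 1
                run = 0
        if run:
            hist[run] += 1
    return hist


def determinism(rp, l_min=2):
    """Fraction of recurrent points lying on diagonals of length >= l_min."""
    hist = diagonal_line_histogram(rp)
    lengths = np.arange(len(hist))
    total = (lengths * hist).sum()
    if total == 0:
        return 0.0
    return float((lengths[l_min:] * hist[l_min:]).sum() / total)


def embed3(s, tau=5):
    """Three-dimensional delay embedding used only for this example."""
    n = len(s) - 2 * tau
    return np.column_stack([s[:n], s[tau:tau + n], s[2 * tau:2 * tau + n]])


rng = np.random.default_rng(1)
sine = np.sin(2 * np.pi * np.arange(300) / 50)   # periodic signal
noise = rng.standard_normal(300)                 # uncorrelated signal
d_sine = determinism(recurrence_matrix(embed3(sine), eps=0.2))
d_noise = determinism(recurrence_matrix(embed3(noise), eps=0.2))
```

The periodic signal yields a determinism close to 1, while the uncorrelated noise yields a much lower value, consistent with the interpretation of D given in the Results.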

In order to provide a quantitative description of dynamical behavior of spatio-temporal systems starting from observed time series, such as the EEGs analyzed in this paper, RQA measures have been recently extended (see, for example Marwan et al.41, Facchini et al.42 and Mocenni et al.43).

Neural Network Classification

Neural networks are computational models inspired by the nervous system of animals that are used in artificial intelligence applications, such as machine learning and pattern recognition. They are very commonly used for solving classification problems.

Artificial neural networks provide procedures for nonlinear statistical modeling that emulate biological neural systems. They offer constructive alternatives to linear or logistic regression, the most commonly used methods for developing predictive models for binary outcomes in diagnostic procedures. In this respect, neural networks can perform tasks that a linear model cannot, because they are able to capture the nonlinear features of the underlying system. They are simple and natural to set up, not very sensitive to noise and do not require complicated statistical training. Several training algorithms available in the literature can be used for their implementation in any kind of application. Their main disadvantage is their intrinsic “black box” nature, together with their basically empirical character, with the consequence that it is almost impossible to avoid the “human component” in classification results. Moreover, they do not always guarantee convergence towards an optimal solution. In our case the network was small and easy to construct. It worked quickly and well, although we recognize that the whole procedure could be developed further. Finally, we emphasize that the neural networks were only exploited in order to validate the hypothesis of a distinction between highs and lows based on results obtained through completely different methodologies.

Neural computing has recently emerged as a practical and successful technology, especially in problems of pattern recognition44. In this case it makes use of feed-forward network architectures such as multi-layer perceptron and the radial basis function network. Pattern recognition encompasses a wide range of information processing problems of great practical significance, in particular for medical diagnosis. In this respect neural networks are an extension of conventional techniques in statistical pattern recognition and this field offers many powerful results.

Basically, simple artificial nodes (“neurons”) are connected together to form a network which mimics a biological neural network. Each neuron is associated with a set of adaptive weights, numerical parameters tuned by a learning algorithm during the training phase, which represent the strength of the connections between neurons. At the end of the training the whole network is capable of approximating non-linear functions of its inputs29.

Ethics statement

The work developed in this paper satisfies the ethics statement required by the journal. The experimental session was approved by the Ethics Committee of the University of Pisa where the EEG recordings were collected. Participants were selected from the students attending Physiology classes with informed written consent.

References

Carli, G., Manzoni, D. & Santarcangelo, E. L. Hypnotizability-related integration of perception and action. Cogn. Neuropsychol. 25, 1065–1076 (2008).

Santarcangelo, E. L. et al. Hypnotizability-dependent modulation of postural control: effects of alteration of the visual and leg proprioceptive inputs. Exp. Brain Res. 191, 331–340 (2008).

Menzocchi, M., Santarcangelo, E. L., Carli, G. & Berthoz, A. Hypnotizability-dependent accuracy in the reproduction of haptically explored paths. Exp. Brain Res. 216, 217–223 (2012).

Castellani, E., Carli, G. & Santarcangelo, E. L. Visual identification of haptically explored objects in high and low hypnotizable subjects. Int. J. Clin. Exp. Hypn. 59, 250–265 (2011).

Santarcangelo, E. L. et al. Hypnotisability modulates the cardiovascular correlates of subjective relaxation. Int. J. Clin. Exp. Hypn. 60, 383–96 (2012).

Council, J. R. A historical overview of hypnotizability assessment. Am. J. Clin. Hypn. 44, 199–208 (2002).

Kirsch, I. Suggestibility or hypnosis: what do our scales really measure? Int. J. Clin. Exp. Hypn. 45, 212–225 (1997).

Milling, L. S., Coursen, E. L., Shores, J. S. & Waszkiewicz, J. A. The predictive utility of hypnotizability: the change in suggestibility produced by hypnosis. J. Consult. Clin. Psychol. 78, 126–130 (2010).

Baghdadi, G. & Nasrabadi, A. M. Effect of hypnosis and hypnotizability on temporal correlations of EEG signals in different frequency bands. Eur. J. Clin. Hypn. 9, 67–74 (2009).

Baghdadi, G. & Nasrabadi, A. M. Comparison of different EEG features in estimation of hypnosis susceptibility level. Comput. Biol. Med. 42, 590–597 (2012).

Madeo, D., Castellani, E., Santarcangelo, E. L. & Mocenni, C. Hypnotic assessment based on the Recurrence Quantification Analysis of EEG recorded in the ordinary state of consciousness. Brain Cogn. 83, 227–233 (2013).

Sebastiani, L. et al. Relaxation as a cognitive task. Arch. Ital. Biol. 143, 1–12 (2005).

Marwan, N., Romano, M. C., Thiel, M. & Kurths, J. Recurrence plots for the analysis of complex systems. Phys. Rep. 438, 237–329 (2007).

Poincaré, H. Sur le problème des trois corps et les équations de la dynamique. Acta Math. 13, A3–A270 (1890).

Eckmann, J. P., Oliffson Kamphorst, S. & Ruelle, D. Recurrence Plots of Dynamical Systems. Europhys. Lett. 4, 973–977 (1987).

Takens, F. Detecting strange attractors in turbulence, in “Dynamical systems and turbulence”. Lect. Notes Math. 898, 366–381 (1981).

Kantelhardt, J. W. et al. Detecting long-range correlations with detrended fluctuation analysis. Phys. A 295, 441–454 (2001).

Kantelhardt, J. W. et al. Multifractal Detrended Fluctuation Analysis of Nonstationary Time Series. Phys. A 316, 87–114 (2002).

Chen, Z., Ivanov, P. C., Hu, K. & Stanley, H. E. Effect of nonstationarities on detrended fluctuation analysis. Phys. Rev. E 65, 041107 (2002).

Lee, J. S. et al. Fractal analysis of EEG in hypnosis and its relationship with hypnotizability. Int. J. Clin. Exp. Hypn. 55, 14–31 (2007).

Chialvo, D. R. Emergent complex neural dynamics. Nat. Phys. 6, 744–750 (2010).

Weitzenhoffer, A. M. & Hilgard, E. R. Stanford Hypnotic Susceptibility Scale, Form C, Consulting Psychologists Press (1962).

De Pascalis, V., Bellusci, A. & Russo, P. M. Italian norms for the Stanford hypnotic susceptibility scale form C. Int. J. Clin. Exp. Hypn. 48, 315–323 (2000).

Hoeft, F. et al. Functional Brain Basis of Hypnotizability. Arch. Gen. Psychiat. 69, 1064–72 (2012).

Nolan, H., Whelan, R. & Reilly, R. B. FASTER: Fully automated statistical thresholding for EEG artifact rejection. J. Neurosci. Meth. 192, 152–162 (2010).

Watters, P. A. Fractal Structure in the Electroencephalogram. Complex. Intl. 5 (1998).

Linkenkaer-Hansen, K., Nikouline, V. V., Palva, J. M. & Ilmoniemi, R. J. Long-range Temporal Correlations and Scaling Behavior in Human Brain Oscillations. J. Neurosci. 21, 1370–1377 (2001).

Buiatti, M., Papo, D., Baudonnière, P. M. & Van Vreeswijk, C. Feedback modulates the temporal scale-free dynamics of brain electrical activity in a hypothesis testing task. Neurosci. 146, 1400–1412 (2007).

Haykin, S. O. Neural Networks and Learning Machines. Prentice Hall (2008).

Wallot, S., Fusaroli, R., Tylén, K. & Jegindø, E. Using complexity metrics with R-R intervals and BPM heart rate measures. Front. Physiol. 4, 211 (2013).

Little, M. A. et al. Exploiting Nonlinear Recurrence and Fractal Scaling Properties for Voice Disorder Detection. Biomed. Eng. OnLine 6, 23 (2007).

Lehmann, C. et al. Applications and comparison of classification algorithms for recognition of Alzheimer's disease in electrical brain activity (EEG). J. Neurosci. Meth. 161, 342–350 (2007).

Poil, S. S. et al. Integrative EEG biomarkers predict progression to Alzheimer's disease at the MCI stage. Front. Aging Neurosci. 5, 1–12 (2013).

Hurst, H. E. Long-term storage capacity of reservoirs. Trans. Amer. Soc. Civil Eng. 116, 770–808 (1951).

Peng, C. K. et al. Mosaic organization of DNA nucleotides. Phys. Rev. E 49, 1685–1689 (1994).

Hu, K. et al. Effect of trends on detrended fluctuation analysis. Phys. Rev. E 64, 011114 (2001).

Mandelbrot, B. B. The fractal geometry of nature. W. H. Freeman and Co., New York (1983).

Abarbanel, H. Analysis of Observed Chaotic Data. Springer-Verlag (1996).

Webber, C. L., Jr & Zbilut, J. P. Recurrence quantification analysis of nonlinear dynamical systems. In: Riley, M. A. & Van Orden, G. (Eds), Tutorials in contemporary nonlinear methods for the behavioral sciences. 226–94. (2005).

Becker, K. et al. Anesthesia monitoring by recurrence quantification analysis of EEG data. PLoS ONE 5, e8876 (2010).

Marwan, N., Kurths, J. & Saparin, P. Generalised recurrence plot analysis for spatial data. Phys. Lett. A 360, 545–551 (2007).

Facchini, A. & Mocenni, C. Recurrence Methods for the Identification of Morphogenetic Patterns. PLoS ONE 8, e73686 (2013).

Mocenni, C., Facchini, A. & Vicino, A. Identifying the dynamics of complex spatio-temporal systems by spatial recurrence properties. PNAS 107, 8097–8102 (2010).

Bishop, C. M. Neural Networks for Pattern Recognition. Oxford University Press (1995).

Author information

Contributions

R.C., D.M., M.I.L. and C.M. designed the study, evaluated the RQA and DFA indicators on the EEG signals, developed the Neural Networks for classification and wrote the manuscript. E.L.S. and E.C. designed the experiments and recorded the EEG signals of the enrolled subjects. E.L.S. performed the hypnotic assessment and contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder in order to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/

About this article

Cite this article

Chiarucci, R., Madeo, D., Loffredo, M. et al. Cross-evidence for hypnotic susceptibility through nonlinear measures on EEGs of non-hypnotized subjects. Sci Rep 4, 5610 (2014). https://doi.org/10.1038/srep05610

DOI: https://doi.org/10.1038/srep05610
