Abstract

Community structure detection in complex networks is important because it helps us understand both the network topology and how the network works. However, there is still no clear, widely accepted definition of community structure, and in practice different models may yield very different communities, which makes the results hard to interpret. In this paper, departing from traditional methodologies, we design an enhanced semi-supervised learning framework for community detection that effectively incorporates the available prior information to guide the detection process and makes the results more explainable. A logical inference step allows the prior information to be utilized more fully. Experiments on both synthetic and real-world networks confirm the effectiveness of the framework.


Introduction

Community structure detection in complex networks is of critical importance for understanding not only the network topology, but also how the network works1,2,3. In many real applications, the revealed communities often correspond to functional modules of the network, such as pathways in metabolic networks or groups of people with common interests. These functional modules can be regarded as the building blocks of networks. Furthermore, dynamics on networks with communities can be very different from those on networks without communities.

However, owing to the complexity of real problems, it is very hard to give a general, widely accepted definition of community structure. Most detected communities are model-based, which makes the results hard to explain: without background information about the functions of the nodes, the correctness and meaning of the communities cannot be confirmed. Hence, if background information can be effectively incorporated to guide community detection, we can obtain much better results. In our previous work, we proposed a semi-supervised learning framework that incorporates two types of background information into community detection: must-link and cannot-link constraints4. An interesting question is how to make better use of such prior knowledge, that is, how to do more with less.

In this paper, building on our previous work4 and on logical inference, we propose an enhanced semi-supervised learning framework for community structure detection that incorporates the available prior information more effectively. The main contribution of the framework is a logical inference step that more fully utilizes the two types of prior information, must-link and cannot-link constraints, and combines them more effectively with the network topology to guide the detection process. The experimental results show that the proposed method significantly improves detection performance. We also evaluate which type of constraint is more useful and find that must-link constraints contribute much more than cannot-link constraints.

Results

In this section, we empirically tested the effectiveness of the enhanced semi-supervised learning framework for community detection. To do this, we applied NMF, spectral clustering and InfoMap with the revised adjacency matrices to several well-studied networks.

Data description

Both the synthetic and the real-world networks were used in our experiments. Details are as follows:

1. GN1: The GN network, also known as the “four groups” network, has 128 nodes divided into four equally sized, non-overlapping communities of 32 nodes each. Each node has on average Zin + Zout = 16 neighbors: it randomly connects with Zin nodes in its own community and Zout nodes in other communities. As Zout increases, the community structure becomes less and less clear and the detection problem more challenging. In our experiments, we set Zout to 10 and Zin to 6.

2. LFR5: LFR networks are more realistic than GN networks. In LFR networks, both the degree and the community size distributions follow power laws, with exponents γ and β, respectively. Each node shares a fraction 1 − μ of its neighbors with its own community and a fraction μ with other communities; μ is called the mixing parameter.

   We set the parameters of the LFR networks as follows: 1000 nodes, average degree 20, maximum degree 50, degree-distribution exponent γ = 2, community-size-distribution exponent β = 1, and mixing parameter μ = 0.9. The communities do not overlap.

3. Political books (unpublished; http://www.orgnet.com/cases.html): This dataset contains 105 books on US politics connected by 441 edges. Nodes are books sold by the bookseller Amazon.com, and edges represent co-purchasing of books. Nodes have been manually labeled as “liberal”, “neutral”, or “conservative”.

4. Football team network1: This dataset is the network of American football games (not soccer) between 115 college teams during the regular season of Fall 2000. Edges connect teams that played each other.
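As a rough illustration of the GN benchmark in item 1, the network can be sampled as a planted-partition graph. This is an illustrative sketch, not necessarily the generator used in the experiments: it assumes each same-community pair is linked with probability Zin/31 and each cross-community pair with probability Zout/96, so the expected degree is Zin + Zout = 16. The function name `gn_benchmark` is hypothetical.

```python
import numpy as np

def gn_benchmark(z_in, z_out, n_groups=4, group_size=32, seed=None):
    """Hypothetical planted-partition sketch of the GN 'four groups' benchmark.

    Same-group pairs are linked with p_in = z_in / (group_size - 1) and
    cross-group pairs with p_out = z_out / (n - group_size), so the expected
    degree of every node is z_in + z_out.
    """
    rng = np.random.default_rng(seed)
    n = n_groups * group_size
    labels = np.repeat(np.arange(n_groups), group_size)
    p_in = z_in / (group_size - 1)
    p_out = z_out / (n - group_size)
    same = labels[:, None] == labels[None, :]
    prob = np.where(same, p_in, p_out)
    # Sample the strict upper triangle only, then mirror it (undirected, no self-loops).
    upper = np.triu(rng.random((n, n)) < prob, k=1)
    A = (upper | upper.T).astype(float)
    return A, labels

A, labels = gn_benchmark(z_in=6, z_out=10, seed=0)
print(A.shape, A.sum(axis=1).mean())  # mean degree should be close to 16
```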

Assessment standards

In this paper, we used the normalized mutual information (NMI)6 to assess the effectiveness of our approach. It is defined as follows:

$$\mathrm{NMI}(M_1,M_2)=\frac{-2\sum_{i=1}^{k}\sum_{j=1}^{k} n_{ij}\log\left(\frac{n_{ij}\,n}{n_i^{(1)}\,n_j^{(2)}}\right)}{\sum_{i=1}^{k} n_i^{(1)}\log\left(\frac{n_i^{(1)}}{n}\right)+\sum_{j=1}^{k} n_j^{(2)}\log\left(\frac{n_j^{(2)}}{n}\right)},$$

where M1 is the ground-truth community label and M2 is the computed community label, k is the number of communities, n is the number of nodes, $n_{ij}$ is the number of nodes in ground-truth community i that are assigned to computed community j, $n_i^{(1)}$ is the number of nodes in ground-truth community i, $n_j^{(2)}$ is the number of nodes in computed community j, and log is the natural logarithm.
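A minimal NumPy sketch of NMI in the form −2 Σ_ij n_ij log(n_ij n / (n_i^(1) n_j^(2))) divided by Σ_i n_i^(1) log(n_i^(1)/n) + Σ_j n_j^(2) log(n_j^(2)/n), with the natural logarithm. The function name `nmi` is hypothetical. The two label vectors may use arbitrary label values and different numbers of communities. If both partitions consist of a single community, the denominator is zero and the measure is undefined.

```python
import numpy as np

def nmi(true_labels, pred_labels):
    """Hypothetical helper: NMI between ground-truth and computed labels."""
    true_labels = np.asarray(true_labels)
    pred_labels = np.asarray(pred_labels)
    n = true_labels.size
    # Map arbitrary label values to 0..k-1.
    _, t = np.unique(true_labels, return_inverse=True)
    _, p = np.unique(pred_labels, return_inverse=True)
    # Confusion matrix: N[i, j] = n_ij.
    N = np.zeros((t.max() + 1, p.max() + 1))
    np.add.at(N, (t, p), 1)
    n_i = N.sum(axis=1)  # sizes of ground-truth communities, n_i^(1)
    n_j = N.sum(axis=0)  # sizes of computed communities, n_j^(2)
    nz = N > 0           # terms with n_ij = 0 contribute nothing
    num = -2.0 * np.sum(N[nz] * np.log(N[nz] * n / np.outer(n_i, n_j)[nz]))
    den = np.sum(n_i * np.log(n_i / n)) + np.sum(n_j * np.log(n_j / n))
    return num / den

print(nmi([0, 0, 1, 1], [1, 1, 0, 0]))  # identical partitions up to relabeling
```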

In general, the higher the NMI, the better the result.

Results analysis

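We then compared the NMI obtained by the models with and without prior information on the synthetic and real-world networks. For an undirected network with n nodes, there are n(n − 1)/2 node pairs in total. We randomly selected a percentage of these pairs and, using the ground-truth labels, determined whether each selected pair was ML (same community) or CL (different communities). We then incorporated these pairwise constraints into the adjacency matrix A to obtain B[1] and B[2]. To evaluate which type of constraint is more useful, we further used the following three matrices, in each of which only one type of constraint was incorporated:

$$B^{[1]}_{\mathrm{ML},ij}=\begin{cases}\alpha, & (i,j)\in\mathrm{ML},\\ A_{ij}, & \text{otherwise},\end{cases}\qquad B^{[1]}_{\mathrm{CL},ij}=\begin{cases}0, & (i,j)\in\mathrm{CL},\\ A_{ij}, & \text{otherwise},\end{cases}$$

and, based on B[1]_ML,

$$B^{[2]}_{\mathrm{ML},ij}=\begin{cases}\alpha, & (i,j)\ \text{is ML, given or inferred by logical inference},\\ A_{ij}, & \text{otherwise}.\end{cases}$$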

Note that if only CL constraints are considered, the information cannot be enhanced (every inference rule requires at least one ML pair), so B[2]_CL would be identical to B[1]_CL and is not defined separately. The results on the synthetic networks are averages over ten trials and are summarized in Figs. 1 and 2. From these figures, one can observe that: (1) the average NMI of semi-supervised learning, with and without the information-enhanced step, increases as the percentage of constrained ML and CL pairs increases; (2) the information-enhanced step significantly improves detection performance. For example, with 5 percent of pairs constrained on the GN networks, the NMI of non-enhanced semi-supervised NMF (NMI = 55.78%) is more than forty percentage points higher than that of unsupervised NMF (NMI = 10.51%), and the NMI of enhanced NMF (NMI = 87.35%) is higher by more than another thirty percentage points; (3) on the GN networks spectral clustering performs slightly better, whereas on the LFR networks InfoMap performs better; (4) ML constraints contribute much more than CL constraints. Note that on the LFR benchmarks, NMF performs slightly better on B[2]_ML than on B[2]: with a high percentage of pairs constrained, many elements of B[2] are set to zero by CL constraints and some columns retain only a few nonzero elements, which degrades clustering performance.

In addition, Table 1 lists the percentage of nonzero elements in matrix B[2] for the LFR networks. The matrix becomes only slightly denser, which makes it feasible to apply the framework to large-scale networks. In fact, because the prior information may also delete some edges between different communities, the matrix does not necessarily become denser. The key idea of the method is to modify the network's adjacency matrix (and hence its topology) using prior information so that the community structure becomes clearer. However, the performance may not be as good as on an LFR network with the equivalent mixing parameter, because this modification is not “uniform”: only the connections of the selected node pairs are changed. As a result, the topological characteristics of the modified network differ from those of computer-generated networks, even when both have the same pin and pout, where pin is the probability that two nodes in the same community are connected and pout is the corresponding probability for two nodes in different communities. Fig. 3 shows the relations among the percentage of constrained pairs, the mixing parameter of the LFR benchmarks, and pin and pout.
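The quantities in this paragraph (the fraction of nonzero entries reported in Table 1, and the empirical pin and pout) can be estimated from a matrix and a label vector. A minimal sketch, assuming that any nonzero entry (1 or α) counts as a link; the function name `density_and_mixing` is hypothetical.

```python
import numpy as np

def density_and_mixing(B, labels):
    """Hypothetical helper: nonzero fraction of B plus empirical p_in and p_out.

    Any nonzero entry of B (an original edge or an ML weight alpha) is
    treated as a link.
    """
    B = np.asarray(B)
    labels = np.asarray(labels)
    n = B.shape[0]
    nonzero_fraction = np.count_nonzero(B) / B.size
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(n, dtype=bool)
    linked = B != 0
    # p_in: fraction of distinct same-community pairs that are linked.
    p_in = linked[same & off_diag].mean()
    # p_out: fraction of cross-community pairs that are linked.
    p_out = linked[~same].mean()
    return float(nonzero_fraction), float(p_in), float(p_out)

B = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]])
print(density_and_mixing(B, [0, 0, 1, 1]))
```

Because B is symmetric, counting ordered pairs gives the same fractions as counting unordered pairs.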

The results on the political books network are shown in Fig. 4: the trends are similar to those on the synthetic networks, and the information-enhanced step significantly improves performance.

A case study: college football network

In this subsection, we analyzed the football team network as a case study to show the effectiveness of our semi-supervised learning framework.

In the football network, there are 115 nodes (teams) belonging to 12 different conferences. Most teams played more often against teams in their own conference. However, some abnormal teams played more frequently against teams in other conferences, including teams 37, 43, 81, 83, 91 (conference IA Independents), 12, 25, 51, 60, 64, 70, 98 (conference Sunbelt), 111, 29 and 59. For more details, see our previous work4.

Following our previous work4, we set the number of communities to 11. Teams 37, 43, 81, 83 and 91 (conference IA Independents) have no ground-truth conference labels, so there are 110 labeled teams and 110 × (110 − 1)/2 = 5995 team pairs available. First, we randomly selected some of these pairs as prior information: if the two teams were in the same conference, the pair was must-link (ML); otherwise, it was cannot-link (CL). We then applied the information-enhanced step. Finally, we applied NMF to the revised adjacency matrices to obtain the partitions.
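The constraint sampling described here can be sketched as follows, assuming pairs are drawn uniformly without replacement from all pairs of labeled nodes; the function name `sample_constraints` is hypothetical. With 110 labeled teams, 5 percent of the 5995 pairs gives round(299.75) = 300 constrained pairs and 20 percent gives 1199, the pre-enhancement counts reported in the case study below.

```python
import itertools
import random

def sample_constraints(labels, fraction, seed=None):
    """Hypothetical helper: turn a random subset of labeled pairs into ML/CL constraints.

    labels maps each labeled node to its ground-truth community; unlabeled
    nodes (such as the five IA Independents teams) are simply omitted.
    """
    rng = random.Random(seed)
    pairs = list(itertools.combinations(sorted(labels), 2))  # n(n-1)/2 pairs
    chosen = rng.sample(pairs, round(fraction * len(pairs)))
    ml = {(i, j) for i, j in chosen if labels[i] == labels[j]}  # same community
    cl = {(i, j) for i, j in chosen if labels[i] != labels[j]}  # different communities
    return ml, cl

labels = {team: team % 11 for team in range(110)}  # toy labels, not the real conferences
ml, cl = sample_constraints(labels, 0.05, seed=1)
print(len(ml) + len(cl))  # 300
```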

Fig. 5 shows the NMF partitions for different percentages of constrained pairs (with and without the information-enhanced step), from which one can observe that:

(i) When no prior information is given, five abnormal teams are mis-clustered: teams 29, 60, 64, 98 and 111.

(ii) When 5 percent of pairs are constrained, there are 300 ML and CL pairs without the information-enhanced step (5 percent of the available pairs), increasing to 1130 after the step (18.85 percent). Three abnormal teams are mis-clustered: teams 29, 64 and 111. Interestingly, team 64 is mis-clustered into different conferences by non-enhanced and enhanced learning (conference 10 and conference 5, respectively). The reason is as follows. In this experiment, before the information-enhanced step there are 7 CL pairs and no ML pairs involving team 64; two of them involve conference 10 and none involves conference 5. After the step, there are 18 CL pairs and still no ML pairs involving team 64; seven of them involve conference 10, and again none involves conference 5. Guided by the enhanced prior information, team 64 is therefore reassigned to conference 5. Because the constrained pairs are selected randomly, another run of the experiment may produce different partitions. Table 2 gives more details about the ML and CL pairs involving team 64.

Table 2. Must-link and cannot-link pairs of teams involving team 64 and the teams in conferences 10 and 5. Boxed nodes are those included in ML or CL pairs.

(iii) When 20 percent of pairs are constrained, there are 1199 ML and CL pairs before enhancement (20 percent) and 5651 after (94.26 percent). Under non-enhanced learning, only team 111 is mis-clustered, into conference 12. After enhancement, all labeled teams are correctly clustered: all teams of conference 12 are in the CL set of team 111, which greatly helps the detection process. Table 3 gives more details about the ML and CL pairs involving team 111. Team 64 is also clustered correctly: before the information-enhanced step, there are 30 CL pairs and 3 ML pairs involving team 64; after enhancement, there are 102 CL pairs (covering almost all labeled teams outside conference 11) and 6 ML pairs (covering all the other labeled teams in conference 11).

Table 3. Must-link and cannot-link pairs of teams involving team 111 and the teams in conferences 5 and 12. Boxed nodes are those included in ML or CL pairs.

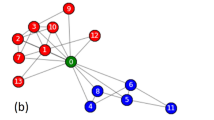

Comparison of the semi-supervised learning results of NMF with and without the information-enhanced step corresponding to different percentage of pairs constrained (color online).

(a): Real grouping in football dataset. There are 12 conferences of 8–12 teams (nodes) each. Teams in conference 6 are not labeled. (b): Result of NMF without any prior information. (c): Result of NMF given 5 percent of pairs constrained (without the information-enhanced step). (d): Result of NMF given 5 percent of pairs constrained (with). (e): Result of NMF given 20 percent of pairs constrained (without). (f): Result of NMF given 20 percent of pairs constrained (with) and all the labeled teams are corrected clustered.

In summary, our semi-supervised learning framework does make better use of the prior information and can significantly improve the model performance.

Discussion

In this paper, we propose an enhanced semi-supervised learning framework for community structure detection. The framework incorporates must-link (ML) and cannot-link (CL) pairwise constraints into the adjacency matrix. Through information enhancement based on logical inference, detection performance can be improved significantly. Note that this step is valid only for non-overlapping communities. Otherwise, for example if node i belongs to multiple communities, knowing that nodes i and t are ML and nodes i and k are CL does not necessarily imply that nodes t and k are CL.

In addition, we evaluated the contributions of the two types of constraints and found that ML constraints are much more important than CL constraints. This is encouraging, because in many real scenarios ML (positive) constraints are easier to obtain7,8.

An interesting problem related to our work is the analysis of dynamic networks, such as detecting communities in a series of time-varying networks. Given the network structure at time t, we can identify some conserved relationships between nodes and use them as ML and CL constraints to detect the communities in the network at time t + 1, an approach we term online semi-supervised learning.

Methods

Enhanced semi-supervised learning for community structure detection

In this section, we give our enhanced learning framework for community structure detection. Firstly, given an undirected and unweighted simple graph G, we can define the associated symmetric adjacency matrix A as follows:

$$A_{ij}=\begin{cases}1, & i\sim j,\\ 0, & i\nsim j,\end{cases}$$

where $i\sim j$ means there is an edge between nodes i and j, and $i\nsim j$ means there is no edge between them.

In many real applications, some prior information is often available, and we can incorporate it into the community detection process to make the result clearer and more explainable. Specifically, if we know that some pairs of nodes are must-link (ML: the two nodes are in the same community), or that some pairs are cannot-link (CL: the two nodes are in different communities), or both, we can incorporate these pairwise constraints into the adjacency matrix A to obtain a new matrix B[1] as follows4:

$$B^{[1]}_{ij}=\begin{cases}\alpha, & (i,j)\in\mathrm{ML},\\ 0, & (i,j)\in\mathrm{CL},\\ A_{ij}, & \text{otherwise}.\end{cases}$$
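A sketch of the B[1] construction, assuming ML entries are set to α, CL entries are set to 0, and all other entries are copied from A. The function name `incorporate_constraints` is hypothetical. If a pair appears in both sets, the CL assignment wins in this sketch because it is applied last.

```python
import numpy as np

def incorporate_constraints(A, ml, cl, alpha=2.0):
    """Hypothetical helper: build B[1] from adjacency matrix A and ML/CL pairs.

    Assumed rule: B_ij = alpha for ML pairs, 0 for CL pairs, A_ij otherwise.
    """
    B = np.array(A, dtype=float)  # work on a copy so A is left untouched
    for i, j in ml:
        B[i, j] = B[j, i] = alpha  # set both entries to keep B symmetric
    for i, j in cl:
        B[i, j] = B[j, i] = 0.0
    return B

A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])  # path 0 - 1 - 2
B = incorporate_constraints(A, ml={(0, 2)}, cl={(0, 1)})
print(B)
```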

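In addition, logical inference yields further constraints: i) if nodes i and t are ML and nodes i and k are ML, then t and k should also be ML (the friend of my friend is my friend); ii) if nodes i and t are ML and nodes i and k are CL, then t and k should also be CL (the friend of my enemy is my enemy). This leads to the following revision of B[1]:

$$B^{[2]}_{ij}=\begin{cases}\alpha, & (i,j)\in\mathrm{ML}^{*},\\ 0, & (i,j)\in\mathrm{CL}^{*},\\ A_{ij}, & \text{otherwise},\end{cases}$$

where $\mathrm{ML}^{*}$ and $\mathrm{CL}^{*}$ denote the ML and CL sets enlarged by applying rules i) and ii).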

In this paper, we call this logical inference step the information-enhanced step; after it, the prior knowledge is utilized more fully. Following our previous work4, we set α to 2.

Note that the idea behind the information-enhanced step can be traced back to balance theory in psychology9,10, which was proposed to explain attitude change.

Illustrative examples

We use a small example to illustrate the ideas behind our framework. Figure 6 (A) shows part of a network in which nodes 1, 2, 3, 4, 5 and 6 are in the same community and node 7 is in another community. Based on domain knowledge, we may know that nodes 3 and 5 and nodes 4 and 5 are must-link, and that nodes 3 and 7 are cannot-link. By logical inference, nodes 3 and 4 should also be must-link (rule i, via node 5); consequently, nodes 4 and 7 should be cannot-link (rule ii, via node 3); and finally, nodes 5 and 7 should also be cannot-link (rule ii, via node 3). The background information is thus significantly enhanced.
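One way to apply rules i) and ii) until nothing new can be inferred is to group nodes into connected components of the must-link graph (rule i makes ML transitive) and then expand each CL pair to all cross pairs of the two components involved (rule ii). The sketch below does this with a small union-find. It is an illustration of the inference, not necessarily the authors' implementation, and `enhance_constraints` is a hypothetical name. Running it on the example above reproduces the inferred pairs.

```python
from itertools import combinations

def enhance_constraints(ml, cl):
    """Hypothetical helper: close ML/CL pair sets under rules i) and ii).

    Returns (ml_plus, cl_plus) as sets of sorted node pairs.
    """
    nodes = {x for pair in list(ml) + list(cl) for x in pair}
    parent = {x: x for x in nodes}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    # Rule i): merge nodes joined by ML pairs into must-link groups.
    for i, j in ml:
        parent[find(i)] = find(j)

    groups = {}
    for x in nodes:
        groups.setdefault(find(x), set()).add(x)

    # Every pair inside a must-link group is ML.
    ml_plus = {tuple(sorted(p)) for g in groups.values() for p in combinations(g, 2)}

    # Rule ii): a CL pair between two groups makes every cross pair CL.
    cl_plus = set()
    for i, k in cl:
        gi, gk = find(i), find(k)
        if gi == gk:
            raise ValueError(f"inconsistent constraints: {i} and {k} are both ML and CL")
        for u in groups[gi]:
            for v in groups[gk]:
                cl_plus.add(tuple(sorted((u, v))))
    return ml_plus, cl_plus

# The example of Fig. 6: 3-5 and 4-5 are ML, 3-7 is CL.
ml_plus, cl_plus = enhance_constraints({(3, 5), (4, 5)}, {(3, 7)})
print(sorted(ml_plus), sorted(cl_plus))
```

B[2] then follows by applying the same substitution rule used for B[1] to the enlarged sets.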

In addition, we illustrate the effectiveness of the approach on a GN network: given an undirected, unweighted “four equally sized groups” network with 128 nodes, we want to detect its communities. The heatmap of the associated adjacency matrix A is shown in the upper-left panel of Fig. 7. Suppose that we have some prior information about the functions of the nodes and can thus label a percentage of node pairs as must-link (ML) or cannot-link (CL). This ML and CL information is incorporated into the adjacency matrix A. As expected, the communities become clearer as the percentage of constrained pairs increases. Furthermore, a surprising observation is how effective the logical inference is: it dramatically improves the data quality (second row of Fig. 7).

After incorporating the background information into the adjacency matrix, we can then apply some unsupervised learning models, such as nonnegative matrix factorization (NMF), spectral clustering and InfoMap, on them for community structure detection.

Nonnegative matrix factorization (NMF)

The NMF model is often formulated as the following nonlinear program11,12:

$$\min_{W\ge 0,\;H\ge 0}\ \|X-WH\|_F^2,$$

that is, given a nonnegative matrix X of size n × n, we seek two nonnegative matrices, W of size n × k and H of size k × n, such that X ≈ WH. The target matrix X for NMF can be A, B[1] or B[2]. The communities of the network can be read from H: node i belongs to community k if Hki is the largest element in the ith column. The multiplicative update algorithm for NMF is summarized in Algorithm 1.

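Algorithm 1 Nonnegative Matrix Factorization (Least Squares Error)

Input: X, k, iter % In this paper, the iteration number iter is set to 100.

Output: W, H.

Initialize W and H with random nonnegative entries.

For t = 1: iter

$H \leftarrow H \odot (W^{\top}X) \oslash (W^{\top}WH)$

$W \leftarrow W \odot (XH^{\top}) \oslash (WHH^{\top})$

end

Here $\odot$ and $\oslash$ denote elementwise multiplication and division.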
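A NumPy sketch of least-squares NMF with the Lee–Seung multiplicative updates, followed by the argmax-over-columns community assignment described above. The small constant `eps` that guards against division by zero, the uniform random initialization, and the function names are assumptions of this sketch.

```python
import numpy as np

def nmf(X, k, iters=100, eps=1e-10, seed=0):
    """Hypothetical helper: least-squares NMF, X ~ W H, via multiplicative updates."""
    X = np.asarray(X, dtype=float)
    n, m = X.shape
    rng = np.random.default_rng(seed)
    W = rng.random((n, k))  # random nonnegative initialization
    H = rng.random((k, m))
    for _ in range(iters):
        # Multiplicative updates never change sign, so W and H stay nonnegative.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

def nmf_communities(X, k, **kwargs):
    """Assign node i to the community k whose entry H[k, i] is largest in column i."""
    _, H = nmf(X, k, **kwargs)
    return H.argmax(axis=0)

X = np.kron(np.eye(2), np.ones((5, 5)))  # two disconnected 5-node blocks
print(nmf_communities(X, 2, iters=500))
```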

Spectral clustering

Spectral clustering is another powerful tool for unsupervised learning. The standard algorithm of Ng et al.13 is summarized in Algorithm 2.

Algorithm 2 Spectral Clustering

Input: B (A, B[1] or B[2]), k.

Output: Community labels $Y = (Y_1, \ldots, Y_n)$ of the n nodes.

$L = D^{-1/2} B D^{-1/2}$, where D is the diagonal matrix with $D_{ii} = \sum_j B_{ij}$.

Form the matrix $X = [x_1, x_2, \ldots, x_k] \in \mathbb{R}^{n \times k}$, where $x_1, \ldots, x_k$ are the top k eigenvectors of L.

Normalize X so that every row has unit L2 norm: $X_{ij} \leftarrow X_{ij} / \big(\sum_{l} X_{il}^2\big)^{1/2}$.

Cluster the rows of X into k clusters by K-means.

$Y_i = j$ if the ith row is assigned to cluster j.
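A NumPy sketch of Algorithm 2, following Ng et al.'s normalized spectral clustering. To stay dependency-free it uses a plain Lloyd's K-means with farthest-point initialization instead of a library implementation; that choice, the small guards against zero degrees and zero rows, and the function names are assumptions of this sketch.

```python
import numpy as np

def kmeans(Y, k, iters=100, seed=0):
    """Plain Lloyd's K-means with farthest-point initialization."""
    rng = np.random.default_rng(seed)
    centers = [Y[rng.integers(len(Y))]]
    for _ in range(1, k):
        # Next center: the row farthest from all centers chosen so far.
        dist = np.min([((Y - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Y[dist.argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        # Assign each row to its nearest center, then recompute the centers.
        d2 = ((Y[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        new_centers = np.array([
            Y[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

def spectral_clustering(B, k, seed=0):
    """Hypothetical helper: normalized spectral clustering of the n x n matrix B."""
    B = np.asarray(B, dtype=float)
    deg = B.sum(axis=1)
    inv_sqrt = 1.0 / np.sqrt(np.maximum(deg, 1e-12))  # guard isolated nodes
    L = inv_sqrt[:, None] * B * inv_sqrt[None, :]      # D^{-1/2} B D^{-1/2}
    _, vecs = np.linalg.eigh(L)                        # eigenvalues in ascending order
    X = vecs[:, -k:]                                   # top-k eigenvectors as columns
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    X = X / np.maximum(norms, 1e-12)                   # unit-length rows
    return kmeans(X, k, seed=seed)

# Two 5-node cliques joined by a single edge.
A = np.zeros((10, 10))
A[:5, :5] = 1
A[5:, 5:] = 1
np.fill_diagonal(A, 0)
A[4, 5] = A[5, 4] = 1
print(spectral_clustering(A, 2))
```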

InfoMap14

This model is rooted in information theory and detects communities by minimizing the expected description length of a random walk on the network. It is among the most recommended approaches, especially when no prior information about the network is available15.
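InfoMap searches for the partition that minimizes the map equation. The sketch below only evaluates the two-level map equation L(M), in bits, for a given partition of an undirected, unweighted graph without teleportation: visit rates are p_a = d_a/2m, and a module's exit rate is its number of boundary edges divided by 2m. It does not perform InfoMap's search over partitions, and the function names are hypothetical.

```python
import numpy as np

def plogp(x):
    """Elementwise x * log2(x), with 0 * log2(0) taken as 0."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x * np.log2(np.where(x > 0, x, 1.0)), 0.0)

def map_equation(A, labels):
    """Hypothetical helper: two-level map equation L(M) for an undirected graph."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    two_m = A.sum()
    p = A.sum(axis=1) / two_m  # stationary visit rates of the random walker
    modules = np.unique(labels)
    # Exit rate of each module: edges leaving the module, divided by 2m.
    q = np.array([A[np.ix_(labels == c, labels != c)].sum() / two_m for c in modules])
    p_mod = np.array([p[labels == c].sum() for c in modules])
    L = (plogp(q.sum())
         - 2.0 * plogp(q).sum()
         - plogp(p).sum()
         + plogp(q + p_mod).sum())
    return float(L)

# Two 5-node cliques joined by a single edge.
A = np.zeros((10, 10))
A[:5, :5] = 1
A[5:, 5:] = 1
np.fill_diagonal(A, 0)
A[4, 5] = A[5, 4] = 1
two = np.array([0] * 5 + [1] * 5)
one = np.zeros(10, dtype=int)
print(map_equation(A, two), map_equation(A, one))
```

A lower value means the partition compresses the random walk better; here the two-clique partition scores below the single-module partition, whose value reduces to the entropy of the visit rates.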

References

1. Girvan, M. & Newman, M. E. J. Community structure in social and biological networks. PNAS 99, 7821–7826 (2002).

2. Newman, M. E. J. & Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E 69, 026113 (2004).

3. Newman, M. E. J. Communities, modules and large-scale structure in networks. Nature Phys. 8, 25–31 (2011).

4. Zhang, Z.-Y. Community structure detection in complex networks with partial background information. EPL 101, 48005 (2013).

5. Lancichinetti, A., Fortunato, S. & Radicchi, F. Benchmark graphs for testing community detection algorithms. Phys. Rev. E 78, 046110 (2008).

6. Strehl, A. & Ghosh, J. Cluster ensembles—a knowledge reuse framework for combining multiple partitions. JMLR 3, 583–617 (2003).

7. Ben-Hur, A. & Noble, W. S. Choosing negative examples for the prediction of protein-protein interactions. BMC Bioinformatics 7, S2 (2006).

8. Zhao, X.-M., Wang, Y., Chen, L. & Aihara, K. Gene function prediction using labeled and unlabeled data. BMC Bioinformatics 9, 57 (2008).

9. Heider, F. Attitudes and cognitive organization. The Journal of Psychology 21, 107–112 (1946).

10. Heider, F. The psychology of interpersonal relations (John Wiley & Sons, 1958).

11. Lee, D. D. & Seung, H. S. Learning the parts of objects by non-negative matrix factorization. Nature 401, 788–791 (1999).

12. Lee, D. D. & Seung, H. S. Algorithms for non-negative matrix factorization. In: NIPS 556–562 (2001).

13. Ng, A., Jordan, M. & Weiss, Y. On spectral clustering: Analysis and an algorithm. In: NIPS 849–856 (2002).

14. Rosvall, M. & Bergstrom, C. Maps of random walks on complex networks reveal community structure. PNAS 105, 1118–1123 (2008).

15. Lancichinetti, A. & Fortunato, S. Community detection algorithms: a comparative analysis. Phys. Rev. E 80, 056117 (2009).

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant No. 61203295 and Program for Innovation Research in Central University of Finance and Economics. The authors greatly appreciate the reviewer's insightful comments.

Author information


Contributions

Z.-Y.Z. devised the study; Z.-Y.Z., K.-D.S. and S.-Q.W. performed the experiments and analyzed the data; Z.-Y.Z. prepared the figures and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareALike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Zhang, ZY., Sun, KD. & Wang, SQ. Enhanced Community Structure Detection in Complex Networks with Partial Background Information. Sci Rep 3, 3241 (2013). https://doi.org/10.1038/srep03241

