Key Points

- Demonstrates that digital radiology is not without limitations and appropriate caution needs to be exercised when using this technology.

- Image processing artefacts can mimic pathology and this is one of the limitations of digital radiology.

- This paper makes recommendations on work practice to reduce the potential risk of misdiagnosis related to pathology mimicked by artefacts.

Abstract

Background Image processing of digital X-ray images is known to have the potential to produce artefacts that may mimic pathology. A study was conducted at a UK dental radiology conference to demonstrate this effect in dentistry.

Method Sixteen digital X-rays of single teeth containing restorations were randomly presented in both unprocessed and processed formats to an auditorium of 42 participants. Participants interactively scored each image on a scale from 1 to 5, where 1 was definitely no pathology and 5 was definitely pathology. The display conditions were confirmed for each participant using a validated threshold contrast test.

Results The results show that 52% (81/157) of responses at level 1 for the unprocessed images changed to levels 4 or 5 after image processing.

Conclusion This study illustrates the potential for image processing artefacts to mimic pathology, particularly at high contrast boundaries, and introduces the risk of unnecessary interventions. In order to minimise this risk, it is recommended that for digital radiographs containing pathology relating to high contrast boundaries, non-related high contrast features such as unrelated restorations or tooth/bone margins are also considered to exclude the possibility of artefact. If there is doubt, reference should be made to the unprocessed data.

Introduction

Digital imaging is increasingly becoming de rigueur for X-ray imaging in the twenty-first century. The advantages of the rapid acquisition, delivery and display of image data outweigh any potential compromises in image quality.1,2 All digital data are processed at several different levels before display. Image processing may be local to a specific area in the image, for example to remove dead pixels, or global across the whole image to improve visualisation by enhancing or suppressing certain elements in the image. Many of these processes are outside the user's control, although most systems provide post-processing options. The unsharp mask subtraction (UMS) algorithm has been widely adopted for general image processing as it enhances both contrast and sharpness. It is also fast and does not require high-end computational power. Many systems still use this algorithm in varying degrees of sophistication, but as computer power has increased, so too has the complexity of the algorithms. Recent methods favour multi-scalar processing, which operates on multiple derivatives of the original data, each at a different spatial scale.3

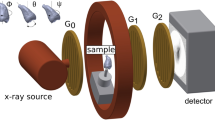

Image processing is intended to improve the image presentation but it is also possible, especially for non-adaptive algorithms, to produce an artefact that has a deleterious impact on the image by mimicking the presence of pathology. A classic example of this is the well-known 'halo' artefact produced by the UMS, also referred to as the 'Uberschwinger' or 'rebound' artefact.4,5 In its simplest form the UMS process subtracts a blurred version of the original image from the original. The amount of blur gives the algorithm frequency selective capabilities. The halo occurs because the blurred image contains edges that are wider than in the original, so that on subtraction a residual inverted contrast boundary is produced. Where high intensity boundaries occur in the images, eg around bone or metal, the impact of any blur is greater, resulting in a more pronounced shadow effect (Fig. 1).
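The origin of the halo can be illustrated numerically. The following is a minimal sketch and not the processing used by any particular clinical system: it assumes a one-dimensional intensity profile across a single high contrast boundary, a simple moving-average blur, and a weighted, rescaled form of the subtraction. The function names (`box_blur`, `unsharp_mask`), the blur width and the weight are illustrative assumptions.

```python
import numpy as np


def box_blur(signal, width):
    """Blur a 1D signal with a moving average of odd width (edges are repeated)."""
    pad = width // 2
    padded = np.pad(signal, pad, mode="edge")
    kernel = np.ones(width) / width
    return np.convolve(padded, kernel, mode="valid")


def unsharp_mask(signal, width, weight):
    """Subtract a weighted blurred copy, then rescale so flat regions are unchanged."""
    blurred = box_blur(signal, width)
    return (signal - weight * blurred) / (1.0 - weight)


# Hypothetical profile: dark tissue (0.2) next to a bright restoration (0.8).
profile = np.concatenate([np.full(20, 0.2), np.full(20, 0.8)])
processed = unsharp_mask(profile, width=9, weight=0.6)

# Away from the boundary the values are unchanged; next to it the dark side
# is pushed darker and the bright side brighter, which is the halo.
print("dark side minimum:", processed[:20].min())
print("bright side maximum:", processed[20:].max())
```

In this sketch the undershoot on the dark side of the boundary is the inverted contrast band described above; a wider blur widens the band and a larger weight deepens it.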

This can mimic boundary changes relating to genuine pathology. In general radiology, reported examples include prosthetic loosening, pneumothorax, pneumomediastinum and diaphragmatic calcification.4,5,6 These effects have also recently been reported in veterinary publications as the uptake of digital imaging in that field increases.7,8 In dentistry a recent case example highlighted the potential for the halo artefact emanating from restoration boundaries to be misdiagnosed as misfit of the luted definitive restoration or as caries.9 Although the UMS artefact is quite gross, it clearly demonstrates the potential for artefacts to mimic genuine pathology. Even relatively benign processing such as edge enhancement, if excessively applied, can mimic pathology such as osteolytic lesions and Paget's disease.10

Image processing artefact is still present in the more sophisticated algorithms but is often more subtle or reduced, which makes it harder for the observer to identify.

A previous publication has proposed a method for introducing a digital frame of reference into an image that readily allows the nature of an artefact to be isolated and provides feedback to the user on whether a perceived pathology may in fact be artefactual.11 This method is not currently suitable for dental radiology because of its physical size.

Radiology in general dental practice is one area where the adoption of digital imaging has lagged behind general radiology due to the costs and the large number of practices involved. However, the affordability and image quality of new digital systems are improving and there is an increasing momentum towards digital. But with the prevalence of restorations, the large number of disparate systems and the large user base, there is an increased opportunity for induced artefacts to be misdiagnosed as genuine pathology, potentially altering treatment decisions.

At a national conference on dental and maxillofacial radiology (BSDMFR meeting, London, September 2008) an interactive demonstration of the potential to mimic recurrent caries or failing crown margins was conducted.

The results of this study are presented in this paper to highlight the potential risks in using image processing. This is particularly relevant in dental radiology where there is a prevalence of high contrast boundaries relating to pathological changes.

Method

Sixteen image segments were taken from digital panoramic images acquired on a Fuji Capsula XC Computed Radiography system (Bedford, UK). Each segment was chosen to have only one focus tooth, and the segments were selected by a consultant radiologist to cover a range of presentations of recurrent caries or crown margin failures. All images were exported with no image processing applied. The images were then processed using an unsharp mask algorithm in ImageJ (NIH, http://rsbweb.nih.gov/ij/) with a filter size of 15 and a weighting of 0.6. These parameters were chosen by the experiment controller such that the end presentation was representative of clinical processing. The total image set of 16 raw and 16 processed images was randomly interleaved in presentation order, and one image in each pair was randomly selected and flipped about the horizontal axis to help prevent recognition when viewed.
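The randomisation described above can be sketched as follows. This is an illustration only: the paper does not state how the random order or the flipped image in each pair was generated, so the function name `build_presentation`, the slide record layout and the use of a seeded generator are assumptions.

```python
import random


def build_presentation(n_pairs, seed=None):
    """Return a shuffled slide list with one randomly flipped image per pair."""
    rng = random.Random(seed)
    slides = []
    for tooth in range(n_pairs):
        # Choose which member of the raw/processed pair is flipped.
        flipped_version = rng.choice(["raw", "processed"])
        for version in ("raw", "processed"):
            slides.append({
                "tooth": tooth,
                "version": version,
                "flipped": version == flipped_version,
            })
    # Interleave raw and processed images in a random presentation order.
    rng.shuffle(slides)
    return slides


slides = build_presentation(16, seed=1)
print(len(slides), "slides; first slide:", slides[0])
```

Fixing the seed makes the presentation order reproducible, which is convenient when the responses later have to be matched back to image pairs.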

The images were imported into a PowerPoint (Microsoft, Reading, UK) presentation which had been integrated with the Turning Point (Reivo Ltd, Berkshire, UK) interactive audience feedback system. This system collects participant responses to the slide displayed using a numeric keypad. Before the study an information sheet was distributed among the audience explaining the nature of the experiment and setting out methods for consenting and withdrawing from the study. The presentation was shown to the audience at a dental radiology conference under lighting conditions that had been established as allowing the users to see the keypad with the minimum ambient light. The first few slides in the presentation reiterated the nature of the experiment and the ability to withdraw at any time. The pathology to look for was also stated as:

'Where there is a coronal restoration (this can be mesial/distal and/or occlusal) is there recurrent caries?'

'Where there is a crown, is there a failing crown margin?'

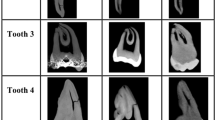

A visual example of the extremes of the range of presentations was also shown (Fig. 2).

The first interactive slide in the presentation asked users to confirm whether or not they consented to take part. The second collected the occupation of the participant, which was the only demographic data collected. All responses were recorded against a keypad, but keypads were not linked to individual users. The subsequent four slides were based on the verified login system12 and presented numbers on backgrounds at 0%, 50% and 100% of the grey level range. All the numbers were 5% above the background and the user had to repeat the number on their keypad. In this way a minimum level of contrast resolution was validated for each participant. The following slide sequence showed the raw and processed images in their random sequence. The participant indicated their confidence of pathology for each image as:

- 1 = Definitely no pathology

- 2 = Probably no pathology

- 3 = Possibly pathology

- 4 = Probably pathology

- 5 = Definitely pathology.

These options were shown on each image slide as an aide-mémoire. The data were exported into Excel (Microsoft, Reading, UK) for analysis.

Results

Of the 53 members of the audience who responded, 49 consented to take part in this study. Of these, seven were withdrawn from the study by the experiment controller: two because they had failed the verified login and five because they had three or more null results in a row and were deemed to have withdrawn as per the study information sheet. This left 42 validated participants.
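The exclusion rules can be expressed compactly. The sketch below is an assumption about how such a check might be written, not the analysis actually performed (the data were analysed in Excel); it represents a null result as `None`, and the function names are hypothetical.

```python
def longest_null_run(responses):
    """Return the length of the longest run of consecutive null (None) responses."""
    longest = current = 0
    for response in responses:
        current = current + 1 if response is None else 0
        longest = max(longest, current)
    return longest


def is_valid_participant(passed_login, responses, max_null_run=2):
    """Keep a participant who passed the verified login and never gave
    three or more null results in a row."""
    return passed_login and longest_null_run(responses) <= max_null_run


# Hypothetical keypad records: scores from 1 to 5, None where no key was pressed.
print(is_valid_participant(True, [1, None, None, 4, 5]))      # two nulls in a row
print(is_valid_participant(True, [1, None, None, None, 5]))   # three nulls in a row
print(is_valid_participant(False, [1, 2, 3, 4, 5]))           # failed verified login
```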

The demographics for the validated participants are shown in Table 1.

Figure 3 shows an unprocessed and processed image pair used in the study. Across all observers, the average score for this pair changed from 1.62 for the unprocessed image to 3.97 for the processed image.

Figure 4 shows all the responses for the unprocessed and processed images. It can be seen that there is a reasonably even spread of occurrences at each confidence level for the unprocessed data but this is skewed to the high scores after processing. Figure 5 shows how the level 1 response on the unprocessed images changed after processing.

Discussion

Psychophysical studies require large numbers of observers; however, for certain groups, for example consultant radiologists, achieving these high numbers is difficult. This study used a novel method for a large scale observer trial. The key elements that facilitated this were a large cohort of relevant health professionals attending a conference, interactive keypads to record responses to test images and the use of the verified login method to validate the minimum contrast sensitivity of each observer in the auditorium. The experimental management was also expedited by the use of the keypads, which allowed demographic and consent information to be rapidly collected. In total the experiment lasted for 20 minutes. Training, both in the use of the keypads and in the diagnostic task, was addressed by a warm-up session at the beginning of the experiment and the collection of the support information. Two participants failed the verified login test. This could have been due to keypad error, misunderstanding of the test or contrast acuity issues, but it was not possible in this study to identify the exact cause. It is important to note that this type of test is designed to test contrast only, not spatial resolution. In the task proposed, spatial resolution was of lesser concern than contrast.

This is thought to be the first time this method of validated participation in large scale observer studies has been employed and adds a new option for studies of this type.

Although the possibility of image artefacts mimicking pathology is well known, especially for the common algorithms, the clinical impact is not well demonstrated. In dental radiographs the halo effect is particularly evident due to the prevalence of high contrast signal boundaries.

Although, anecdotally, frequent users of digital systems do recognise the halo effect and adapt their interpretation accordingly, a misdiagnosis may still arise with new users, locums or remote reporting. Additionally, more complex adaptive algorithms may produce artefacts that are less obvious and therefore harder to recognise.

In this study the intent was to illustrate the potential for the halo effect to be misinterpreted as genuine pathology in the presence of restorations and was conducted as an educational demonstration. However, the novel, and robust, experimental design coupled with the significant results has prompted wider dissemination especially considering the paucity of clinical results in this area.

In the analysis of the study results scores of 1 or 2 were deemed to require no intervention. A score of 3 would require watching and scores of 4 or 5 could solicit intervention. Therefore a significant change was considered to be a score of 1 or 2 changing to a 4 or 5 ie a change from 'do nothing' to 'intervene'. It is emphasised that the X-ray is only one diagnostic tool and clinical presentation/examination has not been considered in this study and may have moderated any intervention.
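The decision rule in this paragraph can be written out explicitly. The sketch below is illustrative: the function names and the action labels are assumptions, and the final line simply reproduces the percentage reported in the abstract from its stated counts (81 of 157).

```python
def action_for(score):
    """Map a confidence score (1 to 5) to the action it was deemed to imply."""
    if score in (1, 2):
        return "do nothing"
    if score == 3:
        return "watch"
    if score in (4, 5):
        return "intervene"
    raise ValueError(f"score must be between 1 and 5, got {score!r}")


def is_significant_change(unprocessed_score, processed_score):
    """True when processing moves a response from 'do nothing' to 'intervene'."""
    return (action_for(unprocessed_score) == "do nothing"
            and action_for(processed_score) == "intervene")


print(is_significant_change(1, 4))   # 'do nothing' to 'intervene'
print(is_significant_change(3, 5))   # 'watch' to 'intervene' is not counted

# Proportion of level 1 responses that moved to level 4 or 5, from the abstract.
print(f"{81 / 157:.0%}")
```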

A recognised limitation of this study is the use of panoral image data rather than intraoral images, due to availability in our institution. It has been reported that there is a reduced diagnostic accuracy for caries diagnosis from panoral images13 but more recent studies have suggested that there is 'no difference in overall diagnostic performance ... between bitewing and panoramic radiographs for the diagnosis of occlusal dentine caries.'14 In this study a relative change was the key consideration rather than the absolute diagnosis. It was also considered that the presentation of the high contrast boundaries was comparable to that in intraoral images. In addition, if recurrent caries was suspected on viewing a panoral image an intervention could still occur, eg additional imaging. Therefore these results do have clinical relevance within panoral imaging and are considered highly suggestive of the potential for the same impact in intraoral imaging.

In order to minimise the potential to misdiagnose artefact as genuine pathology, it is recommended that for all digital radiographs containing pathology relating to high contrast boundaries, non-related high contrast features, such as unrelated restorations or tooth/bone margins, are also examined to determine whether artefact is present. If there is still doubt, reference should be made to the raw data.

Conclusion

We believe that the experimental methodology presented opens up the opportunity for fast and robust large scale observer studies. The study results illustrate that image processing artefacts have the potential to mimic pathology in a way that cannot be discriminated from genuine pathology. Users of digital systems should be aware of this and, if in doubt, should refer to non-related high contrast features and ultimately to the raw data.

References

1. Brennan J. An introduction to digital radiography in dentistry. J Orthod 2002; 29: 66–69.

2. Christensen G J. Why switch to digital radiography? J Am Dent Assoc 2004; 135: 1437–1439.

3. Vuylsteke P, Schoeters E. Image processing in computed radiography. Proceedings of the International Symposium on Computerized Tomography for Industrial Applications and Image Processing in Radiology 1999. pp 87–101.

4. Tan T H, Boothroyd A E. Uberschwinger artefact in computed radiographs. Br J Radiol 1997; 70: 431.

5. Oestmann J W, Prokop M, Schaefer C M, Galanski M. Hardware and software artifacts in storage phosphor radiography. Radiographics 1991; 11: 795–805.

6. Solomon S L, Jost R G, Glazer H S, Sagel S S, Anderson D J, Molina P L. Artifacts in computed radiography. Am J Roentgenol 1991; 157: 181–185.

7. Jiménez D A, Armbrust L J. Digital radiographic artifacts. Vet Clin North Am Small Anim Pract 2009; 39: 689–709.

8. Drost W T, Reese D J, Hornof W J. Digital radiography artifacts. Vet Radiol Ultrasound 2008; 49(1 Suppl 1): S48–S56.

9. Schweitzer D M, Berg R W. A digital radiographic artifact: a clinical report. J Prosthet Dent 2010; 103: 326–329.

10. Tan L T H, Ong K L. Artifacts in computed radiography. Hong Kong J Emerg Med 2000; 7: 27–32.

11. Brettle D S. A digital frame of reference for viewing digital images. Br J Radiol 2001; 74: 69–72.

12. Brettle D S, Bacon S E. A method for verified access when using soft copy display. Br J Radiol 2005; 78: 749–751.

13. Rushton V E, Horner K. The use of panoramic radiology in dental practice. J Dent 1996; 24: 185–201.

14. Thomas M F, Ricketts D N J, Wilson R F. Occlusal caries diagnosis in molar teeth from bitewing and panoramic radiographs. Prim Dent Care 2001; 8: 63–69.

Acknowledgements

The authors would like to thank the British Society of Dental and Maxillofacial Radiology for supporting this study.

Cite this article

Brettle, D., Carmichael, F. The impact of digital image processing artefacts mimicking pathological features associated with restorations. Br Dent J 211, 167–170 (2011). https://doi.org/10.1038/sj.bdj.2011.676
