Abstract

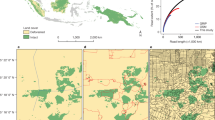

Modern city governance relies heavily on crowdsourcing to identify problems such as downed trees and power lines. A major concern is that residents do not report problems at the same rates, and heterogeneous reporting delays translate directly into downstream disparities in how quickly incidents can be addressed. Here we develop a method to identify reporting delays without using external ground-truth data. Our insight is that the rate at which duplicate reports are made about the same incident can be leveraged to separate whether an incident has occurred from how quickly it is reported once it has occurred. We apply our method to over 100,000 resident reports made in New York City and to over 900,000 reports made in Chicago, finding that there are substantial spatial and socioeconomic disparities in how quickly incidents are reported. We further validate our methods using external data and demonstrate how estimating reporting delays leads to practical insights and interventions for a more equitable, efficient government service.
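The duplicate-report idea can be illustrated with a minimal sketch. It assumes, purely for illustration, that once an incident exists, reports arrive as a homogeneous Poisson process, so that the duplicates observed in a window after the first report identify the reporting rate. The class `Incident` and both function names are hypothetical, and this is a simplified stand-in rather than the regression model used in the paper.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Incident:
    """One incident, observed from its first report onward (hypothetical schema)."""
    duplicate_reports: int  # reports received after the first one
    window_days: float      # length of the observation window after the first report


def estimate_reporting_rate(incidents: Iterable[Incident]) -> float:
    """Poisson maximum-likelihood rate: total duplicates / total exposure (per day)."""
    incidents = list(incidents)
    total_reports = sum(i.duplicate_reports for i in incidents)
    total_exposure = sum(i.window_days for i in incidents)
    if total_exposure <= 0:
        raise ValueError("total observation window must be positive")
    return total_reports / total_exposure


def mean_reporting_delay_days(rate_per_day: float) -> float:
    """Under the Poisson assumption, the expected wait for a first report is 1 / rate."""
    return float("inf") if rate_per_day <= 0 else 1.0 / rate_per_day


# Example: three incidents from one (made-up) neighbourhood.
sample = [Incident(2, 10.0), Incident(0, 5.0), Incident(1, 5.0)]
rate = estimate_reporting_rate(sample)
delay = mean_reporting_delay_days(rate)
```

Because only the window after the first report is used, the estimate does not require knowing when the incident actually occurred; comparing the estimated rate across neighbourhoods then gives a rough picture of differences in reporting delay.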


Data availability

Public 311 data for both NYC and Chicago are available via the cities' Open Data portals and are updated periodically. The main datasets we used from these portals are as follows:

- 311 service requests made to the Forestry unit of NYC Parks. This dataset is actively maintained, and the version used in the main text was retrieved on 22 October 2022 at 14:45 EDT from https://data.cityofnewyork.us/Environment/Forestry-Service-Requests/mu46-p9is.
- Inspections conducted by the Forestry unit of NYC Parks. This dataset is actively maintained, and the version used in the main text was retrieved on 22 October 2022 at 14:32 EDT from https://data.cityofnewyork.us/Environment/Forestry-Inspections/4pt5-3vv4.
- Work orders completed by the Forestry unit of NYC Parks. This dataset is actively maintained, and the version used in the main text was retrieved on 22 October 2022 at 14:31 EDT from https://data.cityofnewyork.us/Environment/Forestry-Work-Orders/bdjm-n7q4.
- Risk assessments by the Forestry unit of NYC Parks. This dataset is actively maintained, and the version used in the main text was retrieved on 24 October 2022 at 14:30 EDT from https://data.cityofnewyork.us/Environment/Forestry-Risk-Assessments/259a-b6s7.
- 311 service requests data from the City of Chicago. This dataset is actively maintained, and the version used in the main text was retrieved on 4 July 2022 at 21:20 EDT from https://data.cityofchicago.org/Service-Requests/311-Service-Requests/v6vf-nfxy.

In the reproduction code capsule (ref. 23), we have included official links to the portals and further host the exact version of the data used in this work, along with a description of the data. The primary NYC results in the main text are aided by private data provided to us in confidence by NYC DPR that cannot be shared; those data additionally contain columns for anonymized reporter identification, which allow duplicate reports by the same user to be filtered. All results replicate with the available public data, as reported in the Supplementary Section. The Chicago analyses are based entirely on publicly available data. Source Data are provided with this paper.
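For readers who want to pull the current public versions programmatically, the following is a minimal sketch that builds CSV export URLs for the datasets listed above. It assumes the portals expose the standard Socrata `/resource/<id>.csv` endpoint with a `$limit` query parameter; the helper name `soda_csv_url` and the `DATASETS` mapping are hypothetical. Because the datasets are actively maintained, these URLs return the live data, not the snapshots used in the paper, which are hosted in the code capsule.

```python
from urllib.parse import urlencode

# Dataset identifiers taken from the URLs in the Data availability statement.
DATASETS = {
    "nyc_forestry_service_requests": ("data.cityofnewyork.us", "mu46-p9is"),
    "nyc_forestry_inspections": ("data.cityofnewyork.us", "4pt5-3vv4"),
    "nyc_forestry_work_orders": ("data.cityofnewyork.us", "bdjm-n7q4"),
    "nyc_forestry_risk_assessments": ("data.cityofnewyork.us", "259a-b6s7"),
    "chicago_311_service_requests": ("data.cityofchicago.org", "v6vf-nfxy"),
}


def soda_csv_url(domain: str, dataset_id: str, limit: int = 1000) -> str:
    """Build a CSV export URL (assumed Socrata endpoint layout) for one dataset."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # Keep '$' unescaped so the query parameter reads as '$limit'.
    query = urlencode({"$limit": limit}, safe="$")
    return f"https://{domain}/resource/{dataset_id}.csv?{query}"


# Build one URL per dataset; no network request is made here.
urls = {name: soda_csv_url(domain, ds_id, limit=5)
        for name, (domain, ds_id) in DATASETS.items()}
```

Each resulting URL can be passed to any CSV reader that accepts a URL; exact replication of the reported results should instead use the archived data versions.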

References

Yuan, Q. Co-production of public service and information technology: a literature review. In Proc. 20th Annual International Conference on Digital Government Research (ACM, 2019).

Brabham, D. C. Crowdsourcing in the Public Sector (Georgetown Univ. Press, 2015).

Schwester, R. W., Carrizales, T. & Holzer, M. An examination of the municipal 311 system. Int. J. Org. Theory Behav. 12, 218–236 (2009).

Hacker, K. P., Greenlee, A. J., Hill, A. L., Schneider, D. & Levy, M. Z. Spatiotemporal trends in bed bug metrics: New York City. PLoS ONE 17, e0268798 (2022).

Minkoff, S. L. NYC 311: a tract-level analysis of citizen–government contacting in New York City. Urban Affairs Rev. 52, 211–246 (2016).

Lee, M., Wang, J., Janzen, S., Winter, S. & Harlow, J. Crowdsourcing behavior in reporting civic issues: the case of Boston’s 311 systems. In Academy of Management Proceedings NY 10510 (Academy of Management, 2021).

Thijssen, P. & Van Dooren, W. Who you are/where you live: do neighbourhood characteristics explain co-production? Int. Rev. Adm. Sci. 82, 88–109 (2016).

Clark, B. Y., Brudney, J. L. & Jang, S.-G. Coproduction of government services and the new information technology: investigating the distributional biases. Public Adm. Rev. 73, 687–701 (2013).

Cavallo, S., Lynch, J. & Scull, P. The digital divide in citizen-initiated government contacts: a GIS approach. J. Urban Technol. 21, 77–93 (2014).

Kontokosta, C. E. & Hong, B. Bias in smart city governance: how socio-spatial disparities in 311 complaint behavior impact the fairness of data-driven decisions. Sustain. Cities Soc. 64, 102503 (2021).

Pak, B., Chua, A. & Vande Moere, A. FixMyStreet Brussels: socio-demographic inequality in crowdsourced civic participation. J. Urban Technol. 24, 65–87 (2017).

Kontokosta, C., Hong, B. & Korsberg, K. Equity in 311 reporting: understanding socio-spatial differentials in the propensity to complain. Preprint at arXiv https://doi.org/10.48550/arXiv.1710.02452 (2017).

O’Brien, D. T., Sampson, R. J. & Winship, C. Ecometrics in the age of big data: measuring and assessing ‘broken windows’ using large-scale administrative records. Sociol. Methodol. 45, 101–147 (2015).

O’Brien, D. T., Offenhuber, D., Baldwin-Philippi, J., Sands, M. & Gordon, E. Uncharted territoriality in coproduction: the motivations for 311 reporting. J. Public Admin. Res. Theory 27, 320–335 (2017).

O’Brien, D. T. The Urban Commons: How Data and Technology Can Rebuild Our Communities (Harvard Univ. Press, 2018).

Klemmer, K., Neill, D. B. & Jarvis, S. A. Understanding spatial patterns in rape reporting delays. R. Soc. Open Sci. 8, 201795 (2021).

Lum, K. & Isaac, W. To predict and serve? Significance 13, 14–19 (2016).

Akpinar, N.-J., De-Arteaga, M. & Chouldechova, A. The effect of differential victim crime reporting on predictive policing systems. In Proc. 2021 ACM Conference on Fairness, Accountability, and Transparency 838–849 (ACM, 2021).

Morris, M. et al. Bayesian hierarchical spatial models: implementing the Besag York Mollié model in Stan. Spat. Spatio-temporal Epidemiol. 31, 100301 (2019).

Kruks-Wisner, G. Seeking the local state: gender, caste, and the pursuit of public services in post-tsunami India. World Dev. 39, 1143–1154 (2011).

Lambert, D. Zero-inflated Poisson regression, with an application to defects in manufacturing. Technometrics 34, 1–14 (1992).

Carpenter, B. et al. Stan: a probabilistic programming language. J. Stat. Softw. 76, 1–32 (2017).

Liu, Z. & Garg, N. Quantifying spatial under-reporting disparities in resident crowdsourcing. Code Ocean https://doi.org/10.24433/CO.0984693.V1 (2023).

Garg, N. & Liu, Z. Nikhgarg/Spatial_Underreporting_Crowdsourcing: Accepted, November (Zenodo, 2023); https://doi.org/10.5281/zenodo.10086832

Liu, Z. & Garg, N. Reporting Rate Estimation Method, November (Zenodo, 2023); https://doi.org/10.5281/zenodo.10086346

Acknowledgements

We benefited from discussions with A. Ahmad, A. Schein, A. Koenecke, A. Kobald, B. Laufer, E. Pierson, G. Agostini, K. Shiragur, T. Jiang and Q. Xie. We also thank the New York City Department of Parks and Recreation for their valuable work, inside knowledge, and data, and we especially thank F. Watt. This work was funded in part by the Urban Tech Hub at Cornell Tech, and we especially thank A. Townsend and N. Sobers. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Contributions

Z.L. and N.G. devised the method, performed data analysis, analysed the results, and wrote the paper. U.B. provided domain expertise and anonymized data, and contributed to writing.

Ethics declarations

Competing interests

Uma Bhandaram is an employee of the New York City Department of Parks and Recreation. We use data from the NYC DPR and discuss how our findings have implications for their policies.

Peer review

Peer review information

Nature Computational Science thanks Daniel T. O’Brien, Ravi Shroff, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Fernando Chirigati, in collaboration with the Nature Computational Science team. Peer reviewer reports are available.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Sections 1–4, Tables 1–28, Figs. 1–22 and Proposition 1.

Source data

Source Data Fig. 1

Statistical source data.

Source Data Fig. 2

Statistical source data.

Source Data Fig. 3

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, Z., Bhandaram, U. & Garg, N. Quantifying spatial under-reporting disparities in resident crowdsourcing. Nat Comput Sci 4, 57–65 (2024). https://doi.org/10.1038/s43588-023-00572-6

DOI: https://doi.org/10.1038/s43588-023-00572-6
