Abstract

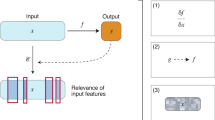

Autoencoders are versatile tools in molecular informatics. These unsupervised neural networks serve diverse tasks such as data-driven molecular representation and constructive molecular design. This Review explores their algorithmic foundations and applications in drug discovery, highlighting the most active areas of development and the contributions autoencoder networks have made to advancing this field. We also examine the challenges and prospects of using autoencoders, and the various adaptations of this neural network architecture, in molecular design.

Acknowledgements

This research was supported by a Swiss National Science Foundation grant to G.S. (SNSF, grant no. 205321\_182176).

Author information

Contributions

Both authors made equal contributions to the paper. A.I. conducted the literature analysis and carried out the experiment as described in Supplementary Information.

Ethics declarations

Competing interests

G.S. declares a potential financial conflict of interest as co-founder of inSili.com LLC, Zurich, and in his role as scientific consultant to the pharmaceutical industry.

Peer review

Peer review information

Nature Computational Science thanks Igor Baskin and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Kaitlin McCardle, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1 and 2 and Tables 1 and 2.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ilnicka, A., Schneider, G. Designing molecules with autoencoder networks. Nat Comput Sci 3, 922–933 (2023). https://doi.org/10.1038/s43588-023-00548-6

DOI: https://doi.org/10.1038/s43588-023-00548-6
