Abstract

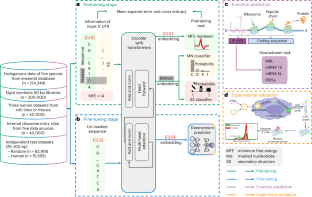

The 5′ untranslated region (UTR), a regulatory region at the beginning of a messenger RNA (mRNA) molecule, plays a crucial role in regulating translation and affects the protein expression level. Language models have proven effective at decoding the functions of protein and genome sequences. Here, we introduce a language model for 5′ UTRs, which we refer to as the UTR-LM. The UTR-LM is pretrained on endogenous 5′ UTRs from multiple species and is further augmented with supervised information, including secondary structure and minimum free energy. We fine-tuned the UTR-LM on a variety of downstream tasks. The model outperformed the best known benchmark by up to 5% for predicting the mean ribosome loading, and by up to 8% for predicting the translation efficiency and the mRNA expression level. The model was also applied to identifying unannotated internal ribosome entry sites within the untranslated region and improved the area under the precision–recall curve from 0.37 to 0.52 compared to the best baseline. Further, we designed a library of 211 new 5′ UTRs with high predicted values of translation efficiency and evaluated them via a wet-laboratory assay. Experimental results confirmed that our top designs achieved a 32.5% increase in protein production level relative to well-established 5′ UTRs optimized for therapeutics.

Data availability

The datasets are available and can be downloaded at https://codeocean.com/capsule/6711822 (ref. 39). This link includes training data for the pretrained model as well as datasets for various downstream tasks. Detailed statistics for these datasets are provided in Supplementary Discussion A. Source data are provided with this paper.

Code availability

The code is freely available at https://github.com/a96123155/UTR-LM (ref. 40) under the GNU General Public Licence Version 3 and the implemented demo can be found at https://codeocean.com/capsule/6711822 (ref. 39).

References

Araujo, P. R. et al. Before it gets started: regulating translation at the 5′ UTR. Comp. Funct. Genomics 2012, 475731 (2012).

Miao, Z., Tidu, A., Eriani, G. & Martin, F. Secondary structure of the SARS-CoV-2 5′-UTR. RNA Biol. 18, 447–456 (2021).

Li, X., Kazan, H., Lipshitz, H. D. & Morris, Q. D. Finding the target sites of RNA-binding proteins. Wiley Interdiscip. Rev. RNA 5, 111–130 (2014).

Zeraati, M. et al. Cancer-associated noncoding mutations affect RNA G-quadruplex-mediated regulation of gene expression. Sci. Rep. 7, 708 (2017).

Karollus, A., Avsec, Ž. & Gagneur, J. Predicting mean ribosome load for 5′ UTR of any length using deep learning. PLoS Comput. Biol. 17, e1008982 (2021).

Sample, P. J. et al. Human 5′ UTR design and variant effect prediction from a massively parallel translation assay. Nat. Biotechnol. 37, 803–809 (2019).

Cao, J. et al. High-throughput 5′ UTR engineering for enhanced protein production in non-viral gene therapies. Nat. Commun. 12, 4138 (2021).

Barazandeh, S., Ozden, F., Hincer, A., Seker, U. O. S. & Cicek, A. E. UTRGAN: learning to generate 5′ UTR sequences for optimized translation efficiency and gene expression. Preprint at bioRxiv https://doi.org/10.1101/2023.01.30.526198 (2023).

Zheng, W. et al. Discovery of regulatory motifs in 5′ untranslated regions using interpretable multi-task learning models. Cell Syst. 14, 1103–1112.e6 (2023).

Chen, J. et al. Interpretable RNA foundation model from unannotated data for highly accurate RNA structure and function predictions. Preprint at https://doi.org/10.48550/arxiv.2204.00300 (2022).

Ozden, F., Barazandeh, S., Akboga, D., Seker, U. O. S. & Cicek, A. E. RNAGEN: a generative adversarial network-based model to generate synthetic RNA sequences to target proteins. Preprint at bioRxiv https://doi.org/10.1101/2023.07.11.548246 (2023).

Akiyama, M. & Sakakibara, Y. Informative RNA base embedding for RNA structural alignment and clustering by deep representation learning. NAR Genom. Bioinform. 4, lqac012 (2022).

Wang, J. & Gribskov, M. IRESpy: an XGBoost model for prediction of internal ribosome entry sites. BMC Bioinf. 20, 409 (2019).

Kolekar, P., Pataskar, A., Kulkarni-Kale, U., Pal, J. & Kulkarni, A. IRESPred: web server for prediction of cellular and viral internal ribosome entry site (IRES). Sci. Rep. 6, 27436 (2016).

Zhao, J. et al. IRESfinder: identifying RNA internal ribosome entry site in eukaryotic cell using framed k-mer features. J. Genet. Genomics 45, 403–406 (2018).

Zhou, Y. et al. DeepCIP: a multimodal deep learning method for the prediction of internal ribosome entry sites of circRNAs. Comput. Biol. Med. 164, 107288 (2023).

Zeng, C. et al. Leveraging mRNA sequences and nanoparticles to deliver SARS-CoV-2 antigens in vivo. Adv. Mater. 32, e2004452 (2020).

Babendure, J. R., Babendure, J. L., Ding, J.-H. & Tsien, R. Y. Control of mammalian translation by mRNA structure near caps. RNA 12, 851–861 (2006).

Hinnebusch, A. G., Ivanov, I. P. & Sonenberg, N. Translational control by 5′-untranslated regions of eukaryotic mRNAs. Science 352, 1413–1416 (2016).

Calvo, S. E., Pagliarini, D. J. & Mootha, V. K. Upstream open reading frames cause widespread reduction of protein expression and are polymorphic among humans. Proc. Natl Acad. Sci. USA 106, 7507–7512 (2009).

Zuccotti, P. & Modelska, A. Studying the translatome with polysome profiling. Post-Transcriptional Gene Regulation (ed Dassi, E.) 59–69 (2016).

Whiffin, N. et al. Characterising the loss-of-function impact of 5′ untranslated region variants in 15,708 individuals. Nat. Commun. 11, 2523 (2020).

Kozak, M. An analysis of 5′-noncoding sequences from 699 vertebrate messenger RNAs. Nucleic Acids Res. 15, 8125–8148 (1987).

Kozak, M. Downstream secondary structure facilitates recognition of initiator codons by eukaryotic ribosomes. Proc. Natl Acad. Sci. USA 87, 8301–8305 (1990).

Stoneley, M. & Willis, A. E. Cellular internal ribosome entry segments: structures, trans-acting factors and regulation of gene expression. Oncogene 23, 3200–3207 (2004).

Weingarten-Gabbay, S. et al. Comparative genetics. Systematic discovery of cap-independent translation sequences in human and viral genomes. Science 351, aad4939 (2016).

Zhao, J. et al. IRESbase: a comprehensive database of experimentally validated internal ribosome entry sites. Genom. Proteom. Bioinform. 18, 129–139 (2020).

Mokrejs, M. et al. IRESite–a tool for the examination of viral and cellular internal ribosome entry sites. Nucleic Acids Res. 38, D131–D136 (2010).

Kalvari, I. et al. Rfam 14: expanded coverage of metagenomic, viral and microRNA families. Nucleic Acids Res. 49, D192–D200 (2021).

Leppek, K. et al. Combinatorial optimization of mRNA structure, stability, and translation for RNA-based therapeutics. Nat. Commun. 13, 1536 (2022).

Gleason, A. C., Ghadge, G., Chen, J., Sonobe, Y. & Roos, R. P. Machine learning predicts translation initiation sites in neurologic diseases with nucleotide repeat expansions. PLoS ONE 17, e0256411 (2022).

Hernández, G., Osnaya, V. G. & Pérez-Martínez, X. Conservation and variability of the AUG initiation codon context in eukaryotes. Trends Biochem. Sci. 44, 1009–1021 (2019).

Cunningham, F. et al. Ensembl 2022. Nucleic Acids Res. 50, D988–D995 (2022).

Vaswani, A. et al. Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS, 2017).

Sinha, K. et al. Masked language modeling and the distributional hypothesis: order word matters pre-training for little. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing 2888–2913 (2021).

Lorenz, R. et al. ViennaRNA package 2.0. Algorithms Mol. Biol. 6, 26 (2011).

Leppek, K., Das, R. & Barna, M. Functional 5′ UTR mRNA structures in eukaryotic translation regulation and how to find them. Nat. Rev. Mol. Cell Biol. 19, 158–174 (2018).

Rao, R. M., Meier, J., Sercu, T., Ovchinnikov, S. & Rives, A. Transformer protein language models are unsupervised structure learners. International Conference on Learning Representations (ICLR, 2020).

Chu, Y. et al. A 5′ UTR language model for decoding untranslated regions of mRNA and function predictions. Zenodo https://doi.org/10.5281/zenodo.10621605 (2024).

Chu, Y. et al. UTR-LM GitHub https://github.com/a96123155/UTR-LM (2024).

Acknowledgements

This paper is partially supported by National Science Foundation grant no. 1953686 and partially supported by RVAC Medicines.

Author information

Contributions

Y.C. developed the UTR-LM model. D.Y. performed experimental validation. Y.L. produced in-house data. K.H. reviewed both the code and manuscript. Y.S. developed the web server. L.C. contributed to manuscript preparation. J.Z. initiated the experimental part of the project. M.W. led the entire project. All authors contributed to manuscript preparation.

Ethics declarations

Competing interests

RVAC Medicines has submitted patent applications related to the designed UTR sequences. D.Y., Y.L. and Y.S. are affiliated with RVAC Medicines. J.Z. is affiliated with Zipcode Bio. Other authors have declared no conflicts of interest.

Peer review

Peer review information

Nature Machine Intelligence thanks Joshua W. K. Ho, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1

The computation flow of identifying patterns in 5′ UTR sequences based on attention scores.

Supplementary information

Supplementary Information

Supplementary Discussion, Figs. 1–10 and Tables 1–6.

Source data

Source Data Fig. 2

Statistical source data.

Source Data Fig. 3

Statistical source data.

Source Data Fig. 4

Statistical source data.

Source Data Fig. 5

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chu, Y., Yu, D., Li, Y. et al. A 5′ UTR language model for decoding untranslated regions of mRNA and function predictions. Nat Mach Intell 6, 449–460 (2024). https://doi.org/10.1038/s42256-024-00823-9

DOI: https://doi.org/10.1038/s42256-024-00823-9