Abstract

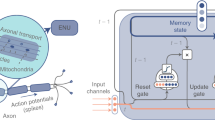

Continual learning aims to empower artificial intelligence with strong adaptability to the real world. For this purpose, a desirable solution should properly balance memory stability with learning plasticity, and acquire sufficient compatibility to capture the observed distributions. Existing advances mainly focus on preserving memory stability to overcome catastrophic forgetting, but it remains difficult to flexibly accommodate incremental changes as biological intelligence does. Here, by modelling a robust Drosophila learning system that actively regulates forgetting with multiple learning modules, we propose a generic approach that appropriately attenuates old memories in parameter distributions to improve learning plasticity, and accordingly coordinates a multi-learner architecture to ensure solution compatibility. Through extensive theoretical and empirical validation, our approach not only enhances the performance of continual learning, especially over synaptic regularization methods in task-incremental settings, but also potentially advances the understanding of neurological adaptive mechanisms.

Data availability

All benchmark datasets used in this paper are publicly available: CIFAR-10/100 (ref. 45; https://www.cs.toronto.edu/~kriz/cifar.html), Omniglot (ref. 57; https://www.omniglot.com), CUB-200-2011 (ref. 58; https://www.vision.caltech.edu/datasets/cub_200_2011/), Tiny-ImageNet (ref. 17; https://www.image-net.org/download.php), CORe50 (ref. 59; https://vlomonaco.github.io/core50/) and Atari games (ref. 73; https://github.com/openai/baselines).

Code availability

The implementation code is available via Zenodo at https://doi.org/10.5281/zenodo.8293564 (ref. 74).

References

Chen, Z. & Liu, B. Lifelong machine learning (San Rafael: Morgan & Claypool Publishers, 2018).

Parisi, G. I., Kemker, R., Part, J. L., Kanan, C. & Wermter, S. Continual lifelong learning with neural networks: a review. Neural Netw. 113, 54–71 (2019).

Kudithipudi, D. et al. Biological underpinnings for lifelong learning machines. Nat. Mach. Intell. 4, 196–210 (2022).

McCloskey, M. & Cohen, N. J. Catastrophic interference in connectionist networks: the sequential learning problem. Psychol. Learn. Motiv. 24, 109–165 (1989).

McClelland, J. L., McNaughton, B. L. & O’Reilly, R. C. Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models of learning and memory. Psychol. Rev. 102, 419 (1995).

Wang, L., Zhang, X., Su, H. & Zhu, J. A comprehensive survey of continual learning: theory, method and application. Preprint at https://arxiv.org/abs/2302.00487 (2023).

Kirkpatrick, J. et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl Acad. Sci. USA 114, 3521–3526 (2017).

Aljundi, R., Babiloni, F., Elhoseiny, M., Rohrbach, M. & Tuytelaars, T. Memory aware synapses: learning what (not) to forget. In Proc. European Conference on Computer Vision 139–154 (Springer, 2018).

Zenke, F., Poole, B. & Ganguli, S. Continual learning through synaptic intelligence. In Proc. International Conference on Machine Learning 3987–3995 (PMLR, 2017).

Chaudhry, A., Dokania, P. K., Ajanthan, T. & Torr, P. H. Riemannian walk for incremental learning: understanding forgetting and intransigence. In Proc. European Conference on Computer Vision 532–547 (Springer, 2018).

Ritter, H., Botev, A. & Barber, D. Online structured Laplace approximations for overcoming catastrophic forgetting. Adv. Neural Inf. Process. Syst. 31, 3742–3752 (2018).

Rebuffi, S.-A., Kolesnikov, A., Sperl, G. & Lampert, C. H. iCaRL: incremental classifier and representation learning. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 2001–2010 (IEEE, 2017).

Shin, H., Lee, J. K., Kim, J. & Kim, J. Continual learning with deep generative replay. Adv. Neural Inf. Process. Syst. 30, 2990–2999 (2017).

Wang, L. et al. Memory replay with data compression for continual learning. In International Conference on Learning Representations (2021).

Serra, J., Suris, D., Miron, M. & Karatzoglou, A. Overcoming catastrophic forgetting with hard attention to the task. In Proc. International Conference on Machine Learning 4548–4557 (PMLR, 2018).

Fernando, C. et al. PathNet: evolution channels gradient descent in super neural networks. Preprint at https://arxiv.org/abs/1701.08734 (2017).

Delange, M. et al. A continual learning survey: defying forgetting in classification tasks. IEEE Trans. Pattern Anal. Mach. Intell. (2021).

Hadsell, R., Rao, D., Rusu, A. A. & Pascanu, R. Embracing change: continual learning in deep neural networks. Trends Cogn. Sci. 24, 1028–1040 (2020).

Shuai, Y. et al. Forgetting is regulated through Rac activity in Drosophila. Cell 140, 579–589 (2010).

Cohn, R., Morantte, I. & Ruta, V. Coordinated and compartmentalized neuromodulation shapes sensory processing in Drosophila. Cell 163, 1742–1755 (2015).

Waddell, S. Neural plasticity: dopamine tunes the mushroom body output network. Curr. Biol. 26, R109–R112 (2016).

Modi, M. N., Shuai, Y. & Turner, G. C. The Drosophila mushroom body: from architecture to algorithm in a learning circuit. Annu. Rev. Neurosci. 43, 465–484 (2020).

Aso, Y. et al. Mushroom body output neurons encode valence and guide memory-based action selection in Drosophila. eLife 3, e04580 (2014).

Aso, Y. & Rubin, G. M. Dopaminergic neurons write and update memories with cell-type-specific rules. eLife 5, e16135 (2016).

Gao, Y. et al. Genetic dissection of active forgetting in labile and consolidated memories in Drosophila. Proc. Natl Acad. Sci. USA 116, 21191–21197 (2019).

Zhao, J. et al. Genetic dissection of mutual interference between two consecutive learning tasks in Drosophila. eLife 12, e83516 (2023).

Richards, B. A. & Frankland, P. W. The persistence and transience of memory. Neuron 94, 1071–1084 (2017).

Dong, T. et al. Inability to activate Rac1-dependent forgetting contributes to behavioral inflexibility in mutants of multiple autism-risk genes. Proc. Natl Acad. Sci. USA 113, 7644–7649 (2016).

Zhang, X., Li, Q., Wang, L., Liu, Z.-J. & Zhong, Y. Active protection: learning-activated Raf/MAPK activity protects labile memory from Rac1-independent forgetting. Neuron 98, 142–155 (2018).

Davis, R. L. & Zhong, Y. The biology of forgetting—a perspective. Neuron 95, 490–503 (2017).

Mo, H. et al. Age-related memory vulnerability to interfering stimuli is caused by gradual loss of MAPK-dependent protection in Drosophila. Aging Cell 21, e13628 (2022).

Cervantes-Sandoval, I., Chakraborty, M., MacMullen, C. & Davis, R. L. Scribble scaffolds a signalosome for active forgetting. Neuron 90, 1230–1242 (2016).

Noyes, N. C., Phan, A. & Davis, R. L. Memory suppressor genes: modulating acquisition, consolidation, and forgetting. Neuron 109, 3211–3227 (2021).

Cognigni, P., Felsenberg, J. & Waddell, S. Do the right thing: neural network mechanisms of memory formation, expression and update in Drosophila. Curr. Opin. Neurobiol. 49, 51–58 (2018).

Amin, H. & Lin, A. C. Neuronal mechanisms underlying innate and learned olfactory processing in Drosophila. Curr. Opin. Insect Sci. 36, 9–17 (2019).

Handler, A. et al. Distinct dopamine receptor pathways underlie the temporal sensitivity of associative learning. Cell 178, 60–75 (2019).

McCurdy, L. Y., Sareen, P., Davoudian, P. A. & Nitabach, M. N. Dopaminergic mechanism underlying reward-encoding of punishment omission during reversal learning in Drosophila. Nat. Commun. 12, 1115 (2021).

Berry, J. A., Cervantes-Sandoval, I., Nicholas, E. P. & Davis, R. L. Dopamine is required for learning and forgetting in Drosophila. Neuron 74, 530–542 (2012).

Berry, J. A., Phan, A. & Davis, R. L. Dopamine neurons mediate learning and forgetting through bidirectional modulation of a memory trace. Cell Rep. 25, 651–662 (2018).

Aitchison, L. et al. Synaptic plasticity as Bayesian inference. Nat. Neurosci. 24, 565–571 (2021).

Schug, S., Benzing, F. & Steger, A. Presynaptic stochasticity improves energy efficiency and helps alleviate the stability–plasticity dilemma. eLife 10, e69884 (2021).

Wang, L. et al. AFEC: active forgetting of negative transfer in continual learning. Adv. Neural Inf. Process. Syst. 34, 22379–22391 (2021).

Benzing, F. Unifying importance based regularisation methods for continual learning. In Proc. International Conference on Artificial Intelligence and Statistics 2372–2396 (PMLR, 2022).

Bouton, M. E. Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychol. Bull. 114, 80 (1993).

Krizhevsky, A. et al. Learning multiple layers of features from tiny images. Technical Report, Citeseer (2009).

Shuai, Y. et al. Dissecting neural pathways for forgetting in Drosophila olfactory aversive memory. Proc. Natl Acad. Sci. USA 112, E6663–E6672 (2015).

Chen, L. et al. AI of brain and cognitive sciences: from the perspective of first principles. Preprint at https://arxiv.org/abs/2301.08382 (2023).

Caron, S. J., Ruta, V., Abbott, L. F. & Axel, R. Random convergence of olfactory inputs in the Drosophila mushroom body. Nature 497, 113–117 (2013).

Endo, K., Tsuchimoto, Y. & Kazama, H. Synthesis of conserved odor object representations in a random, divergent-convergent network. Neuron 108, 367–381 (2020).

Long, M., Cao, Y., Wang, J. & Jordan, M. Learning transferable features with deep adaptation networks. In Proc. International Conference on Machine Learning 97–105 (PMLR, 2015).

Wang, L., Zhang, X., Li, Q., Zhu, J. & Zhong, Y. CoSCL: cooperation of small continual learners is stronger than a big one. In Proc. European Conference on Computer Vision 254–271 (Springer, 2022).

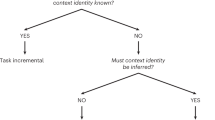

van de Ven, G. M., Tuytelaars, T. & Tolias, A. S. Three types of incremental learning. Nat. Mach. Intell. 4, 1185–1197 (2022).

Riemer, M. et al. Learning to learn without forgetting by maximizing transfer and minimizing interference. In International Conference on Learning Representations (2018).

Schwarz, J. et al. Progress & compress: a scalable framework for continual learning. In Proc. International Conference on Machine Learning 4528–4537 (PMLR, 2018).

Jung, S., Ahn, H., Cha, S. & Moon, T. Continual learning with node-importance based adaptive group sparse regularization. Adv. Neural Inf. Process. Syst. 33, 3647–3658 (2020).

Cha, S., Hsu, H., Hwang, T., Calmon, F. & Moon, T. CPR: classifier-projection regularization for continual learning. In International Conference on Learning Representations (2020).

Lake, B. M., Salakhutdinov, R. & Tenenbaum, J. B. Human-level concept learning through probabilistic program induction. Science 350, 1332–1338 (2015).

Wah, C., Branson, S., Welinder, P., Perona, P. & Belongie, S. The Caltech-UCSD Birds-200-2011 dataset. http://www.vision.caltech.edu/datasets/ (2011).

Lomonaco, V. & Maltoni, D. CORe50: a new dataset and benchmark for continuous object recognition. In Conference on Robot Learning 17–26 (PMLR, 2017).

Ryan, T. J. & Frankland, P. W. Forgetting as a form of adaptive engram cell plasticity. Nat. Rev. Neurosci. 23, 173–186 (2022).

Luo, L. et al. Differential effects of the Rac GTPase on Purkinje cell axons and dendritic trunks and spines. Nature 379, 837–840 (1996).

Tashiro, A., Minden, A. & Yuste, R. Regulation of dendritic spine morphology by the Rho family of small GTPases: antagonistic roles of Rac and Rho. Cereb. Cortex 10, 927–938 (2000).

Hayashi-Takagi, A. et al. Disrupted-in-Schizophrenia 1 (DISC1) regulates spines of the glutamate synapse via Rac1. Nat. Neurosci. 13, 327–332 (2010).

Hayashi-Takagi, A. et al. Labelling and optical erasure of synaptic memory traces in the motor cortex. Nature 525, 333–338 (2015).

Martens, J. & Grosse, R. Optimizing neural networks with Kronecker-factored approximate curvature. In Proc. International Conference on Machine Learning 2408–2417 (PMLR, 2015).

Knoblauch, J., Husain, H. & Diethe, T. Optimal continual learning has perfect memory and is NP-hard. In Proc. International Conference on Machine Learning 5327–5337 (PMLR, 2020).

Deng, D., Chen, G., Hao, J., Wang, Q. & Heng, P.-A. Flattening sharpness for dynamic gradient projection memory benefits continual learning. Adv. Neural Inf. Process. Syst. 34, 18710–18721 (2021).

Mirzadeh, S. I., Farajtabar, M., Pascanu, R. & Ghasemzadeh, H. Understanding the role of training regimes in continual learning. Adv. Neural Inf. Process. Syst. 33, 7308–7320 (2020).

McAllester, D. A. PAC-Bayesian model averaging. In Proc. Twelfth Annual Conference on Computational Learning Theory 164–170 (ACM, 1999).

Pham, Q., Liu, C., Sahoo, D. & Hoi, S. Contextual transformation networks for online continual learning. In International Conference on Learning Representations (2021).

Schulman, J., Wolski, F., Dhariwal, P., Radford, A. & Klimov, O. Proximal policy optimization algorithms. Preprint at https://arxiv.org/abs/1707.06347 (2017).

Lopez-Paz, D. et al. Gradient episodic memory for continual learning. Adv. Neural Inf. Process. Syst. 30, 6467–6476 (2017).

Mnih, V. et al. Playing Atari with deep reinforcement learning. Preprint at https://arxiv.org/abs/1312.5602 (2013).

Wang, L. & Zhang, X. lywang3081/CAF: CAF paper. Zenodo https://doi.org/10.5281/zenodo.8293564 (2023).

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 618–626 (IEEE, 2017).

Acknowledgements

This work was supported by the National Key Research and Development Program of China (2020AAA0106302, to J.Z.), the STI2030-Major Projects (2022ZD0204900, to Y.Z.), the National Natural Science Foundation of China (nos 62061136001 and 92248303, to J.Z., 32021002, to Y.Z., U19A2081, to H.S.), the Tsinghua-Peking Center for Life Sciences, the Tsinghua Institute for Guo Qiang, and the High Performance Computing Center, Tsinghua University. L.W. was also supported by the Shuimu Tsinghua Scholar. J.Z. was also supported by the New Cornerstone Science Foundation through the XPLORER PRIZE.

Author information

Contributions

L.W., X.Z., J.Z. and Y.Z. conceived the project. L.W., X.Z., Q.L. and M.Z. designed the computational model. X.Z. performed the theoretical analysis, assisted by L.W. L.W. performed all experiments and analysed the data. L.W., X.Z. and Q.L. wrote the paper. L.W., X.Z., Q.L., M.Z., H.S., J.Z. and Y.Z. revised the paper. J.Z. and Y.Z. supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Gido van de Ven and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Supplementary information

Supplementary Text, Tables 1–6 and Figs. 1–7.

About this article

Cite this article

Wang, L., Zhang, X., Li, Q. et al. Incorporating neuro-inspired adaptability for continual learning in artificial intelligence. Nat Mach Intell 5, 1356–1368 (2023). https://doi.org/10.1038/s42256-023-00747-w

DOI: https://doi.org/10.1038/s42256-023-00747-w
