Abstract

Auditory models are commonly used as feature extractors for automatic speech-recognition systems or as front-ends for robotics, machine-hearing and hearing-aid applications. Although auditory models can capture the biophysical and nonlinear properties of human hearing in great detail, these biophysical models are computationally expensive and cannot be used in real-time applications. We present a hybrid approach where convolutional neural networks are combined with computational neuroscience to yield a real-time end-to-end model for human cochlear mechanics, including level-dependent filter tuning (CoNNear). The CoNNear model was trained on acoustic speech material and its performance and applicability were evaluated using (unseen) sound stimuli commonly employed in cochlear mechanics research. The CoNNear model accurately simulates human cochlear frequency selectivity and its dependence on sound intensity, an essential quality for robust speech intelligibility at negative speech-to-background-noise ratios. The CoNNear architecture is based on parallel and differentiable computations and has the power to achieve real-time human performance. These unique CoNNear features will enable the next generation of human-like machine-hearing applications.

Data availability

The source code of the TL-model v.1.1 used for training is available via https://doi.org/10.5281/zenodo.3717431 or https://github.com/HearingTechnology/Verhulstetal2018Model. The TIMIT speech corpus used for training can be found online (ref. 45). Most figures in this paper can be reproduced using the CoNNear model repository.

Code availability

The code for the trained CoNNear model, including instructions on how to execute it, is available from https://github.com/HearingTechnology/CoNNear_cochlea or https://doi.org/10.5281/zenodo.4056552. A non-commercial, academic Ghent University licence applies.

References

von Békésy, G. Travelling waves as frequency analysers in the cochlea. Nature 225, 1207–1209 (1970).

Narayan, S. S., Temchin, A. N., Recio, A. & Ruggero, M. A. Frequency tuning of basilar membrane and auditory nerve fibers in the same cochleae. Science 282, 1882–1884 (1998).

Robles, L. & Ruggero, M. A. Mechanics of the mammalian cochlea. Physiol. Rev. 81, 1305–1352 (2001).

Shera, C. A., Guinan, J. J. & Oxenham, A. J. Revised estimates of human cochlear tuning from otoacoustic and behavioral measurements. Proc. Natl Acad. Sci. USA 99, 3318–3323 (2002).

Oxenham, A. J. & Shera, C. A. Estimates of human cochlear tuning at low levels using forward and simultaneous masking. J. Assoc. Res. Otolaryngol. 4, 541–554 (2003).

Greenwood, D. D. A cochlear frequency-position function for several species—29 years later. J. Acoust. Soc. Am. 87, 2592–2605 (1990).

Jepsen, M. L. & Dau, T. Characterizing auditory processing and perception in individual listeners with sensorineural hearing loss. J. Acoust. Soc. Am. 129, 262–281 (2011).

Bondy, J., Becker, S., Bruce, I., Trainor, L. & Haykin, S. A novel signal-processing strategy for hearing-aid design: neurocompensation. Sig. Process. 84, 1239–1253 (2004).

Ewert, S. D., Kortlang, S. & Hohmann, V. A model-based hearing aid: psychoacoustics, models and algorithms. Proc. Meet. Acoust. 19, 050187 (2013).

Mondol, S. & Lee, S. A machine learning approach to fitting prescription for hearing aids. Electronics 8, 736 (2019).

Lyon, R. F. Human and Machine Hearing: Extracting Meaning from Sound (Cambridge Univ. Press, 2017).

Baby, D. & Van hamme, H. Investigating modulation spectrogram features for deep neural network-based automatic speech recognition. In Proc. Interspeech 2479–2483 (ISCA, 2015).

de Boer, E. Auditory physics. Physical principles in hearing theory. I. Phys. Rep. 62, 87–174 (1980).

Diependaal, R. J., Duifhuis, H., Hoogstraten, H. W. & Viergever, M. A. Numerical methods for solving one-dimensional cochlear models in the time domain. J. Acoust. Soc. Am. 82, 1655–1666 (1987).

Zweig, G. Finding the impedance of the organ of Corti. J. Acoust. Soc. Am. 89, 1229–1254 (1991).

Talmadge, C. L., Tubis, A., Wit, H. P. & Long, G. R. Are spontaneous otoacoustic emissions generated by self-sustained cochlear oscillators? J. Acoust. Soc. Am. 89, 2391–2399 (1991).

Moleti, A. et al. Transient evoked otoacoustic emission latency and estimates of cochlear tuning in preterm neonates. J. Acoust. Soc. Am. 124, 2984–2994 (2008).

Epp, B., Verhey, J. L. & Mauermann, M. Modeling cochlear dynamics: interrelation between cochlea mechanics and psychoacoustics. J. Acoust. Soc. Am. 128, 1870–1883 (2010).

Verhulst, S., Dau, T. & Shera, C. A. Nonlinear time-domain cochlear model for transient stimulation and human otoacoustic emission. J. Acoust. Soc. Am. 132, 3842–3848 (2012).

Zweig, G. Nonlinear cochlear mechanics. J. Acoust. Soc. Am. 139, 2561–2578 (2016).

Hohmann, V. in Handbook of Signal Processing in Acoustics (eds Havelock, D. et al.) 205–212 (Springer, 2008).

Rascon, C. & Meza, I. Localization of sound sources in robotics: a review. Robot. Auton. Syst. 96, 184–210 (2017).

Morgan, N., Bourlard, H. & Hermansky, H. in Speech Processing in the Auditory System (eds Greenberg, S. et al.) 309–338 (Springer, 2004).

Patterson, R. D., Allerhand, M. H. & Giguère, C. Time-domain modeling of peripheral auditory processing: a modular architecture and a software platform. J. Acoust. Soc. Am. 98, 1890–1894 (1995).

Shera, C. A. Frequency glides in click responses of the basilar membrane and auditory nerve: their scaling behavior and origin in traveling-wave dispersion. J. Acoust. Soc. Am. 109, 2023–2034 (2001).

Shera, C. A. & Guinan, J. J. in Active Processes and Otoacoustic Emissions in Hearing (eds Manley, A. et al.) 305–342 (Springer, 2008).

Hohmann, V. Frequency analysis and synthesis using a Gammatone filterbank. Acta Acust. United Acust. 88, 433–442 (2002).

Saremi, A. et al. A comparative study of seven human cochlear filter models. J. Acoust. Soc. Am. 140, 1618–1634 (2016).

Lopez-Poveda, E. A. & Meddis, R. A human nonlinear cochlear filterbank. J. Acoust. Soc. Am. 110, 3107–3118 (2001).

Lyon, R. F. Cascades of two-pole-two-zero asymmetric resonators are good models of peripheral auditory function. J. Acoust. Soc. Am. 130, 3893–3904 (2011).

Saremi, A. & Lyon, R. F. Quadratic distortion in a nonlinear cascade model of the human cochlea. J. Acoust. Soc. Am. 143, EL418–EL424 (2018).

Altoè, A., Charaziak, K. K. & Shera, C. A. Dynamics of cochlear nonlinearity: automatic gain control or instantaneous damping? J. Acoust. Soc. Am. 142, 3510–3519 (2017).

Baby, D. & Verhulst, S. SERGAN: speech enhancement using relativistic generative adversarial networks with gradient penalty. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 106–110 (2019).

Pascual, S., Bonafonte, A. & Serrà, J. SEGAN: speech enhancement generative adversarial network. In Interspeech 2017 3642–3646 (ISCA, 2017).

Drakopoulos, F., Baby, D. & Verhulst, S. Real-time audio processing on a Raspberry Pi using deep neural networks. In 23rd International Congress on Acoustics (ICA) (2019).

Altoè, A., Pulkki, V. & Verhulst, S. Transmission line cochlear models: Improved accuracy and efficiency. J. Acoust. Soc. Am. 136, EL302–EL308 (2014).

Verhulst, S., Altoè, A. & Vasilkov, V. Computational modeling of the human auditory periphery: auditory-nerve responses, evoked potentials and hearing loss. Hear. Res. 360, 55–75 (2018).

Oxenham, A. J. & Wojtczak, M. in Oxford Handbook of Auditory Science: Hearing (ed. Plack, C. J.) Ch. 2 (Oxford Univ. Press, 2010); https://doi.org/10.1093/oxfordhb/9780199233557.013.0002

Robles, L., Ruggero, M. A. & Rich, N. C. Two-tone distortion in the basilar membrane of the cochlea. Nature 349, 413 (1991).

Ren, T. Longitudinal pattern of basilar membrane vibration in the sensitive cochlea. Proc. Natl Acad. Sci. USA 99, 17101–17106 (2002).

Precise and Full-Range Determination of Two-Dimensional Equal Loudness Contours (International Organization for Standardization, 2003).

Lorenzi, C., Gilbert, G., Carn, H., Garnier, S. & Moore, B. C. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc. Natl Acad. Sci. USA 103, 18866–18869 (2006).

Isola, P., Zhu, J. Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 5967–5976 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Garofolo, J. S. et al. DARPA TIMIT: Acoustic-Phonetic Continuous Speech Corpus CD-ROM (Linguistic Data Consortium, 1993).

Shera, C. A., Guinan, J. J. & Oxenham, A. J. Otoacoustic estimation of cochlear tuning: validation in the chinchilla. J. Assoc. Res. Otolaryngol. 11, 343–365 (2010).

Russell, I., Cody, A. & Richardson, G. The responses of inner and outer hair cells in the basal turn of the guinea-pig cochlea and in the mouse cochlea grown in vitro. Hear. Res. 22, 199–216 (1986).

Houben, R. et al. Development of a Dutch matrix sentence test to assess speech intelligibility in noise. Int. J. Audiol. 53, 760–763 (2014).

Gemmeke, J. F. et al. Audio set: an ontology and human-labeled dataset for audio events. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 776–780 (IEEE, 2017).

Paul, D. B. & Baker, J. M. The design for the Wall Street Journal-based CSR corpus. In Second International Conference on Spoken Language Processing, ICSLP (ISCA, 1992).

Dorn, P. A. et al. Distortion product otoacoustic emission input/output functions in normal-hearing and hearing-impaired human ears. J. Acoust. Soc. Am. 110, 3119–3131 (2001).

Janssen, T. & Müller, J. in Active Processes and Otoacoustic Emissions in Hearing 421–460 (Springer, 2008).

Verhulst, S., Ernst, F., Garrett, M. & Vasilkov, V. Suprathreshold psychoacoustics and envelope-following response relations: normal-hearing, synaptopathy and cochlear gain loss. Acta Acust. United Acust. 104, 800–803 (2018).

Verhulst, S., Bharadwaj, H. M., Mehraei, G., Shera, C. A. & Shinn-Cunningham, B. G. Functional modeling of the human auditory brainstem response to broadband stimulation. J. Acoust. Soc. Am. 138, 1637–1659 (2015).

Kell, A. J., Yamins, D. L., Shook, E. N., Norman-Haignere, S. V. & McDermott, J. H. A task-optimized neural network replicates human auditory behavior, predicts brain responses, and reveals a cortical processing hierarchy. Neuron 98, 630–644 (2018).

Akbari, H., Khalighinejad, B., Herrero, J. L., Mehta, A. D. & Mesgarani, N. Towards reconstructing intelligible speech from the human auditory cortex. Sci. Rep. 9, 1–12 (2019).

Kell, A. J. & McDermott, J. H. Deep neural network models of sensory systems: windows onto the role of task constraints. Curr. Opin. Neurobiol. 55, 121–132 (2019).

Amsalem, O. et al. An efficient analytical reduction of detailed nonlinear neuron models. Nat. Commun. 11, 1–13 (2020).

Richards, B. A. et al. A deep learning framework for neuroscience. Nat. Neurosci. 22, 1761–1770 (2019).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. In Proc. International Conference on Learning Representations (ICLR, 2015).

Chollet, F. et al. Keras v.2.3.1 (2015); https://keras.io

Abadi, M. et al. TensorFlow v.1.13.2 (2015); https://www.tensorflow.org/

Moore, B. C. & Glasberg, B. R. Suggested formulae for calculating auditory-filter bandwidths and excitation patterns. J. Acoust. Soc. Am. 74, 750–753 (1983).

Glasberg, B. R. & Moore, B. C. Derivation of auditory filter shapes from notched-noise data. Hear. Res. 47, 103–138 (1990).

Raufer, S. & Verhulst, S. Otoacoustic emission estimates of human basilar membrane impulse response duration and cochlear filter tuning. Hear. Res. 342, 150–160 (2016).

Ramamoorthy, S., Zha, D. J. & Nuttall, A. L. The biophysical origin of traveling-wave dispersion in the cochlea. Biophys. J. 99, 1687–1695 (2010).

Dau, T., Wegner, O., Mellert, V. & Kollmeier, B. Auditory brainstem responses with optimized chirp signals compensating basilar-membrane dispersion. J. Acoust. Soc. Am. 107, 1530–1540 (2000).

Neely, S. T., Johnson, T. A., Kopun, J., Dierking, D. M. & Gorga, M. P. Distortion-product otoacoustic emission input/output characteristics in normal-hearing and hearing-impaired human ears. J. Acoust. Soc. Am. 126, 728–738 (2009).

Kummer, P., Janssen, T., Hulin, P. & Arnold, W. Optimal L1–L2 primary tone level separation remains independent of test frequency in humans. Hear. Res. 146, 47–56 (2000).

Acknowledgements

This work was supported by the European Research Council (ERC) under the Horizon 2020 Research and Innovation Programme (grant agreement no. 678120 RobSpear). We thank C. Shera and S. Shera for their help with the final edits.

Author information

Contributions

D.B. and S.V. conceptualized the study, developed the methodology and wrote the original draft of the manuscript, with visualisation support from A.V.D.B. D.B. and A.V.D.B. developed the software, performed the investigation and validation, and completed the formal analysis and data curation. S.V. reviewed and edited the manuscript, supervised and administered the project and acquired the funding.

Ethics declarations

Competing interests

A patent application (PCTEP2020065893) was filed by Ghent University on the basis of the research presented in this manuscript. Inventors on the application are S.V., D.B., F. Drakopoulos and A.V.D.B.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

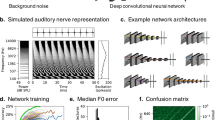

Extended Data Fig. 1 Overview of the CoNNear architecture parameters.

The table gives a brief description of the fixed CoNNear parameters and hyperparameters.

Extended Data Fig. 2 CNN layer depth comparison.

The first column details the CoNNear architecture. The next columns give the total number of required model parameters, the required training time per epoch of 2310 TIMIT training sentences and the average L1 loss across all windows of the TIMIT training set. Average L1 losses were also computed for BM displacement predictions to a number of unseen acoustic stimuli (clicks and 1-kHz pure tones) with levels between 0 and 90 dB SPL. Lastly, the average L1 loss was computed for the 550 sentences of the TIMIT test set. For each evaluated category, the best-performing architecture is highlighted in bold font.

Extended Data Fig. 3 Activation function comparison.

The first column details the CoNNear architecture. The next columns give the total number of required model parameters, the required training time per epoch of 2310 TIMIT training sentences and the average L1 loss across all windows of the TIMIT training set. Average L1 losses were also computed for BM displacement predictions to a number of unseen acoustic stimuli (clicks and 1-kHz pure tones) with levels between 0 and 90 dB SPL. Lastly, the average L1 loss was computed for the 550 sentences of the TIMIT test set. For each evaluated category, the best-performing architecture is highlighted in bold font.
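The average L1 loss reported in Extended Data Figs. 2 and 3 is a mean absolute error between the reference and predicted BM displacements. The following is a minimal sketch in plain Python, not the authors' training code. It assumes each window is a list of time samples with one value per channel, and it averages over all values (equivalent to averaging per-window losses when the windows have equal size). The function name `average_l1_loss` and the toy data are hypothetical.

```python
def average_l1_loss(reference_windows, predicted_windows):
    """Mean absolute error over all windows, time samples and channels.

    Assumed layout (hypothetical): windows[w][t][ch] is the BM displacement
    of channel `ch` at time sample `t` in window `w`.
    """
    total_abs_error = 0.0
    n_values = 0
    for ref_win, pred_win in zip(reference_windows, predicted_windows):
        for ref_frame, pred_frame in zip(ref_win, pred_win):
            for ref, pred in zip(ref_frame, pred_frame):
                total_abs_error += abs(ref - pred)
                n_values += 1
    if n_values == 0:
        raise ValueError("no samples to compare")
    return total_abs_error / n_values


# Toy data: 2 windows x 2 time samples x 3 channels (arbitrary units).
tl_windows = [[[0.0, 0.1, 0.2], [0.3, 0.4, 0.5]],
              [[0.0, 0.0, 0.0], [0.1, 0.1, 0.1]]]
cnn_windows = [[[0.0, 0.1, 0.1], [0.3, 0.5, 0.5]],
               [[0.0, 0.0, 0.0], [0.1, 0.1, 0.4]]]

loss = average_l1_loss(tl_windows, cnn_windows)
print(f"average L1 loss: {loss:.4f}")
```

In a Keras or TensorFlow setting the same quantity would normally be computed with a built-in mean-absolute-error loss; the explicit loops here only make the averaging visible.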

Extended Data Fig. 4 Simulated BM displacements for a 10048-sample speech stimulus and a 16384-sample music stimulus.

The stimulus waveform is depicted in panel (a), and panels (b, c) depict instantaneous BM displacement intensities (darker colours = higher intensities) of the simulated TL-model (b) and CoNNear (c) outputs. The N_CF = 201 considered output channels are labelled by channel number: channel 1 corresponds to a CF of 12 kHz and channel 201 to a CF of 100 Hz. The same colour scale was used for both simulations and ranged between −0.5 μm (blue) and 0.5 μm (red). The left panels show simulations of a speech stimulus from the Dutch matrix test (ref. 48) and the right panels show simulations of a music fragment (Radiohead, No Surprises).
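The channel numbering in this caption runs from high to low CF. As an illustration only, the sketch below assigns CFs to 201 channels using the Greenwood frequency-position function for humans, f = 165.4 (10^(2.1x) − 0.88), where x is the proportional distance from the apex. It assumes the channels are spaced uniformly in cochlear position between the two stated end points. That spacing and all function names are assumptions, since the caption fixes only the end points (12 kHz and 100 Hz).

```python
import math

# Greenwood (1990) constants for the human cochlea; x is the proportional
# distance from the apex (0 = apex, 1 = base).
A_HZ, ALPHA, K = 165.4, 2.1, 0.88


def greenwood_cf(x):
    """Characteristic frequency (Hz) at proportional position x."""
    return A_HZ * (10.0 ** (ALPHA * x) - K)


def greenwood_position(cf_hz):
    """Inverse map: proportional position of a given CF (Hz)."""
    return math.log10(cf_hz / A_HZ + K) / ALPHA


def channel_cfs(n_channels=201, cf_high=12000.0, cf_low=100.0):
    """CF per channel, channel 1 = cf_high ... channel n_channels = cf_low.

    Assumption: uniform spacing in cochlear position between the end points.
    """
    x_high = greenwood_position(cf_high)
    x_low = greenwood_position(cf_low)
    step = (x_low - x_high) / (n_channels - 1)
    return [greenwood_cf(x_high + i * step) for i in range(n_channels)]


cfs = channel_cfs()
print(f"channel 1: {cfs[0]:.1f} Hz, channel 201: {cfs[-1]:.1f} Hz")
```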

Extended Data Fig. 5 Comparing TL and CoNNear model predictions at the median and maximum L1 prediction error.

The figure compares BM displacement intensities of the TL (b) and CoNNear (c) models for the audio fragments that resulted in the median and maximum L1 errors of 0.008 and 0.038, respectively, for the TIMIT test set (Fig. 5). The N_CF = 201 considered output channels are labelled by channel number: channel 1 corresponds to a CF of 12 kHz and channel 201 to a CF of 100 Hz. The same colour scale was used for both simulations and ranged between −0.5 μm (blue) and 0.5 μm (red).

Extended Data Fig. 6 Root mean-square error (RMSE) between simulated excitation patterns of the TL and CoNNear models reported as fraction of the TL excitation pattern maximum (cf. Fig. 3).

Using the PReLU activation function (a) leads to an overall high RMSE, as this architecture failed to learn the level-dependent cochlear compression characteristics and filter shapes. The models using the tanh nonlinearity (b, c) did learn to capture the level-dependent properties of cochlear excitation patterns, and performed with errors below 5% for the frequency ranges and stimulus levels captured by the speech training data (CFs below 5 kHz and stimulation levels below 90 dB SPL). The RMSE increased above 5% for all architectures when their performance was evaluated on 8- and 10-kHz excitation patterns. This decreased performance results from the limited frequency content of the TIMIT training material.
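The error measure in this caption can be sketched as follows. This is a plain-Python illustration, not the authors' evaluation code. It assumes an excitation pattern is the per-channel RMS of the BM displacement over time, in linear units shared by both models, and that the RMSE is taken across channels before division by the TL pattern maximum. The function names and toy values are hypothetical.

```python
import math


def excitation_pattern(bm_displacement):
    """Per-channel RMS over time.

    Assumed layout (hypothetical): bm_displacement[t][ch].
    """
    n_frames = len(bm_displacement)
    n_channels = len(bm_displacement[0])
    return [
        math.sqrt(sum(frame[ch] ** 2 for frame in bm_displacement) / n_frames)
        for ch in range(n_channels)
    ]


def normalised_rmse(tl_pattern, cnn_pattern):
    """RMSE across channels as a fraction of the TL pattern maximum."""
    if len(tl_pattern) != len(cnn_pattern):
        raise ValueError("patterns must have the same number of channels")
    mse = sum((t - c) ** 2 for t, c in zip(tl_pattern, cnn_pattern)) / len(tl_pattern)
    return math.sqrt(mse) / max(tl_pattern)


# Toy patterns over 4 channels (arbitrary linear units).
tl_pattern = [0.0, 0.5, 1.0, 0.5]
cnn_pattern = [0.0, 0.5, 0.9, 0.5]
error = normalised_rmse(tl_pattern, cnn_pattern)
print(f"normalised RMSE: {100 * error:.1f}% (threshold in the caption: 5%)")
```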

About this article

Cite this article

Baby, D., Van Den Broucke, A. & Verhulst, S. A convolutional neural-network model of human cochlear mechanics and filter tuning for real-time applications. Nat Mach Intell 3, 134–143 (2021). https://doi.org/10.1038/s42256-020-00286-8

DOI: https://doi.org/10.1038/s42256-020-00286-8
