Abstract

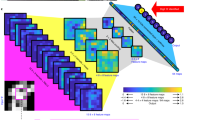

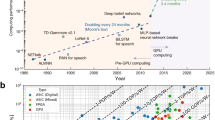

Recent breakthroughs in recurrent deep neural networks with long short-term memory (LSTM) units have led to major advances in artificial intelligence. However, state-of-the-art LSTM models with significantly increased complexity and a large number of parameters have a bottleneck in computing power resulting from both limited memory capacity and limited data communication bandwidth. Here we demonstrate experimentally that the synaptic weights shared in different time steps in an LSTM can be implemented with a memristor crossbar array, which has a small circuit footprint, can store a large number of parameters and offers in-memory computing capability that contributes to circumventing the ‘von Neumann bottleneck’. We illustrate the capability of our crossbar system as a core component in solving real-world problems in regression and classification, which shows that memristor LSTM is a promising low-power and low-latency hardware platform for edge inference.
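
As a rough illustration of the idea in the abstract, the sketch below models one LSTM time step in which the weight matrix shared across time steps is stored as conductances in an idealized crossbar, so that a single analog read yields all gate pre-activations. It is a minimal sketch under stated assumptions, not the authors' implementation: the differential-pair weight mapping, the value of `g_max`, the gate ordering, the layer sizes and all function names are hypothetical, and device non-idealities (noise, wire resistance, limited precision) are ignored.

```python
import numpy as np

def weights_to_conductances(W, g_max=1e-3):
    """Map signed weights to two non-negative conductance arrays.

    Assumed mapping (illustrative only): W = (G_pos - G_neg) / scale,
    with g_max an arbitrary maximum conductance in siemens.
    """
    w_abs_max = np.max(np.abs(W))
    scale = g_max / w_abs_max if w_abs_max > 0 else 1.0
    G_pos = np.clip(W, 0, None) * scale   # stores positive weights
    G_neg = np.clip(-W, 0, None) * scale  # stores magnitudes of negative weights
    return G_pos, G_neg, scale

def crossbar_vmm(v, G_pos, G_neg, scale):
    """Ideal crossbar read: voltages v on the rows give column currents
    I = v @ G (Ohm's law per device, Kirchhoff's current law per column)."""
    i_diff = v @ G_pos - v @ G_neg
    return i_diff / scale  # convert currents back to weight units

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, G_pos, G_neg, scale):
    """One LSTM step; the same conductances are reused at every time step."""
    v = np.concatenate([x, h, [1.0]])  # trailing 1.0 drives the bias row
    z = crossbar_vmm(v, G_pos, G_neg, scale)
    n = h.shape[0]
    i = sigmoid(z[0:n])          # input gate
    f = sigmoid(z[n:2 * n])      # forget gate
    g = np.tanh(z[2 * n:3 * n])  # candidate cell state
    o = sigmoid(z[3 * n:4 * n])  # output gate
    c_new = f * c + i * g
    h_new = o * np.tanh(c_new)
    return h_new, c_new

# Hypothetical sizes and random weights, for demonstration only.
rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W = rng.normal(scale=0.5, size=(n_in + n_hidden + 1, 4 * n_hidden))
G_pos, G_neg, scale = weights_to_conductances(W)

h = np.zeros(n_hidden)
c = np.zeros(n_hidden)
for x in rng.normal(size=(5, n_in)):
    h, c = lstm_step(x, h, c, G_pos, G_neg, scale)
```

In this toy model the weights are written to the array once and only the input voltages change from step to step, which is the sense in which the computation happens where the parameters are stored.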

Data availability

The data that support the plots within this Article and other findings of this study are available from the corresponding author upon reasonable request. The code that supports the plots within this Article and other findings of this study is available at http://github.com/lican81/memNN. The code that supports the communication between the custom-built measurement system and the integrated chip is available from the corresponding author upon reasonable request.

Acknowledgements

This work was supported in part by the US Air Force Research Laboratory (grant no. FA8750-15-2-0044) and the Intelligence Advanced Research Projects Activity (IARPA; contract no. 2014-14080800008). D.B., an undergraduate from Swarthmore College, was supported by the NSF Research Experience for Undergraduates (grant no. ECCS-1253073) at the University of Massachusetts. P.Y. was visiting from Huazhong University of Science and Technology with support from the Chinese Scholarship Council (grant no. 201606160074). Part of the device fabrication was conducted in the cleanroom of the Center for Hierarchical Manufacturing, an NSF Nanoscale Science and Engineering Center located at the University of Massachusetts Amherst.

Author information

Contributions

Q.X. and C.L. conceived the idea. Q.X., J.J.Y. and C.L. designed the experiments. C.L., Z.W. and D.B. carried out programming, measurements, data analysis and simulation. M.R., P.Y., C.L., H.J., N.G. and P.L. built the integrated chips. Y.L., C.L., W.S., M.H., Z.W. and J.P.S. built the measurement system and firmware. Q.X., C.L., J.J.Y. and R.S.W. wrote the manuscript. M.B., Q.W. and all other authors contributed to the results analysis and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information: Figures, Notes and References

About this article

Cite this article

Li, C., Wang, Z., Rao, M. et al. Long short-term memory networks in memristor crossbar arrays. Nat Mach Intell 1, 49–57 (2019). https://doi.org/10.1038/s42256-018-0001-4

DOI: https://doi.org/10.1038/s42256-018-0001-4