Abstract

Approximate numerical methods are one of the most used strategies to extract information from many-interacting-agents systems. In particular, numerical approximations are of extended use to deal with epidemic, ecological and biological models, since unbiased methods like the Gillespie algorithm can become unpractical due to high CPU time usage required. However, the use of approximations has been debated and there is no clear consensus about whether unbiased methods or biased approach is the best option. In this work, we derive scaling relations for the errors in approximations based on binomial extractions. This finding allows us to build rules to compute the optimal values of both the discretization time and number of realizations needed to compute averages with the biased method with a target precision and minimum CPU-time usage. Furthermore, we also present another rule to discern whether the unbiased method or biased approach is more efficient. Ultimately, we will show that the choice of the method should depend on the desired precision for the estimation of averages.

Similar content being viewed by others

Introduction

Epidemic modeling has traditionally relied on stochastic methods to go beyond mean-field deterministic solutions1,2,3,4,5. The contagion process itself is naturally adapted to a stochastic treatment since the basic units, individuals, can not be described successfully using deterministic laws. For example, two given individuals may or may not develop a contact even though they are potentially able to do so given their geographical location. Even further, should the contact be established and should one of the individuals be infectious, the infection of the second individual is not a certainty, but rather an event that occurs with some probability. Computational epidiomiologists have implemented these stochastic contagions in all the modeling efforts and at different scales, from agent-based6,7,8,9,10,11,12 to population-based13,14,15,16,17. In the case of agent-based models stochastic contagion events can be traced one by one, even though for practical purposes in computation sometimes they may be aggregated. In the population-level models, different contagion processes are aggregated together following some generic feature (number of neighboring individuals, geographical location, etc.). These models have the virtue of drastically reducing the number of variables needed to describe the whole population and, at the computational level, the positive side effect of enormously decreasing the model running time. A widespread practice nowadays18,19,20,21,22,23,24,25 is to approximate the statistical description of these contagion aggregations by binomial or multinomial distributions.

The same methodological and conceptual issues appear well beyond the reign of epidemic modeling. Indeed, stochastic processes are one of the main pillars of complexity science26,27,28. The list of fruitful applications is endless, but just to name a few paradigmatic examples: population dynamics in ecology29,30, gene expression31, metabolism in cells32, finances and market crashes33,34, telecommunications35, chemical reactions36, quantum physics37 and active matter38. As models become more intricate, there arises the technical challenge of producing stochastic trajectories in feasible computation times, since unbiased methods that generate statistically correct realizations of stochastic trajectories may become unpractical due to lengthy computations. Approximate methods aim at solving this issue by significantly reducing the CPU time usage. The use of approximated methods is extended (see e.g.16,17,21), and some authors assert that they might be the only way to treat effectively large systems of heterogeneous agents39. However, other works claim that the systematic errors induced by the approximations might not trade-off the reduction in computation time40,41. The primary objective of this work is to shed light on this debate and assess in which circumstances approximate methods based on binomial extractions, which we call binomial methods, can be advantageous with respect to the unbiased algorithms.

To solve this question, we derive in this paper a scaling relation for the errors of the binomial methods. This main result allows us to obtain optimal values for the discretization time and number of realizations to compute averages with a desired precision and minimum CPU time consumption. Furthermore, we derive a rule to discern if the binomial method is going to be faster than the unbiased counterparts. Lastly, we perform a numerical study to compare the performance of both the unbiased and binomial methods and check the applicability of our proposed rules. Ultimately, we will show that the efficiency of the binomial method is superior to the unbiased approaches only when the target precision is below a certain threshold value.

Methods

Transition rates

Throughout this work we will focus on pure jumping processes, this is, stochastic models in which agents switch states within a discrete set of possible states. Spontaneous creation or annihilation of agents will not be considered, therefore, its total number, N, is conserved. We furthermore assume Markovian dynamics, so given that the system is in a particular state at time t, the microscopic rules that dictate the switching between states just depend on the current state \({{{{{{{\boldsymbol{s}}}}}}}}(t)=\left\{{s}_{1}(t),\ldots ,{s}_{N}(t)\right\}\). These microscopic rules are given in terms of the transition rates, defined as the conditional probabilities per unit of time to observe a transition,

A particular set of transitions in which we are specially interested define the one-step processes, meaning that the only transitions allowed are those involving the change of a single agent’s state, with rates

for i = 1, …, N. Our last premise is to consider only transition rates \({w}_{i}({s}_{i}\to {s}_{i}^{{\prime} })\) that do not depend explicitly on time t. Note that the rates could, in principle, be different for every agent and depend in an arbitrary way on the state of the system. The act of modeling is actually to postulate the functional form of these transition rates. This step is conceptually equivalent to the choice of a Hamiltonian in equilibrium statistical mechanics.

Jumping processes of two-state agents, such that the possible states of the ith agent can be si = 0 or si = 1, are widely used in many different applications, such as protein activation42, spins 1/243, epidemic spreading4,44, voting dynamics45, chemical reactions46,47, drug-dependence in pharmacology48, etc. For binary-state systems, quite commonly, the rate of the process si = 0 → si = 1 is different from the reverse process si = 1 → si = 0 and we define the rate of agent i as

As a detailed observation is usually unfeasible, we might be interested in a macroscopic level of description focusing, for example, on the occupation number n(t), defined as the total number of agents in state 1,

being N − n(t) the equivalent occupation of state 0. In homogeneous systems, those in which wi(si) = w(si), ∀ i, transition rates at this coarser level can be computed from those at the agent level as

Some applications might require an intermediate level of description between the fully heterogeneous [Eq. (2)] and the fully homogeneous [Eq. (5)]. In order to deal with a coarse-grained heterogeneity, we define \({{{{{{{\mathcal{C}}}}}}}}\) different classes of agents. Agents can be labeled in order to identify their class, so that li = ℓ means that the ith agent belongs to the class labeled ℓ with \(\ell \in [1,{{{{{{{\mathcal{C}}}}}}}}]\) and we require that all agents in the same class share the same transition rates wi(si) = wℓ(si), ∀ li = ℓ. This classification allows us to define the occupation numbers Nℓ and nℓ as the total number of agents of the ℓth class and the number of those in state 1 respectively. Moreover, we can write the class-level rates:

In general, stochastic models are very difficult and can not be solved analytically. Hence, one needs to resort to numerical simulations than can provide suitable estimations to the quantities of interest. There are two main types of simulation strategies: unbiased continuous-time and discrete-time algorithms. Each one comes with its own advantages and disadvantages that we summarize in the next sections.

Unbiased continuous-time algorithms

We proceed to summarize the main ideas behind the unbiased continuous-time algorithms, and refer the reader to39,44,49,50,51,52,53,54 for a detailed description. Say that we know the state of the system s(t) at a given time t. Such a state will remain unchanged until a random time \({t}^{{\prime} } > t\), when the system experiences a transition or “jump" to a new state, also random, \({{{{{{{{\boldsymbol{s}}}}}}}}}^{{\prime} }({t}^{{\prime}})\):

Therefore, the characterization of a change in the system necessarily requires us to sample both the transition time \(\Delta t={t}^{{\prime} }-t\) and the new state \({{{{{{{\boldsymbol{{s}}}}}}}^{{\prime} }}}({t}^{{\prime} })\).

For binary one-step processes, new states are generated by changes in single agents si → 1 − si. The probability that agent i changes its state in a time interval \({t}^{{\prime} }\in [t,t+dt]\) is \({w}_{i}\left({s}_{i}\right)dt\) by definition of transition rate. Therefore, the probability that the agent will not experience such a transition in an infinitesimal time interval is \(1-{w}_{i}\left({s}_{i}\right)dt\). Concatenating such infinitesimal probabilities, we can compute the probability Qi(si, Δt) that a given agent does not change its state during an arbitrary time lapse Δt as well as the complementary probability Pi(si, Δt) that it does change state as

Eq. (8) conforms the basic reasoning from which most of the continuous-time algorithms to simulate stochastic trajectories are built. It allows us to extend our basic postulate from Eq. (1), which only builds probabilities for infinitesimal times (dt), to probabilities of events of arbitrary duration (Δt). We note that Eq. (8) is actually a conditional probability: it is only valid provided that there are no other updates of the system in the interval Δt. From it we can also compute the probability density function that the ith agent remains at si for a non-infinitesimal time Δt and then experiences a transition to \({s}_{i}^{{\prime} }=1-{s}_{i}\) in the time interval [t + Δt, t + Δt + dt]:

The above quantity is also called first passage distribution for the ith agent. Therefore, given that the system is in state s at time t, one can use the elements defined above to compute the probability that the next change of the system is due to switching in the agent i at time \({t}^{{\prime} }\in [t+\Delta t,t+\Delta t+dt]\):

where we have defined the total exit rate,

Two methods, namely the first-reaction method and the Gillespie algorithm, can be distinguished based on the scheme used to sample the random jumping time \({t}^{{\prime} }\) and switching agent i from the distribution specified in Eq. (10). The first-reaction method involves sampling one tentative random time per transition using fi(si; Δt) and then choosing the minimum among them as the transition time and reaction that actually occurs. In contrast, the Gillespie algorithm directly samples the transition time using the total rate W(s) and then determines which transition is being activated. Depending on the algorithm used to randomly select the next reaction, the computational complexity of the unbiased methods can vary from linear to constant in the number of reactions (see e.g.54). Through the rest of the paper, we will use Gillespie algorithms with binary search in representation of unbiased methods.

Discrete-time approximations

In this section, we consider algorithms which at simulation step j update time by a constant amount, tj+1 = tj + Δt. Note that the discretization step Δt is no longer stochastic, and it has to be considered as a new parameter that we are in principle free to choose. Larger values of Δt result in faster simulations since fewer steps are needed in order to access enquired times. Nevertheless, the discrete-time algorithms introduce systematic errors that grow with Δt.

Discrete-synchronous

It is possible to use synchronous versions of the process where all agents can potentially update their state at the same time tj using the probabilities Pi(si, Δt) defined in Eq. (8) (see e.g.52,55,56).

Algorithm 1

Discrete time synchronous agent level

1: Increment time: tj+1 = tj + Δt

2: Compute all probabilities Pi(si, Δt), i = 1, …, N, using Eq. (8).

3: For all agents, generate a uniform random number \({\hat{u}}_{i}\in [0,1]\). If \({\hat{u}}_{i} < {P}_{i}({s}_{i},\Delta t)\) change the state si → 1 − si.

4: go to 1.

We note that the use of synchronous updates changes the nature of the process since simultaneous updates were not allowed in the original continuous-time algorithms. Given that the probabilities Pi(si, Δt) tend to zero as Δt → 0, one expects to recover the results of the continuous-time asynchronous approach in the limit Δt → 0. Nevertheless, users of this method should bear in mind that this approximation could induce discrepancies with the continuous-time process that go beyond statistical errors57.

Binomial method: two simple examples

When building the class version of the synchronous agent level (Algorithm 1), one can merge together events with the same transition probability and sample the updates using binomial distributions. This is the basic idea behind the binomial method, which is of extended use in the current literature (e.g.17,23,25,58,59). Since references presenting this method are scarce, we devote a longer section to its explanation.

Let us start with a simple example. Say that we are interested in simulating the decay of N radioactive nuclei. We denote by si = 1 that nucleus i is non-disintegrated and by si = 0 the disintegrated state. All nuclei have the same time-independent decay rate μ:

This is, all nuclei can decay with the same probability μdt in every time-bin of infinitesimal duration dt, but the reverse reaction is not allowed. This simple stochastic process leads to an exponential decay of the average number nt of active nuclei at time t as 〈nt〉 = Ne−μt.

Using the rates (12), we can compute the probability that one nucleus disintegrates in a non-infinitesimal time Δt [Eq. (8)],

Therefore every particle follows a Bernoulli process in the time interval Δt. That is, each particle decays with a probability p and remains in the same state with a probability 1 − p. As individual decays are independent of each other, the total number of decays in a temporal bin of duration Δt follows a binomial distribution B(N, p),

The average of the binomial distribution is 〈n〉 = Np and its variance σ2[n] = Np(1 − p). This result invites us to draw stochastic trajectories with a recursive relation:

where we denote by Δnt ~ B(nt , p) a random value drawn from the binomial distribution, with average value 〈Δnt〉 = ntp, and we start from n0 = N. In this simple example, it turns out that Eq. (15) does generate unbiased realizations of the stochastic process. From this equation we obtain

The symbol 〈⋅〉B denotes averages over the binomial method. The solution of this recursion relation with initial condition n0 = N is

which coincides with the exact result independently of the value of Δt. Therefore, the choice of Δt is just related to the desired time resolution of the trajectories. If Δt ≪ (Nμ)−1, many of the outcomes Δnt used in Eq. (15) will equal zero as the resolution would be much smaller than the mean time between disintegration events. Contrary, if Δt ≫ (Nμ)−1, much of the information about the transitions will be lost and we would generate a trajectory with abrupt transitions. Still, both simulations would faithfully inform about the state of the system at the enquired times [see Fig. 1a, b].

Simulations of the radioactive decay process with rates given by Eq. (12), using the binomial method [Eq. (15)]. In a the time discretization is Δt = 1, whereas in b is Δt = 0.5. In both panels N = 100 and μ = 1. Dots and error bars indicate the average and standard error respectively, both computed from 20 simulations. With continuous line, we show the analytical average (black) plus and minus the analytical standard error (gray dashed lines): \(\langle n(t)\rangle \pm \sigma [n(t)]/\sqrt{20}\). Independently of the discretization time, the results from simulations agree with the analytical value within errors.

Let us now apply this method to another process where it will no longer be exact. Nevertheless, the basic idea of the algorithm is the same: compute non-infinitesimal increments of stochastic trajectories using binomial distributions. We consider a system with N agents which can jump between states with homogeneous constant rates:

Which, at the macroscopic level read

from which we can see that this process is a birth-death process. Reasoning as before, the probabilities that a particle changes state in a non-infinitesimal time Δt are:

Where we can avoid the use of subscripts since all agents share the transition rates. At this point, we might feel also invited to write an equation for the evolution of agents in state 1 in terms of the stochastic number of transitions:

Where Δnt,0 and Δnt,1 are binomial random variables distributed according to B(N − nt, P(0, Δt)) and B(nt, P(1, Δt)), respectively. However, trajectories generated with Eq. (21) turn out to be only an approximation to the original process. The reason is that the probability that a given number of transitions 0 → 1 happen in a time window is modified as soon as a transition 1 → 0 occurs (and vice-versa). If we now take averages in Eq. (21), use the known averages of the binomial distribution and solve the resulting linear iteration relation for \({\langle {n}_{t}\rangle }_{B}\), we obtain

with a = 2 − e−μΔt − e−κΔt and b = N(1 − e−κΔt). It is true that in the limit Δt → 0, this solution recovers the exact solution for the evolution equation of the average number of agents in state 1 for the continuous-time process, namely

but the accuracy of the discrete approximation depends crucially on the value of Δt. If, for instance, we take \(\Delta t\gg \max ({\kappa }^{-1},{\mu }^{-1})\), then we can approximate a ≈ 2, b ≈ N, such that Eq. (22) yields

a numerical instability that shows up as a wild oscillation, see Fig. 2.

Four realizations of the birth and death process with constant rates defined by Eq. (18) simulated with the use of the binomial method [Eq. (21)]. In this case, we also use different time discretizations Δt, and fixed N = 1000, μ = 1, and κ = 1. Note the numerical instability that shows up as wild oscillations in the numerical trajectories for large time steps Δt = 10 (triangles), and Δt = 3 (crosses). Otherwise, there is a good agreement between simulations and the expected average value (continuous black line) for both Δt = 0.1, 1 (circles and squares respectively).

Therefore, the fact that agents are independent and rates are constant is not sufficient condition to guarantee that the binomial method generates unbiased trajectories for arbitrary values of the discretization step Δt. Nevertheless, we note that the only condition needed to ensure that Eq. (21) is a good approximation to the exact dynamics, Eq. (23), is that \(\Delta t\ll \min ({\kappa }^{-1},{\mu }^{-1})\). Given than the system size N does not appear in this condition, we expect the binomial method to be very efficient to simulate this kind of process if we take a sufficiently small value for Δt, independently of the number of agents, see Fig. 2, where both Δt = 0.1, 1 produce a good agreement for μ = κ = 1. By comparing the average value of the binomial method, Eq. (22) with the exact value, Eq. (23), we note that the error of the binomial approximation can be expanded in a Taylor series

where the coefficient of the linear term λ depends on t and N, as well as on other parameters of the model. We will check throughout this work that a similar expansion of the errors in the binomial method holds for the case of more complex models.

Binomial method: general algorithm

If we go back to the general two-state process in which the functional form of the rates can have an arbitrary dependence on the state of the system, we can approximate the probability that the state of agent i changes in a time interval Δt by Pi(si, Δt) [Eq. (8)]. If all these probabilities are different, we cannot group them in order to conform binomial samples. If, on the other hand, we can identify large enough classes \(\ell =1,2,\ldots ,{{{{{{{\mathcal{C}}}}}}}}\) such that all Nℓ agents in the same class ℓ have the same rates wℓ(s), we can approximate the variation of the occupation number nℓ of each class during the time Δt as the difference Δnℓ,0 − Δnℓ,1 where Δnℓ,0 and Δnℓ,1 follow, respectively, binomial distributions B(Nℓ − nℓ, Pℓ(0, Δt)) and B(nℓ, Pℓ(1, Δt)), with Pℓ(si, Δt) given by Eq. (8) using any agent i belonging to class ℓ. All class occupation numbers are updated at the same time step j, yielding the synchronous binomial algorithm, which reads:

Algorithm 2

Binomial synchronous class level

1: Update time as tj+1 = tj + Δt.

2: For every class \(\ell \in [1,\ldots ,{{{{{{{\mathcal{C}}}}}}}}]\): Update the values of Pℓ(1, Δt), Pℓ(0, Δt), using Eq. (8).

3: For every class \(\ell \in [1,\ldots , {{{{{{{\mathcal{C}}}}}}}}]\): Update the number of agents as nℓ → nℓ + Δnℓ,0 − Δnℓ,1, where Δnℓ,0 and Δnℓ,1 are values of binomial random variables distributed according to B(Nℓ − nℓ, Pℓ(0, Δt)) and B(nℓ, Pℓ(1, Δt)), respectively.

4: go to 1.

A similar reasoning can be built departing from the knowledge that the number n of occurrences of continuous-time independent processes with constant rates follows a Poisson distribution36, namely e−ΛΛn/n!, being the parameter Λ of the Poisson distribution equal to the product of the rate times the time interval considered. Therefore, the number of firings of each class in the time interval Δt, Δnℓ,1 and Δnℓ,0, can be approximated by Poisson random variables with parameters Wℓ(nℓ → nℓ − 1)Δt and Wℓ(nℓ → nℓ + 1)Δt, respectively. This conception gives rise to the τ-leaping algorithm39,44,56,60,61,62,63 used in the context of chemical modeling. Given that Poisson random variables are unbounded from above, the τ-leaping algorithm may yield negative values for the occupation numbers nℓ (see e.g. refs. 39,56). Consequently, our focus will be on the binomial method, which does not exhibit this drawback.

Results and discussion

The \(\frac{27}{4}\) rule

The major drawback of the binomial method to simulate trajectories is the necessity of finding a proper discretization time Δt that avoids both slow and inaccurate implementations. In this section, we propose a semi-empirical predictor for the values of the optimal choice of Δt that propitiates the smallest computation time for a fixed desired accuracy. Moreover, we will present a rule to discern whether an unbiased continuous-time algorithm or the discrete-time binomial method is more suitable for the required task.

Consider that we are interested in computing the average value 〈Z〉 of a random variable Z that depends on the stochastic trajectory in a time interval [0, T]. For example, Z could be the number of nuclei for the process defined in Eq. (12) at a particular time t ∈ [0, T]. We remark that 〈Z〉 could also stand for the central second moment of some random variable, thus accounting for fluctuations around some mean value. Also, the average 〈Z〉 could represent probabilities if Z is chosen to be an indicator function (see e.g.64).

The standard approach to compute 〈Z〉 numerically generates M independent realizations of the stochastic trajectories and measures the random variable Z(i) in each trajectory i = 1, …, M. The average value 〈Z〉 is then approximated by the sample mean

Note that ZM itself should be considered a random variable as its value changes from a set of M realizations to another.

For an unbiased method, such as Gillespie, the only error ε in the estimation of 〈Z〉 by ZM is of statistical nature and can be computed from the standard deviation of ZM, namely

The quantification of the importance of the error, for sufficiently large M, follows from the central limit theorem50,64 using the confidence intervals of a normal distribution:

It is in this sense, that one says that the standard error ε is the precision of the estimation and writes accordingly

Note that, according to Eq. (27), for an unbiased method the error in the estimation of the sample mean ZM tends to zero in the limit M → ∞.

For a biased method, such as the binomial, that uses a finite discretization time Δt and generates MB independent trajectories, the precision is altered by a factor that does not tend to zero in the limit MB → ∞. Based on the result found in the simple birth and death example of the previous section, let us assume for now that this factor scales linearly with the discretization time Δt and can be written as λΔt where λ is a constant depending on the model. We will corroborate this linear assumption for the binomial method both with calculations and numerical simulations in the next section, and we refer to Supplementary Note 1 for a more general discussion in the case of a method with a possible non-linear dependence. Then we can write the estimator using the binomial method as

where \({\varepsilon }_{B}=\frac{\sigma }{\sqrt{{M}_{B}}}\) and \({Z}_{{M}_{B}}\) is the sample average, Eq. (26), using MB realizations. The maximum absolute error of the biased method is then ∣λ∣Δt + εB. Due to the presence of a bias term in the error, the only way that the precision of the binomial method can equal the one of an unbiased approach is by increasing the number of realizations MB compared to the number of realizations M of the unbiased method. Matching the values of the errors of the unbiased and the biased methods, we arrive at the condition that the required number of steps of the biased method is

and the additional requirement \(\Delta t < \frac{\varepsilon }{| \lambda | }\) (otherwise the bias is so large that it can not be compensated by the increase in the number of realizations MB).

What a practitioner needs is to compare the CPU times that the biased and unbiased methods require to achieve the same accuracy ε. For the biased method with a fixed time step Δt, the CPU time \({t}_{B}^{{{{{{{{\rm{(CPU)}}}}}}}}}\) needed to generate one stochastic trajectory is proportional to the number of steps, \(\frac{T}{\Delta t}\), needed to reach the final time T and can be written as \({C}_{B}\frac{T}{\Delta t}\), where CB is the CPU time needed to execute one iteration of the binomial method. Hence the total time required to generate MB trajectories is

(Note that in massive parallel architectures, it might be possible to obtain a sub-linear dependence of the total time with the number of realizations M. This possibility is discussed in Supplementary Note 1.)

The discretization time associated with a minimum value of the CPU time consumption and subject to the constraint of fixed precision is obtained by inserting Eq. (31) in Eq. (32) and minimizing for Δt (see Supplementary Note 1). The optimal time reads:

Inserting the equation for the optimal Δt in Eq. (31), one obtains

which does not depend of λ or other parameters. Eqs. (33) and (34) have major practical use, since they tell us how to choose Δtopt and \({M}_{B}^{{{{{{{{\rm{opt}}}}}}}}}\) to use the binomial method to reach the desired precision ε and with minimum CPU time usage.

Still, one important question remains. Provided that we use the optimal pair (\({M}_{B}^{{{{{{{{\rm{opt}}}}}}}}}\), Δtopt), is the binomial method faster than an unbiased approach? In order to answer this question we first obtain the expected CPU time of the binomial method with the optimal choice inserting Eqs.(33) and (34) in Eq. (32):

On the other hand, the CPU time needed to generate one trajectory using the unbiased method is proportional to the maximum time T, and the total CPU time to generate M trajectories is \({t}_{U}^{{{{{{{{\rm{(CPU)}}}}}}}}}={C}_{U}M\,T\), where CU is a constant depending on the unbiased method used. The expected ratio between the optimal CPU time consumption with the binomial method an the unbiased approach is

Equaion (36) defines what we called the \(\frac{27}{4}\) rule, and its usefulness lies in the ability to indicate in which situations the binomial method is more efficient than the unbiased procedure (when α < 1). Also from Eq. (36) we note that unbiased methods become the preferred option as the expected precision is increased, i.e. when ε is reduced. We note that there is a threshold value \({\varepsilon }_{{{{{{{{\rm{TH}}}}}}}}}=\frac{27}{4}\frac{| \lambda | {C}_{B}}{{C}_{U}}\) for which both the unbiased and binomial methods are equally efficient.

Eqs. (33), (34) and (36) conform the main result of this work. These three equations (i) fix the free parameters of the binomial method (Δt and MB) in order to compute averages with fixed precision ε at minimum CPU time usage, and (ii) inform us if the binomial method is more efficient than the unbiased method. The use of these equations require the estimation of four quantities: σ, CU, λ, and CB, which can be computed numerically with limited efforts. While σ and λ rely solely on the process and approximation, hence are expected to remain constant across different machines, both CU and CB depend on the machine, but also on the programming language and the user’s ability to write efficient codes. The standard deviation σ depends only on the random variable Z and has to be computed anyway in order to have a faithful estimate of the errors. As we will show in the examples of section Numerical study, the constant λ can be obtained through extrapolation at high values of Δt (thus, very fast implementations). Finally, the constants CU and CB can be determined very accurately and at a little cost by measuring the CPU usage time of a few iterations with standard clock routines. Furthermore, in Supplementary Note 2, we provide a detailed discussion on the estimation of CU without the need to implement any unbiased method. This approach offers a practical means to determine the value of CU while avoiding the complexities associated with unbiased methods.

We can also work with alternative rules that fix the relative error, defined to as

instead of the absolute error ε. To do so, we consider that the difference 〈Z〉 − ZM is of order ε and replace 〈Z〉 by a rough estimation ZM. Then, we can replace in Eqs. (33), (34) and (36)

where we note that the errors of using Eq. (38) instead of Eq. (37) are of order \({\left(\varepsilon /{Z}_{M}\right)}^{2}\). Therefore, working with relative errors result in implicit rules, in the sense that one has to make a rough estimation of the quantity that we aim to estimate (i.e. 〈Z〉).

In the analysis of errors, the number of agents N plays a crucial role due to its significant impact on the magnitude of fluctuations. For instance, when estimating average densities of individuals, and when the central limit theorem applies, the standard error scales as \(\sigma \sim 1/\sqrt{N}\)36. The average time Δt between updates in unbiased methods is expected to be inversely proportional to N (see Supplementary Note 2). Therefore, we expect CU ~ N. Since λ is a difference between biased and unbiased estimations, it will have the same scaling with N as the quantity 〈Z〉 (see Supplementary Note 3). The constant CB depends crucially on the method used to sample binomial random variables, and in some cases is independent of N, as discussed in Supplementary Note 4. Therefore, when estimating average densities, we anticipate α to decrease with increasing system size, as

making the use of biased methods more suitable as the system size grows.

Numerical study

In this section, we want to compare the performance of the Gillespie algorithm (in representation of the unbiased strategies) and the binomial method (in representation of unbiased synchronous methods). Also, we show the applicability of the rules derived in last section to fix the optimal values of Δt and MB, and decide whether the biased or unbiased method is faster. We will do so in the context of the SIS model with all-to-all connections and a more complex SEIR model with meta-population structure.

All-to-all SIS model

We study in this section the all-to-all connectivity, where every agent is connected to all others and have the same values of the transition rates. In the particular context of the SIS process, these rates read :

Where μ represents the rate at which infected individuals recover from the disease and β is the rate at which susceptible individuals contract the disease from an infected contact. The transition rates at the macroscopic description are also easily read from the macroscopic variable itself. From Eq. (5):

The main outcome of this all-to-all setting is well known and can easily be derived from the mean-field equation for the average number of infected individuals65,

and indicates that for R0 ≔ β/μ > 1 there is an “active” phase with a non-zero stable steady-sate value 〈n〉st = (1 − μ/β)N, whereas for R0 < 1 the stable state is the “epidemic-free” phase 〈n〉st = 0 where the number of infected individuals tends to zero with time.

In order to draw trajectories of this process with the binomial method we use Algorithm 2 with a single class containing all agents, Nℓ = N, nℓ = n. The probability to use in the binomial distributions is extracted from the individual rates of Eq. (40):

We note that the probability P(0, Δt) in Eq. (43) that a susceptible agent experiences a transition in a time Δt is an approximation of

Such approximation is a good representation of the original process when Δt is so small that n(t) can be considered as constant in \(\left[t,t+\Delta t\right]\). In any case, we checked both analytically (see Supplementary Note 3) and numerically [see Fig. 3a, b] that the errors of the method still scale linearly with the time discretization, as pointed out in section The \(\frac{27}{4}\) rule.

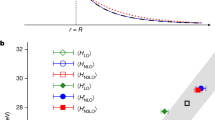

Panel a plots the average density \({\langle {x}_{t}\rangle }_{B}:= \frac{{\langle {n}_{t}\rangle }_{B}}{N}\) of infected individuals of the all-to-all SIS model at time t = 20 obtained using the binomial method for different values of the discretization step Δt. The number of realizations is MB = 100, and other parameter values are β = 4, μ = 1, N = 103, n(t = 0) = 10. The statistical error bars are smaller than the symbol size. In accordance with Eq. (26), we find that the average \({\langle {x}_{t}\rangle }_{B}\) follows a linear dependence at small Δt with slope λ = − 0.25(1). The horizontal dashed line is the extrapolation at Δt = 0 of 〈x〉B obtained from the linear fit (continuous line). In panel b we plot for the same case, the relative error \({\varepsilon }_{r}:= | \frac{{\langle {n}_{t}\rangle }_{B}}{\langle {n}_{t}\rangle }-1 |\), using a very accurate value of \(\frac{\langle {n}_{t}\rangle }{N}=0.7497\) obtained with the so-called Gaussian approximation75, corroborating the linear dependence with the discretization step (dashed line of slope 0.25 × N/〈nt〉 = 0.33).

Now, let us illustrate the relevance of choosing an appropriate discretization Δt for the binomial method. First we look for a condition on Δt that ensures that Eq. (44) can be properly approximated by Eq. (43). Since the average time between updates at the non-zero fixed point \(W{({n}_{{{{{{{{\rm{st}}}}}}}}})}^{-1}={[2\mu (1-\mu /\beta )N]}^{-1}\), a heuristic sufficient condition to ensure proper integration is to fix Δt ∝ 1/N. In Fig. 4a, it is shown that this sufficient condition indeed generates a precise integration of the process. Also in Fig. 4a we can see that this is in contrast with the use of Δt = 1, which provides a poor representation of the process (as claimed in40). However, regarding the CPU-time consumption, the sufficient option performs poorly [Fig. 4b]. Therefore, a proper balance between precision and CPU time consumption requires to fine tune the parameter Δt. This situation highlights the relevance of the rule derived in section The \(\frac{27}{4}\) rule to choose Δt and discern if the binomial method is advantageous with respect to the unbiased counterparts.

We plot in panel a the average density \({\langle {x}_{t}\rangle }_{B}:= \frac{{\langle {n}_{t}\rangle }_{B}}{N}\) of infected individuals of the all-to-all SIS model at time t = 20 obtained using the binomial method as a function of \({{{{{{{{\mathcal{R}}}}}}}}}_{0}=\beta /\mu\) for different discretization times Δt. We take n(t = 0) = 10, μ = 1.0, N = 103, and MB = 100. Statistical error bars are smaller than the symbol size. The estimations of the average agree within errors for Δt = 10−3 and Δt = 10−2. However, discrepancies are found for bigger values of Δt, for which the systematic errors are bigger than the statistical errors. Thus, the analysis of systematic errors should be taken into account to produce results with fixed desired precision. In panel b, we plot the average CPU time (in seconds) per realization which, according to Eq. (32) scales as 1/Δt. This figure evidences the need of a fine tuning of Δt in order to avoid slow and imprecise calculations.

In Fig. 5a, we show the agreement of Eqs. (36) and (39) with results from simulations. In this figure, the discretization step Δt and number of realizations for the binomial method MB have been optimally chosen according to Eqs. (33) and (34). This figure informs us that the binomial method is more efficient than an unbiased Gillespie algorithm counterpart for a system of size N = 103 when the target error is large, namely for ε > 3 ⋅ 10−3, whereas the unbiased method should be the preferred choice for dealing with high precision estimators. In Fig. 5b we fix the precision and vary the system size N to check that α is inversely proportional to N [Eq. (39)]. Thus, the efficiency of biased methods tends to overcome unbiased approaches as the system size grows. Both in Fig. 5a, b, we show that it is possible to use estimations of CU without actually having to implement the unbiased method (see Supplementary Note 2). This finding highlights the possibility of achieving accurate results while avoiding the complexities associated with implementing biased methods. It is relevant for the application of the \(\frac{27}{4}\) rule that CPU time consumption is not highly dependent on \({{{{{{{{\mathcal{R}}}}}}}}}_{0}\) (as demonstrated in Fig. 3b). Therefore, the efficiency study can be conducted at fixed \({{{{{{{{\mathcal{R}}}}}}}}}_{0}\) values.

We plot in panel a the ratio between the CPU times of the binomial and the Gillespie algorithms applied to the simulation of an all-to-all SIS model with parameter values T = 20, μ = 1, β = 4, N = 103, and n(t = 0) = 10 as a function of the target error ε. The dots are the results of the numerical simulations using the binomial method with the optimal values of the discretization step Δtopt and number of realizations \({M}_{B}^{\,{{\mbox{opt}}}\,}\) as given by Eqs. (33) and (34), while the number of trajectories in the Gillespie algorithm was computed from Eq. (27). The solid line is Eq. (36), using the values obtained from the simulations: λ = − 0.25, CU = 7 ⋅ 10−3 s, CB = 2 ⋅ 10−6 s. With triangles we represent results from the use of Eq. (36) with the estimation of CU explained in Supplementary Note 2. The dashed horizontal line at α = 1 signals where the unbiased and biased methods are equally efficient and it crosses the data at \({\varepsilon }_{{}_{TH}}=\frac{27}{4}\frac{| \lambda | {C}_{B}}{{C}_{U}}=3\cdot 1{0}^{-3}\). In panel b we proceed similar to (a), but fix the precision, and vary N. Again, we fix Δt and MB to their optimal values using Eqs. (33) and (34) respectively, and plot results from simulations (dots), our prediction from Eq. (36) measuring λ, CU, and CB from simulations (solid line), and Eq. (36) using our theoretical estimation of CU (triangles) using Eq. B4. This plot is in agreement with the expected scaling of α from Eq. (39). See values of absolute CPU time consumption in Supplementary Note 5.

Meta-population SEIR model

Next, we show that our results hold in a more complex model involving meta-population connectivity and many-state agents. The meta-population framework consist on \({{{{{{{\mathcal{C}}}}}}}}\) sub-systems or classes, such that class \(\ell =1,\ldots ,{{{{{{{\mathcal{C}}}}}}}}\) contains a population of Nℓ individuals. Agents of different sub-populations are not connected and therefore cannot interact, whereas agents within the same population interact through an all-to-all scheme similar to the one used in section All-to-all SIS model. Individuals can diffuse through populations, thus infected individuals can move to foreign populations and susceptible individuals can contract the disease abroad. Diffusion is tuned by a mobility matrix m, being the element \({m}_{\ell ,{\ell }^{{\prime} }}\) the rate at which individuals from population ℓ travel to population \({\ell }^{{\prime} }\). Therefore, to fully specify the state of agent i we need to give its state si and the sub-population ℓi it belongs to at a given time. Regarding the macroscopic description of the system, the inhabitants of a population can fluctuate and therefore it is needed to keep track of all the numbers Nℓ as well as the occupation numbers nℓ.

In this case we examine the SEIR paradigmatic epidemic model where agents can exist in one of four possible states: susceptible, exposed, infected, or recovered (see e.g.44). The exposed and recovered compartments are new additions compared to the SIS model discussed in the previous section. These compartments represent individuals who have been exposed to the disease but are not yet infectious, and individuals who are immune to the disease respectively. The rates of all processes at the sub-population level are:

where Sℓ, Eℓ, Iℓ, and Rℓ denote the number of susceptible, exposed, infected, and recovered individuals in population ℓ, respectively.

If we assume homogeneous diffusion, the elements of the mobility matrix are \({m}_{\ell ,{\ell }^{{\prime} }}=m\) if there is a connection between subpopulations ℓ and \({\ell }^{{\prime} }\) and \({m}_{\ell ,{\ell }^{{\prime} }}=0\) otherwise. Also if the initial population distribution is homogeneous, Nℓ(t = 0) = N0, ∀ ℓ, then the total exit rate reads:

which can be expressed as a function of the occupation variables {Sℓ, Eℓ, Iℓ, Nℓ}. In this case, the average time between mobility-events, \({[m{{{{{{{\mathcal{C}}}}}}}}{N}_{0}]}^{-1}\), is constant and inversely proportional to the total number of agents \({{{{{{{\mathcal{C}}}}}}}}{N}_{0}\). This makes simulating meta-population models with unbiased methods computationally expensive, as a significant portion of CPU time is devoted to simulating mobility events. The binomial method is, therefore, the preferred strategy to deal with this kind of process (see Supplementary Note 6 for details on how to apply the binomial method to meta-population models66). However, one has to bear in mind that the proper use of the binomial method requires supervising the proper value of Δt that generates a faithful description of the process at affordable times.

In Fig. 6 we also check the applicability of the rules derived in section The \(\frac{27}{4}\) rule, this time in the context of metapopulation models. As in the case of all-to-all interactions, the preferential use of the binomial method is conditioned to the desired precision for the estimator. Indeed, unbiased methods become more convenient as the target errors decrease.

Similar to Fig. 5 for the case of the meta-population SEIR model with parameter values t = 7.5, γ = 1, μ = 1, β = 4. There are \({{{{{{{\mathcal{C}}}}}}}}=100\) subpopulations arranged in a square 10 × 10 lattice such that each subpopulation is connected to 4 nearest neighbors (we assume periodic boundary conditions); each subpopulation contains initially Nℓ(t = 0) = 103 agents, ∀ ℓ. At time zero the state of the system is I1(0) = 10, Iℓ(0) = 0 ∀ ℓ ≠ 1, Eℓ(0) = 0, Rℓ(0) = 0, Sℓ(0) = N0 − Iℓ ∀ ℓ. We have set the mobility among neighboring subpopulations to a constant value m = 10. The discretization step and the number of trajectories of the binomial method take the optimal values of Eqs. (33) and (34), while the number of trajectories in the Gillespie algorithm was computed from Eq. (27). The required constants measured from the simulations are λ = 0.045, CU = 0.12 s, CB = 1.2 ⋅ 10−4 s. The dashed horizontal line at α = 1 signals where the Gillespie and binomial methods are equally efficient and it crosses the data at \({\varepsilon }_{{}_{TH}}=\frac{27}{4}\frac{| \lambda | {C}_{B}}{{C}_{U}}\approx 3\cdot 1{0}^{-4}\). The continuous line is the theoretical prediction Eq. (36), while circles are results from simulations. Squares and triangles are estimations of α that avoid making simulations of the original process. Squares where obtained through simulations of the all-to-all process. Triangles also use the all-to-all process plus the estimation of CU using deterministic mean-field equations as outlined in Supplementary Note 2. We note that the values of α are in general agreement across theory, simulations and approximations. See fit for λ in Fig. S5 of the Supplementary Note 5.

Efficient calculation of C U and C B

In principle, the use of Eq. (36) requires the implementation of both the unbiased and biased methods to estimate the constants CU and CB. It would be preferable to devise rules that do not require both implementations, as they can become cumbersome for complex processes with numerous reactions. To address this issue, we propose two approximations to Eq. (36). The first approximation consists of conducting the efficiency analysis on a simpler all-to-all system rather than on the meta-population structure, as outlined in Supplementary Note 2. Our second proposal entirely avoids the implementation of the unbiased method, opting instead for the mean-field estimation of CU as also described in Supplementary Note 2. In Fig. 6, we also illustrate the concurrence between these two approximations and the direct application of Eq. (36). Overall, Fig. 6 shows the advantage of using the binomial method for low precision. Compared to the case of the all-to-all interactions of section All-to-all SIS model, the required CPU-time of the Gillespie method is very large, making it computationally very expensive to use. Therefore, this situation exemplifies the superiority of the binomial method with optimal choices for the discretization times and number of realizations when dealing with complex processes.

Final implementation

Summing up, we propose the following steps to use the results of section The \(\frac{27}{4}\) rule.

Algorithm 3

Rule 27/4

1: Estimate a target quantity, 〈Z〉, using the biased method with several (large) values of Δt. Plotting the estimations versus Δt, compute λ as the slope of the linear fit [see Fig. 3a and Fig. S6 of Supplementary Note 5 for examples].

2: Estimate CU and CB on simple all-to-all process. Alternatively, estimate CU using deterministic mean-field calculations as in Supplementary Note 2. Estimations can be done at a small system size Ns, then CU at target system size N is recovered through CU(N) = CU(Ns)N/Ns.

3: Use Eq. (36) to discern whether the unbiased (for α > 1) or biased (for α < 1) approach are the most efficient option.

4: If the biased method is the preferred option, then use Eqs. (33) and (34) to fix the discretization time and number of realizations respectively.

Conclusion

This work provides useful insight into the existing debate regarding the use of the binomial approximation to sample stochastic trajectories. The discretization time of the binomial method needs to be chosen carefully since large values can result in errors beyond the desired precision, while low values can produce extremely inefficient simulations. A proper balance between precision and CPU time consumption is necessary to fully exploit the potential of this approximation and make it useful.

We have demonstrated, through both numerical and analytical evidence, that the systematic errors of the binomial method scale linearly with the discretization time. Using this result, we can establish a rule for selecting the optimal discretization time and number of simulations required to estimate averages with a fixed precision while minimizing CPU time consumption. Furthermore, when comparing specific biased and unbiased implementations, we have derived a rule to identify the more efficient option.

It is not possible to determine whether the unbiased or biased approach is the best option in absolute terms. CPU time consumption varies depending on factors such as the programming language, the machine used for calculations, and the user’s coding proficiency. This variability is parametrized through the constants CU and CB in our theory. Nevertheless, we can make general statements independent of the implementation. Firstly, the advantage of using the binomial method depends on the target precision: the use of unbiased methods becomes more optimal as the target precision increases. Second, since CPU time scaling with the number of reactions depends on the method, biased methods tend to outperform unbiased methods as the complexity of the model increases.

The numerical study of our proposed rules signals that the ratio of CPU times between the unbiased and binomial methods are similar in both all-to-all and meta-population structures. This result facilitates the use of the rules in the latter case. Indeed, one can develop the study of efficiency in the all-to-all framework and then use the optimal values of the discretization time and number of realizations in the more complex case of meta-populations.

Our work contributes to the generation of trustworthy and fast stochastic simulations, crucial for many real-world applications. Future work will focus on generalizing this approach to deal with adaptive discretizations and address cases involving non-Poissonian processes (see e.g. ref. 67), where unbiased algorithms are challenging to implement.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Code availability

References

Britton, T. Stochastic epidemic models: a survey. Math. Biosci. 225, 24–35 (2010).

Allen, L. J. An introduction to stochastic epidemic models, in Mathematical epidemiology (eds Brauer, F., van den Driessche, P. & Wu, J.) 81–130 (Springer, 2008).

Andersson, H. & Britton, T. Stochastic Epidemic Models and their Statistical Analysis Vol. 151 (Springer Science & Business Media, 2012).

Pastor-Satorras, R., Castellano, C., Van Mieghem, P. & Vespignani, A. Epidemic processes in complex networks. Rev. Mod. Phys. 87, 925 (2015).

Brauer, F. Mathematical epidemiology: past, present, and future. Infectious Dis. Modelling 2, 113–127 (2017).

Eubank, S. et al. Modelling disease outbreaks in realistic urban social networks. Nature 429, 180–184 (2004).

Ferguson, N. M. et al. Strategies for containing an emerging influenza pandemic in Southeast Asia. Nature 437, 209–214 (2005).

Longini Jr, I. M. et al. Containing pandemic influenza at the source. Science 309, 1083–1087 (2005).

Germann, T. C., Kadau, K., Longini Jr, I. M. & Macken, C. A. Mitigation strategies for pandemic influenza in the united states. Proc. Natl Acad. Sci. 103, 5935–5940 (2006).

Ciofi degli Atti, M. L. et al. Mitigation measures for pandemic influenza in Italy: an individual based model considering different scenarios. PLoS ONE 3, e1790 (2008).

Merler, S. & Ajelli, M. The role of population heterogeneity and human mobility in the spread of pandemic influenza. Proc. Roy. Soc. B: Biol. Sci. 277, 557–565 (2010).

Aleta, A. et al. Modelling the impact of testing, contact tracing and household quarantine on second waves of COVID-19. Nature Hum. Behaviour 4, 964–971 (2020).

Sattenspiel, L. & Dietz, K. A structured epidemic model incorporating geographic mobility among regions. Math. Biosci. 128, 71–91 (1995).

Colizza, V., Barrat, A., Barthélemy, M. & Vespignani, A. The role of the airline transportation network in the prediction and predictability of global epidemics. Proc. Natl Acad. Sci. 103, 2015–2020 (2006).

Colizza, V., Barrat, A., Barthelemy, M., Valleron, A.-J. & Vespignani, A. Modeling the worldwide spread of pandemic influenza: baseline case and containment interventions. PLOS Med. 4 (2007).

Balcan, D. et al. M. Proc. Natl Acad. Sci. 106, 21484–21489 (2009).

Balcan, D. et al. Modeling the spatial spread of infectious diseases: The GLobal Epidemic and Mobility computational model. J. Comput. Sci. 1, 132–145 (2010).

Balcan, D. & Vespignani, A. Phase transitions in contagion processes mediated by recurrent mobility patterns. Nat. Phys. 7, 581–586 (2011).

Merler, S. et al. Spatiotemporal spread of the 2014 outbreak of Ebola virus disease in Liberia and the effectiveness of non-pharmaceutical interventions: a computational modeling analysis. Lancet Infectious Dis. 15, 204–211 (2015).

Zhang, Q. et al. Spread of Zika virus in the Americas. Proc. Natl Acad. Sci. USA 114, E4334–E4343 (2017).

Gómez-Gardeñes, J., Soriano-Panos, D. & Arenas, A. Critical regimes driven by recurrent mobility patterns of reaction–diffusion processes in networks. Nat. Phys. 14, 391–395 (2018).

Gilbert, M. et al. Preparedness and vulnerability of African countries against importations of COVID-19: a modeling study. Lancet 395, 871–877 (2020).

Chinazzi, M. et al. The effect of travel restrictions on the spread of the 2019 novel coronavirus (COVID-19) outbreak. Science 368, 395–400 (2020).

Arenas, A. et al. Modeling the spatiotemporal epidemic spreading of COVID-19 and the impact of mobility and social distancing interventions. Phys. Rev. X 10, 041055 (2020).

Aguilar, J. et al. Impact of urban structure on infectious disease spreading. Sci. Rep. 12, 1–13 (2022).

Argun, A., Callegari, A. & Volpe, G. Simulation of Complex Systems (IOP Publishing, 2021).

Thurner, S., Hanel, R. & Klimek, P. Introduction to the Theory of Complex Systems (Oxford University Press, 2018).

Tranquillo, J. V. An Introduction to Complex Systems (Springer, 2019).

Odum, E. P. & Barrett, G. W. Fundamentals of Ecology Vol. 3 (Saunders Philadelphia, 1971).

Balaban, N. Q., Merrin, J., Chait, R., Kowalik, L. & Leibler, S. Bacterial persistence as a phenotypic switch. Science 305, 1622–1625 (2004).

Elowitz, M. B., Levine, A. J., Siggia, E. D. & Swain, P. S. Stochastic gene expression in a single cell. Science 297, 1183–1186 (2002).

Kiviet, D. J. et al. Stochasticity of metabolism and growth at the single-cell level. Nature 514, 376–379 (2014).

Rolski, T., Schmidli, H., Schmidt, V. & Teugels, J. L. Stochastic Processes for Insurance and Finance (John Wiley & Sons, 2009).

Sornette, D. Critical market crashes. Phys. Rep. 378, 1–98 (2003).

Baccelli, F. & Błaszczyszyn, B. et al. Stochastic geometry and wireless networks: Volume II Applications. Foundations Trends Networking 4, 1–312 (2010).

van Kampen, N. Stochastic Processes in Physics and Chemistry (Elsevier Science Publishers, 1992).

Milz, S. & Modi, K. Quantum stochastic processes and quantum non-Markovian phenomena. PRX Quantum 2, 030201 (2021).

Ramaswamy, S. The mechanics and statistics of active matter. Annu. Rev. Condens. Matter Phys. 1, 323–345 (2010).

Gillespie, D. T. Stochastic simulation of chemical kinetics. Annu. Rev. Phys. Chem. 58, 35–55 (2007).

Fennell, P. G., Melnik, S. & Gleeson, J. P. Limitations of discrete-time approaches to continuous-time contagion dynamics. Physi. Rev. E 94, 052125 (2016).

Gómez, S., Gómez-Gardenes, J., Moreno, Y. & Arenas, A. Nonperturbative heterogeneous mean-field approach to epidemic spreading in complex networks. Physical Review E 84, 036105 (2011).

Leff, P. The two-state model of receptor activation. Trends Pharmacol. Sci. 16, 89–97 (1995).

Brush, S. G. History of the Lenz-Ising model. Rev. Mod. Phys. 39, 883 (1967).

Keeling, M. J. & Rohani, P. Modeling Infectious Diseases in Humans and Animals (Princeton University Press, 2011).

Fernández-Gracia, J., Suchecki, K., Ramasco, J. J., San Miguel, M. & Eguíluz, V. M. Is the voter model a model for voters? Phys. Rev. Lett. 112, 158701 (2014).

Lee, B. & Graziano, G. A two-state model of hydrophobic hydration that produces compensating enthalpy and entropy changes. J. Am. Chem. Soc. 118, 5163–5168 (1996).

Huang, H. W. Action of antimicrobial peptides: two-state model. Biochemistry 39, 8347–8352 (2000).

Bridges, T. M. & Lindsley, C. W. G-protein-coupled receptors: from classical modes of modulation to allosteric mechanisms. ACS Chem. Biol. 3, 530–541 (2008).

Gillespie, D. T. A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 22, 403–434 (1976).

Toral, R. & Colet, P. Stochastic Numerical Methods: an Introduction for Students and Scientists (John Wiley & Sons, 2014).

Cota, W. & Ferreira, S. C. Optimized Gillespie algorithms for the simulation of Markovian epidemic processes on large and heterogeneous networks. Comput. Phys. Commun. 219, 303–312 (2017).

Colizza, V. & Vespignani, A. Epidemic modeling in metapopulation systems with heterogeneous coupling pattern: theory and simulations. J. Theoret. Biol. 251, 450–467 (2008).

Tailleur, J. & Lecomte, V. Simulation of large deviation functions using population dynamics. AIP Conf. Proc. Vol. 1091, 212–219 (2009).

Masuda, N. & Vestergaard, C. L. Gillespie algorithms for stochastic multiagent dynamics in populations and networks. In Elements in Structure and Dynamics of Complex Networks, (ed Guido Caldarelli) (Cambridge University Press, 2022).

Colizza, V., Pastor-Satorras, R. & Vespignani, A. Reaction–diffusion processes and metapopulation models in heterogeneous networks. Nat. Phys. 3, 276–282 (2007).

Goutsias, J. & Jenkinson, G. Markovian dynamics on complex reaction networks. Phys. Rep. 529, 199–264 (2013).

Allen, L. J. Some discrete-time SI, SIR, and SIS epidemic models. Math. Biosci. 124, 83–105 (1994).

Leier, A., Marquez-Lago, T. T. & Burrage, K. Generalized binomial τ-leap method for biochemical kinetics incorporating both delay and intrinsic noise. J. Chem. Phys. 128 (2008).

Peng, X., Zhou, W. & Wang, Y. Efficient binomial leap method for simulating chemical kinetics. J. Chem. Phys. 126 (2007).

Cao, Y., Gillespie, D. T. & Petzold, L. R. Efficient step size selection for the tau-leaping simulation method. J. Chem. Phys. 124 (2006).

Cao, Y. & Samuels, D. C. Discrete stochastic simulation methods for chemically reacting systems. Methods Enzymol. 454, 115–140 (2009).

Lecca, P. Stochastic chemical kinetics: a review of the modelling and simulation approaches. Biophys. Rev. 5, 323–345 (2013).

Rathinam, M., Petzold, L. R., Cao, Y. & Gillespie, D. T. Consistency and stability of tau-leaping schemes for chemical reaction systems. Multiscale Modeling Simul. 4, 867–895 (2005).

Asmussen, S. & Glynn, P. W. Stochastic Simulation: Algorithms and Analysis Vol. 57 (Springer, 2007).

Marro, J. & Dickman, R. Nonequilibrium Phase Transitions in Lattice Models. Collection Alea-Saclay: Monographs and Texts in Statistical Physics (Cambridge University Press, 1999).

Chandler, R. & Northrop, P. The FORTRAN library RANDGEN http://www.homepages.ucl.ac.uk/~ucakarc/work/software/randgen.f. See documentation at https://www.ucl.ac.uk/~ucakarc/work/software/randgen.txt.

Ferguson, N. M. et al. Strategies for mitigating an influenza pandemic. Nature 442, 448–452 (2006).

Aguilar, J. GitHub repository. https://github.com/jvrglr/Biased-versus-unbiased-codes.git.

The FORTRAN library ranlib.f. https://www.netlib.org/random/.

Python’s numpy.random.binomial source code, as C function random_binomial: https://fossies.org/linux/numpy/numpy/random/src/distributions/distributions.c.

Kachitvichyanukul, V. & Schmeiser, B. W. Binomial random variate generation. Commun. ACM 31, 216–222 (1988).

Press, W. H., Flannery, B. P., Teukolsky, S. A. & Vetterling, W. T. Numerical Recipes FORTRAN 90: the Art of Scientific and Parallel Computing (Cambridge University Press, 1996).

Davis, C. S. The computer generation of multinomial random variates. Comput. Statistics Data Anal. 16, 205–217 (1993).

Fishman, G. S. Sampling from the binomial distribution on a computer. J. Am. Statistical Assoc. 74, 418–423 (1979).

Lafuerza, L. F. & Toral, R. On the Gaussian approximation for master equations. J. Statistical Phys. 140, 917–933 (2010).

Acknowledgements

The authors thank Sandro Meloni and Luis Irisarri for their useful comments. We also thank anonymous reviewers for valuable comments that have helped us to improve the presentation and discussion of our results. Partial financial support has been received from the Agencia Estatal de Investigación (AEI, MCI, Spain) MCIN/AEI/10.13039/501100011033 and Fondo Europeo de Desarrollo Regional (FEDER, UE) under Project APASOS (PID2021-122256NB-C21) and the María de Maeztu Program for units of Excellence in R&D, grant CEX2021-001164-M, and the Conselleria d’Educació, Universitat i Recerca of the Balearic Islands (grant FPI_006_2020), and the contract ForInDoc (GOIB).

Author information

Authors and Affiliations

Contributions

J.A., J.J.R., and R.T. conceived and designed the study. J.A. performed the simulations. J.A., J.J.R., and R.T. wrote the paper. All the authors read and approved the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aguilar, J., Ramasco, J.J. & Toral, R. Biased versus unbiased numerical methods for stochastic simulations. Commun Phys 7, 155 (2024). https://doi.org/10.1038/s42005-024-01648-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-024-01648-z

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.