Abstract

Reducing the impact of disruptions is essential to provide reliable and attractive public transport. In this work, we introduce a topological approach for evaluating recoverability, i.e., the ability of public transport networks to return to their original performance level after disruptions, which we model as topological perturbations. We assess recoverability properties in 42 graph representations of metro networks and relate these to various topological indicators. Graphs include infrastructure and service characteristics, accounting for in-vehicle travel time, waiting time, and transfers. Results show a high correlation between recoverability and topological indicators, suggesting that more efficient networks (in terms of the average number of hops and the travel time between nodes) and denser networks can better withstand disruptions. In comparison, larger networks that feature more redundancy can rebound faster to normal performance levels. The proposed methodology offers valuable insights for planners when designing new networks or enhancing the recoverability of existing ones.

Introduction

To fully understand the dynamics of connected complex systems, it is essential to comprehend the underlying connectivity and interaction between their components. Many real-world complex systems can be modeled as complex networks, including biological epidemics, social interactions, energy and communication infrastructures, and transportation systems1,2. Network science has emerged as an effective tool to study the topology and dynamics of these complex networks3. In particular, public transport networks (PTNs) have been increasingly studied from a network science perspective, providing a topological explanation for different features observed in transport systems4. For PTNs to provide reliable and attractive services, it is crucial to reduce the impact of disruptions in both the design and operational planning phases. Given PTNs’ role as a critical infrastructure and the recurrence of service disruptions, a large body of research has been devoted to studying PTNs’ vulnerability and robustness, i.e., the extent to which systems can withstand disruptions5. Many works have studied the robustness of PTNs from a topological point of view, simulating random and/or targeted attacks on either nodes6,7,8,9 or links8,9,10,11,12. Overall, while there is a wealth of literature related to assessing and improving the robustness of PTNs, little is known about the topological aspects of the recovery process once these failures occur. Furthermore, past studies have often adopted a strictly topological perspective, disregarding public transport service aspects and the consequences of disruptions for passengers’ travel itineraries.

Next to a network’s ability to withstand disruptions, its ability to recover back to its original level of performance is paramount. Studying the recoverability of public transport networks can help to enhance their resilience in the face of natural disasters or human-made disruptions13, optimizing the response and recovery efforts by prioritizing critical components and resource allocation14,15. In these scenarios, timely recovery is essential for maintaining service quality, ensuring customer satisfaction, and minimizing economic impacts16. Moreover, incorporating recoverability assessment into long-term planning can lead to more resilient networks when designing or expanding public transport systems17,18. In this work, we study the notion of recoverability following a topological approach inspired by previous work in the context of optical networks19. We adapt this methodology for the topological assessment of recoverability in PTNs and apply it to a dataset of 42 metro networks worldwide that we constructed based on General Transit Feed Specification (GTFS) data. This dataset does not only consist of more networks than considered in related works but is also more insightful, since it is comprised of weighted network representations of both infrastructure and service layers labeled with in-vehicle travel time and waiting time, respectively, instead of the more prevalent unlabeled networks found in the literature8.

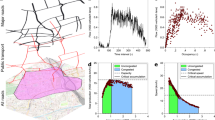

We model partial/complete failures by successively removing a random link from the network. Then, we use a simple greedy heuristic to recover the network to its original state. At each step of the recovery process, the greedy heuristic selects the link among those removed during failure that, if added, would render the largest performance improvement. We adopt a passenger-focused approach and define performance as the mean of the reciprocals of the generalized travel cost (GTC) between each pair of nodes in the network. The GTC accounts for initial and transfer waiting times, in-vehicle travel times, and a time-equivalent penalty cost per transfer between service lines. We measure the performance of the network throughout the failure/recovery process to assess the network’s ability to recover. Figure 1 illustrates the failure/recovery process using the Copenhagen metro network as an example, where strongly connected components are shown using different colors. Starting from the original network (a), a partial failure of 20 links is modeled, resulting in a heavily disconnected network with 15 strongly connected components (b). The greedy heuristic starts the recovery process by adding first the links that render the largest increase in performance. Despite its myopic view, the strategy is able to rapidly increase the size of the largest connected component from 6 to 21 by just adding three links back into the network (c). The recovery process continues by iteratively connecting smaller components to the largest connected component (d). The links connecting to end nodes are the last to be recovered since they yield a relatively lower increase in performance compared to more central nodes (e). At the end of the recovery process, the network is back in its original state (f).

Colors indicate strongly connected components. a original network. b partial failure of 20 links. c greedy recovery adds first the links that yield the largest increase in performance. d recovery successively connects smaller components to the largest connected component. e links connecting to end nodes are the last to be recovered. f the network is back in its original state at the end of the recovery process.

We define the retained performance ratio \({P}_{{G}_{i}}\) as the performance at step i of the failure/recovery process, normalized by the performance of the original network. Figure 2 shows the retained performance ratio at each step of the failure/recovery process for the Copenhagen example illustrated in Fig. 1. The cumulative performance loss during the failure process is given by the indicator F, which corresponds to the area above the retained performance curve during the failure process. Conversely, the cumulative performance gain during the recovery process is given by indicator R, which corresponds to the area under the performance ratio curve. We characterize the recoverability properties of a network based on the values of F, R, the ratio R/F, and the difference R − F. Intuitively, a network with good recoverability properties will consistently have low values of F and large values of R, R/F, and R − F. Besides studying these recoverability indicators on their own, we examine how they correlate with several topological characteristics of the network which account for its size, connectivity, efficiency, and hierarchical structure.

Results

Recoverability indicators

We simulate the failure/recovery process of each network in our dataset to assess its recoverability properties. Figure 3 shows the retained performance ratio (\({P}_{{G}_{i}}\)) at each step i of the failure/recovery process for different link-removal thresholds. Each curve in the plot corresponds to a single realization (i.e., a single independent failure/recovery process). We consider partial failures that remove 25%, 50%, and 75% of the links in the networks, as well as complete failures. Looking at the plots in Fig. 3, it is clear that the failure and recovery curves are not symmetrical, since the area over the recovery curves is much smaller than the area over the failure curves. This shows that the simple greedy recovery strategy is able to rebound quickly from the loss of performance during the failure process of the network. As expected, the variability in the retained performance ratio decreases when increasing the percentage of removed links. In particular, the greedy recovery process becomes deterministic in the case of removing all links and the only remaining variability corresponds to the different networks studied.

Figure 4 allows us to visually compare different networks in terms of their recoverability properties. Cities are color-coded based on their relative ranking (lower is better) on each of the four recoverability indicators in scenarios with varying percentages of removed links. The exact position of each city in the ranking is noted for the combined indicator R/F with complete link removal and is also displayed in Fig. 5, which shows a map depicting all studied PTNs and highlights the top and bottom three performing networks. The best values in terms of cumulative performance loss (F)—least relative loss of performance compared to normal operations—are achieved by relatively small networks, namely, Marseille, Lyon, and Warsaw (F ≤ 10.1 for 25% link removal and F ≤ 76.3 for 100% link removal). For the largest networks in the dataset, results show that London, Santiago, and Paris achieve better—lower—values of F than New York, Madrid, Berlin, and Chicago, across all removal thresholds. The worst-performing networks in terms of F are Oslo, Stockholm, and Rome where a significant loss in performance is observed already early on in the failure process (F ≥ 14.0 with 25% link removal and F ≥ 84.4 with 100% link removal). The Santiago network stands out in terms of the cumulative performance gain indicator (R), obtaining the best values across all removal thresholds (R = 19.9 with 25% links removed, R = 59.4 with 100% links removed). Along with Santiago, the networks of New York, London, Paris, Madrid, and Valencia are also consistently among the top-ranked networks in terms of the R indicator across all removal thresholds. Conversely, the worst-performing networks in terms of R include—as in the case of F—Oslo, Rome, and Stockholm (R ≤ 16.5 with 25% removal and R ≤ 41.8 with 100% removal), with the addition of Boston and Prague, which perform worse when removing a larger percentage of links. 
For the combined indicators (R/F and R − F), results show that the top five performing networks are: Santiago, London, New York, Paris, and Lyon (in that order); all relatively large networks with the exception of Lyon. Conversely, the worst-performing networks include Boston, Chicago, Rome, Stockholm, and Oslo; a combination of mid- to large-scale networks. Figure 4 also allows a comparison of how networks perform when varying the percentage of links removed/added in the failure/recovery process. For this purpose, we compute the Spearman correlation between the values obtained for each of the four recoverability indicators with different removal thresholds. Results show that the values of F are highly correlated (0.86 to 0.98) between different removal thresholds. Thus, networks perform consistently under partial and complete failure scenarios in terms of this indicator. Similar results were observed for the combined indicators R/F and R − F. In the case of the R indicator, however, we observe a moderately positive correlation, which decreases with larger differences in the percentage of removed links (0.66 to 0.89). This finding suggests that some networks may perform better in partial failure scenarios (with fewer links removed) and worse in complete failure scenarios (and vice versa) when considering this indicator.
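The rank-based comparison between removal thresholds can be illustrated with a minimal, standard-library sketch. It is not the code used in the study: the function names are hypothetical, and Spearman correlation is computed here as the Pearson correlation of average ranks.

```python
def ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            result[k] = mean_rank
        pos = end + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equally long sequences (non-zero variance assumed)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


# Made-up indicator values for four hypothetical networks at two thresholds.
indicator_low = [1.0, 4.0, 2.0, 3.0]
indicator_high = [10.0, 35.0, 30.0, 20.0]
rho = spearman(indicator_low, indicator_high)
```

The same `pearson` helper corresponds to the plain (non-ranked) correlation that relates recoverability indicators to topological indicators.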

Cities are color-coded based on their relative ranking (lower is better) on each of the four recoverability indicators (F, R, R/F, and R − F) in scenarios with varying percentages of removed links (25%, 50%, 75%, and 100% of the links in the network removed). The exact position of each city in the ranking is noted for the combined indicator R/F with 100% link removal.

Networks higher in the ranking (i.e., that perform better) are depicted in green while networks on the lower end of the ranking are depicted in red. The top and bottom three performing networks are highlighted and a visual representation of the network is included. Background map: Esri. “Dark Gray Canvas” [basemap]. Scale Not Given. Feb 2, 2024. https://www.arcgis.com/home/item.html?id=c11ce4f7801740b2905eb03ddc963ac8 (Accessed: Feb 29, 2024). Sources: Esri, TomTom, Garmin, FAO, NOAA, USGS, © OpenStreetMap contributors, and the GIS User Community.

Relation with topological indicators

Next, we study the correlation between the four recoverability indicators and relevant topological indicators. Figure 6 shows a heatmap of the Pearson correlation between topological and recoverability indicators for different removal thresholds. Indicator F shows a moderate to high positive correlation (0.39 to 0.57) with diameter (D) and average shortest path, both weighted and unweighted (correlations of 0.42 to 0.48 with \(\overline{\mathrm{SP}^{w}}\) and 0.60 to 0.70 with \(\overline{\mathrm{SP}}\)). This points to a relation between network efficiency in terms of hops and travel time and the impact of disruptions, with networks where passengers need to travel longer distances showing a larger cumulative performance loss. It is worth noting here the absence of correlation between F and the size of the graph both in terms of number of nodes (∣V∣) and links (∣E∣). The ability to withstand disruptions appears thus to be related to the efficiency of the connection between nodes, irrespective of the size of the network. An example of this can be seen when comparing Santiago with Oslo, Rome, or Stockholm. Despite being networks of comparable size, Santiago, which is more efficient than the rest in terms of average weighted shortest path, is able to withstand disruptions much better, as depicted in Fig. 4. Similarly, indicator F shows a moderate negative correlation (−0.37 to −0.65) with density (γ), suggesting that dense networks are able to withstand disruptions more effectively, achieving a smaller cumulative performance loss during the failure process. The correlation is stronger when increasing the percentage of links removed, suggesting that dense networks are particularly effective at coping with large disruptions in the system.

The four recoverability indicators are depicted (i.e., F, R, R/F and R − F). For each recoverability indicator the results with different link-removal thresholds are depicted (i.e., 25%, 50%, 75% and 100% of the links removed). The topological indicators considered are: number of nodes (∣V∣), number of links (∣E∣), diameter (D), density (γ), meshedness (α), average shortest path (\(\overline{\mathrm{SP}}\)), average weighted shortest path (\(\overline{\mathrm{SP}^{w}}\)), rate of the exponential distribution of node betweenness centrality unweighted (λ) and weighted (λw), and assortativity (r). Green values indicate a positive correlation while pink values indicate a negative correlation.

In terms of indicator R, we find a moderate to high positive correlation (0.70 to 0.78) with meshedness across all removal thresholds. This finding suggests that networks that feature more redundancy (i.e., networks with a greater prevalence of cycles) are able to rebound faster from the loss of performance. This can be observed when looking at the network rankings in Fig. 4 and the topological indicators in Table 1, where the top five performing networks in terms of indicator R have meshedness values of \(\alpha > 77.8 \times 10^{-3}\) while the bottom five performing networks have meshedness values of \(\alpha < 15.23 \times 10^{-3}\). In networks with high meshedness, the greedy strategy is able to take advantage of the existence of multiple alternative paths to connect two nodes and can recover those links that render a larger increase in performance early on in the recovery process. Similarly, indicator R is moderately to highly correlated with the size of the network in terms of nodes and links (0.53 to 0.60 with ∣V∣ and 0.56 to 0.64 with ∣E∣). This can be explained by the fact that the larger the network is, the larger the set of links the greedy strategy can choose from during the recovery process. Thus, in larger networks, the decision space for the greedy recovery strategy is larger and, as a result, there is more room for improvement in performance.

A moderate positive correlation with meshedness is found, as in the case of R, also for the combined recoverability indicators (R/F and R − F), albeit with lower values (0.52–0.70). The combined indicators also show a low positive correlation with the network size (0.23–0.41 with ∣V∣ and 0.27–0.46 with ∣E∣) and a moderate to low correlation with the average (weighted) shortest path, which decreases with larger removal thresholds.

Assortativity and the rate of the exponential distribution of node betweenness centrality, both unweighted (λ) and weighted (λw), show negligible to low correlation with all recoverability indicators across all removal thresholds. Thus, we cannot infer a strong relation between network hierarchy and recoverability.

We delve deeper into two of the identified correlations between recoverability and topological indicators by plotting scatter plots for two selected relations in Fig. 7. Results shown are for the scenario of complete link removal. Figure 7a shows the F indicator against the average shortest path (\(\overline{SP}\)). Lower—better—values of F are achieved by networks with lower average shortest path. The best values of F are achieved by networks among the smallest included in the dataset, which have naturally smaller values for \(\overline{SP}\). Among the largest networks in the dataset, London outperforms Paris and New York despite having a larger average shortest path and achieves an even better improvement in F compared to Berlin and Madrid, both of which have larger average shortest path. Figure 7b shows the relation between the R indicator and the meshedness (α) of the network. In this case, results show that larger values of meshedness lead to higher—better—values of R. The most outstanding case is Santiago: with a significantly higher meshedness value than the rest of the networks in our dataset, Santiago clearly outperforms all other networks in terms of the R indicator. Following Santiago, the networks of New York, London, Madrid, and Paris show large values for meshedness and good recoverability properties in terms of the R indicator.

Discussion

We presented a topological approach to measure recoverability in PTNs, i.e., the ability of networks to return to the original performance level in the aftermath of successive disruptions. The proposed methodology was applied to a dataset of 42 metro networks that was constructed for this purpose, which stands out from those found in previous literature not only for its larger size but also for incorporating service information that accounts for in-vehicle travel time and waiting time. We assess the recoverability of the networks in the dataset based on four simple indicators and relate them to several topological properties of the networks. Results show that a simple greedy recovery heuristic is able to rebound quickly from the loss of performance during the failure process. In relation to topology, results suggest that more efficient and denser networks are able to better withstand disruptions, while larger networks with more redundancy can rebound faster to the normal performance level during recovery. In practice, reducing the average in-vehicle travel time between nodes can improve the networks’ ability to withstand disruptions, while increasing the redundancy of the network (by incorporating alternative paths between pairs of nodes) can improve the network performance during the recovery phase.

The findings of this study can help planners in designing new networks with good recoverability properties as well as enhance the recoverability of existing ones. The proposed methodology is useful for both strategic and operational planning. As an example, long-term strategic planning involving the introduction of new lines or the extension of existing ones would result in a change in the topology of the network and, therefore, in its recoverability properties. In such cases, planners could use the proposed methodology to assess the recoverability properties of different network designs and incorporate this information into the decision-making process. In the context of real-time operational planning, since the methodology accounts for in-vehicle travel time and waiting time, even the impact of operational changes on the recoverability of the network can be assessed. For instance, an operator could assess different tentative timetables and select among them based on their impact on the recoverability properties of the network. Nonetheless, practitioners should bear in mind that the proposed approach studies the network from a strictly service topology point of view, without modeling details related to infrastructure features (e.g., rail junctions, passing loops, or extra tracks) which may affect the operations during failure/recovery.

We identify several lines of future research that arise from this work. Firstly, we plan on further expanding the dataset of networks and including other modes of transport. This ultimately depends on the availability and correctness of GTFS data, which is identified as a key bottleneck for building comprehensive datasets of PT network graph representations. Secondly, other failure and recoverability strategies could be devised including targeted node/link attacks and smarter recovery algorithms that extend the simple greedy heuristic presented in this work (e.g., incorporating information from the disruption into the recovery strategy). Thirdly, new performance metrics should be incorporated to account for other aspects of performance besides the generalized travel cost (GTC). Finally, we propose studying multi-modal PTNs, to look into the interplay of different modes in the event of failures and its impact on recoverability.

Methods

Networks dataset

A dataset consisting of 51 graph representations of metro networks was constructed based on General Transit Feed Specification (GTFS) data, the de facto standard for transit data. Multiple definitions exist to specify what constitutes a metro system. In this work, we consider those PTNs that fulfill the following criteria, as stated by the International Association of Public Transport20: i) located in an urban area; ii) exclusive right-of-way; iii) grade-separated; iv) high-frequency and high-capacity vehicles. GTFS data corresponding to each metro network were obtained mainly from database.mobilitydata.org and local transit agencies and operators’ websites. These data were initially processed using the gtfspy library21. We selected a representative day for each network dataset (i.e., a day with at least 90% as many vehicle trips as the day with the highest number of trips) and filtered out trips occurring between midnight and 5 a.m., when service levels are significantly reduced. Gtfspy provides an initial graph representation of the network, with nodes representing stops and links labeled with the average travel time and the number of vehicles per route that connect the two nodes.
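The day-selection and time-window filters described above can be sketched as follows. This is an illustrative fragment, not the gtfspy-based pipeline used in the study: the input dictionary, the function names, and the handling of GTFS times beyond 24:00:00 (wrapped to the clock time of the following day) are assumptions.

```python
def representative_days(trips_per_day, share=0.9):
    """Days with at least `share` times as many trips as the busiest day."""
    busiest = max(trips_per_day.values())
    return sorted(day for day, count in trips_per_day.items()
                  if count >= share * busiest)


def in_service_window(departure_seconds, start_hour=5):
    """True if a departure falls outside the midnight to 5 a.m. window.

    Departure times are seconds since midnight of the service day; values
    above 24 h are wrapped to the clock time (assumption).
    """
    return departure_seconds % 86400 >= start_hour * 3600


# Hypothetical trip counts per service day.
counts = {"2024-01-01": 100, "2024-01-02": 95, "2024-01-06": 60}
candidates = representative_days(counts)
```

The text does not state how a single day is picked when several days qualify, so the sketch returns all qualifying days.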

In most cases, the graph built by gtfspy for a given city has multiple nodes corresponding to the same physical station (e.g., one node per platform, different nodes for ground/elevated access). For the purpose of our study, we need to merge all nodes corresponding to the same station. We therefore merge all nodes within walking proximity where passengers are not required to check out of the transport system. This graph post-processing involves three steps and requires having the official metro map of the network at hand for validation purposes. First, nodes with identical names that are less than 200 meters apart are automatically merged. Then, stops whose names are 75% similar (according to Levenshtein distance) and that are less than 500 meters apart are candidates for merging, subject to manual confirmation. This step allows merging nodes that have different names for the same physical station (e.g., “Central Station Ground” and “Central Station Elevated”). Finally, a visual interface allows for the merging of arbitrary nodes that were not detected in the previous steps.
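The first two merging rules can be sketched as below. The similarity normalization (one minus the edit distance divided by the longer name length), the great-circle distance, and all names in the code are assumptions made for illustration; the text does not specify how the 75% similarity is computed, and other normalizations give different scores.

```python
import math


def levenshtein(a, b):
    """Edit distance with unit-cost insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def name_similarity(a, b):
    """Similarity in [0, 1]; normalizing by the longer name is an assumption."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters on a sphere of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlam = math.radians(lon2 - lon1)
    h = math.sin((p2 - p1) / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    return 2 * 6371000.0 * math.asin(math.sqrt(h))


def merge_decision(stop_a, stop_b):
    """Return 'auto', 'candidate' (needs manual confirmation), or 'none'."""
    dist = haversine_m(stop_a["lat"], stop_a["lon"], stop_b["lat"], stop_b["lon"])
    if stop_a["name"] == stop_b["name"] and dist < 200:
        return "auto"
    if name_similarity(stop_a["name"], stop_b["name"]) >= 0.75 and dist < 500:
        return "candidate"
    return "none"


# Hypothetical stops roughly 111 m apart (0.001 degrees of latitude).
platform_1 = {"name": "Central Station A", "lat": 52.000, "lon": 4.0}
platform_2 = {"name": "Central Station B", "lat": 52.001, "lon": 4.0}
decision = merge_decision(platform_1, platform_2)
```

The third step, manual merging through a visual interface, is not covered by the sketch.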

After this post-processing, several sanity checks were performed, resulting in two distinct graph representations: L-space (space of infrastructure) and P-space (space of service). In L-space, each node represents a stop, and two stops are connected with a link only if they are adjacent on a rail segment. In our analysis, the generated L-space is a directed graph where links are labeled with the average in-vehicle travel time. In P-space, nodes are stops and two stops are connected if they are served by at least one common route. Thus, the first-degree neighbors of a node in P-space represent the set of stops that can be reached without performing a transfer. In our analysis, P-space is a directed graph labeled with the average waiting time, which is approximated as half of the headway based on the joint service frequency of all direct services between the two respective stops (nodes). For an in-depth description of L-space and P-space we refer readers to the work by Luo et al.22. The curated set of metro networks is publicly available23,24. In this work, we filter out networks with fewer than 50 links, where studying recoverability becomes trivial. Consequently, we discard the nine smaller networks and consider only the remaining 42 networks for our study.
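To make the two representations concrete, the following sketch builds both from a list of directed routes. The input format (ordered stops, per-segment in-vehicle times in minutes, and vehicles per hour) and the function name are hypothetical. For brevity, a segment shared by several routes keeps the time of the last route processed rather than an average.

```python
from collections import defaultdict


def build_spaces(routes):
    """Return (l_space, p_space) as dicts keyed by directed stop pairs.

    l_space[(u, v)]: in-vehicle time between adjacent stops (minutes).
    p_space[(u, v)]: waiting time, half the headway of the joint
    frequency of all direct services from u to v (minutes).
    """
    l_space = {}
    joint_frequency = defaultdict(float)  # vehicles per hour per stop pair
    for route in routes:
        stops = route["stops"]
        for k in range(len(stops) - 1):
            l_space[(stops[k], stops[k + 1])] = route["times"][k]
        # Every stop reaches all later stops of the same route without transfer.
        for a in range(len(stops)):
            for b in range(a + 1, len(stops)):
                joint_frequency[(stops[a], stops[b])] += route["veh_per_hour"]
    p_space = {pair: 0.5 * 60.0 / freq for pair, freq in joint_frequency.items()}
    return l_space, p_space


# Two hypothetical one-directional routes sharing the segment B -> C.
routes = [
    {"stops": ["A", "B", "C"], "times": [3.0, 4.0], "veh_per_hour": 6.0},
    {"stops": ["B", "C", "D"], "times": [4.0, 2.0], "veh_per_hour": 6.0},
]
l_space, p_space = build_spaces(routes)
```

In this toy input, the pair B to C is served by 12 vehicles per hour jointly, so its waiting time is half of a 5-minute headway, while A to C is served by a single route with a 10-minute headway.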

Measuring recoverability of PTNs

This section outlines the methodology to characterize the recoverability properties of a metro network.

Network performance

The performance of a network in our analysis is characterized by its efficiency \(e:G(V,E)\to {\mathbb{R}}\), which is defined as the mean of the reciprocals of the Generalized Travel Cost (GTC) between each pair of nodes in a graph (Eq. (1)).
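Eq. (1) is not reproduced in this text. Read literally, the definition corresponds to \(e(G)=\frac{1}{|V|(|V|-1)}\sum_{i\ne j\in V}\frac{1}{\mathrm{GTC}(i,j)}\), where averaging over ordered node pairs is an assumption consistent with the directed graphs used here. A minimal sketch under that assumption, with a hypothetical cost callback:

```python
import math


def efficiency(nodes, gtc):
    """Mean of 1/GTC over ordered node pairs.

    `gtc(i, j)` must return a positive cost, or math.inf when j cannot be
    reached from i, in which case the pair contributes zero.
    """
    nodes = list(nodes)
    n = len(nodes)
    if n < 2:
        return 0.0
    total = sum(1.0 / gtc(i, j) for i in nodes for j in nodes if i != j)
    return total / (n * (n - 1))


# Hypothetical costs: only A and B are mutually reachable.
costs = {("A", "B"): 2.0, ("B", "A"): 2.0}
value = efficiency(["A", "B", "C"], lambda i, j: costs.get((i, j), math.inf))
```

Treating unreachable pairs as zero contributions is what keeps the measure well defined while the network is disconnected during the failure process.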

The GTC between pairs of stops accounts for initial and transfer waiting times, in-vehicle travel times, and a time-equivalent penalty cost for transfers. For a given pair of nodes i and j, we consider \(sp_1, \ldots, sp_m\), the m shortest paths in L-space, i.e., the shortest in terms of in-vehicle travel time. For those paths, we compute the sum of the waiting times for each leg in the path and the number of transfers, according to the labels and number of hops of the corresponding paths in P-space. The GTC is determined by the cost of the path that minimizes the weighted sum defined in Eq. (2) and GTC(i, j) = ∞ if no path connects stop i with stop j.
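Since Eq. (2) is not reproduced in this text, the sketch below assumes one plausible form of the weighted sum: in-vehicle time plus α times the total waiting time plus β per transfer, with the values α = 2 and β = 5 reported in the experimental design. Which parameter weighs which term is an assumption, as are the function name and the tuple layout of the candidate paths.

```python
import math


def generalized_travel_cost(candidates, alpha=2.0, beta=5.0):
    """Minimum weighted cost over the candidate paths of one node pair.

    Each candidate is (in_vehicle_time, waiting_time, transfers) for one of
    the m shortest L-space paths. The weighting is assumed, not taken from
    Eq. (2): cost = in_vehicle_time + alpha * waiting_time + beta * transfers.
    """
    if not candidates:
        return math.inf  # no path connects the two stops
    return min(ivt + alpha * wait + beta * transfers
               for ivt, wait, transfers in candidates)


# Hypothetical pair with a slower direct path and a faster path with one transfer.
cost = generalized_travel_cost([(20.0, 3.0, 0), (15.0, 4.0, 1)])
```

In this toy case, the direct path costs 26 and the transfer path 28, so the direct path defines the GTC even though its in-vehicle time is longer.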

Network recoverability analysis

Let \(G_0(V, E_0)\) be the L-space representation of a PTN with node set V and link set \(E_0\). The failure process consists of K steps where, in each step, a link of the network is selected uniformly at random and removed from the graph. We define the retained performance ratio \({P}_{{G}_{i}}\) as the efficiency of the graph \(G_i\) obtained after step i, normalized by the efficiency of the original network: \({P}_{{G}_{i}}=\frac{e({G}_{i})}{e({G}_{0})}\). The number of links to be removed is defined as a fraction σ of the total number of links in the graph: \(K = \sigma \times |E_0|\).

Conversely, the recovery process corresponds to the iterative re-introduction of the previously removed K links back to the graph. At each step of the recovery process, we select a link among those removed during failure that, if added, would render the largest improvement in \({P}_{{G}_{i}}\). This is a myopic heuristic that seeks the best local improvement in each iteration.
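The failure and greedy recovery processes can be sketched as follows. To keep the example self-contained, performance is measured with a hop-based efficiency that stands in for the GTC-based efficiency of the study; this substitution, the rounding of K, and all function names are assumptions.

```python
import random
from collections import deque


def hop_efficiency(nodes, links):
    """Stand-in performance: mean of 1/hops over ordered pairs (0 if unreachable)."""
    adj = {u: [] for u in nodes}
    for u, v in links:
        adj[u].append(v)
    total = 0.0
    for source in nodes:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from `source`
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(1.0 / d for d in dist.values() if d > 0)
    n = len(nodes)
    return total / (n * (n - 1))


def fail_and_recover(nodes, links, sigma, performance=hop_efficiency, seed=0):
    """Return the retained performance ratios P_{G_0}, ..., P_{G_2K}."""
    rng = random.Random(seed)
    base = performance(nodes, links)
    current, removed = list(links), []
    k = round(sigma * len(links))  # rounding K to an integer is an assumption
    ratios = [1.0]
    for _ in range(k):  # failure: remove one uniformly random link per step
        removed.append(current.pop(rng.randrange(len(current))))
        ratios.append(performance(nodes, current) / base)
    for _ in range(k):  # recovery: re-add the link with the largest gain
        best = max(removed, key=lambda link: performance(nodes, current + [link]))
        removed.remove(best)
        current.append(best)
        ratios.append(performance(nodes, current) / base)
    return ratios


# Hypothetical bidirectional ring with four stops, complete failure.
ring_nodes = [0, 1, 2, 3]
ring_links = [(i, (i + 1) % 4) for i in range(4)] + [((i + 1) % 4, i) for i in range(4)]
curve = fail_and_recover(ring_nodes, ring_links, sigma=1.0)
```

Each recovery step evaluates the performance once per removed link, so the cost of the heuristic grows quadratically with K on top of the cost of a single performance evaluation.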

The cumulative performance loss during the failure process is given by \(F=\int\nolimits_{i = 0}^{i = K}(1-{P}_{{G}_{i}})\). Conversely, the cumulative performance gain during the recovery process is given by \(R=\int\nolimits_{i = K}^{i = 2K}({P}_{{G}_{i}}-{P}_{{G}_{K}})\). We characterize the recoverability properties of a network based on the values of F, R, the ratio R/F, and the difference R − F.
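Given a sequence of retained performance ratios, the four indicators can be approximated numerically. The sketch below uses the trapezoidal rule with unit steps, which is an assumption, since the discretization of the integrals is not specified here. It returns raw areas and does not attempt to reproduce the scale of the values reported in the Results.

```python
import math


def trapezoid(values):
    """Area under a piecewise-linear curve sampled at unit steps."""
    return sum((a + b) / 2.0 for a, b in zip(values, values[1:]))


def recoverability_indicators(ratios, k):
    """Compute F, R, R/F, and R - F from the ratios P_{G_0}, ..., P_{G_2K}."""
    if len(ratios) != 2 * k + 1:
        raise ValueError("expected 2K + 1 retained performance ratios")
    loss = trapezoid([1.0 - p for p in ratios[:k + 1]])    # area above the failure curve
    gain = trapezoid([p - ratios[k] for p in ratios[k:]])  # area gained during recovery
    return {
        "F": loss,
        "R": gain,
        "R/F": gain / loss if loss > 0 else math.inf,
        "R-F": gain - loss,
    }


# Hypothetical curve with K = 2: two failure steps followed by two recovery steps.
indicators = recoverability_indicators([1.0, 0.5, 0.0, 0.75, 1.0], k=2)
```

In this toy curve the recovery regains performance faster than it was lost, which yields R/F above 1 and a positive R − F.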

Experimental design

We simulate failures with σ ∈ {0.25, 0.50, 0.75, 1.00}. The behavioral route choice parameters are set to m = 5, α = 2, and β = 5 in all our experiments except for the networks of London, Paris, and New York, where m = 1 due to the otherwise excessive computational times. The minimum required number of replications of the failure/recovery process for each network was computed, with a minimum of 10 independent runs per network and a confidence level of 95%. The null hypothesis of normality on the distributions of F and R could not be rejected according to the Shapiro-Wilk test with a confidence level of 99% for all networks. Thus, we report mean values for each network and each removal threshold considered. We compare the four recoverability indicators against several topological indicators of the networks, which are defined next for a graph G(V, E). Let d(u, v, w) be the length of the shortest path between u and v in terms of number of hops (w = 1) or in-vehicle travel time (w = IVT). We consider the following topological indicators: number of nodes (∣V∣), number of links (∣E∣), diameter (\(D=\max_{u\in V}\max_{v\in V}d(u,v,1)\)), density (\(\gamma =\frac{|E|}{|V|\times (|V|-1)}\)), meshedness (\(\alpha =\frac{|E'|-|V|+1}{2\times |V|-5}\)) on the equivalent undirected graph \(G(V,E')\), average shortest path (\(\overline{\mathrm{SP}^{w}}=\sum_{u\ne v\in V}\frac{d(u,v,w)}{|V|\times (|V|-1)}\)) in terms of hops (w = 1) and in-vehicle travel time (w = IVT), and degree assortativity (r) as defined in ref. 25. Moreover, we compute the distribution of betweenness centrality per node, i.e., the sum of the fraction of all-pairs shortest paths that pass through a given node, and study the goodness of fit to known distributions from the literature (power law, exponential, lognormal) with the Kolmogorov-Smirnov (KS) test.
Table 1 summarizes the topological indicators considered for each network. Statistical analysis suggests that node betweenness centrality follows an exponential distribution for most networks. Thus, we report the rate of this distribution (λw) for the unweighted (w = 1) and the weighted (w = IVT) betweenness centrality, for networks with significant values according to the KS test (with α = 0.99).
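The size, density, meshedness, diameter, and hop-based average shortest path defined above can be computed with a short standard-library sketch. It assumes a strongly connected directed graph with at least three nodes and no self-loops; the function names are hypothetical, and the weighted variants, assortativity, and betweenness fits are left out.

```python
from collections import deque


def hop_distances(nodes, links):
    """All-pairs hop counts d(u, v, 1) on a directed graph via breadth-first search."""
    adj = {u: set() for u in nodes}
    for u, v in links:
        adj[u].add(v)
    dist = {}
    for source in nodes:
        seen = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in seen:
                    seen[v] = seen[u] + 1
                    queue.append(v)
        for target, d in seen.items():
            if target != source:
                dist[(source, target)] = d
    return dist


def topological_indicators(nodes, links):
    """Indicators for a strongly connected directed graph with |V| >= 3."""
    n = len(nodes)
    directed = set(links)
    undirected = {frozenset(link) for link in directed}  # equivalent undirected links E'
    dist = hop_distances(nodes, directed)
    pairs = n * (n - 1)
    return {
        "nodes": n,
        "links": len(directed),
        "diameter": max(dist.values()),
        "density": len(directed) / pairs,
        "meshedness": (len(undirected) - n + 1) / (2 * n - 5),
        "avg_shortest_path": sum(dist.values()) / pairs,
    }


# Hypothetical bidirectional ring with four stops.
ring_nodes = [0, 1, 2, 3]
ring_links = [(i, (i + 1) % 4) for i in range(4)] + [((i + 1) % 4, i) for i in range(4)]
summary = topological_indicators(ring_nodes, ring_links)
```

For the ring, the single cycle gives a meshedness of 1/3, whereas a tree-like line with the same stops would give 0.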

Data availability

The datasets generated and/or analyzed during the current study are available in the following GitHub repository: https://github.com/renzomassobrio/recoverability.

References

Lewis, T. G. Network Science: Theory and applications (John Wiley & Sons, 2011).

Ayyub, B. M. et al. Topology-based analysis of freight railroad networks for resilience: unweighted and weighted using waybill data. Tech. Rep. United States. (Department of Transportation, Federal Railroad Administration, USA, 2023).

Barabási, A.-L. Network science. Philos. Trans. R. Soc. A: Math. Phys. Eng. Sci. 371, 20120375 (2013).

Lin, J. & Ban, Y. Complex network topology of transportation systems. Trans. Rev. 33, 658–685 (2013).

Mattsson, L.-G. & Jenelius, E. Vulnerability and resilience of transport systems—a discussion of recent research. Transp. Res. Part A Policy Pract. 81, 16–34 (2015).

Angeloudis, P. & Fisk, D. Large subway systems as complex networks. Phys. A: Stat. Mech. Appl. 367, 553–558 (2006).

Berche, B., Von Ferber, C., Holovatch, T. & Holovatch, Y. Resilience of public transport networks against attacks. Eur. Phys. J. B 71, 125–137 (2009).

Saadat, Y., Ayyub, B. M., Zhang, Y., Zhang, D. & Huang, H. Resilience of metrorail networks: Quantification with Washington, DC as a case study. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part B: Mech. Eng. 5, 041011 (2019).

Zhang, Y., Ayyub, B. M., Saadat, Y., Zhang, D. & Huang, H. A double-weighted vulnerability assessment model for metrorail transit networks and its application in Shanghai metro. Int. J. Crit. Infrastruct. Protect. 29, 100358 (2020).

Derrible, S. & Kennedy, C. The complexity and robustness of metro networks. Phys. A: Stat. Mech. Appl. 389, 3678–3691 (2010).

Berche, B., Ferber, C., Holovatch, T. & Holovatch, Y. Transportation network stability: A case study of city transit. Adv. Complex Syst. 15, 1250063 (2012).

von Ferber, C., Berche, B., Holovatch, T. & Holovatch, Y. A tale of two cities. J. Trans. Secur. 5, 199–216 (2012).

Cats, O., Yap, M. & van Oort, N. Exposing the role of exposure: public transport network risk analysis. Trans. Res. Part A: Policy Pract. 88, 1–14 (2016).

Afrin, T. & Yodo, N. A concise survey of advancements in recovery strategies for resilient complex networks. J. Complex Netw. 7, 393–420 (2018).

Yu, H. & Yang, C. Partial network recovery to maximize traffic demand. IEEE Commun. Lett. 15, 1388–1390 (2011).

Matisziw, T., Murray, A. & Grubesic, T. Strategic network restoration. Netw. Spatial Econ. 10, 345–361 (2010).

Ayyub, B. M. Practical resilience metrics for planning, design, and decision making. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A: Civil Eng. 1, 04015008 (2015).

Cadarso, L. & Marín, A. Combining robustness and recovery in rapid transit network design. Transportmet. A: Trans. Sci. 12, 203–229 (2016).

Sun, P., He, Z., Kooij, R. E. & Van Mieghem, P. Topological approach to measure the recoverability of optical networks. Opt. Switch. Netw. 41, 100617 (2021).

International Association of Public Transport. World Metro Figures. Tech. Rep. D/2022/0105/07. https://www.uitp.org/publications/metro-world-figures-2021/ (2022).

Kujala, R., Weckström, C., Mladenović, M. N. & Saramäki, J. Travel times and transfers in public transport: comprehensive accessibility analysis based on Pareto-optimal journeys. Comput. Environ. Urban Syst. 67, 41–54 (2018).

Luo, D., Cats, O., van Lint, H. & Currie, G. Integrating network science and public transport accessibility analysis for comparative assessment. J. Trans. Geogr. 80, 102505 (2019).

Vijlbrief, S., Cats, O., Krishnakumari, P., van Cranenburgh, S. & Massobrio, R. A curated data set of L-space representations for 51 metro networks worldwide. https://data.4tu.nl/articles/dataset/A_curated_data_set_of_L-space_representations_for_51_metro_networks_worldwide/21316824/1 (2022).

Vijlbrief, S., Cats, O., Krishnakumari, P., van Cranenburgh, S. & Massobrio, R. A curated data set of P-space representations for 51 metro networks worldwide. https://data.4tu.nl/articles/dataset/A_curated_data_set_of_P-space_representations_for_51_metro_networks_worldwide/21316950/2 (2022).

Newman, M. E. J. Mixing patterns in networks. Phys. Rev. E 67, 026126 (2003).

Acknowledgements

The work of R. Massobrio was funded by call UCA/R155REC/2021 (European Union—NextGenerationEU), project TED2021-131880B-I00 funded by MCIN/AEI/10.13039/501100011033 and the European Union “NextGenerationEU”/PRTR, and Project eMob (PID2022-137858OB-I00) funded by Spanish MCIN /AEI /10.13039/501100011033 and by “ERDF A way of making Europe”.

Author information

Contributions

R.M. conducted the experiments. R.M. and O.C. conceived the method and experiments, analyzed the results, and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks Bilal Ayyub and Shauhrat Chopra for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Massobrio, R., Cats, O. Topological assessment of recoverability in public transport networks. Commun Phys 7, 108 (2024). https://doi.org/10.1038/s42005-024-01596-8

DOI: https://doi.org/10.1038/s42005-024-01596-8
