Abstract

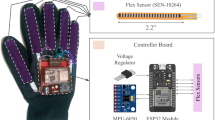

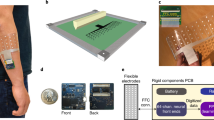

Gesture recognition using machine-learning methods is valuable in the development of advanced cybernetics, robotics and healthcare systems, and typically relies on images or videos. To improve recognition accuracy, such visual data can be combined with data from other sensors, but this approach, which is termed data fusion, is limited by the quality of the sensor data and the incompatibility of the datasets. Here, we report a bioinspired data fusion architecture that can perform human gesture recognition by integrating visual data with somatosensory data from skin-like stretchable strain sensors made from single-walled carbon nanotubes. The learning architecture uses a convolutional neural network for visual processing and then implements a sparse neural network for sensor data fusion and recognition at the feature level. Our approach can achieve a recognition accuracy of 100% and maintain recognition accuracy in non-ideal conditions where images are noisy and under- or over-exposed. We also show that our architecture can be used for robot navigation via hand gestures, with an error of 1.7% under normal illumination and 3.3% in the dark.
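The feature-level fusion step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the feature sizes, the number of gesture classes, the random weights and the use of magnitude pruning to obtain a sparse layer are all assumptions made for illustration, and the visual feature vector stands in for the output of a convolutional neural network that is not reproduced here.

```python
import math
import random


def magnitude_prune(weights, keep_fraction):
    """Zero all but the largest-magnitude weights.

    Assumption: magnitude pruning is just one simple way to obtain a sparse
    layer; the abstract does not say how the sparse network is constructed.
    """
    magnitudes = sorted((abs(w) for row in weights for w in row), reverse=True)
    n_keep = max(1, int(len(magnitudes) * keep_fraction))
    threshold = magnitudes[n_keep - 1]
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(visual_features, strain_features, weights, biases):
    """Concatenate both modalities at the feature level, then classify."""
    fused = list(visual_features) + list(strain_features)
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


# Hypothetical sizes: none of these values come from the paper.
N_VISUAL, N_STRAIN, N_GESTURES = 8, 5, 10

rng = random.Random(0)
dense_weights = [
    [rng.gauss(0.0, 1.0) for _ in range(N_VISUAL + N_STRAIN)]
    for _ in range(N_GESTURES)
]
sparse_weights = magnitude_prune(dense_weights, keep_fraction=0.3)
biases = [0.0] * N_GESTURES

# Stand-ins for CNN image features and strain-sensor readings.
visual = [rng.random() for _ in range(N_VISUAL)]
strain = [rng.random() for _ in range(N_STRAIN)]

probabilities = fuse_and_classify(visual, strain, sparse_weights, biases)
predicted_gesture = max(range(N_GESTURES), key=probabilities.__getitem__)
print(predicted_gesture, round(probabilities[predicted_gesture], 3))
```

Because fusion happens on feature vectors rather than on raw images and raw sensor traces, the two modalities only need to agree on which sample they describe, not on format or resolution. In a trained system the weights would be learned rather than drawn at random.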

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding author upon reasonable request. The SV datasets used in this study are available at https://github.com/mirwang666-ime/Somato-visual-SV-dataset.

Code availability

The code that supports the plots within this paper and other findings of this study is available at https://github.com/mirwang666-ime/Somato-visual-SV-dataset. The code that supports the human–machine interaction experiment is available from the corresponding author upon reasonable request.

Acknowledgements

The project was supported by the Agency for Science, Technology and Research (A*STAR) under its Advanced Manufacturing and Engineering (AME) Programmatic Scheme (no. A18A1b0045), the National Research Foundation (NRF), Prime Minister’s Office, Singapore, under its NRF Investigatorship (NRF-NRFI2017-07), the Singapore Ministry of Education (MOE2017-T2-2-107) and the Australian Research Council (ARC) under Discovery Grant DP200100700. We thank all the volunteers for collecting data and A.L. Chun for critical reading and editing of the manuscript.

Author information

Contributions

M.W. and X.C. designed the study. M.W. designed and characterized the strain sensor. M.W., T.W. and P.C. fabricated the PAA hydrogels. Z.Y. and M.W. implemented the machine-learning algorithms and analysed the results. M.W., S.G. and Y.Z. collected the SV data. M.W. performed the human–machine interaction experiment. M.W. and X.C. wrote the paper, and all authors provided feedback.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Notes 1–3, Figs. 1–11, Tables 1–3 and refs. 1–7.

Supplementary Video 1

Conformable and adhesive stretchable strain sensor.

Supplementary Video 2

Comparison of robot navigation using BSV learning-based and vision-based hand gesture recognition under normal illuminance (431 lux).

Supplementary Video 3

Comparison of robot navigation using BSV learning-based and vision-based hand gesture recognition in the dark (10 lux).

About this article

Cite this article

Wang, M., Yan, Z., Wang, T. et al. Gesture recognition using a bioinspired learning architecture that integrates visual data with somatosensory data from stretchable sensors. Nat Electron 3, 563–570 (2020). https://doi.org/10.1038/s41928-020-0422-z

DOI: https://doi.org/10.1038/s41928-020-0422-z
