Abstract

Due to data availability long-term variations in precipitation rates are mostly studied based on daily precipitation recordings. Recent research suggests, however, that variations in sub-daily precipitation are subject to higher dynamics compared to daily precipitation and a more rapid intensification is likely. Here we show that both observational data with at least 58 years of sub-daily precipitation records and a dynamical downscaling approach with low spatial resolution based on atmospheric re-analysis data confirm these expectations with consistent results. High percentiles of precipitation are subject to multi-decadal oscillations and increased during the last 150 years. We found an increase of 4% K−1 (daily), 12% K−1 (hourly), and 13% K−1 (10 min), which is consistent with Clausius–Clapeyron- (CC) and super CC-scaling, respectively. These findings highlight that dynamical downscaling can help to reliably shed light on sub-daily precipitation variations if small timescales are considered in the experiments.

Similar content being viewed by others

Introduction

Short-term precipitation events with high intensities govern the dynamics of numerous fast hydrological processes like flash floods1 in urban areas and soil erosion2 in agriculture. It is expected that precipitation events will intensify as a consequence of climate change. Recent studies focused on unravelling the relationship between temperature and precipitation following the Clausius–Clapeyron (CC) equation. This equation suggests that precipitation extremes—more specifically the saturation vapour pressure—increase by 7% per degree of warming3,4 or even exceed this rate at lower temporal scales (super CC-scaling)3,5,6,7. Since temperature–precipitation-scaling also shows decreasing rates above a certain temperature or dewpoint level, it is argued that these scaling approaches are not valid under all possible conditions and thus they are not suitable for projecting changes in precipitation extremes8. Moreover, trend analyses involving long-term records of precipitation extremes are mostly in agreement with these findings as two-thirds of stations worldwide showed increasing trends9. Other studies found more stations with negative than positive trends in summer precipitation extremes in Europe10.

Global circulation models (GCMs) and regional climate models (RCMs) are capable of representing changes in precipitation characteristics at longer time scales (e.g., seasonal)6. Their applicability to reconstruct changes in sub-daily precipitation is viewed uncertain due to (i) validation data at sub-daily time scales with sufficient record length is hardly available and the (ii) errors introduced by applications of parameterization models outside their expected use. Parameterizations include e.g. convection processes6,11, which need to be considered for model grid spacings of more than ~5 km. Recent convection-resolving RCMs with higher spatial resolution below ~5 km do not need such convection parameterizations and thus are viewed promising for simulating sub-daily rainfall6,11,12 even though they are still subjected to uncertainties6,8. However, coarser scale GCM and RCM simulations are still capable to represent relevant characteristics of sub-daily precipitation (e.g., temperature–precipitation scaling, high-precipitation percentiles)3,13,14. They also reproduce temporal changes and trends on decadal scales15. For climate projections on the global scale convection parameterizations are still relevant since convection-permitting models are demanding in terms of computational resources6,8.

Even though the availability of long-term records of sub-daily precipitation is very limited, uncertainties involved in modelling sub-daily rainfall extremes16 highlight the relevance of validating RCMs and GCMs in terms of their ability to predict sub-daily precipitation and its sensitivity to climate variability and more specifically climate change. Climate variability including both natural climate variability and anthropogenic forcing affect changes in precipitation extremes over time17, whereby natural climate variability can mask the anthropogenic signal caused by greenhouse gas emissions18. While long-term records of daily precipitation are in general more readily available1,19 and reflect higher evidence4,9,10,20, only a few studies focus on sub-daily precipitation11,17,21. Transferring results from analyses involving daily precipitation to smaller temporal scales is not reliable due to the higher relevance of super CC-scaling especially at time scales below one day6.

In this study, we address the impediment to validate sub-daily precipitation simulations under non-stationary conditions imposed by climate variability and climate change through compiling long-term records of sub-hourly precipitation to provide a comprehensive dataset for model validation. We analyse a set of sub-daily precipitation recordings in Austria, Belgium, Germany and the Netherlands with a temporal coverage of at least 58 years and a temporal resolution not coarser than one hour. Our analyses focus on the temporal variability of sub-daily precipitation at multi-decadal to centennial time scales extending earlier work17,21. Based on that, we test the hypothesis that the variability found in observed records can be reconstructed using reanalysis data downscaled with a model with convective parameterization. This approach complements ongoing research on temperature scaling and validating models regarding their capability to reproduce sub-daily precipitation by focusing on downscaling reanalysis data. Therefore, we utilize the Weather Research and Forecasting Model22 (WRF) to downscale the Twentieth Century Reanalysis Project dataset23 to a spatial and temporal resolution of 30 km and 10 min, respectively. The spatial domain covers Central Europe and the temporal coverage is 1850–2014, which allows one to analyse variations from the end of the Little Ice Age (LIA) to near present. Since we apply a coarse regional model which needs a convection parameterization to compute grid cell averages of convective precipitation that are not directly comparable to station observations, we compare the observed and modelled variability in sub-daily precipitation in terms of anomalies computed as mean of the 95th, 99th, and 99.5th percentiles (“Methods”).

Results

Observed and downscaled anomalies of heavy rainfall in Central Europe

Anomalies are computed for each station in Fig. 1 with overlapping sub-periods of 15 years. Similarly, the same procedure is applied to the downscaled time series derived from the nearest grid point of the model. Figure 2 shows a comparison of these anomalies computed for observed and modelled time series at the Uccle station. Different aggregation levels have been considered in order to highlight variations across process-relevant time scales. In general, the downscaled data reflects major features of the observed time series. In case of observational data (Fig. 2a), the comparison among these temporal resolutions show that the variability of each aggregation level shows a similar course with three maxima, the first in the early 20th century, a second one in the late 1960s and another maximum in the near present. Similarly, minima occur in the 1940s and the 1980s. The minima and maxima are slightly shifted in the modelled time series (Fig. 2b), suggesting maxima in the 1920s and early 1960s, and minima in the 1930s and 1970s. From the variations achieved for both datasets a slight tendency towards higher variability with decreasing aggregation level is obvious. Considering the modelled time series, the differences among aggregation levels are smaller and the overall variability reflects smaller amplitudes. It is worth noting that different methodologies in evaluating long-term variations in precipitation, e.g. the derivation of erosivity factors, show similar cycles for the Uccle station24.

Source: The station layer is based on metadata from precipitation datasets. Administrative borders are taken from Natural Earth. Satellite image is taken from NASA Worldview https://worldview.earthdata.nasa.gov/ which is free to use under public domain.

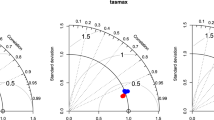

In the next step, we systematically analysed all stations in a similar way (Supplementary Fig. 1) and summarized the comparison for each station and aggregation level in a Taylor diagram25 (Fig. 3). Here, for reasons of readability, only 7 out of 11 stations with at least 60 years of data are shown and the full record length is considered for each (the other stations are shown in Supplementary Fig. 2). In terms of correlation, the results suggest on average a reasonable match of the phase (sequence of minima and maxima in the oscillating temporal course). Pearson correlations r range from 0.2 to 0.95 with values representing the spatial mean (“Methods”) above r = 0.8 (see plus symbol in Fig. 3), which suggests an acceptable model performance26. Except for Oberhausen, sub-daily anomalies show better correlations than daily anomalies. Regarding the variability, the results suggest an underestimation of amplitudes, since the majority of points has a normalized standard deviation smaller than 1. The RMSE ranges from around 0.5 to 1.25, whereby the majority of runs is characterized by RMSE values smaller than the normalized standard deviation of 1. For Uccle, we see correlations around 0.7 for 10 min and 1 h, while the correlation drops to 0.6 in case of daily data. Similarly, RMSE increases from around 0.7 to 0.8, also suggesting a drop in model performance. The variability is underestimated for sub-daily data (<1), while daily data matches the variability well (around 1). This observation is in line with the results found in Fig. 2. Acceptable results (r > 0.8) are also found for both stations in Duisburg at sub-daily time steps. The model performance achieved for Andelsbuch near the Alps also suggests correlation values close to 0.8, at least for sub-daily data. In contrast, the coincidence is generally lower in case of De Bilt and Oberhausen. Hence, apart from deficiencies related to single sites, our dynamical downscaling approach is capable of representing the variability of high precipitation percentiles across Central Europe, reflected by the correlation values of 0.84 (daily), 094 (hourly), and 0.91 (10 min), respectively.

For each station the full length of the time series is considered. Each comparison is represented by one single point. The spatial mean compares the average values considering all stations for the observed data with the average over all grid points shown in the maps of Fig. 4 for the downscaled data. Aggregation levels are represented by different colours (10min: green, 1h: blue, 24h: orange). Ordinate and abscissa refer to the standard deviation of the time series. The radial distance between each point and the origin represents the normalized standard deviation of the model run (corresponding observation is 1). The angle between the abscissa and the lines representing the shortest distance of each point to the origin is related to the correlation between observation and model run. The geometric relationship in the Taylor diagram also incorporates the central pattern root mean square error (RMSE) computed for the observation and the model run. The RMSE corresponds to the concentric isolines which are centred around the observation point. The latter has the following characteristics by definition: its standard deviation is 1, the correlation is 1 and the RMSE is 025.

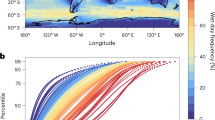

In order to visualize spatial patterns of variations in high precipitation percentiles computed for 10 min aggregation level, Fig. 4 compiles a series of maps ranging from the end of the LIA to near present. The maps show that anomalies are heterogeneous in terms of their spatial distribution for each period considered in the maps. Some regions show higher temporal variability (e.g., Northern Germany and Northern Italy), while other regions are subject to smaller temporal variation (e.g., the regions around the Alps). For instance, the absolute values computed for Andelsbuch are smaller than those computed for the Northern European Plain (including the Netherlands and Northern Germany). Figure 5 shows time series of the spatial mean including each map and intermediate steps. Figure 5a includes the time series of averaged observed time series, while the lower panel (Fig. 5b) presents the spatial mean time series computed considering all grid points. The temporal evolution of high precipitation anomalies is in line with those achieved for observed data. From Fig. 5 it is obvious that multi-decadal variations found in the observational data (Fig. 5a) seems to be valid at larger scales as well (Fig. 5b). Moreover, differences in the amplitudes among different aggregation levels are also visible for large spatial averages, suggesting that sub-daily anomalies in high precipitation percentiles are subject to higher variability in the past 150 years. For the last decade (i.e., the 2000s) we found positive anomalies in modelled high precipitation percentiles relative to the reference period 1971–2000. Figure 6 shows the scaling rates (s) achieved by comparing anomalies of high precipitation percentiles of the dynamical downscaling experiment (Fig. 5b) with temperature anomalies. The temperature anomalies are computed for the mean annual temperature of the dynamical downscaling experiment (Fig. 5c) for the reference period 1971–2000. The scaling rates of 4% K−1 (daily), 12% K−1 (hourly) and 13% K−1 (10 min), derived by linear regression, suggest that the aforementioned positive anomalies are in line with CC-scaling (daily aggregation level) and super-CC-scaling (sub-daily aggregation levels).

a In case of observations, the number stations involved in the spatial mean is also indicated. b For the modelled data, the spatial mean is the average over all grid points. In both panels, different aggregation levels (10min, 1h, and 24h) are displayed. Coloured bands denote ±one standard deviation. The rolling mean of the temperature c is derived for the mean annual temperature corresponding to the dynamical downscaling experiment.

Discussion

The results achieved in the framework of this study highlight that high precipitation percentiles are subjected to multi-decadal oscillations at the centennial scale and that these variations are captured by the downscaling experiment. Similar variations in observational datasets were reported by several authors who conclude that these oscillations are related to natural climate variability and climate change, at least as a possible explanation for the increase in the past decades17,21,27. Besides that, we were able to demonstrate that different aggregation levels of the precipitation time series reflect different magnitudes of variations, whereby sub-daily variations are characterized by higher magnitudes than those achieved for daily time series. This outcome is in line with recent findings6,17. In contrast to earlier work we utilized a larger set of long-term station datasets with sub-daily resolution which allows us to more comprehensively validate our downscaling approach based on reanalysis data, which was found to perform reasonably well, albeit its simplicity. Although the validation data is clustered around the Netherlands and Western Germany, the results achieved for Andelsbuch display that the lower variability found for the Alpine region is also captured by the model.

Nevertheless, this study is based on a range of assumptions: (i) First, due to the limitation in terms of data availability, different length of time series is relevant. A direct comparison among all sites is only possible when starting the analyses in 1957. (ii) From a historical overview of measuring sub-daily rainfall28,29, it becomes evident that the homogeneity of time series is a source of uncertainty due to changes in instrumentation within long records. Little is known about changes in instrumentations for most sites. For some sites, changes in instrumentation have been reported (e.g., De Bilt30). We applied the time series ‘as is’ which means that the analyses might be subjected to uncertainties arising from inhomogeneities relevant for specific characteristics of the time series. (iii) The combination of 30 km spatial resolution with a small domain and 10 min temporal resolution is not a common approach, even though the spatial resolution of 30 km requires an internal model time step of 2.5 min for numerical reasons. Still, other studies involve similar settings, e.g., Sunyer et al.14 presents studies on rainfall extremes achieved by a 50 km model at hourly time scale. They found that a sub-daily model with 25 km aggregated to 24 h best matches the observational dataset. However, our setup is viewed as a compromise considering computational costs and data storage requirements on the one hand and the focus on variations in rainfall characteristics rather than event-based considerations on the other. The results achieved for the more common 24 h aggregation level might be viewed as a reference to evaluate the sub-daily aggregation levels likewise. Even though WRF is a proven model that has been tested for various spatial resolutions31,32, improved simulations are expected if the model is employed with convection-resolving resolution11,12. While Knist et al. 32 found that the super CC-scaling is not captured well by a non-convection permitting resolution in WRF, our results indicate CC scaling (daily aggregation level) and super-CC-scaling (sub-daily aggregation levels), although slightly underestimated. The feasibility of coarse spatial resolutions is also justified by the fact that spatial resolutions around 8–12 km are in the range of the ‘grey zone’, which might be subjected to a doubled accounting of convection, suggesting a combined effect of partly resolved and parameterized convection33.

Besides the limitations of the approach demonstrated here, the results are promising to better validate GCMs and RCMs in terms of their capability to simulate long-term variations in sub-daily precipitation. This is especially relevant, since Westra et al. 6 identified temporal scaling across different aggregation levels as one key element relevant for validating RCMs and GCMs in terms of precipitation extremes. This study demonstrates that even a dynamical downscaling approach with low spatial resolution is capable of reconstructing temporal variation in high precipitation percentiles at the centennial scale. The latter also emphasizes that trend analyses—as usually done for the past three decades only in case of sub-daily rainfall—are critical, since both increasing and decreasing trends have been detected similarly throughout the last decades9,10 for different spatial and temporal scales. Our results reveal that for some stations a decline in high precipitation percentiles in recent years is found and that this decline is also computed by the downscaling approach. This suggests that temporal scaling as key criterion to validate models should also involve the role of climate variability, which might obliterate temperature–precipitation scaling18, at least at the decadal scale as it is evident from the time series of anomalies.

A better validation of downscaling approaches regarding their accuracy in sub-daily precipitation modelling is highly relevant for the simulation of future climates with different modelling approaches including GCMs (which still require convection parameterizations) and RCMs with improved spatial resolution with grid spacings smaller than ~5 km. This increase in spatial resolution is also in line with an expected increase in the representation of precipitation processes, especially since it suggests that models resolve convection. The successful reconstruction of anomalies in high precipitation percentiles by a dynamical downscaling approach with coarse spatial resolution is a first step towards understanding the causes that hold responsible for the variability in high precipitation percentiles. Hence, the variability is inherent in the dynamical downscaling approach forced by re-analysis data, suggesting that modes of variability can be attributed to individual causes (e.g., increase in CO2 concentration) in future model experiments. This has also major implications on attribution studies to analyse to what extent anthropogenic forcing contributes to an increase in precipitation extremes.

Methods

Table 1 provides a summary of the stations involved in our study, while Fig. 1 shows a map including the location of each rainfall station. Except for the most relevant meta data (e.g., coordinates, elevation) little is known about other information relevant for this study like changes in instrumentation or corrections applied to the data. For the Uccle station, a historic overview34 and detailed analyses exist17,35. The data observed at De Bilt was also subject to numerous analyses relevant to this study3,21. The De Bilt dataset available to the authors was corrected by the data provider in order to account for a correction of the gage height and changes in surface area of the funnel30. According to the providers, the data has been checked carefully which is why we utilize the data in our study ‘as is’. The minimum temporal resolution of all time series is at least one hour (Table 1). Andelsbuch, Duisburg H. (Hülsermanngraben), Duisburg S. (Schmidthorst), Oberhausen, Soest, and Uccle are stations with sub-hourly time series.

Precipitation intensities with a temporal resolution of 10 min are computed from the end of the LIA to near present utilizing the WRF22 forced by the Twentieth Century Reanalysis Project dataset version 2c (TCRP)23 which provides meteorological fields at arbitrary levels every 6 h. The re-analysis dataset acknowledges the fact that radiosonde and remote sensing data were not available in the 19th century, which is why surface and sea level pressure were used as input to the data assimilation. This dataset has been applied in many studies that focus on the climate in past periods especially those considering the end of the LIA or the early 20th century36,37,38,39,40. The following is a list of parameterizations that have been chosen for the downscaling experiment: Morrison two-moment bulk microphysics41; Kain–Fritsch convection scheme42, Yonsei University boundary layer scheme43; Noah land surface model (LSM)44; Dudhia shortwave numerical scheme45; and the Rapid Radiative Transfer Model for longwave radiation46. This setup showed good results for precipitation characteristics in the framework of an earlier study performed by the authors47. WRF was set-up for a domain covering Central Europe, the Alps (to avoid coincidence of the boundary with mountain ranges) and Northern Italy with a single domain covering 64 rows, 44 columns, and 40 vertical levels. This step has been performed using the WRF pre-processing system (WPS) in order to generate the 6-hourly input files for the period 1851–2014 based on the TCRP dataset. The corresponding internal time step is 150 s. The output is 600 s corresponding to the target temporal resolution of 10 min, which is an integral multiple of the internal time step.

In this study, we focus on high precipitation percentiles of 95th, 99th, and 99.5th for which we explore centennial scale variations. These percentiles were also proposed by Lenderink et al. 21. Since the downscaling experiment has a spatial resolution of 30 km, convective events are only considered through a convection parameterization and the precipitation total per time step is an average representative for a grid cell. Thus, a direct comparison of rainfall extremes (e.g., partial or annual series as described by Willems17) derived for both the observed data and the model is not feasible48. However, the focus on high percentiles instead of extreme value distributions derived utilizing partial or annual series is beneficial, since rolling averages over extremes along the time axis might introduce oscillations caused by single extreme events49, if not considered with special care50. Combining the average over percentiles21 with the moving window approach avoids such limitations, since the consideration of more than one percentile is more robust compared to the consideration of single extreme events that might amplify the anomalies.

For overlapping periods of 15 years17 the average of the 95th, 99th, and 99.5th percentiles \(\hat{P}=\overline{P_{95},P_{99},P_{99.5}}\) is computed for both the station data and the corresponding grid point in the model. The choice of percentiles is a compromise that acknowledges sample size on one hand and window size on the other. Utilizing one single percentile might result in too noisy signals due to smaller sample size per 15 years period, suggesting a possible overestimation due to the amplification of single extreme values21,49. Based on this consideration, we compute anomalies for each period of 15 years nsub period by involving the corresponding average achieved for the reference period 1971–2000 as follows:

This approach yields an annual series of anomalies in which each year represents an average information of 15 years from 7 years before and 7 years after the considered year. These anomalies are computed for different aggregation levels ranging from 10 min, to 1 and 24 h representing time scales relevant in different applications in hydrology. For instance, 10 min rainfall is relevant for urban hydrology, torrential flow and flash floods, while hourly values are suitable for studying floods in small catchments. The daily resolution makes the results comparable to many more studies that involve daily rainfall totals only. This temporal scale is also relevant for a lot of applications in hydrology ranging from floods in large river basins to water balance studies. Anomalies computed for observed and modelled time series can be compared using different measures including Pearson correlation. Here, we combine correlation with a comparison of the standard deviation of both time series and the root mean square error (RMSE) in a Taylor diagram25.

The article was previously published as a preprint:

Förster, K. & Thiele, L. -B. Variations in sub-daily precipitation at centennial scale. Preprint at EarthArXiv. https://doi.org/10.31223/osf.io/2f54a (2019).

Data availability

Data used for forcing the model was provided by the NOAA/CIRES Twentieth Century Global Reanalysis Version 2c which is available online https://doi.org/10.5065/D6N877TW. The spatial results shown in Fig. 4 are published: https://doi.org/10.5281/zenodo.3693560. Time series of the rainfall stations were kindly provided by the institutions listed in Table 1. Most of the station data from the Netherlands is publicly available via KNMI’s website https://www.knmi.nl/nederland-nu/klimatologie/uurgegevens.

Code availability

The WRF version 4.0.1 model code was obtained via the repository at https://github.com/wrf-model/WRF. A function to plot the Taylor diagrams as shown in this article is also available: https://gist.github.com/ycopin/3342888.

References

Willems, P. Adjustment of extreme rainfall statistics accounting for multidecadal climate oscillations. J. Hydrol. 490, 126–133 (2013).

Mueller, E. N. & Pfister, A. Increasing occurrence of high-intensity rainstorm events relevant for the generation of soil erosion in a temperate lowland region in Central Europe. J. Hydrol. 411, 266–278 (2011).

Lenderink, G. & van Meijgaard, E. Increase in hourly precipitation extremes beyond expectations from temperature changes. Nat. Geosci. 1, 511–514 (2008).

Berg, P. et al. Seasonal characteristics of the relationship between daily precipitation intensity and surface temperature. J. Geophys. Res. 114, D18102 (2009).

Berg, P., Moseley, C. & Haerter, J. O. Strong increase in convective precipitation in response to higher temperatures. Nat. Geosci. 6, 181–185 (2013).

Westra, S. et al. Future changes to the intensity and frequency of short-duration extreme rainfall. Rev. Geophys. 52, 522–555 (2014).

Lenderink, G., Barbero, R., Loriaux, J. M. & Fowler, H. J. Super-Clausius–Clapeyron scaling of extreme hourly convective precipitation and its relation to large-scale atmospheric conditions. J. Clim. 30, 6037–6052 (2017).

Zhang, X., Zwiers, F. W., Li, G., Wan, H. & Cannon, A. J. Complexity in estimating past and future extreme short-duration rainfall. Nat. Geosci. 10, 255–259 (2017).

Westra, S., Alexander, L. V. & Zwiers, F. W. Global increasing trends in annual maximum daily precipitation. J. Clim. 26, 3904–3918 (2013).

Łupikasza, E. Seasonal patterns and consistency of extreme precipitation trends in Europe, December 1950 to February 2008. Clim. Res. 72, 217–237 (2017).

Prein, A. F. et al. The future intensification of hourly precipitation extremes. Nat. Clim. Change 7, 48–52 (2017).

Ban, N., Schmidli, J. & Schär, C. Heavy precipitation in a changing climate: does short-term summer precipitation increase faster? Geophys. Res. Lett. 42, 1165–1172 (2015).

Tripathi, O. P. & Dominguez, F. Effects of spatial resolution in the simulation of daily and subdaily precipitation in the southwestern US. J. Geophys. Res. Atmos. 118, 7591–7605 (2013).

Sunyer, M. A., Luchner, J., Onof, C., Madsen, H. & Arnbjerg-Nielsen, K. Assessing the importance of spatio-temporal RCM resolution when estimating sub-daily extreme precipitation under current and future climate conditions. Int. J. Climatol. 37, 688–705 (2017).

Gregersen, I. B. et al. Assessing future climatic changes of rainfall extremes at small spatio-temporal scales. Clim. Change 118, 783–797 (2013).

Berg, P. et al. Summertime precipitation extremes in a EURO-CORDEX 0.11° ensemble at an hourly resolution. Nat. Hazards Earth Syst. Sci. 19, 957–971 (2019).

Willems, P. Multidecadal oscillatory behaviour of rainfall extremes in Europe. Clim. Change 120, 931–944 (2013).

Martel, J.-L., Mailhot, A., Brissette, F. & Caya, D. Role of Natural climate variability in the detection of anthropogenic climate change signal for mean and extreme precipitation at local and regional scales. J. Clim. 31, 4241–4263 (2018).

Förster, K., Hanzer, F., Winter, B., Marke, T. & Strasser, U. An open-source MEteoroLOgical observation time series DISaggregation Tool (MELODIST v0.1.1). Geosci. Model Dev. 9, 2315–2333 (2016).

Tabari, H. & Willems, P. Lagged influence of Atlantic and Pacific climate patterns on European extreme precipitation. Sci. Rep. 8, 5748 (2018).

Lenderink, G., Mok, H. Y., Lee, T. C. & van Oldenborgh, G. J. Scaling and trends of hourly precipitation extremes in two different climate zones—Hong Kong and the Netherlands. Hydrol. Earth Syst. Sci. 15, 3033–3041 (2011).

Skamarock, W. C. et al. A Description of the Advanced Research WRF Version 3. NCAR Technical note-475+STR (2008).

Compo, G. P. et al. The twentieth century reanalysis project. Q. J. R. Meteorol. Soc. 137, 1–28 (2011).

Fiener, P., Neuhaus, P. & Botschek, J. Long-term trends in rainfall erosivity-analysis of high resolution precipitation time series (1937–2007) from Western Germany. Agric. For. Meteorol. 171–172, 115–123 (2013).

Taylor, K. E. Summarizing multiple aspects of model performance in a single diagram. J. Geophys. Res. 106, 7183–7192 (2001).

Lo, J. C.-F., Yang, Z.-L. & Pielke, R. A. Assessment of three dynamical climate downscaling methods using the Weather Research and Forecasting (WRF) model. J. Geophys. Res. 113, D9 (2008).

Kunkel, K. E., Easterling, D. R., Redmond, K. & Hubbard, K. Temporal variations of extreme precipitation events in the United States:1895-2000. Geophys. Res. Lett. 30, 1–4 (2003).

Kurtyka, J. C. & Madow, L. Precipitation Measurements Study. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.461.3364&rep=rep1&type=pdf (1952).

Strangeways, I. A history of rain gauges. Weather 65, 133–138 (2010).

Beersma, J., Bessembinder, J., Brandsma, T., Versteeg, R. & Hakvoort, H. Actualisatie meteogegevens voor waterbeheer 2015 (2015).

Singleton, A. & Toumi, R. Super-Clausius–Clapeyron scaling of rainfall in a model squall line. Q. J. R. Meteorol. Soc. 139, 334–339 (2013).

Knist, S., Goergen, K. & Simmer, C. Evaluation and projected changes of precipitation statistics in convection-permitting WRF climate simulations over Central Europe. Clim. Dyn. https://doi.org/10.1007/s00382-018-4147-x (2018).

Chan, S. C., Kendon, E. J., Fowler, H. J., Blenkinsop, S. & Roberts, N. M. Projected increases in summer and winter UK sub-daily precipitation extremes from high-resolution regional climate models. Environ. Res. Lett. 9, 084019 (2014).

Demarée, G. R. Le pluviographe centenaire du plateau d’Uccle: son histoire, ses données et ses applications. La Houille Blanche 4, 95–102 (2003).

De Jongh, I. L. M., Verhoest, N. E. C. & De Troch, F. P. Analysis of a 105-year time series of precipitation observed at Uccle, Belgium. Int. J. Climatol. 26, 2023–2039 (2006).

Michaelis, A. C. & Lackmann, G. M. Numerical modeling of a historic storm: Simulating the Blizzard of 1888. Geophys. Res. Lett. 40, 4092–4097 (2013).

Xiang-Hui, K. & Xun-Qiang, B. Dynamical downscaling of the twentieth century reanalysis for China: climatic means during 1981–2010. Atmos. Ocean. Sci. Lett. 8, 166–173 (2015).

Stucki, P. et al. Evaluation of downscaled wind speeds and parameterised gusts for recent and historical windstorms in Switzerland. Tellus A: Dyn. Meteorol. Oceanogr. 68, 31820 (2016).

Brönnimann, S. Weather Extremes in an Ensemble of Historical Reanalyses (Geographica Bernensia, 2017).

Parodi, A. et al. Ensemble cloud-resolving modelling of a historic back-building mesoscale convective system over Liguria: the San Fruttuoso case of 1915. Climate 13, 455–472 (2017).

Morrison, H., Thompson, G. & Tatarskii, V. Impact of cloud microphysics on the development of trailing stratiform precipitation in a simulated squall line: comparison of one- and two-moment schemes. Mon. Weather Rev. 137, 991–1007 (2009).

Kain, J. S. The Kain–Fritsch convective parameterization: an update. J. Appl. Meteor. 43, 170–181 (2004).

Hong, S.-Y., Noh, Y. & Dudhia, J. A new vertical diffusion package with an explicit treatment of entrainment processes. Mon. Weather Rev. 134, 2318–2341 (2006).

Chen, F. & Dudhia, J. Coupling an advanced land surface-hydrology model with the Penn State-NCAR MM5 modeling system. Part I: model implementation and sensitivity. Mon. Weather Rev. 129, 569–585 (2001).

Dudhia, J. Numerical study of convection observed during the winter monsoon experiment using a mesoscale two-dimensional model. J. Atmos. Sci. 46, 3077–3107 (1989).

Mlawer, E. J., Taubman, S. J., Brown, P. D., Iacono, M. J. & Clough, S. A. Radiative transfer for inhomogeneous atmospheres: RRTM, a validated correlated-k model for the longwave. J. Geophys. Res. 102, 16663–16682 (1997).

Förster, K., Meon, G., Marke, T. & Strasser, U. Effect of meteorological forcing and snow model complexity on hydrological simulations in the Sieber catchment (Harz Mountains, Germany). Hydrol. Earth Syst. Sci. 18, 4703–4720 (2014).

Chen, C.-T. & Knutson, T. On the verification and comparison of extreme rainfall indices from climate models. J. Clim. 21, 1605–1621 (2008).

Fischer, S. & Schumann, A. Comment on the paper of Willems, P.: multidecadal oscillatory behaviour of rainfall extremes in Europe. Published in: Climatic Change 120 (4), p. 931–944. Clim. Change 130, 77–81 (2015).

Willems, P. Author’s response to the commentary by S. Fischer & A. Schumann on ‘Multidecadal oscillatory behaviour of rainfall extremes in Europe (Climatic Change, 120(4), 931–944)’. Clim. Change 3, 83–85 (2015).

Acknowledgements

This study was only possible thanks to the great support of the data providers. They provided the long-term time series analysed in this study. We wish to thank the following persons and data providers, respectively: Jutta Eybl from the Hydrographic Office at the Ministry of Sustainability and Tourism (Austria), Emschergenossenschaft & Lippeverband (Germany), the Royal Netherlands Meteorological Institute (KNMI), and the Royal Meteorological Institute of Belgium (KMI—IRM). We are thankful to Florian Hanzer, who provided helpful comments on an early version of this manuscript, and María Herminia Pesci for helping us with visualizations. We acknowledge the very helpful comments provided by the reviewers which significantly helped to improve the manuscript.

Author information

Authors and Affiliations

Contributions

K.F. designed the study, compiled the data, and performed the dynamical downscaling experiment. L.-B.T. further developed the statistical analyses. Both authors discussed the results.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Förster, K., Thiele, LB. Variations in sub-daily precipitation at centennial scale. npj Clim Atmos Sci 3, 13 (2020). https://doi.org/10.1038/s41612-020-0117-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41612-020-0117-1

This article is cited by

-

Analysis of extreme precipitation event characteristics and typical events based on the intensity–area–duration method in Liaoning Province

Theoretical and Applied Climatology (2023)

-

Spatial and temporal scaling of sub-daily extreme rainfall for data sparse places

Climate Dynamics (2023)