Abstract

The quantity and complexity of scientific and technological information provided to policymakers have been on the rise for decades. Yet little is known about how to provide science advice to legislatures, even though scientific information is widely acknowledged as valuable for decision-making in many policy domains. We asked academics, science advisers, and policymakers from both developed and developing nations to identify, review and refine, and then rank the most pressing research questions on legislative science advice (LSA). Experts generally agree that the state of evidence is poor, especially regarding developing and lower-middle income countries. Many fundamental questions about science advice processes remain unanswered and are of great interest: whether legislative use of scientific evidence improves the implementation and outcome of social programs and policies; under what conditions legislators and staff seek out scientific information or use what is presented to them; and how different communication channels affect informational trust and use. Environment and health are the highest priority policy domains for the field. The context-specific nature of many of the submitted questions—whether to policy issues, institutions, or locations—suggests one of the significant challenges is aggregating generalizable evidence on LSA practices. Understanding these research needs represents a first step in advancing a global agenda for LSA research.


Introduction

In both presidential and parliamentary systems of government, legislatures can play substantial roles in setting national policy, albeit with different degrees of power and influence (Shugart, 2006). In performing their functions, legislative policymakers rely on complex advisory systems: formal and informal networks of expertise both within the legislature and outside it (Halligan, 1995). Many critical issues legislators face, such as cybersecurity, climate change, nuclear power, food security, health care, and digital privacy, involve science and technology. Legislators need help managing an informational deluge as the amount of technical information relevant to policy decisions grows (Bornmann and Mutz, 2015), technological change accelerates (Kurzweil, 2004), and innovation is sought to spur economic growth (Broughel and Thierer, 2019). Because anyone can now search the Internet on any science and technology policy issue and find information held to widely varying standards of review and quality, vetted advice is even more important today than in the past (Lewandowsky et al., 2017).

Different ways of integrating scientific and technical expertise into policymaking have emerged internationally, reflecting distinctive cultures and traditions of decision-making. These can be formal or informal, internal or external, permanent or ad hoc, and they can operate in different branches and at different levels of government (Gual Soler et al., 2017). The academic study of policy advisory systems in general remains largely focused on Western democracies and based mainly on qualitative case studies (Craft and Wilder, 2017), which can be difficult to generalize or translate into practice across varying contexts. As Craft and Howlett (2013) observed, “Despite a growing body of case studies … little is known about many important facets of advisory system behavior” (p. 188). The study of scientific advice, as a subfield, suffers from similar deficits (Desmarais and Hird, 2014), and it has paid less attention to legislatures than to regulatory policymaking within the executive (Akerlof, 2018; Tyler, 2013).

In The Spirit of the Laws (1748), Baron de Montesquieu (2011) described a tripartite system of governance composed of legislative, executive, and judicial branches. In this paper, we focus on the legislature, by which we mean the part of the governance system responsible for making laws, typically a parliament or congress (McLean and McMillan, 2009). In addition to passing laws, legislatures debate the issues of the day and scrutinize the work of the executive. By the executive, we mean the part of the governance system responsible for executing the laws passed by the legislature (Bradbury, 2009); it typically comprises government departments and agencies.

To improve understanding of scientific advisory systems for legislatures internationally, we asked academics, science advisers, and policymakers (Footnote 1) across the globe to identify the most pressing research needs for improving the practice of science advice to legislatures and strengthening its theoretical and empirical foundations, using a three-stage research approach. Respondents were asked to identify, review and refine, and then rank the research needs they considered most important. Similar expert consultation exercises designed to elicit the most important questions in ecology and science policy have been effective in informing government strategy (Sutherland et al., 2011). In this paper we report the findings of that process, presenting a collaboratively developed international research agenda for legislative science advice (LSA), an emerging subfield of science policy that has been relatively neglected within the study of science advisory systems. We identify the research needs of most importance to the producers, providers, and users of scientific information; point to the issue domains of highest priority; characterize the participating actors and dynamics of most note to the global community of researchers and practitioners; and suggest the range of disciplines needed to study these systems. In so doing, we hope to contribute to the growth of a well-theorized, inclusive academic study of science advice to legislatures that supports practitioners in generating and using science advice globally.

The distinctive nature of legislative science advice

Legislatures differ from the executive branch in both function and form (Kenny, Washbourne, et al., 2017; Tyler, 2013). Executive agencies have a high ratio of staff to political appointees, with each agency served by hundreds, if not thousands, of civil servants. By contrast, in most legislatures each elected representative has access to the expertise of only a handful of staff. This leads to two main differences between the respective science advisory systems. First, because their staff are few, legislatures typically hire generalists rather than specialists, outsourcing more in-depth expertise as needed (Nentwich, 2016, p. 15) (Footnote 2). Moreover, most agency staff are career officials, whereas legislative staff are more often political hires. Second, science advice to legislatures must serve a broader range of ideological viewpoints and interests than advice to the executive, and it must be tailored to the needs of elected officials of all political stripes. The term “legislative science advice” (LSA) is new, originating within the growing discourse on “government science advice” (Gluckman, 2016). LSA refers to the broad systems that provide scientific and technological information to legislatures, including, but not restricted to, legislative research services, committee support systems, technology assessment bodies, lobbyists, and advocacy coalitions.

How legislatures use scientific information

Use of research in policy can take many forms (Oh and Rich, 1996; Weiss, 1979; Whiteman, 1985), including some specific to legislatures. In technology assessment, these impacts have been described as increasing knowledge, promoting opinion formation, and initiating actions, e.g., influencing policy outcomes (Decker and Ladikas, 2004, p. 61). In one of the foundational typologies of research use, Weiss (1979) contrasts the conventional view that research is used to inform policy with political and tactical uses, in which research findings serve as rhetorical ammunition, or the act of conducting research serves as an excuse to delay action or deflect criticism. Indeed, Whiteman (1985) found that in U.S. congressional committees, research is predominantly used after policymakers have chosen a stance on an issue, not before.

Within legislatures, scientific and technical information is employed for many purposes that fall within these categories (Kenny, Rose, et al., 2017; Kenny, Washbourne, et al., 2017). For example, it can support scrutiny of the executive branch by parliamentary committees or commissions, which draw on evidence in their conclusions or recommendations. This was the case in a 2016 UK parliamentary inquiry into microplastics (Environmental Audit Committee, 2016a), whose recommendations led the government to ban microbeads in cosmetics (Environmental Audit Committee, 2016b). Science and technology may also inform decision-making (Hennen and Nierling, 2015b) and inspire new activities. Under French law, the Parliamentary Office for the Evaluation of Scientific Choices (OPECST) assesses the National Management Plan for Radioactive Materials and Waste every three years and makes recommendations for improving its function and anticipating future management concerns (OPECST, 2014). Throughout the legislative process, scientific and technical information may be harnessed by policymakers, issue coalitions, and others as new laws are drafted, old laws are revised, or flawed proposals are averted. Interest groups in Canada have used scientific evidence in attempting to sway parliamentary committee consideration of tobacco-control legislation (Hastie and Kothari, 2009). In Argentina, experts testified on the biology of embryonic development to inform parliamentary debate on the decriminalization of abortion (Kornblihtt, 2018). A science-in-parliament event (“Ciencia en el Parlamento”) held in the Spanish Congress in 2018 saw 75 parliamentarians draw on scientific evidence to debate 12 policy issues (Domínguez, 2018).

Legislative science advisory systems worldwide

In-house library and research services are among the most common providers of scientific and technological information within legislatures; examples include the Resources, Science and Industry Division of the Congressional Research Service (CRS) in the United States and the Science and Technology Research Office (STRO) within the Research and Legislative Reference Bureau (RLRB) in Japan (Hirose, 2014). Both CRS and the RLRB provide information and analysis through original reports, as well as confidential research services on request. Globally, various models exist for incorporating more in-depth science and technology assessment directly into legislatures’ internal advisory capacity (Nentwich, 2016). These include the parliamentary committee model, in which a committee leads a dedicated unit; the parliamentary office model, with a dedicated office internal to the parliament; and the independent institute model, in which the advisory function is performed by institutes operating outside parliament but with parliament as one of their main clients (Hennen and Nierling, 2015b; Kenny, Washbourne, et al., 2017; Nentwich, 2016). An example of the first model, with a dedicated parliamentary committee, is France’s OPECST. An example of the second is the UK Parliamentary Office of Science and Technology (POST).

The third model, the independent institute, can be operationalized in a variety of ways; such institutes may serve not only the legislature but also the executive, and may engage with the public (Nentwich, 2016). A number of national academies provide LSA, such as the Uganda National Academy of Sciences (UNAS) (INASP, 2016) and the Rathenau Institute, an independent part of the Royal Netherlands Academy of Arts and Sciences (KNAW). Not all external LSA mechanisms are based in academies, however. Certain independent bodies, sometimes established by the executive, provide the service; one example is Mexico’s Office of Scientific and Technological Information (INCyTU), which is part of the Science and Technological Advisory Forum, a think tank of the Mexican government. Thus, the way LSA is institutionalized varies widely.

Science advice is also delivered to legislatures through channels other than dedicated units. It may be provided informally, such as by constituents, lobbyists, and advocacy organizations, or formally through parliamentary procedures such as inquiries and evidence hearings. Insights may also be shared by scientists and engineers placed in legislatures in programs such as the American Association for the Advancement of Science (AAAS) Congressional Science & Engineering Fellowship and the Swiss Foundation for Scientific Policy Fellowships. Other initiatives directly pair scientists with policymakers, such as the UK’s Royal Society Pairing Scheme and the European Parliament MEP-Scientist Pairing Scheme. In yearly “Science Meets Parliament(s)” events in Europe and Australia, researchers and parliamentarians participate in discussions on science and policy issues (European Commission, 2019; Science and Technology Australia, 2019).

Boundary organizations can further help bridge research and policy processes. Some non-governmental organizations in Africa, such as the African Institute for Development Policy (AFIDEP), are attempting to meet stakeholders’ need to translate primary research data into science and technology policies and practices (AFIDEP, 2019).

Need for an international research agenda

The many ways in which LSA manifests, across a wide array of sociopolitical and governance contexts, make it a rich area for study. Furthermore, the distinct differences between legislative and executive science advice justify building a research foundation that specifically addresses this subfield of government science advice. To initiate and foster a nascent international research-practice community that will spark further empirical, theoretical, and applied advances, we conducted an expert consultation exercise to identify a core set of research questions for the field. In effect, our own research question is which research questions others in the field of LSA consider most worth pursuing. Similar exercises have been among the most downloaded articles in their journals and have informed government science strategies (Sutherland et al., 2011). The process we undertook, and its results, are described below.

Methods

The study consisted of five steps. In Step 1, an online survey was used to collect research questions from academics, science advisers, and policymakers worldwide. In Step 2, during a workshop at the International Network for Government Science Advice Conference on November 8, 2018, in Tokyo, Japan, participants scrutinized the set of research questions. In Step 3, the originally submitted research questions were coded and vetted for duplication and needed edits, and each of the resulting 100 questions was assigned to a single category. In Step 4, the research team identified the most representative questions from each category based on its own assessments and workshop participant feedback, reducing the set of research needs to be ranked to 50. Finally, in Step 5, a subset of the original survey participants ranked the research findings they would be most interested in learning. Because we could not include all study participants in thematically categorizing the list, as has been done with smaller groups (Sutherland et al., 2012), we had coders do so after they achieved inter-rater reliability. We defined science in the survey as “research produced by any individual or organization in a rigorous, systematic way, which has made use of peer review. Research on technology may also fall within this broad definition.” Government was defined as “any governing body of a community, state, or nation.”

Research question collection and coding

We identified experts in science and technology advice, and particularly LSA, in three ways: (1) through an academic literature review and lists of organizational membership; (2) through a referral by another participant in the study (snowball sampling); and (3) from requests to join the study after seeing information advertised by science advice-related organizations. We recruited representatives and members of the following groups: the International Network for Government Science Advice (INGSA); European Parliamentary Technology Assessment (EPTA) member and associate nations; a European project on parliaments and civil society in technology assessment (PACITA); the International Science, Technology and Innovation Centre for South-South Cooperation under the Auspices of UNESCO (ISTIC); the European Commission’s Joint Research Centre (JRC) Community of Practitioners-Evidence for Policy; Results for All (a global organization addressing evidence-based policy); and the American Association for the Advancement of Science’s science diplomacy network. The research protocol for the study was approved by Decision Research’s Institutional Review Board [FWA #00010288, 277 Science Advice].

Expert participants

From September to November 2018, 183 respondents in 50 nations (Table 1) submitted 254 questions. Participants who were willing to be publicly thanked for their effort are listed in the supplementary materials (SI Table 1); a subset of them are also authors on this study. Approximately half of the respondents to our request for research questions were from nations categorized by the United Nations as developing (n = 91) and half from those considered developed (n = 92) (United Nations Statistics Division, 2019). While all had expertise in science and technology advice for policy, almost three-quarters (74%) said they also had specific experience with legislatures.

The roles of these experts in the science and technology advisory system varied widely: they included producers of scientific information, providers, users, and those in related or combined positions (Table 2). (Note that in Table 2, as in all tables in this text, percentages may not sum to 100% because of rounding.) In open-ended comments, respondents clarified that they interpreted “research on governmental science advice” as both studying LSA processes and conducting research relevant to government questions. The one-fifth of respondents who selected “other” said that their roles combined these categories or described them in other terms.

Survey measures used in collecting research questions

At the start of the online survey, we told respondents that we were interested in research questions that addressed the entire breadth of the legislative science and technology advisory system. We described the system as: (1) the processes and factors that affect people who produce and deliver scientific and technical information; (2) the processes and factors that affect people who use scientific and technical information; (3) the nature of the information itself; and (4) communication between users and producers, or through intermediaries. Because we assumed that participants outside of academia might not be practiced in writing research questions, we asked a series of open-ended questions building to the formal question submission: What is it that we don’t know about the use of scientific information in legislatures that inspires your research question?; What is the outcome you are interested in?; Which processes or factors are potentially related to the outcome?; Who—or what—will be studied?; What is the context?; Please tell us how you would formally state your research question. We also asked a series of follow-up questions to assess which academic disciplines and theories might be most applicable to each submitted research question, and whether some policy issue areas were more important to study than others (see measures, SI Table 2).

Coding the research questions

Coding categories for the questions were established based on frequency of occurrence (coding rules and reliability statistics, supplementary materials, SI Table 3). Inter-rater reliability for each category was assessed with two to three coders. We coded the LSA actors mentioned (policymakers, scientists, brokers, institutions, the public) as well as advisory system dynamics (evidence use, evidence development, communication, ethics, system design). Coding was first conducted for any mention of a variable in the original “raw” submissions; multiple codes could be assigned to the text comprising the series of six questions leading up to, and including, the research question submission. After editing the questions for clarity and condensing duplicates, we determined the primary category of each research question for the purposes of the final list. Krippendorff’s α values above 0.8 suggest consistent interpretability across studies (Krippendorff, 2004). Nineteen of the 24 variable codes, across both the original submissions and the final edited research questions, achieved inter-rater reliability at this level. Another four were at about 0.7, suitable for tentative conclusions, and one was at 0.6 (though it was coded with perfect reliability in the final edited questions). This last variable, evidence development, was particularly difficult to code because evidence development can occur throughout the advisory system: by scientists in universities, through scientific reviews by intermediary institutions, or within legislatures as research staff compile information to support, or discount, policy options.
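The reliability figures above are Krippendorff’s α values. The paper does not say which software computed them, so the following is only a minimal sketch, under our own assumptions, of how α is obtained for nominal (present/absent) codes via the coincidence matrix. The function name `krippendorff_alpha_nominal` and the example data are hypothetical and illustrative only.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal codes (illustrative sketch).

    `units` holds one entry per coded text (e.g., one research question);
    each entry lists the code each coder assigned, with None marking a
    coder who did not code that unit.
    """
    # Coincidence matrix: every ordered pair of values from different
    # coders within a unit, weighted by 1 / (m_u - 1).
    coincidences = Counter()
    for values in units:
        values = [v for v in values if v is not None]
        m = len(values)
        if m < 2:
            continue  # a unit coded only once cannot be paired
        for c, k in permutations(values, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    totals = Counter()
    for (c, _k), weight in coincidences.items():
        totals[c] += weight
    n = sum(totals.values())

    observed = sum(w for (c, k), w in coincidences.items() if c != k)
    expected = sum(totals[c] * totals[k] for c in totals for k in totals if c != k)
    if expected == 0:
        return float("nan")  # undefined when only one category was ever used
    # alpha = 1 - D_o / D_e, with D_o = observed / n and D_e = expected / (n (n - 1)).
    return 1.0 - (n - 1) * observed / expected


# Hypothetical data: two coders marking whether four research questions
# mention "policymakers" (1) or not (0).
example = [[1, 1], [0, 0], [1, 0], [0, 0]]
print(round(krippendorff_alpha_nominal(example), 3))  # 0.533
```

One disagreement in four units already pulls α well below the 0.8 level cited above. That drop reflects how α corrects for the agreement expected by chance, which is high when one category (here, "not mentioned") dominates.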

Analysis

Cluster analysis can be used to identify groups of highly similar cases (Aldenderfer and Blashfield, 1984). To characterize the combinations of coded variables that occurred most frequently in the research questions, we conducted a two-step cluster analysis, which can accommodate dichotomous variables, on both system actors and dynamics using SPSS version 25.
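The study used SPSS’s two-step cluster procedure, which the sketch below does not reproduce. As a simpler, openly swapped-in illustration of clustering dichotomous codes, it runs average-linkage agglomerative clustering on Jaccard distances, which ignore shared absences, so two questions are not counted as similar merely because neither mentions a given actor. The function names, the five-actor vector layout, and the data are all hypothetical.

```python
from itertools import combinations


def jaccard_distance(a, b):
    """Jaccard distance between two equal-length 0/1 code vectors."""
    both = sum(1 for x, y in zip(a, b) if x and y)
    either = sum(1 for x, y in zip(a, b) if x or y)
    # Two questions with no codes at all are treated as identical.
    return 0.0 if either == 0 else 1.0 - both / either


def average_linkage(c1, c2, vectors):
    """Mean pairwise Jaccard distance between the members of two clusters."""
    dists = [jaccard_distance(vectors[i], vectors[j]) for i in c1 for j in c2]
    return sum(dists) / len(dists)


def agglomerative_cluster(vectors, n_clusters):
    """Repeatedly merge the two closest clusters until n_clusters remain."""
    clusters = [[i] for i in range(len(vectors))]
    while len(clusters) > n_clusters:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: average_linkage(clusters[pair[0]], clusters[pair[1]], vectors),
        )
        clusters[a].extend(clusters[b])
        del clusters[b]  # safe: combinations() guarantees a < b
    return sorted(sorted(c) for c in clusters)


# Hypothetical actor codes per question:
# [policymakers, scientists, brokers, institutions, public]
questions = [
    [1, 0, 0, 0, 0],
    [1, 0, 0, 1, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [1, 0, 0, 1, 0],
]
print(agglomerative_cluster(questions, 2))  # [[0, 1, 4], [2, 3]]
```

Unlike SPSS’s two-step procedure, this sketch requires the number of clusters to be fixed in advance. It also compares every pair of questions, which is practical only for small sets such as the few hundred questions described here.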

Workshop

At the International Network for Government Science Advice Conference in November 2018, a workshop on LSA was conducted by members of the author team (KA, CT, EH, MGS, AA). After presentations on research and practice in LSA, participants worked in small groups on subsets of the research questions to vet them: combining similar questions, adding to them, and highlighting those of greatest priority. Thirty-six people from 17 nations participated in the exercise, including six participants from developing countries. Workshop participants self-selected into seven tables of three to eight people. Questions were flagged as important and edited during this stage, and some were added, but none were dropped.

Ranking of research statements

Selected on the basis of their role in LSA and geographic representation, 90 participants in the original survey were asked after the workshop to rank what information they would be most interested in learning. Sixty-four individuals from 31 countries responded. All but one had experience specifically with legislatures. Thirty-three were from (or, in one case, studied) developing nations (52%), and 31 were from developed countries (48%). These proportions closely resemble those among respondents to the research question collection (50% developing, 50% developed).

Because many of the experts identified with multiple roles in the science advisory process, we asked them to characterize these combinations (Table 3). Most said that their roles are distinct, whether as producers of scientific information (21%), providers (33%), or users (8%), but more than a third (38%) said that their work crossed these boundaries. One participant said that their role was not that of a user, provider, or producer, but rather to facilitate connections among all three groups. This example illustrates that while knowledge brokering can include knowledge dissemination (Lemos et al., 2014; Lomas, 2007), it may also focus primarily on network growth and capacity building (Cvitanovic et al., 2017).

The ranking was conducted using Q methodology, a technique for identifying groups of people with similar viewpoints and perspectives (Stephenson, 1965; Watts and Stenner, 2012) (additional findings are presented in a separate publication). Respondents sorted the statements into a forced distribution approximating a normal curve, placing a prescribed number of statements in each of nine labeled categories, from “extremely interested in learning” (9) to “extremely uninterested” (1). As sometimes occurs with this methodology, respondents commented that although they placed the questions in order of interest, the category labels did not always match their sentiment, because they considered most of the questions to be of some interest. We therefore put more weight on the relative ranking than on the labels. We also posed a series of related questions to respondents. At the start of the survey, they were asked: How would you describe the current state of evidence on the design and operation of legislative science advice systems? [Poor, adequate, good, very good]. At the conclusion of the ranking exercise, we asked follow-up questions about the four research findings each respondent would be most interested to learn, evaluating their perceptions of the feasibility of generating this information, its generalizability, and its likelihood of contributing to the study and practice of LSA (see measures, SI Table 4).
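The forced distribution constrains each Q-sort: every one of the nine columns must hold exactly its prescribed number of statements. The sketch below illustrates that constraint, together with a simple aggregate ranking by mean column score, reflecting the emphasis on ranking over labels described above. It is not the by-person factor analysis that Q methodology normally relies on, which is reported separately. The per-column quotas in `QUOTA` are hypothetical. Only the nine columns and the 50 statements come from the text.

```python
from collections import Counter
from statistics import mean

# Hypothetical forced distribution for 50 statements: how many statements
# each of the nine columns must hold (1 = "extremely uninterested",
# 9 = "extremely interested in learning"). Not the study's actual quotas.
QUOTA = {1: 2, 2: 4, 3: 6, 4: 8, 5: 10, 6: 8, 7: 6, 8: 4, 9: 2}


def is_valid_qsort(sort, quota=QUOTA):
    """True if `sort` (statement id -> column) fills every column exactly to quota."""
    return Counter(sort.values()) == Counter(quota)


def rank_statements(sorts, quota=QUOTA):
    """Order statement ids by mean column score across valid sorts, highest first.

    Assumes every sort rates the same statement ids; ties are broken by
    statement id so the output is deterministic.
    """
    valid = [s for s in sorts if is_valid_qsort(s, quota)]
    if not valid:
        raise ValueError("no Q-sort matches the forced distribution")
    means = {i: mean(s[i] for s in valid) for i in valid[0]}
    return sorted(means, key=lambda i: (-means[i], i))


# Usage: a hypothetical respondent who ranks statements in id order
# (statement 0 lowest, statement 49 highest).
columns = [col for col, count in sorted(QUOTA.items()) for _ in range(count)]
respondent = dict(enumerate(columns))
print(is_valid_qsort(respondent))         # True
print(rank_statements([respondent])[:2])  # [48, 49]
```

Rejecting sorts that break the quota mirrors how an online Q-sort tool typically prevents submission until every column is filled. Averaging column scores is only one possible summary of interest across respondents.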

Results

According to most experts who ranked the questions (68%; n = 63), the state of the evidence on LSA is poor. Another 20% characterized the state of the field as “adequate” and 12% as “good.” In subsequent written comments, prompted after the closed-ended survey questions, many respondents qualified their answers by saying that the quality of information varied enormously across countries and sectors of science and technology, with less applicable evidence available for developing or lower-middle income nations.

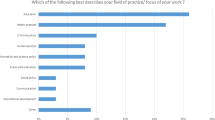

Contextualizing legislative science advice: policy issues and institutions

Legislatures worldwide are diverse, as are the many issues they face. More than a quarter (26%) of the submitted research questions mentioned one or more specific policy areas, such as climate change or agriculture, and 54% mentioned a particular place or institution, such as Zimbabwe or the U.S. Congress (coded data). When asked directly, slightly more than half of the experts (51%) said that some policy issue areas are more important to focus on than others (34%, no; 15%, do not know) (see question wording, SI Table 2). Of those who said some policy areas should be a priority for the field (n = 86), a majority selected environment (78%), health (64%), and natural resources (56%; Footnote 3) as the preferred focus among the many options (Fig. 1; Footnote 4). Half pointed to education (50%) and technology (50%). In a follow-up to the closed-ended question, respondents also volunteered other social issues that should be a priority, such as welfare, migration, urbanization, demographic change, population growth, and sustainability (e.g., the UN Sustainable Development Goals).

Relevant academic disciplines and theoretical constructs to LSA research questions

Studying LSA is a transdisciplinary pursuit. For only 20% of the 254 originally submitted research questions did respondents say that one academic disciplinary field alone was adequate to provide an answer; most (60%) named two to four fields. Of the fields provided in the response options, political science and public policy were the most frequently chosen as germane (65% and 64%, respectively), followed by science and technology studies (52%), communication (46%), sociology (35%), psychology (25%), and anthropology (15%). Other fields and areas of expertise volunteered by the respondents included: economics, cognitive and decision sciences, computer science, design, ethics, evaluation, gender studies, history, information technology, international development, law, philosophy, statistics, and domains such as public health, agriculture, and education.

Approximately one-third of the respondents suggested theories or theoretical constructs related to their research questions (SI Table 5). While some concepts have been traditionally associated with the development and use of science for policy, such as mode 2 production of knowledge (Gibbons et al., 1994) and post-normal science (Funtowicz and Ravetz, 1993), others reflect less common approaches, for example from business and management (human resources theory) and development (failed states theory).

Fifty research questions on legislative science advice

Based on the 254 questions submitted during the initial online survey, the workshop vetting process, and research team input, we created two final sets of research questions on LSA: a condensed set of 50, presented here, and a full set of 100, included in the supplementary materials (SI Table 6). All are grouped under the headings of their primary categories, each followed by the full code description. The condensed set comprises the most representative questions from each category, with half of each category’s questions selected on the basis of workshop recommendations and author assessments. The categories reflect diverse themes: evidence use and development; characteristics and/or capacity of system actors; system design and implementation; and ethics. After presenting the list of 50 research questions below, we discuss (1) the characteristics of the submitted questions and what they may signify about the community’s priorities, and (2) how a subset of our respondents (n = 64) ranked 50 statements derived from these questions according to what they would be most interested to learn.

Information/evidence use

(Influence, use, or uptake of scientific information/science advice in policy—its impact or barriers—including measurement and evaluation)

1. What types of scientific information are used in legislatures?
2. How do the formal and informal practices of legislatures influence the consideration and use of scientific information?
3. What are the ways in which scientific information is “used” in legislatures?
4. What metrics can be used to assess the use of scientific information across different legislative contexts?
5. What incentives motivate or compel legislatures to use scientific information?
6. Under which conditions does use of scientific information change the framing of policy debates in legislatures?
7. Does legislative use of evidence improve the implementation and outcome of social programs and policies?

Evidence development

(The creation of scientific information for the purposes of evidence)

8. How can the scientific topics most relevant to the public and policymakers be determined to inform research?
9. How is social relevance weighed in the production of academic research?
10. How do policymakers and researchers work together in defining problems and processes for generating evidence?

Policymakers

(Policymakers, legislators, decision-makers)

11. What value do legislators and staff place on scientific evidence, as opposed to other types?
12. How do legislator and staff preferences for scientific evidence compare between countries?
13. How do legislators and their staff assess the credibility of scientific information?
14. What are the characteristics of the producers of scientific information most preferred by legislators and their staff? (e.g., are they partisan, and do they make policy recommendations?)
15. How do the Internet and social media affect the information-seeking behavior of legislators and staff?
16. Under what conditions do legislators and staff seek out scientific information or use what is presented to them?
17. What are the factors that legislators weigh in deciding whether to accept or reject a scientific recommendation?
18. Can training for legislators and/or staff increase their use of scientific information, especially in lower-middle income countries (LMICs)?

Scientists

(Scientists, scientific advisers, scientific researchers)

19. What information, skills, and training are needed for scientists to work with legislators and their staff?

20. What individual and institutional factors motivate scientists to share their research with legislators and their staff?

21. How do scientists and issue advocates try to manage the quality of scientific information and expertise used in legislatures?

22. Which behaviors of scientists and other advisers increase the likelihood of evidence use?

Brokers

(Intermediaries, brokers)

23. What role do intermediaries and research brokers play in getting scientific information before legislators and their staff? (e.g., helping shape research questions, communicate research, and/or serve as an engagement facilitator)

24. What forms of evaluation can be used to measure the effect of “brokering” scientific information?

Institutions

(Organizations, legislatures, governments, committees)

25. How can the institutions that deliver legislative science advice be characterized? (see Note 5)

26. How do cultural, political, and economic contexts affect the development of legislative science advice institutions? (e.g., new and emerging democracies, more authoritarian systems, levels of economic development)

27. How do different institutional approaches to legislative science advice influence its nature, quality and relevance?

28. What institutional approaches for legislative science advice are instructive for other countries?

29. How do legislative research departments synthesize and translate scientific information for legislators?

30. How can we measure the impact of legislative science advisory bodies on legislative processes using indicators?

31. How does the staffing, budgetary, and political capacity of committees affect their ability to use scientific information in legislatures?

32. How do internal and external organizations assess and meet the needs of legislatures for in-depth analysis?

The public

(Citizens, public)

33. How does public participation affect legislative processes in which scientific information may be considered, including potential reductions in corruption?

34. How can the impact of current citizen initiatives in legislative science advice be measured?

35. To what extent is the public aware of, and does it place value in, the scientific information being used in legislatures?

Communication

(Communication of science through engagement, access to information, effective information/knowledge transfer, relationships)

36. What is the frequency of communication between legislative staff and scientists from inside and outside government?

37. How does political polarization affect information flows to legislators and their staff?

38. Does iterative engagement between researchers, legislators, and staff improve evidence use?

39. How do different communication channels (hearings, face-to-face meetings, email, social media, etc.) affect informational trust and use?

40. How can risk and uncertainty be communicated comprehensibly to legislators and staff?

41. Which communication tools facilitate working with legislative decision-makers on scientific topics?

42. How is scientific information embedded in policy debate rhetoric?

System design

(Structure, design, and implementation of LSA systems/processes/models both in developed and developing nations)

43. How do the requirements and needs of a science advice system for policymaking differ across countries?

44. How can the design of new structures, processes, and systems increase legislative capacity for science use?

45. What lessons can be learned about how to manage scientific advice to legislatures from a systems approach?

46. How do racial and gender biases affect researchers’ and practitioners’ activities and influence policy advisory systems?

47. In societies without established science advice systems, how is scientific information used, if at all, by legislatures?

48. What are examples of improvements to legislative science advisory systems in heavily resource-constrained countries?

Ethics

(Ethics of use of science in policy; appropriate role of scientists/scientific information providers in policy)

49. What ethical principles for providing legislative science advice can be derived?

50. How can values be made transparent in providing science advice?

Focal areas within the advisory system

Most research questions referenced multiple aspects of the advisory system: the people, organizations, and institutions that constitute it and the dynamics that support its functionality (e.g., evidence use and creation, communication, system design, ethics). To capture the interrelated nature of these system components in the submitted research questions, we conducted two cluster analyses on subsets of the variables: (1) policymakers, scientists, brokers, the public, and institutions; and (2) evidence use, evidence development, communication, ethics, and system design. We used automatic cluster selection based on the Bayesian Information Criterion (BIC) (Norusis, 2011). We ran the analyses with both the Akaike Information Criterion (AIC) and BIC, and selected BIC because it proposed an equal or smaller number of clusters with a reasonable interpretation. The cluster analysis of the actors in the advisory system produced 9 clusters, and the analysis of system dynamics produced 5 (SI Figs. 1 and 2). We then crossed the two variable sets to show how frequently the coded variables of one set appear within the clusters of the other (Figs. 2 and 3).
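For readers less familiar with the two criteria, their generic textbook forms are shown below for a solution with J clusters, maximized likelihood \hat{L}_J, m_J free parameters, and n cases (here, the 254 submitted questions). The notation is ours and the formulas are illustrative only; the automatic selection routine in the clustering software (Norusis, 2011) may apply additional rules beyond comparing raw criterion values.

```latex
\mathrm{AIC}(J) = -2\ln\hat{L}_J + 2\,m_J
\qquad
\mathrm{BIC}(J) = -2\ln\hat{L}_J + m_J \ln n,
\qquad \ln 254 \approx 5.54 > 2
```

Because BIC charges ln n per parameter rather than 2, every additional parameter costs more under BIC whenever n exceeds about 7.4. If m_J grows with J, then for any J larger than the AIC-preferred solution the BIC value is also higher than at that solution, so the BIC-preferred number of clusters cannot exceed the AIC-preferred number. This is consistent with BIC proposing an equal or smaller number of clusters.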

Fig. 2: The frequencies of actors represented in the 254 submitted research questions indicate experts’ relative interest in the roles of these groups within advisory systems. The partitioning of each bar shows how often an actor is mentioned in combination with the various system dynamics in the research questions (COMM, communication; E-USE, evidence use; E-DEV, evidence development; DESIGN, system design; ETHICS, ethics).

Fig. 3: The frequencies of advisory system dynamics represented in the 254 submitted research questions highlight varying attention to these processes. The subdivisions of each bar show how often a dynamic co-occurs in the research questions with groups of system actors (POL, policymakers; SCI, scientists; BRKR, brokers; INST, institutions; PUB, the public).

To assess the “goodness” of a cluster analysis solution, we compared the similarity within clusters with the dissimilarity between clusters (Norusis, 2011). The silhouette coefficient is one such measure (Rousseeuw, 1987); it ranges from −1 to +1. Values near the upper end (+1) indicate highly differentiated clusters whose members are very similar to one another. Both silhouette measures reflect good fit (9 clusters, average silhouette = 0.8; 5 clusters, average silhouette = 0.6).
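The silhouette coefficient can be computed directly from its definition (Rousseeuw, 1987): for each case, a is its mean distance to the other members of its own cluster, b is its smallest mean distance to the members of any other cluster, and s = (b − a) / max(a, b). The Python sketch below is a minimal illustration, not the procedure used in the study. It assumes Euclidean distance (the study's software may use a different distance measure for its coded variables), and the function names are hypothetical.

```python
from math import dist  # Euclidean distance between two coordinate sequences (Python 3.8+)
from statistics import mean


def silhouette_scores(points, labels, distance=dist):
    """Per-point silhouette s(i) = (b(i) - a(i)) / max(a(i), b(i)).

    a(i): mean distance from point i to the other members of its own cluster.
    b(i): smallest mean distance from point i to the members of any other cluster.
    Requires at least two clusters.
    """
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # Rousseeuw's convention for singleton clusters
            continue
        # The point itself is in `own` but contributes distance 0, so divide by n - 1.
        a = sum(distance(p, q) for q in own) / (len(own) - 1)
        b = min(
            sum(distance(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append(0.0 if denom == 0 else (b - a) / denom)
    return scores


def average_silhouette(points, labels, distance=dist):
    """Mean of the per-point silhouettes: the summary value reported for a solution."""
    return mean(silhouette_scores(points, labels, distance))


# Hypothetical toy data: two tight pairs of points far apart from each other.
pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = average_silhouette(pts, [0, 0, 1, 1])  # each pair kept together
bad = average_silhouette(pts, [0, 1, 0, 1])   # each pair split across clusters
```

With this toy data, the first labeling gives an average silhouette of roughly 0.90 and the second a negative average (about −0.45), illustrating how the measure separates well-differentiated from poorly differentiated solutions.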

Coincidence of legislative science advice actors and dynamics within research questions

The ways in which experts combined people, organizations, and problems in their research questions shed light on how they conceptualize the parts of the advisory system most deserving of research and the relationships they perceive among them. The cluster analysis on system actors revealed 9 ways in which actors were combined within the 254 submitted research questions. The largest cluster of research questions (Cluster 1; 20%) included mention of all three of the following actors: policymakers, scientists, and institutions (SI Fig. 1). While policymakers and institutions are each referenced alone in some questions (Clusters 6 and 8), scientists, brokers, and the public are always referenced in combination with other actors.

The cluster analysis on system dynamics revealed 5 ways in which these were combined within the 254 submitted research questions. The largest clusters of research questions featured communication largely by itself (Cluster 2; 23%) or with evidence use (Cluster 1; 22%) (SI Fig. 2). Evidence development appears only in combination with evidence use, communication, and system design. Similarly, system design is mentioned only in combination with evidence use and communication.

Frequency of actors and system dynamics

We then evaluated the frequency of the system dynamics and actor codes across the submitted research questions overall, and their co-occurrence with clusters of the opposing set (Fig. 2, actor frequencies and system dynamics clusters; Fig. 3, system dynamics frequencies and actor clusters). The frequencies tell us which codes appear most commonly across all of the research questions; their distribution across the clusters of dynamics and actors indicates how these two sets of variables interrelate. Among system actors, the research questions referenced policymakers (70%), institutions (62%), and scientists (53%) most frequently (Fig. 2). All three co-occurred most commonly with the cluster representing evidence use, communication, and ethics (20%, 16%, and 15%, respectively). The public (12%) and knowledge brokers (6%) appeared less frequently as actors in questions about legislative science advice, and they co-occurred with each cluster of system dynamics at a low rate (1–3% of coded statements).
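The difference between a code's overall frequency and its co-occurrence with clusters of the opposing set can be made concrete with a short Python sketch. It assumes each question is stored as a set of actor codes plus the label of the dynamics cluster it was assigned to, and it uses all questions as the denominator for both measures. The toy data and function names are hypothetical, and the study's own tabulation may differ in detail.

```python
from collections import Counter


def code_frequencies(questions):
    """Share of all questions that mention each code (a question counts once per code)."""
    n = len(questions)
    counts = Counter(code for codes, _cluster in questions for code in set(codes))
    return {code: count / n for code, count in counts.items()}


def cooccurrence_shares(questions):
    """Share of all questions that mention a code AND belong to a given cluster."""
    n = len(questions)
    counts = Counter(
        (code, cluster) for codes, cluster in questions for code in set(codes)
    )
    return {pair: count / n for pair, count in counts.items()}


# Hypothetical toy data: (actor codes mentioned, dynamics cluster of the question).
toy = [
    ({"POL", "INST"}, "C1"),
    ({"POL"}, "C2"),
    ({"POL", "SCI"}, "C1"),
    ({"PUB", "INST"}, "C3"),
]
freq = code_frequencies(toy)        # POL appears in 3 of 4 questions -> 0.75
shares = cooccurrence_shares(toy)   # (POL, C1) -> 0.5, (POL, C2) -> 0.25
```

Because each question belongs to exactly one cluster, an actor's co-occurrence shares sum to its overall frequency (the full height of its bar), whereas frequencies across actors can sum to more than 100% because a single question may mention several actors.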

In regard to system dynamics, respondents asked predominantly about evidence use (63%) and communication (53%) (Fig. 3). Evidence use and communication both occur most frequently within questions that also include reference to the cluster with the broadest constellation of actors: policymakers, scientists, brokers, institutions, and the public (17%, 16%, respectively). Evidence development (15%), system design (23%), and ethics (3%) were less popular topics. They co-occur at low rates (0–4% of coded statements) with clusters of system actors. As an aside, within the “system design” code, we also identified research questions that referenced the need for best practices and models within developing or lower-middle income countries. Roughly one-quarter of the originally submitted questions that were coded as “system design” demonstrated the need to address these regions of the world (6% frequency within 254 submitted questions).
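The two rounded figures for system-design questions fit together as follows. Both inputs are rounded, so the implied count of roughly 15 questions is our own approximate back-calculation, not a figure reported in the study:

```latex
0.23 \times \tfrac{1}{4} \approx 0.058 \approx 6\%,
\qquad
0.06 \times 254 \approx 15 \text{ questions}
```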

Expert ranking of the types of research information of most interest

We asked a subset of the experts who contributed research questions to rank their research needs, i.e., what they would most like to learn about LSA (n = 64 participants). The 10 highest-ranked of the 50 short-listed research questions addressed four of the five system dynamics: evidence use, communication, system design, and evidence development (Table 4). The remaining dynamic, ethics, was ranked near the bottom: 42nd and 45th among the 50 questions (see the full list of rankings, SI Table 7). Although policymakers and intermediaries were the only system actors represented in the top 10, all five types of system actors (policymakers, scientists, brokers, institutions/organizations, and the public) appear in the top 20 ranked statements. All of the top 10 research areas were very highly ranked, but the experts were most interested in learning: (1) whether legislative use of scientific evidence improves the implementation and outcome of social programs and policies; (2) under what conditions legislators and staff seek out scientific information or use what is presented to them; and (3) how different communication channels (hearings, face-to-face meetings, email, social media, etc.) affect informational trust and use. The respondents who ranked the top 10 research areas highly considered each of them at least slightly, if not moderately, feasible and likely to yield generalizable findings that would contribute to both the practice and the study of LSA (SI Fig. 3).
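This section does not state how the 64 participants' rankings were combined into a single ordering. One common approach, shown here purely as a hypothetical illustration, is to order items by their mean rank across respondents. The function name and the three-respondent data below are invented for the example.

```python
from statistics import mean


def aggregate_by_mean_rank(rankings):
    """Order items by mean rank across respondents (1 = most wanted).

    `rankings` is a list of dicts mapping item id -> rank; every respondent is
    assumed to have ranked every item. Ties are broken by item id.
    """
    items = rankings[0].keys()
    mean_rank = {item: mean(r[item] for r in rankings) for item in items}
    return sorted(mean_rank, key=lambda item: (mean_rank[item], item))


# Made-up rankings from three hypothetical respondents over three items.
toy = [
    {"A": 1, "B": 2, "C": 3},
    {"A": 2, "B": 1, "C": 3},
    {"A": 1, "B": 3, "C": 2},
]
order = aggregate_by_mean_rank(toy)  # mean ranks: A = 1.33, B = 2.0, C = 2.67
```

A mean-rank ordering is simple but sensitive to a few extreme rankings; median ranks, or the share of respondents who place an item in their top 10, are common alternatives.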

Discussion

Supporting the capacity of legislatures worldwide to access and use scientific and technical information in their decision-making processes may be critical to their ability to govern through periods of massive social, technological, and environmental change. Two broad findings underlie the more detailed results of this study. First, experts generally agree that the state of understanding of LSA is insufficient, especially for developing and lower-middle income nations. More than two-thirds of our second sample of legislative science experts (68%; n = 63) rated the state of the evidence as poor. Second, many fundamental questions about the function and design of legislative science advisory systems remain unanswered. Core questions about advisory system processes, such as how legislators and their staff assess the credibility of scientific information, were among those most highly prioritized by experts. Indeed, the relationship between bias and source credibility remains a theoretically murky area of social science, especially in highly political contexts such as legislatures (Akerlof et al., 2018). At the bottom of the experts’ priority list were questions about ethics, such as how values can be made transparent in providing science advice and what ethical principles for providing legislative science advice can be derived. The research questions most frequently addressed policymakers (70%), evidence use (63%), institutions (62%), communication (53%), and scientists (53%). Policymakers and institutions were also the actors most often mentioned alone in research questions, and communication the dynamic most often mentioned alone. This suggests that study participants, only a minority of whom are decision-makers within government, focused their research interests more on the institutional and policymaker side of the system than on scientists and the generation of information, or on the information brokers who span the two.
Yet a wealth of literature on science usability and the co-production of scientific knowledge highlights the importance of actors, interactions, and dynamics across the entire system (Lemos et al., 2012). The highly specific nature of many of the research questions points to one of the significant challenges in aggregating generalizable evidence on LSA practices: more than a quarter of the submitted research questions (26%) mentioned one or more specific policy areas, and more than half (54%) mentioned a particular place or institution. The inherently contextual nature of science and technical advice, set within specific policy problems, cultures, and national institutions, is potentially a very difficult issue for the successful maturation of the field. Environment and health are the domains most frequently named as priorities for LSA research. Both are focal points of the United Nations Sustainable Development Goals, rank among the top public priorities in the United States (Jones, 2019) and Europe (European Commission, 2018), and are areas often regulated by government.

This study also captures the global LSA community’s desire for transdisciplinary research conducted in partnership with domain experts. Most survey participants (60%) selected two to four fields as important for answering their research question. Political science (65%) and public policy (64%) topped the list, but participants also selected fields such as science and technology studies (52%), communication (46%), sociology (35%), psychology (25%), and anthropology (15%). However, the transdisciplinarity of the field and the diversity of its issue domains have historically made it difficult to define common terms such as “evidence,” “policy,” “policymakers,” and “use” (Cairney, 2016; National Research Council, 2012), a challenge that new research in the field will need to address.

Because the roles that research can play in policy are heavily contextual, measuring its impact has historically been difficult (Decker and Ladikas, 2004; National Research Council, 2012). A number of the research questions address measurement, evaluation, and metrics, including “What metrics can be used to assess the use of scientific information across different legislative contexts?” and “How can we measure the impact of legislative science advisory bodies on legislative processes using indicators?” One strategy for addressing this challenge is to focus on the specific roles of research (Decker and Ladikas, 2004), such as policy argumentation (Decker and Ladikas, 2004; National Research Council, 2012); others are to mobilize increasingly available digital data (van Hilten, 2018) and to employ theories and methods spanning individual-level to system-level scales.

While some authors have suggested that the process of scientific knowledge exchange itself may be so context specific as to be unlikely to produce results with broad theoretical or applied relevance (Contandriopoulos et al., 2010), we are encouraged by emerging efforts to address these challenges at multiple scales. For example, a scientific review conducted by the European Commission’s Joint Research Centre (JRC) summarized research on the use of evidence in political decision-making from fields that study individual-level factors, such as psychology and neuroscience, and those that focus on higher-level units of analysis, like public policy, administration, and sociology (Mair et al., 2019).

In Western developed nations, much of the discussion on providing science and technology advice to legislatures has focused on assessing and improving institutional structures for technology assessment (Guston et al., 1997; Hennen and Nierling, 2015a; Vig and Paschen, 2000). The interest the research questions show in the design and implementation of advisory systems points to the need for a broader discourse, one that recognizes that many countries have no such institutions and that LSA necessarily includes a much wider array of formal and informal processes. Almost a quarter of the research questions (23%) asked about the design of systems, for example, how the design of new structures, processes, and systems can increase legislative capacity for science use. Of these, about a quarter asked specifically about developing nations or LMICs (6% of all questions).

Study limitations

Although our outreach to experts was relatively broad globally and our efforts are comparable to many other initiatives of this type (Sutherland et al., 2011), this study has several limitations: (1) our inability to definitively define a global expert community for LSA; (2) potential language and cultural barriers; (3) incomplete regional coverage (e.g., of Southeast Asia); (4) the likelihood of response bias during all three stages of the study; and (5) the influence of the instrument (an online survey as opposed to interviews) on the nature of the data collected. As we have noted, there are many types of expert roles within these advisory systems. The networks that connect them are not always well established, making it difficult to characterize and map the full population. The online surveys and workshop were conducted in English (though a few individuals submitted survey responses that were translated). While we anticipated that most experts would have a working knowledge of English because of their professional positions, we undoubtedly lost potential respondents by doing so. Further, those most interested in participating in the series of studies may be biased in ways that we cannot effectively parameterize.

Finally, we provided a definition of the LSA system to respondents at the start of the survey to provide them with a scope for their questions: addressing not just policymakers, but scientists, scientific information, and interactions between groups. This introduction may have primed respondents to think about questions that they might not have otherwise.

Conclusion

By collaborating with a nascent research-practice community for LSA in defining an international research agenda, we hope this project helps spur new initiatives globally on science and technology advice to inform legislatures. The linguistic and conceptual challenges encountered during the study, discussed in the previous section, highlight the need to develop a community of practitioners and scholars who share a common set of concepts and can relate those concepts to their local contexts. We believe that both the product of our study and the collaborative process that led to it are an important step in this direction. The results of the study create tangible objectives for this emergent field. Laying a cohesive foundation for future goals in LSA may help open new global channels of communication among scientists, legislatures, and the public that were previously unattainable. A shared set of research priorities can support future collaborative research that addresses the specifics of individual national systems within a common frame of reference, enabling mutual learning and the development and sharing of good practices. This could also provide the empirical basis for theoretical generalizations about the nature of scientific expertise and knowledge in legislative settings.

Data availability

The datasets generated for this study are available through OSF under the project files for “A collaboratively-derived international research agenda on legislative science advice,” located at osf.io/qu8t7.

Notes

1. By policymakers, we mean those in government who use science to make policy decisions, whether members of staff or elected representatives.

2. Sanni et al. (2016) call for the improvement of staff capacity in service to Nigerian lawmakers because of perceived deficits in expertise. In the United States, the question of whether serving as legislative staff counts as a “profession” with specific required expertise has been broadly called into question (Romzek and Utter, 1997). The average age of staff members is 31 (House) and 32 (Senate) (Legistorm, 2019), compared to almost 48 years for the federal civil service generally (OPM, 2017). House and Senate personal office and committee staff stay in their positions on average between 1.1 and 3.9 years, with longer durations for more senior positions (Petersen and Eckman, 2016a, 2016b, 2016c, 2016d), compared to an average of 14 years for the federal service (OPM, 2017).

3. The terms environment and natural resources are conceptually distinct. The environment is “the complex of physical, chemical, and biotic factors … that act upon an organism or an ecological community and ultimately determine its form and survival” while natural resources are “industrial materials and capacities (such as mineral deposits and water power) supplied by nature” (Merriam-Webster, n.d., n.d.).

4. Respondents could select more than one topic area.

5. Examples include: type of entity conducting the research; source of financing; demand or supply driven; organized by a legislative entity or another party; level of involvement of the legislative entity; public access to information; measure of stakeholder participation; political system; governmental level (international–municipal); institutionalized or project-based initiative.

References

AFIDEP (2019) AFIDEP. https://www.afidep.org/about-us/. Accessed 1 Apr 2019

Akerlof K (2018) Congress’s use of science: Considerations for science organizations in promoting the use of evidence in policy. American Association for the Advancement of Science: Washington, DC

Akerlof K, Lemos MC, Cloyd ET et al. (2018) Who isn’t biased?: perceived bias as a dimension of trust and credibility in communication of science with policymakers [Proceedings]. In: Iowa State University Summer Symposium on Science Communication, Ames, IA

Aldenderfer MS, Blashfield RK (1984) Cluster Analysis. Sage Publications: Thousand Oaks, CA

Baron de Montesquieu C-L de S (2011) The Spirit of Laws. Cosimo Classics: New York, NY

Bornmann L, Mutz R (2015) Growth rates of modern science: a bibliometric analysis based on the number of publications and cited references. J Assoc Inf Sci Technol 66(11):2215–2222. https://doi.org/10.1002/asi.23329

Bradbury J (2009) Executive. In: McMillan A and McLean I (eds) The Concise Oxford Dictionary of Politics. 4th ed. Oxford University Press: Oxford

Broughel J, Thierer AD (2019) Technological innovation and economic growth: A brief report on the evidence. Mercatus Center, George Mason University: Arlington, VA

Cairney P (2016) The Politics of Evidence-Based Policy Making. Springer Nature: London

Contandriopoulos D, Lemire M, Denis J-L et al. (2010) Knowledge exchange processes in organizations and policy arenas: a narrative systematic review of the literature. Milbank Q 88(4):444–483. https://doi.org/10.1111/j.1468-0009.2010.00608.x

Craft J, Howlett M (2013) The dual dynamics of policy advisory systems: the impact of externalization and politicization on policy advice. Policy Soc 32(3):187–197. https://doi.org/10.1016/j.polsoc.2013.07.001

Craft J, Wilder M (2017) Catching a second wave: context and compatibility in advisory system dynamics. Policy Stud J 45(1):215–239. https://doi.org/10.1111/psj.12133

Cvitanovic C, Cunningham R, Dowd A-M et al. (2017) Using social network analysis to monitor and assess the effectiveness of knowledge brokers at connecting scientists and decision-makers: an Australian case study. Environ Policy Gov 27(3):256–269. https://doi.org/10.1002/eet.1752

Decker M, Ladikas M (eds) (2004) Bridges between Science, Society and Policy: Technology Assessment-Methods and Impacts. Wissenschaftsethik und Technikfolgenbeurteilung; Bd. 22. Springer: Berlin

Desmarais BA, Hird JA (2014) Public policy’s bibliography: the use of research in US regulatory impact analyses. Regul Gov 8(4):497–510. https://doi.org/10.1111/rego.12041

Domínguez N (2018) The day that scientists entered Parliament and did not ask for money. El País, 7 November. Madrid, Spain. https://elpais.com/elpais/2018/11/06/ciencia/1541531335_450449.html. Accessed 3 Mar 2019

Environmental Audit Committee (2016a) Environmental impact of microplastics. Fourth Report of Session 2016–17 HC 179, 24 August. House of Commons: London. https://publications.parliament.uk/pa/cm201617/cmselect/cmenvaud/179/179.pdf

Environmental Audit Committee (2016b) Environmental impact of microplastics: Government Response to the Committee’s Fourth Report of Session 2016–2017. Fifth Special Report of Session 2016–17 HC 802, 14 November. House of Commons: London. https://publications.parliament.uk/pa/cm201617/cmselect/cmenvaud/802/802.pdf

European Commission (2019) Science meets Parliaments: what role for science in 21st century policy-making. https://ec.europa.eu/jrc/en/event/conference/science-meets-parliaments-2019

European Commission (2018) Public opinion. Available at: http://ec.europa.eu/commfrontoffice/publicopinion/index.cfm/Chart/getChart/themeKy/42/groupKy/208. Accessed 21 Feb 2019

Funtowicz SO, Ravetz JR (1993) Science for the post-normal age. Futures 25(7):739–755. https://doi.org/10.1016/0016-3287(93)90022-L

Gibbons M, Limoges C, Nowotny H et al. (1994) The New Production of Knowledge: The Dynamics of Science and Research in Contemporary Societies. Sage Publications: London, UK

Gluckman P (2016) Science advice to governments: an emerging dimension of science diplomacy. Sci Dipl 5(2):9

Gual Soler M, Robinson CR, Wang TC (2017) Connecting scientists to policy around the world: Landscape analysis of mechanisms around the world engaging scientists and engineers in policy. American Association for the Advancement of Science: Washington, DC

Guston DH, Jones M, Branscomb LM (1997) Technology assessment in the U.S. state legislatures. Technol Forecast Soc Change 54(2):233–250. https://doi.org/10.1016/S0040-1625(96)00146-1

Halligan J (1995) Policy advice and the public service. In: Peters BG, Savoie DJ (eds) Governance in a Changing Environment. Canadian Centre for Management Development. McGill-Queen’s Press, Montreal & Kingston. pp 138–172

Hastie RE, Kothari AR (2009) Tobacco control interest groups and their influence on parliamentary committees in Canada. Can J Public Health 100(5):370–375. https://doi.org/10.1007/BF03405273

Hennen L, Nierling L (2015a) A next wave of Technology Assessment? Barriers and opportunities for establishing TA in seven European countries. Sci Public Policy (SPP) 42(1):44–58

Hennen L, Nierling L (2015b) TA as an institutionalized practice-Recent national development and challenges. Parliaments and Civil Society in Technology Assessment (PACITA). http://www.pacitaproject.eu/wp-content/uploads/2015/03/Communication_package_def_2.pdf. Accessed 20 Feb 2019

Hirose J (2014) Enhancing our role as the “brains of the legislature”: Comprehensive and interdisciplinary research at the National Diet Library, Japan. In: IFLA Library and Research Services for Parliaments Section Preconference. https://www.ifla.org/files/assets/services-for-parliaments/preconference/2014/hirose_japan_paper.pdf

INASP (2016) Approaches to developing capacity for the use of evidence in policy making. INASP: Oxford, UK

Jones B (2019) Republicans and Democrats have grown further apart on what the nation’s top priorities should be. In: Pew Research Center. Available at: http://www.pewresearch.org/fact-tank/2019/02/05/republicans-and-democrats-have-grown-further-apart-on-what-the-nations-top-prioritiesshould-be/. Accessed 21 Feb 2019

Kenny C, Washbourne C-L, Tyler C et al. (2017) Legislative science advice in Europe: the case for international comparative research. Pal Commun 3:17030

Kenny C, Rose DC, Hobbs A et al. (2017) The role of research in the UK Parliament, Vol. 1. Houses of Parliament: London

Kornblihtt A (2018) Why I testified in the Argentina abortion debate. Nature 559:303. https://doi.org/10.1038/d41586-018-05746-1

Krippendorff K (2004) Content Analysis: An Introduction to Its Methodology, 2nd edn. Sage Publications: Thousand Oaks, CA

Kurzweil R (2004) The law of accelerating returns. In: Teuscher C (ed) Alan Turing: Life and Legacy of a Great Thinker. Springer: Berlin, Heidelberg, pp. 381–416. https://doi.org/10.1007/978-3-662-05642-4_16

Legistorm (2019) The 115th Congress by the numbers. https://www.legistorm.com/congress_by_numbers. Accessed 19 Mar 2019

Lemos MC, Kirchhoff CJ, Ramprasad V (2012) Narrowing the climate information usability gap. Nat Clim Change 2(11):789–794

Lemos MC, Lo Y-J, Kirchhoff C et al. (2014) Crop advisors as climate information brokers: building the capacity of US farmers to adapt to climate change. Clim Risk Manag 4–5:32–42. https://doi.org/10.1016/j.crm.2014.08.001

Lewandowsky S, Ecker UKH, Cook J (2017) Beyond misinformation: understanding and coping with the “post-truth” era. J Appl Res Mem Cognition 6(4):353–369. https://doi.org/10.1016/j.jarmac.2017.07.008

Lomas J (2007) The in-between world of knowledge brokering. BMJ 334(7585):129–132. https://doi.org/10.1136/bmj.39038.593380.AE

Mair D, Smillie L, La Placa G et al. (2019) Understanding our political nature: How to put knowledge and reason at the heart of political decision-making. European Commission, Joint Research Centre EUR 29783 EN. Publications Office of the European Union: Luxembourg

McLean I, McMillan A (2009) Legislature. In: The Concise Oxford Dictionary of Politics. Oxford University Press: Oxford. Available at: http://www.oxfordreference.com/view/10.1093/acref/9780199207800.001.0001/acref-9780199207800-e-732. Accessed 2 July 2019

National Research Council (2012) Using Science as Evidence in Public Policy. The National Academies Press: Washington, DC

Nentwich M (2016) Parliamentary Technology Assessment institutions and practices. A systematic comparison of 15 members of the EPTA network. Institut für Technikfolgen-Abschätzung. https://doi.org/10.1553/ITA-ms-16-02

Norusis M (2011) Cluster analysis. In: IBM SPSS Statistics 19 Statistical Procedures Companion. Addison Wesley: Upper Saddle River, pp 375–404

Oh CH, Rich RF (1996) Explaining use of information in public policymaking. Knowl Policy 9(1):3. https://doi.org/10.1007/BF02832231

OPECST (2014) Evaluation of the National Plan for Radioactive Materials and Waste Management, 2013–2015. Office Parlementaire d’Evaluation des Choix Scientifiques et Technologiques of the French Parliament (OPECST), Paris. http://www.senat.fr/fileadmin/Fichiers/Images/opecst/quatre_pages/4_pages_PNGMDR_ENG_nov14.pdf. Accessed 2 Apr 2019

OPM (2017) Profile of federal civilian non-postal employees. https://www.opm.gov/policy-data-oversight/data-analysis-documentation/federal-employment-reports/reports-publications/profile-of-federal-civilian-non-postal-employees/. Accessed 31 Mar 2019

Petersen RE, Eckman SJ (2016a) Staff tenure in selected positions in House committees, 2006–2016. 9 November. Congressional Research Service: Washington, DC. https://fas.org/sgp/crs/misc/R44683.pdf

Petersen RE, Eckman SJ (2016b) Staff tenure in selected positions in House member offices, 2006–2016. 9 November. Congressional Research Service: Washington, DC. https://fas.org/sgp/crs/misc/R44682.pdf

Petersen RE, Eckman SJ (2016c) Staff tenure in selected positions in Senate committees, 2006–2016. 9 November. Congressional Research Service: Washington, DC. https://fas.org/sgp/crs/misc/R44685.pdf

Petersen RE, Eckman SJ (2016d) Staff tenure in selected positions in Senators’ offices, 2006–2016. 9 November. Congressional Research Service: Washington, DC. https://fas.org/sgp/crs/misc/R44684.pdf

Romzek BS, Utter JA (1997) Congressional legislative staff: political professionals or clerks? Am J Political Sci 41(4):1251–1279

Rousseeuw PJ (1987) Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J Comput Appl Math 20:53–65. https://doi.org/10.1016/0377-0427(87)90125-7

Sanni M, Oluwatope O, Adeyeye A et al. (2016) Evaluation of the quality of science, technology and innovation advice available to lawmakers in Nigeria. Pal Commun 2:16095. https://doi.org/10.1057/palcomms.2016.95

Science and Technology Australia (2019) Science meets Parliament. https://scienceandtechnologyaustralia.org.au/what-we-do/science-meets-parliament/. Accessed 2 Apr 2019

Shugart MS (2006) Comparative executive-legislative relations. In: Rhodes RAW, Binder SA, Rockman BA (eds) The Oxford Handbook of Political Institutions. Oxford University Press: Oxford, pp 344–365

Stephenson W (1965) Perspectives in psychology: XXIII Definition of opinion, attitude and belief. Psychol Rec 15(2):281–288. https://doi.org/10.1007/BF03393596

Sutherland WJ, Fleishman E, Mascia MB et al. (2011) Methods for collaboratively identifying research priorities and emerging issues in science and policy. Methods Ecol Evolution 2(3):238–247. https://doi.org/10.1111/j.2041-210X.2010.00083.x

Sutherland WJ, Bellingan L, Bellingham JR et al. (2012) A collaboratively-derived science-policy research agenda. PLOS ONE 7(3):e31824. https://doi.org/10.1371/journal.pone.0031824

Tyler C (2013) Scientific advice in Parliament. In: Doubleday R, Wilsdon J (eds) Future Directions for Scientific Advice in Whitehall. University of Cambridge’s Centre for Science and Policy; Science Policy Research Unit (SPRU) and ESRC STEPS Centre at the University of Sussex; Alliance for Useful Evidence; Institute for Government; Sciencewise-ERC: London

United Nations Statistics Division (2019) Methodology: Standard country or area codes for statistical use (M49). https://unstats.un.org/unsd/methodology/m49/. Accessed 28 July 2019

van Hilten LG (2018) How does your research influence legislation? Text mining may reveal the answer. https://www.elsevier.com/connect/how-does-your-research-influence-legislation-text-mining-may-reveal-the-answer. Accessed 1 Apr 2019

Vig NJ, Paschen H (2000) Parliaments and technology: the development of technology assessment in Europe. The State University of New York Press: Albany, NY

Watts S, Stenner P (2012) Doing Q Methodological Research: Theory, Method and Interpretation. Sage Publications: London

Weiss CH (1979) The many meanings of research utilization. Public Adm Rev 39(5):426–431. https://doi.org/10.2307/3109916

Whiteman D (1985) The fate of policy analysis in congressional decision making: three types of use in committees. West Political Q 38(2):294–311

Acknowledgements

This material is based upon work supported by the National Science Foundation under Grant No. 1842117.

Author information

Contributions

All contributing authors confirm they satisfy the journal’s criteria for authorship.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Akerlof, K., Tyler, C., Foxen, S.E. et al. A collaboratively derived international research agenda on legislative science advice. Palgrave Commun 5, 108 (2019). https://doi.org/10.1057/s41599-019-0318-6

DOI: https://doi.org/10.1057/s41599-019-0318-6