Abstract

The European Commission’s Joint Research Centre (JRC) employs over 2000 scientists and seeks to maximise the value and impact of research in the EU policy process. To that end, its Knowledge management for policy (KMP) initiative synthesised the insights of a large amount of interdisciplinary work on the ‘evidence-policy interface’ to promote a new skills and training agenda. It developed this training initially for Commission staff, but many of its insights are relevant to organisations which try to combine research, policymaking, management, and communication skills to improve the value and use of research in policy. We recommend that such organisations should develop teams of researchers, policymakers, and ‘knowledge brokers’ to produce eight key practices: (1) research synthesis, to generate ‘state of the art’ knowledge on a policy problem; (2) management of expert communities, to maximise collaboration; (3) understanding policymaking, to know when and how to present evidence; (4) interpersonal skills, to focus on relationships and interaction; (5) engagement, to include citizens and stakeholders; (6) effective communication of knowledge; (7) monitoring and evaluation, to identify the impact of evidence on policy; and (8) policy advice, to know how to present knowledge effectively and ethically. No one possesses all skills relevant to all these practices. Rather, we recommend that organisations at the evidence-policy interface produce teams of people with different backgrounds, perspectives, and complementary skills.

Introduction: why we need knowledge management for policy

The Knowledge management for policy (KMP) initiative was created by the European Commission’s Joint Research Centre (JRC), which seeks to maximise the value and impact of knowledge in the EU policy process. The JRC is at the centre of the ‘science-policy interface’, embedded inside the Commission, and drawing on over 2000 research staff to produce knowledge supporting most policy fields. Yet, it faces the same problem experienced by academic researchers (Oliver et al. 2014) and explored in a related series in this journal (footnote 1): there is often a major gap between the supply of, and demand for, policy-relevant research. This problem is not solved simply by employing researchers and policymakers in the same organisation or locating them in the same building. Rather, the gap relates primarily to key differences in the practices, expectations, incentives, language, and rules of researchers and policymakers, which is sometimes described as the ‘two communities’ problem (Gaudreau and Saner, 2014; Cvitanovic et al. 2015; Hickey et al. 2013; Cairney, 2016a, pp. 89–93).

Consequently, from 2015, the JRC developed the KMP Professionalisation Programme to address this problem and foster ‘evidence informed policymaking’. It follows Sarkki et al.'s (2014) description of this aim, in which the most credible, relevant, legitimate and robust facts are provided and understood in good time to be taken into account by policymakers. While some articles in this series focus on how individual researchers can engage effectively in the policy process (Cairney and Kwiatkowski 2017), KMP identifies the ways in which organisations containing researchers, policymakers, communicators, and knowledge brokers can adopt pragmatic ways to connect the demand and supply of policy-relevant knowledge.

To take forward this initiative, a JRC team synthesised the insights of a large amount of interdisciplinary work on the ‘evidence-policy interface’–drawing on published research and extensive discussions with experts–to promote a new skills and training agenda. It developed this training initially for Commission staff, but many of its insights are relevant to organisations which try to combine research, policymaking, management, and communication skills to improve the value and use of research in policy.

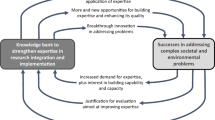

Therefore, we explain the KMP agenda as a particular case, to acknowledge that its aims are often specific to one context. However, we also demonstrate its wider relevance to organisations seeking to combine research, policymaking, and knowledge brokerage. First, we describe the methodological approach of the JRC-led team, which focused on combining an interdisciplinary literature review with expert feedback. Rather than proposing a new scientific protocol for evidence review, we describe the ways in which organisations, such as the JRC, try to combine academic rigour with a pragmatic research design. It should be flexible enough to (a) include academic and practitioner voices and (b) help produce a plan that is technically and politically feasible. Second, we show the eight challenges identified from this work (Table 1), and how they map onto eight skills (Fig. 1). Third, we discuss the potentially wider applicability and current limitations of the JRC’s KMP agenda.

Although clearly a work in progress, the JRC’s work already produces a profound moral for researchers interested in the rising importance of ‘impact’ (Boswell and Smith, 2017): only one of the eight skills relates to producing research, and the skill is to synthesise rather than produce new knowledge. This moral is in direct contrast to the most powerful driver for researchers: to produce new state of the art research in academic journals. High impact journals do not necessarily produce high impact in policy.

The context for the JRC’s methodological approach

The JRC’s approach is distinctive, to reflect its:

- need to maintain an iterative research design, flexible enough to incorporate academic and policymaker input, and contribute to the JRC Strategy 2030
- unusually high ‘convening power’, and ability to draw on the expertise of the most experienced people at the science-policy interface.

A method to produce an ambitious Learning and Development (L&D) strategy of a large organisation is not the same as the method to produce a narrowly focused piece of scientific research. There are too many actors and processes to contain within the parameters of, for example, a single systematic review with one question. Instead, the JRC brought together insights from many already-published reviews. It analysed a large and disparate literature on policymaking, evidence synthesis, psychology, science advice, communication, and citizen and stakeholder capacity building programmes, to identify the key skills for successful evidence-informed policymaking. It shaped the review by combining insights from multiple sources: (a) existing systematic reviews (e.g., Oliver et al. 2014) and narrative reviews (e.g., Cairney, 2016a; Parkhurst, 2017), with (b) expert and stakeholder feedback, combining semi-structured interviews, stakeholder consultation, academic-practitioner workshops, and online networks for knowledge exchange. We describe the key aspects of this process below, with more details of process and participants contained in Annex A and published documents (JRC 2017a, 2017b).

To help ‘operationalise’ the literature, the JRC facilitated workshops with leading academic experts, science advisors and organisations, and policymakers. It was unusually able to bring together global expertise, for example to encourage the authors of systematic reviews to work with experienced science advisors, policymakers, and the JRC to turn a wide range of perspectives into a focused L&D strategy.

For example, in a ‘brainstorming’ session with senior JRC managers, it became clear that capacity building was needed to equip staff to implement its new strategy. The JRC worked with the International Network for Government Science Advice (INGSA) to identify the skills needed by practitioners at the science-policy interface, and conducted stakeholder consultations with practitioners and scholars from a diverse range of disciplines. The JRC then convened 40 leading experts–from EU institutions and member states, and New Zealand and Canada–for a participatory workshop. The aim was to reach a consensus on essential skills and to brainstorm on best practice in training. Expert feedback was gathered through world cafés and participatory workshops, combined with plenary sessions.

Consequently, the JRC review contained two mutually-reinforcing processes:

1. Literature search and review: Identifying articles through multiple search methods: manual searching of key journals and online media; electronic searching of databases including the use of free-text, index terms and named author; reference scanning; citation tracking; and snowballing (restricted to English language). This search included peer reviewed journal articles and the ‘grey literature’ more likely to be produced or read by practitioners and policymakers (Davidson, 2017).
2. Expert elicitation and expert reviews of our findings: Three experts–academic, practitioner, and knowledge broker–and one research organisation provided written comments and oral comments during semi-structured face-to-face interviews. One further organisation representing academics at an international level provided written feedback. The JRC sought feedback on the skills framework at three 2017 conferences–two contained primarily European academics, and one global policymakers–convened to discuss the science-policy interface.

These methods helped the JRC reach a saturation point at which no expert or study identified new practices or skills. The combination of approaches is crucial to practical ‘sense making’, in which we synthesise large bodies of knowledge to make it equally relevant to researchers and policymakers. It helps us decide which skills are suited to workshop training (e.g., research synthesis), routine organisational management (e.g., interpersonal skills), and longer term planning (e.g., evaluation).

In that context, rather than provide a comprehensive account of all the literature the JRC reviewed–much of which is covered in recent texts (e.g., Cairney, 2016a; Parkhurst, 2017) and this Palgrave Communications series–we show how bodies such as the JRC have (a) focused on key texts based on information searches and advice from their networks, (b) made sense of texts in an organisational context, and (c) turned their insights into an achievable KMP skills agenda.

Eight Skills for Knowledge Management for Policy

Synthesising research

Skill 1: employ methods to make better sense of the wealth of knowledge available on a given topic, particularly when driven by a research question ‘co-produced’ with policymakers.

Policymakers seek reliable ‘shortcuts’ to consider enough high quality evidence to make good decisions quickly (Cairney and Kwiatkowski, 2017). The sheer amount of available evidence is beyond the capacity of the human mind without the assistance of research synthesis. ‘Synthesis’ describes many methods–including systematic review and meta-analysis–to make better sense of knowledge. ‘Evidence-gap maps’ are particularly useful to bring together supply and demand. Their aim is to make sense of scientific debate or an enormous volume of scientific literature of variable quality, employing methods to filter information so that policymakers have access to robust scientific evidence. Synthesis helps identify, critically appraise, and summarise the balance of evidence on a policy problem from multiple sources (Davies, 2006).

In government, the demand for synthesis is growing to reflect the growth in primary research evidence. It has not always been rewarded by professional incentives (Rayleigh, 1885, p. 20; Chalmers et al. 2002), but systematic review is now a key part of scientific publication in fields such as health and education (Oliver and Pearce, 2017). Wilson’s (1998, p. 294) appeal sums up its role:

"we are drowning in information, while starving for wisdom. The world henceforth will be run by synthesizers, people able to put together the right information at the right time, think critically about it, and make important choices wisely."

Increasing the supply of synthesised research is only the first step. We also need to recognise the demand for evidence. There is a poor fit between researcher-led syntheses and policymaker needs (with exceptions, such as regulated markets which depend upon risk analysis models). Researchers are often not conscious of the synthesis needed for policymaking, and policymakers do not always recognise the potential contribution that synthesis can make (Fox and Bero 2014; Greenhalgh and Malterud, 2016; Davies 2006).

Therefore, the second step is to increase policy relevance by making sure that the research question is responsive to the needs of policymakers. We emphasise the need to understand how policymakers define an issue as a policy problem and therefore phrase a research question when making difficult trade-offs between competing interests and values. Possible questions can refer to:

- Goals: Has policy x achieved its goals?
- Comparative effectiveness: Is policy x likely to achieve the required outcomes more effectively than policy y?
- Implementation: What is the most effective way to implement and what are the barriers?
- Economic and strategic appraisal: What are the costs and benefits of policy x compared with policy y?
- Policy impact: What is the likely impact of policy x on the environment, business, voluntary sector, bureaucracy, social equity?
- Ethics and trade-offs: Is it right to implement policy x if, by doing so, it means not implementing policy y?
- Strategic audit and benchmarking: How is country x performing in terms of social and economic change compared to others?

Likewise, by gaining a better understanding of different research tools, policymakers will become better equipped to procure the research they need. They will understand why knowledge will never accumulate to the point that it provides ‘proof’ of policy effectiveness or removes the need for deliberation and political choice.

Managing expert communities

Skill 2: Communities of experts, sharing a common language or understanding, are fundamental to applying knowledge to complex problems. Effective teams develop facilitation skills to reduce disciplinary and policy divides.

The JRC draws on psychological insights to promote collaboration across a ‘community of knowledge’ to harness the ‘wisdom of crowds’ (Sloman and Fernbach, 2017). This aim comes partly from the idea that the mind is a social entity. To share and accumulate knowledge, people must be capable of sharing objectives and finding ways to establish common ground (Vygotsky, ; Tomasello, 1999; Sloman and Fernbach, 2017). It also reflects growing recognition that successful communities need to integrate disciplines to address complex policy challenges (Hirsch and Luzadis, 2013). The enormity, distribution, and diversity of accessible information requires new ways of working to focus on "wicked" problems that defy simple definitions and solutions (Rittel and Webber, 1973; Newman and Head, 2017). Communities of practice enable expertise to be transferred across organisations and disciplines, encouraging the co-creation of effective, interdisciplinary, and inter-policy solutions.

Achieving the wisdom of crowds requires community management skills. Crowdsourcing is dependent on convening power, and communities cannot operate effectively without well managed mapping strategies to combine the expertise of individuals and groups. The aim is to develop interdisciplinary and inter-policy networks committed to adapting expertise to the needs and reality of policymaking, making closer science-policy ties the norm. Success depends on generating a common sense of purpose, followed by a greater sense of trust and connection between members. As members share ideas and experience, they develop a common way of doing things which can lead to a shared understanding of the problem.

Communities of practice provide an important spark for innovation, through establishing a forum for researchers, policymakers, and other stakeholders to co-create research questions and answers. Their success requires skills such as ‘participatory leadership’, designed to include all community members when identifying policy objectives and developing responses. The participative style relies primarily on facilitation skills, such as Appreciative Inquiry (Whitney, 2003), World Café (Brown et al. 2005), Open Space Technology (Owen, 1997) and Ritual Dissent (Cotton, 2016).

Understanding policy and science

Skill 3: seek to better understand the policy process, which can never be as simple as a ‘policy cycle’ with linear stages. Effective teams adapt their strategies to a ‘messier’ context.

The ‘policy cycle’, as a series of linear policymaking ‘stages’, provides a simple way to project the policy process to the public (Cairney, 2012, 2015; Cairney et al. 2016). It suggests that we elect policymakers then: identify their aims, identify policies to achieve those aims, select a policy measure, ensure that the selection is legitimised by the population or legislature, assign resources, implement, evaluate, and consider whether or not to continue policy. More simply, policymakers aided by expert policy analysts make and legitimise choices, skilful public servants carry them out, and policy analysts assess the results using evidence. In each case, it is clear how to present policy-relevant evidence.

In the real world, academic studies–and testimonials by policy analysts and practitioners such as science advisors and senior policymakers–describe a far ‘messier’ process (John, 2012; Weible, 2017; Cairney, 2017a; Gluckman, 2016). First, policymakers do not simply respond to facts, partly because there are too many to consider, and not all are helpful to their aims. Instead, they use cognitive shortcuts to manage information (Cairney and Kwiatkowski, 2017). Second, they operate in a complex policy environment containing: many policymakers and influencers in many levels and types of government; each with their own rules and norms guiding collective behaviour and influencing the ways in which they understand policy problems and prioritise solutions; and, each responding to policy conditions–including demography, mass behaviour, and economic factors–often outside their control (Cairney and Weible, 2017).

The nature of policymaking has profound implications for researchers seeking to maximise the impact of their evidence. First, it magnifies the ‘two communities’ problem. In this (simplified) scenario, science and politics have fundamentally different:

- Goals: The aim of science is to know, and the goal of politics is to act.
- Rhythms: Policymakers often require quick answers. Research inquiry means spending a longer time addressing questions in depth.
- Expectations of data: Policymakers might seek to consolidate data from a variety of disciplines to answer a policy question with certainty, often because their critics will exploit uncertainty to oppose policy. Academic research and dissemination is organised around disciplinary boundaries, and scientists are more comfortable with uncertainty when free to focus on long term research.
- Expectations of each other: Politics is a mystery for many scientists, and the norms and languages of science seem obscure to many politicians.

These problems would be acute even if the policy process were easy to understand. In reality, one must overcome the two communities problem and decipher the many rules of policymaking. A general strategy is to engage for the long term to learn the ‘rules of the game’, understand how best to ‘frame’ the implications of evidence, build up trust with policymakers through personal interaction and becoming a reliable source of information, and form coalitions with people who share your outlook (Cairney et al. 2016; Weible et al. 2012; Stoker, 2010, pp. 55–57).

More collaborative work with policymakers requires contextual awareness. Researchers need to understand the political context and its drivers: identify and understand the target audience, including the policymaking organisations and individual stakeholders who are influential on the issue; and, understand their motives and how they respond to their policy environment. Although effective policymakers anticipate what evidence will be needed in the future, effective researchers do not wait for such demand for evidence to become routine and predictable.

Interpersonal skills

Skill 4: Effective actors are able to interact well with others in teams to help solve problems.

Successful policy is built on interaction: policy-relevant knowledge emerges from myriad interactions between scientists, policymakers, and stakeholders. Skills for social interaction–sending and receiving verbal and non-verbal cues–are central to policymaking success (Klein et al. 2006, p. 79; Reiss, 2015). They allow us to get along with peers, exchange ideas, information and skills, establish mutual respect, and encourage input from multiple disciplines and professions (Larrick, 2016). These skills are easier to describe in the abstract than to apply in practice, but we can identify key categories:

1. “Emotional intelligence”: The personality traits, social graces, personal habits, friendliness, and optimism that characterise relationships.
2. Collaboration and team-building: Scientists and policymakers who are able to navigate complex interpersonal environments are held in high esteem. Boundary-spanning organisations are characterised by a dynamic working environment full of individuals with different personalities and experiences. Self-directed collaborative working environments are replacing many traditional hierarchical structures, which makes it critical to have actors able to communicate and collaborate effectively and show new forms of leadership (Bedwell et al. 2014).
3. The ability to manoeuvre: Scientists and policymakers are constrained by their environments, but can also make decisions to change them (Damon and Lewis, 2015; Chabal, 2003). Effective actors understand how and why people make decisions, to identify which rules are structures and which rules can be changed.
4. Adaptability: Interpersonal skills are situation specific; what may be appropriate in one situation is inappropriate in another (Klein et al. 2006).

Such skills seem difficult to teach, but we can combine the primary aim of training workshops, to raise awareness among scientists and stakeholders about how their behaviour may be perceived and how it influences their interaction with others, with the initiatives of individual organisations, such as ‘360 degrees’ reviews of managers to help them reflect on their interaction with colleagues.

Engaging with citizens and stakeholders

Skill 5: Well-planned engagement with stakeholders, including citizens, can help combine scientific expertise with other types of knowledge to increase their relevance and impact.

Bodies such as the European Commission identify stakeholder engagement as a key part of their processes (Nascimento et al. 2014; European Commission, 2015, 2017). However, engagement comes in many forms, with the potential for confusion, tokenism, and ineffective participation (Cook et al. 2013a; Cairney and Oliver, 2017; Cairney, 2016a: pp. 100–102). Skilful and well planned engagement requires coordinators to address three questions.

1. What is the level, type, and aim of engagement?

Engagement comes in many forms, responding to different objectives, from consultative exercises at local level to cross-European or global deliberative exercises. Examples include public stakeholder consultations and stakeholder participation in advisory committees. Each can help obtain and integrate stakeholder voices into the policymaking process. Stakeholders may support or oppose the policy proposals, and have different degrees of influence within their community. Citizens can be involved directly or represented indirectly through professionalised bodies. Overall, there is high potential to appear tokenistic if we do not identify why we choose a particular type of engagement with clear aims and processes.

2. Can anyone be involved automatically, or do we first need to build capacity?

The diffusion of "low cost" and "low tech" media appears to allow citizens to engage with each other and with policymaking institutions like never before. Yet such advances do not guarantee that citizens are actually engaged or their voices heard. There are inequalities of engagement and impact relating to social status, access to technology, and the confidence and ability to engage (Matthews and Hastings, 2013). Consequently, key initiatives, such as the Better Regulation Agenda, encourage capacity building, (a) of the citizens and stakeholders they seek to engage, and (b) of researchers and policymakers to help them manage participatory approaches. Each type of engagement–policy deliberation, knowledge co-production, citizen science, informal one-on-ones–requires its own skills.

3. What amount of mutual learning should we seek?

One can engage on a notional spectrum, from a sincere aim to learn from citizens, to an instrumental aim to ‘transmit’ knowledge to ensure that citizens agree with your way of thinking. The latter relates to an outmoded ‘deficit model’, which attributes disagreement between citizens and scientists to the former being less knowledgeable. In contrast, mutual learning refers to the exchange of information and increased familiarity with a breadth of perspectives (European Commission, 2015). Engagement may help identify areas of agreement and disagreement and provide ways to understand the drivers behind differences. It may help to articulate the values of the most affected communities and align policy recommendations with their expectations (Lemke and Harris-Way, 2015). Further, citizens have recently become more involved in the research effort itself through do-it-yourself science and other bottom-up approaches (Nascimento et al. 2014; Martin 2017).

Modern policymaking institutions foster civic engagement skills and empowerment, increase awareness of the cultural relevance of science, and recognise the importance of multiple perspectives and domains of knowledge. They treat engagement as an ethical issue, pursuing solidarity through collective enterprise to produce values that are collectively decided upon and align policymaking with societal needs (European Commission, 2015). Skills for citizen and stakeholder engagement are crucial to policy legitimacy. Engagement is a policy aim as well as a process, prompting open, reflective, and accessible discussion about desirable futures.

In that context, the JRC aims to help researchers and policymakers develop skills, including:

- Policy deliberation: Focus on long-range planning perspectives, continuous public consultation, and institutional self-reflection and course correction.
- Knowledge co-production: Focus on intentional collaborations in which citizens engage in the research process to generate new knowledge.
- Citizen science: Engage citizens in data gathering to incorporate multiple types of knowledge.
- Informality: Encourage less structured one-on-one interactions in daily life between researchers and publics.

Communicating scientific knowledge

Skill 6: Research impact requires effective communication skills, from content-related tools like infographic design and data visualisation, to listening and understanding your audience.

Poor scientific communication is a key feature of the ‘barriers’ between evidence and policy (Oliver et al. 2014; Stone et al. 2001). Research results are often behind ‘paywalls’ or ‘coded’ for the academic community in jargon that excludes policymakers (Bastow et al. 2013). If policymakers do not have access to research, they are not able to make evidence-informed decisions. Policymakers use the best available information. If scientists want policymakers to choose the best evidence, they need to communicate more clearly, strategically, and frequently with policymakers (Warira et al. 2017).

Evidence-based ideas have the best chance of spreading if they are expressed well. Effective researchers recognise that the evidence does not speak for itself and that a ‘deficit model’ approach–transmit evidence from experts to non-specialists to change their understanding and perception of a problem–is ineffective (Hart and Nisbet, 2012). Rather, to communicate well is to know your audience (Cairney and Kwiatkowski, 2017).

‘Communication’ encompasses skills to share and receive information, in written and oral form, via digital and physical interaction, using techniques like infographic design and data visualisation, succinct writing, blogs, public speaking and social media engagement (Estrada and David, 2015; Wilcox, 2012). Some go further than a focus on succinct and visually appealing messages, to identify the role of ‘storytelling’ as a potent device (see skill 8, and Davidson, 2017; Jones and Crow, 2017).

Communication skills help researchers connect with policymakers, stakeholders and citizens, as well as they connect with their peers, by reducing the cognitive load required to understand the message, and/or engaging the audience emotionally to increase interest and the memorability of the evidence (Cairney and Kwiatkowski, 2017). The adoption of these skills requires culture change. Fundamental communication questions need to be addressed from the outset of scientific projects, including the "so what?" question, considerations of "how will the results of this work affect your next door neighbour?", and "how will people understand this issue?". The packaging of the project’s results needs to be considered: is a scientific report needed, or would a user-friendly interactive infographic be a more efficient means of "translating" and disseminating the results? Techniques such as Pecha Kucha and the 3-minute presentation should become commonplace.

Most incentives in science push researchers to communicate primarily to their peers, but many actors exhibit the intrinsic motivation to do more (Cairney and Weible, 2017). Effective communicators lead by example, showing how to do it effectively and demonstrating the payoffs to scientists and their audiences (Weible and Cairney, 2018). To do so, they must be supported by policymaking organisations providing new skills and incentives to communicate.

Monitoring and evaluation

Skill 7: Monitoring and evaluating the impact of research evidence on policymaking helps improve the influence of evidence on policymaking.

Senior policymakers in the European Commission have made a commitment to use evidence in policy, such as by developing the better regulation toolbox and establishing the Regulatory Scrutiny Board to make sure the better regulation agenda is put into practice (EU Commission, 2017). Frans Timmermans, First Vice President of the European Commission, stated in 2017 at the Regulatory Scrutiny Board Conference: "we have worked hard to embed better regulation into the DNA of the European Commission, installing a priority driven, evidence-based, disciplined, transparent and above all an inclusive policy process". Professor Vladimír Šucha, Director General of the JRC, has described the EU’s high commitment to evidence-use for evaluation as a way to provide legitimacy for its work (see Cairney, 2017b). Such statements give the JRC a mandate to support EU policies with evidence. Its raison d'être is to bring evidence into policymaking and for evidence to produce an impact on policy.

However, dilemmas and obstacles remain. First, measurement should involve attempts to separate the effect of research on policy inputs (such as position statements), outputs (such as regulatory change), and outcomes (such as improved air quality or safer public transport) (Renkow and Byerlee, 2014; Hazell and Slade, 2014; Gaunand et al. 2017; Almeida and Báscolo, 2006). This analytical differentiation is vital to measurement. It requires training to help actors embed an evaluation culture in an organisation.

Second, it is easier to monitor and evaluate the impact of knowledge on inputs and outputs than outcomes (Cohen et al. 2015), which can produce an over-reliance on short-term impacts. It can encourage attempts to ‘game’ systems to produce too-heroic stories of the impact of key individuals (Dunlop, 2018).

There are nascent metrics to gauge the ways in which, for example, government reports cite academic research, which could be supplemented by qualitative case studies of evidence use among policymakers. However, there remains a tendency for studies to define impact imprecisely (Alla et al. 2017). Therefore, at this moment, we describe evidence impact evaluation as a key skill for researchers seeking to monitor their effectiveness, but we recognise that each research organisation needs to develop its own methodology, which can be based on principles that must be adapted to specific objectives and institutional contexts.

Advising policymakers

Skill 8: Effective knowledge brokerage goes beyond simply communicating research evidence, towards identifying options, helping policymakers understand the likely impact of choices, and providing policy advice from a scientific viewpoint.

A key feature of the JRC annual conferences (Cairney, 2016b; 2017b) is the desire by many scientists and scientific advisors to remain as ‘honest brokers’ rather than ‘issue advocates’; they draw on Pielke’s (2007) distinction between (a) explaining the evidence-informed options and (b) expressing a preference for one.

Such distinctions are critiqued by scholars in science and technology studies, with reference to the argument that: (a) a broker/advocate distinction relies on an unsustainable distinction between facts and values, or (b) an appeal to objectivity is a political project to privilege science in policy and use it as a vehicle for specific values (Jasanoff, 2008; Jasanoff and Simmet, 2017; Douglas, 2009). We communicate by telling stories, and the stories scientists tell about their objectivity are no exception (Davidson, 2017; Jones and Crow, 2017). Further, fact/value distinctions have been overtaken by an era of ‘post-normal science’ in which we accept that scientific knowledge of urgent and complex problems is limited and ineffective if not combined with judgement and values (Funtowicz and Ravetz, 2003).

The idea of an ‘honest broker’ is often at odds with the demand by policymakers for evidence-informed recommendations or, at least, the demand that knowledge brokers consider the political context in which they are presenting evidence. Further, to present oneself as an objective and aloof researcher is to be of limited use to policymakers.

For example, the new vision of the JRC–"to play a central role in creating, managing and making sense of collective scientific knowledge for better EU policies"–is deliberately normative, and places more demands on researchers to explain the policy relevance of their evidence. It states that the JRC will complement its research work by 'managing' knowledge and communicating it to policymakers in a systematic and digestible manner from a trusted source. This is important support for policymakers, given the enormous quantity of scientific data, information, and knowledge they have to process, some of which has not been quality checked or has been published by organisations for their own political purposes. In these circumstances, to frame evidence for policymakers, we need to apply a political lens to understand the message and the motive of the messenger, which requires a sensitivity that does not come naturally to the ‘objective’ scientist.

However, we also need to develop skills that scientists are willing to use. For many researchers, an appeal to objectivity–or, at least, the systematic means to reduce the role of cognitive bias on evidence production–is part of a scientific identity. A too-strong call for persuasive policy advice may cause many experts to retreat from politics. They may feel that the use of persuasion in policy advocacy is crossing an ethical line between honest brokerage and manipulation. Many scientists do not accept the argument that an appeal to scientific objectivity is a way to exercise political power (Jasanoff and Simmet, 2017). Nor would all scientists accept the argument by Cairney and Weible (2017, p. 5) that ‘policy analysis is inherently political, not an objective relay of the evidence … The choice is not whether or not to focus on skilful persuasion which appeals to emotion, but how to do it most effectively while adhering to key ethical principles’.

Consequently, the development of policy advice skills must be pragmatic, to incorporate a scientist identity and commitment to ethical principles that emphasise impartial policy advice. We accept that our encouragement of scientists to become better-trained storytellers will be a hard sell unless we link this training strongly to a meaningful discussion of the ethics of policy advice. Indeed, the pursuit of policy relevant scientific advice is quite modest: it is about making sure that scientists are ‘in the room’ to ensure that inferences are correctly drawn from the evidence and that politicians are clear when they are moving beyond the evidence. The skills of scientists in explaining and applying scientific methods, and articulating a ‘scientific way of thinking’ (if such a general way of thinking exists), help ensure that decisions are well-founded, and that policymakers can consider how they combine facts and values to make decisions.

Discussion and reflection on current limitations

The JRC has sought to lead or coordinate discussions about the emergence of knowledge management teams. Further, the development of these skills could help make JRC staff more effective knowledge brokers. However, we have only begun to understand how to measure effectively the impact of knowledge and science on policymaking. Future success requires three main factors.

First, continuous reflection on the KMP agenda. For example, we can already identify overlaps in skills. We discuss the ‘co-creation’ or ‘co-production’ of knowledge as part of synthesising research, but it is also relevant to understanding policy and advising policymakers. Further, good storytelling can enhance communicating scientific knowledge and advising policymakers.

However, we do not yet know the extent to which the KMP agenda will involve major trade-offs. The science-policy interface contains many actors with profoundly different ideas about how we should produce and use science. Perhaps the most extreme example is the ontological and epistemological distance between the actors who favour a hierarchy of evidence privileging randomised controlled trials during systematic review, and those who encourage a diversity of perspectives during the ‘co-production’ of knowledge between researchers, practitioners, and service users. Between these extremes are many models which present contrasting ideas on how to ensure the production and use of evidence (Cairney and Oliver, 2017).

Other long-term effects may be more difficult to anticipate, such as the potential of new knowledge management organisations to exacerbate gender divides in this field, for example between well-represented male scientists aged 50+ and early career female knowledge brokers.

Second, monitoring and evaluating current initiatives. The JRC is beginning its implementation phase, providing training to researchers and policymakers from the European Commission. It is working with policymakers and researchers to establish a baseline for assessing changes in the supply and demand of evidence for policy to which the KMP agenda may have contributed. Likewise, it is working with learning and development experts to (1) strengthen the pedagogical aspects of the training material; and (2) monitor and evaluate the effectiveness of this aspect of the skills framework. It is also piloting KMP in real-life settings, such as through existing Horizon 2020 funded projects and in influencing the design of the call for FP9-funded projects. Part of this piloting involves identifying how skills develop in new organisations, then adapting the skills framework based on feedback. Still, the project’s main limitation concerns uncertainty about how the KMP agenda will work, and how to evaluate its progress systematically.

Third, continuous learning from international experience. At this stage, we do not know the extent to which the JRC is setting an agenda which many will follow, or if its experience will prove to be unusual, providing few generalisable lessons. The JRC will continue to discuss these issues, including lessons learnt during the implementation phase, in international fora, and all training material will be available online, free of charge. Finally, the JRC is launching a new research project, Enlightenment 2.0 (footnote 2), with the objective of better understanding and explaining the drivers that influence policy decisions and political discourse. The findings of this research project will also be made available to all interested parties.

Conclusion

KMP requires eight skills which can help address the evidence-policy gap. If we initially think of these skills in the abstract, as key elements of an ideal-type organisation, we can describe how they contribute to an overall vision for effective KMP: policymakers justify action with reference to the best available evidence, high scientific consensus, and citizen and stakeholder ‘ownership’; and, researchers earn respect, build effective networks, tailor evidence to key audiences, provide evidence-informed policy advice (without simply becoming advocates for their own cause), and learn from their success. Table 2 highlights the proposed impact of each skill in an ideal-type organisation, compared with less effective practices that provide a cautionary tale.

In recommending eight skills, we argue that ‘pure scientists’ and ‘professional politicians’ cannot do this job alone. Scientists need ‘knowledge brokers’ and science advisors with the skills to increase policymakers’ demand for evidence. Policymakers need help to understand and explain the evidence and its implications. Brokers are essential: scientists with a feel for policy, and policymakers who understand how to manage science and scientists.

Many scientists are in a great position to move into this new profession, providing a more robust form of knowledge-based consultancy, built on a crucial understanding of scientific methods and evidence assessment. However, the space between science and policy is hard to navigate, and the training we identify is more like a career choice than a quick fix. Science and policy worlds are interconnected, but not always compatible. Therefore, knowledge managers need to professionalise, to develop new skills, and work in teams with a comprehensive set of skills unlikely to be held by one person.

Data availability

Data sharing is not applicable to this paper as no further datasets (i.e., datasets not already in the public record) were analysed or generated.

Change history

18 September 2018

The affiliation for authors Lene Topp, David Mair and Laura Smillie was incorrectly stated as “Joint Research Centre, European Commission, Retieseweg 111, 2440, Geel, Belgium”. This has been corrected to “Joint Research Centre, European Commission, Brussels, Belgium”.

In Table 2, in the row labeled “Advising policymakers” and the column labeled “The cautionary tale”, the cell read “Researchers become marginalised and rarely trusted in day to day politics. Policymakers receive evidence but remain sure about its relevance and risk of inaction”. This has been corrected to “Researchers become marginalised and rarely trusted in day to day politics. Policymakers receive evidence but remain unsure about its relevance and risk of inaction”.

This has been corrected in both the HTML and PDF versions of this paper.

Notes

The politics of evidence-based policymaking: maximising the use of evidence in policy. https://www.nature.com/collections/xhxktjgpjc

References

Alla K, Hall WD, Whiteford HA, Head BW, Meurk CS (2017) How do we define the policy impact of public health research? A systematic review. Health Res Policy Syst 15(1):84

Almeida C, Báscolo E (2006) Use of research results in policy decision-making, formulation, and implementation: A review of the literature. Cad De Saúde Pública 22:S7–S19

Bastow S, Dunleavy P, Tinkler J (2013) The impact of the social sciences: How academics and their research make a difference. Sage, London

Bedwell W, Fiore S, Salas E (2014) Developing the future workforce: an approach for integrating interpersonal skills into the MBA classroom. Acad Manag Learning Educ 13:171–186

Boswell C, Smith K (2017) Rethinking policy ‘impact’: four models of research-policy relations. Pal Commun 3:44. https://www.nature.com/articles/s41599-017-0042-z

Brown J, Isaacs D, Wheatley M (2005) The World café: Shaping our Futures through Conversations that Matter. Berrett-Koehler Publishers, Oakland, CA

Cairney P (2015) How can policy theory have an impact on policy making?. Teach Public Adm 33(1):22–39

Cairney P (2016a) The politics of evidence-based policymaking. Palgrave Pivot, London

Cairney P (2016b) Principles of science advice to government: key problems and feasible solutions, Paul Cairney: Politics and Policy, https://paulcairney.wordpress.com/2016/10/05/principles-of-science-advice-to-government-key-problems-and-feasible-solutions/

Cairney P (2017a) A 5-step strategy to make evidence count, Paul Cairney: Politics and Policy, https://paulcairney.wordpress.com/2017/09/14/a-5-step-strategy-to-make-evidence-count/

Cairney P (2017b) #EU4Facts: 3 take-home points from the JRC annual conference, Paul Cairney: Politics and Public Policy https://paulcairney.wordpress.com/2017/09/29/eu4facts-3-take-home-points-from-the-jrc-annual-conference/

Cairney P, Kwiatkowski R (2017) How to communicate effectively with policymakers: combine insights from psychology and policy studies. Pal Commun 3:37, https://www.nature.com/articles/s41599-017-0046-8

Cairney P, Oliver K (2017) Evidence-based policymaking is not like evidence-based medicine, so how far should you go to bridge the divide between evidence and policy? Health Res Policy Syst 15:35. https://doi.org/10.1186/s12961-017-0192-x

Cairney P, Oliver K, Wellstead A (2016) To bridge the divide between evidence and policy: reduce ambiguity as much as uncertainty. Public Adm Rev Early View https://doi.org/10.1111/puar.12555

Cairney P, Weible C (2017) The new policy sciences. Policy Sci https://doi.org/10.1007/s11077-017-9304-2

Chabal PM (2003) Do ministers matter? The individual style of ministers in programmed policy change. Int Rev Adm Sci 69(1):29–49

Chalmers I, Hedges L, Cooper H (2002) A brief history of research synthesis. Eval Health Professions 25(1):12–37

Cohen G, Schroeder J, Newson R, King L, Rychetnik L, Milat A et al. (2015) Does health intervention research have real world policy and practice impacts: testing a new impact assessment tool. Health Res Policy Syst 13(1):3

Cook B, Kesby M, Fazey I, Spray C (2013a) The persistence of ‘normal’ catchment management despite the participatory turn: Exploring the power effects of competing frames of reference. Social Stud Sci 43(5):754–779

Cotton D (2016) The Smart Solution Book: 68 tools for brainstorming, problem solving and decision making. FT Press, Harlow, UK

Cvitanovic C, Hobday AJ, van Kerkhoff L, Wilson SK, Dobbs K, Marshall NA (2015) Improving knowledge exchange among scientists and decisionmakers to facilitate the adaptive governance of marine resources. Ocean Coast Manag 112:25–35

Damon A, Lewis J (eds) (2015) Making public policy decisions: expertise, skills and experience. Routledge, London

Davidson B (2017) Storytelling and evidence-based policy: lessons from the grey literature. Pal Commun 3:201793

Davies P (2006) What is needed from research synthesis from a policy-making perspective? In: Popay J (ed) Moving beyond effectiveness in evidence synthesis–methodological issues in the synthesis of diverse sources of evidence. National Institute for Health and Clinical Excellence, London, pp 97–105

Douglas H (2009) Science, policy, and the value-free ideal. University of Pittsburgh Press, Pittsburgh

Dunlop C (2018) The political economy of politics and international studies impact: REF2014 case analysis. British Politics https://doi.org/10.1057/s41293-018-0084-x

Estrada FCR, David LS (2015) Improving visual communication of science through the incorporation of graphic design theories and practices into science communication. Sci Commun 37(1):140–148

European Commission, Joint Research Centre, DDG.01 Econometrics and Applied Statistics (2015) Dialogues: public engagement in science, technology and innovation. Publication Office of the European Union, Luxembourg, 2015

European Commission (2017) Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions, Completing the Better Regulation Agenda: better solutions for better results, SWD (2017) 675 final, 24.10.2017, https://ec.europa.eu/info/sites/info/files/completing-the-better-regulation-agenda-better-solutions-for-better-results_en.pdf

Fox D, Bero L (2014) Systematic Reviews: Perhaps "the answers to policy makers' prayers"? In: Environmental Health Perspectives, Vol. 22, Issue 10

Funtowicz S, Ravetz J (2003) Post-normal science. International Society for Ecological Economics (ed.), Online Encyclopedia of Ecological Economics at http://www.ecoeco.org/publica/encyc.htm

Gaudreau M, Saner M (2014) Researchers are from Mars: Policymakers are from Venus. Institute for Science, Society and Policy, Ottawa. http://issp.uottawa.ca/sites/issp.uottawa.ca/files/issp2014-spibrief1-collaboration.pdf

Gaunand A, Colinet L, Matt M, Joly PB (2017) Counting what really counts? Assessing the political impact of science. J Technol Transfer 1–23. https://doi.org/10.1007/s10961-017-9605-9

Gluckman P (2016) The science-policy interface. Science 353(6303):969. Sep 2

Greenhalgh T, Malterud K (2016) Systematic review for policymaking: muddling through. In the American Journal of Public Health, January 2017, Vol 107, No 1

Hart PS, Nisbet EC (2012) Boomerang effects in science communication: How motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Commun Res 39:701–723

Hazell P, Slade R (2014) Policy Research: The Search for Impact. In Workshop on best practice methods for assessing the impact of policy-oriented research: Summary and recommendations for the CGIAR, Washington, DC, Hazell, Washington DC, IFPRI

Hickey G, Forest P, Sandall J, Lalor B, Keenan R (2013) Managing the environmental science—Policy nexus in government: Perspectives from public servants in Canada and Australia. Sci Public Policy 40(4):529–543

Hirsch PD, Luzadis VA (2013) Scientific concepts and their policy affordances: How a focus on compatibility can improve science-policy interaction and outcomes. Nat Cult 8(1):97–118

Jasanoff S (2008) Speaking honestly to power. Am Sci 6(3):240

Jasanoff S, Simmet H (2017) No funeral bells: Public reason in a ‘post-truth’ age. Social Stud Sci 47(5):751–770

John P (2012) Analysing public policy, 2nd edn. Routledge, London

Jones M, Crow D (2017) How can we use the ‘science of stories’ to produce persuasive scientific stories? Pal Commun 3:53 https://doi.org/10.1057/s41599-017-0047-7

JRC (Joint Research Centre) (2017a) Framework for Skills for Evidence-Informed Policy-Making (Final Version) (Brussels: JRC) https://ec.europa.eu/jrc/communities/community/evidence4policy/news/framework-skills-evidence-informed-policy-making-final-version

JRC (Joint Research Centre) (2017b) #EU4FACTS-Evidence for Policy Community (Brussels: JRC) https://ec.europa.eu/jrc/communities/community/evidence4policy

Klein C, DeRouin R, Salas E (2006) Uncovering workplace interpersonal skills: a review, framework, and research agenda. International Review of Industrial and Organizational Psychology, Vol. 21, p 79

Larrick RP (2016) The social context of decisions. Annu Rev Organ Psychol Organ Behav 3:441–467

Lemke A, Harris-Way J (2015) Stakeholder engagement in policy development: challenges and opportunities for human genomics. Genetics in medicine, 17(12), pp 949-957

Martin V (2017) Citizens science as a means for increasing public engagement in science, presumption or possibility? Sci Commun 39(2):142–168

Matthews P, Hastings A (2013) Middle-class political activism and middle-class advantage in relation to public services: a realist synthesis of the evidence base. Social Policy Adm 47(1):72–92

Nascimento S, Guimaraes Pereira A, Ghezzi A (2014) From citizens science to do it yourself science. An annotated account of an ongoing movement. Publications Office of the European Union, EUR 27095

Newman J, Head B (2017) Wicked tendencies in policy problems: rethinking the distinction between social and technical problems, Policy Soc https://doi.org/10.1080/14494035.2017.1361635

Oliver K, Innvar S, Lorenc T, Woodman J, Thomas J (2014) A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res 14(1):2

Oliver K, Pearce W (2017) Three lessons from evidence-based medicine and policy: increase transparency, balance inputs and understand power. Pal Commun 3:43, https://www.nature.com/articles/s41599-017-0045-9

Owen H (1997) Open space technology: a user's guide. Berrett-Koehler Publishers, Oakland, CA

Parkhurst J (2017) The politics of evidence. Routledge, London

Pielke Jr R (2007) The honest broker: making sense of science in policy and politics. Cambridge University Press, Cambridge

Rayleigh, The Right Honorable Lord (1885) Presidential address at the 54th meeting of the British Association for the Advancement of Science, Montreal, August/September 1884. London: John Murray

Reiss K (2015) Leadership coaching for educators–bringing out the best in school administrators, 2nd edn, Corwin Press, Thousand Oaks, CA

Renkow M, Byerlee D (2014) Assessing the impact of policy-oriented research: a stocktaking. In: Workshop on best practice methods for assessing the impact of policy-oriented research: Summary and recommendations for the CGIAR, Washington, DC

Rittel H, Webber M (1973) Dilemmas in a general theory of planning. Policy Sci 4:155–169

Smith KE, Stewart EA (2017) Academic advocacy in public health: Disciplinary ‘duty’or political ‘propaganda’? Social Sci Med 189:35–43

Sloman S, Fernbach P (2017) The Knowledge Illusion: why we never think alone. Riverhead Books, New York

Stoker G (2010) Translating experiments into policy. Ann Am Acad Political Social Sci 628:47–58

Weible C, Heikkila T, deLeon P, Sabatier P (2012) Understanding and influencing the policy process. Policy Sci 45(1):1–21

Weible C (2017) Theories of the Policy Process, 4th edn. Westview Press, Chicago

Sarkki S, Niemela J, Tinch R, van den Hove S, Watt A, Young J (2014) Balancing credibility, relevance and legitimacy: a critical assessment of trade-offs in science-policy interfaces. Sci Public Policy 41:194–206

Stone D, Maxwell S, Keating M (2001) Proceedings from an international workshop funded by the UK Department for International Development: Bridging research and policy. Coventry, England, Warwick University

Tomasello M (1999) The cultural origins of human cognition. Harvard University Press, Cambridge

Warira D, Mueni E, Gay E, Lee M (2017) Achieving and sustaining evidence-informed policy making. Sci Commun 39(3):382–394

Weible C, Cairney P (2018) Practical lessons from policy theories, policy and politics

Whitney D, Stavros J, Fry R (2003) The appreciative inquiry handbook: the first in a series of ai workbook for leaders of change. In: Whitney D (ed) Berrett–Koehler Publishers

Wilcox C (2012) It's time to e-volve: taking responsibility for science communication in a digital age. In: Biological Bulletin, Guest Editorial. Vol. 222, No 2, The University of Chicago Press Journals

Wilson EO (1998) Consilience: the unity of knowledge, Vintage Books. Random House, New York

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Topp, L., Mair, D., Smillie, L. et al. Knowledge management for policy impact: the case of the European Commission’s Joint Research Centre. Palgrave Commun 4, 87 (2018). https://doi.org/10.1057/s41599-018-0143-3

DOI: https://doi.org/10.1057/s41599-018-0143-3
