Abstract

We investigate the computational efficiency and thermodynamic cost of the D-Wave quantum annealer under reverse-annealing with and without pausing. Our demonstration on the D-Wave 2000Q annealer shows that the combination of reverse-annealing and pausing leads to improved computational efficiency while minimizing the thermodynamic cost compared to reverse-annealing alone. Moreover, we find that the magnetic field has a positive impact on the performance of the quantum annealer during reverse-annealing but becomes detrimental when pausing is involved. Our results, which are reproducible, provide strategies for optimizing the performance and energy consumption of quantum annealing systems employing reverse-annealing protocols.

Introduction

Large-scale investments in quantum technologies are usually justified with promised advantages in sensing, communication, and computing1. Among these, quantum computing is probably the most prominent application since it has the potential to revolutionize information processing and computational capabilities. For certain tasks, quantum computers exploit the fundamental principles of quantum mechanics to perform complex calculations exponentially faster than classical computers2,3,4. The tremendous computational power offered by quantum systems has fueled excitement and exploration in various scientific, industrial, and financial sectors1,5,6,7,8,9,10,11,12. Consequently, there have been significant developments in the pursuit of quantum advantage that have propelled quantum computing from theoretical speculation to practical implementation13,14,15,16,17,18,19,20,21,22,23.

Major technology companies such as IBM, Google, Microsoft, Intel, and Nvidia have been investing massively in quantum research and development, leading to the establishment of quantum computing platforms and open-source frameworks that enable researchers and developers to demonstrate and explore the potential of quantum algorithms and applications24. These advancements have been driven by breakthroughs in both hardware and algorithmic techniques, bringing us closer to realizing the potential of quantum computers25,26.

However, the rapid development of quantum technologies also raises critical questions about the energy requirements and environmental implications of quantum computation27,28. Energy consumption has become a focal point for researchers, policymakers, and society at large as the demand for computing power continues to rise, and concerns about climate change and sustainability intensify. Consequently, assessing the energy consumption of quantum computers is vital for evaluating their feasibility, scalability, and identifying potential bottlenecks29.

The energy consumption of quantum computers stems from various sources, including the cooling systems needed to maintain the delicate quantum states, the control and manipulation of qubits, and the complex infrastructure required to support quantum operations30,31. The superposition characteristic of qubits demands a sophisticated physical environment, with precise temperature control and isolation, leading to significant energy expenditures. These challenges call for synergic work between quantum information science, quantum engineering, and quantum physics to develop an interdisciplinary approach to tackle this problem32.

The theoretical framework to quantify the energy consumption of quantum computation is through quantum thermodynamics, which provides the necessary tools to quantify and characterize the efficiency of emerging quantum technologies, and therefore is crucial in laying a roadmap to scalable devices27,33,34. The field of quantum thermodynamics provides a theoretical foundation to understand the fundamental limits of quantum technologies, macroscopically, in terms of energy consumption and efficiency, by identifying the thermodynamic resources required to process and manipulate quantum information at the microscopic level27,35,36, such as quantum versions of Landauer’s principle37,38,39,40,41. The exploration of the thermodynamics of information is not limited to the equilibrium settings, as recent research has delved into the nonequilibrium aspects of quantum computation, particularly in the context of quantum algorithms and quantum simulation42,43. Understanding the thermodynamics of quantum systems, including the generation of entropy, heat dissipation, and non-equilibrium dynamics, serves to optimize the algorithmic performance, energy consumption, and resource utilization44.

The study of the thermodynamics of quantum computers has been an active research area with notable results that deepen our understanding of the energy landscapes, heat dissipation, and efficiency of quantum computation while also addressing challenges related to noise, decoherence, and thermal effects45,46,47,48. The optimization of the energy efficiency of quantum computers has been approached from several angles, for instance by minimizing the energy dissipated during computations and by developing energy-efficient algorithms and architectures49,50,51,52,53,54,55,56,57. The focus is on reducing energy requirements and increasing the computational efficiency of quantum systems, paving the way for sustainable and practical quantum computing technologies. As quantum computers generate heat during operation, effective thermal management becomes essential to maintain qubit stability and mitigate thermal errors.

In this paper, we study the interplay between thermodynamic and computational efficiency in quantum annealing. In recent years, thermodynamic considerations of the D-Wave quantum annealer have become prevalent. For instance, some of us used the quantum fluctuation theorem to assess the performance of annealing48. Furthermore, the working mechanism of the D-Wave chip was shown to be equivalent to that of a quantum thermal machine, e.g. thermal accelerator, under the reverse-annealing schedule58. Here, we take a step further and analyze the energetic and computational performance of quantum annealing under reverse-annealing, and how to optimize it through the introduction of pausing in the annealing schedule. We demonstrate our approach on the D-Wave 2000Q quantum annealer and we show that a pause in the annealing schedule allows us to achieve better computational performance at a lower energetic cost. Additionally, we discuss the role and impact of the magnetic field on the performance of the chip.

Theory & figures of merit

We start by briefly outlining notions and notation. Quantum annealing consists of mapping the optimization problem to a mathematical model that can be described using qubits, i.e. the Ising model59. The quantum annealer is initialized in a quantum state that is easy to prepare. The system is then evolved according to a time-varying Hamiltonian, whose final form encodes the problem’s objective function, and which can be expressed as,

\[ H(t) = (1-s_t) \sum _i \sigma _i^x + s_t \left( \sum _i h_i \sigma _i^z + \sum _{\langle i,j \rangle } J_{i,j} \sigma _i^z \sigma _j^z \right), \tag{1} \]

where \(\sigma _i^{\alpha }\), with \(\alpha \in \{x,z\}\), are Pauli matrices, and \(h_i\) is the local magnetic field. The annealing schedule \(s_t=t/\tau \), with \(t \in [0,\tau ]\), controls the rate of the transformation between the easy-to-prepare Hamiltonian \(H_0=\sum _i \sigma _i^x \) and the problem-specific Hamiltonian \(H_p= \sum _i h_i \sigma _i^z + \sum _{\langle i,j \rangle } J_{i,j} \sigma _i^z \sigma _j^z \). On the D-Wave machine, the annealing time \(\tau \) can be chosen from microseconds (\(\sim \!2\,\mu \text {s}\)) to milliseconds (\(\sim \!2000\,\mu \text {s}\)).
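To make the interpolation between \(H_0\) and \(H_p\) concrete, the following minimal sketch builds both operators as dense matrices for a toy chain. It assumes a linear interpolation \((1-s)H_0 + sH_p\); the helper names (`site_operator`, `annealing_hamiltonian`, and so on) are hypothetical and are not taken from our repository. Dense matrices scale as \(2^N\), so this is only meant for a handful of spins, not for the 300-spin chain studied below.

```python
import numpy as np

# Single-qubit Pauli matrices and the identity.
SX = np.array([[0.0, 1.0], [1.0, 0.0]])
SZ = np.array([[1.0, 0.0], [0.0, -1.0]])
I2 = np.eye(2)


def site_operator(op, site, n):
    """Embed a single-qubit operator at position `site` of an n-qubit register."""
    out = np.array([[1.0]])
    for k in range(n):
        out = np.kron(out, op if k == site else I2)
    return out


def driver_hamiltonian(n):
    """H_0 = sum_i sigma_i^x."""
    return sum(site_operator(SX, i, n) for i in range(n))


def problem_hamiltonian(h, J):
    """H_p = sum_i h_i sigma_i^z + sum_<i,j> J_ij sigma_i^z sigma_j^z."""
    n = len(h)
    H = sum(h[i] * site_operator(SZ, i, n) for i in range(n))
    for (i, j), coupling in J.items():
        H = H + coupling * site_operator(SZ, i, n) @ site_operator(SZ, j, n)
    return H


def annealing_hamiltonian(s, h, J):
    """Assumed linear interpolation H(s) = (1 - s) H_0 + s H_p."""
    return (1.0 - s) * driver_hamiltonian(len(h)) + s * problem_hamiltonian(h, J)


# Toy antiferromagnetic open chain: N = 4, J_{i,i+1} = 1, h = 0.
n = 4
h = [0.0] * n
J = {(i, i + 1): 1.0 for i in range(n - 1)}

# Lowest eigenvalue at the start (s = 0) and at the end (s = 1) of the schedule.
e_driver = np.linalg.eigvalsh(annealing_hamiltonian(0.0, h, J))[0]
e_problem = np.linalg.eigvalsh(annealing_hamiltonian(1.0, h, J))[0]
```

At \(s=0\) the spectrum is that of the driver alone, and at \(s=1\) the lowest eigenvalue is the classical ground state energy of the chain, here one unit of \(-J\) per bond.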

The usual quantum annealing process, called forward annealing, starts with initializing the qubits in a known eigenstate of \(\sigma ^x\). The system is then driven adiabatically by varying the Hamiltonian parameters; adiabatic evolution is required for the system to remain in its instantaneous ground state60. Initially, the driver Hamiltonian \(H_0\) dominates, and the qubits are in a quantum superposition. As the annealing progresses, the problem Hamiltonian \(H_p\) gradually becomes dominant, and the qubits tend to settle into the low-energy states that correspond to the optimal solution of the problem.

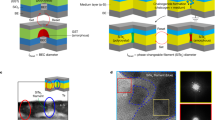

In this work, we employ a different protocol called reverse-annealing as depicted in Fig. 1, where the processor initially starts with a classical solution defined by the user, to explore the local space around a known solution to find a better one. Reverse-annealing has been shown to be more effective than forward annealing in some specific use cases, including nonnegative/binary matrix factorization61, portfolio optimization problems62, and industrial applications63. Moreover, reverse-annealing has unique thermodynamic characteristics with typically enhanced dissipation58,64.

To quantify the thermodynamic efficiency of the D-Wave 2000Q chip, we initialize the quantum annealer in the spin configuration described by a thermal state at inverse temperature \(\beta _1=1\), and we assume that initially the system (S)+environment (E) state is given by the joint density matrix,

\[ \rho = \frac{e^{-\beta _1 H_S}}{Z_S} \otimes \frac{e^{-\beta _2 H_E}}{Z_E}, \tag{2} \]

where \(H_S\) and \(H_E\) denote, respectively, the Hamiltonian of the system and environment, while \(Z_S\) and \(Z_E\) describe, respectively, the partition function of the system and environment. \(\beta _2\) is the inverse temperature of the environment. The energy transfer between two quantum systems, initially at different temperatures, is described by the quantum exchange fluctuation theorem65,66,

\[ \frac{p(\Delta E_1,\Delta E_2)}{p(-\Delta E_1,-\Delta E_2)} = e^{\beta _1 \Delta E_1 + \beta _2 \Delta E_2}, \tag{3} \]

where \(\Delta E_i\), \(i=1,2\), are, respectively, the energy changes of the processor and its environment during the annealing time t, and \(p(\Delta E_1,\Delta E_2)\) is the joint probability of observing them in a single run of the annealing schedule. Equation (3) can be re-written in terms of the entropy production \(\Sigma =\beta _1 \Delta E_1 + \beta _2 \Delta E_2\) as67,68,

\[ \frac{p(\Sigma )}{p(-\Sigma )} = e^{\Sigma }. \tag{4} \]

Figure 2. Example of the embedding of the \(300\)-spin Ising chain onto the D-Wave 2000Q quantum processing unit (QPU) with the Chimera architecture69. White-blue dots and lines are active qubits, and grey ones are inactive. This is a one-to-one embedding, such that every physical qubit corresponds to one logical qubit.

Note that during our demonstrations, we only have access to the energy change of the processor \(\Delta E_1\). Therefore, the thermodynamic quantities, namely the entropy production \(\langle \Sigma \rangle \), the average work \(\langle W\rangle \), and the average heat \(\langle Q\rangle \), are not directly accessible. However, they can be lower bounded by thermodynamic uncertainty relations70 as shown in Appendix B,

where \(g(x)=x\tanh ^{-1}{(x)}\), and \(\beta _2\) is the inverse temperature of the environment, which can be estimated using the pseudo-likelihood method introduced in Ref. 71. The tightness of the lower bounds, Eqs. (5), (7), is affected by measurement errors and calibration issues, whose impact we reduce by considering a large number of annealing runs (10000). Accordingly, the upper bound on the thermodynamic efficiency can be determined from

which is not upper bounded by the Carnot efficiency that is defined for heat engines, as quantum annealers under reverse-annealing protocols behave like thermal accelerators58. Moreover, we are interested in analyzing the computational efficiency of the quantum annealer, which we define as

where we have introduced the probability that the ground state \(s^\star \) is found in a given annealing run,

This quantity is computed by dividing the number of successful runs (i.e., those which have found the ground state) by the total number of runs. We emphasize here that the definition, Eq. (9), is not a universal metric for computational performance. It is specifically tailored to capture the unique computational characteristics of quantum annealers, which operate based on principles akin to the thermodynamic cycle of a thermal accelerator. The definition, Eq. (9), provides a contextual tool to evaluate the performance of quantum annealers in terms of energy consumption relative to computational output, hence its dimension of 1/energy. Since information is physical, expressing computational efficiency in terms of energy provides a direct link to the physical process occurring during quantum annealing. The efficiencies defined by Eqs. (8) and (9) are the main figures of merit of our analysis.
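The counting rule for the success probability described above can be sketched as follows. This is an illustrative helper only: the function name, the tolerance `tol`, and the toy energies are assumptions and do not come from our repository.

```python
import numpy as np


def success_probability(energies, ground_energy, tol=1e-9):
    """Fraction of annealing runs whose returned energy equals the ground state energy.

    `energies` holds one energy per run; `tol` absorbs floating-point noise.
    """
    energies = np.asarray(energies, dtype=float)
    hits = np.abs(energies - ground_energy) <= tol
    return float(hits.sum() / energies.size)


# Toy data: four runs, two of which reach the ground state energy of -299.
toy_energies = [-299.0, -297.0, -299.0, -295.0]
p_gs = success_probability(toy_energies, ground_energy=-299.0)
```

Comparing energies rather than spin configurations treats every degenerate ground state as a success, which is the natural choice when the ground state is not unique.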

It is also instructive to analyze the ratio of the theoretical (\(E_{\text {th}}=-299\)) ground state energy and the value read from the machine (\(E_{\text {exp}}\)),

which is averaged over the number of samples. The quality, Eq. (11), measures the agreement between the ground state energy obtained experimentally from the quantum annealer and its theoretically predicted counterpart, normalized over the number of samples.
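As a rough illustration of the quality, the sketch below averages the ratio of measured to theoretical energy over all samples. The orientation of the ratio, \(E_{\text {exp}}/E_{\text {th}}\), is an assumption made here so that the value is at most 1 when both energies are negative; the function name and toy data are likewise hypothetical.

```python
import numpy as np


def quality(energies, e_theory=-299.0):
    """Sample average of E_exp / E_th (assumed orientation of the ratio)."""
    energies = np.asarray(energies, dtype=float)
    return float(np.mean(energies / e_theory))


# Toy data: two samples hit the ground state, two end slightly above it.
toy_energies = [-299.0, -297.0, -295.0, -299.0]
q_gs = quality(toy_energies)
```

With this convention the quality equals 1 only if every sample returns the theoretical ground state energy, and it decreases as the returned energies move away from it.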

Results and discussion

All our demonstrations were performed on a D-Wave 2000Q quantum annealer. The physical properties of this machine are presented in Table 1 of Appendix A72. We considered an antiferromagnetic (i.e. \(\forall i \, J_{i, i+1} = 1\)) Ising chain of \(N\!=\!300\) spins, with the Hamiltonian as defined in Eq. (1). However, one must first embed the given problem into the target quantum processing unit (QPU) architecture to perform quantum annealing. Here, embedding means finding a mapping between the physical qubits present in the machine and the logical qubits (i.e. \(\sigma ^z_i\)) representing our problem. Figure 2 shows an example embedding of this Ising problem onto the QPU with Chimera architecture.
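The theoretical ground state energy quoted above, \(E_{\text {th}}=-299\), can be checked directly for the open antiferromagnetic chain at zero field. The short sketch below does this with plain Python; the dictionary layout for the fields and couplings and the function name are illustrative assumptions.

```python
def chain_energy(spins, h, J):
    """Classical Ising energy: sum_i h_i s_i + sum_<i,j> J_ij s_i s_j."""
    local = sum(h[i] * s for i, s in enumerate(spins))
    pair = sum(coupling * spins[i] * spins[j] for (i, j), coupling in J.items())
    return local + pair


n_spins = 300

# Problem definition: zero local field and J_{i,i+1} = 1 on an open chain.
h = {i: 0.0 for i in range(n_spins)}
J = {(i, i + 1): 1.0 for i in range(n_spins - 1)}

# Alternating (Neel) configuration: every one of the 299 bonds contributes -1.
neel = [1 if i % 2 == 0 else -1 for i in range(n_spins)]
e_th = chain_energy(neel, h, J)
```

An open chain of 300 spins has 299 bonds, which is where the value \(-299\) comes from; the globally flipped configuration has the same energy, so the ground state is doubly degenerate at \(h=0\).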

We used the annealing schedules shown in Fig. 1. For both schedules, the system was initialized by sampling a thermal state at \(\beta =1\) using Gibbs sampling73. For reverse annealing with a given annealing time \(\tau \), we ran \(s_t \rightarrow 1/2\) for \(t \in (0, \tau /2)\) and \(s_t \rightarrow 1\) for the remaining time. In reverse annealing with pausing, \(s_t \rightarrow 1/2\) for \(t \in (0, \tau /3)\); next, for \(t \in (\tau /3, 2\tau /3)\), \(s_t = 1/2\), as we pause the annealing process. Lastly, for \(t \in (2\tau /3, \tau )\) we let \(s_t \rightarrow 1\).
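The two schedules and the thermal initial state described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the code used in our demonstrations: the schedules are written as lists of `[time, s]` pairs starting from \(s=1\), which to our understanding is the form accepted by the `anneal_schedule` solver parameter of D-Wave's Ocean SDK, and the heat-bath sampler, its number of sweeps, and all function names are hypothetical.

```python
import math
import random


def reverse_schedule(tau, s_min=0.5):
    """Reverse anneal: s goes 1 -> s_min during (0, tau/2), then back to 1."""
    return [[0.0, 1.0], [tau / 2, s_min], [tau, 1.0]]


def reverse_schedule_with_pause(tau, s_min=0.5):
    """Reverse anneal with a pause at s_min during (tau/3, 2*tau/3)."""
    return [[0.0, 1.0], [tau / 3, s_min], [2 * tau / 3, s_min], [tau, 1.0]]


def gibbs_sample_chain(n, beta=1.0, coupling=1.0, field=0.0, sweeps=200, seed=0):
    """Heat-bath (Gibbs) sampling of an open Ising chain at inverse temperature beta.

    Energy convention: E = sum_i field * s_i + sum_i coupling * s_i * s_{i+1}.
    """
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    for _ in range(sweeps):
        for i in range(n):
            # Effective field on spin i from the local field and its neighbours.
            local = field
            if i > 0:
                local += coupling * spins[i - 1]
            if i < n - 1:
                local += coupling * spins[i + 1]
            # Conditional probability P(s_i = +1 | rest) = 1 / (1 + exp(2 beta local)).
            p_up = 1.0 / (1.0 + math.exp(2.0 * beta * local))
            spins[i] = 1 if rng.random() < p_up else -1
    return spins


# Example: 20 microsecond schedules and one thermal initial state for N = 300.
schedule_plain = reverse_schedule(20.0)
schedule_pause = reverse_schedule_with_pause(30.0)
initial_state = gibbs_sample_chain(300, beta=1.0)
```

On hardware, a configuration such as `initial_state` would be supplied together with the chosen schedule when submitting the problem; the details of that call depend on the solver and are not reproduced here.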

Zero magnetic field – “naked performance”

We start with the case where the magnetic field h is turned off.

Reverse-annealing without pausing

Figure 3a,b report data from reverse-annealing demonstrations without the introduction of the pause. The success probability, Eq. (10), is shown in panel (a), where we see that under reverse-annealing without the pause, the success probability of finding the ground state energy is very low and does not exceed 10% over the whole annealing time. This means that, of the 10000 samples taken in the demonstration, fewer than 1000 returned the ground state energy. However, although the probability of reaching the ground state energy is low, the quality, Eq. (11), which is shown in the same panel, is very high and saturates at 0.95.

The results shown in panel (a) are consistent with the thermodynamic and computational efficiency, Eqs. (8) and (9) respectively, reported in panel (b). We see that under reverse-annealing without the pause, the computational efficiency of the chip \(\eta _{comp}\) grows with the annealing time t while being very low and not exceeding 4% in the whole annealing time, which follows the behavior of the success probability \({\mathscr {P}}_{GS}\). Energetically, the thermodynamic efficiency \(\eta _{th}\) decays exponentially with \(\tau \) and remains at a high value close to 1.

Reverse-annealing with pausing

The low computational performance of the chip for the simple Hamiltonian, Eq. (1), shows that the reverse-annealing protocol does not exploit the energetics of the chip efficiently. Ideally, one aims at finding the protocol that provides a high computational efficiency at the lowest possible thermodynamic cost. For this reason, we introduce a pause in the reverse-annealing protocol, as depicted in Fig. 1. Introducing a pause in the annealing schedule has been shown to offer several benefits, such as enhancing the probability of finding better solutions through efficient exploration of the solution space, which allows for a broader range of potential solutions to a given problem74,75. Furthermore, since the pausing duration can be set by the user, it offers the ability to balance between exploration and exploitation, which allows for the fine-tuning of the solution quality. The flexibility offered by the pausing strategy also allows for adaptation to the characteristics of specific problem instances, which enhances the efficiency and effectiveness of quantum annealing for a wide range of applications76.

Figure 3c,d present the results of applying a pause during the reverse-annealing schedule, as shown in Fig. 1. The success probability \({\mathscr {P}}_{GS}\) improves dramatically, as shown in panel (c), where it grows quickly to 0.8 during the annealing schedule, which means that 80% of the 1000 annealing runs taken during the demonstration returned the ground state energy. The quality, Eq. (11), shown in the same panel also benefits from introducing the pause, with the ratio between the theoretical value and the energy read from the machine being almost unity. The power of pausing is even more significant for the thermodynamic and computational efficiency, Eqs. (8) and (9) respectively, reported in panel (d). We see that pausing allows for achieving high computational efficiency at a moderate thermodynamic cost, which we attribute to thermalization. Introducing a pause in the annealing schedule allows the chip to relax and thermalize after being excited by quantum or thermal effects near the minimum gap. However, pausing is not always beneficial; its effect depends on several factors such as the relaxation rate, the pause duration, and the annealing schedule. The optimal protocol corresponds to a pause right after crossing the minimum gap, and its duration should be no less than the thermalization time75.

Magnetically assisted annealing

Figure 4. The success probability \({\mathscr {P}}_{GS}\) (blue), Eq. (10), and the quality \(Q_{\text {GS}}\) (red), Eq. (11), under reverse-annealing without the pause for different values of the magnetic field h and with respect to the total anneal time t. Each point is averaged over 100 annealing runs with 10 samples each.

Figure 5. The thermodynamic and computational efficiency, \(\eta _{th}\) (blue) Eq. (8) and \(\eta _{comp}\) (red) Eq. (9) respectively, under reverse-annealing without the pause for different values of the magnetic field h and with respect to the total anneal time t. Each point is averaged over 100 annealing runs with 10 samples each.

Figure 6. The success probability \({\mathscr {P}}_{GS}\) (blue), Eq. (10), and the quality \(Q_{\text {GS}}\) (red), Eq. (11), under reverse-annealing with the pause for different values of the magnetic field h and with respect to the total anneal time t. Each point is averaged over 100 annealing runs with 10 samples each.

Figure 7. The thermodynamic and computational efficiency, \(\eta _{th}\) (blue) Eq. (8) and \(\eta _{comp}\) (red) Eq. (9) respectively, under reverse-annealing with the pause for different values of the magnetic field h and with respect to the total anneal time t. Each point is averaged over 100 annealing runs with 10 samples each.

Next, we perform demonstrations with the magnetic field switched on, under reverse-annealing with and without pausing. The local magnetic field plays a crucial role in shaping the energy landscape and controlling the behavior of the qubits during the annealing process. By manipulating the local magnetic field, quantum annealers can explore and optimize complex problem spaces more effectively.

Assisted reverse-annealing without pausing

The benefit of introducing the magnetic field is clear from the behavior of the success probability \({\mathscr {P}}_{GS}\), Eq. (10), reported in Fig. 4. In this case, without introducing a pause in the annealing schedule, the probability of reaching the ground state of the problem Hamiltonian is very high compared to the case when the magnetic field is off (cf. Fig. 3a). Introducing the magnetic field influences the shape of the energy landscape that the qubits explore during the annealing process77. The landscape can be adjusted to promote or discourage certain configurations of the qubits, which can help guide the system toward the desired solution and explains the slight improvement in the quality, Eq. (11), reported in the same figure. This dramatic improvement is reflected in the thermodynamic and computational efficiency of the chip, Eqs. (8) and (9) respectively, as reported in Fig. 5. In this case, introducing the magnetic field allows us to guide the system through the energy landscape, which is a more efficient strategy for exploiting energy to perform computation.

Assisted reverse-annealing with pausing

Interestingly, in comparison with the case for \(h\!=\!0\) reported in Fig. 3c,d, introducing a pause in the annealing schedule with the magnetic field being present decreases the success probability \({\mathscr {P}}_{GS}\), Eq. (10), as can be seen from Fig. 6. Consequently, it also decreases the thermodynamic and computational efficiency, \(\eta _{th}\) Eq. (8) and \(\eta _{comp}\) Eq. (9) respectively, as can be seen from Fig. 7. Switching on the magnetic field in quantum annealing changes the qubit energy levels and their structure. For pausing to work, it therefore needs to be applied carefully, taking into account how the energy level structure varies with the magnetic field. For this reason, the pause needs to be performed right after the minimum gap, whose position is set by the value of the magnetic field h74,75.

Concluding remarks

We have investigated the optimization of the computational efficiency and the thermodynamic cost in the D-Wave quantum annealing systems employing reverse-annealing. By combining reverse-annealing with pausing, we have demonstrated improved computational efficiency while operating at a lower thermodynamic cost compared to reverse-annealing alone. Our results highlight the potential benefits of strategically incorporating pausing into the annealing process to enhance overall computational and energetic performance. Furthermore, our results indicate that the magnetic field plays a crucial role in enhancing computational efficiency during reverse-annealing. However, when pausing is involved, the magnetic field becomes detrimental to the overall performance. This suggests the need for careful consideration of the magnetic field configuration and its impact on the energy gap of the system during the annealing process.

While our demonstrations were performed on the D-Wave Chimera architecture, it will be interesting to extend our approach to the Pegasus and Zephyr architectures. These two models offer high tolerance to noise and a more complex structure, which allows us to investigate the trade-off between energetic performance and computational complexity. Additionally, exploring the scalability of these findings to larger-scale quantum systems and real-world applications remains a promising avenue for future research.

Data availability

The code of the demonstrations is publicly available in the GitHub repository78.

References

Raymer, M. G. & Monroe, C. The US national quantum initiative. Quantum Sci. Technol. 4, 020504. https://doi.org/10.1088/2058-9565/ab0441 (2019).

McMahon, D. Quantum Computing Explained (Wiley, 2007).

Nielsen, M. A. & Chuang, I. Quantum computation and quantum information. Phys. Today https://doi.org/10.1017/CBO9780511976667 (2002).

Sanders, B. C. How to Build a Quantum Computer 2399–2891 (IOP Publishing, 2017).

Riedel, M., Kovacs, M., Zoller, P., Mlynek, J. & Calarco, T. Europe’s quantum flagship initiative. Quantum Sci. Technol. 4, 020501. https://doi.org/10.1088/2058-9565/ab042d (2019).

Yamamoto, Y., Sasaki, M. & Takesue, H. Quantum information science and technology in Japan. Quantum Sci. Technol. 4, 020502. https://doi.org/10.1088/2058-9565/ab0077 (2019).

Sussman, B., Corkum, P., Blais, A., Cory, D. & Damascelli, A. Quantum Canada. Quantum Sci. Technol. 4, 020503. https://doi.org/10.1088/2058-9565/ab029d (2019).

Knight, P. & Walmsley, I. UK national quantum technology programme. Quantum Sci. Technol. 4, 040502. https://doi.org/10.1088/2058-9565/ab4346 (2019).

Roberson, T. M. & White, A. G. Charting the Australian quantum landscape. Quantum Sci. Technol. 4, 020505. https://doi.org/10.1088/2058-9565/ab02b4 (2019).

Ur Rasool, R. et al. Quantum computing for healthcare: A review. Future Internet https://doi.org/10.3390/fi15030094 (2023).

Herman, D. et al. A survey of quantum computing for finance (2022). arXiv:2201.02773.

Domino, K. et al. Quantum annealing in the NISQ era: Railway conflict management. Entropy https://doi.org/10.3390/e25020191 (2023).

Kane, B. E. A silicon-based nuclear spin quantum computer. Nature 393, 133–137. https://doi.org/10.1038/30156 (1998).

Marx, R., Fahmy, A. F., Myers, J. M., Bermel, W. & Glaser, S. J. Realization of a 5-bit NMR quantum computer using a new molecular architecture (1999). arXiv:quant-ph/9905087.

Negrevergne, C. et al. Benchmarking quantum control methods on a 12-qubit system. Phys. Rev. Lett. 96, 170501. https://doi.org/10.1103/PhysRevLett.96.170501 (2006).

Lanyon, B. P. et al. Experimental demonstration of a compiled version of Shor’s algorithm with quantum entanglement. Phys. Rev. Lett. 99, 250505. https://doi.org/10.1103/PhysRevLett.99.250505 (2007).

Tame, M. S. et al. Experimental realization of Deutsch’s algorithm in a one-way quantum computer. Phys. Rev. Lett. 98, 140501. https://doi.org/10.1103/PhysRevLett.98.140501 (2007).

Johnson, M. W. et al. Quantum annealing with manufactured spins. Nature 473, 194–198. https://doi.org/10.1038/nature10012 (2011).

Saeedi, K. et al. Room-temperature quantum bit storage exceeding 39 minutes using ionized donors in silicon-28. Science 342, 830–833. https://doi.org/10.1126/science.1239584 (2013).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510. https://doi.org/10.1038/s41586-019-1666-5 (2019).

Unden, T. K., Louzon, D., Zwolak, M., Zurek, W. H. & Jelezko, F. Revealing the emergence of classicality using nitrogen-vacancy centers. Phys. Rev. Lett. 123, 140402. https://doi.org/10.1103/PhysRevLett.123.140402 (2019).

Cho, A. Quantum Darwinism seen in diamond traps. Science 365, 1070–1070. https://doi.org/10.1126/science.365.6458.1070 (2019).

Krutyanskiy, V. et al. Entanglement of trapped-ion qubits separated by 230 meters. Phys. Rev. Lett. 130, 050803. https://doi.org/10.1103/PhysRevLett.130.050803 (2023).

Hassija, V. et al. Present landscape of quantum computing. IET Quantum Commun. 1, 42–48. https://doi.org/10.1049/iet-qtc.2020.0027 (2020).

Gyongyosi, L. & Imre, S. A survey on quantum computing technology. Comput. Sci. Rev. 31, 51–71 (2019).

National Academies of Sciences, Engineering, and Medicine, Quantum Computing: Progress and Prospects (Washington, DC: The National Academies Press, 2019).

Deffner, S. & Campbell, S. Quantum Thermodynamics 2053–2571 (Morgan & Claypool Publishers, 2019).

Auffèves, A. Quantum technologies need a quantum energy initiative. PRX Quantum 3, 020101. https://doi.org/10.1103/PRXQuantum.3.020101 (2022).

Elsayed, N., Maida, A. S. & Bayoumi, M. A review of quantum computer energy efficiency. In 2019 IEEE Green Technologies Conference (GreenTech), 1–3, https://doi.org/10.1109/GreenTech.2019.8767125 (IEEE, 2019).

Ikonen, J., Salmilehto, J. & Möttönen, M. Energy-efficient quantum computing. npj Quantum Inform. 3, 17. https://doi.org/10.1038/s41534-017-0015-5 (2017).

Fellous-Asiani, M. et al. Optimizing resource efficiencies for scalable full-stack quantum computers (2022). arXiv:2209.05469.

Likharev, K. K. Classical and quantum limitations on energy consumption in computation. Int. J. Theor. Phys. 21, 311–326. https://doi.org/10.1007/BF01857733 (1982).

Gemmer, J., Michel, M. & Mahler, G. Quantum thermodynamics: Emergence of thermodynamic behavior within composite quantum systems Vol. 784 (Springer, 2009).

Vinjanampathy, S. & Anders, J. Quantum thermodynamics. Contemp. Phys. 57, 545–579 (2016).

Goold, J., Huber, M., Riera, A., Del Rio, L. & Skrzypczyk, P. The role of quantum information in thermodynamics-a topical review. J. Phys. A: Math. Theor. 49, 143001. https://doi.org/10.1088/1751-8113/49/14/143001 (2016).

Binder, F., Correa, L. A., Gogolin, C., Anders, J. & Adesso, G. Thermodynamics in the quantum regime. Fund. Theories Phys. 195, 1–2 (2018).

Gea-Banacloche, J. Minimum energy requirements for quantum computation. Phys. Rev. Lett. 89, 217901. https://doi.org/10.1103/PhysRevLett.89.217901 (2002).

Bedingham, D. J. & Maroney, O. J. E. The thermodynamic cost of quantum operations. New J. Phys. 18, 113050. https://doi.org/10.1088/1367-2630/18/11/113050 (2016).

Cimini, V. et al. Experimental characterization of the energetics of quantum logic gates. npj Quantum Inform. 6, 96. https://doi.org/10.1038/s41534-020-00325-7 (2020).

Timpanaro, A. M., Santos, J. P. & Landi, G. T. Landauer’s principle at zero temperature. Phys. Rev. Lett. 124, 240601. https://doi.org/10.1103/PhysRevLett.124.240601 (2020).

Deffner, S. Energetic cost of Hamiltonian quantum gates. EPL (Europhys. Lett.) 134, 40002. https://doi.org/10.1209/0295-5075/134/40002 (2021).

Lamm, H. & Lawrence, S. Simulation of nonequilibrium dynamics on a quantum computer. Phys. Rev. Lett. 121, 170501. https://doi.org/10.1103/PhysRevLett.121.170501 (2018).

Chertkov, E. et al. Characterizing a non-equilibrium phase transition on a quantum computer (2022). arXiv:2209.12889.

Meier, F. & Yamasaki, H. Energy-consumption advantage of quantum computation (2023). arXiv:2305.11212.

Gardas, B. & Deffner, S. Thermodynamic universality of quantum Carnot engines. Phys. Rev. E 92, 042126. https://doi.org/10.1103/PhysRevE.92.042126 (2015).

Gardas, B., Deffner, S. & Saxena, A. \(\cal{PT} \)-symmetric slowing down of decoherence. Phys. Rev. A 94, 040101. https://doi.org/10.1103/PhysRevA.94.040101 (2016).

Gardas, B., Dziarmaga, J., Zurek, W. H. & Zwolak, M. Defects in quantum computers. Sci. Rep. 8, 4539 (2018).

Gardas, B. & Deffner, S. Quantum fluctuation theorem for error diagnostics in quantum annealers. Sci. Rep. 8, 17191 (2018).

Mzaouali, Z., Puebla, R., Goold, J., El Baz, M. & Campbell, S. Work statistics and symmetry breaking in an excited-state quantum phase transition. Phys. Rev. E 103, 032145. https://doi.org/10.1103/PhysRevE.103.032145 (2021).

Soriani, A., Nazé, P., Bonança, M. V. S., Gardas, B. & Deffner, S. Three phases of quantum annealing: Fast, slow, and very slow. Phys. Rev. A 105, 042423. https://doi.org/10.1103/PhysRevA.105.042423 (2022).

Soriani, A., Nazé, P., Bonança, M. V. S., Gardas, B. & Deffner, S. Assessing the performance of quantum annealing with nonlinear driving. Phys. Rev. A 105, 052442. https://doi.org/10.1103/PhysRevA.105.052442 (2022).

Coopmans, L., Campbell, S., De Chiara, G. & Kiely, A. Optimal control in disordered quantum systems. Phys. Rev. Res. 4, 043138. https://doi.org/10.1103/PhysRevResearch.4.043138 (2022).

Kazhybekova, A., Campbell, S. & Kiely, A. Minimal action control method in quantum critical models. J. Phys. Commun. 6, 113001. https://doi.org/10.1088/2399-6528/aca3fa (2022).

Xuereb, J., Campbell, S., Goold, J. & Xuereb, A. Deterministic quantum computation with one-clean-qubit model as an open quantum system. Phys. Rev. A 107, 042222. https://doi.org/10.1103/PhysRevA.107.042222 (2023).

Carolan, E., Çakmak, B. & Campbell, S. Robustness of controlled Hamiltonian approaches to unitary quantum gates (2023). arXiv:2304.14667.

Kiely, A., O’Connor, E., Fogarty, T., Landi, G. T. & Campbell, S. Entropy of the quantum work distribution. Phys. Rev. Res. 5, L022010. https://doi.org/10.1103/PhysRevResearch.5.L022010 (2023).

Zawadzki, K., Kiely, A., Landi, G. T. & Campbell, S. Non-Gaussian work statistics at finite-time driving. Phys. Rev. A 107, 012209. https://doi.org/10.1103/PhysRevA.107.012209 (2023).

Buffoni, L. & Campisi, M. Thermodynamics of a quantum annealer. Quantum Sci. Technol. 5, 035013. https://doi.org/10.1088/2058-9565/ab9755 (2020).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363. https://doi.org/10.1103/PhysRevE.58.5355 (1998).

Albash, T. & Lidar, D. A. Adiabatic quantum computation. Rev. Mod. Phys. 90, 015002. https://doi.org/10.1103/RevModPhys.90.015002 (2018).

Golden, J. & O’Malley, D. Reverse annealing for nonnegative/binary matrix factorization. PLoS One 16, e0244026 (2021).

Venturelli, D. & Kondratyev, A. Reverse quantum annealing approach to portfolio optimization problems. Quantum Mach. Intell. 1, 17–30 (2019).

Yarkoni, S., Raponi, E., Bäck, T. & Schmitt, S. Quantum annealing for industry applications: Introduction and review. Rep. Prog. Phys. (2022).

Campisi, M. & Buffoni, L. Improved bound on entropy production in a quantum annealer. Phys. Rev. E 104, L022102. https://doi.org/10.1103/PhysRevE.104.L022102 (2021).

Jarzynski, C. & Wójcik, D. K. Classical and quantum fluctuation theorems for heat exchange. Phys. Rev. Lett. 92, 230602. https://doi.org/10.1103/PhysRevLett.92.230602 (2004).

Sone, A., Soares-Pinto, D. O. & Deffner, S. Exchange fluctuation theorems for strongly interacting quantum pumps. AVS Quantum Sci. 5, 032001. https://doi.org/10.1116/5.0152186 (2023).

Deffner, S. & Lutz, E. Nonequilibrium entropy production for open quantum systems. Phys. Rev. Lett. 107, 140404. https://doi.org/10.1103/PhysRevLett.107.140404 (2011).

Touil, A. & Deffner, S. Information scrambling versus decoherence–two competing sinks for entropy. PRX Quantum 2, 010306. https://doi.org/10.1103/PRXQuantum.2.010306 (2021).

McGeoch, C. & Farré, P. Advantage processor overview. Tech. Rep. 14-1058A-A, D-Wave Quantum Inc. (2022).

Uffink, J. & Van Lith, J. Thermodynamic uncertainty relations. Found. Phys. 29, 655–692 (1999).

Benedetti, M., Realpe-Gómez, J., Biswas, R. & Perdomo-Ortiz, A. Estimation of effective temperatures in quantum annealers for sampling applications: A case study with possible applications in deep learning. Phys. Rev. A 94, 022308. https://doi.org/10.1103/PhysRevA.94.022308 (2016).

QPU-specific physical properties: DW_2000Q_6. Tech. Rep. D-Wave User Manual 09-1215A-D, D-Wave Quantum Inc. (2022).

Mossel, E. & Sly, A. Exact thresholds for Ising–Gibbs samplers on general graphs. Ann. Probab. 41, 294–328. https://doi.org/10.1214/11-AOP737 (2013).

Chen, H. & Lidar, D. A. Why and when pausing is beneficial in quantum annealing. Phys. Rev. Appl. 14, 014100. https://doi.org/10.1103/PhysRevApplied.14.014100 (2020).

Marshall, J., Venturelli, D., Hen, I. & Rieffel, E. G. Power of pausing: Advancing understanding of thermalization in experimental quantum annealers. Phys. Rev. Appl. 11, 044083. https://doi.org/10.1103/PhysRevApplied.11.044083 (2019).

Gonzalez Izquierdo, Z. et al. Advantage of pausing: Parameter setting for quantum annealers. Phys. Rev. Appl. 18, 054056. https://doi.org/10.1103/physrevapplied.18.054056 (2022).

Watabe, S., Seki, Y. & Kawabata, S. Enhancing quantum annealing performance by a degenerate two-level system. Sci. Rep. 10, 146 (2020).

Smierzchalski, T., Mzaouali, Z. & Gardas, B. Dwave-thermodynamics.

Acknowledgements

We would like to thank L. Buffoni and M. Campisi for valuable discussions. T.Ś. and Z.M. acknowledge support from the National Science Center (NCN), Poland, under Project No. 2020/38/E/ST3/00269. S.D. acknowledges support from the U.S. National Science Foundation under Grant No. DMR-2010127 and the John Templeton Foundation under Grant No. 62422. B.G. acknowledges support from the Foundation for Polish Science (grant no. POIR.04.04.00-00-14DE/18-00, carried out within the Team-Net program co-financed by the European Union under the European Regional Development Fund).

Author information

Contributions

The authors confirm their contribution to the paper as follows: study conception and data collection: T.Ś., Z.M.; analysis and interpretation of results: T.Ś., Z.M., S.D., B.G.; draft manuscript preparation: T.Ś., Z.M., S.D., B.G. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Physical properties of the D-Wave 2000Q

We report in this appendix the physical properties of the D-Wave 2000Q chip72.

Appendix B: Derivation of lower bounds

The thermodynamic uncertainty relation (TUR) relates the amount of energy a system dissipates to the uncertainty of a certain observable in that system. For a process described by a joint probability distribution \(p(\sigma ,\phi )\), the fluctuation relation is given by:

where \(p(\sigma ,\phi )\) is the probability of simultaneously observing \(\sigma \) and \(\phi \) during the forward process, while \(p(-\sigma ,-\phi )\) describes the corresponding probability for the backward process. Then applying the TUR gives70:

with \(f(x)=\tanh ^2{(x/2)}\), and \(h^{-1}\) the inverse function of \(h(x)=x\tanh {(x/2)}\). The bound on \(\langle \sigma \rangle \) follows by manipulating Eq. (13):

with \(g(x)=x\tanh ^{-1}(x)\), where \(\tanh ^{-1}\) denotes the inverse hyperbolic tangent. Applying Eq. (14) to the fluctuation relation, Eq. (4), gives:

Making use of \(\Sigma =\beta _1 \Delta E_1 + \beta _2 \Delta E_2\) and \(\langle W \rangle = \Delta E_1 + \Delta E_2\) yields
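
As a numerical sanity check of the step from Eq. (13) to Eq. (14), the following Python sketch may be useful. It rests on assumptions rather than on the experimental data: that the fluctuation relation has the standard form \(p(\sigma ,\phi )/p(-\sigma ,-\phi )=e^{\sigma }\), that \(h^{-1}\) is the inverse function of \(h(x)=x\tanh (x/2)\), and that the resulting bound reads \(\langle \sigma \rangle \ge 2g(\langle \phi \rangle /\sqrt{\langle \phi ^2\rangle })\). The toy distributions and all function names are hypothetical.

```python
import math


def g(x):
    """g(x) = x * artanh(x), defined for |x| < 1."""
    return x * math.atanh(x)


def h(x):
    """h(x) = x * tanh(x / 2); assumed to be the function inverted in the TUR."""
    return x * math.tanh(x / 2)


def sigma_lower_bound(phi_mean, phi_sq_mean):
    """Assumed form of the bound: <sigma> >= 2 g(<phi> / sqrt(<phi^2>))."""
    return 2 * g(phi_mean / math.sqrt(phi_sq_mean))


def toy_moments(values):
    """First and second moments of a toy process with phi = sigma.

    The support is {+v, -v} for each v in `values`, with p(s) proportional
    to exp(s / 2), so that p(s) / p(-s) = exp(s) holds by construction.
    """
    support = [s for v in values for s in (v, -v)]
    weights = [math.exp(s / 2) for s in support]
    norm = sum(weights)
    mean = sum(s * w for s, w in zip(support, weights)) / norm
    second = sum(s * s * w for s, w in zip(support, weights)) / norm
    return mean, second


# Consistency of the two forms: with r = tanh(y / 2), h(y) equals 2 g(r).
r = 0.6
identity_gap = abs(h(2 * math.atanh(r)) - 2 * g(r))

# Two-outcome process: the bound is saturated.
mean2, second2 = toy_moments([1.5])
bound2 = sigma_lower_bound(mean2, second2)

# Four-outcome process: the bound holds as a strict inequality.
mean4, second4 = toy_moments([1.0, 3.0])
bound4 = sigma_lower_bound(mean4, second4)

print(f"identity gap: {identity_gap:.2e}")
print(f"two outcomes:  <sigma> = {mean2:.4f}, bound = {bound2:.4f}")
print(f"four outcomes: <sigma> = {mean4:.4f}, bound = {bound4:.4f}")
```

The two-outcome case is chosen because, under the assumed forms, it makes the inequality an equality, which is a quick way to confirm that \(f\), \(h\), and \(g\) are mutually consistent. In practice the moments would instead be estimated from measured samples of the observable.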

Figures 8, 9, 10, and 11 report the behavior of the average work \(\langle W\rangle \), heat \(\langle Q\rangle \), and entropy production \(\langle \Sigma \rangle \), Eqs. (5) and (6), under the annealing protocols in Fig. 1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Śmierzchalski, T., Mzaouali, Z., Deffner, S. et al. Efficiency optimization in quantum computing: balancing thermodynamics and computational performance. Sci Rep 14, 4555 (2024). https://doi.org/10.1038/s41598-024-55314-z

DOI: https://doi.org/10.1038/s41598-024-55314-z
