Abstract

In pulmonary inflammatory diseases such as COVID-19, the extent of lung involvement and inflammation determines the treatment regimen. Respiratory inflammation typically arises from the cytokine storm and from the vessel leakage that accompanies immune cell recruitment. Currently, this condition is detected by the clinical judgment of a specialist or, more precisely, by a chest CT scan. However, the limited access to CT machines in many under-resourced medical centers, together with the high cost of the service, demands more accessible methods for fast and inexpensive detection of lung inflammation. Here, we introduce a novel method for tracing inflammation and lung involvement in patients with pulmonary inflammation, such as COVID-19, through simple electrolyte detection in their sputum samples. The presence of electrolytes in the sputum sample produces fern-like structures after air-drying. These fern patterns differ between CT-positive and CT-negative cases and are detected by an AI application on a smartphone using a low-cost, portable mini-microscope. Evaluated on 160 patient-derived sputum sample images, the method achieved an accuracy of 95%, as confirmed by CT-scan results. This finding suggests that the method has the potential to serve as a promising and reliable approach for recognizing lung inflammatory diseases such as COVID-19.

Introduction

The emergence of the SARS-CoV-2 virus and the subsequent outbreak of one of the most lethal pandemics of the current century1 led to the development of advanced technologies for the early diagnosis of COVID-192,3,4,5,6. Because of the multiple mutations in the virus12, various state-of-the-art solutions, including but not limited to ID NOW7, RDSS8, and others9,10,11, have been introduced to address the urgent need for accurate and rapid point-of-care (POC) diagnostics. Such developments aim to alleviate the strain on medical centers and enable individuals to conduct COVID-19 tests conveniently at home using rapid, reliable, and smartphone-based approaches13,14. However, there remains a need for rapid point-of-care diagnostic approaches that can be conveniently used outside laboratory settings to keep pace with the ongoing pandemic15.

While smartphone-based diagnostic devices have been a subject of research for years16,17, the convergence of artificial intelligence (AI) and portable systems, particularly smartphones, presents a compelling avenue for rapid POC diagnostics.

AI, as an accepted and popular method, has recently been employed for big data analysis, not only for tackling the COVID-19 pandemic18 and its management19,20 but also in different medical fields such as cancer pathology21,22, ovulation prediction23, X-ray image analysis24,25,26, and others27,28. Compared to a human, AI-based devices are able to analyze the data with superior precision and accuracy. In particular, the use of convolutional neural networks (CNNs) in these devices has shown great promise for detecting various diseases. CNNs are a type of deep learning algorithm capable of recognizing patterns in images and other complex data types29. Researchers have recently explored the potential of smartphone-based devices and CNNs for point-of-care diagnostics of pulmonary inflammation30,31.

Using AI, lung-related diseases have been classified and detected in several studies28. With the use of an electronic stethoscope, Aykanat et al. devised a non-invasive method to classify respiratory sounds32. In their study, they found that CNNs and support vector machines (SVMs) accurately classified respiratory sounds. Through the use of high-resolution CT images and deep learning, Chen et al. developed an engine for detecting COVID-19 disease33. Deep learning model performance was comparable with that of expert radiologists, thereby improving radiologists' clinical efficiency. A transfer learning approach with deep CNNs was used by Eman et al. to determine which cases were tuberculosis and which were normal from chest radiographs34. According to David et al., lateral flow rapid diagnostic tests (RDTs) for SARS-CoV-2 can be read and interpreted with the help of a smartphone application. Especially for prospective validation of real-life scenarios, as well as for antibody, antigen and peptide detection tests, their AI algorithm has demonstrated excellent performance35. A smartphone-based breathing sound indicator may be a promising marker for COVID-19 cases, according to Mohanad et al. Based on their study, deep learning can be used as a pre-screening tool before RT-PCR, which is considered the gold standard in such cases36. However, smartphone-assisted AI diagnostics have not been explored in the context of detecting COVID-19 lung inflammation from sputum samples.

Here, we aim to bridge this technology gap by introducing a smartphone-based AI framework to trace pulmonary inflammation in COVID-19 cases by ferning pattern analysis of air-dried sputum samples using a low-cost, smartphone-compatible microscopy device. This paper presents the demonstration of an accessible deep learning-powered mobile platform for diagnosing respiratory inflammation without requiring CT infrastructure or extensive sample analysis. Our approach could allow rapid screening of COVID-19 positive patients to determine disease severity and need for intervention.

During the immunological phase of COVID-19, the lungs become inflamed as immune cells battle the virus37. This recruits additional immune cells, often disrupting blood vessels and increasing vascular permeability, which allows fluid to fill the alveoli38,39. Currently, such inflammation is diagnosed by a chest CT scan. The inflamed state allows blood components such as electrolytes to emerge in patient sputum. Thus, increased blood markers in respiratory secretions may indicate lung inflammation. Body fluids, especially blood, have long provided diagnostic indicators40,41,42, aside from their use for treatment purposes40,43,44. Various blood-related molecules found in sputum now serve as disease biomarkers, such as glucose in pneumonia45,46, salivary components for autoimmune diseases47, etc. Sputum electrolyte levels have also been exploited48,49, as salt crystals such as sodium chloride (NaCl) can create fern-like sputum patterns that are associated with ovulation timing23 and conditions like cancer49 and Sjögren syndrome50. Although saliva and sputum have been used to detect COVID-19, no smartphone-assisted deep learning approach has leveraged rising sputum electrolytes and fern-like patterns to trace COVID-19 inflammation. This capability could improve screening for severe lung involvement.

A patient's risk of severe COVID-19 rises with greater immune response, increasing chances of death from lung inflammation. Thus, rapidly diagnosing inflammation could improve outcomes by enabling earlier intervention. In this study, we utilized sputum samples from patients with positive lung CT scans as an indicator of pulmonary inflammation. We examined the fern patterns in their sputum using an affordable, simple, and portable smartphone-based device. In 10 μL sputum samples from CT-positive patients, these patterns were considered as biomarkers of inflammation. First, elevated sputum electrolytes (sodium, potassium) were confirmed in COVID-19 patients using standard analysis, agreeing with our vascular permeability hypothesis. Next, AI-based evaluation of smartphone-captured images showed this approach accurately diagnosed 160 patient-derived sputum sample images with 95% accuracy compared to CT-scan inflammation signs. This compact, low-cost methodology could aid the screening of any lung inflammatory diseases like COVID-19 and facilitate treatment decisions through point-of-care assessment of lung involvement.

Materials and methods

Experimental setup and sputum collection

COVID-19 patients suspected of pulmonary involvement, referred by their doctors for lung CT imaging, were selected and divided into two cohorts based on positive and negative CT results. A positive CT scan indicated the presence of common patterns such as ground glass opacification (GGO), consolidation, hazy patches, etc51. Sputum samples were collected from both groups, comprising a total of 70 participants, all of whom were duly informed and provided consent for the research. The sputum collection took place early in the morning, with volunteers fasting, and individuals with a history of tobacco and alcohol consumption were excluded from the cohort to prevent fern-like patterns unrelated to inflammation. Following collection, the sputum was left undisturbed for 30 min to allow for the precipitation of cells and other residues.

A portion of the collected sputum was used for electrolyte measurement, and a small volume (10 µl) was deposited on the surface of a sample slide, left at room temperature for air drying. Subsequently, the dried sample was inserted into the mini-microscope to capture fern patterns, which were then analyzed using an AI system.

Mini-microscope system

To capture fern patterns with the desired resolution using a smartphone camera, we employed a smartphone-based microscopy tool measuring 60 × 60 × 60 mm. The microscope structure was designed using SOLIDWORKS (Dassault Systèmes) and 3D printed from polylactic acid (PLA) using an Ultimaker 2+. The imaging system comprises a plano-convex lens with a 5 mm diameter and a 6 mm focal length serving as the objective lens. Additionally, a commercial acrylic condenser lens compatible with the chosen light-emitting diode was incorporated. Illumination was provided by a 3 V and 5 W white LED, powered by a CR2032 battery (Camelion) in series with a 1.2 kΩ resistor to achieve optimal light intensity. The entire electronic circuit is integrated into a printed circuit board, with all lenses, including the smartphone lens, and the LED sharing a common optical axis.

The optical system's spacings were meticulously designed to achieve a 40× magnification within a 5 × 5 mm field of view. This design allows the visualization and processing of the entire fern-containing droplet within the field of view. To activate the illumination system and position the fern sample within the field of view zone, the glass slide containing the fern pattern is inserted into the imaging system. The fern sample is optimally focused within the working distance of the optical system, mitigating the need for manual focusing and minimizing perturbations caused by the user. It's worth noting that the auto-focus feature of the smartphone camera compensates for variations in the optical properties of different smartphones.

AI algorithm development

To detect ferning patterns in saliva on a smartphone, we used the EfficientNet-B0 architecture. This model showed a top-1 accuracy of 77.3% and a top-5 accuracy of 93.5% across the 1000 classes of the ImageNet database52. To achieve this efficiency, EfficientNet-B0 employs a compound scaling method to simultaneously adjust its depth, width, and resolution. By using this method, images can be classified more accurately with fewer parameters, resulting in more complex representations. EfficientNet-B0 is a mobile-sized architecture with 5.3 million trainable parameters. Such a complex neural network needs a significant number of images to optimize its parameters during training. Because our dataset of 650 images was insufficient for this, we utilized "transfer learning" to develop the algorithm. In this regard, EfficientNet-B0 was first pre-trained with 14 million images of ImageNet. ImageNet is an open-source data set containing 14 million classified images from various categories53. Then, we retrained and validated the pre-trained model using our dataset of 650 salivary images derived from 70 participants, categorized into ferning and non-ferning groups. The labeled image dataset was split into 80% (520) for training and 20% (130) for validation. The 80:20 convention balances providing adequate new sputum images to fine-tune the model for the desired classification task against retaining sufficient previously “unseen” images to evaluate model performance on distinguishing ferning versus non-ferning patterns and to check for overfitting. Other partition ratios (90:10 and 70:30) were tested during development to validate that this 80:20 allocation provided optimal performance. All the input images were resized to 224 × 224 pixels, and the retraining process was done for 80 training steps (epochs) with a learning rate of 0.001. Cross-entropy was used as the loss function, and accuracy was measured to evaluate the learning process of the model. Training and validation accuracy are the percentages of images correctly detected by the model in the training and validation datasets. The difference between training and validation accuracies was calculated to determine whether the model was overfitting. At the 80th training step (epoch), the validation cross-entropy/loss and accuracy were 0.102 and 98.23%, respectively. The weights corresponding to the best performance were saved and used for the model.
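The 80:20 partition described above can be illustrated with a minimal sketch. The file names, the fixed seed, and the helper `split_dataset` are hypothetical and only stand in for the 650 labeled images; the text does not state whether the split was made per image or per participant, so this sketch simply splits per image.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]

# Hypothetical file names standing in for the 650 labeled images.
image_names = [f"image_{i:03d}.png" for i in range(650)]
train_images, val_images = split_dataset(image_names)
print(len(train_images), len(val_images))  # 520 130
```

If several images come from the same participant, splitting by participant rather than by image would be a stricter way to keep the validation images truly unseen.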

The image processing performed outside the Convolutional Neural Network (CNN) consists of a single step: resizing the images to 224 × 224 pixels. The neural network comprises two principal segments: the first is feature extraction, employing a pretrained EfficientNet, and the second is classification, consisting of four dense layers. In the first three layers, Rectified Linear Unit (ReLU) activation is applied to mitigate the vanishing gradient problem, while the output layer employs the softmax activation function to give the probability of an image belonging to each class. For the training procedure, the cross-entropy loss function is selected; it assesses the performance of a classification model whose outputs are probability values in the range of 0 to 1, in alignment with the softmax function output.

The optimization algorithm chosen is RMSProp, which is used to minimize the cross-entropy function. The formulations of the activation functions, the loss function, and the optimizer are as follows:

1. Rectified Linear Unit (ReLU) Activation Function Formulation:

$$f\left(x\right)={\text{max}}(0,x)$$

2. Softmax Activation Function Formulation:

$${\text{softmax}}{\left(z\right)}_{i}=\frac{{e}^{{z}_{i}}}{\sum_{j=1}^{K}{e}^{{z}_{j}}}$$

where \({z}_{i}\) is the input to the ith unit, and K is the total number of units in the layer.

3. Cross-Entropy Loss Function Formulation:

$$CE\left(y,\widehat{y}\right)=-\sum_{i=1}^{N}{y}_{i}\,{\text{log}}({\widehat{y}}_{i})$$

where N is the number of classes, \({y}_{i}\) is the true probability of class i, and \({\widehat{y}}_{i}\) is the predicted probability of class i.

4. Root Mean Square Propagation (RMSProp) Optimization Algorithm Formulation:

$${\theta }_{t+1}={\theta }_{t}-\frac{\eta }{\sqrt{{\text{E}}{\left[{g}^{2}\right]}_{t}+\epsilon }}\,{g}_{t}$$

where \({\theta }_{t}\) is the parameter at time t, \(\eta\) is the learning rate, \({g}_{t}\) is the gradient at time t, \({\text{E}}{\left[{g}^{2}\right]}_{t}\) is the exponentially weighted moving average of squared gradients up to time t, and \(\epsilon\) is a small constant added for numerical stability.
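The four formulations above can be written out as a short plain-Python sketch to make the notation concrete. This is only an illustration, not the training code used in the study: the decay factor of 0.9 and the value of `eps` are assumptions (the text does not give them), the default learning rate follows the value quoted in the Methods, and the max-subtraction in `softmax` is a standard numerical-stability trick that leaves the result unchanged.

```python
import math

def relu(x):
    """f(x) = max(0, x)."""
    return max(0.0, x)

def softmax(z):
    """softmax(z)_i = exp(z_i) / sum_j exp(z_j)."""
    m = max(z)  # subtracting the max avoids overflow; the ratios are unchanged
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(y_true, y_pred):
    """CE(y, y_hat) = -sum_i y_i * log(y_hat_i); terms with y_i = 0 contribute nothing."""
    return -sum(t * math.log(p) for t, p in zip(y_true, y_pred) if t > 0)

def rmsprop_step(theta, grad, avg_sq, lr=0.001, decay=0.9, eps=1e-8):
    """One RMSProp update for a single scalar parameter.

    decay and eps are assumed values, not taken from the paper.
    """
    avg_sq = decay * avg_sq + (1 - decay) * grad ** 2   # running average E[g^2]_t
    theta = theta - lr * grad / math.sqrt(avg_sq + eps)  # epsilon inside the root, as in the formula
    return theta, avg_sq

# Two-class example: logits -> probabilities -> loss for a "ferning" label.
probs = softmax([2.0, 0.5])
loss = cross_entropy([1.0, 0.0], probs)
theta, avg_sq = rmsprop_step(theta=1.0, grad=0.5, avg_sq=0.0)
```

Dividing by the root of the running average means that parameters with consistently large gradients take proportionally smaller steps, which is the main practical difference from plain gradient descent.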

Here is the neat sketch of the whole algorithm:

Algorithm: Proposed method for detecting COVID-19 lung inflammation using sputum ferning patterns

Input: Sputum images from COVID-19 patients

Output: Classification as lung inflammation positive or negative

1. Collect sputum samples from patients sent for CT scans

2. Preprocess Images

- Resize to 224 × 224 resolution

- Convert to grayscale

- Normalize pixel intensities to [0,1]

- Augment data via horizontal flips, rotations

3. Construct CNN Architecture

- Use pre-trained EfficientNet-B0 as base model

- Append classification layers

4. Prepare Training Data

- Label images as ferning/non-ferning

- Split images 80/20 into training/validation

5. Train CNN

- Train for 80 epochs

- Use cross entropy loss, RMSprop optimization

- Learning rate = 0.001

6. Assess Performance

- Evaluate accuracy on validation set

- Check for over/under-fitting

7. Classify Sputum Images

- Capture microscope images

- Predict ferning presence

- Relate to lung inflammation

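The preprocessing step of the algorithm (resize, grayscale conversion, normalization, and flip augmentation) can be sketched in plain Python on a nested-list image. This is an illustration under stated assumptions, not the authors' pipeline: the nearest-neighbour resize and the BT.601 luminance weights are choices made here because the text does not specify the interpolation or grayscale method, and all function names are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an image stored as rows of pixels (assumed method)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def to_grayscale(rgb_image):
    """Convert (R, G, B) pixels to luminance using BT.601 weights (an assumption)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]

def normalize(gray_image, max_value=255.0):
    """Scale intensities to [0, 1], clamping small floating-point overshoot."""
    return [[min(1.0, max(0.0, v / max_value)) for v in row] for row in gray_image]

def horizontal_flip(image):
    """Mirror each row; used as a simple augmentation."""
    return [list(reversed(row)) for row in image]

def preprocess(rgb_image, size=224):
    """Resize, convert to grayscale, and normalize one image."""
    return normalize(to_grayscale(resize_nearest(rgb_image, size, size)))

# Tiny 2 x 2 RGB image standing in for a microscope capture.
tiny = [[(0, 0, 0), (255, 255, 255)],
        [(255, 0, 0), (0, 0, 255)]]
processed = preprocess(tiny, size=4)
augmented = horizontal_flip(processed)
```

In practice an image library would handle the resize and colour conversion; the point of the sketch is only the order of the operations listed in step 2.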

Algorithm evaluation

To evaluate the algorithm, a receiver operating characteristic (ROC) curve was plotted. The output of the trained convolutional neural network is a continuous number between 0 and 1, representing the probability of the image belonging to a specific group. The ROC curve is plotted for different threshold values to find the best operating point for the intended purpose. The area under the ROC curve (AUC) indicates the proposed method’s ability to distinguish between different classes. Additionally, to assess the ability of the retrained model to predict accurately, 160 patient-derived sputum sample images, consisting of both ferning and non-ferning cohorts, were utilized.
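As a minimal sketch of how an ROC curve and its AUC follow from the network's probability outputs, the threshold can be swept over the observed scores and the resulting points integrated with the trapezoidal rule. The labels and scores below are invented toy values, not study data, and the function names are hypothetical.

```python
def roc_points(labels, scores):
    """Return (false positive rate, true positive rate) points for every threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points

def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Toy example: 1 = ferning (positive), 0 = non-ferning (negative).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.95, 0.85, 0.70, 0.60, 0.30, 0.10]
print(round(area_under_curve(roc_points(labels, scores)), 3))  # 0.889
```

Each point on the curve corresponds to one candidate threshold, so the operating point can be chosen to favour sensitivity or specificity depending on the screening purpose.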

Web application development

A web application was developed to analyze the presence of ferning patterns in salivary images. Initially, a drop of the sputum sample is placed at the center of the sample slide. After air-drying, the slide is inserted into the mini-microscope system. Then, the lens provides enough magnification (40X) to observe ferning patterns through any smartphone camera. Subsequently, images are captured and sent to the developed web application, where AI determines the presence of ferning patterns. Also, the designed web application, featuring a convenient and straightforward graphical user interface, enables users to access previous test records to track their disease progression.

Statistical analysis

A 2 × 2 confusion matrix was plotted to determine specificity, sensitivity, positive predictive value (PPV), and negative predictive value (NPV) in comparison to the CT-scan results as the gold standard for validating pulmonary inflammation.

The data in this study were gathered from at least three independent experiments, analyzed using the statistical software GraphPad Prism 8, and expressed as mean ± standard deviation (SD). Results were reported as statistically significant when the P values were less than 0.05 for a specific experiment.

Ethics and consent to participate

All methods employed in our study were conducted in strict accordance with relevant guidelines and regulations imposed by the University of Tehran. Informed consents were obtained for all participants who were made aware of the planned publication through consent forms, and all the experiments were conducted following pertinent guidelines approved by the University of Tehran.

Results and discussion

The background biology and microscopic translation of the sputum electrolyte

During COVID-19, the immune system signals many white blood cells (WBCs) to travel to the lung environment and combat the virus. To facilitate this process, the micro-vessels around the respiratory alveoli dilate and become permeable, allowing the immune cells to traverse. The increased permeability of the vessels leads to the filling of air sacs with blood fluid, resulting in acute respiratory distress syndrome (ARDS) and subsequent lung failure in certain areas. We hypothesize that blood serum infiltration into the lung environment alters the composition and concentration of sputum components, particularly electrolyte salts such as Na and K (Fig. 1). Currently, chest CT scans are conducted to monitor inflammation and respiratory involvement in patients. Images showing signs of ground-glass opacification (GGO), patchy consolidation, etc., fall into this category (Fig. 2A).

During the inflammation phase of COVID-19, numerous immune cells are mobilized into the lung environment through the vasodilation process. The vessels surrounding the alveoli undergo increased permeability, allowing the entry of blood contents into the lung environment. This phenomenon has the potential to alter the concentration of sputum components, including electrolyte salts.

(A) CT scans from two patients with COVID-19, with and without lung inflammation. Signs such as ground-glass opacification (GGO) in the CT images imply lung involvement. (B–D) Na, K, and Na + K concentrations, respectively, in the CT-negative and CT-positive cases. (E) Workflow of the designed method for analyzing the fern structures in the dried sputum samples of patients with different CT scan results. (F) Different ferning patterns in the air-dried samples of the CT-positive and CT-negative cases. (G) The percentage of the fern area in the sputum images of the CT-positive and CT-negative cases.

Following the CT results, patients were categorized into CT positive and CT negative groups (Fig. 2A). Subsequently, fasting sputum samples were collected from the patients, and an electrolyte analyzer measured their sodium and potassium ions. Sodium and potassium are primary electrolytes in blood serum. Conditions such as hypernatremia and hyperkalemia can contribute to variations in these electrolytes in the blood, potentially leading to various dangerous diseases.

The concentration of the Na and K ions can be observed in Fig. 2B–D. Notably, a significant increase in the concentration of both sodium and potassium is observable in the sputum samples from CT-positive patients. While the average concentration of Na and K in the CT-negative samples is approximately 29 mmol/L and 26 mmol/L, respectively, these values rise to about 53 mmol/L and 46 mmol/L in CT-positive cases, reflecting an increase of approximately 82% and 77%, respectively. These findings support the hypothesis that the electrolytes released into the respiratory system due to inflammation and subsequent vasodilation can be detected in the sputum samples.
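As a quick check of the relative increases, the rounded mean concentrations quoted above can be inserted directly (the result is therefore itself approximate):

$$\frac{53-29}{29}\approx 0.83,\qquad \frac{46-26}{26}\approx 0.77$$

Both values are close to the reported increases of approximately 82% and 77%; the small difference for Na would be expected if the reported percentage was computed from unrounded means, which is an assumption here.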

As the next step, the increased salt concentration in the sputum was translated into a graphical picture (Fig. 2E). In this regard, a drop of the sputum sample was air-dried on a glass slide and then imaged by a mini-microscope. As shown in Fig. 2F, branched, fern-like patterns due to the crystallization of the Na and K salts could be seen in the dried samples. Moreover, in the sputum samples of the CT-positive patients, the fern patterns occupy a larger area of the whole drop, which is consistent with the electrolyte measurements. The fern area is ~ 23% of the drop for patients with negative CT and ~ 61% for the positive cases (Fig. 2G).

Design of the mini-microscope

After dropping the sputum sample at the center of the sample slide (Fig. 3A) and allowing it to air-dry, the slide is inserted into the mini-microscope system (Fig. 3B and C) for visualizing the fern patterns. The portable mini-microscope system consists of two main components: a magnifier lens and the lighting board (Fig. 3D). The lens provides a 40× magnification, sufficient to see an air-dried sputum sample with a diameter of 5 mm. The lighting system consists of an LED attached to an electronic board with a condenser lens for uniform sample illumination. The sample slide is placed between the two lenses at an optimized working distance to obtain the best focus on the fern structures. A microswitch turns the system on and off automatically and fixes the slide in place. The cap of the system is designed so that it can be used as a stand for holding the smartphone on top of the mini-microscope (Fig. 3E), making it possible to align the mobile camera with the lens. Finally, the captured image is processed by an AI-based application on the smartphone (Fig. 3F).

(A) Sample slide for dropping the sputum sample (B) Mini-microscope system for visualization and imaging of the air-dried sputum sample. (C) The main device and closed view (C-1) of the mini-microscope. (D) Exploded view of the designed mini-microscope for sputum analysis. (E) Placing the smartphone on the mini-microscope and imaging the sputum sample as well as its analysis by the (F) AI-based application.

CNN for intelligent detection of the ferning patterns

In this study, we employed artificial intelligence (AI), more precisely Convolutional Neural Networks (CNNs), to detect fern patterns in the sputum of an individual on a smartphone. A CNN is a deep learning model commonly used for computer vision tasks such as image classification, object detection, and segmentation29. Through a combination of convolutional layers, pooling layers, and fully connected layers, CNNs are designed to learn hierarchical representations of visual data automatically. Feature extraction is performed by convolutional filters applied across the input image, while downsampling reduces spatial dimensionality. After the features have been extracted, the fully connected layers map them to the output classes. CNNs have achieved state-of-the-art performance in various computer vision tasks and have become a cornerstone of deep learning research54. In this work, EfficientNet-B0 was utilized as a mobile-sized architecture pre-trained with the ImageNet dataset. This pre-trained model was retrained and validated with 650 labeled salivary images gathered from 70 participants (Fig. 4A). Input images are resized to 224 × 224 pixels before being passed through the model. Unlike conventional structures, EfficientNet scales the network uniformly in depth, width, and resolution. As shown in Fig. 4B, this structure contains 7 main blocks, each containing a varying number of sub-blocks, followed by the added classifier layers, which are retrained to decide on the presence of ferning patterns (Fig. 4C).

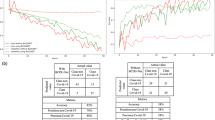

System performance. (A) Computational flow of data in the utilized neural network; for transfer learning, the pre-trained EfficientNet was retrained using our dataset of 650 salivary images derived from 70 participants. (B) EfficientNet-B0 structure; this mobile-sized architecture contains 7 main blocks, each containing a varying number of sub-blocks. (C) Classifier layers added for retraining, which decide on the presence of ferning patterns. (D,E) Training and validation curves for accuracy and cross-entropy of the network; after 80 epochs, the model achieved a validation accuracy of 98.23% and the validation cross-entropy was 0.18. (F) To evaluate the diagnostic ability of the system, the receiver operating characteristic (ROC) curve was plotted for different thresholds. The area under the ROC curve (AUC) showed a value of 0.99. (G) The confusion matrix for the test set when the smartphone-based device analyzed the patient-derived samples. True classes are determined by CT-scan results.

For transfer learning, the pre-trained EfficientNet was retrained with a learning rate of 0.0001 for 80 training steps (epochs). After 80 steps of training, the model reached an accuracy of 98.23%, while the cross-entropy loss was 0.102 (Fig. 4D and E). The difference between validation and training accuracies showed that no overfitting had occurred. The weights corresponding to this performance were saved and utilized for the final model. Additionally, the receiver operating characteristic (ROC) curve was plotted for different thresholds to evaluate the system's diagnostic ability. The area under the ROC curve (AUC) showed a value of 0.99, which is highly acceptable (Fig. 4F).

System performance on patient-derived salivary samples

To evaluate the system's performance in detecting respiratory inflammation through salivary ferning patterns, we gathered 160 saliva sample images out of 70 participants including healthy people. The samples were divided into two cohorts: inflammatory and non-inflammatory. All the samples were tested using our point-of-care smartphone-based AI method, and CT-scan results validated the presence of pulmonary inflammation. Consequently, the 2 × 2 confusion matrix (Fig. 4G) showed an accuracy of 95%. Meanwhile, the specificity and sensitivity were 95.89% and 94.25%, respectively. Further, for this dataset, the proposed method showed a positive predictive value (PPV) of 96.47% and a negative predictive value (NPV) of 93.33% (Table 1).
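The relationship between the reported metrics and a 2 × 2 confusion matrix can be checked with a short sketch. The cell counts used here (82 true positives, 5 false negatives, 3 false positives, 70 true negatives) are not stated in the text; they are an assumption, back-calculated as the set of counts summing to 160 that reproduces the reported percentages. The function name is hypothetical.

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Standard diagnostic metrics from the four cells of a 2 x 2 confusion matrix."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),   # true positive rate
        "specificity": tn / (tn + fp),   # true negative rate
        "ppv": tp / (tp + fp),           # positive predictive value
        "npv": tn / (tn + fn),           # negative predictive value
    }

# Assumed counts (back-calculated, not reported in the paper); they sum to 160 images.
metrics = diagnostic_metrics(tp=82, fn=5, fp=3, tn=70)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

With these assumed counts the sketch gives 95.00% accuracy, 94.25% sensitivity, 95.89% specificity, 96.47% PPV, and 93.33% NPV, matching the values quoted above.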

Discussion

The 95% accuracy of this smartphone-assisted AI approach for diagnosing lung inflammatory diseases via sputum analysis shows strong potential as an accessible point-of-care screening tool. Specifically, the ability to rapidly stratify COVID-19 patients based on the presence of respiratory involvement could enable quicker treatment decisions and monitoring of disease progression. While CT imaging is the current gold standard, its requirements for expensive infrastructure and radiologist availability greatly limit access, whereas this prototype system relies only on a miniature microscope and a mobile phone.

However, several limitations in the current methodology need to be considered. The dataset comprised a relatively small number of retrospective samples restricted mostly to confirmed COVID-19 cases from a single medical center. Expanding this framework to incorporate various respiratory illnesses and patient demographics could strengthen real-world validity and generalizability. Another limitation of the current smartphone-based AI system is potential interference in identifying lung inflammation for patients with a history of tobacco or alcohol use. The byproducts of smoking and drinking can directly impact sputum composition, including inducing fern-like patterns unrelated to inflammation. Thus, for accurate implementation in the clinical setting, adequate protocols would need to be developed regarding timing of sputum analysis relative to the patient's last smoke or drink. Analyzing samples only after sufficient clearance time of tobacco/alcohol traces would help prevent false positives for pulmonary involvement. Alternatively, collecting data across patient groups with varying smoker/drinker statuses could allow retraining the AI model to account for these factors in its diagnostic algorithm. Implementing such measures to eliminate or adjust for smoking/drinking effects will be important future steps for reducing confounders before widespread adoption.

Conclusion

In summary, we have introduced a new technique that can serve as an auxiliary method for the current CT scan technique in detecting pulmonary involvement and lung inflammation in patients with conditions like COVID-19. This method has not been applied to other pulmonary diseases such as asthma, COPD, etc. However, given their similar mechanisms to COVID-19, we anticipate that the system will function similarly for them.

The system comprises a portable mini-microscope with 40X optical zoom capability, a glass slide with a designated area for dropping and drying the sputum sample, and an AI-based application on a smartphone for detecting fern patterns on the sputum sample resulting from the crystallization of electrolytes from the blood serum.

To validate our hypothesis regarding the entry of serum electrolytes into the sputum during the inflammation phase of the disease, we initially measured electrolyte concentration (sodium and potassium) in sputum samples from both CT-positive and CT-negative cases. The results supported our hypothesis that the electrolyte amount is higher in the sputum of CT-positive patients. After that, the fern structures due to the crystallization of the sputum salts were assessed by the mini-microscope with the assistance of a smartphone with an AI-based application. In this regard, the images are sent to a smartphone application to be analyzed using a CNN to detect fern patterns in sputum as a sign of pulmonary inflammation. EfficientNet-B0 was utilized as a mobile-sized CNN architecture, pre-trained with the ImageNet dataset. Using transfer learning, this pre-trained model was retrained and validated with 650 labeled salivary images gathered from 70 participants. Evaluated on 160 patient-derived sputum sample images, this method showed an accuracy of 95%, as confirmed by CT-scan results.

Consequently, the study proposes a novel method for detecting inflammation and lung involvement in COVID-19 patients by identifying fern patterns in their sputum samples. Because the fern patterns can be imaged with an affordable and portable mini-microscope, the method is simple and cost-effective. By using AI to detect the fern patterns, the smartphone application can differentiate between CT-positive and CT-negative cases. This method could potentially provide an early indication of lung involvement in COVID-19 patients, allowing for earlier intervention and treatment. We believe this portable chip system could be a reliable aid for doctors, especially in medical centers where CT scan facilities are not available.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Phelan, A. L., Katz, R. & Gostin, L. O. The novel coronavirus originating in Wuhan, China: Challenges for global health governance. JAMA J. Am. Med. Assoc. https://doi.org/10.1001/jama.2020.1097 (2020).

Chen, Q. et al. Diagnostic technologies for COVID-19: A review. RSC Adv. 10, 35257–35264 (2020).

Merkoçi, A., Li, C.-Z., Lechuga, L. M. & Ozcan, A. COVID-19 biosensing technologies. Biosens. Bioelectron. https://doi.org/10.1016/j.bios.2021.113046 (2021).

Choi, J. R. Development of point-of-care biosensors for COVID-19. Front. Chem. https://doi.org/10.3389/fchem.2020.00517 (2020).

Khayamian, M. A. et al. A label-free graphene-based impedimetric biosensor for real-time tracing of the cytokine storm in blood serum; suitable for screening COVID-19 patients. RSC Adv. 11, 34503–34515 (2021).

Irkham, I., Ibrahim, A. U., Nwekwo, C. W., Al-Turjman, F. & Hartati, Y. W. Current technologies for detection of COVID-19: Biosensors, artificial intelligence and internet of medical things (IoMT): Review. Sensors 23, 426 (2023).

Basu, A. et al. Performance of Abbott ID NOW COVID-19 rapid nucleic acid amplification test using nasopharyngeal swabs transported in viral transport media and dry nasal swabs in a New York City academic institution. J. Clin. Microbiol. https://doi.org/10.1128/JCM.01136-20 (2020).

Miripour, Z. S. et al. Real-time diagnosis of reactive oxygen species (ROS) in fresh sputum by electrochemical tracing; correlation between COVID-19 and viral-induced ROS in lung/respiratory epithelium during this pandemic. Biosens. Bioelectron. 165, 112435 (2020).

Rezaei, M. et al. Point of care diagnostics in the age of COVID-19. Diagnostics https://doi.org/10.3390/diagnostics11010009 (2021).

Benda, A. et al. COVID-19 testing and diagnostics: A review of commercialized technologies for cost, convenience and quality of tests. Sensors https://doi.org/10.3390/s21196581 (2021).

Ganguli, A. et al. Reverse transcription loop-mediated isothermal amplification assay for ultrasensitive detection of SARS-CoV-2 in saliva and viral transport medium clinical samples. Anal. Chem. 93, 7797–7807 (2021).

Scobie, H. Update on Omicron variant. WHO (2021).

Roda, A. et al. Smartphone-based biosensors: A critical review and perspectives. TrAC Trends Anal. Chem. https://doi.org/10.1016/j.trac.2015.10.019 (2016).

Xu, X. et al. Advances in smartphone-based point-of-care diagnostics. Proc. IEEE 103, 236–247 (2015).

Valera, E. et al. COVID-19 point-of-care diagnostics: Present and future. ACS Nano 15, 7899–7906 (2021).

Hernández-Neuta, I. et al. Smartphone-based clinical diagnostics: Towards democratization of evidence-based health care. J. Intern. Med. https://doi.org/10.1111/joim.12820 (2019).

Banik, S. et al. Recent trends in smartphone-based detection for biomedical applications: A review. Anal. Bioanal. Chem. 413, 2389–2406 (2021).

Ghimire, A. et al. AI and IoT solutions for tackling COVID-19 pandemic. In 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA) 1083–1092 (2020). https://doi.org/10.1109/ICECA49313.2020.9297454.

Kumar, S., Raut, R. D. & Narkhede, B. E. A proposed collaborative framework by using artificial intelligence-internet of things (AI-IoT) in COVID-19 pandemic situation for healthcare workers. Int. J. Healthc. Manag. 13, 337–345 (2020).

Alam, F., Almaghthawi, A., Katib, I., Albeshri, A. & Mehmood, R. iResponse: An AI and IoT-enabled framework for autonomous COVID-19 pandemic management. Sustainability 13, 3797 (2021).

Janowczyk, A. & Madabhushi, A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. J. Pathol. Inform. 7, 29 (2016).

Echle, A. et al. Deep learning in cancer pathology: A new generation of clinical biomarkers. Br. J. Cancer https://doi.org/10.1038/s41416-020-01122-x (2021).

Potluri, V. et al. An inexpensive smartphone-based device for point-of-care ovulation testing. Lab Chip 19, 59–67 (2019).

Ibrahim, A. U., Kibarer, A. G., Al-Turjman, F. & Kaba, S. Large-scaled detection of COVID-19 from X-ray using transfer learning. Int. J. Imaging Syst. Technol. 33, 1116–1128 (2023).

Ibrahim, A. U., Ozsoz, M., Serte, S., Al-Turjman, F. & Yakoi, P. S. Pneumonia classification using deep learning from chest X-ray images during COVID-19. Cogn. Comput. https://doi.org/10.1007/s12559-020-09787-5 (2021).

Ibrahim, A. U., Kibarer, A. G. & Al-Turjman, F. Computer-aided detection of tuberculosis from microbiological and radiographic images. Data Intell. 5, 1008–1032 (2023).

Chen, Y.-C. et al. Smartphone-based artificial intelligence using a transfer learning algorithm for the detection and diagnosis of middle ear diseases: A retrospective deep learning study. EClinicalMedicine 51, 101543 (2022).

Belkacem, A. N., Ouhbi, S., Lakas, A., Benkhelifa, E. & Chen, C. End-to-end AI-based point-of-care diagnosis system for classifying respiratory illnesses and early detection of COVID-19: A theoretical framework. Front. Med. https://doi.org/10.3389/fmed.2021.585578 (2021).

Chen, L. et al. Review of image classification algorithms based on convolutional neural networks. Remote Sens. 13, 4712 (2021).

Wang, B. et al. Smartphone-based platforms implementing microfluidic detection with image-based artificial intelligence. Nat. Commun. 14, 1341 (2023).

Umar Ibrahim, A., Ozsoz, M., Serte, S., Al-Turjman, F. & Habeeb Kolapo, S. Convolutional neural network for diagnosis of viral pneumonia and COVID-19 alike diseases. Expert Syst. 39, e12705 (2022).

Liu, Y. X., Yang, Y. & Chen, Y. H. Lung sound classification based on Hilbert-Huang transform features and multilayer perceptron network. In Proceedings—9th Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA ASC 2017 vols 2018-February (2018).

Chen, J. et al. Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography. Sci. Rep. https://doi.org/10.1038/s41598-020-76282-0 (2020).

Showkatian, E., Salehi, M., Ghaffari, H., Reiazi, R. & Sadighi, N. Deep learning-based automatic detection of tuberculosis disease in chest X-ray images. Pol. J. Radiol. 87, 118–124 (2022).

Bermejo-Peláez, D. et al. A Smartphone-based platform assisted by artificial intelligence for reading and reporting rapid diagnostic tests: Evaluation study in SARS-CoV-2 lateral flow immunoassays. JMIR Public Health Surveill. 8, e38533 (2022).

Alkhodari, M. & Khandoker, A. H. Detection of COVID-19 in smartphone-based breathing recordings: A pre-screening deep learning tool. PLoS ONE 17, e0262448 (2022).

Shi, Y. et al. COVID-19 infection: The perspectives on immune responses. Cell Death Differ. https://doi.org/10.1038/s41418-020-0530-3 (2020).

Teuwen, L. A., Geldhof, V., Pasut, A. & Carmeliet, P. COVID-19: The vasculature unleashed. Nat. Rev. Immunol. https://doi.org/10.1038/s41577-020-0343-0 (2020).

Li, L., Chow, V. T. K. & Tan, N. S. Targeting vascular leakage in lung inflammation. Oncotarget. https://doi.org/10.18632/oncotarget.4907 (2015).

Ghaderinia, M. et al. Capture-free deactivation of CTCs in the bloodstream; a metastasis suppression method by electrostatic stimulation of the peripheral blood. Biosens. Bioelectron. 183, 113194 (2021).

Jahangiri, M. et al. Low frequency stimulation induces polarization-based capturing of normal, cancerous and white blood cells: A new separation method for circulating tumor cell enrichment or phenotypic cell sorting. Analyst 145, 7636–7645 (2020).

Shalileh, S. et al. Label-free mechanoelectrical investigation of single cancer cells by dielectrophoretic-induced stretch assay. Sens. Actuators B Chem. 346, 130409 (2021).

Abadijoo, H. et al. Healing field: Using alternating electric fields to prevent cytokine storm by suppressing clonal expansion of the activated lymphocytes in the blood sample of the COVID-19 patients. Front. Bioeng. Biotechnol. https://doi.org/10.3389/fbioe.2022.850571 (2022).

Zandi, A. et al. Positive electrostatic therapy of metastatic tumors: Selective induction of apoptosis in cancer cells by pure charges. Cancer Med. 10, 7475–7491 (2021).

Mallia, P. et al. Role of airway glucose in bacterial infections in patients with chronic obstructive pulmonary disease. J. Allergy Clin. Immunol. 142, 815-823.e6 (2018).

Gill, S. K. et al. Increased airway glucose increases airway bacterial load in hyperglycaemia. Sci. Rep. https://doi.org/10.1038/srep27636 (2016).

Kalk, W. W. I. et al. Sialometry and sialochemistry: Diagnostic tools for Sjögren’s syndrome. Ann. Rheum. Dis. 60, 1110–1116 (2001).

Ravinder, R. et al. Saliva ferning, an unorthodox estrus detection method in water buffaloes (Bubalus bubalis). Theriogenology 86, 1147–1155 (2016).

Sharma, N. et al. Multifractal texture analysis of salivary fern pattern for oral pre-cancers and cancer assessment. IEEE Sens. J. 21, 9333–9340 (2021).

Maragou, M. et al. Tear and saliva ferning tests in Sjögren’s syndrome (SS). Clin. Rheumatol. 15, 125–132 (1996).

Ye, Z., Zhang, Y., Wang, Y., Huang, Z. & Song, B. Chest CT manifestations of new coronavirus disease 2019 (COVID-19): A pictorial review. Eur. Radiol. 30, 4381–4389 (2020).

Tan, M. & Le, Q. V. EfficientNet: Rethinking model scaling for convolutional neural networks. In 36th International Conference on Machine Learning, ICML 2019 (2019).

Deng, J. et al. ImageNet: A large-scale hierarchical image database. https://doi.org/10.1109/cvpr.2009.5206848 (2010).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Author information

Contributions

M.G.: software, investigation, data curation, methodology, formal analysis, writing—original draft. H.A.: data curation, formal analysis, validation, investigation, methodology. A.M.: software, validation, investigation. E.K., H.S.: investigation. R.S.: conceptualization, validation, data curation. S.T.: validation, investigation, data curation, formal analysis. A.K., A.A.M.-M.: formal analysis. M.K.: conceptualization, data curation, formal analysis, methodology, investigation, writing—original draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghaderinia, M., Abadijoo, H., Mahdavian, A. et al. Smartphone-based device for point-of-care diagnostics of pulmonary inflammation using convolutional neural networks (CNNs). Sci Rep 14, 6912 (2024). https://doi.org/10.1038/s41598-024-54939-4

DOI: https://doi.org/10.1038/s41598-024-54939-4
