Abstract

Nowadays, virtual learning environments have become widespread because they remove time and space constraints and make it possible to share high-quality learning resources. Through human–computer interaction, student behaviors are recorded instantly. This work aims to design an educational recommendation system based on each individual's interests in educational resources. The system is evaluated on whether the user clicks on or downloads a resource, so that appropriate resources can be suggested to users. In online tutorials, in addition to the problem of choosing the right resource, we face the challenge of accounting for the diversity of users' preferences and tastes, especially their short-term interests in the near future, at the beginning of a session. We assume that the user's interests consist of two parts: (1) long-term interests, which reflect the user's stable interests derived from the history of the user's dynamic activities, and (2) short-term interests, which reflect the user's current interests. Because it uses BiLSTM networks and their incremental learning capability, the proposed model accommodates changes in learners' behavior. With an average accuracy of 0.9978 and a loss of 0.0051, it offers more appropriate recommendations than similar works.


Introduction

In recent years, educators' use of learning resources has increased significantly. Many educational resources are distributed across different repositories covering a wide range of educational topics and goals. However, due to information overload, many learners have difficulty finding relevant and valuable learning resources that meet their learning needs1.

Although recommender systems have been very successful in e-commerce, building an accurate recommender of learning resources in e-learning faces challenges such as differences in learners' characteristics, including learning style, knowledge level, and learning pattern2. Many existing recommendation methods do not consider these differences. This problem can be mitigated by adding supplementary information about learners to the recommendation process. In addition, many recommendation methods face issues such as cold start and data sparsity2.

In recent years, deep learning has achieved significant results on a variety of problems3,4.

Recently, deep learning methods have driven significant growth in recommender systems. Recurrent neural networks (RNNs) play an essential role in modeling session-based recommender systems and promise further improvements. Unlike many other recommender models, an RNN uses an ordered sequence, that is, the user's interaction history, to build a comprehensive user profile that helps improve the recommendation5,6,7.

Much work has been done on combining long-term and short-term user interests8,9,10. However, user preferences for items evolve continuously over time11, while these models implicitly assume that user interests are static, an assumption that is false in many scenarios5,6,8,12. Therefore, these methods perform poorly when interests are content-sensitive and transient. There are also session-based methods6,12 that address interest drift to overcome the assumption of static user interests. However, these methods assume that all items in a session have the same effect, which is false, especially when users interact with items that have different properties. To solve this problem, we propose a long-term and short-term attention-based model for recommending educational resources. None of the studies mentioned above developed an educational recommender that automatically provides useful advice, although such a recommender is much needed in educational applications. Our model recommends better learning resources using a hybrid attention-based RNN that simultaneously incorporates two types of data: users' short-term and long-term interests, and updated educational resources. We applied the attention technique to the user's short-term interests, and in the compression phase we weighted user interactions with a time vector, so that the user's more relevant attributes have a greater effect on the recommended educational resource. Unlike clustering techniques, which discard the least frequent interactions, our approach preserves all user interactions. Earlier works used only the user's past information for training, whereas the network in this study looks both backward and forward, which allows us to capture changes in the learner's behavior and suggest up-to-date recommendations.
The proposed model uses a bidirectional LSTM (BiLSTM) deep neural network to incorporate both the user's long-term and short-term interests. We expect the proposed method to improve next-item recommendation, especially at the beginning of sessions, and to mitigate the cold-start problem at the start of each session.
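To make the "looks both backward and forward" idea concrete, here is a minimal NumPy sketch of a BiLSTM forward pass over one user's interaction sequence. It is not the authors' trained model: the gate ordering, the dimensions, the random initialization, and the `interactions` array (standing in for embedded click/download events) are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_pass(xs, W, U, b, hidden):
    """Run a single-direction LSTM over xs of shape (T, d).

    W: (4*hidden, d), U: (4*hidden, hidden), b: (4*hidden,).
    Gate order (assumed for this sketch): input, forget, candidate, output.
    """
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    outputs = []
    for x in xs:
        z = W @ x + U @ h + b
        i = sigmoid(z[0:hidden])
        f = sigmoid(z[hidden:2 * hidden])
        g = np.tanh(z[2 * hidden:3 * hidden])
        o = sigmoid(z[3 * hidden:4 * hidden])
        c = f * c + i * g          # update the cell state
        h = o * np.tanh(c)         # expose part of it as the hidden state
        outputs.append(h)
    return np.stack(outputs)

def bilstm(xs, params_fwd, params_bwd, hidden):
    """Concatenate a forward pass with a pass over the reversed sequence."""
    fwd = lstm_pass(xs, *params_fwd, hidden)
    bwd = lstm_pass(xs[::-1], *params_bwd, hidden)[::-1]  # re-align to original time order
    return np.concatenate([fwd, bwd], axis=1)              # shape (T, 2*hidden)

def init_params(rng, d, hidden):
    return (rng.normal(0, 0.1, (4 * hidden, d)),
            rng.normal(0, 0.1, (4 * hidden, hidden)),
            np.zeros(4 * hidden))

rng = np.random.default_rng(0)
d, hidden, T = 8, 16, 5
interactions = rng.normal(size=(T, d))  # hypothetical embedded interaction events
params_fwd = init_params(rng, d, hidden)
params_bwd = init_params(rng, d, hidden)
states = bilstm(interactions, params_fwd, params_bwd, hidden)
print(states.shape)  # (5, 32)
```

Each time step's output combines a summary of everything before it (forward half) with everything after it (backward half), which is what lets a bidirectional model use the whole session when encoding any single interaction.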

Session-based recommender systems are not a new research topic7,8,13; they are better suited to learning dynamic and sequential user behaviors than traditional recommender systems. Such systems aim to generate results that are close to the user's needs and to make predictions based on the user's preferences. In virtual learning environments, educational recommender systems deliver learning objects based on the student's characteristics, preferences, and learning needs. A learning object is a unit of educational content that, once found and retrieved, can assist students in their learning process14. According to the IEEE3 definition, a learning object is any digital or non-digital entity with instructional design features that can be used, reused, or referenced during computer-supported learning.

Because deep learning can solve many tasks with excellent results, universities and industry are competing to apply it to a wider range of applications15. Recently, deep learning architectures have dramatically transformed recommenders and improved their performance. Deep learning can effectively capture non-linear and non-trivial user-item relationships and encode more complex abstractions as high-level data representations. It can also capture complex relationships within data from other sources, such as contextual, textual, and visual information15. The growing volume of data in the big data era poses challenges for real-world applications. Scalability is therefore essential for recommendation models in real-world systems, and time complexity significantly affects model selection. Fortunately, deep learning is very effective and reliable in this respect16.

Literature review

In the following, the studies performed on educational recommenders will be reviewed.

Data mining methods

Many educational researchers focus on extracting information about learning progress to help students effectively. Yago et al.15 introduced an ontology network-based student model for multiple learning environments (ON-SMMILE), a semantic web-based model that assists teachers in educating students13. It follows a constructivist learning model in which students are highly involved in learning. This student model supports obtaining, analyzing, and categorizing meaningful information about students and their knowledge status. It can also identify possible weaknesses and mistakes in learning, which helps the educator decide what recommendation to give each student. One advantage of this model is that different processing methods can be applied. Applying a combination of monitoring and data processing methods to education provides a consistent and broad automated view of student learning.

Knowing the information that defines the user profile and identifies the user's interests is essential for building a personalized recommender. Qiao and Xu7 introduced an infrastructure that extracts user profiles and educational content from the Facebook social network and suggests educational resources to the user17. It uses information extraction and semantic web methods to extract, enrich, and define the user profile and interests. Recommendations draw on repositories of learning objects, related data, and video repositories, and take into account how long the user has been using the Web. Evaluating the user's profile and behavioral characteristics via social media has the advantage that relevant educational information can be offered to the user, contributing to his or her academic success. In addition, the user's personal information and even his or her educational interests are always kept up to date. However, challenges remain, such as access to information and the choice of information extraction methods.

Tarus et al.2 introduced a hybrid knowledge-based recommender system based on ontology and sequential pattern mining (SPM) to recommend e-learning resources to learners2. An ontology represents knowledge about learners and educational resources, while the SPM algorithm discovers learners' sequential learning patterns. One advantage of this method is that it can reduce the cold-start and sparsity problems.

Kuznetsov et al.40 showed how an ontology of students' skills can reduce the cold-start problem in educational recommender systems. Students' profiles are created from their results in university courses18, and the aim is to offer students projects and industrial opportunities. The authors used collaborative filtering algorithms and rule-based recommendations derived from user interactions in their system, and they used profile-based recommendation to overcome the cold-start problem.

Since learners' profiles differ, using recommender systems for personalized learning in e-learning requires an educational program prepared according to learners' needs. With knowledge of learners' profiles, more appropriate learning objects can be recommended to improve the learning process. Bourkoukou and Bachari17 proposed LearnFit, a system that automatically adapts to learners' dynamic preferences19. It recognizes different patterns in learners' learning styles and habits by testing their psychological model and mining their web browsing logs. To overcome the cold-start problem, a personalized learning scenario consistent with the learner's learning style is first obtained using the Felder-Silverman model20. Then, the users' habits and preferences are obtained using data mining methods. Finally, the learning scenario is reviewed and updated by combining collaborative filtering and association rule mining.

Ludewig and Jannach11 introduced a recommender system that helps learners choose their lessons21. Choosing the right lessons in the early years can improve the research process. This combined method uses N-gram query classification and an ontology to retrieve useful information and make accurate recommendations. In the preprocessing step, the query is converted into N-grams. Query expansion is then applied using WordNet to retrieve similar words. Matching lessons are then retrieved, and duplicate lessons are removed. In the last step, related lessons are extracted using the lesson ontology. This method is faster than classical methods, improves learners' performance and satisfaction, and gives them easier access to information.
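The preprocessing, expansion, retrieval, and de-duplication steps can be sketched as follows. This is an illustrative assumption, not the authors' code: a small hypothetical `SYNONYMS` table stands in for WordNet, `LESSONS` is a made-up catalogue, matching is plain substring search, and the final ontology step is omitted.

```python
def ngrams(tokens, n):
    """All contiguous n-token phrases in the token list."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def preprocess(query, max_n=2):
    """Convert a query into its 1-grams up to max_n-grams."""
    tokens = query.lower().split()
    grams = []
    for n in range(1, max_n + 1):
        grams.extend(ngrams(tokens, n))
    return grams

# Hypothetical synonym table standing in for a WordNet lookup.
SYNONYMS = {"ml": ["machine learning"], "stats": ["statistics"]}

def expand(grams):
    """Append the synonyms of every n-gram to the term list."""
    expanded = list(grams)
    for g in grams:
        expanded.extend(SYNONYMS.get(g, []))
    return expanded

# Hypothetical lesson catalogue: lesson id -> title.
LESSONS = {
    "L1": "introduction to machine learning",
    "L2": "applied statistics",
    "L3": "machine learning for vision",
}

def retrieve(query):
    hits = []
    for term in expand(preprocess(query)):
        for lesson_id, title in LESSONS.items():
            if term in title:
                hits.append(lesson_id)
    # Remove duplicate lessons while keeping first-seen order.
    return list(dict.fromkeys(hits))

print(retrieve("ML stats"))  # ['L1', 'L3', 'L2']
```

Without the expansion step, the abbreviated query "ML stats" would match no titles at all, which is the motivation for expanding the query before retrieval.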

Recommendation systems (RSs) have been adapted to education as a means of offering each learner the educational resources best suited to his or her profile or needs, as is done in e-commerce. In terms of filtering methods, RSs are based on two essential techniques: the first focuses on the specific characteristics of the user, and the second on the preferences and tendencies of the group to which the user belongs. To improve the quality of recommendations in education, the study in1 experimented with a hybrid approach that combines content-based and collaborative filtering. The experiment tested whether hybridizing content-based and collaborative filtering methods improves the relevance of recommendations in an online educational context. The results showed that the hybrid approach works best both when the public institute is considered alone and when the public and private institutes are considered together. Taking into account both the individual and social characteristics of a learner can therefore improve the relevance of educational resource recommendations in an e-learning environment.
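One common way to hybridize the two techniques is a weighted linear blend of a content-based score (individual characteristics) and a collaborative score (group tendencies). The sketch below assumes that scheme; the blend weight `alpha`, the two-dimensional interest vectors, and the 1 to 5 rating scale are hypothetical and are not taken from the study in1.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def content_score(learner_profile, resource_features):
    """Individual side: similarity between the learner's interests and the resource."""
    return cosine(learner_profile, resource_features)

def collaborative_score(resource, peer_ratings):
    """Social side: mean 1-5 rating from the learner's group, scaled to [0, 1]."""
    ratings = [r[resource] for r in peer_ratings if resource in r]
    return sum(ratings) / (5.0 * len(ratings)) if ratings else 0.0

def hybrid_score(alpha, learner_profile, resource, features, peer_ratings):
    return (alpha * content_score(learner_profile, features[resource])
            + (1 - alpha) * collaborative_score(resource, peer_ratings))

profile = [1.0, 0.0]                              # hypothetical interest in (programming, statistics)
features = {"r1": [1.0, 0.0], "r2": [0.0, 1.0]}   # hypothetical resource descriptors
peers = [{"r1": 4, "r2": 5}, {"r2": 5}]           # hypothetical ratings from the learner's group
scores = {r: hybrid_score(0.5, profile, r, features, peers) for r in features}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['r1', 'r2']
```

Here the group rates `r2` higher, but the learner's own profile pulls `r1` to the top; changing `alpha` shifts the balance between individual and social signals.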

To ensure the quality of resource recommendation and to address the low accuracy, long recommendation time, and high data loss rate of traditional resource recommendation methods, a personalized recommendation system for English teaching resources based on the multi-K nearest neighbor regression algorithm has been designed.

Following the overall architecture of a personalized teaching-resource recommendation system, the work in2 designs a resource browsing module, a teaching-resource detail page recommendation module, and a teaching-resource database. Based on the multi-K nearest neighbor regression algorithm, a missing-data reconstruction algorithm for English teaching resources is proposed to avoid losing important data and to reduce the data loss rate. Finally, student users' path interest is derived from their browsing path selection and access time to personalize the recommendation of English teaching resources. Experimental results show that the system achieves high recommendation accuracy, short recommendation time, and a low data loss rate. The work in13 focuses on collaborative filtering and proposes a collaborative filtering recommendation algorithm with an improved user model.
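The missing-data reconstruction idea can be illustrated with plain K-nearest-neighbor regression: each missing value is estimated from the same column in the k most similar rows. This is a simplified sketch under stated assumptions: it uses a single fixed `k` rather than the multi-K variant of2, measures distance only over columns both rows have observed, and the sample matrix is hypothetical.

```python
def knn_impute(rows, k=2):
    """Fill None entries with the mean of that column over the k nearest rows."""
    filled = [list(r) for r in rows]
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for m, other in enumerate(rows):
                if m == i or other[j] is None:
                    continue
                # Distance over the columns both rows actually observed.
                shared = [(a, b) for a, b in zip(row, other)
                          if a is not None and b is not None]
                if not shared:
                    continue
                dist = sum((a - b) ** 2 for a, b in shared) / len(shared)
                candidates.append((dist, other[j]))
            candidates.sort(key=lambda t: t[0])
            nearest = candidates[:k]
            if nearest:
                filled[i][j] = sum(v for _, v in nearest) / len(nearest)
    return filled

# Hypothetical usage matrix: rows are resources, columns are usage statistics.
rows = [
    [1.0, 2.0, None],
    [1.0, 2.0, 3.0],
    [1.1, 2.1, 5.0],
    [9.0, 9.0, 100.0],
]
print(knn_impute(rows)[0])  # [1.0, 2.0, 4.0]
```

The outlying fourth row is far away in the observed columns, so it does not influence the reconstructed value.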

First, the algorithm accounts for rating differences caused by different users' rating habits and adopts a decoupling normalization method to normalize the user rating data. Second, because user interest drifts and fades over time, a forgetting function simulates the forgetting law of ratings, and a time-forgetting weight is applied to user ratings to improve recommendation accuracy. Finally, the similarity calculation used to find the nearest-neighbor set is improved: an effective weight factor is added to the Pearson similarity to obtain a more accurate and reliable nearest-neighbor set.
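The second and third steps can be sketched as follows. The exact forgetting function and weight factor of13 are not given here, so this sketch assumes an exponential forgetting curve with a hypothetical `half_life`, and uses significance weighting (scaling Pearson similarity by `min(n, gamma) / gamma`, where `n` is the number of co-rated items) as a stand-in for the effective weight factor. The decoupling normalization step is not reproduced.

```python
import math

def forgetting_weight(t_rating, t_now, half_life):
    """Exponential forgetting: a rating loses half its weight every half_life time units."""
    return 0.5 ** ((t_now - t_rating) / half_life)

def time_weighted(ratings, t_now, half_life):
    """ratings: item -> (score, timestamp); returns item -> time-weighted score."""
    return {item: score * forgetting_weight(ts, t_now, half_life)
            for item, (score, ts) in ratings.items()}

def pearson(u, v):
    """Pearson correlation over co-rated items; also returns the overlap size."""
    common = [i for i in u if i in v]
    n = len(common)
    if n < 2:
        return 0.0, n
    mu = sum(u[i] for i in common) / n
    mv = sum(v[i] for i in common) / n
    num = sum((u[i] - mu) * (v[i] - mv) for i in common)
    den = (math.sqrt(sum((u[i] - mu) ** 2 for i in common))
           * math.sqrt(sum((v[i] - mv) ** 2 for i in common)))
    return (num / den if den else 0.0), n

def effective_similarity(u, v, gamma=50):
    """Down-weight similarities computed from few co-rated items."""
    sim, n = pearson(u, v)
    return sim * min(n, gamma) / gamma

# Hypothetical ratings: item -> (score, day rated)
alice = {"a": (1, 100), "b": (2, 100), "c": (3, 100)}
bob = {"a": (2, 100), "b": (4, 100), "c": (6, 100)}
ua = time_weighted(alice, t_now=100, half_life=30)
ub = time_weighted(bob, t_now=100, half_life=30)
print(round(effective_similarity(ua, ub, gamma=6), 6))  # 0.5
```

The two users are perfectly correlated, but because they share only three items against a threshold of six, their effective similarity is halved, which keeps thinly supported neighbors from dominating the nearest-neighbor set.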

Experimental results show that the proposed method performs better in both recommendation accuracy and recommendation efficiency.

Development of traditional methods

Traditional online learning systems are based on different filtering methods that often rely on user behavior toward different resources. Recommending resources drawn from users with similar behavior often does not yield satisfactory results.

Hagemann et al.20 proposed a personalized recommender to help students make informed decisions about their learning path22. The study aimed to improve how students discover and select modules, using a hybrid recommender system explicitly designed to help students better explore the available options. By combining content-based similarity with diversity based on structural information about the module space, it is possible to better predict choices that are consistent with students' preferences and goals. One advantage of this approach is that it adds diversity to the set of recommendations.

Tseng et al.21 introduced an adaptive learning and recommendation (ALR) platform that serves as a tracking tool for educators to observe and monitor student learning activities23. Through the ALR platform, students can follow a learning path. The strengths and weaknesses of students' learning can be revealed by analyzing their learning activities, learning process, and learning efficiency. The aim is to create a concept map for adaptive learning that provides an educational advisor for students.

Alinani et al. (2016) proposed a heterogeneous educational resource recommender system based on user preferences24. This system not only meets users' needs but also mitigates some problems common to recommender systems, such as cold start. To do this, the system makes recommendations based on the user's recent requests and learns from the user's behavior during that process. A key feature is that each recommended resource is assigned a weight calculated from the user's response. Recommending heterogeneous resources lets users quickly find different types of related resources, thereby increasing their productivity.
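The idea of assigning each recommended resource a weight driven by the user's response can be sketched with a simple incremental update that moves the weight toward a reward implied by the response. The response-to-reward mapping, the learning rate `lr`, and the resource names are hypothetical; the actual weighting formula of24 is not given here.

```python
# Hypothetical mapping from user responses to target rewards.
RESPONSE_REWARD = {"download": 1.0, "click": 0.6, "ignore": 0.0}

def update_weight(weight, response, lr=0.2):
    """Move the resource weight a fraction lr toward the reward of the response."""
    target = RESPONSE_REWARD[response]
    return weight + lr * (target - weight)

def rank(weights):
    """Order resources from highest to lowest weight."""
    return sorted(weights, key=weights.get, reverse=True)

weights = {"video": 0.5, "pdf": 0.5, "quiz": 0.5}
for resource, response in [("pdf", "download"), ("video", "ignore")]:
    weights[resource] = update_weight(weights[resource], response)
print(rank(weights))  # ['pdf', 'quiz', 'video']
```

Because each update only moves the weight part of the way, a single ignored recommendation lowers a resource's rank without removing it, so the system keeps learning from later responses.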

Bourkoukou and Achbarou23 aimed to build a personalized recommender system that delivers useful content and better recommendations in the shortest possible time25. The proposed system is a web-based client-side application that uses user profiles to form neighborhoods and computes weighted predictions. To overcome the cold-start problem, that is, the lack of information about learners and their interests at first contact, learner profiles are created from their learning styles. Resources of interest to the user are suggested through predictions computed by new functions combined with collaborative filtering. This method mitigates both the cold-start and data sparsity problems.
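A standard way to compute weighted predictions from a neighborhood is the mean-centered weighted average used in user-based collaborative filtering. The sketch below assumes that formula and precomputed similarities; the learner names, ratings, and similarity values are hypothetical, and the learning-style profile construction of25 is not modeled.

```python
def mean(ratings):
    return sum(ratings.values()) / len(ratings)

def predict(user, item, ratings, similarity, k=2):
    """Mean-centered weighted average over the k most similar users who rated the item."""
    neighbours = [(similarity[user][v], v) for v in ratings
                  if v != user and item in ratings[v]]
    neighbours.sort(reverse=True)
    neighbours = neighbours[:k]
    norm = sum(abs(s) for s, _ in neighbours)
    base = mean(ratings[user])
    if norm == 0:
        return base  # no usable neighbors: fall back to the user's own mean
    offset = sum(s * (ratings[v][item] - mean(ratings[v])) for s, v in neighbours)
    return base + offset / norm

# Hypothetical learner ratings and precomputed neighbor similarities.
ratings = {
    "ana": {"r1": 4, "r2": 2},
    "ben": {"r1": 5, "r2": 3, "r3": 4},
    "cam": {"r1": 1, "r3": 3},
}
similarity = {"ana": {"ben": 0.9, "cam": 0.1}}
print(round(predict("ana", "r3", ratings, similarity), 3))  # 3.1
```

Mean-centering lets neighbors who rate generously or harshly contribute only their relative preference for the item, not their overall rating level.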

Machine learning-based methods

Thanh-Nhan et al.24 introduced a method for integrating social networks into intelligent tutoring systems (ITS) to predict student performance26. To do this, matrix factorization is combined with social network information. One advantage of this work is that relationships between students can be used to build models, improving prediction results.
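The combination of matrix factorization with social links can be sketched with a socially regularized objective: fit the observed scores, and additionally pull the latent factors of connected students toward each other. This specific formulation is an assumption standing in for the method of26; the hyperparameters (`k`, `lr`, `reg`, `beta`, `epochs`) and the data are hypothetical.

```python
import math
import random

def predict(P, Q, u, i):
    return sum(a * b for a, b in zip(P[u], Q[i]))

def train_social_mf(ratings, friends, n_users, n_items, k=2, lr=0.05,
                    reg=0.01, beta=0.1, epochs=500, seed=0):
    rnd = random.Random(seed)
    P = [[rnd.gauss(0, 0.1) for _ in range(k)] for _ in range(n_users)]  # student factors
    Q = [[rnd.gauss(0, 0.1) for _ in range(k)] for _ in range(n_items)]  # exercise factors
    for _ in range(epochs):
        # SGD on squared error with L2 regularization.
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
        # Social term: pull each pair of connected students' factors together.
        for u, v in friends:
            for f in range(k):
                diff = P[u][f] - P[v][f]
                P[u][f] -= lr * beta * diff
                P[v][f] += lr * beta * diff
    return P, Q

def rmse(P, Q, ratings):
    return math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings))

# Hypothetical data: (student, exercise, normalized score) and one friendship link.
ratings = [(0, 0, 1.0), (0, 1, 0.2), (1, 0, 0.9), (2, 1, 0.8)]
friends = [(0, 1)]
P0, Q0 = train_social_mf(ratings, friends, 3, 2, epochs=0)
P, Q = train_social_mf(ratings, friends, 3, 2)
print(round(rmse(P0, Q0, ratings), 3), round(rmse(P, Q, ratings), 3))
print(round(predict(P, Q, 1, 1), 3))  # predicted score of student 1 on an unseen exercise
```

Student 1 has no score on exercise 1, so the prediction for that pair is shaped partly by the friendship link to student 0, which is how relationships between students feed into the model.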

Pupara et al.25 developed a recommendation and modeling system that uses students' characteristics and opinions, together with data analysis methods, to predict and select the most appropriate institution for each student27. The system consists of three main stages (design, development, and analysis) for suggesting a suitable university to students. Decision trees and association rules were used, giving acceptable results for four factors: university compulsion, trust in institutions, learner skills, and family income.
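The association-rule part of such a system rests on two quantities: the support of an itemset and the confidence of a rule. The sketch below mines only pairwise rules by brute force; the attribute names, transactions, and thresholds are hypothetical, and the decision-tree component is not shown.

```python
from itertools import combinations

def rules(transactions, min_support=0.4, min_confidence=0.7):
    """Return pairwise rules (lhs, rhs, support, confidence) above both thresholds."""
    n = len(transactions)
    items = sorted({i for t in transactions for i in t})

    def support(itemset):
        return sum(1 for t in transactions if itemset <= t) / n

    found = []
    for a, b in combinations(items, 2):
        for lhs, rhs in ((a, b), (b, a)):
            s = support({lhs, rhs})
            if s < min_support:
                continue
            conf = s / support({lhs})  # P(rhs | lhs)
            if conf >= min_confidence:
                found.append((lhs, rhs, s, conf))
    return found

# Hypothetical student records: each is the set of attributes observed for one student.
transactions = [
    {"high_skill", "trusts_public", "chose_public"},
    {"high_skill", "chose_public"},
    {"low_income", "chose_public"},
    {"high_skill", "trusts_public", "chose_public"},
    {"low_income", "chose_private"},
]
for lhs, rhs, s, c in rules(transactions):
    print(f"{lhs} -> {rhs}  support={s:.2f} confidence={c:.2f}")
```

A rule such as `high_skill -> chose_public` with confidence 1.0 means every high-skill student in the sample chose a public institution, which is the kind of pattern a recommender can turn into a suggestion.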

Rodríguez et al.12 proposed an educational recommender system based on argumentation theory that can either combine content-based, collaborative, and knowledge-based recommendation methods or act as a new recommendation method in its own right. This method recommends educational objects to the student and can generate arguments that justify why each object is suitable14.

Artificial intelligence-based methods

Duque Méndez et al.26 proposed a CBR-based intelligent personal assistant that can perform user-requested operations and access information from remote sources28. Because it is used in web searches, it is a particular kind of recommender system: the personal assistant lets the user interact with, display, and select items according to his or her needs and preferences. The proposed assistant enables users to select educational resources from learning repositories. To this end, a recommender system was implemented using case-based reasoning (CBR), an artificial intelligence method that solves new problems the way humans do, drawing on experience gained in similar situations to make decisions in similar cases29. In this method, the elements of students' profiles and the learning object metadata must first be identified. Then a criterion for retrieving the most similar items must be defined, together with how these items are updated. One advantage of this work is its use of diverse educational resources.
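The retrieve and retain steps of a CBR cycle can be sketched as follows: stored cases pair a student profile with the resource that served it, the most similar case is retrieved with a weighted attribute-match criterion, and a successful solution is retained as a new case. The attribute names, weights, and resources are hypothetical, and the revise step is omitted.

```python
def similarity(profile, case_profile, weights):
    """Weighted fraction of attributes on which the two profiles agree."""
    total = sum(weights.values())
    score = sum(w for attr, w in weights.items()
                if profile.get(attr) == case_profile.get(attr))
    return score / total

def retrieve(profile, case_base, weights, top=1):
    """Return the top most similar stored cases."""
    ranked = sorted(case_base,
                    key=lambda c: similarity(profile, c["profile"], weights),
                    reverse=True)
    return ranked[:top]

def recommend_and_retain(profile, case_base, weights, feedback_ok):
    """Reuse the best case's resource; store the new case if the student accepted it."""
    best = retrieve(profile, case_base, weights)[0]
    if feedback_ok:
        case_base.append({"profile": dict(profile), "resource": best["resource"]})
    return best["resource"]

weights = {"level": 2.0, "style": 1.0, "language": 1.0}  # hypothetical attribute importance
case_base = [
    {"profile": {"level": "beginner", "style": "visual", "language": "es"},
     "resource": "intro-video"},
    {"profile": {"level": "advanced", "style": "verbal", "language": "es"},
     "resource": "research-paper"},
]
student = {"level": "beginner", "style": "verbal", "language": "es"}
print(recommend_and_retain(student, case_base, weights, feedback_ok=True))  # intro-video
```

Weighting `level` more heavily than `style` means a matching knowledge level outweighs a matching learning style; in a real system these weights are part of the retrieval criterion that has to be defined.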

Neto (2018)30 developed a multi-agent recommender system that helps e-learning recommendation systems offer students the most appropriate educational resources. The work uses multi-agent technology to build a system that combines web usage mining with recommendation algorithms, such as content-based methods and collaborative filtering, to find the most appropriate learning resources. The combined method performs better than each algorithm alone.

Advances have also been made in building models for searching and retrieving learning objects (LOs) stored in heterogeneous repositories. Paula Rodríguez (2013) proposed integrating two multi-agent models, one focused on delivering specific LOs adapted to a student's profile and the other on delivering LOs to teachers to help them create courses31. The goal is an integrated multi-agent model that meets the needs of both students and educators and thus improves the learning and teaching process.

The work in3 presents a personalized education system based on hybrid intelligent recommendations. Specifically, it proposes a hybrid artificial intelligence framework that provides targeted recommendations for implementing integrated standard lesson plans, which serve as the main tool for creating flexible, differentiated pedagogical programs that meet the personal needs and particularities of each student.

In5, a multi-level methodological proposal for the automatic adaptation of open learning resources is presented, providing tools that help users access and properly use their metadata. E-learning environments were studied with students with disabilities to determine their real needs and preferences; the study emphasizes the need for adequate explanations and coherent alternative text for images, correct subtitles for videos, and audio-to-text conversion, all of which relate to the proposal. The research aims to contribute an automatic support tool for producing accessible learning resources that are properly tagged for search and reuse. It also aims to support researchers in AI applications in addressing long-standing challenges and opportunities in virtual training, and to provide an overview that can help producers of training materials maintain their resources and users' interest in accessing them.

Methods based on neural networks and deep learning networks

Paradarami et al. (2017)32 proposed a hybrid recommender system for rating prediction based on an artificial neural network framework that combines the capabilities of content-based methods and collaborative filtering during training. The method combines content (user and business), collaboration (reviews and ratings), and rating-related metadata in a single supervised learning model, which gives better results than collaborative filtering recommendation systems. A multi-class classification model is developed to predict the class of a rating. One advantage is that the ANN can be extended to other classes and to any user in the system, which makes the model highly scalable.

Xiao Wang et al. (2017) proposed a deep learning-based e-learning recommendation framework33 that can learn from large-scale data. The deep network used is the GRU34, a recurrent network closely related to the LSTM. This work has several advantages. First, it uses the K-nearest neighbor method to train the model, and its accuracy is guaranteed. Second, it can recommend new items whose similarities cannot be calculated directly. Third, it dramatically reduces computational cost, which benefits real-world recommender applications.
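For reference, a single GRU step can be written in a few lines. This NumPy sketch omits bias terms and uses the convention h_t = (1 - z) * h_{t-1} + z * h̃ (some texts swap the roles of z and 1 - z); the weight shapes, random initialization, and input sequence are assumptions, not details of the framework in33.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h, Wz, Uz, Wr, Ur, Wh, Uh):
    """One GRU update (biases omitted for brevity)."""
    z = sigmoid(Wz @ x + Uz @ h)               # update gate: how much to overwrite
    r = sigmoid(Wr @ x + Ur @ h)               # reset gate: how much history the candidate sees
    h_tilde = np.tanh(Wh @ x + Uh @ (r * h))   # candidate state
    return (1 - z) * h + z * h_tilde           # interpolate old and candidate states

rng = np.random.default_rng(1)
d, hidden = 4, 3
Wz, Wr, Wh = (rng.normal(0, 0.5, (hidden, d)) for _ in range(3))
Uz, Ur, Uh = (rng.normal(0, 0.5, (hidden, hidden)) for _ in range(3))
h = np.zeros(hidden)
for x in rng.normal(size=(6, d)):  # six hypothetical interaction embeddings
    h = gru_step(x, h, Wz, Uz, Wr, Ur, Wh, Uh)
print(h)
```

Compared with an LSTM, the GRU merges the cell and hidden states and uses two gates instead of three, which is why it is often described as a lighter relative of the LSTM.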

In54, an advanced recommendation method called AROLS is proposed. It integrates a comprehensive learning style model for online students and generates recommendations using the learning style as prior knowledge. First, it creates clusters of learners with different learning styles. Second, it uses students' browsing histories to extract each cluster's behavioral patterns, represented by a learning-resource similarity matrix and association rules. Finally, it generates a variable-size set of personalized recommendations based on the data mining results of the previous steps. Experiments show that the method produces more accurate recommendations while keeping a computational advantage over traditional collaborative filtering (CF).

In35, the researchers develop a recommendation mechanism for an adaptive learning system that incorporates learning style theory by combining traditional recommendation algorithms with clustering. Experiments show that the proposed method performs better and has a computational advantage over conventional recommendation.
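Combining clustering with a traditional recommender can be as simple as restricting the recommender to the learner's cluster. In this sketch, learners are assigned to the nearest of two hypothetical learning-style centroids, and the learner receives the resource most often used by peers in the same cluster that he or she has not yet seen. The centroids, style scores, and histories are assumptions, and popularity counting stands in for whatever base algorithm35 uses.

```python
from collections import Counter

def nearest(point, centroids):
    """Index of the closest centroid by squared Euclidean distance."""
    return min(range(len(centroids)),
               key=lambda c: sum((p - q) ** 2 for p, q in zip(point, centroids[c])))

def recommend(target, learners, histories, centroids, n=1):
    """Most frequent unseen resources among learners in the target's cluster."""
    cluster = nearest(learners[target], centroids)
    peers = [u for u in learners
             if u != target and nearest(learners[u], centroids) == cluster]
    counts = Counter(r for u in peers for r in histories[u]
                     if r not in histories[target])
    return [r for r, _ in counts.most_common(n)]

centroids = [(1.0, 0.0), (0.0, 1.0)]  # hypothetical (visual, verbal) style prototypes
learners = {"a": (0.9, 0.1), "b": (0.8, 0.2), "c": (0.7, 0.3), "d": (0.1, 0.9)}
histories = {
    "a": {"video1"},
    "b": {"video1", "diagram"},
    "c": {"diagram", "slides"},
    "d": {"podcast"},
}
print(recommend("a", learners, histories, centroids))  # ['diagram']
```

Restricting the search to one cluster is also where the computational advantage comes from: only peers with a similar learning style are scanned, rather than the whole user base.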

In36, a method that integrates online learning style features into the recommendation algorithm is proposed. It combines collaborative filtering (CF), association rule mining (ARM), and online learning style (OLS) features, and achieves a 25% improvement over the scheme without student characteristics.

In37, attention-based convolutional neural networks (CNNs) are used to collect user information, predict user ratings, and recommend top courses. Collaborative filtering and an attention-based CNN are integrated to enable real-time recommendations and reduce server workload. Learner behaviors and learning history are represented as feature vectors, and the attention mechanism improves relationship estimation by accounting for the difference between the estimated scores and the actual scores that users provide for training the neural network. Finally, the trained model recommends courses to students. However, as MOOCs grow and the number of courses increases, the system may tend to recommend similar courses. The learner module captures the user's learning type and habits, such as weekly study time and dropout rate.

With the rapid rise of MOOC platforms, online learning resources are multiplying. Because learners differ in their ability to recognize and structure knowledge, they cannot quickly identify the learning resources they are interested in. Traditional collaborative filtering recommendation techniques perform poorly and suffer from problems such as data sparsity and cold start. In addition, they cannot effectively handle duplicate recommended content or the high-dimensional, non-linear data of online learners, which makes resource recommendation inefficient. To increase learner productivity and enthusiasm, Zhang et al. (2018) introduced a highly accurate MOOC resource recommendation model (MOOCRC) based on deep belief networks (DBNs)33.

This method extracts in-depth features of learners and lesson content and incorporates learners' behavioral characteristics to construct user-lesson feature vectors as input to the deep model. Learners' scores on lessons serve as labels for supervised learning. The MOOCRC model is pre-trained without supervision and then fine-tuned using supervised feedback, with the model parameters adjusted repeatedly. To evaluate the effectiveness of MOOCRC, an experimental analysis is performed on selected learner data from the starC MOOC platform of Central China Normal University. Learners' actual participation data in lessons are used to assess MOOCRC's classification accuracy. The results show that MOOCRC achieves higher recommendation accuracy and faster convergence than traditional recommender methods.

In the following, some specialized concepts are introduced. The next section explains the proposed method, a combined deep learning architecture with a compression technique that separates activity time into short-term and long-term periods. The fourth section reviews the results of implementing the proposed algorithm, and the fifth section summarizes the work and presents suggestions.

In13, we used the resource recommender system as an educational environment for recommending educational resources to students, so that these recommendations are tailored to each student's preferences and needs. We present the resource recommender as a combination of improved deep learning networks (MLP, BiLSTM, and LSTM) using the attention method. Similar studies use DBN networks and focus only on users' interests and preferences in the recent past; in contrast, the proposed system considers both the user's long-term past interests and current interests, and offers higher accuracy and more appropriate recommendations.

Conclusion

Recommender systems and educational recommender systems have been studied with different techniques and methods. Most of this research, especially on educational recommenders for scientific resources, has tried to solve the problem with linear and data mining methods and models such as ontologies7,15,17,19,23,38,39,40,41.

The large amount of information is one of the problems and limitations of these methods, and they only introduce a framework for recommendations. An educational advisor that automatically provides useful advice has received little attention. Other studies have used machine learning and artificial intelligence methods, which have proved more efficient than the previous methods15,23,39. One of the best approaches is to use artificial neural networks30,31,32,42. As the next step in the evolution of neural networks, deep learning networks are among the newest and most complete solutions.

These networks can solve problems with high accuracy because they can exploit massive amounts of data, integrate multiple neural networks, use various learning techniques, and adapt their structure, such as the number of hidden layers. Educational advisors are no exception, and most recent articles in the field of recommendation use this technique. It can be argued that deep learning techniques and their combined models, together with large-scale data (big data), are today's leading solution in the field of recommenders.

However, many of these methods assign the same weight to all of the user's learning interests and use only the user's past information for training. In contrast, the network in the present article looks both backward and forward, which makes it possible to capture changes in the learner's behavior and suggest more up-to-date recommendations.

Previously, short-term and long-term user interests were not used in educational recommenders; in the model proposed in this article, this structure captures the dynamics of users' interests and needs. In addition, by using deep learning networks, we were able to train the model on the large available datasets while weighting interactions by their time of occurrence.

Proposed method

The implementation of our proposed recommender system consists of five phases: (1) data mining from the OULSD standard database, (2) data preprocessing, (3) model construction by combining deep learning networks such as LSTM, MLP, GRU, and BiLSTM improved with the attention technique43,44, (4) weighting of parameters, and (5) training. Finally, the trained network is used to recommend resources to users.
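To illustrate how attention can weight a user's short-term interactions while also favoring recent ones, here is a minimal NumPy sketch of time-aware attention pooling. The exact attention formulation and time vector of the proposed model are not given in this section; adding a linear time-decay penalty (`decay * elapsed`) to dot-product attention scores is an assumption made for illustration, and the inputs are hypothetical.

```python
import numpy as np

def time_aware_attention(states, query, elapsed, decay=0.1):
    """Softmax attention over interaction states, penalizing older interactions.

    states:  (T, d) encoded interactions (e.g. BiLSTM outputs)
    query:   (d,) vector the interactions are scored against
    elapsed: (T,) time since each interaction (larger means older)
    """
    scores = states @ query - decay * elapsed
    scores = scores - scores.max()          # subtract the max for numerical stability
    e = np.exp(scores)
    weights = e / e.sum()
    return weights, weights @ states        # attention weights and pooled vector

states = np.eye(3)                          # three hypothetical interactions
query = np.zeros(3)                         # equal content relevance, so time decides
elapsed = np.array([30.0, 10.0, 0.0])       # hypothetical days since each interaction
weights, pooled = time_aware_attention(states, query, elapsed)
print(np.round(weights, 3))
```

Because every interaction keeps a nonzero weight, older activity still contributes to the pooled representation, unlike approaches that discard it; the most recent interaction simply counts for more.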

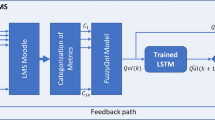

The proposed model (Fig. 1) consists of two independent blocks, one for processing long-term interests and one for processing short-term interests.

The goal is to extract features at two different levels: we extract the user's interests and characteristics in the short term and in the long term separately. Comparing the two reveals how user behavior shifts from the long term to the short term, so the model learns to make better recommendations for users whose interests change over time. After being combined, the two independent blocks of the architecture try to extract such variable behavior patterns. Dataset records are divided into short-term and long-term sections based on a specific time axis. Separating user interests in this way, and valuing them differently along the time axis, increases the system's accuracy while keeping errors low, and yields suitable, personalized resources that match students' needs and tastes. Short-term interests play a more effective role than long-term interests in recommending educational resources.

The compression algorithm presented in this article is applied to the long-term part, compressing the data in both the row and column dimensions. For users in the data bank who have had no activity in the short-term period, we take their last activity in the long-term section as their short-term activity. After the model is designed and built, training begins. At this stage, for each user, each record of the user's short-term activity is paired with all of that user's long-term activities; this is repeated for every record in the short-term section.
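The pairing and fallback steps described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code: records are plain tuples, each user's records are already split into short-term and long-term lists in chronological order, and the function name `build_training_pairs` is hypothetical.

```python
from typing import Dict, List, Tuple

Record = Tuple[int, ...]  # one preprocessed activity record (hypothetical layout)


def build_training_pairs(
    short_term: Dict[int, List[Record]],
    long_term: Dict[int, List[Record]],
) -> List[Tuple[int, Record, List[Record]]]:
    """Pair every short-term record of a user with that user's full long-term history."""
    pairs: List[Tuple[int, Record, List[Record]]] = []
    for user in sorted(set(short_term) | set(long_term)):
        history = long_term.get(user, [])
        recent = list(short_term.get(user, []))
        if not recent and history:
            # No short-term activity: reuse the last (most recent) long-term record.
            recent = [history[-1]]
        for record in recent:
            # The same long-term history is repeated once per short-term record.
            pairs.append((user, record, history))
    return pairs


short_term = {1: [(10,), (11,)]}
long_term = {1: [(1,), (2,)], 2: [(5,), (6,)]}
pairs = build_training_pairs(short_term, long_term)
```

Here user 1 contributes two pairs (one per short-term record), while user 2, who has no short-term activity, contributes one pair built from its last long-term record.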

Finally, the remaining records in the database form the test set, which is used to measure loss and accuracy.

Data preprocessing

The preprocessing steps are shown in Fig. 2. First, we extract the provided resources, student features, courses held, and student performance and assessments in each course from the standard OULAD database. After merging the data, we categorize and map the features by converting string-valued variables to numeric values, deleting empty or incorrect data, and normalizing the features.

We normalize each feature to the range [0, 1] using Formula (1):

\[ x^{*}=\frac{x-x_{\min}}{x_{\max}-x_{\min}} \qquad (1) \]

where \(x_{\min}\) is the minimum value, \(x_{\max}\) is the maximum value, \(x^{*}\) is the normalized value, and \(x\) is the original value.
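As a rough sketch of these preprocessing steps (string-to-integer mapping, removal of empty or unparsable rows, and min-max scaling \(x^{*}=(x-x_{\min})/(x_{\max}-x_{\min})\) into [0, 1]), the following standard-library Python assumes rows arrive as dictionaries of strings. The column names are borrowed from OULAD's studentInfo table purely for illustration, the example values are toy data, and the helper names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence


def min_max(column: Sequence[float]) -> List[float]:
    """Formula (1): x* = (x - x_min) / (x_max - x_min), giving values in [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0] * len(column)
    return [(x - lo) / (hi - lo) for x in column]


def is_valid(row: Dict[str, Optional[str]], categorical: List[str], numeric: List[str]) -> bool:
    """Reject rows with empty categorical fields or numeric fields that do not parse."""
    if any(not row.get(col) for col in categorical):
        return False
    for col in numeric:
        try:
            float(row.get(col))  # float(None) -> TypeError, float("") -> ValueError
        except (TypeError, ValueError):
            return False
    return True


def preprocess(
    rows: List[Dict[str, Optional[str]]], categorical: List[str], numeric: List[str]
) -> List[Dict[str, float]]:
    clean = [r for r in rows if is_valid(r, categorical, numeric)]
    if not clean:
        return []
    out: List[Dict[str, float]] = [{} for _ in clean]
    for col in categorical:
        codes: Dict[str, int] = {}
        for r in clean:
            codes.setdefault(r[col], len(codes))  # string -> integer code, first-seen order
        for row_out, value in zip(out, min_max([codes[r[col]] for r in clean])):
            row_out[col] = value
    for col in numeric:
        for row_out, value in zip(out, min_max([float(r[col]) for r in clean])):
            row_out[col] = value
    return out


rows = [
    {"gender": "M", "age_band": "0-35", "studied_credits": "60"},
    {"gender": "F", "age_band": "35-55", "studied_credits": "120"},
    {"gender": "F", "age_band": "0-35", "studied_credits": ""},  # dropped: empty value
    {"gender": "M", "age_band": "55<=", "studied_credits": "90"},
]
result = preprocess(rows, ["gender", "age_band"], ["studied_credits"])
```

Mapping categories to integer codes and then scaling them is only one simple option; one-hot encoding is a common alternative when the codes have no natural order.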

Dataset

As input to the training and testing phases of our proposed model, we used the Open University Learning Analytics Dataset (OULAD, https://analyse.kmi.open.ac.uk/open_dataset), a standard learning-analytics dataset stored in CSV format45,46.

The dataset contains data collected from students, including demographic data, the courses attended, their study activities during each course, and the final result of each course. In detail, it contains the students' interactions with the Virtual Learning Environment (VLE) for seven selected courses (modules). The dataset includes 22 module presentations with over 30,000 students. Click data are provided as daily summaries of student clicks on various resources. In OULAD, the tables are connected using unique identifiers. After the initial preprocessing stages, the data used in this article comprise 10,403,715 records, each with 12 fields. Given the large amount of data and the need to account for time in the training process, deep learning methods are well suited to the problem addressed in this article. The files used are briefly described below:

- Assessments: information about the assessments in each module presentation.
- Student Info: demographic data about students along with their results; each student can have several records.
- Student Vle: students' clicks and interactions with the resources available in the VLE, which can be HTML pages, PDF files, etc.
- Student Assessment: the results of the assessments taken by each student during the course.
- Student Registration: the time at which each student registered for the module; for students who unregistered, the unregistration date is also recorded.
- Vle: information about the materials available in the VLE, usually HTML pages, PDF files, etc. Students access these resources online, and their interactions with them are recorded.

Table 1 shows an example of the values in the original database (OULAD)46, which are mapped to numerical values in Table 2 (designed by the researchers).

Records labeling

Since the available data do not have a defined label, in this research, we have labeled the records as follows:

For each student, among the courses the student took, we select the course with the highest assessment score (which may reflect the effect of the resources studied); within that course, the resource with the most clicks (which may indicate the student's taste and interest) is chosen as the label.
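The labeling rule can be sketched as follows, assuming assessment scores and click counts have already been extracted as tuples keyed by student and course. Averaging a student's scores within a course and breaking ties by first occurrence are assumptions, as is the function name `label_students`; the module codes in the example are toy values.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def label_students(
    scores: Iterable[Tuple[int, str, float]],     # (student, course, assessment score)
    clicks: Iterable[Tuple[int, str, int, int]],  # (student, course, resource, clicks)
) -> Dict[int, int]:
    """Label each student with the most-clicked resource of their best-scoring course."""
    # 1) Mean assessment score per (student, course).
    sums: Dict[Tuple[int, str], List[float]] = defaultdict(lambda: [0.0, 0])
    for student, course, score in scores:
        sums[(student, course)][0] += score
        sums[(student, course)][1] += 1
    best_course: Dict[int, Tuple[str, float]] = {}
    for (student, course), (total, count) in sums.items():
        mean = total / count
        if student not in best_course or mean > best_course[student][1]:
            best_course[student] = (course, mean)

    # 2) Total clicks per resource, restricted to each student's best course.
    totals: Dict[Tuple[int, int], int] = defaultdict(int)
    for student, course, resource, n in clicks:
        if student in best_course and best_course[student][0] == course:
            totals[(student, resource)] += n

    # 3) The most-clicked resource becomes the label.
    best: Dict[int, Tuple[int, int]] = {}
    for (student, resource), total in totals.items():
        if student not in best or total > best[student][1]:
            best[student] = (resource, total)
    return {student: resource for student, (resource, _) in best.items()}


scores = [(1, "AAA", 70), (1, "AAA", 90), (1, "BBB", 75), (2, "BBB", 60)]
clicks = [(1, "AAA", 100, 5), (1, "AAA", 101, 3), (1, "AAA", 101, 4),
          (1, "BBB", 200, 50), (2, "BBB", 200, 2), (2, "BBB", 201, 1)]
labels = label_students(scores, clicks)
```

Student 1's best course is AAA (mean 80 versus 75), so the 50 clicks in BBB are ignored and resource 101 (7 clicks) becomes the label.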

Labeling yielded 562 resource classes, whose frequencies are shown in Fig. 3. At the end of the preprocessing phase, the data are divided into training, test, and validation sets.

Investigating the correlation between variables and labels

To investigate possible correlation between the label and the variables used as model inputs, we performed a correlation test; as Fig. 4 shows, there is no significant correlation between the variables and the label.

Symbols

In the recommendation system, one aspect of the choice is the user and the other vital aspect is the selected option, which could be a course by a student, a movie by a viewer, a track of music for a listener, or a meal by an eater and so on36.

Let \(U\) denote the set of users and \(I\) the set of items chosen by users. The main goal of this scheme is to extract users' interests and priorities from user-item interaction events; for example, clicking on an educational resource counts as an action. For each user \(u \in U\), there is a sequence of time windows \(W^{u}=\{w_{1}^{u}, w_{2}^{u}, w_{3}^{u}, \dots, w_{t}^{u}\}\), where \(t\) is the total number of time windows. Each \(w_{t}^{u}\) is a collection of smaller time units, \(w_{t}^{u}=\{d_{1}, d_{2}, \dots, d_{x}\}\), where \(x\) is the length of the time window. The items related to user \(u\) in time window \(t\) are also expressed by \(w_{t}^{u}\). Each time window contains events \(\{e_{t,i}^{u} \in \mathbb{R}^{m} \mid i = 1, 2, \dots, t\}\), where \(e_{t,i}^{u}\) describes event \(i\) within the time units \(d_{x}\) of the window. In each event, user \(u\) interacts with an item \(i \in I\)36.

For a time step \(t\), the session \(S_{t}^{u}\) represents the short-term interests of user \(u\) at time \(t\), and the sessions before \(t\) represent the user's long-term interests, defined as \(L_{t-1}^{u} = S_{1}^{u} \cup S_{2}^{u} \cup \dots \cup S_{t-1}^{u}\). Our goal is to predict the next learning resource \(e_{t,i+1}^{u}\) in session \(S_{t}^{u}\).

In this scheme, the time axis separating short-term from long-term interests is set at the last 6 months, equivalent to half an academic year. That is, the user's user-item interaction events in the last six months fall into the short-term category, and all earlier interactions since the user's first recorded activity fall into the long-term category.
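A minimal sketch of the time-axis split, assuming each event carries an integer day index and approximating "the last 6 months" as 182 days (both assumptions; the function, type, and constant names are hypothetical). Events after the cutoff form the short-term session \(S_{t}^{u}\); everything earlier forms the long-term history \(L_{t-1}^{u}\).

```python
from typing import List, Tuple

SHORT_TERM_DAYS = 182  # assumption: "last 6 months" approximated as 182 days

Event = Tuple[int, int]  # (day index, item id), hypothetical layout


def split_by_time_axis(
    events: List[Event], now: int, horizon: int = SHORT_TERM_DAYS
) -> Tuple[List[Event], List[Event]]:
    """Return (long_term, short_term) events, both in chronological order."""
    cutoff = now - horizon
    ordered = sorted(events)
    long_term = [e for e in ordered if e[0] <= cutoff]   # L_{t-1}: everything before the axis
    short_term = [e for e in ordered if e[0] > cutoff]   # S_t: the last `horizon` days
    return long_term, short_term


events = [(300, 3), (0, 1), (400, 4), (100, 2)]
long_part, short_part = split_by_time_axis(events, now=400)
```

With `now=400` the cutoff is day 218, so the events on days 300 and 400 are short-term and the rest are long-term.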

The data in the training set are divided into short-term interest data and long-term interest data, and the resource recommendation is generated as the model's output. After the model has been trained to a target error value, the test set is used to evaluate the efficiency of the recommendation model.

Short-term interests section

Researchers have treated short-term interests as a fixed feature in many previous works and have therefore assigned the same weight to all items. As a result, diversity in short-term interests has not been properly evaluated. To recommend the next resource, the user's short-term interests are essential. Thus, the proposed architecture uses the attention technique to assign weights to both long-term and short-term sessions, so that the characteristics of user \(u\) are fully taken into account. In addition, a bidirectional LSTM (BiLSTM) is used for prediction so that the model looks both backward and forward and is sensitive to variation in the user's short-term interests in both directions; in this way, learners' behavioral changes can be captured.

To discover features and learners' behavioral changes, we propose a module based on BiLSTM networks that extracts periodic features and captures the temporal dependence of the input features. In this research, we evaluated both single-layer and two-layer models.

The input of length 12, \(I=\{i_{1}, i_{2}, \dots, i_{12}\}\), is fed into the proposed model, and the output \(y_{t}\) is calculated according to formula (2).
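Formula (2) itself is not reproduced in this text, so the following NumPy sketch uses a standard BiLSTM formulation as an assumption: a forward LSTM and a backward LSTM run over the sequence, and their hidden states are concatenated, \(y_{t} = [\overrightarrow{h}_{t} ; \overleftarrow{h}_{t}]\), which matches the doubling of output features described later in this article. Treating the 12 input values as 12 scalar time steps, the hidden size of 8, and the random untrained weights are illustrative assumptions; the class and function names are hypothetical.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class LSTM:
    """Single-direction LSTM with input, forget, candidate, and output gates."""

    def __init__(self, n_in: int, n_hidden: int, rng: np.random.Generator):
        # One stacked weight matrix for the four gates, acting on [x_t ; h_{t-1}].
        self.W = rng.normal(0.0, 0.1, size=(4 * n_hidden, n_in + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.n_hidden = n_hidden

    def run(self, xs: np.ndarray) -> np.ndarray:
        """xs: (T, n_in) -> hidden states (T, n_hidden)."""
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        states = []
        for x in xs:
            i, f, g, o = np.split(self.W @ np.concatenate([x, h]) + self.b, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # update the cell state
            h = sigmoid(o) * np.tanh(c)                    # expose the gated cell state
            states.append(h)
        return np.stack(states)


def bilstm(xs: np.ndarray, forward: LSTM, backward: LSTM) -> np.ndarray:
    """y_t = [h_fwd_t ; h_bwd_t]; concatenation doubles the feature size."""
    h_fwd = forward.run(xs)
    h_bwd = backward.run(xs[::-1])[::-1]  # read the sequence right-to-left, then re-align with t
    return np.concatenate([h_fwd, h_bwd], axis=1)


rng = np.random.default_rng(0)
xs = rng.random((12, 1))  # I = {i_1, ..., i_12}, one scalar per step (assumption)
y1 = bilstm(xs, LSTM(1, 8, rng), LSTM(1, 8, rng))    # lower layer: (12, 16)
y2 = bilstm(y1, LSTM(16, 8, rng), LSTM(16, 8, rng))  # upper layer takes y_t of the lower layer
```

Stacking two `bilstm` calls mirrors the two-layer variant, where the lower layer's \(y_{t}\) becomes the upper layer's input.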

As Table 6 shows, the two-layer BiLSTM network, in which the output \(y_{t}\) of the lower layer is passed as input to the upper layer, gives more favorable results.

The main idea of the attention technique is to learn to assign accurate (normalized) weights to a set of features, so that higher weights indicate that the corresponding feature carries more important information for the given task (Fig. 5).

Attention techniques fall into two categories according to how attention scores are calculated: (1) standard (vanilla) attention and (2) collaborative attention (co-attention). Vanilla attention uses a parameterized context vector, whereas co-attention learns attention weights from two sequences. In this research, method 1 (formula (3)47) is used.

\(u_{t}\): the score vector used to value the features.

\(\alpha_{t}\): the normalized weight of each feature, obtained by the softmax function.

\(v\): the aggregate of all input information, i.e. the weighted sum of the hidden states \(h_{t}\) with \(\alpha_{t}\) as the corresponding weights.
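Formula (3) is not reproduced in this text; a common form of vanilla attention consistent with the definitions above is \(u_{t} = \tanh(W h_{t} + b)\), \(\alpha_{t} = \mathrm{softmax}_{t}(u_{t}^{\top} u_{w})\), \(v = \sum_{t} \alpha_{t} h_{t}\), where \(W\), \(b\), and the context vector \(u_{w}\) are learned parameters (these symbols are assumptions, not the authors' notation). A NumPy sketch with random, untrained parameters and a hypothetical function name:

```python
import numpy as np


def vanilla_attention(H: np.ndarray, W: np.ndarray, b: np.ndarray, u_w: np.ndarray):
    """H: (T, d) hidden states h_t; W: (a, d); b: (a,); u_w: (a,) context vector.

    Returns the pooled vector v of shape (d,) and the weights alpha of shape (T,).
    """
    U = np.tanh(H @ W.T + b)          # u_t for every step, shape (T, a)
    scores = U @ u_w                  # unnormalized score per step, shape (T,)
    scores = scores - scores.max()    # shift for numerical stability (softmax is unchanged)
    alpha = np.exp(scores) / np.exp(scores).sum()  # softmax -> alpha_t
    v = alpha @ H                     # v = sum_t alpha_t * h_t
    return v, alpha


rng = np.random.default_rng(1)
H = rng.normal(size=(12, 16))         # e.g. 12 BiLSTM outputs of width 16
W, b, u_w = rng.normal(size=(8, 16)), np.zeros(8), rng.normal(size=8)
v, alpha = vanilla_attention(H, W, b, u_w)
```

With a zero context vector all scores are equal, so the attention weights become uniform and \(v\) reduces to the mean of the hidden states, which is a handy sanity check.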

The vector \(v\) is then fed into a fully connected layer with softmax activation to perform the final classification. The recommendation is a vector \(y\in \mathbb{R}^{2}\) of significant and non-significant probabilities; using argmax, we select the highest probability as the model's recommendation.

Long-term interests section

In the long-term interests section, because of the large volume of data records per student, we apply the compression technique (Fig. 6) in both the row and column dimensions36. These data represent the user's interests and preferences from the user's first activity up to the time axis of the short-term section, which in this research is the last 6 months. The compression algorithm weights resources in different time windows according to a weighting policy.

The block diagram of the proposed method36.

Compression at the record level

First, one time window (or a limited number of windows) is considered as the context, so that the user's different activities within a time window can be observed. Each window is assigned a weight based on the average of its constituent days, so that every user activity is weighted according to its time window. Hence, smaller weights can be assigned to distant time windows; conversely, because recent windows play a crucial role, larger weights are selected for them. Next, the weight of each feature is multiplied by the sum of the corresponding activity, and the outcome is aggregated with the results of the other windows. Finally, each feature considered as context appears only once in the section, and a new field stores the frequency of the merged features36.
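The record-level compression can be sketched as follows. The text does not give the exact weighting policy, so this sketch assumes a hypothetical linear recency weight: each window is represented by the average of its constituent days (its center), and its weight is (center + 1) / (latest center + 1), so distant windows get smaller weights and the most recent window gets weight 1. Each (user, resource) pair is then kept once, with a weighted click total and a frequency field counting the merged raw records. The function name and tuple layouts are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

RawRecord = Tuple[int, int, int, int]     # (user, resource, day, clicks)
Compressed = Tuple[int, int, float, int]  # (user, resource, weighted_clicks, frequency)


def compress_records(records: List[RawRecord], window: int = 7) -> List[Compressed]:
    if not records:
        return []
    half = (window - 1) / 2

    def center(k: int) -> float:
        # Average of the constituent days of window k (days k*window .. k*window + window - 1).
        return k * window + half

    latest = max(day for _, _, day, _ in records) // window
    clicks_per_window: Dict[Tuple[int, int, int], int] = defaultdict(int)
    merged: Dict[Tuple[int, int], int] = defaultdict(int)
    for user, resource, day, clicks in records:
        clicks_per_window[(user, resource, day // window)] += clicks
        merged[(user, resource)] += 1  # frequency field: raw records merged

    weighted: Dict[Tuple[int, int], float] = defaultdict(float)
    for (user, resource, k), total in clicks_per_window.items():
        weight = (center(k) + 1) / (center(latest) + 1)  # hypothetical recency weight in (0, 1]
        weighted[(user, resource)] += weight * total      # weight x summed activity
    return sorted((u, r, weighted[(u, r)], merged[(u, r)]) for (u, r) in weighted)


raw = [(1, 10, 0, 2), (1, 10, 3, 1), (1, 10, 15, 4), (1, 11, 15, 5)]
rows = compress_records(raw, window=7)
```

Four raw records collapse into two rows; the three clicks from the oldest window count with weight 4/18, while the most recent window counts in full.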

Compression at the feature level

After compression at the record level, feature selection with the Pearson correlation matrix [Formula (2)], which measures the linear dependence between two variables48, is applied to further compress the data at the feature level. Computing the Pearson correlation matrix gives an \(n \times n\) matrix, where \(n\) is the number of features. Its entries are correlation coefficients ranging from −1.0 to +1.0, where −1.0 is a total negative correlation, 0.0 is no correlation, and +1.0 is a total positive correlation. With this strategy, the feature pairs with the highest correlation values are selected36.
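Feature-level compression with the Pearson coefficient \(r_{xy} = \sum_{i}(x_{i}-\bar{x})(y_{i}-\bar{y}) / \sqrt{\sum_{i}(x_{i}-\bar{x})^{2} \sum_{i}(y_{i}-\bar{y})^{2}}\) can be sketched in plain Python. The text says only that the most correlated feature pairs are selected; dropping one member of the top pair (which would turn 12 features into 11) is an assumption made for illustration, as are the function names and the toy feature columns.

```python
import math
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson r = cov(x, y) / (std(x) * std(y)), in [-1, 1]."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # undefined for constant features; treated as 0 here (assumption)
    return cov / (sx * sy)


def correlation_matrix(columns: List[Sequence[float]]) -> List[List[float]]:
    """n x n matrix of pairwise Pearson coefficients for n feature columns."""
    return [[pearson(a, b) for b in columns] for a in columns]


def drop_most_correlated(columns: List[Sequence[float]]) -> Tuple[Tuple[int, int], List[int]]:
    """Find the feature pair with the largest |r| and keep every feature except its second member."""
    m = correlation_matrix(columns)
    n = len(columns)
    pair = max(((i, j) for i in range(n) for j in range(i + 1, n)), key=lambda p: abs(m[p[0]][p[1]]))
    kept = [k for k in range(n) if k != pair[1]]
    return pair, kept


features = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 1, 3, 2]]
pair, kept = drop_most_correlated(features)
```

The second column is an exact multiple of the first (r = 1), so that pair is selected and only features 0 and 2 are kept.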

After compressing the long-term data with a window length of 7, the data shrink from 10,543,682 records × 12 features to 5,167,599 records × 11 features. These data are fed as input to a two-layer, multi-cell GRU network, whose output \(y_{t}\) is calculated according to formula (4).
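Formula (4) is not reproduced here; the sketch below uses the standard GRU update of Cho et al. (listed in the references) as an assumption: \(z_{t} = \sigma(W_{z} x_{t} + U_{z} h_{t-1} + b_{z})\), \(r_{t} = \sigma(W_{r} x_{t} + U_{r} h_{t-1} + b_{r})\), \(\tilde{h}_{t} = \tanh(W_{h} x_{t} + U_{h}(r_{t} \odot h_{t-1}) + b_{h})\), \(h_{t} = (1 - z_{t}) \odot h_{t-1} + z_{t} \odot \tilde{h}_{t}\); some texts swap the roles of \(z_{t}\) and \(1 - z_{t}\). Two layers are stacked to mirror the two-layer network. The input width of 11 follows the compressed feature count, while the sequence length, hidden size, random weights, and class name are illustrative.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class GRU:
    """Single GRU layer: update gate z_t, reset gate r_t, candidate state h~_t."""

    def __init__(self, n_in: int, n_hidden: int, rng: np.random.Generator):
        self.Wz, self.Wr, self.Wh = (rng.normal(0.0, 0.1, (n_hidden, n_in)) for _ in range(3))
        self.Uz, self.Ur, self.Uh = (rng.normal(0.0, 0.1, (n_hidden, n_hidden)) for _ in range(3))
        self.bz, self.br, self.bh = (np.zeros(n_hidden) for _ in range(3))
        self.n_hidden = n_hidden

    def run(self, xs: np.ndarray) -> np.ndarray:
        """xs: (T, n_in) -> hidden states (T, n_hidden)."""
        h = np.zeros(self.n_hidden)
        states = []
        for x in xs:
            z = sigmoid(self.Wz @ x + self.Uz @ h + self.bz)              # update gate
            r = sigmoid(self.Wr @ x + self.Ur @ h + self.br)              # reset gate
            h_tilde = np.tanh(self.Wh @ x + self.Uh @ (r * h) + self.bh)  # candidate state
            h = (1.0 - z) * h + z * h_tilde                               # interpolate old and new
            states.append(h)
        return np.stack(states)


rng = np.random.default_rng(2)
long_term = rng.random((5, 11))      # e.g. 5 compressed windows x 11 features (illustrative)
lower, upper = GRU(11, 16, rng), GRU(16, 16, rng)
y = upper.run(lower.run(long_term))  # two-layer GRU: lower states feed the upper layer
```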

In the proposed model, to achieve better results in feature extraction, model parameters in each layer have been adjusted many times and the model has been trained, tested and evaluated.

The outputs of the short-term and long-term blocks are concatenated and fed into an MLP layer with ReLU activation; the learning rate is 0.0001. Dropout of 0.25 is then applied to prevent the fully connected layers from overfitting, and in the last step the result enters an MLP layer with softmax activation, which provides one or more appropriate educational resources to the user as output. The cost function used in this structure is categorical cross-entropy. Because of the large volume of input data, we trained the model with mini-batches of size 1028.
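The fusion head described above can be sketched as a NumPy forward pass: concatenate the two block outputs, apply a ReLU dense layer, apply inverted dropout (rate 0.25) only during training, then a softmax output layer scored with categorical cross-entropy. Layer sizes, weight initialization, and function names are assumptions; the 562 output classes come from the labeling step, and the optimizer details (learning rate 0.0001, mini-batches of 1028) are left out of this forward-only sketch.

```python
import numpy as np

N_CLASSES = 562  # number of label classes produced by the labeling step


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def fusion_head(short_vec, long_vec, params, rng=None, dropout=0.25, training=False):
    """Concatenate block outputs -> ReLU dense -> dropout (training only) -> softmax dense."""
    W1, b1, W2, b2 = params
    h = relu(np.concatenate([short_vec, long_vec], axis=-1) @ W1 + b1)
    if training:
        keep = rng.random(h.shape) >= dropout  # drop about 25% of units
        h = h * keep / (1.0 - dropout)         # inverted dropout keeps the expected scale
    return softmax(h @ W2 + b2)


def categorical_cross_entropy(probs: np.ndarray, onehot: np.ndarray, eps: float = 1e-12) -> float:
    return float(-np.mean(np.sum(onehot * np.log(probs + eps), axis=-1)))


rng = np.random.default_rng(3)
batch, d_short, d_long, d_hidden = 4, 16, 16, 64
params = (rng.normal(0, 0.1, (d_short + d_long, d_hidden)), np.zeros(d_hidden),
          rng.normal(0, 0.1, (d_hidden, N_CLASSES)), np.zeros(N_CLASSES))
short_vec, long_vec = rng.normal(size=(batch, d_short)), rng.normal(size=(batch, d_long))
targets = np.eye(N_CLASSES)[rng.integers(0, N_CLASSES, size=batch)]

probs = fusion_head(short_vec, long_vec, params, rng=rng, training=True)
loss = categorical_cross_entropy(probs, targets)
recommended = probs.argmax(axis=1)  # highest-probability resource per learner
```

At inference time `training=False` skips dropout, so predictions are deterministic for fixed weights.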

In problems like this one, where there are more than two classes, a softmax activation function and a categorical cross-entropy cost function are used in the output layer of the model.

Methods and tools of data analysis

To evaluate the performance of the proposed method, we measure how well it predicts the most suitable educational resources.

The following criteria are used for evaluation49:

Accuracy: the percentage of test records that are correctly classified.

Here, \(x\) is a student from the set of all students \(X\), \(R(x)\) denotes the learning resources recommended to student \(x\), and \(H(x)\) denotes the learning resources observed by learner \(x\)36.
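The formulas for these criteria do not appear in this text. A common set-based definition using \(R(x)\) and \(H(x)\), assumed here, is \(\mathrm{Precision} = \sum_{x} |R(x) \cap H(x)| / \sum_{x} |R(x)|\), \(\mathrm{Recall} = \sum_{x} |R(x) \cap H(x)| / \sum_{x} |H(x)|\), and \(F1 = 2 \cdot \mathrm{Precision} \cdot \mathrm{Recall} / (\mathrm{Precision} + \mathrm{Recall})\); accuracy is the fraction of correctly classified test records. A plain-Python sketch with hypothetical function names and toy data:

```python
from typing import Dict, Hashable, Sequence, Set, Tuple


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of test records whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(
    R: Dict[str, Set[int]], H: Dict[str, Set[int]]
) -> Tuple[float, float, float]:
    """R[x]: resources recommended to student x; H[x]: resources x actually accessed."""
    hits = sum(len(R[x] & H.get(x, set())) for x in R)
    n_recommended = sum(len(r) for r in R.values())
    n_observed = sum(len(h) for h in H.values())
    precision = hits / n_recommended if n_recommended else 0.0
    recall = hits / n_observed if n_observed else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


R = {"s1": {1, 2}, "s2": {3, 4}}
H = {"s1": {2, 5}, "s2": {3}}
p, r, f1 = precision_recall_f1(R, H)
```

In the toy example, two of the four recommendations are hits and two of the three observed resources are covered, giving precision 0.5, recall 2/3, and F1 4/7.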

Result and discussion

The available data were used as network input in the short-term and long-term sections: the short-term part contains 139,968 records, and the long-term part contains 5,167,599 records after compression. As Table 3 shows, to check generalization we performed 4 different runs, all with validation-split = 0.2. After training was completed, as shown in Tables 12 and 13, our model gives better recommendations of scientific resources than the other methods, owing to its optimized structure.

Investigating the effect of the number of cells in each layer

Our input data are tabular, and recurrent networks with long-term memory usually converge faster on this type of data and on text. Moreover, the temporal nature of learning requires methods that can model time periods. So, in the first step, we implemented LSTM, GRU, and BiLSTM networks with single-cell structures in single-layer, two-layer, and three-layer architectures, to investigate the effect of the number of layers on the recommender model. As Table 4 shows, the results are not satisfactory: none of the 9 single-cell architectures was able to find the patterns and relationships among the different features. Even increasing the number of layers did not substantially improve the results, and the lower accuracy of the three-layer model compared with the single-layer model suggests that the model is moving toward overfitting.

In the second step, we achieved better results by increasing the number of cells in each layer to the number of features available as model input. As Table 5 shows, the multi-cell single-layer LSTM, BiLSTM, and GRU architectures give better results than the single-cell architectures. Among them, the GRU network, with higher generalizability, a loss of 0.2, and an accuracy of 0.91, performs better than the other two networks.

Investigating the effect of the number of layers of network architecture

To evaluate the effect of increasing the number of layers, we implemented and trained three two-layer multi-cell architectures with LSTM, GRU, and BiLSTM networks. The results in Table 6 show that the two-layer architectures perform better than the single-layer architectures and are more successful in finding the patterns and relationships among the different features.

Investigating the effect of using the attention mechanism

The attention mechanism is inspired by human visual attention: just as humans focus on certain parts of visual input for cognition or perception, integrating attention with RNNs helps to process long and noisy inputs. Although LSTM can in theory solve the problem of long-term memory, it still struggles with long intervals, and attention-based networks help to remember long-term inputs. In recommender systems, attention removes useless content while maintaining interpretability and selects the most representative items46. We evaluated the effectiveness of the attention technique by implementing single-layer multi-cell LSTM and BiLSTM networks. As Table 7 shows, using attention in the network architecture improved the results.

The weaker performance of the single BiLSTM layer with attention, compared with the single BiLSTM layer without it, may be due to the large number of output neurons of the BiLSTM layer: in a BiLSTM, the outputs of two LSTM layers are concatenated, so the number of generated features doubles. Moreover, failing to extract features from very long sequences is a known weakness of basic attention; it tends to succeed in extracting local features but has trouble extracting global ones. The results in Table 7 show that the BiLSTM layer combined with attention performed worse than the same layer without attention.

Compression evaluation

To evaluate the compression method, we trained and evaluated five architectures (LSTM, GRU, LSTM + Attention, GRU + Attention, and BiLSTM) with 3 different window lengths. Researchers are sometimes unable to train networks because of the large amount of data and the lack of suitable hardware; as Table 8 shows, training becomes feasible by selecting an appropriate window length and designing the model accordingly. We trained and tested the different architectures on the original, uncompressed data for 50 courses, and similar architectures on compressed data for 100 courses with 7-, 14-, and 30-day windows. The results show that, despite doubling the number of courses, compression uses fewer hardware resources such as RAM and saves a considerable amount of time.

As Table 9 shows, comparing the accuracy and loss on the original data with those after compression in the training and testing phases of the implemented models, the networks with window lengths of 7 and 14 learn quickly with acceptable accuracy. With a window length of 7, the average training and testing accuracy of the implemented models is maintained despite halving the data, while the execution speed increases. With a window length of 14, when the data volume reaches approximately 40, accuracy decreases by 0.10%.

According to Table 10, our proposed architecture, with a loss of 0.005 and an accuracy of 0.997, is considerably more accurate than the architectures proposed in46,47,48,49,50,51. Our architecture has two blocks, a short-term block and a compressed long-term block with a window length of 7, and uses an improved BiLSTM structure with attention together with a GRU, which according to Table 8 generalizes well. We also trained and tested our architecture on data compressed with different window lengths, and the results (Table 10) remained very good even with larger window lengths.

As Fig. 7 shows, the loss at the final epochs indicates that the selected number of epochs is appropriate, and since the training and validation accuracy curves are approximately the same, no overfitting occurred in this experiment. The steep decrease of the loss curves in the early epochs also indicates that the learning rate selected for training is appropriate.

5-fold cross-validation

Cross-validation is a model evaluation method that determines how well the results of a statistical analysis on a data set generalize beyond the training data to an independent data set47. The data are divided into five subsets; each subset is used once for validation while the other four are used for training. This procedure is repeated five times, and the average of the results is taken as the final estimate. The validation results of the proposed model are shown in Table 11.
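The 5-fold procedure can be sketched with the standard library alone. Shuffling with a fixed seed and striding the shuffled indices into folds are choices made for this illustration; `evaluate` stands for any hypothetical train-and-score callback, and the function names are likewise hypothetical.

```python
import random
from typing import Callable, Iterator, List, Tuple


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists; each index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, sorted(folds[i])


def cross_validate(n: int, evaluate: Callable[[List[int], List[int]], float], k: int = 5) -> float:
    """Average the k validation scores into a single estimate."""
    scores = [evaluate(train, val) for train, val in k_fold_indices(n, k)]
    return sum(scores) / len(scores)


splits = list(k_fold_indices(10))
```

Each of the five splits trains on four folds and validates on the remaining one, and the reported figure is the mean of the five validation scores.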

Comparison of the performance of the proposed model with other models

We compare the results of the proposed model, in the first row of Table 12, with other methods presented in related work or implemented by ourselves. As can be seen, the proposed model achieves better results than the other methods across the different evaluation parameters. All evaluations were performed on the shared OULAD data.

The method proposed in50 was implemented, trained, tested, and evaluated on OULAD data. As shown in Table 12, it performed worse than our proposed model on both the loss and accuracy criteria.

In37, recall, precision, and F1 are examined for three methods: ItemCF, Clustering + ItemCF, and AROLS. The results show that the proposed algorithm (AROLS) achieves better precision than the other two, while F1 and recall remain relatively stable across top-n recommendation lists.

The work in51 shows that AROLS performs much better than traditional collaborative filtering; in particular, the recall and accuracy of User-AROLS more than tripled. The recall and accuracy of UserCF are also much lower than those of ItemCF, probably because UserCF focuses on the interests of learners who are similar to a particular learner, whereas ItemCF recommendations are more personal because they primarily suggest items similar to those the learner is interested in. As can be seen in the first row, the proposed model performed better than all seven reviewed methods.

In52, the results show that OLS characteristics can make the recommendation algorithm more accurate and robust; however, as the first row shows, the proposed model performed better than both methods studied.

Investigating the scalability and time complexity of the proposed method

To check scalability and time complexity, we trained and tested the proposed model with different data volumes. The results in Table 13 show that reducing the volume of input data reduces running time while the accuracy remains satisfactory, which indicates the scalability of our proposed model.

Conclusion and future works

Recommender systems, especially educational recommender systems, have been studied with the different techniques and methods mentioned in the previous sections. Most of these studies, especially on educational recommenders of scientific resources, have tried to solve the problem with linear methods and models and with data mining techniques such as ontologies19,20,21,22,23,24,25,26,28. One limitation of these methods is that they cannot handle large amounts of information; some of them only introduce a framework for recommendation, and an educational recommender that can automatically provide useful advice has rarely been discussed. Others have used machine learning and artificial intelligence methods, which have proved more efficient at solving the problem than the previous methods15,29,30,31,32,33,34.

One of the best ways to solve the problem is to use artificial neural networks21,35,36,37. Following the evolution of neural networks, deep learning networks are among the newest and most complete solutions. These networks can solve problems with high accuracy because they can absorb large amounts of problem data and benefit from neural network integration, learning techniques, and a flexible number of hidden layers. Educational recommenders are no exception: most of the recent articles in the recommendation field have used this technique21,43,45. It can be said that deep learning techniques and their hybrid models, combined with large-scale data (big data), are the current state of the art in recommender systems.

However, many of these methods assign the same weight to all of a user's interests during learning and use only the user's past information. In contrast, the network in the present work looks both backward and forward, so it can capture changes in learner behavior and offer more up-to-date recommendations.

With the advancement of science and the production of new scientific resources, educational resources are constantly increasing, so the network needs to be trained continuously and incrementally. However, in most systems, network learning is based on existing resources and does not anticipate future resources; as a result, given the rapid growth of educational resources, the network's recommendations soon become outdated and unusable. This challenge can be addressed by making the network training process incremental. Nevertheless, more work is needed in the future to achieve effective recommendations, given the following problems: (1) incremental learning for non-stationary and streaming data, such as large volumes of users and input items; (2) computational efficiency for high-dimensional tensors and multimedia data sources; and (3) balancing model complexity and scalability as the number of parameters grows exponentially. One source of inspiration for research is knowledge distillation, which is addressed in40 for learning small, compact models for inference in recommender systems. The main idea is to train a smaller student model that absorbs knowledge from a larger teacher model.

Given that inference time is critical for real-time applications at the scale of millions or billions of users, this is another inspiring topic for future research. Another promising direction is compression methods39: high-dimensional input data can be compressed to reduce computational space and time during model training.

Data availability

Datasets that have been used for experiments in this paper are available at: https://analyse.kmi.open.ac.uk/open_dataset.

Abbreviations

- LSTM: Long short-term memory
- GRU: Gated recurrent unit
- MLP: Multi-layer perceptron
- DLS: Deep learning system
- DSP: Discriminative sequential pattern
- RNN: Recurrent neural network
- AROLS: Adaptive recommendation based on online learning style
- CF: Collaborative filtering
- ARM: Association rules mining
- OLS: Online learning style
- CNN: Convolutional neural network
- RBM: Restricted Boltzmann machine
- OULAD: Open University Learning Analytics Dataset

References

Dascalu, M.-I. et al. Educational recommender systems and their application in lifelong learning. Behav. Inform. Technol. 35(4), 290–297 (2016).

Tarus, J. K., Niu, Z. & Yousif, A. A hybrid knowledge-based recommender system for e-learning based on ontology and sequential pattern mining. Futur. Gener. Comput. Syst. 72, 37–48 (2017).

Zhang, S. et al. Deep learning based recommender system: A survey and new perspectives. ACM Comput. Surv. 52(1), 1–38 (2019).

Quadrana, M., Cremonesi, P. & Jannach, D. Sequence-aware recommender systems. ACM Comput. Surv. 51(4), 1–36 (2018).

Hidasi, B. et al. Session-based recommendations with recurrent neural networks. arXiv Preprint at http://arxiv.org/abs/1511.06939 (2015).

Cheng, H.-T. et al. Wide & Deep Learning for Recommender Systems. In Proc. 1st Workshop on Deep Learning for Recommender Systems (2016).

Qiao, C. & Hu, X. Discovering student behavior patterns from event logs: Preliminary results on a novel probabilistic latent variable model. In 2018 IEEE 18th International Conference on Advanced Learning Technologies (ICALT) (eds Qiao, C. & Hu, X.) (IEEE, 2018).

Park, S. E., Lee, S. & Lee, S.-G. Session-based collaborative filtering for predicting the next song. In 2011 First ACIS/JNU International Conference on Computers, Networks, Systems and Industrial Engineering (eds Park, S. E. et al.) (IEEE, 2011).

Moore, J. L. et al. Taste over time: The temporal dynamics of user preferences. ISMIR 13, 401–406 (2013).

Hu, L. et al. Diversifying Personalized Recommendation with User-session Context. In International Joint Conference on Artificial Intelligence 1858–1864 (Melbourne, Australia, 2017).

Ludewig, M. & Jannach, D. Evaluation of session-based recommendation algorithms. User Model. User-Adap. Inter. 28, 331–390 (2018).

Rodríguez, P. et al. An educational recommender system based on argumentation theory. AI Commun. 30(1), 19–36 (2017).

IEEE Learning Technology Standards Committee. IEEE Standard for Learning Object Metadata. IEEE Standard 1484.12.1 (2002).

Covington, P., Adams, J. & Sargin, E. Deep neural networks for YouTube recommendations. In Proc. 10th ACM Conference on Recommender Systems (2016).

Yago, H. et al. On-smmile: Ontology network-based student model for multiple learning environments. Data Knowl. Eng. 115, 48–67 (2018).

Serrà, J. & Karatzoglou, A. Getting deep recommenders fit: Bloom embeddings for sparse binary input/output networks. In Proc. Eleventh ACM Conference on Recommender Systems (2017).

Bourkoukou, O. & El Bachari, E. Toward a hybrid recommender system for e-learning personnalization based on data mining techniques. JOIV Int. J. Inform. Vis. 2(4), 271–278 (2018).

Felder, R. M. Learning and Teaching Styles In Engineering Education (North Carolina, 2002).

Gulzar, Z., Leema, A. A. & Deepak, G. Pcrs: Personalized course recommender system based on hybrid approach. Procedia Comput. Sci. 125, 518–524 (2018).

Hagemann, N., O’Mahony, M. P. & Smyth, B. Module advisor: Guiding students with recommendations. In Intelligent Tutoring Systems: 14th International Conference, ITS 2018, Montreal, QC, Canada, June 11–15, 2018, Proceedings 14 (eds Hagemann, N. et al.) (Springer, 2018).

Tseng, H.-C. et al. Building an online adaptive learning and recommendation platform. In Emerging Technologies for Education: First International Symposium, SETE 2016, Held in Conjunction with ICWL 2016, Rome, Italy, October 26–29, 2016, Revised Selected Papers 1 (eds Tseng, H.-C. et al.) (Springer, 2017).

Alinani, K. et al. Heterogeneous educational resource recommender system based on user preferences. Int. J. Auton. Adapt. Commun. Syst. 9(1–2), 20–39 (2016).

Bourkoukou, O. & Achbarou, O. Weighting based approach for learning resources recommendations. JOIV Int. J. Inform. Vis. 2(3), 104–109 (2018).

Thanh-Nhan, H.-L., Huy-Thap, L. & Thai-Nghe, N. Toward integrating social networks into intelligent tutoring systems. In 2017 9th International Conference on Knowledge and Systems Engineering (KSE) (eds Thanh-Nhan, H.-L. et al.) (IEEE, 2017).

Pupara, K., Nuankaew, W. & Nuankaew, P. An institution recommender system based on student context and educational institution in a mobile environment. In 2016 International Computer Science and Engineering Conference (ICSEC) (eds Pupara, K. et al.) (IEEE, 2016).

Duque Méndez, N. D., Rodríguez Marín, P. A. & Ovalle Carranza, D. A. Intelligent personal assistant for educational material recommendation based on CBR. In Personal Assistants: Emerging Computational Technologies (eds Costa, A. et al.) (Springer International Publishing, 2018).

Rossille, D., Laurent, J.-F. & Burgun, A. Modelling a decision-support system for oncology using rule-based and case-based reasoning methodologies. Int. J. Med. Inform. 74(2–4), 299–306 (2005).

Neto, J. Multi-agent web recommender system for online educational environments. In Trends in Cyber-Physical Multi-Agent Systems. The PAAMS Collection: 15th International Conference, PAAMS 2017 (ed. Neto, J.) (Springer, 2018).

Rodríguez, P., Duque, N. & Rodríguez, S. Integral multi-agent model recommendation of learning objects, for students and teachers. In Management Intelligent Systems: Second International Symposium (eds Casillas, J. et al.) (Springer, 2013).

Paradarami, T. K., Bastian, N. D. & Wightman, J. L. A hybrid recommender system using artificial neural networks. Expert Syst. Appl. 83, 300–313 (2017).

Wang, X. et al. E-learning recommendation framework based on deep learning. In 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (eds Wang, X. et al.) (IEEE, 2017).

Cho, K. et al. Learning phrase representations using RNN encoder–decoder for statistical machine translation. Preprint at http://arxiv.org/abs/1406.1078 (2014).

Zhang, H. et al. MOOCRC: A highly accurate resource recommendation model for use in MOOC environments. Mobile Netw. Appl. 24, 34–46 (2019).

Najafabadi, M. M. et al. Deep learning applications and challenges in big data analytics. J. Big Data 2(1), 1–21 (2015).

Wang, J. et al. Attention-based CNN for personalized course recommendations for MOOC learners. In 2020 International Symposium on Educational Technology (ISET) (eds Wang, J. et al.) (IEEE, 2020).

Ahmadian Yazdi, H., Seyyed Mahdavi Chabok, S. J. & KheirAbadi, M. Effective data reduction for time-aware recommender systems. Control Opt. Appl. Math. 8(1), 33–53 (2023).

Li, R. et al. Online learning style modeling for course recommendation. In Recent Developments in Intelligent Computing, Communication and Devices: Proceedings of ICCD 2017 (eds Patnaik, S. & Jain, V.) (Springer, 2019).

Hagemann, N., O'Mahony, M. P. & Smyth, B. Visualising module dependencies in academic recommendations. In Proc. 24th International Conference on Intelligent User Interfaces: Companion (2019).

Tseng, H.-C. et al. Building an online adaptive learning and recommendation platform. In International Symposium on Emerging Technologies for Education (eds Ting-Ting, W. et al.) (Springer, 2016).

Kuznetsov, S. et al. Reducing cold start problems in educational recommender systems. In 2016 International Joint Conference on Neural Networks (IJCNN) (eds Kuznetsov, S. et al.) (IEEE, 2016).

Felder, R. M. & Silverman, L. K. Learning and teaching styles in engineering education. Eng. Educ. 78(7), 674–681 (1988).

Zhang, H. et al. MOOCRC: A highly accurate resource recommendation model for use in MOOC environments. Mobile Netw. Appl. 24(1), 34–46 (2019).

Bonyani, M. et al. DIPNet: Driver intention prediction for a safe takeover transition in autonomous vehicles. IET Intell. Transp. Syst. https://doi.org/10.1049/itr2.12370 (2023).

Bonyani, M., Ghanbari, M. & Rad, A. Different gaze direction (DGNet) collaborative learning for iris segmentation. SSRN Electron. J. https://doi.org/10.2139/ssrn.4237124 (2022).

Allen, D. M. The relationship between variable selection and data agumentation and a method for prediction. Technometrics 16(1), 125–127 (1974).

Kuzilek, J., Hlosta, M. & Zdrahal, Z. Open university learning analytics dataset. Sci. Data 4(1), 1–8 (2017).

Winata, G. I., Kampman, O. P. & Fung, P. Attention-based LSTM for psychological stress detection from spoken language using distant supervision. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (eds Winata, G. I. et al.) (IEEE, 2018).

Luong, H. H. et al. Feature selection using correlation matrix on metagenomic data with Pearson enhancing inflammatory bowel disease prediction. In International Conference on Artificial Intelligence for Smart Community: AISC 2020, 17–18 December, Universiti Teknologi Petronas, Malaysia (eds Luong, H. H. et al.) (Springer, 2022).

Bonyani, M., Jahangard, S. & Daneshmand, M. Persian handwritten digit, character and word recognition using deep learning. Int. J. Doc. Anal. Recognit. 24(1–2), 133–143 (2021).

Wu, C.-Y. et al. Recurrent recommender networks. In Proc. Tenth ACM International Conference on Web Search and Data Mining (2017).

Hill, W. et al. Recommending and evaluating choices in a virtual community of use. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (eds Hill, W. et al.) (ACM Press, 1995).

Yan, L. et al. Learning Resource Recommendation in E-Learning Systems Based on Online Learning Style. In Knowledge Science, Engineering and Management: 14th International Conference, KSEM 2021, Tokyo, Japan, August 14–16, 2021, Proceedings, Part III (eds Yan, L. et al.) (Springer, 2021).

Ahmadian Yazdi, H., Seyyed Mahdavi Chabok, S. J. & Kheirabadi, M. Dynamic educational recommender system based on improved recurrent neural networks using attention technique. Appl. Artif. Intell. https://doi.org/10.1080/08839514.2021.2005298 (2022).

Acknowledgements

The authors are thankful to anonymous reviewers for their valuable comments and suggestions that helped improve the quality of the paper.

Author information

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmadian Yazdi, H., Seyyed Mahdavi, S.J. & Ahmadian Yazdi, H. Dynamic educational recommender system based on Improved LSTM neural network. Sci Rep 14, 4381 (2024). https://doi.org/10.1038/s41598-024-54729-y

DOI: https://doi.org/10.1038/s41598-024-54729-y
