Abstract

Brain disorders pose a substantial global health challenge and remain a leading cause of mortality worldwide. Electroencephalogram (EEG) analysis is crucial for diagnosing brain disorders, but medical practitioners can find it challenging to interpret complex EEG signals and make accurate diagnoses. To address this, our study focuses on visualizing complex EEG signals in a format easily understandable by both medical professionals and deep learning algorithms. We propose a novel time–frequency (TF) transform called the Forward–Backward Fourier transform (FBFT) and utilize convolutional neural networks (CNNs) to extract meaningful features from TF images and classify brain disorders. We introduce the concept of naked-eye classification, which integrates domain-specific knowledge and clinical expertise into the classification process. Using CNN-based classification, the FBFT method achieves accuracies of 99.82% for epilepsy, 95.91% for Alzheimer’s disease (AD), 85.1% for murmur, and 100% for mental stress. In naked-eye classification, we achieve accuracies of 78.6%, 71.9%, 82.7%, and 91.0% for epilepsy, AD, murmur, and mental stress, respectively. Additionally, we incorporate a mean correlation coefficient (mCC) based channel selection method to further enhance classification accuracy. By combining these approaches, our study enhances the visualization of EEG signals, providing medical professionals with a deeper understanding of TF medical images. This research has the potential to bridge the gap between image classification and visual medical interpretation, leading to better disease detection and improved patient care in the field of neuroscience.

Introduction

Brain disorders are a growing global health concern, particularly in low- and middle-income countries. Deaths and disabilities from brain disorders have surged by 39% and 15% in the last 3 decades, making them the second leading cause of global mortality, resulting in approximately 9 million deaths annually, as shown in Fig. 1. Access to neurological disorder services and support remains inadequate, especially in less affluent nations.

In response to this alarming trend, the World Health Organization (WHO) endorsed an action plan at the 75th World Health Assembly in May 2022. This plan focuses on improving diagnosis, treatment, care, research, and innovation while strengthening information systems for brain disorders. Our research aligns with the WHO’s strategic plan, aiming to automatically and accurately diagnose brain disorders using a novel technique. Electroencephalogram (EEG) signals provide valuable information about brain health but suffer from low amplitude, high noise, and limited interpretability1. While ML/DL models show promise in diagnosing brain diseases from EEG, they face challenges with limited datasets, inter-subject variability2, and generalization3. Many studies have explored automatic diagnosis of brain disorders from EEG signals, such as stroke in elders4, Parkinson’s disease (PD)5, Alzheimer’s6, autism7, and ADHD8. However, most focus on event-related potentials (ERPs) or Fourier-based power analyses, which have limitations in capturing the full spectrum of EEG data9.

To address these challenges, our research employs time–frequency (TF) transformations to enhance the visualization and interpretation of EEG data. This improved representation enables accurate classification using machine learning (ML) and deep learning (DL) models, aiding pathologists and neurologists.

Our research focuses on transforming complex EEG signals into interpretable representations, bridging the gap between DL models and medical professionals’ expertise. This empowers doctors to make more informed decisions based on visual data. This combined approach of visual interpretation and DL-based classification yields improved results.

Key contributions of our work include:

- Channel selection method based on mean correlation coefficients (mCC): We propose a channel selection method that identifies key EEG channels for brain disorder classification using mCC. This method helps improve the accuracy of classification.
- Transformation of EEG signals into 2D images using the Forward–Backward Fourier transform (FBFT): We enhance the interpretation of EEG signals by transforming them into 2D images using the novel FBFT technique. We compare this technique with other time–frequency transforms to demonstrate its effectiveness.
- Concatenation of time–frequency images from selected channels: We propose a method to concatenate time–frequency images from selected channels into a single input for ML/DL models. This approach improves the classification accuracy of brain disorders.
- Analysis and comparison of pre-trained DL models: We analyze and compare pre-trained DL models for diagnosing brain disorders using FBFT-transformed EEG images. This analysis helps identify the most effective models for accurate classification.
- Naked-eye diagnosis approach: We propose a naked-eye diagnosis approach for brain disorders based on time–frequency images obtained through FBFT. This approach integrates visual interpretation with DL-based classification, improving diagnostic accuracy.

Several deep learning methods have been proposed for brain disorder classification10,11,12, but none of them has analyzed models on EEG images produced by different time–frequency transforms. Additionally, most of these methods focus on diagnosing a single brain disorder from EEG signals.

In this paper, we revolutionize EEG visual interpretation and provide novel time–frequency images for deep learning models. These images enhance diagnostic accuracy and foster effective collaboration between humans and machines, advancing medical imaging and diagnosis significantly. Our proposed models outperform the state-of-the-art in disease diagnosis.

Related work

In the realm of epileptic seizure classification, various innovative methodologies have been proposed, each offering distinct contributions to the field.

The authors of13 introduced a novel approach for epileptic seizure classification, utilizing Discrete Fourier Transform (DFT) and an Attention Network AttVGGNet. The method achieved an accuracy of 95.6% and other notable performance metrics. Similarly, the authors of14 improved epilepsy diagnosis accuracy using EEG recordings by combining DFT with brain connectivity measures and feeding the data into an Autoencoder Neural Network. The approach achieved an accuracy of 97.91%, sensitivity (SENS) of 97.65%, and specificity (SPEC) of 98.06%. In15, spectrogram and scalogram images from Short Time Fourier Transform (STFT) were employed for ictal-preictal-interictal classification using a Convolutional Neural Network (CNN), achieving 97% accuracy. Researchers like16 and17 also used CNNs for EEG classification from STFT, achieving accuracies of 91.71% and 97.75%, respectively. Utilizing Discrete Wavelet Transform, the authors of18 achieved an accuracy of 95.6% in ictal-interictal EEG signal classification. In19, an adaptive approach with Pattern Wavelet Transform and a Fuzzy classifier achieved ACC of 96.02% and SPEC of 94.5% for ictal-interictal classification. The authors of20 used a hybrid of DWT and LDA classifier, resulting in an accuracy of 99.6% and SENS of 99.8% for the ictal-interictal problem. In21, the authors proposed a multiscale short-time Fourier transform for feature extraction coupled with a 3D convolutional neural network. The approach demonstrated accurate seizure detection with a 14.84% rectified predictive ictal probability error and a 2.3s detection latency.

Several studies have shown promising outcomes in identifying neurological disorders like Alzheimer’s disease (AD) to enhance the quality of life for affected individuals. The authors of22 combined feature extraction and EEG classification to differentiate between AD patients, patients with mild AD, and healthy individuals. They utilized scalograms generated from Fourier and Wavelet Transforms, achieving accuracies of 83% for AD versus normal cases, 92% for healthy versus mild AD cases, and 79% for mild versus AD classification scenarios. The authors of23 used time-dependent power spectrum descriptors as CNN input, achieving an accuracy of 82.30% on a dataset of 64 AD, 64 mild cognitive impairment (MCI), and 64 healthy control (HC) subjects. Similarly, the authors of24 collected resting-state EEG signals from individuals with MCI, AD, and HC. They used functional connectivity measures from EEG data as input for a convolutional neural network (CNN), achieving recognition accuracy rates of 93.42% for MCI and 98.54% for AD.

Commencing with stress detection, notable studies have employed advanced techniques to identify and classify emotional states based on EEG data accurately. The authors of25 explored stress detection using the DEAP dataset, employing power spectrum-based feature extraction on all 32 channels with 5-second windows. The AlexNet architecture was utilized for classification, distinguishing between calm and distressed emotional states with an accuracy of 84%. The authors of26 conducted stress detection using EEGMAT data, applying the Discrete Wavelet Transform (DWT) to 19 out of 23 EEG channels. The classification, facilitated by a Convolutional Neural Network-Bidirectional Long Short-Term Memory (CNN-BLSTM) architecture, successfully differentiated between stressed and relaxed states, achieving an impressive accuracy of 99.2%. Similarly, the authors of27 employed the SEED dataset, combining filtering and a music model for feature extraction across all 62 EEG channels. Using an Artificial Neural Network (ANN), they classified emotions into neutral, positive, and negative categories, achieving an impressive accuracy of 97%. Another noteworthy study in28 utilized the DASPS dataset, employing power spectrum-based feature extraction on all 14 EEG channels. The classification, performed in 1-second windows with a total duration of 15 seconds, utilized K-Nearest Neighbors (KNN) to distinguish between binary and four-class anxiety levels, achieving an accuracy of 83.8%.

For heart murmur, the authors of29 conducted a study employing feature extraction through Fast Fourier Transform (FFT) on 942 cases with 6 EEG channels. The dataset was segmented into 4-second intervals with a 1-second overlap. The authors applied DBResNet and XGBoost models, achieving accuracies of 76.2% and 82%, respectively. Subsequently, the authors of30 utilized a convolutional neural network (CNN) for feature extraction and reported an enhanced classification accuracy of 87.2%. The authors of31 extended the investigation using an unspecified feature extraction method on the 942 cases. Their study considered all EEG channels and applied a CNN for classification, yielding an accuracy of 75.7%.

Methodology

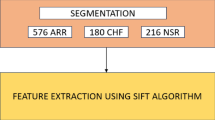

Our approach encompasses multiple steps, including EEG dataset collection for various brain disorders, signal pre-processing, TF analysis for 2D image generation, deep learning (DL), and human visual classification, as illustrated in Fig. 2. We’ve implemented this approach in Python, utilizing pre-trained neural network models on TF images from brain disorders such as epilepsy, Alzheimer’s, murmur, and stress EEG. Model evaluation employs established performance metrics.

Data description and pre-processing

EEG signals of subjects with various brain disorders, including epilepsy, Alzheimer’s, murmur, and stress, are analyzed in this study. For the diagnosis of epilepsy, the CHB-MIT dataset developed at Boston Children’s Hospital is used32. It contains 23-channel EEG sampled at 256 samples/s, recorded from 22 subjects (5 males and 17 females) aged between 1.5 and 22 years. For stress, we utilized the dataset by Bird et al.33, recorded using a Muse headband with four dry EEG sensors (TP9, AF7, AF8, and TP10). It covers three mental states: relaxed, neutral, and concentrating, comprising 25 recordings from five participants, each with two-minute sessions for each mental state.

For diagnosing Alzheimer’s disease (AD), we utilized the OpenNeuro dataset, comprising EEG data from 28 participants at the Department of Neurology, AHEPA General University Hospital of Thessaloniki, Greece. These participants were categorized into three groups: Alzheimer’s disease patients (AD), frontotemporal dementia patients (FTD), and a control group (CN) consisting of healthy age-matched adults34. The EEG signals were sampled at 500 Hz with a resolution of 10 µV/mm, and the duration of EEG recordings varied across groups.

The study also covers heart murmurs, utilizing the MIT PhysioNet dataset35. This dataset comprises heart sound recordings collected during screening campaigns in Northeast Brazil in 2014 and 201536. It includes recordings for 1568 participants, ranging from 5 to 45 seconds in length, resulting in 5272 recordings. The recordings are categorized by the valve’s location (PV, AV, MV, TV, or other), and each participant is labeled for the presence, absence, or unknown status of heart murmurs.

EEG channels selection using Pearson’s correlation coefficient

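Channel selection is crucial to effectively diagnose brain disorders from multi-channel EEG datasets. While using all channels is an option, it often results in redundancy, increased feature count, computational complexity, and memory demands37. Hence, selecting a subset of channels is a recommended practice. This selection can be performed visually by a neurophysiologist or through an automated algorithm. In our approach, we propose a correlation-based method. Initially, the correlation coefficient between two EEG channels, denoted as \(x_1\) and \(x_2\), is computed as follows:

\[ r_{x_1 x_2} = \frac{\sum_{i=1}^{n} \left(x_{i1} - \overline{x_1}\right)\left(x_{i2} - \overline{x_2}\right)}{\sqrt{\sum_{i=1}^{n} \left(x_{i1} - \overline{x_1}\right)^2}\, \sqrt{\sum_{i=1}^{n} \left(x_{i2} - \overline{x_2}\right)^2}} \]

where n is the total number of samples, \(\overline{x_1}\) and \(\overline{x_2}\) are the means, and \(x_{i1}\), \(x_{i2}\) are the ith samples of the two channels. This correlation coefficient is computed for each channel with all other channels, resulting in a correlation matrix (CorrMat), given as follows for \(C\) channels:

\[ \mathrm{CorrMat} = \begin{bmatrix} r_{x_1 x_1} & r_{x_1 x_2} & \cdots & r_{x_1 x_C} \\ r_{x_2 x_1} & r_{x_2 x_2} & \cdots & r_{x_2 x_C} \\ \vdots & \vdots & \ddots & \vdots \\ r_{x_C x_1} & r_{x_C x_2} & \cdots & r_{x_C x_C} \end{bmatrix} \]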

The mean correlation coefficient for each channel is calculated by computing the mean of each column in the CorrMat. Examples of the CorrMat and mean values for two randomly selected subjects, one with seizures and one without, are shown in Fig. 3. Channels with low mean correlation coefficients are selected and further validated by neurophysiologists. Highly correlated channels may capture redundant information, increasing noise and reducing classification accuracy. In contrast, uncorrelated channels provide unique information, improving accuracy. For the two subjects in Fig. 3, the channels with the lowest mean correlation coefficients are identified as FZ-CZ, FT9-FT10, FT10-T8, T7-FT9, and are selected for further feature extraction.
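The selection rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names (`pearson`, `mean_correlation`, `select_channels`), the toy channel labels, and the choice to average each column with its diagonal entry included are all assumptions made for the example, and only the standard library is used to keep the sketch self-contained.

```python
import math

def pearson(x1, x2):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x1)
    m1 = sum(x1) / n
    m2 = sum(x2) / n
    cov = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1 = math.sqrt(sum((a - m1) ** 2 for a in x1))
    s2 = math.sqrt(sum((b - m2) ** 2 for b in x2))
    return cov / (s1 * s2)  # undefined for a constant channel (zero variance)

def mean_correlation(channels):
    """Map each channel name to the mean of its column in the correlation matrix."""
    names = list(channels)
    corr = {a: {b: pearson(channels[a], channels[b]) for b in names} for a in names}
    # Assumption: the column mean includes the diagonal entry (always 1).
    return {b: sum(corr[a][b] for a in names) / len(names) for b in names}

def select_channels(channels, k):
    """Return the k channel names with the lowest mean correlation coefficient."""
    mcc = mean_correlation(channels)
    return sorted(mcc, key=mcc.get)[:k]

# Toy data (hypothetical): A, B, C are scaled copies of one 5 Hz sine,
# while D is a 13 Hz cosine that is uncorrelated with the other three.
fs = 100
base = [math.sin(2 * math.pi * 5 * i / fs) for i in range(200)]
toy = {
    "A": base,
    "B": [0.9 * v for v in base],
    "C": [1.1 * v + 0.5 for v in base],
    "D": [math.cos(2 * math.pi * 13 * i / fs) for i in range(200)],
}
selected = select_channels(toy, k=1)  # the least redundant channel
```

In this toy case the three scaled copies share a mean correlation of about 0.75, while the independent channel sits near 0.25 and is therefore picked first. In practice the shortlist would still go to a neurophysiologist for validation, as the text notes.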

Time–frequency (TF) analysis to transform the EEG signals to 2D images

Time–frequency (TF) analysis of EEG signals offers advantages over both time and frequency domain analyses38. It tracks changes in brain wave amplitude and phase across time and frequencies, enhancing interpretability by measuring fundamental brain properties. TF analysis transforms signals into informative 2D time–frequency images that reveal variations among brain disorders and can be classified readily by deep CNN models. Previous studies have focused on a single TF method to create 2D scalogram images39,40. Common TF techniques include Short-time Fourier Transform (STFT)41, continuous wavelet transform (CWT)42, discrete wavelet transform (DWT)43, Hilbert transform (HT)44, and empirical mode decomposition (EMD)45.

Each TF method has its pros and cons. For instance, STFT is computationally efficient but can produce blurred TF representations due to windowing. Wavelet transforms offer precision but can be computationally demanding. The Hilbert transform is relatively straightforward but noise-sensitive, while EMD is robust but more complex to implement and interpret.

To address these limitations, we introduce the novel FBFT transform, providing superior 2D TF image visualization, detailed in the next subsection.

The FBFT transform

The Forward–Backward Fourier Transform (FBFT) is a signal processing technique used to extract time information from electroencephalogram (EEG) signals in brain activity analysis. It comprises several steps. First, the EEG signal is partitioned into subarrays for streamlined processing. Next, zero padding is applied to these subarrays, extending the signal’s length and enhancing the precision of the frequency analysis. The Fast Fourier Transform (FFT) is then executed on the zero-padded subarrays, converting the signal from the time domain to the frequency domain for detailed analysis of its frequency components. The FBFT procedure then analyzes the minimum magnitude values resulting from the forward and backward transformations, which helps identify the dominant frequency components in the EEG signal. By eliminating signal frequency harmonics, this methodology extracts essential spectral and time-varying features from EEG signals and provides valuable insights for disease diagnosis and analysis in neuroscience research. The FBFT process can be mathematically represented by the following equation:

\[ X(f, u) = \int_{-\infty}^{+\infty} x(t)\, 1_{\{t<u\}}\, e^{-j 2\pi f t}\, dt \]

where X(f, u) represents the result of the transformation for a given frequency f and time u, e is the base of the natural logarithm, j is the imaginary unit, x(t) is the input signal as a function of time t, and \(1_{\{t<u\}}\) is an indicator function that equals 1 if \(t<u\) and zero otherwise. The mathematical foundation is further elucidated below.

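Fourier transform. The Fourier Transform is a powerful tool in signal processing and mathematics used to convert a signal from its original time or space domain into the frequency domain. The equation for the Fourier Transform is:

\[ X(f) = \int_{-\infty}^{+\infty} x(t)\, e^{-j 2\pi f t}\, dt \]

Inverse Fourier transform. The Inverse Fourier Transform converts the frequency-domain signal back into its original time-domain form. This process is crucial for understanding how a signal can be reconstructed from its frequency components. It is given by the equation:

\[ x(t) = \int_{-\infty}^{+\infty} X(f)\, e^{j 2\pi f t}\, df \]

Unit function. This function is fundamental in signal processing for representing binary states or switches.

\[ U(t) = \begin{cases} 1, & t \ge 0 \\ 0, & t < 0 \end{cases} \]

Fourier transform of unit function.

\[ \mathcal{F}\{U(t)\} = \frac{1}{2}\delta(f) + \frac{1}{j 2\pi f} \]

where \(\delta (f)\) is the Dirac delta function, which is crucial in sampling and reconstructing signals. It is infinitely high at \(f=0\) and zero elsewhere, with the integral over a small region around zero equal to 1.

Time-shifted Fourier transform. For a time-shifted signal, if the Fourier transform of \(x(t)\) is \(X(f)\), then:

\[ \mathcal{F}\{x(t-t_0)\} = e^{-j 2\pi f t_0}\, X(f) \]

If we put \(x(t) = U(t)\):

\[ \mathcal{F}\{U(t-t_0)\} = e^{-j 2\pi f t_0}\left(\frac{1}{2}\delta(f) + \frac{1}{j 2\pi f}\right) = \frac{1}{2}\delta(f) + \frac{e^{-j 2\pi f t_0}}{j 2\pi f} \]

Fourier transform of \(1-U\left( t-t_0\right)\):

\[ \mathcal{F}\{1-U(t-t_0)\} = \delta(f) - \frac{1}{2}\delta(f) - \frac{e^{-j 2\pi f t_0}}{j 2\pi f} = \frac{1}{2}\delta(f) - \frac{e^{-j 2\pi f t_0}}{j 2\pi f} \]

It results in a combination of the delta function and a phase-shifted inverse frequency term.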
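One way to read this result, offered as a sketch rather than as the authors' stated formulation, is through the convolution theorem. Masking \(x(t)\) with \(1-U(t-u)\), which keeps only the samples before time \(u\), corresponds in the frequency domain to convolving \(X(f)\) with the standard transform \(\mathcal{F}\{1-U(t-u)\} = \frac{1}{2}\delta(f) - \frac{e^{-j 2\pi f u}}{j 2\pi f}\). The integration variable \(\nu\) is introduced here for illustration only, and the integral is understood as a Cauchy principal value:

\[ \mathcal{F}\{x(t)\,[1-U(t-u)]\}(f) = X(f) * \left[\frac{1}{2}\delta(f) - \frac{e^{-j 2\pi f u}}{j 2\pi f}\right] = \frac{1}{2}X(f) - \mathrm{p.v.}\!\int_{-\infty}^{+\infty} X(\nu)\, \frac{e^{-j 2\pi (f-\nu) u}}{j 2\pi (f-\nu)}\, d\nu \]

Under this reading, the time-windowed spectrum is half of the full spectrum plus a correction term whose phase depends on the split time \(u\), which is where the time information enters.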

Figure 4 visually demonstrates FBFT’s effectiveness in EEG signal analysis, offering insights into both time and frequency domains. It showcases FBFT’s ability to provide a comprehensive representation of a combined signal with frequencies of 10, 20, and 60, aiding in the understanding of the signal’s time-varying behavior and frequency component distribution.

Figure 5 demonstrates FBFT for forward and backward signal analysis applied to a simulated EEG signal. The accompanying pseudocode in Table 1 outlines the FBFT process for practical implementation.
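To make the forward and backward steps concrete, the following Python sketch shows one possible reading of the procedure. It is not the pseudocode of Table 1. It assumes that, at each split time u, the forward transform uses the samples before u, the backward transform uses the samples from u onward, and the two magnitudes are combined by an element-wise minimum. It also evaluates the discrete Fourier sum directly at a few chosen frequencies instead of calling an FFT on zero-padded subarrays, which yields the same values at those frequencies. The names `fbft`, `freqs`, and `hop` are hypothetical, and only the standard library is used.

```python
import cmath
import math

def fbft(x, fs, freqs, hop):
    """Sketch of a forward-backward time-frequency map.

    Returns (times, tf), where tf[i][k] = min(|forward|, |backward|) at
    frequency freqs[i] (Hz) and split time times[k] (s).
    """
    n = len(x)
    split_points = range(hop, n, hop)  # candidate split indices u
    times = [u / fs for u in split_points]
    tf = []
    for f in freqs:
        # Running forward sums: cum[u] = sum over t < u of x[t] * exp(-j*2*pi*f*t/fs)
        cum = [0j]
        for t, sample in enumerate(x):
            cum.append(cum[-1] + sample * cmath.exp(-2j * math.pi * f * t / fs))
        total = cum[-1]
        row = []
        for u in split_points:
            forward = abs(cum[u])           # spectrum of the samples before u
            backward = abs(total - cum[u])  # spectrum of the samples from u onward
            row.append(min(forward, backward))  # assumed combination rule
        tf.append(row)
    return times, tf

# Toy signal (hypothetical): 10 Hz during the first second, 20 Hz during the second.
fs = 200
x = [math.sin(2 * math.pi * (10 if i < fs else 20) * i / fs) for i in range(2 * fs)]
times, tf = fbft(x, fs, freqs=[10, 20], hop=20)
```

For this toy signal the 10 Hz row is non-zero only during the first second and the 20 Hz row only during the second, because a component must be present both before and after the split point for the minimum to be large. Whether this matches the published FBFT in every detail cannot be confirmed from the description alone.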

Figure 6 compares FBFT with traditional signal processing techniques, namely FFT41, Continuous Wavelet Transform (CWT)42, Discrete Wavelet Transform (DWT)43, the Power Spectrum (PS)46, and the Progressive Fourier Transform (PFT)47, when applied to a composite signal with known frequency components. FBFT distinguishes itself by achieving precise time–frequency localization. In contrast, FFT provides frequency information but cannot locate these frequency components in the time domain. PFT extracts time–frequency information from signals, but it does not localize and characterize frequency components in time as precisely as FBFT. Similarly, although CWT and DWT offer improved time–frequency resolution compared to FFT, they may still have limitations in capturing subtle shifts in the signal. The PS illustrates the frequency content of the signal, but it may not provide the same time–frequency precision as FBFT. This comparison illustrates the advantage of FBFT in EEG signal analysis: it combines the benefits of time and frequency domain analysis, allowing for precise localization of frequency components.

A synthetic composite signal with three oscillations of different frequencies combined at different timings, and its corresponding plots: the time domain (t vs x(t)) in the top row; the frequency domain (FFT, PSD) and the TF plots using CWT and DWT in the middle row; and the PFT and the proposed TF plot using FBFT in the bottom row.

CNN-based classification of brain disorders

Deep learning, particularly CNNs, excels in pattern recognition and image classification, automatically extracting features from raw input images48. Three pre-trained CNNs, AlexNet49, GoogLeNet50, and SqueezeNet51, were employed for brain disorder diagnosis. These models have fixed input sizes: 256 \(\times\) 256 \(\times\) 3 for AlexNet, 224 \(\times\) 224 \(\times\) 3 for GoogLeNet, and 227 \(\times\) 227 \(\times\) 3 for SqueezeNet. For each dataset, input images were resized to the required size using cubic interpolation. Random augmentations, such as flipping, translating, and scaling, were applied during training. Key training parameters included the ADAM optimization algorithm, a mini-batch size of 16, an initial learning rate of 0.0001, and a maximum of 50 epochs. Model performance was assessed using accuracy, F1 score, sensitivity (recall), and precision, with an 80–20% train/test split.
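For reference, the evaluation metrics named above can be computed from the four cells of a binary confusion matrix as in the sketch below. The function name `binary_metrics` and the example counts are hypothetical and are not taken from the study's results.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall (sensitivity), and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0  # recall is the same as sensitivity
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts for a 200-image test split.
metrics = binary_metrics(tp=90, fp=10, fn=5, tn=95)
```

With these made-up counts the accuracy is 0.925 and the precision is 0.9. For the three-class datasets, the same quantities would typically be computed per class and then averaged, a detail the text does not specify.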

Naked-eye classification of brain disorders using TF images of EEG

Our study primarily focuses on visually classifying brain diseases using transformed EEG images, a vital component in enhancing disease understanding and detection through visual and deep learning techniques. As part of our study, we conducted a brief survey lasting 4 to 5 minutes. Volunteers were shown reference images of brain diseases (e.g., epilepsy, Alzheimer’s) and normal images without disease indicators. Participants were first asked to study these labeled images visually and identify distinct patterns or distinguishing features corresponding to each label. Subsequently, they were asked to give their own judgment on whether an image appeared normal or abnormal. Throughout this survey, we strictly adhered to ethical standards, encompassing informed consent, data privacy, voluntary participation, and institutional ethical approval. The survey aimed to collect input and insights from participants, contributing to the development of practical approaches for healthcare practitioners in the visual analysis-based detection of brain and heart diseases. The experimental protocols utilized in this study were approved by the Hamad Bin Khalifa University Institutional Review Board (HBKU-IRB) under the reference number ’HBKU-IRB-2024-68’. The HBKU-IRB, as the named institutional review committee, conducted a comprehensive review in line with ethical standards and regulatory requirements. Participants provided informed consent, and the study was conducted in adherence to the approved protocols and principles covered in the CITI program.

Results

The main goal of this research is to improve the interpretability of EEG signals by using the novel FBFT transform to convert them into time–frequency (TF) images. This transformation enhances the accuracy of visual inspection and deep learning-based classification of brain diseases. By representing brain activity as sinusoidal oscillations instead of voltage changes at specific time points, we gain a better understanding of EEG signals. These sinusoidal wave-like patterns capture brain oscillations, and TF analysis provides insights into their frequency, amplitude, and phase over time.

For illustration, this study generates two types of TF images from the signals: RGB images and concatenated images, which form scalograms or spectrograms for each time-framed window of EEG data, as illustrated in Fig. 2. Table 2 shows an example of TF analysis and its corresponding 2D TF images for the stress dataset. Furthermore, Table 3 displays the FBFT images for the four datasets used in this study.

In parallel with these visual representations, three pre-trained models (GoogLeNet, SqueezeNet, and AlexNet) were employed to diagnose various brain disorders. Performance metrics are summarized in Table 4, and Fig. 7a shows the confusion matrices for the best models. For epileptic seizure diagnosis32, the four selected channels (T7-FT9, FT9-FT10, FT10-T8, and FZ-CZ) were converted to TF RGB images using the proposed FBFT transform with a 1-second time window and 0.25-second overlap. The accuracies achieved by the three models were 99.56%, 99.82%, and 99.39%, respectively. For Alzheimer’s disease diagnosis52, six optimal channels (P3, O2, T6, O1, F8, and Pz) were selected, and the FBFT with a 2-second time window and 1.5-second overlap was applied to obtain RGB TF images. The models achieved accuracies of 95.91%, 93.03%, and 91.72%, respectively. For murmur detection, the MIT PhysioNet dataset, which contains heart sound recordings, was used35. Following the removal of NaN values, the FBFT transform was applied to the four selected channels (PV, TV, MV, and AV), resulting in TF images for both the murmur and no-murmur classes. The models achieved accuracies of 85.1%, 81.56%, and 79.64%, respectively. For automatic detection of stress33, four channels (TP9, AF7, AF8, and TP10) were transformed to TF images using the proposed FBFT transform, with a 1-second time window and no overlap. The three pre-trained models achieved accuracies of 100%, 99.36%, and 99.36%, respectively.
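The windowing settings quoted above translate into sample counts as in the following sketch. The helper name `sliding_windows` and the 10-second example length are assumptions for illustration, and windows that would run past the end of the recording are simply dropped, which may differ from the authors' handling.

```python
def sliding_windows(signal, fs, window_s, overlap_s):
    """Split a 1D signal into fixed-length windows with the given overlap (in seconds)."""
    win = int(round(window_s * fs))
    step = win - int(round(overlap_s * fs))
    if step <= 0:
        raise ValueError("overlap must be shorter than the window")
    # Keep only full-length windows; a trailing partial window is discarded.
    return [signal[s:s + win] for s in range(0, len(signal) - win + 1, step)]

# Epilepsy setting from the text: 256 samples/s, 1 s window, 0.25 s overlap.
# The 10 s signal length is a hypothetical example.
eeg = list(range(10 * 256))
windows = sliding_windows(eeg, fs=256, window_s=1.0, overlap_s=0.25)
```

With a 0.25-second overlap each window starts 0.75 seconds (192 samples) after the previous one, so a 10-second channel yields 13 windows of 256 samples. The Alzheimer's setting (500 Hz, 2-second window, 1.5-second overlap) gives 1000-sample windows that advance by 250 samples.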

Another significant contribution of this research involved the visual classification of brain disorders by medical experts without relying on automated algorithms. A survey with 125 participants was conducted. They visually classified disorders by examining novel FBFT images of EEG signals related to the four datasets. The survey included the classification of 10,000 images, resulting in overall accuracies of 78.6%, 71.9%, 82.7%, and 91.0% for epilepsy, Alzheimer’s disease (AD), murmurs, and mental stress, respectively. Detailed classification outcomes are presented in the confusion matrix in Fig. 7b.

Discussion

This study introduces FBFT, a novel method for transforming EEG signals into time–frequency (TF) images, enhancing their visual interpretability. These TF images are then used to fine-tune pre-trained CNN models for diagnosing epilepsy, Alzheimer’s disease, murmurs, and mental stress. Additionally, we explore manual classification by visual inspection of the TF images. Table 5 compares our models’ performance with existing methods for diagnosing these disorders. Our pre-trained models, utilizing FBFT-based TF images from just four to six EEG channels, consistently outperform alternatives in accuracy.

Expanding our approach to diverse neurological disorders presents challenges, including adapting to inherent EEG signal variability and securing representative datasets for each disorder, which may be limited by data availability. Generalization requires addressing individual variations in brain activity and may call for disorder-specific feature extraction techniques. The computational demands of the FBFT and CNN models may also increase, requiring optimizations for scalability. Addressing these challenges involves thoroughly understanding each disorder’s unique characteristics and continuously refining the methodology. These considerations mark areas for future research and development.

The initial survey, involving 125 participants, has yielded promising accuracy, serving as a foundation for future research endeavors. Subsequent investigations will encompass more extensive participant cohorts and heightened statistical analyses. Although our current methodology demonstrates proficiency in classifying four specific disorders, its applicability extends to a broader spectrum of neurological conditions using EEG data. However, we acknowledge the necessity for validation in such expansions. Recognizing the critical nature of visual classification by medical professionals, our study diligently addresses the associated reliability and potential biases. We openly acknowledge the constraints of subjective interpretation, emphasizing the importance of inter-rater reliability assessments, and recognizing the impact of individual expertise on classification accuracy. To foster transparency and mitigate biases, we have expanded our discussion on ethical considerations, elucidating survey details such as participant numbers, duration, and specific instructions. Furthermore, we advocate for the continued enhancement of our manual classification approach by integrating additional diagnostic criteria and comparative analyses with automated algorithms. Detailed insights into classification outcomes, presented in Fig. 7b, offer valuable perspectives on observed patterns in the confusion matrix. Our outlined future research plans underscore a commitment to larger participant pools, more robust statistical analyses, and potential extensions to diverse neurological conditions, collectively contributing to a nuanced comprehension of the human interpretation and validation processes intrinsic to our study.

Conclusion

In summary, this study presents the Forward Backward Fourier Transform (FBFT), a groundbreaking method for transforming EEG signals into interpretable time–frequency (TF) images, showcasing its effectiveness in terms of accuracy and computational efficiency, particularly when applied to a limited number of EEG channels. The proposed FBFT time–frequency transform provides a robust means of converting EEG data into 2D images, a critical step in analyzing dynamic signals like EEG. Leveraging these TF images, we employed various pre-trained CNN models to diagnose brain disorders, achieving remarkable accuracy rates as detailed in the results section. Notably, our approach maintains computational efficiency by focusing on a selected set of EEG channels, rendering it practical for real-world applications.

A noteworthy innovation in this research is the introduction of naked-eye-based classification, where human experts visually assessed TF images. This manual evaluation achieved noteworthy accuracy rates of 78.6%, 71.9%, 82.7%, and 91.0% for epilepsy, AD, murmur, and mental stress, respectively, presented in Fig. 7b. This visual inspection underscores our method’s potential as a complementary tool for brain disorder diagnosis. Beyond its immediate applications, this methodology holds promise for developing advanced EEG-based diagnostic tools for various neurological disorders. Our approach is robust, adaptable, and extensible, offering the potential to expand the scope of neurological disorders studied and to create real-time applications that assist specialists in the efficient and automated identification of various neurological conditions from EEG data. However, several limitations should be considered. Firstly, the study focused on specific brain disorders, such as epilepsy, Alzheimer’s disease (AD), murmur, and mental stress, leaving room for exploration of its applicability to a more comprehensive spectrum of neurological conditions. Additionally, the reliance on pre-trained CNN models, while effective, introduces a dependency on the quality and applicability of these models to diverse datasets. It is crucial to acknowledge that the accuracy rates achieved, mainly through naked-eye-based classification, may vary based on the expertise of human assessors and the inherent subjectivity of visual inspection.

In conclusion, the Forward–Backward Fourier transform (FBFT) converts EEG signals into interpretable time–frequency (TF) images that pre-trained CNN models classify with high accuracy, as detailed in the results section, while using only a selected set of EEG channels. This computational efficiency makes the approach practical for real-world applications: it enables quicker and more accessible diagnosis and strengthens the method’s potential for widespread clinical use in the detection and diagnosis of neurological disorders.

As a future direction, we plan to conduct extensive testing across diverse datasets, exploring alternative neural network architectures beyond pre-trained CNN models. Additionally, we aim to develop user-friendly interfaces for real-time applications, streamlining the efficient and automated identification of neurological conditions by healthcare specialists. Collaborative efforts with experts in neurology and related fields will be instrumental in refining and validating the methodology across a broad spectrum of neurological disorders. Moreover, we anticipate conducting longitudinal studies and clinical trials to thoroughly assess the robustness and reliability of our approach in real-world medical scenarios.

Data availability

The datasets analyzed during the current study are available in the following repositories. The Murmur Dataset can be accessed on PhysioNet at [https://doi.org/10.13026/g02k-a047]. The Epilepsy Dataset is available on PhysioNet at [https://doi.org/10.13026/C2K01R]. The Alzheimer Dataset is available on OpenNeuro under the persistent identifier [10.18112/openneuro.ds004504.v1.0.4]. Lastly, the Bird et al. Dataset is hosted on Kaggle and can be accessed at [https://www.kaggle.com/datasets/birdy654/eeg-brainwave-dataset-mental-state]. The data collected from the survey conducted for this study are not publicly available due to privacy restrictions. However, the survey link can be provided upon request to individuals interested in participating. The findings and analysis derived from the survey data are presented and discussed in this paper without disclosing any personally identifiable information. For more information or to request access to the survey link, please contact niam27832@hbku.edu.qa.

References

Lopez, S., Suarez, G., Jungreis, D., Obeid, I. & Picone, J. Automated identification of abnormal adult EEGs. In 2015 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), 1–5 (IEEE, 2015).

Congedo, M., Barachant, A. & Bhatia, R. Riemannian geometry for EEG-based brain–computer interfaces: A primer and a review. Brain–Comput. Interfaces 4, 155–174. https://doi.org/10.1080/2326263X.2017.1297192 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444. https://doi.org/10.1038/nature14539 (2015).

Hussain, I. & Park, S. J. HealthSOS: Real-time health monitoring system for stroke prognostics. IEEE Access 8, 213574–213586 (2020).

Zhang, R., Jia, J. & Zhang, R. EEG analysis of Parkinson’s disease using time–frequency analysis and deep learning. Biomed. Sign. Process. Control 78, 103883 (2022).

Ozdemir, M. A., Cura, O. K. & Akan, A. Epileptic EEG classification by using time–frequency images for deep learning. Int. J. Neural Syst. 31, 2150026 (2021).

Radhakrishnan, M., Ramamurthy, K., Choudhury, K. K., Won, D. & Manoharan, T. A. Performance analysis of deep learning models for detection of autism spectrum disorder from EEG signals. Traitement du Signal 38 (2021).

Chen, H., Song, Y. & Li, X. A deep learning framework for identifying children with ADHD using an EEG-based brain network. Neurocomputing 356, 83–96 (2019).

Morales, S. & Bowers, M. E. Time–frequency analysis methods and their application in developmental EEG data. Dev. Cogn. Neurosci. 54, 101067. https://doi.org/10.1016/j.dcn.2022.101067 (2022).

Acharya, U. R., Oh, S. L., Hagiwara, Y., Tan, J. H. & Adeli, H. Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 100, 270–278 (2018).

Sun, M., Wang, F., Min, T., Zang, T. & Wang, Y. Prediction for high risk clinical symptoms of epilepsy based on deep learning algorithm. IEEE Access 6, 77596–77605 (2018).

Mao, W., Fathurrahman, H., Lee, Y. & Chang, T. EEG dataset classification using CNN method. In Journal of Physics: Conference Series, vol. 1456, 012017 (IOP Publishing, 2020).

Zhang, J., Wei, Z., Zou, J. & Fu, H. Automatic epileptic EEG classification based on differential entropy and attention model. Eng. Appl. Artif. Intell. 96, 103975 (2020).

Akbarian, B. & Erfanian, A. A framework for seizure detection using effective connectivity, graph theory, and multi-level modular network. Biomed. Sign. Process. Control 59, 101878 (2020).

Gabr, R., Shahin, A., Sharawi, A. & Aouf, M. A deep learning identification system for different epileptic seizure disease stages. J. Eng. Appl. Sci. 67, 925–944 (2020).

Nasiri, S. & Clifford, G. D. Generalizable seizure detection model using generating transferable adversarial features. IEEE Sign. Process. Lett. 28, 568–572 (2021).

Zhang, B. et al. Cross-subject seizure detection in EEGs using deep transfer learning. Comput. Math. Methods Med. 2020 (2020).

Mouleeshuwarapprabu, R. & Kasthuri, N. Nonlinear vector decomposed neural network based EEG signal feature extraction and detection of seizure. Microprocess. Microsyst. 76, 103075 (2020).

Harpale, V. & Bairagi, V. An adaptive method for feature selection and extraction for classification of epileptic EEG signal in significant states. J. King Saud Univ.-Comput. Inf. Sci. 33, 668–676 (2021).

Khan, K. A., Shanir, P., Khan, Y. U. & Farooq, O. A hybrid local binary pattern and wavelets based approach for EEG classification for diagnosing epilepsy. Expert Syst. Appl. 140, 112895 (2020).

Xu, Y., Yang, J., Ming, W., Wang, S. & Sawan, M. Deep learning for short-latency epileptic seizure detection with probabilistic classification. arXiv preprint arXiv:2301.03465 (2023).

Fiscon, G. et al. Combining EEG signal processing with supervised methods for Alzheimer’s patients classification. BMC Med. Inform. Decis. Mak. 18, 1–10 (2018).

Amini, M., Pedram, M. M., Moradi, A., Ouchani, M. et al. Diagnosis of Alzheimer’s disease by time-dependent power spectrum descriptors and convolutional neural network using EEG signal. Comput. Math. Methods Med. 2021 (2021).

Duan, F. et al. Topological network analysis of early Alzheimer’s disease based on resting-state EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 2164–2172 (2020).

Martínez-Rodrigo, A., García-Martínez, B., Huerta, Á. & Alcaraz, R. Detection of negative stress through spectral features of electroencephalographic recordings and a convolutional neural network. Sensors 21, 3050 (2021).

Malviya, L. & Mal, S. A novel technique for stress detection from EEG signal using hybrid deep learning model. Neural Comput. Appl. 34, 19819–19830 (2022).

Hossain, S. A. et al. Emotional state classification from music-based features of multichannel EEG signals. Bioengineering 10, 99 (2023).

Chatterjee, D., Gavas, R. & Saha, S. K. Detection of mental stress using novel spatio-temporal distribution of brain activations. Biomed. Signal Process. Control 82, 104526 (2023).

Walker, B. et al. Dual Bayesian ResNet: A deep learning approach to heart murmur detection. In 2022 Computing in Cardiology (CinC), vol. 498, 1–4 (IEEE, 2022).

Singstad, B.-J. et al. Phonocardiogram classification using 1-dimensional inception time convolutional neural networks. In 2022 Computing in Cardiology (CinC), vol. 498, 1–4 (IEEE, 2022).

Wen, H. & Kang, J. Searching for effective neural network architectures for heart murmur detection from phonocardiogram. In 2022 Computing in Cardiology (CinC), vol. 498, 1–4 (IEEE, 2022).

Shoeb, A. H. Application of Machine Learning to Epileptic Seizure Onset Detection and Treatment. Ph.D. thesis, Massachusetts Institute of Technology (2009).

Bird, J. J., Manso, L. J., Ribeiro, E. P., Ekart, A. & Faria, D. R. A study on mental state classification using EEG-based brain-machine interface. In 2018 International Conference on Intelligent Systems (IS), 795–800 (IEEE, 2018).

Miltiadous, A. et al. A dataset of scalp EEG recordings of Alzheimer’s disease, frontotemporal dementia and healthy subjects from routine EEG. Data 8, 95. https://doi.org/10.3390/data8060095 (2023).

Oliveira, J. et al. The CirCor DigiScope phonocardiogram dataset. Version 1.0.0 (2022).

Oliveira, J. et al. The CirCor DigiScope dataset: From murmur detection to murmur classification. IEEE J. Biomed. Health Inform. 26, 2524–2535 (2021).

Duun-Henriksen, J. et al. Channel selection for automatic seizure detection. Clin. Neurophysiol. 123, 84–92 (2012).

Morales, S. & Bowers, M. E. Time-frequency analysis methods and their application in developmental EEG data. Dev. Cogn. Neurosci. 54, 101067 (2022).

Zhou, M. et al. Epileptic seizure detection based on EEG signals and CNN. Front. Neuroinform. 12, 95 (2018).

Khan, N. A., Mohammadi, M., Ghafoor, M. & Tariq, S. A. Convolutional neural networks based time–frequency image enhancement for the analysis of EEG signals. Multidimens. Syst. Signal Process. 33, 863–877 (2022).

Mehla, V. K., Singhal, A., Singh, P. & Pachori, R. B. An efficient method for identification of epileptic seizures from EEG signals using Fourier analysis. Phys. Eng. Sci. Med. 44, 443–456 (2021).

Mallat, S. & Mallat, C. 7.2 classes of wavelet bases. A Wavelet Tour of Signal Processing; Elsevier Science & Technology: Amsterdam, The Netherlands 241–254 (1999).

Daubechies, I. Ten Lectures on Wavelets (SIAM, 1992).

Alickovic, E., Kevric, J. & Subasi, A. Performance evaluation of empirical mode decomposition, discrete wavelet transform, and wavelet packed decomposition for automated epileptic seizure detection and prediction. Biomed. Signal Process. Control 39, 94–102 (2018).

Rout, S. K. & Biswal, P. K. An efficient error-minimized random vector functional link network for epileptic seizure classification using VMD. Biomed. Sign. Process. Control 57, 101787 (2020).

Priestley, M. Power spectral analysis of non-stationary random processes. J. Sound Vib. 6, 86–97 (1967).

Belhaouari, S. B., Talbi, A., Hassan, S., Al-Thani, D. & Qaraqe, M. PFT: A novel time-frequency decomposition of BOLD fMRI signals for autism spectrum disorder detection. Sustainability 15, 4094 (2023).

Khan, M. A. et al. A resource conscious human action recognition framework using 26-layered deep convolutional neural network. Multimed. Tools Appl. 80, 35827–35849 (2021).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 (2012).

Szegedy, C. et al. Going deeper with convolutions. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 1–9 (2015).

Iandola, F. N. et al. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv preprint arXiv:1602.07360 (2016).

Miltiadous, A. et al. Alzheimer’s disease and frontotemporal dementia: A robust classification method of EEG signals and a comparison of validation methods. Diagnostics 11, 1437 (2021).

Jiang, L. et al. Seizure detection algorithm based on improved functional brain network structure feature extraction. Biomed. Sign. Process. Control 79, 104053 (2023).

Amiri, M., Aghaeinia, H. & Amindavar, H. R. Automatic epileptic seizure detection in EEG signals using sparse common spatial pattern and adaptive short-time Fourier transform-based synchrosqueezing transform. Biomed. Sign. Process. Control 79, 104022 (2023).

Assali, I. et al. CNN-based classification of epileptic states for seizure prediction using combined temporal and spectral features. Biomed. Sign. Process. Control 82, 104519 (2023).

Hassan, K. M., Islam, M. R., Nguyen, T. T. & Molla, M. K. I. Epileptic seizure detection in EEG using mutual information-based best individual feature selection. Expert Syst. Appl. 193, 116414 (2022).

Xiong, Y. et al. Seizure detection algorithm based on fusion of spatio-temporal network constructed with dispersion index. Biomed. Sign. Process. Control 79, 104155 (2023).

Shen, M., Wen, P., Song, B. & Li, Y. An EEG based real-time epilepsy seizure detection approach using discrete wavelet transform and machine learning methods. Biomed. Sign. Process. Control 77, 103820 (2022).

Bird, J. J., Kobylarz, J., Faria, D. R., Ekárt, A. & Ribeiro, E. P. Cross-domain MLP and CNN transfer learning for biological signal processing: EEG and EMG. IEEE Access 8, 54789–54801 (2020).

Dehnavi, M. S., Dehnavi, V. S. & Shafiee, M. Classification of mental states of human concentration based on EEG signal. In 2021 12th International Conference on Information and Knowledge Technology (IKT), 78–82 (IEEE, 2021).

Bird, J. J., Pritchard, M., Fratini, A., Ekárt, A. & Faria, D. R. Synthetic biological signals machine-generated by GPT-2 improve the classification of EEG and EMG through data augmentation. IEEE Robot. Autom. Lett. 6, 3498–3504 (2021).

Miltiadous, A. et al. Enhanced Alzheimer’s disease and frontotemporal dementia EEG detection: Combining LightGBM gradient boosting with complexity features. In 2023 IEEE 36th International Symposium on Computer-Based Medical Systems (CBMS), 876–881 (IEEE, 2023).

Miltiadous, A., Gionanidis, E., Tzimourta, K. D., Giannakeas, N. & Tzallas, A. T. DICE-net: A novel convolution-transformer architecture for Alzheimer detection in EEG signals. IEEE Access (2023).

Acknowledgements

The authors would like to thank Hamad Bin Khalifa University (HBKU) and Qatar National Library for their invaluable support during this study. Open Access funding is provided by the Qatar National Library.

Author information

Contributions

Conceptualization, S.B.B.; methodological approach, N.S.A.; algorithm, N.S.A.; conducting experiments, N.S.A.; validation, N.S.A.; detailed review, N.S.A.; evaluation, N.S.A. and S.B.B.; manuscript writing and preparation, N.S.A.; supervision, S.B.B. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amer, N.S., Belhaouari, S.B. Exploring new horizons in neuroscience disease detection through innovative visual signal analysis. Sci Rep 14, 4217 (2024). https://doi.org/10.1038/s41598-024-54416-y

DOI: https://doi.org/10.1038/s41598-024-54416-y
