Abstract

The quasi-Poisson regression model is used for count data and is preferred over the Poisson regression model in the case of over-dispersed count data. The quasi-likelihood estimator is used to estimate the regression coefficients of the quasi-Poisson regression model. The quasi-likelihood estimator gives sub-optimal estimates if regressors are highly correlated—multicollinearity issue. Biased estimation methods are often used to overcome the multicollinearity issue in the regression model. In this study, we explore the ridge estimator for the quasi-Poisson regression model to mitigate the multicollinearity issue. Furthermore, we propose various ridge parameter estimators for this model. We derive the theoretical properties of the ridge estimator and compare its performance with the quasi-likelihood estimator in terms of matrix and scalar mean squared error. We further compared the proposed estimator numerically through a Monte Carlo simulation study and a real-life application. We found that both the simulation and application results show the superiority of the ridge estimator, particularly with the best ridge parameter estimator, over the quasi-likelihood estimator in the presence of multicollinearity issue.

Similar content being viewed by others

Introduction

Regression analysis is widely used for predicting or identifying the factors associated with the response variable. Several types of regression models are developed that depend on the distribution of the response variable, and the type of relationship (linear vs non-linear) to measure the average relationship between the response variable and one or more explanatory variable variables such as the generalized linear model (GLM)1 and some other models. The GLM has various applications in science, engineering, business and some others2,3.

The count models are used to examine the factors that influence the response variable which is in positive integers such as 0, 1, 2, 3, 4…, etc.4,5. Several count regression type models are developed such as the Poisson regression model (PRM), Quasi-Poisson regression model (QPRM), negative binomial regression model (NBRM), bell regression model (BRM) and Conway Maxwell Poisson regression model (CMPRM). These models are used in various situations. The PRM6 is used when the response variable has a Poisson distribution with identical average and dispersion7.

In real-life datasets, the assumption of equal mean and variance, as postulated by PRM, often is violated. Over-dispersion occurs when the variance of the response variable exceeds its mean. The NBRM is used to handle over-dispersion8. However, NBRM requires a large number of samples and the response variable must have positive numbers. This model is commonly applied to count data and over-dispersion scenarios9. Conway and Maxwell introduced the Conway–Maxwell PRM (CMPRM) in 1962, adept at addressing both over and under-dispersion in count data10,11. Additionally, the QPRM is a generalization of the PRM and is commonly used for modeling an over-dispersed count variable12. The QPRM is an alternative to the NBRM13 and is recommended when the variance is a linear function of the mean13.

The quasi-likelihood estimator (QLE) is used to estimate the regression coefficients of the QPRM14. However, it produces inefficient estimates when the regressors are highly correlated known as multicollinearity. This term was used by Frisch15 for the first time in the linear regression model. When multicollinearity exists in a QPRM, the QLE produces large variances, leading to incorrectly signed regression coefficients and wider confidence intervals. This issue further results in inflated standard errors of regression coefficients, potentially leading to inaccurate outcomes16.

Biased estimation methods are commonly used to mitigate the impact of multicollinearity. Several biased estimation methods have been developed to diminish the impact of multicollinearity in producing more reliable estimates17,18,19. The ridge estimator (RE) is a popular method to deal with the issue of multicollinearity in the linear regression model20. It shrinks the coefficients of the model and diminishes the impact of multicollinearity. A key component of the RE is the biasing parameter k crucial for optimizing regression coefficients. Several studies found the optimal k value by minimizing the Mean Squared Error (MSE) to enhance the estimator's performance20,21,22,23,24,25,26,27,28. Various studies determine the optimal value of biasing parameters in RE with different probability-distributed models7,18,25,29,30,31,32,33.

Various studies proposed the REs to diminish the impact of multicollinearity in a count regression model such as, RE for Poisson ridge regression7,18,34,35, RE for the NBRM30,36, RE for Bell regression model32 and more recently, the RE for the CMPRM37.

It is evident from the literature that several studies proposed REs and some new biasing parameters for the linear regression model, count regression model and GLMs. Furthermore, these studies attempted to find the best ridge parameter estimators (RPEs) of the above models. However, no study has investigated RE for the QPRM. In this study, we aimed to investigate the RE for the QPRM to deal with multicollinearity and over-dispersion and compare the RE and the QLE in the QPRM. Furthermore, we aim to introduce diverse RPEs tailored for the QPRM's ridge estimator and assess their performance to identify the most effective one.

The rest of the paper is organized as follows: section “Methodology” will cover the structure, estimation, and properties of the QPRM. Additionally, we will introduce the RPEs in this section. The simulation study’s setting, its results and application will be discussed in section “Numerical evaluation”. Section “Application: apprentice migration dataset” will discuss the results of real-life application. The concluding remarks will be given at the end of this paper.

Methodology

The QPRM

The QPRM is used in the situation, where the dependent variables, \({y}_{i}\) is in counting form, follows the Poisson distribution and its variance is greater than its mean. The quasi-Poisson (QP) distribution is considered for analyzing the over-dispersion condition in the datasets. For detailed discussion and properties, see Efron38 and Istiana et al.39. The QP distribution has a relation with the Poisson distribution when the dispersion parameter is equal to 1. Let us consider Y, which represents the response variable selected from the QP distribution with parameters \(\mu {\text{ and }} \gamma\). The mean and variance of the QP are respectively given by

where µ > 0, and \(\gamma\) is an over-dispersion parameter. The close relationship between the expectation and variance shows that variance is a function of its average. The QPRM is characterized by the first two moments (mean and variance) as discussed by Wedderburn12, but Efron38 and Gelfand and Dalal40 showed how to create a distribution for this model; however, it requires re-parameterization. Estimation often proceeds from the first two moments and estimating equations14. The likelihood function for the QPRM (quasi-likelihood) does not require a specific probability density function to estimate regression parameters except for the response variable assumption41. The formation of the QL function begins in the same way as the usual likelihood function. The QLE is obtained by minimizing the Quasi-log likelihood function that is given by Wakefield42

Now differentiate (3) w.r.t. \({\mu }_{i}\), and equating to zero, we have the Quasi-score function

where \(\mu =exp\left(X\beta \right)\). Here \(X\) is a covariates matrix of order \(n \times (p + 1)\) and \(\beta\) is the column vector of regression coefficients of order \((p + 1) \times 1\).

As Eq. (4) is non-linear in \(\beta\), so, the regression parameters of the QP model can be calculated using iterative reweighted least square (IWLS). The equation used in calculating the IWLS with \(t+1\) iterations can be written as13

where \({\widehat{{\text{W}}}}_{i}^{\left[t\right]}=\frac{1}{V({\mu }_{i}^{\left[t\right]})}{\left(\frac{\partial {\mu }_{i}^{\left[t\right]}}{\partial {\eta }_{i}^{\left[t\right]}}\right)}^{2},\) \({m}_{i}^{\left[t\right]}={\eta }_{i}^{\left[t\right]}+\frac{\left({y}_{i}-{\mu }_{i}^{\left[t\right]}\right)}{\frac{\partial {g}^{-1}\left({\eta }_{i}^{\left[t\right]}\right)}{\partial {\eta }_{i}^{\left[t\right]}}}\), \({\eta }_{i}^{\left[t\right]}={x}_{i}^{\prime}{\beta }^{\left[t\right]}\),

where \({\mu }_{i}={\text{exp}}\left({\eta }_{i}\right)\). So, \({m}_{i}={\text{log}}\left({\mu }_{i}\right)+\frac{{y}_{i}-{\mu }_{i}}{{\mu }_{i}}\). The QLE of \(\beta\) at the final iteration is defined as

where \(F={{\text{X}}}^{{{\prime}}}\widehat{{\text{W}}}{\text{X and }}\widehat{\text{ W}}={\text{diag}}\left(\frac{1}{V(\widehat{\mu })}{\left(\frac{\partial \widehat{\mu }}{\partial \eta }\right)}^{2} \right).\) The QLE is normally distributed with a covariance matrix that corresponds to the inverse of the matrix of the second derivatives:

Furthermore, the MSE of the \({\widehat{\beta }}_{QLE}\) is given as

By applying the trace on both sides of Eq. (8), we have

where \({\lambda }_{j}\) is the jth eigenvalue of the matrix \(F\). When the explanatory variables in the QPRM are highly correlated, then the weighted matrix of cross-products of \(F,\) is ill-conditioned, and the QLE gives inefficient results with larger variances. In this condition, it is difficult to interpret the estimated coefficients since the vector of parameters is on average too large.

The quasi Poisson ridge regression estimator

When the multicollinearity is present among the explanatory variables in the QPRM, then the QLE does not perform efficiently, it gives a large variance of estimated coefficients. To minimize the effects of multicollinearity, the RR estimation method was introduced by Hoerl and Kennard20. In this study, we are proposing the quasi-Poisson ridge regression estimator (QPRRE) applied to the count and over-dispersed data that minimizes the effects of multicollinearity. So, the QPRRE is defined by

where \(F={{\text{X}}}^{{{\prime}}}\widehat{{\text{W}}}{\text{X}}\) and k is the biasing parameter and \({I}_{p+1}\) is the identity matrix with order \((p+1)\times (p+1)\). The bias, the covariance and the matrix MSE (MMSE) of the \({\widehat{\beta }}_{k}\) can be derived as follows

where \({\Lambda }_{k}=diag({\lambda }_{1}+k,{\lambda }_{2}+k,\ldots,{\lambda }_{p+1}+k)\) and \(\Lambda =diag({\lambda }_{1},{\lambda }_{2}, {\lambda }_{3}, \ldots,{\lambda }_{p+1})=T\left(F\right){T}^{\prime}\), where the orthogonal matrix \(T\) has eigenvectors of \(F\). Finally, the scalar MSE of the QPRRE can be estimated by applying trace on Eq. (13), which can be defined as

where \({\alpha }_{j}={{T}^{\prime}\widehat{\beta }}_{QLE}\) and \({\lambda }_{j}\) is the jth eigenvalue of the F matrix.

The superiority of the QPRRE to the QLE

To explore the superiority of the RR estimator over others, Hoerl and Kennard20 proposed the statements for the properties of the MSE of the RR estimators in the LRM. Here, we will prove that the theorems also hold for the QPRM and according to these theorems, we will explore the supremacy of the QPRRE over the QLE.

Theorem 3.1

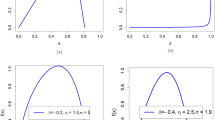

The variance \({M}_{1}\left(k\right)\) and squared bias \({M}_{2}\left(k\right)\) are respectively continues, monotonically decreasing and increasing functions of k, since \(k>0\) and \({\lambda }_{j}>0\).

Proof

The 1st derivative of \({M}_{1}\left(k\right)\) and \({M}_{2}\left(k\right)\) from Eq. (14) concerning k are.

and

The \(\widehat{\gamma },k,{\lambda }_{j}\) and \({{\alpha }_{j}}^{2}\) are positive, Eq. (15) shows that the \({M}_{1}\left(k\right)\) is a continuous and monotonically decreasing function of k, since \(k>0\) and \({\lambda }_{j}>0\). Equation (16) shows that the \({M}_{2}\left(k\right)\) is a continuous and monotonically increasing function of k.

Theorem 3.2

There always exists a \(k>0\) and the \(MSE\left({\widehat{\beta }}_{k}\right)<MSE\left({\widehat{\beta }}_{QLE}\right).\)

Proof

The 1st derivative of Eq. (14) concerning k is given by

Equation (17), clearly shows that the sufficient condition for \(\frac{dMSE\left({\widehat{\beta }}_{k}\right)}{dk}\) to be less than zero is \(\frac{\widehat{\gamma }}{{{\alpha }_{j}}_{max}^{2}}<0\).

Selection of the biasing parameters

The RR estimator is based on the RPE which has a main role in its estimation. To minimize the effects of high correlation among the explanatory variables, the optimal value of the shrinkage parameter (k) is the main concern of the RR. The RPEs for different regression models have been suggested by many investigators and find the optimal biasing parameter20,24,31,37,43,44. Firstly, Hoerl and Kennard20 presented the ridge estimation method to mitigate the effect of a high degree of correlation for the LRM. This estimator was also used for the gamma regression model (GRM)44, and for the CMPRM37. For the QPRRE, it is defined as

where \(\widehat{\gamma }\) is the estimated dispersion parameter.

Hoerl et al.24 proposed the shrinkage parameter estimator for the RR in the LRM and we are adapting this estimator for the QPRRE as

Amin et al.16,44 developed an RPE for the inverse Gaussian ridge regression (IGRR)

Akram et al.45 proposed the following RPEs of the GRM’s RR.

Given above stated studies, we proposed the following RPEs for the QPRRE as

Numerical evaluation

In this section, a Monte Carlo simulation will be conducted to examine the performance of the RPEs in the QPRRE along with the QLE.

Simulation layout

In the QPRM, the response variable is generated from the quasi-Poisson distribution \(({\mu }_{i}, \gamma )\), where

where \({\beta }_{p}\) shows the regression parameters of the QPRM. These parameters are selected under the condition of \(\sum_{j=1}^{p+1}{\beta }_{j}^{2}=1\). And, the following formula is used to generate the correlated regressors.

where \({\rho }^{2}\) shows the correlation between the regressors and \({z}_{ij}\) are the independent standard normal pseudo-random numbers. We consider different values of \(\rho\) corresponding to 0.80,0.90,0.95 and 0.99. We also consider different values of \(n,p, \gamma\). Here, \(n\) represents the sample size that is assumed to be 25, 50, 100, 150, 200 for p = 3, 6 and n = 50, 100, 150, 200 for p = 12, \(p\) shows the number of regressors that are assumed to be 3, 6, 12 and \(\gamma\) indicates the dispersion parameter that is taken to be 2, 4, 6. This simulation is replicated 2000 times with the different combinations of \(n, p, \gamma , \rho\). To check the dominance of our proposed ridge estimator with different RPEs, we use the MSE as the performance evaluation method defined by

where \(V\) represents the number of replications and \(\left({\widehat{\beta }}_{i}-\beta \right)\) shows the difference between the true parameter and predicted vectors of the proposed estimator and QLE at ith replication. The R programming language is used for all calculations related to our study.

Simulation results and discussions

The estimated MSEs of the QPRREs are given in Tables 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18. We evaluate the performance of different RPEs for the QPRRE as compared to the QLE in the presence of multicollinearity, the sample size, dispersion and the number of regressors.

The simulation findings extracted from Tables 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36 are summarized as follows:

-

(i)

The basic purpose of this simulation study is to study the performance of our proposed RPEs for the QPRRE in the presence of multicollinearity. As the multicollinearity level increases with fixed the number of predictors, the sample size, and dispersion, the estimated MSEs increase for all the estimation methods under study. However, at the high level of multicollinearity and with a larger sample size, mostly the QPRRE with RPEs \({\widehat{k}}_{3},\, {\widehat{k}}_{5},\, {\widehat{k}}_{9},\, {\widehat{k}}_{11},\, {\widehat{k}}_{12}\, and \,{\widehat{k}}_{16}\) gives the smaller MSEs as compared to the QPRRE with other RPEs and the QLE.

-

(ii)

The other factor that may affect the estimated MSEs of the QPRRE and the QLE is the number of regressors. According to the results of the simulation, we noticed that the estimated MSEs of the estimators and the number of regressors are directly proportional; it means that when the regressors increase by fixing the other factors, the estimated MSEs of QPRRE and the QLE also increase.

-

(iii)

When we see the effect of sample size on the MSEs of the estimators, it is observed that the relation between the estimated MSEs and the sample size is inverse. As the sample size increases by fixing the other factors the estimated MSEs decrease. Results show that mostly the QPRRE with RPEs \({\widehat{k}}_{3},\, {\widehat{k}}_{5},\, {\widehat{k}}_{9},\, {\widehat{k}}_{11},\, {\widehat{k}}_{12} \,and\, {\widehat{k}}_{16}\) performs well as compared to the other RPEs and the QLE based on minimum estimated MSE.

-

(iv)

According to the results, in every situation such as the different combinations of multicollinearity, different sample size, different number of explanatory variables and different levels of dispersion, the proposed QPRRE with different RPEs outperforms the QLE based on the minimum MSE.

-

(v)

According to the findings of simulation for all the conditions under study, the estimated MSE of the QLE is always greater than all suggested RPEs for QPRRE. We also noticed that the suggested QPRRE is significantly decreasing the estimated MSE. Finally, it is concluded that the proposed RPEs for the QPRRE perform well and give better results than the QLE due to the minimum estimated MSE under certain conditions. Mostly, the QPRRE with RPEs \({\widehat{k}}_{3},\, {\widehat{k}}_{5},\, {\widehat{k}}_{9},\, {\widehat{k}}_{11},\, {\widehat{k}}_{12}\, and\, {\widehat{k}}_{16}\) gives better results than the QPRRE with other RPEs. There are some situations, where the QPRRE with other RPEs i.e. \({\widehat{k}}_{4}{-}{\widehat{k}}_{8},\, and\, {\widehat{k}}_{15}\) gives better performance than others. By the evidence of simulation results, the study that is reported in this work is that the QPRR outperforms the QLE in the presence of multicollinearity. So, we suggest the researchers use QPRRE with the best biasing parameters \({\widehat{k}}_{3},\, {\widehat{k}}_{5},\,{\widehat{k}}_{9},\, {\widehat{k}}_{11},\, {\widehat{k}}_{12} \,and\, {\widehat{k}}_{16}\) that minimize the effects of the multicollinearity in QPRM due to their robust patterns.

Application: apprentice migration dataset

In this section, we explore the superiority of proposed estimators through a real-life example. We use this dataset46 to determine the performance of the proposed estimators. This real-life dataset is about apprentice migration between 1775 and 1799 to Edinburgh from Scotland. The dataset consists of a sample size of 33 (\(n=33)\) with one explained variable and 4 predictors \(\left(p=4\right).\) The explained variable \(y\) denotes the apprentice and the independent variable \({x}_{1}\) represents the distance, \({x}_{2}\) represents the population, \({x}_{3}\) represents the degree of urbanization and the \({x}_{4}\) represents the direction from Edinburgh. The fitness of the QP distribution is determined by the estimated value of dispersion and the value of dispersion for this real-life data set was found to be 9651.93. By considering the dispersion value, it can be seen that this data of the concerned application is over-dispersed, so QPRM is more appropriate than the PRM.

As there are four predictors, there may be a chance of multicollinearity. To test multicollinearity among predictors, we consider the most popular criteria i.e. the condition index (CI). The CI is the square root of the ratio of minimum eigenvalue and maximum eigenvalue of the independent variables matrix. The CI value is 63.81 which is greater than 30, this shows that severe multicollinearity exists among the independent variables.

The QPRM estimates are obtained by using the QLE. The QLE can give better results if the predictors are uncorrelated. In this case, when the predictors are highly correlated with each other, so, the QLE does not provide good estimates. So, the QPRRE is considered to overcome the effect of multicollinearity. Table 37 shows the estimated coefficients and scalar estimated MSEs of the QLE and QPRRE under proposed RPEs. The QPRM estimates using the QLE and QPREE are obtained using Eqs. (6) and (10) respectively. The estimated scalar MSEs of the QLE and the QPRRE are obtained by using Eqs. (9) and (14) respectively. According to the results of Table 37, it is observed that the MSE of the QPRRE with RPEs is less than the MSE of the QLE. It means that the QPRRE performs well and gives the best results than the QLE. More specifically, the performance of the QPRRE with RPE \({k}_{7}\) is best as compared to the QPRRE with other RPEs and the QLE based on minimum MSE.

In real datasets, sometimes the MSE criteria do not give good predictive performance of the estimators47,48,49. So, another model assessment criterion is recommended is the cross-validation (CV). The CV criteria are also known as the prediction sum of squares (PRESS/CV(1)) or a jackknife fit at given explanatory variables50. This criterion has also some limitations and different types51. The CV was considered by various authors for different models to assess the performance of their proposed estimators47,48,49,52,53. Here we consider the kfold CV and PRESS criterion for further evaluation of the proposed RPEs in the QPRRE. The procedure to compute the CV, we suggest to see the work of Akram et al.52 and Amin et al.53. While the PRESS is computed based on Pearson residuals for the QLE and QPRRE respectively as

where \({\chi }_{i}=\frac{{y}_{i}-{\widehat{\mu }}_{i}}{\sqrt{\widehat{\gamma }{\widehat{\mu }}_{i}}}\), and \({h}_{ii}\) are the ith diagonal elements of the hat matrix computed for the QLE and

, where \({\chi }_{ki}=\frac{{y}_{i}-{\widehat{\mu }}_{ki}}{\sqrt{\widehat{\gamma }{\widehat{\mu }}_{ki}}}, {\widehat{\mu }}_{ki}\) is the predicted response of the QPRRE under different RPEs and \({h}_{kii}\) are the diagonal elements of the hat matrix obtained for the QPRRE. The estimated results for CV and PRESS for the QLE and QPRRE with all RPEs are given in Table 37. Based on CV results, it can be seen that the QPRRE with RPEs \({k}_{3}, {k}_{9}{-}{k}_{12}\) and \({k}_{16}\) gives a better performance as compared to the QPRRE with other RPEs as well as the QLE. When we look at the results of the PRESS criterion, we observed that the QPRRE with RPEs \({k}_{1}{-}{k}_{8}\) show a better performance than others. In view of simulation and application findings and we suggest the researchers to mitigate the effects of multicollinearity in QPRM, use the QPRRE with RPEs \({k}_{3}{-}{k}_{9}\), \({k}_{11}, {k}_{12}\) and \({k}_{16}\).

Conclusion

When the dependent variable is in count form and over-dispersed, then the QPRM can be used for modeling such types of response variables. In this study, we proposed different RPEs for the QPRRE to minimize the problems of multicollinearity among the explanatory variables. To determine the superiority of the proposed ridge estimators, we conduct the simulation study under different parametric conditions such as different sample sizes, different numbers of predictor variables, different dispersion levels and different degrees of multicollinearity. Furthermore, the evaluation of the performance of proposed ridge estimators is done by analyzing the real-life dataset related to Apprentice migration data. According to the results of the simulation study and real-life dataset, it is observed that the QPRRE with some available and proposed RPEs outperforms as compared to the QLE in the presence of severe multicollinearity. The provided evidence showed that the QPRRE is a better estimation method than the QLE to combat the problem of multicollinearity among the explanatory variables for counts data with over-dispersion.

Data availability

All the datasets used in this paper are available from the corresponding author upon request.

References

Nelder, J. A. & Wedderbum, R. W. M. Generalized linear models. J. R. Stat. Soc. Ser. A 135(3), 370–384 (1972).

Agresti, A. An Introduction to Categorical Data Analysis 2nd edn. (Wiley, 2007).

Myers, R. H., Montgomery, D. C., Vining, G. G. & Robinson, T. J. Generalized Linear Models: With Applications in Engineering and the Sciences (Wiley, 2012).

Chatla, S. B. & Shmueli, G. Efficient estimation of COM-Poisson regression and generalized additive model. Comput. Stat. Data Anal. 121, 71–88 (2018).

Francis, R. A. et al. Characterizing the performance of the Conway–Maxwell Poisson generalized linear model. Risk Anal. 32(1), 167–183 (2012).

Sturman, M. C. Multiple approaches to analysing count data in studies of individual differences: The propensity for type 1 error, illustrated with the case of absenteeism prediction. Educ. Psychol. Meas. 59(3), 414–430 (1999).

Mansson, K. & Shukur, G. A Poisson ridge regression estimator. Econ. Model. 28(4), 1475–1481 (2011).

Boveng, P. L. et al. The abundance of harbor seals in the Gulf of Alaska. Mar. Mammal Sci. 19(1), 111–127 (2003).

Hilbe, J. M. Negative Binomial Regression (Cambridge University Press, 2007).

Conway, R. W. & Maxwell, W. L. A queuing model with state dependent service rates. J. Ind. Eng. 12(2), 132–136 (1962).

Guikema, S. D. & Goffelt, J. P. A flexible count data regression model for risk analysis. Risk Anal. 28(1), 213–223 (2008).

Wedderburn, R. W. Quasi-likelihood functions, generalized linear models, and the Gauss–Newton method. Biometrika 61(3), 439–447 (1974).

Ver Hoef, J. M. & Boveng, P. L. Quasi-Poisson vs. negative binomial regression: How should we model over dispersed count data. Ecology 88(11), 2766–2772 (2007).

Lee, Y. & Nelder, J. A. The relationship between double-exponential families and extended quasi-likelihood families, with application to modelling Geissler’s human sex ratio data. J. R. Stat. Soc. Ser. C Appl. Stat. 49(3), 413–419 (2000).

Frisch, R. Statistical Confluence Analysis by Means of Complete Regression Systems Vol. 5 (Universitetets Okonomiske Institute, 1934).

Amin, M., Akram, M. N. & Kibria, B. M. G. A new adjusted Liu estimator for the Poisson regression model. Concurr. Comput. Pract. Exp. 33(20), e6340 (2021).

Arum, K. C., Ugwuowo, F. I. & Oranye, H. E. Robust modified jackknife ridge estimator for the Poisson regression model with multicollinearity and outliers. Sci. Afr. 17, e01386 (2022).

Lukman, A. F., Adewuyi, E., Mansson, K. & Kibria, B. M. G. A new estimator for the multicollinearity Poisson regression model: Simulation and application. Sci. Rep. 11(1), 1–11 (2021).

Oranye, H. E. & Ugwuowo, F. I. Modified jackknife Kibria-Lukman estimator for the Poisson regression model. Concurr. Comput. Pract. Exp. 34(6), e6757 (2022).

Hoerl, A. E. & Kennard, R. W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 12(1), 55–67 (1970).

Dorugade, A. V. & Kashid, D. N. Alternative method for choosing ridge parameter for regression. Appl. Math. Sci. 4(9), 447–456 (2010).

Dorugade, A. V. New ridge parameters for ridge regression. J. Assoc. Arab Univ Basic Appl. Sci. 15(1), 94–99 (2014).

Gunst, R. F. & Mason, R. L. Biased estimation in regression: An evaluation using mean squared error. J. Am. Stat. Assoc. 72(359), 616–628 (1977).

Hoerl, A. E., Kennard, R. W. & Baldwin, K. F. Ridge regression: Some simulations. Commun. Stat. Simul. Comput. 4(2), 105–123 (1975).

Khalaf, G. & Shukur, G. Choosing ridge parameter for regression problems. Commun. Stat. Theorem Methods 34(5), 1177–1182 (2005).

Kibria, B. M. G. Performance of some new ridge regression estimators. Commun. Stat. Simul. Comput. 32(2), 419–435 (2003).

Nordberg, L. A procedure for determination of a good ridge parameter in linear regression. Commun. Stat. Simul. Comput. 11(3), 285–309 (1982).

Saleh, A. M. E., Arashi, M. & Kibria, B. M. G. Theory of Ridge Regression with Applications Vol. 285 (Wiley, 2019).

Algamal, Z. Y. Performance of ridge estimator in inverse Gaussian regression model. Commun. Stat. Theory Methods 48(15), 3836–3849 (2019).

Mansson, K. On ridge estimators for the negative binomial regression model. Econ. Model. 29, 178–184 (2012).

Muniz, G. & Kibria, B. M. G. On some ridge regression estimators: Empirical comparisons. Commun. Stat. Simul. Comput. 38(3), 621–630 (2009).

Amin, M., Qasim, M., Afzal, S. & Naveed, K. New ridge estimators in the inverse Gaussian regression: Monte Carlo simulation and application to chemical data. Commun. Stat. Simul. Comput. 51(10), 6170–6187 (2022).

Rahsad, N. K., Hamood, N. M. & Algamal, Z. Y. Generalized ridge estimator for the negative binomial regression model. J. Phys. Conf. Ser. 18(97), 1–9 (2021).

Kaçiranlar, S. & Dawoud, I. On the performance of the Poisson and the negative binomial ridge predictors. Commun. Stat. Simul. Comput. 47(6), 1751–1770 (2018).

Rashad, N. K. & Algamal, Z. Y. A new ridge estimator for the Poisson regression model. Iran. J. Sci. Technol. Trans. A Sci. 43, 2921–2928 (2019).

Alobaidi, N. N., Shamany, R. E. & Algamal, Z. Y. A new ridge estimator for the negative binomial regression model. Thailand Stat. 19(1), 115–124 (2021).

Sami, F., Amin, M. & Butt, M. M. On the ridge estimation of the Conway–Maxwell Poisson regression model with multicollinearity: Methods and applications. Concurr. Comput. Pract. Exp. 34(1), e6477 (2022).

Efron, B. Double exponential families and their use in generalized linear regression. J. Am. Stat. Assoc. 81, 709–721 (1986).

Istiana, N., Kurnia, A., & Ubaidillah, A. Quasi Poisson model for estimating under-five mortality rate in small area. In ICSA 2019. (Bogor, Indonesia, 2019).

Gelfand, A. E. & Dalal, S. R. A note on overdispersed exponential families. Biometrika 77(1), 55–64 (1990).

McCullagh, P. & Nelder, J. A. Generalized Linear Models 2nd edn. (Chapman and Hall, 1989).

Wakefield, J. Bayesian and Frequentist Regression Methods (Springer, 2013).

Mustafa, S., Amin, M., Akram, M. N. & Afzal, N. On the performance of link functions in the beta ridge regression model: Simulation and application. Concurr. Comput. Pract. Exp. 34(18), e7005 (2022).

Amin, M., Qasim, M., Ullah, M.A. & Afzal, S. On the performance of some ridge estimators in gamma regression. Stat. Pap. 61, 997–1026 (2020).

Akram, M. N., Kibria, B. M. G., Abonazel, M. R. & Afzal, N. On the performance of some biased estimators in the gamma regression model: Simulation and applications. J. Stat. Comput. Simul. 92(12), 2425–2447 (2022).

Lovett, A. & Flowerdew, R. Analysis of count data using Poisson regression. Prof. Geogr. 41, 190–198 (1989).

Amini, M. & Roozbeh, M. Optimal partial ridge estimation in restricted semiparametric regression models. J. Multivar. Anal. 136, 26–40 (2015).

Roozbeh, M. Optimal QR-based estimation in partially linear regression models with correlated errors using GCV criterion. Comput. Stat. Data Anal. 117, 45–61 (2018).

Roozbeh, M., Arashi, M. & Hamzah, N. A. Generalized cross-validation for simultaneous optimization of tuning parameters in ridge regression. Iran. J. Sci. Technol. Trans. A Sci. 44, 473–485 (2020).

Hastie, T. J. & Tibshirani, R. J. Generalized Additive Models (Chapman and Hall, 1990).

McLachlan, G. J. & Peel, D. Finite Mixture Models (Wiley, 2004).

Akram, M. N., Amin, M. & Amanullah, M. James Stein estimator for the inverse Gaussian regression model. Iran. J. Sci. Technol. Trans. A Sci. 45, 1389–1403 (2021).

Amin, M., Akram, M. N. & Majid A. On the estimation of Bell regression model using ridge estimator. Commun. Stat. Simul. Comput. 52(3), 854–867 (2023).

Funding

The study was funded by Researchers Supporting Project number (RSPD2023R749), King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

A.S. writes the first draft and does the simulation study. M.A. Basic Idea, Supervision, and Review. W.E. Do the example results and financial support. M.F. Do the programing and review the whole study.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shahzad, A., Amin, M., Emam, W. et al. New ridge parameter estimators for the quasi-Poisson ridge regression model. Sci Rep 14, 8489 (2024). https://doi.org/10.1038/s41598-023-50085-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-50085-5

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.