Abstract

In magnetic resonance imaging (MRI), the perception of substandard image quality may prompt repetition of the respective image acquisition protocol. Subsequently selecting the preferred high-quality image data from a series of acquisitions can be challenging. An automated workflow may facilitate and improve this selection. We therefore aimed to investigate the applicability of an automated image quality assessment for the prediction of the subjectively preferred image acquisition. Our analysis included data from 11,347 participants with whole-body MRI examinations performed as part of the ongoing prospective multi-center German National Cohort (NAKO) study. Trained radiologic technologists repeated any of the twelve examination protocols due to induced setup errors and/or subjectively unsatisfactory image quality and chose a preferred acquisition from the resultant series. Up to 11 quantitative image quality parameters were automatically derived from all acquisitions. Regularized regression and standard estimates of diagnostic accuracy were calculated. Controlling for setup variations in 2342 series of two or more acquisitions, technologists preferred the repetition over the initial acquisition in 1116 of 1396 series in which the initial setup was retained (79.9%, range across protocols: 73–100%). Image quality parameters then commonly showed statistically significant differences between chosen and discarded acquisitions. In regularized regression across all protocols, ‘structured noise maximum’ was the strongest predictor for the technologists’ choice, followed by ‘N/2 ghosting average’. Combinations of the automatically derived parameters provided an area under the ROC curve between 0.51 and 0.74 for the prediction of the technologists’ choice. 
It is concluded that automated image quality assessment can, despite considerable performance differences between protocols and anatomical regions, contribute substantially to identifying the subjective preference in a series of MRI acquisitions and thus provide effective decision support to readers.

Introduction

Whole-body magnetic resonance imaging (MR, MRI) is a key imaging technique in population-based cohort studies, due to its excellent spatial resolution and soft-tissue contrast, its capacity for standardization, and the absence of ionizing radiation. Several studies rely on this imaging modality to generate comprehensive data repositories that can provide insights into general health and are a valuable resource for radiomics. These studies include the German National Cohort (NAKO or NAKO Health Study), the UK Biobank (UKBB), the Multi-Ethnic Study of Atherosclerosis (MESA), the Framingham Heart Study (FHS), and the Study of Health in Pomerania (SHIP)1,2.

Standardized image acquisition and reproducible image quality are crucial in such studies to ensure consistent post-processing, including automated segmentation and feature extraction. MRI protocol repetitions pose a particular challenge to these objectives from a quality control standpoint due to their variable origins and presentations. Repeating MRI protocols is generally considered in case of an unsatisfactory initial image acquisition, whose quality could have been degraded for multiple reasons. Examples include, depending on the protocol in question: an improper hardware or software setup, blurring from bulk patient or organ motion, synchronization failures such as mis-triggering in the cardiac cycle, susceptibility and off-resonance artifacts, fat–water shift and swaps, anatomic coverage issues, or premature scan abortions. In a sufficiently large cohort study, these and other different quality impairments will inevitably occur, and their appearance will be multifaceted. So will be their possible remedies, if these can be determined. For quality control and imaging optimization, it is essential to deduce the root cause of quality-impaired image acquisitions, and, if the protocol was repeated, to understand the quality differences within the series of acquisitions.

The current workflow in most studies and clinical departments involves a visual assessment of all images by radiologic technologists or radiologists during or directly after acquisition. Based upon this subjective expert assessment, the respective protocol may then be repeated, possibly with updated instructions given to the subject if appropriate. The preferred image data is then chosen based on subjective assessment. This approach can be time-consuming and therefore costly, especially for large cohort studies. It also lacks standardization, is likely error-prone, and may cause further discomfort among participants or patients due to prolonged examinations. Computerized tools for quality control and decision support in MRI are well-suited to improve on this approach and address its shortcomings. However, existing solutions generally have a narrower focus by being confined to a specific MRI protocol, anatomical domain, or artifact type. A conceptually broader automated image quality assessment using quantitative parameters has already demonstrated the ability to predict the need for repeating an acquisition, based on the radiologic technologists’ visual assessment in the NAKO as a reference3. The challenge remains to automatically choose the preferred acquisition among multiple image acquisitions in a subsequent step using a comparably unrestricted approach.

Recognizing this opportunity, we primarily aimed to identify quantitative image quality parameters that can differentiate between acquisitions that were preferred and chosen by human readers as opposed to those that were discarded – yielding a prediction tool suitable for automation. A secondary objective was to learn whether MRI protocol repetitions in the NAKO improved the perceived image quality.

Methods and materials

Study design and population

Our project was designed as a post-hoc analysis of data from the NAKO Health Study. The NAKO is an ongoing, prospective, multicenter, population-based cohort study conducted by a network of 25 institutions at 18 regional examination sites in Germany. Its main objective is to investigate risk factors for the development of common chronic diseases such as cancer, diabetes, cardiovascular, neurodegenerative/psychiatric, respiratory, and infectious diseases4,5. The baseline assessment was conducted between 2014 and 2019, and 205,415 participants from the general population aged 19 to 74 years were enrolled. They received various medical and psychological assessments, including interviews, questionnaires, and physical examinations. Of these participants, 30,861 were also enrolled in the NAKO MRI study, conducted at five dedicated imaging centers. For our study, we considered all available data at the time of investigation, which comprised examinations from 11,347 participants up to December 31, 2016. This excludes examinations that were aborted before the first MRI protocol was fully recorded and examinations of participants who withdrew consent.

The NAKO Use and Access Committee approved this study based on the participants’ informed consent, accordance with the aims of the NAKO Health Study, and ethical approval from the Ethics Committee of the Medical Faculty of the University of Heidelberg (S-843/2020). This study conformed to the ethical guidelines of the 1964 Declaration of Helsinki and its later amendments.

MR imaging

MRI was performed with 3T whole-body MR scanners (MAGNETOM Skyra, Siemens Healthcare, Erlangen, Germany) running an identical software version. The baseline examination program consisted of a whole-body scan with twelve protocols from four focus groups (neurodegenerative, cardiovascular, thoracoabdominal, and musculoskeletal) without intravenous contrast agent application (Fig. 1). A detailed description of the rationale, design, and technical background of the NAKO MRI study has been provided previously1. All image acquisitions were performed following a standard operating procedure (SOP) by radiologic technologists who were specifically trained and certified for the NAKO MRI study. The technologists were instructed to repeat a protocol if anatomic coverage did not meet the SOP, if severe image artifacts occurred, or if the image quality was unsatisfactory for other reasons. Participants were given detailed information about the scanning procedure and were instructed to move as little as possible and to follow breathing instructions.

In our analysis, we controlled for setup changes between acquisitions to obtain a subsample in which the repetitions were performed under identical technical conditions as the initial acquisitions. The following technical parameters were considered: radiofrequency (RF) coil configuration (variations in RF coils and in the selection of receive RF coil elements), field of view size, slice position (field of view shifted along the x/y/z axis of the participant), and slice orientation (field of view rotated or angled differently).
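The setup control described above amounts to keeping only those series in which every repetition matches the initial acquisition on all four technical parameters. A minimal sketch of such a filter follows; the `Acquisition` record and all of its field names are hypothetical and are not taken from the NAKO processing pipeline, and exact equality is assumed where a real implementation would probably allow a tolerance.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Acquisition:
    """Hypothetical record of one acquisition; all field names are illustrative."""
    series_id: str
    order: int                                   # 0 = initial acquisition, 1+ = repetitions
    coil_config: Tuple[str, ...]                 # selected receive RF coil elements
    fov_size: Tuple[float, float, float]
    slice_position: Tuple[float, float, float]   # shift along x/y/z
    slice_orientation: Tuple[float, ...]         # rotation or angulation

    def setup(self):
        """The four technical parameters that define the setup."""
        return (self.coil_config, self.fov_size,
                self.slice_position, self.slice_orientation)


def retains_initial_setup(series: List[Acquisition]) -> bool:
    """True if every repetition has the same setup as the initial acquisition."""
    ordered = sorted(series, key=lambda acq: acq.order)
    initial = ordered[0].setup()
    # Exact equality is an assumption; real data may call for a tolerance.
    return all(acq.setup() == initial for acq in ordered[1:])
```

Applied to each series, this yields the subsample in which differences between acquisitions cannot be attributed to a changed coil configuration, field of view, slice position, or slice orientation.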

Automated image quality assessment and choosing a preferred acquisition

After general data management and basic automated quality control, including verification of data completeness, conformance to predefined protocol parameters, and data uniqueness, all image series entered a processing pipeline to calculate two to eleven image(-based) quality parameters as previously reported (number varying between protocols)3. The results from this automated image quality assessment could be visualized through a web-based thin client that presented the image series along with the corresponding parameter values. The assessment comprised a universal quality index (‘UQI’) for general quality inspection, image sharpness, global and local signal-to-noise ratio (‘SNR’ and ‘specific SNR’), maximum and average estimates for structured image noise, maximum and average estimates of Nyquist ghosting levels (‘N/2 ghosting’), functional MRI (fMRI) signal drift and variation (‘variation over time’), and a geometric ratio between foreground and background (‘foreground ratio’). The universal quality index provides a crude, non-specific indication of image quality by considering original, noise-filtered, and edge-filtered image versions to calculate a score that increases with image noise and decreases with image blur3. If a protocol was repeated, the technologists defined the acquisition that they considered to be of better image quality through the thin client, as per SOP going solely by subjective image impression as long as the field of view was set correctly (Fig. 2). Only the chosen acquisition was then added to the main database for general use.

Examples of discarded (left) and chosen (right) acquisitions from four different protocols. (a) 2D FLAIR (considerable vs. no bulk patient motion), (b) MRA 3D SPACE STIR (considerable vs. moderate breathing motion), (c) Cine SSFP SAX (mistriggering vs. correct electrocardiographic gating), (d) T1w 3D VIBE DIXON (with vs. without fat–water swap artifact in the liver). The images in each pair were acquired with identical setups and taken from identical participants. In the NAKO MRI study, protocols were repeated if the initial acquisition did not meet the SOP, if severe image artifacts occurred, or if the image quality was otherwise unsatisfactory to the examining radiologic technologist.

Visual image quality rating

In the NAKO MRI study, board-certified radiologists performed a visual image quality rating for image stacks from chosen acquisitions as published previously1. The rating adhered to a detailed criteria catalog that considered anatomical coverage and differentiable structures along with a diverse range of potential artifacts, such as susceptibility, off-resonance, fat–water shift and swaps, banding, pulsation, and others. Scores were assigned according to a 3-point Likert scale: (1) ‘excellent’ image quality not impaired by artifacts, images appropriate for data post-processing; (2) ’good’ image quality with limited impairment by artifacts, images still appropriate for data post-processing; (3) ‘poor’ image quality due to artifacts or insufficient coverage, images generally not appropriate for post-processing. The protocols used for functional or quantitative imaging (Resting State EPI BOLD, MOLLI SAX, and Multiecho 3D VIBE) were not rated. The criteria catalog is shown in Supplemental Material Table S1. For our analysis, we examined the differences in quantitative image quality parameters between the visual quality ratings while, in the same manner as described above, controlling for setup changes between initial acquisitions and repetitions.

Statistics

Data on participants, acquisitions, and repetitions are presented as counts and percentages.

Given repetitions with the same setup as the initial acquisition, the mean differences in image quality parameters between discarded acquisitions and chosen acquisitions were assessed by paired t-tests or signed rank tests after testing for normality using Shapiro–Wilk tests. The mean differences in image quality parameters between the ordinal visual quality ratings were assessed by Kruskal–Wallis tests and limited to parameters that were available for > 70% of the acquisitions in the relevant subsample (‘UQI’, ‘SNR’, ‘sharpness’, and ‘foreground ratio’) to achieve sufficient statistical power.
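The choice between the paired t-test and the signed rank test can be illustrated with SciPy. This is a sketch under stated assumptions and not the SAS/R code used in the study: normality is tested on the paired differences, the normality threshold is set to 0.05, and the function name `paired_difference_test` is hypothetical.

```python
import numpy as np
from scipy import stats


def paired_difference_test(discarded, chosen, alpha=0.05):
    """Compare one quality parameter between paired discarded and chosen acquisitions.

    Shapiro-Wilk is applied to the paired differences (an assumption); a paired
    t-test is used if normality is not rejected, otherwise the Wilcoxon
    signed-rank test.
    """
    discarded = np.asarray(discarded, dtype=float)
    chosen = np.asarray(chosen, dtype=float)
    diff = chosen - discarded
    p_normal = stats.shapiro(diff).pvalue
    if p_normal >= alpha:
        name = "paired t-test"
        p_value = stats.ttest_rel(chosen, discarded).pvalue
    else:
        name = "Wilcoxon signed-rank test"
        p_value = stats.wilcoxon(chosen, discarded).pvalue
    return {"test": name,
            "mean_difference": float(diff.mean()),
            "p_value": float(p_value)}
```

The function would be called once per image quality parameter, with the two arrays aligned so that each position refers to the same series.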

Associations of single image quality parameters with outcome chosen vs. discarded were evaluated by logistic regression models providing odds ratios (OR) with corresponding 95% confidence intervals (CI) per standard deviation of image quality parameters. To investigate the effect of inter-individual variation, generalized linear mixed models with logit link and random intercept per participant were calculated.
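For reference, an odds ratio "per standard deviation" follows from the logistic model when the parameter is standardized before fitting. The notation below ($x$, $\bar{x}$, $\sigma_x$, $\beta$, $\widehat{\mathrm{SE}}$) is introduced here for illustration only, and the Wald-type interval is an assumption, since the text does not state how the confidence intervals were derived.

```latex
\begin{align}
\operatorname{logit} P(\text{chosen} \mid x) &= \beta_0 + \beta \, \frac{x - \bar{x}}{\sigma_x}, \\
\mathrm{OR}_{\text{per SD}} &= e^{\beta}, \\
95\%\ \mathrm{CI} &= \left[ e^{\beta - 1.96\,\widehat{\mathrm{SE}}(\beta)},\; e^{\beta + 1.96\,\widehat{\mathrm{SE}}(\beta)} \right].
\end{align}
```

An OR above 1 then means that the odds of an acquisition being chosen increase when the parameter is one standard deviation higher.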

Discriminative performance of the combined set of image quality parameters to distinguish between chosen and discarded acquisitions was assessed by regularized logistic regression with an elastic net penalty and hyperparameters computed by fivefold cross-validation. We calculated three models with alpha values corresponding to LASSO regression, ridge regression, and a balanced blend thereof (Elastic Net Regression). All models were run on 1000 bootstrap samples of the data to quantify the relative importance of the respective image quality parameters by their selection frequency over all samples. Area under the Receiver Operating Characteristic (ROC) curve (AUC) served as the measure of discriminative performance.
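The bootstrap-based selection frequency can be sketched with scikit-learn. This is an illustration and not the software used in the study (SAS and R): the function name is hypothetical, `C` and `l1_ratio` are fixed for brevity instead of being tuned by fivefold cross-validation, and the AUC is computed in-sample on each bootstrap sample. Setting `l1_ratio` to 1, 0, or 0.5 corresponds to LASSO, ridge, and a balanced elastic net, respectively.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.preprocessing import StandardScaler


def bootstrap_selection_frequency(X, y, l1_ratio=0.5, C=0.1, n_boot=200, seed=0):
    """Refit an elastic-net logistic model on bootstrap samples.

    Returns the fraction of samples in which each parameter received a
    non-zero coefficient, and the in-sample AUC of each refit.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=int)
    rng = np.random.default_rng(seed)
    selected = np.zeros(X.shape[1])
    aucs = []
    for _ in range(n_boot):
        idx = rng.integers(0, len(y), size=len(y))   # resample with replacement
        Xb, yb = X[idx], y[idx]
        if np.unique(yb).size < 2:                   # both classes are needed to fit
            continue
        Xb = StandardScaler().fit_transform(Xb)      # penalties assume comparable scales
        model = LogisticRegression(penalty="elasticnet", solver="saga",
                                   l1_ratio=l1_ratio, C=C, max_iter=5000)
        model.fit(Xb, yb)
        selected += (model.coef_.ravel() != 0)
        aucs.append(roc_auc_score(yb, model.decision_function(Xb)))
    return selected / len(aucs), np.array(aucs)
```

With a pure ridge penalty no coefficient is shrunk exactly to zero, so every selection frequency equals 100% and only the LASSO and elastic net variants are informative for variable importance.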

Analyses were conducted for all protocols combined, as well as stratified by protocol. P-values < 0.05 were considered to indicate statistical significance. SAS version 9.4 and R version 4.1 were used for all analyses.

Results

In 1,359 (12.0%) of the 11,347 participants, one or more initial acquisitions of a protocol were followed by at least one repetition. With these included, 135,845 acquisitions were performed, of which 134,239 (98.8%) were initial acquisitions and 1606 (1.2%) were repetitions. Most protocols were repeated only once: there were 1558 (1.2%) first repetitions, 46 (0.03%) second repetitions, and 2 (0.001%) third repetitions. Table 1 details repetition frequencies on a per-protocol level. Of all repetitions, 807 (50.2%) retained the setup of their respective initial acquisition with no changes to RF coil configuration, FOV size, slice position, or slice orientation. These parameters were changed in 81 (5.0%), 53 (3.3%), 791 (49.3%), and 203 (12.6%) repetitions, respectively.

The technologists were asked to choose a preferred acquisition for 2,342 series of initial acquisitions and repetitions. This is more than the underlying 1,558 initial acquisitions shown in Table 1 due to the individual assessment of the three orientations 2Ch, 3Ch, and 4Ch in the Cine SSFP LAX protocol used for cardiac imaging. The sample size decreased to 1,396 (59.6%) when limited to repetitions that retained the initial setup. For this particular subsample, the technologists chose the repetition over the initial acquisition in 1,116 (79.9%) instances, with a range of 73–100% across protocols (Table 2). Considering all acquisitions without controlling for setup variations, this share increased to 90.4%.

Prediction of the technologists’ choice based on the quantitative image quality parameters

In an analysis limited to series where all repetitions retained the setup of the initial acquisition, the quantitative image quality parameters largely showed significant differences between chosen and discarded acquisitions when considering all protocols (Table 3). Only the parameter ‘UQI’ did not differentiate with statistical significance for this subsample, for which the parameters ‘drift’ and ‘variation over time’ were not tested due to insufficient sample size. Testing stratified by protocol revealed that differences were predominantly significant for protocols from the neurodegenerative and cardiac focus groups, which were also the ones with the largest sample sizes.

In logistic regression, the single image quality parameters showed varying associations with the outcome chosen vs. discarded (Table 4). The highest observed OR was 2.53 (p = 0.23) for ‘sharpness’ in the MOLLI protocol. In generalized linear mixed models, an effect of inter-individual variation could not be established (variance of random effects equal to zero).

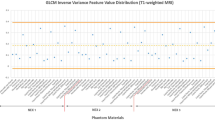

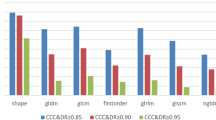

In regularized regression of the combined set of image quality parameters with the outcome chosen vs. discarded (Table 5), the discriminative performance was low when considering all protocols (excluding the parameter ‘specific SNR’ to minimize missing data), with an AUC of 0.58 (95% CI 0.56, 0.61) for LASSO regression as well as Elastic Net regression, and a similarly low AUC of 0.59 (95% CI 0.56, 0.61) for ridge regression. Stratified by protocol, however, the AUC varied considerably between 0.51 and 0.74, with the best discriminative performance for two protocols from the neurodegenerative focus group: T1w 3D MPRAGE with AUC 0.74 (95% CI 0.64, 0.82) and 2D FLAIR with AUC 0.73 (95% CI 0.68, 0.78). These values were again identical for LASSO regression and Elastic Net regression and only slightly different for ridge regression (Fig. 3, Supplemental Material Fig. S1). Selection frequencies across all protocols on 1000 bootstrap samples (again excluding ‘specific SNR’) showed that the most relevant parameter for distinguishing chosen and discarded acquisitions was the maximum value of ‘structured noise’, followed by the average of ‘N/2 ghosting’. The strongest predictors also differed across the individual protocols (Fig. 4, Supplemental Material: Fig. S2).

ROC curves from regularized regression of the combined set of image quality parameters with the outcome ‘chosen vs. discarded acquisition’ for two protocol examples: (a) T1w 3D MPRAGE (above-average performance), (b) Cine SSFP LAX 4Ch (below-average performance). AUC with 95% CI corresponds to mean AUC and respective percentiles from the distribution over all bootstrap samples. Left to right: LASSO regression, Elastic Net regression, ridge regression.
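The interval described in this caption (mean AUC with percentiles over bootstrap samples) can be sketched in NumPy. As a simplification, the prediction scores are held fixed and only the cases are resampled, whereas the study refit the model on each bootstrap sample; the function names are hypothetical.

```python
import numpy as np


def auc_from_scores(y_true, scores):
    """AUC as the probability that a chosen acquisition outscores a discarded one.

    Ties count one half; this pairwise probability equals the area under the ROC curve.
    """
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)


def bootstrap_auc_ci(y_true, scores, n_boot=1000, level=0.95, seed=0):
    """Mean AUC and percentile interval over bootstrap resamples of the cases."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    aucs = []
    for _ in range(n_boot):
        idx = rng.integers(0, y_true.size, size=y_true.size)
        if y_true[idx].all() or not y_true[idx].any():
            continue  # a resample needs both classes
        aucs.append(auc_from_scores(y_true[idx], scores[idx]))
    lower, upper = np.percentile(aucs, [100 * (1 - level) / 2, 100 * (1 + level) / 2])
    return float(np.mean(aucs)), float(lower), float(upper)
```

An interval whose lower bound stays close to 0.5 indicates that the parameters barely separate chosen from discarded acquisitions for that protocol.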

Variable selection frequencies from regularized regression with the outcome ‘chosen vs. discarded acquisition’: (a) across all protocols on 1000 bootstrap samples*, (b) for T1w 3D MPRAGE (above-average performance), (c) for Cine SSFP LAX 4Ch (below-average performance). Left: LASSO regression, right: Elastic Net regression. As there is no variable selection in ridge regression, all selection frequencies are 100% (therefore not shown). *To minimize skewing towards protocols from the neurodegenerative focus group due to missing data, the parameter ‘specific SNR’ was excluded from potential predictors.

Differences in the quantitative image quality parameters between visual quality ratings

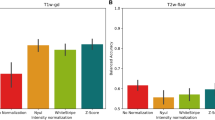

In the analyzed subsample of chosen acquisitions, visual quality ratings were available for 97.2% of the corresponding image stacks. Across all protocols, the radiologists rated 71.8% of the image stacks as ‘excellent’, 22.5% as ‘good’, and 5.7% as ‘poor’. The prevalence of the ‘poor’ rating ranged from 0 to 14.3% across protocols. Significant differences between the visual quality ratings were observed for the parameters ‘UQI’, ‘sharpness’, and ‘foreground ratio’, with decreased values of ‘UQI’ and ‘sharpness’ in lower rated acquisitions and increased values of ‘foreground ratio’ in higher rated acquisitions. No significant differences were observed for ‘SNR’ (Fig. 5). The AUC for identifying ‘poor’ acquisitions from the combined parameters ranged from 0.61 (protocol: Cine SSFP LAX 2Ch) to 0.98 (protocol: T1w 3D MPRAGE).

Box plots and p-values from Kruskal–Wallis tests for quantitative image quality parameters grouped by visual quality ratings of the image stacks from chosen acquisitions. The visual quality rating followed objective criteria to assign a score based on a 3-point Likert scale (1: ‘excellent’, 2: ‘good’, 3: ‘poor’). Each plot considers all protocols for which the respective parameter was calculated. UQI universal quality index, SNR signal-to-noise ratio.
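The group comparison behind these box plots can be reproduced in outline with SciPy's Kruskal–Wallis test. The helper below is a hypothetical sketch and not the study's code; it expects the values of one quality parameter grouped by rating label.

```python
from scipy import stats


def compare_across_ratings(values_by_rating):
    """Kruskal-Wallis test of one quality parameter across visual rating groups.

    `values_by_rating` maps a rating label (e.g. 1, 2, 3) to the parameter
    values of the image stacks that received that rating.
    """
    groups = [list(v) for _, v in sorted(values_by_rating.items()) if len(v) > 0]
    if len(groups) < 2:
        raise ValueError("need at least two non-empty rating groups")
    result = stats.kruskal(*groups)
    return float(result.statistic), float(result.pvalue)
```

Because the test is rank-based, it makes no normality assumption about the parameter values within each rating group.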

Discussion

Our analysis of the NAKO MRI study, comprising 11,347 whole-body examinations with 135,845 acquisitions using twelve protocols, showed that 134,239 (98.8%) were initial acquisitions and 1606 (1.2%) were repetitions. In a subsample limited to repetitions that retained the initial setup, radiologic technologists comparatively assessed 1396 series of initial acquisitions and repetitions to determine the highest-quality images, and by a rate of 79.9% chose a repetition. An automated image quality assessment demonstrated varying classification abilities for this task, with areas under the receiver operating characteristic curve (AUC) between 0.51 and 0.74, depending on parameter selection and protocol.

Existing approaches for the automation of quality control in MRI have a narrower focus by being confined to a specific MRI protocol, anatomical domain, or artifact type: Esteban et al. trained a random forest classifier on 1101 T1w brain scans of a multi-site dataset to predict a binary quality label (‘accept’ or ‘exclude’) from 14 image quality metrics6. Alexander-Bloch et al. used an estimate of microscopic head motion on fMRI time series as a proxy measure for motion during a preceding same-subject T1w acquisition7. Earlier studies on the mitigation of motion artifacts used optical tracking systems to measure and also prospectively correct microscopic head motion8. Ahmad et al. recently adapted a convolutional neural network to classify diffusion-weighted brain images into artifactual and non-artifactual based on motion-induced signal dropout, interslice instability, ghosting, chemical shift, and susceptibility9. Aside from neuroimaging, Tarroni et al. developed an extensive quality control pipeline for cardiac cine short-axis stacks from the UK Biobank considering heart coverage, inter-slice motion, and image contrast10,11. Other published approaches, while notably effective for a specific task, were similarly restricted. In our previous investigation of automated image quality assessment in the NAKO MRI study, we used a broader approach and found that the eleven parameters also used in the present study distinguished initial acquisitions that were deemed necessary to repeat from those that were not, with varying performance between protocols: Using different parameter combinations not narrowed to a specific artifact type (for a subsample again limited to acquisitions that were not associated with subsequent setup changes), the AUC ranged from 0.58 to 0.99, or up to 0.89 after removal of a debatable outlier3. The discriminative performance in that previous investigation was highest for protocols from the neurodegenerative focus group. 
Our current study extends this broad approach to the differentiation of chosen and discarded acquisitions on an intra-participant level. The overall slightly lower performance suggests that image quality differences between chosen and discarded acquisitions are less pronounced than those between repeated and non-repeated initial acquisitions, which were compared on an inter-participant level. This is supported by the possibility that improvements in the same participant were not necessarily achieved, whereas initial acquisitions only qualified for repetition if they were noticeably different from the overall set of high-quality acquisitions. It is, however, somewhat contradicted by the 79.9% choice rate for repetitions, which does suggest obvious quality differences. But this rate may also be partially biased by the choice procedure, which was unblinded to the acquisition order (initial or repetition) as well as the quality parameters, even if those were not to be considered as per SOP. The performance of the regression models may have been better if chosen and discarded acquisitions with setup changes (such as variations in RF coils) and presumably starker image quality differences had been included. Yet for these, the choice was not one of preference but of adherence to the SOP, and an automatic image quality assessment for decision support would therefore be inconsequential.

The discriminative performance of our approach varied considerably between the protocols, and different parameters or their combinations performed better on some protocols than on others. While the distinct physical properties of each parameter and their relevance for a certain protocol will be partly responsible for this, there are additional factors contributing to performance variations: In certain protocols, especially those with a cardiovascular focus, the diagnostically relevant image region is substantially smaller than the overall field of view. A parameter that is averaged across the entire three- or four-dimensional image stack may then, while technically correct, give a skewed representation of the images’ usability. Examples for the Cine SSFP SAX and LAX protocols are the presence of cardiac motion artifacts that typically arise from ineffective electrocardiographic gating (‘mistriggering’) or banding artifacts from off-resonance effects. The reverse may also apply: Low-quality image areas can degrade a parameter score without significantly degrading the images’ usability. For instance, in a T1w 3D MPRAGE protocol, organ motion artifacts from swallowing will negatively influence the overall image sharpness without affecting the depiction of intracranial structures. A solution to this problem could be the regional localization of image quality parameters through the implementation of bounding boxes for areas of interest or through organ segmentations. A similar point was made by Esteban et al.6. To preserve the automatic workflow, however, this would necessitate the automatic creation of such delineations. Appropriate segmentation algorithms are already available and continuously improving with further advances in machine learning12,13,14. Our approach could also be complemented by other automatic quality assessment techniques that assess the images’ metadata, perform cross-correlations between protocols, or investigate recreated k-spaces. 
In the detection of cardiac motion artifacts, for example, a k-space approach tested on UK Biobank data15 achieved an excellent classification performance with an AUC of 0.89. Implemented into scan assistant software, by itself or as one element of a wider quality control pipeline, such an enhanced approach would likely optimize throughput in large cohort studies, screening programs, or clinical imaging by minimizing the need for human intervention. The accompanying image quality harmonization would benefit downstream post-processing algorithms that rely on consistently high image quality for segmentation tasks or computer-aided diagnosis. In its current form, however, our approach is best applicable to the protocols T1w 3D MPRAGE and 2D FLAIR based on their respective AUC values in the regression models and has considerably less value outside neuroimaging. If it can be augmented with the additional techniques described above, further usability seems plausible especially for protocols employed in cardiac imaging, which showed the next highest AUC values in the present study. Nonetheless, it may have to be discarded completely for some protocols.

A strength of this study is that it draws from a large database of MRI examinations that were performed in a highly controlled setting. The subsequent image quality assessment was strictly standardized through its automated methodology, independent of specialized hardware such as phantoms or sensors, and not restricted to a specific MRI protocol or artifact type. A limitation is the aforementioned bias resulting from unblinded choices. Another important limitation is that we were unable to measure the differences in quality parameters between chosen and discarded acquisitions against the intra-participant intra-protocol variabilities in multiple satisfactory acquisitions, since repeated measurements were only performed after an unsatisfactory initial acquisition and only one was then chosen. A further limitation is that the radiologic technologists were able to brief participants before performing a repetition: By reminding them to follow breathing instructions, cease motion, or otherwise better comply with the examination, the technologists introduced a selection bias and gave the repetitions an advantage, as was their responsibility in order to optimize imaging. Independently of these limitations, we showed that several quantitative image quality parameters also differed statistically significantly between the ‘poor’, ‘good’, and ‘excellent’ visual quality ratings assigned by board-certified radiologists, providing further evidence of an association between the parameters and the visual quality impression as evaluated with objective criteria. Our statistical analysis is constrained by the use of a diagnostic prediction model on a known dataset, as opposed to a prognostic prediction model on unknown data. The weak points listed above could be addressed by conducting further examinations in a blinded and controlled manner. 
The subsample analysis of repetitions without setup changes was restricted by particularly low counts for the protocols Resting State EPI BOLD (n = 1), PDw FS 3D SPACE (n = 2), T2 2D FSE (n = 2), and MOLLI SAX (n = 6), which meant the exclusion of the first three. A constraint inherent to the image quality parameters is interdependences between individual and compound parameters, such as ‘noise’ and ‘signal-to-noise ratio’. Lastly, as was the case in our previous study, the ability of this automated image quality assessment to generalize on clinical data with inherently less standardization has yet to be validated.

In conclusion, our approach for automated image quality assessment can, despite varying accuracy for different protocols and anatomical regions, contribute substantially to identifying the subjective preference in a series of MRI acquisitions and thus provide effective decision support to readers in large-scale imaging studies and potentially in clinical imaging.

Data availability

The data generated and/or analyzed during the current study are available upon request from the data transfer unit of the NAKO Health Study (https://transfer.nako.de or transfer@nako.de). All data requests and project agreements are subject to approval by the NAKO Health Study board.

Abbreviations

- AUC: Area under the curve
- CI: Confidence interval
- FHS: Framingham Heart Study
- fMRI: Functional MRI
- MESA: Multi-Ethnic Study of Atherosclerosis
- MR: Magnetic resonance
- MRI: Magnetic resonance imaging
- NAKO: German National Cohort / NAKO Health Study
- OR: Odds ratio
- ROC: Receiver operating characteristic
- RF: Radiofrequency (coil)
- SHIP: Study of Health in Pomerania
- UKBB: UK Biobank
- SNR: Signal-to-noise ratio
- SOP: Standard operating procedure
- UQI: Universal quality index

References

Bamberg, F. et al. Whole-body MR imaging in the German National cohort: Rationale, design, and technical background. Radiology 277, 206–220. https://doi.org/10.1148/radiol.2015142272 (2015).

Schlett, C. L. et al. Population-based imaging and radiomics: Rationale and perspective of the German National cohort MRI study. RoFo Fortschritte auf dem Gebiete der Rontgenstrahlen und der Nuklearmedizin 188, 652–661. https://doi.org/10.1055/s-0042-104510 (2016).

Schuppert, C. et al. Whole-body magnetic resonance imaging in the large population-based German National Cohort Study: Predictive capability of automated image quality assessment for protocol repetitions. Investig. Radiol. 57, 478–487. https://doi.org/10.1097/RLI.0000000000000861 (2022).

German National Cohort Consortium. The German National Cohort: Aims, study design and organization. Eur. J. Epidemiol. 29, 371–382. https://doi.org/10.1007/s10654-014-9890-7 (2014).

Peters, A. et al. Framework and baseline examination of the German National Cohort (NAKO). Eur. J. Epidemiol. 37, 1107–1124. https://doi.org/10.1007/s10654-022-00890-5 (2022).

Esteban, O. et al. MRIQC: Advancing the automatic prediction of image quality in MRI from unseen sites. PLoS One 12, e0184661. https://doi.org/10.1371/journal.pone.0184661 (2017).

Alexander-Bloch, A. et al. Subtle in-scanner motion biases automated measurement of brain anatomy from in vivo MRI. Hum. Brain Mapp. 37, 2385–2397. https://doi.org/10.1002/hbm.23180 (2016).

Maclaren, J. et al. Measurement and correction of microscopic head motion during magnetic resonance imaging of the brain. PLoS One 7, e48088. https://doi.org/10.1371/journal.pone.0048088 (2012).

Ahmad, A., Parker, D., Dheer, S., Samani, Z. R. & Verma, R. 3D-QCNet—A pipeline for automated artifact detection in diffusion MRI images. Comput. Med. Imaging Graph. 103, 102151. https://doi.org/10.1016/j.compmedimag.2022.102151 (2023).

Tarroni, G. et al. Learning-based quality control for cardiac MR images. IEEE Trans. Med. Imaging 38, 1127–1138. https://doi.org/10.1109/TMI.2018.2878509 (2019).

Tarroni, G. et al. Large-scale quality control of cardiac imaging in population studies: Application to UK Biobank. Sci. Rep. 10, 2408. https://doi.org/10.1038/s41598-020-58212-2 (2020).

Rizwan, I., Haque, I. & Neubert, J. Deep learning approaches to biomedical image segmentation. Inform. Med. Unlocked https://doi.org/10.1016/j.imu.2020.100297 (2020).

Kart, T. et al. Deep learning-based automated abdominal organ segmentation in the UK Biobank and German national cohort magnetic resonance imaging studies. Investig. Radiol. 56, 401–408. https://doi.org/10.1097/rli.0000000000000755 (2021).

Kart, T. et al. Automated imaging-based abdominal organ segmentation and quality control in 20,000 participants of the UK Biobank and German National Cohort Studies. Sci. Rep. 12, 18733. https://doi.org/10.1038/s41598-022-23632-9 (2022).

Oksuz, I. et al. Automatic CNN-based detection of cardiac MR motion artefacts using k-space data augmentation and curriculum learning. Med. Image Anal. 55, 136–147. https://doi.org/10.1016/j.media.2019.04.009 (2019).

Acknowledgements

This project was conducted with data from the German National Cohort (NAKO Health Study) (www.nako.de). The NAKO Health Study is funded by the Federal Ministry of Education and Research (BMBF) [project funding reference numbers: 01ER1301A/B/C and 01ER1511D], federal states, and the Helmholtz Association with additional financial support by the participating universities and the institutes of the Leibniz Association. We thank the NAKO MRI Study Investigators. We further thank the participants who took part in the NAKO Health Study and the staff in this research program.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

C.S., S.R., D.C.H., and C.L.S. wrote the main manuscript text. C.S., S.R., J.G.H., and C.L.S. prepared tables and figures. J.G.H., T.K., L.K., B.S., K.B.M., S.S., H.B., T.J.K., T.P., T.N., J.S., M.F., H.V., N.H., R.B., H.K., F.B., M.G., and C.L.S. are principal investigators or representatives in the NAKO MRI study. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schuppert, C., Rospleszcz, S., Hirsch, J.G. et al. Automated image quality assessment for selecting among multiple magnetic resonance image acquisitions in the German National Cohort study. Sci Rep 13, 22745 (2023). https://doi.org/10.1038/s41598-023-49569-1

DOI: https://doi.org/10.1038/s41598-023-49569-1
