Abstract

Hospital emergency departments frequently receive lots of bone fracture cases, with pediatric wrist trauma fracture accounting for the majority of them. Before pediatric surgeons perform surgery, they need to ask patients how the fracture occurred and analyze the fracture situation by interpreting X-ray images. The interpretation of X-ray images often requires a combination of techniques from radiologists and surgeons, which requires time-consuming specialized training. With the rise of deep learning in the field of computer vision, network models applying for fracture detection has become an important research topic. In this paper, we use data augmentation to improve the model performance of YOLOv8 algorithm (the latest version of You Only Look Once) on a pediatric wrist trauma X-ray dataset (GRAZPEDWRI-DX), which is a public dataset. The experimental results show that our model has reached the state-of-the-art (SOTA) mean average precision (mAP 50). Specifically, mAP 50 of our model is 0.638, which is significantly higher than the 0.634 and 0.636 of the improved YOLOv7 and original YOLOv8 models. To enable surgeons to use our model for fracture detection on pediatric wrist trauma X-ray images, we have designed the application “Fracture Detection Using YOLOv8 App” to assist surgeons in diagnosing fractures, reducing the probability of error analysis, and providing more useful information for surgery.

Similar content being viewed by others

Introduction

In hospital emergency rooms, radiologists are often asked to examine patients with fractures in various parts of the body, such as the wrist and arm. Fractures can generally be classified as open or closed, with open fractures occurring when the bone pierces the skin, and closed fractures occurring when the skin remains intact despite the broken bone. Before performing surgery, the surgeon must inquire about the medical history of the patients and conduct a thorough examination to diagnose fracture. In recent medical imaging, three types of devices, including X-ray, Magnetic Resonance Imaging (MRI), and Computed Tomography (CT), are commonly used to diagnose fracture1. And X-ray is the most widely used device due to its cost-effectiveness.

Fractures of the distal radius and ulna account for the majority of wrist trauma in pediatric patients2,3. In prestigious hospitals of developed areas, there are many experienced radiologists who are capable of correctly analyzing X-ray images; while in some small hospitals of underdeveloped regions, there are only young and inexperienced surgeons who may be unable to correctly interpret X-ray images. Therefore, a shortage of radiologists would seriously jeopardize timely patient care4,5. Specifically, some hospitals in Africa have even limited access to specialist reports6, which badly affects the probaility of the sucess of surgery. According to the survey7,8, the percentage of X-ray images misinterpreted have reached 26%.

With the advancement of deep learning, neural network models have been introduced in medical image processing9,10,11,12. In recent years, researchers have started to apply object detection models13,14,15 to fracture detection16,17,18,19, which is a popular research topic in computer vision (CV).

Deep learning methods in the field of object detection are divided into two-stage and one-stage algorithms. Two-stage algorithm models such as R-CNN13 and its improved models20,21,22,23,24,25,26 generate location and class probabilities in two stages. Whereas one-stage algorithm models directly produce the location and class probabilities of objects, resulting in the improvement of the model inference speed. In addition to the classical one-stage algorithm models, such as SSD27, RetinaNet28, CornerNet29, CenterNet30, and CentripetalNet31, You Only Look Once (YOLO) series algorithm models32,33,34 are preferred for real-time applications35 due to the good balance between the model accuracy and inference speed.

In this paper, we first use YOLOv8 algorithm36 to train models of different sizes on the GRAZPEDWRI-DX37 dataset. After evaluation of the model performances of YOLOv8, we train the models by using data augmentation to detect wrist fractures in children. We compare YOLOv8 models using our training method with YOLOv7 and its improved models, and the experimental results demonstrate that our models have the highest the mean average precision (mAP 50) value.

The contributions of this paper are summarized as follows:

-

We use data augmentation to improve the model performance of YOLOv8 model. The experimental results show that the mean average precision of YOLOv8 model using our training method for fracture detection on the GRAZPEDWRI-DX dataset reaches SOTA value.

-

This work develops an application to detect wrist fracture in children, which aims to help pediatric surgeons interpret X-ray images without the assistance of the radiologist, and reduce the probability of X-ray image analysis errors.

This paper is structured as follows: Section "Related work" describes the deep learning methods for detecting fracture, and describes the application of YOLOv5 model in medical image processing. Section "Proposed method" introduces the whole process of training and the architecture of our model. Section "Experiments" presents the improved performance of YOLOv8 model using our training method compared with YOLOv7 and its improved models. Section "Application" describes our proposed application to assist pediatric surgeons in analyzing X-ray images. Finally, Sect. "Conclusions and future work" discusses the conclusions and future work of this paper.

Related Work

In recent years, neural networks have been widely utilized in image data for fracture detection. Guan et al.38 achieved the average precision of 82.1% on 3,842 thigh fracture X-ray images using the Dilated Convolutional Feature Pyramid Network (DCFPN). Wang et al.39 employed a novel R-CNN13 network ParalleNet as the backbone network for fracture detection on 3842 thigh fracture X-ray images. In addition to thigh fracture detection, about arm fracture detection, Guan et al.40 used R-CNN for detection on Musculoskeletal-Radiograph (MURA) dataset41 and obtained an average precision of 62.04%. Ma and Luo43 used Faster R-CNN21 for fracture detection on a part of 1052 bone images of the dataset and the proposed CrackNet model for fracture classification on the whole dataset. Wu et al.42 proposed Feature Ambiguity Mitigate Operator (FAMO) model based on ResNeXt10147 and FPN48 for bone fracture detection on 9040 radiographs of various body parts. Qi et al.49 utilized Fast R-CNN20 with ResNet5050 as the backbone network to detect nine different types of fractures on 2,333 fracture X-ray images. Xue et al.44 utilized the Faster R-CNN model for hand fracture detection on 3067 hand trauma X-ray images, achieving an average precision of 70.0%. Sha et al.45,46 used YOLOv251 and Faster R-CNN21 models for fracture detection on 5134 CT images of spine fractures respectively. Experiments showed that the average precision of YOLOv2 reached 75.3%, which was higher than 73.3% of Faster R-CNN, and inference time of YOLOv2 for each CT image is 27 ms, which is much faster than 381 ms of Faster R-CNN. From Table 1, it can be seen that even though most of the works using R-CNN series models have shown excellent results, the inference speed is not satisfactory.

YOLO series models32,33,34 offer a balance of performance in terms of the model accuracy and inference speed, which is suitable for mobile devices in real-time X-ray images detection. Hržić et al.52 proposed a machine learning model based on YOLOv4 method to help radiologists diagnose fractures and demonstrated that the AUC-ROC (area under the receiver operator characteristic curve) value of YOLO 512 Anchor model-AI was significantly higher than that of radiologists. YOLOv5 model53, which was proposed by Ultralytics in 2021, has been deployed on mobile phones as the “iDetection” application. On this basis, Yuan et al.54 employed external attention and 3D feature fusion techniques in YOLOv5 model to detect skull fractures in CT images. Warin et al.55 used YOLOv5 model to detect maxillofacial fractures in 3407 maxillofacial bone CT images, and classified the fracture conditions into frontal, midfacial, mandibular fractures and no fracture. Rib fractures are a precursor injury to physical abuse in children, and chest X-ray (CXR) images are preferred for effective diagnosis of rib fracture conditions because of their convenience and low radiation dose. Tsai et al.56 used data augmentation with YOLOv5 model to detect rib fractures in CXR images. And Burkow et al.57 applied YOLOv5 model to detect rib fractures in 704 pediatric CXR images, the model obtained the F2 score value of 0.58. To identify and detect mandibular fractures in panoramic radiographs, Warin et al.58 used convolutional neural networks (CNNs) and YOLOv5 model to implement it. Fatima et al.59 used YOLOv5 model to localize vertebrae, which is important for detecting spinal deformities and fractures, and obtained an average precision of 0.94 at an IoU (Intersection over Union) threshold of 0.5. Moreover, Mushtaq et al.60 applied YOLOv5 model to localize the lumbar spine and obtained an average precision value of 0.975. Nevertheless, relatively few researches have been reported on pediatric wrist fracture detection using YOLOv5 model. While YOLOv8 was proposed by Ultralytics in 2023, we use this algorithm to train the model for the first time in pediatric wrist fracture detection.

Proposed method

In this section, we introduce the process of the model training, validation and testing on the dataset, the architecture of YOLOv8 model, and the data augmentation technique employed during training. Figure 1 illustrates the flowchart depicting the model training process and performance evaluation. We randomly divide the 20,327 X-ray images of the GRAZPEDWRI-DX dataset into the training, validation, and test set, where the training set is expanded to 28,408 X-ray images by data augmentation from the original 14,204 X-ray images. We design our model according to YOLOv8 algorithm, and the architecture of YOLOv8 algorithm is shown in Fig. 2.

Data augmentation

During the model training process, data augmentation is employed in this work to extend the dataset. Specifically, we adjust the contrast and brightness of the original X-ray image to enhance the visibility of bone-anomaly. This is achieved using the addWeighted function available in OpenCV (Open Source Computer Vision Library). The equation is presented below:

where \(Input_1\) and \(Input_2\) are the two input images of the same size respectively, \(\alpha \) represents the weight assigned to the first input image, \(\beta \) denotes the weight assigned to the second input image, and \(\gamma \) represents the scalar value added to each sum. Since our purpose is to adjust the contrast and brightness of the original input image, we take the same image as \(Input_1\) and \(Input_2\) respectively and set \(\beta \) to 0. The value of \(\alpha \) and \(\gamma \) represent the proportion of the contrast and the brightness of the image respectively. The image after adjusting the contrast and brightness is shown in Fig. 3. After comparing different settings, we finally decided to set \(\alpha \) to 1.2 and \(\gamma \) to 30 to avoid the output image being too bright.

Model architecture

Our model architecture consists of backbone, neck, and head, as shown in Fig. 4. In the following subsections, we introduce the design concepts of each part of the model architecture, and the modules of different parts.

Backbone

The backbone of the model uses Cross Stage Partial (CSP)61 architecture to split the feature map into two parts. The first part uses convolution operations, and the second part is concatenated with the output of the previous part. The CSP architecture improves the learning ability of the CNNs and reduces the computational cost of the model.

YOLOv836 introduces C2f module by combining the C3 module and the concept of ELAN from YOLOv732, which allows the model to obtain richer gradient flow information. The C3 module consists of 3 ConvModule and n DarknetBottleNeck, and the C2f module consists of 2 ConvModule and n DarknetBottleNeck connected through Split and Concat, as illustrated in Figure 4, where the ConvModule consists of Conv-BN-SiLU, and n is the number of the bottleneck. Unlike YOLOv553, we use the C2f module instead of the C3 module.

Furthermore, we reduce the number of blocks in each stage compared to YOLOv5 to further reduce the computational cost. Specifically, our model reduces the number of blocks to 3,6,6,3 in Stage 1 to Stage 4, respectively. Additionally, we adopt the Spatial Pyramid Pooling - Fast (SPPF) module in Stage 4, which is an improvement from Spatial Pyramid Pooling (SPP)62 to improve the inference speed of the model. These modifications lead to our model with a better learning ability and shorter inference time.

Neck

Generally, deeper networks obtain more feature information, resulting in better dense prediction. However, excessively deep networks reduce the location information of the object, and too many convolution operations will lead to information loss for small objects. Therefore, it is necessary to use Feature Pyramid Network (FPN)48 and Path Aggregation Network (PAN)63 architectures for multi-scale feature fusion. As illustrated in Fig. 4, the Neck part of our model architecture uses multi-scale feature fusion to combine features from different layers of the network. The upper layers acquire more information due to the additional network layers, whereas the lower layers preserve location information due to fewer convolution layers.

Inspired by YOLOv5, where FPN upsamples from top to bottom to increase the amount of feature information in the bottom feature map; and PAN downsamples from bottom to top to obtain more the top feature map information. These two feature outputs are merged to ensure precise predictions for images of various sizes. We adopt FP-PAN (Feature Pyramid-Path Aggregation Network) in our model, and delete convolution operations in upsampling to reduce the computational cost.

Head

Different from YOLOv5 model utilizing a coupled head, we use a decoupled head33, where the classification and detection heads are separated. Figure 4 illustrates that our model deletes the objectness branch and only retains the classification and regression branches. Anchor-Base employes a large number of anchors in the image to determine the four offsets of the regression object from the anchors. It adjusts the precise object location using the corresponding anchors and offsets. In contrast, we adopt Anchor-Free64, which identifies the center of the object and estimates the distance between the center and the bounding box.

Loss

For positive and negative sample assignment, the Task Aligned Assigner of Task-aligned One-stage Object Detection (TOOD)65 is used in our model training to select positive samples based on the weighted scores of classification and regression, as shown in Eq. 2 below:

where s is the predicted score corresponding to the labeled class, and u is the IoU of the prediction and the ground truth bounding box.

In addition, our model has classification and regression branches, where the classification branch uses Binary Cross-Entropy (BCE) Loss, and the equation is shown below:

where w is the weight, \(y_n\) is the labeled value, and \(x_n\) is the predicted value of the model.

The regression branch uses Distribute Focal Loss (DFL)66 and Complete IoU (CIoU) Loss67, where DFL is used to expand the probability of the value around the object y. Its equation is shown as follows:

where the equations of \(\mathscr {S}_n\) and \(\mathscr {S}_{n+1}\) are shown below:

CIoU Loss adds an influence factor to Distance IoU (DIoU) Loss68 by considering the aspect ratio of the prediction and the ground truth bounding box. The equation is shown below:

where \(\nu \) is the parameter that measures the consistency of the aspect ratio, defined as follows:

where w is the weight of the bounding box, and h is the height of the bounding box.

Ethics approval

This research does not involve human participants and/or animals.

Experiments

Dataset

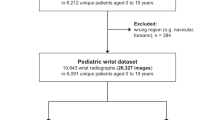

Medical University of Graz provides a public dataset named GRAZPEDWRI-DX37, which consists of 20,327 X-ray images of wrist trauma in children. These images were collected from 6,091 patients between 2008 and 2018 by multiple pediatric radiologists at the Department of Pediatric Surgery of the University Hospital Graz. The images are annotated in 9 different classes by placing bounding boxes on them.

To perform the experiments shown in Table 5 and Table 6, we divide the GRAZPEDWRI-DX dataset randomly into three sets: training set, validation set, and test set. The sizes of these sets are approximately 70%, 20%, and 10% of the original dataset, respectively. Specifically, our training set consists of 14,204 images (69.88%), our validation set consists of 4,094 images (20.14%), and our test set consists of 2029 images (9.98%). The code for splitting the dataset can be found on our GitHub. We also provide csv files of training, validation and test data on our GitHub, but it should be noted that each split is random and therefore not reproducible.

Evaluation metric

Intersection over Union (IoU)

Intersection over Union (IoU) is a classical metric for evaluating the performance of the model for object detection. It calculates the ratio of the overlap and union between the generated candidate bounding box and the ground truth bounding box, which measures the intersection of these two bounding boxes. The IoU is represented by the following equation:

where C represents the generated candidate bounding box, and G represents the ground truth bounding box containing the object. The performance of the model improves as the IoU value increases, with higher IoU values indicating less difference between the generated candidate and ground truth bounding boxes.

Precision-recall curve

Precision-Recall Curve (P-R Curve)69 is a curve with recall as the x-axis and precision as the y-axis. Each point represents a different threshold value, and all points are connected as a curve. The recall (R) and precision (P) are calculated according to the following equations:

where True Positive (\(T_P\)) denotes the prediction result as a positive class and is judged to be true; False Positive (\(F_P\)) denotes the prediction result as a positive class but is judged to be false, and False Negative (\(F_N\)) denotes the prediction result as a negative class but is judged to be false.

F1-score

The F-score is a commonly used metric to evaluate the model accuracy, providing a balanced measure of performance by incorporating both precision and recall. The F-score equation is as follows:

When \(\beta \) = 1, the F1-score is determined by the harmonic mean of precision and recall, and its equation is as follows:

Experiment setup

During the model training process, we utilize pre-trained YOLOv8 model from the MS COCO (Microsoft Common Objects in Context) val2017 dataset72. The research reports provided by Ultralytics36,53 suggests that YOLOv5 training requires 300 epochs, while training YOLOv8 requires 500 epochs. Since we use pre-trained model, we initially set the total number of epochs to 200 with a patience of 50, which indicate that the training would end early if no observable improvement is noticed after waiting for 50 epochs. In the experiment comparing the effect of the optimizer on the model performance, we notice that the best epcoh of all the models is within 100, as shown in Table 4, mostly concentrated between 50 and 70 epochs. Therefore, to save computing resources, we adjust the number of epochs for our model training to 100.

As the suggestion36 of Glenn, for model training hyperparameters, the Adam73 optimizer is more suitable for small custom datasets, while the SGD74 optimizer perform better on larger datasets. To prove the above conclusion, we train YOLOv8 algorithm models using the Adam and SGD optimizers, respectively, and compare the effects on the model performance. The comparison results are shown in Table 4.

For the experiments, we choose the SGD optimizer with an initial learning rate of 1\(\times 10^{-2}\), a weight decay of 5\(\times 10^{-4}\), and a momentum of 0.937 during our model training. We set the input image size to 640 and 1024 for training on a single GPU GeForce RTX 3080Ti 12GB with a batch size of 16. We train the model using Python 3.8 and PyTorch 1.8.2, and recommend readers to use Python 3.7 or higher and PyTorch 1.7 or higher for training. It is noteworthy that due to GPU memory limitations, we choose 3 worker threads to load data on GPU GeForce RTX 3080Ti 12GB when training our model. Therefore, using GPUs with larger memory and more computing power can effectively increase the speed of model training.

Ablation study

In order to demonstrate the positive effect of our training method on the performance of YOLOv8 model, we conduct an ablation study on YOLOv8s model by calculating each evaluation metric for each class, as shown in Table 2. Among all classes, YOLOv8s model has good accuracy in detecting fracture, metal and text, with mAP 50 of each above 0.9. On the opposite, the detection ability of bone-anomaly is poor, with mAP 50 of 0.11. Therefore, we increase the contrast and brightness of X-ray images to make bone-anomaly easier to detect. Table 3 presents the predictions of YOLOv8s model using our training method for each class. Compared with YOLOv8s model, the mAP value predicted by the model using our training method for bone-anomaly increased from 0.11 to 0.169, an increase of 53.6%. Figure 5 also shows that our model has a better performance in detecting bone-anomaly, which enables the improvement of the overall model performance. From the ablation study presented above, we demonstrate that the model performance can be improved by using our training method (data augmentation). In addition to the data enhancement, researchers can also improve model performance by adding modules such as the Convolutional Block Attention Module (CBAM)70.

Experimental results

Before training our model, in order to choose an optimizer that has a more positive effect on the model performance, we compare the performance of models trained with the SGD74 optimizer and the Adam73 optimizer. As shown in Table 4, using the SGD optimizer to train the model requires less epochs of weight updates. Specifically, for YOLOv8m model with an input image size of 1024, the model trained with the SGD optimizer achieves the best performance at the 35th epoch, while the best performance of the model trained with the Adam optimizer is at the 70th epoch. In terms of mAP and inference time, there is not much difference in the performance of the models trained with the two optimizers. Specifically, when the input image size is 640, the mAP value of YOLOv8s model trained with the SGD optimizer is 0.007 higher than that of the model trained with the Adam optimizer, while the inference time is 0.1ms slower. Therefore, according to the above experimental results and the suggestion by Glenn36,53, for YOLOv8 model training on a training set of 14,204 X-ray images, we choose the Adam optimizer. However, after using data augmentation, the number of X-ray images in the training set extend to 28,408, so we switch to the SGD optimizer to train our model.

After using data augmentation, our models have a better mAP value than that of YOLOv8 model, as shown in Table 5 and Table 6. Specifically, when the input image size is 640, compared with YOLOv8m model and YOLOv8l model, the mAP 50 of our model improves from 0.621 to 0.629, and from 0.623 to 0.637, respectively. Although the inference time on the CPU is increased from 536.4 ms and 1006.3 ms to 685.9 ms and 1370.8 ms, respectively, the number of parameters and FLOPs are the same, which means that our model can be deployed on the same computing power platform. In addition, we compare the performance of our model with that of YOLOv7 and its improved models. As shown in Table 7, the mAP value of our model is higher than those of YOLOv732, YOLOv7 with Convolutional Block Attention Module (CBAM)70 and YOLOv7 model with Global Attention Mechanism (GAM)71, which demonstrates that our model has obtained SOTA performance.

This paper aims to design a pediatric wrist fracture detection application, so we use our model for fracture detection. Figure 6 shows the results of manual annotation by the radiologist and the results predicted using our model. These results demonstrate that our model has a good ability to detect fractures in single fracture cases, but metal puncture and dense multiple fracture situations badly affects the accuracy of prediction.

Application

After completing model training, we utilize a Python library that includes the Qt toolkit, PySide6, to develop a Graphical User Interface (GUI) application. Specifically, PySide6 is the Qt6-based version of the PySide GUI library from the Qt Company.

According to the model performance evaluation results in Tables 5 and 6, we choose our model with YOLOv8s algorithm and the input image size of 1024, to perform fracture detection. Our model is exported to onnx format, and is applied to the GUI application. Figure 7 depicts the flowchart of the GUI application operation on macOS. As can be seen from the illustration, our application is named “Fracture Detection Using YOLOv8 App”. Users can open and predict the images, and save the predictions in this application. In summary, our application is designed to assist pediatric surgeons in analyzing fractures on pediatric wrist trauma X-ray images.

Conclusions and future work

Ultralytics proposed the latest version of YOLO series (YOLOv8) in 2023. Although there are relatively few research works on YOLOv8 model for medical image processing, we apply it to fracture detection and use data augmentation to improve the model performance. We randomly divide the dataset, consisting of 20,327 pediatric wrist trauma X-ray images from 6091 patients, into training, test, and validation sets to train the model and evaluate the performance.

Furthermore, we develop an application named “Fracture Detection Using YOLOv8 App” to analyze pediatric wrist trauma X-ray images for fracture detection. Our application aims to assist pediatric surgeons in interpreting X-ray images, reduce the probability of misclassification, and provide a better information base for surgery. The application is currently available for macOS, and in the future, we plan to deploy different sizes of our model in the application, and extend the application to iOS and Android. This will enable inexperienced pediatric surgeons in hospitals located in underdeveloped areas to use their mobile devices to analyze pediatric wrist X-ray images.

In addition, we provide the specific steps for training the model and the trained model in our GitHub. If readers wish to use YOLOv8 model to detect fracture in other parts of the body except the pediatric wrist, they can use our trained model as the pre-training model, which can greatly improve the performance of the model.

Data availability

The datasets analysed during the current study are available at Figshare under https://doi.org/10.6084/m9.figshare.14825193.v2. The implementation code and the trained model for this study can be found on GitHub at https://github.com/RuiyangJu/Bone_Fracture_Detection_YOLOv8.

References

Fractures, health, hopkins medicine. https://www.hopkinsmedicine.org/health/conditions-and-diseases/ fractures (2021).

Hedström, E. M., Svensson, O., Bergström, U. & Michno, P. Epidemiology of fractures in children and adolescents: Increased incidence over the past decade: A population-based study from northern Sweden. Acta Orthop. 81, 148–153 (2010).

Randsborg, P.-H. et al. Fractures in children: Epidemiology and activity-specific fracture rates. JBJS 95, e42 (2013).

Burki, T. K. Shortfall of consultant clinical radiologists in the UK. Lancet Oncol. 19, e518 (2018).

Rimmer, A. Radiologist shortage leaves patient care at risk, warns royal college. BMJ Br. Med. J. (Online) 359 (2017).

Rosman, D. et al. Imaging in the land of 1000 hills: Rwanda radiology country report. J. Glob. Radiol. 1 (2015).

Mounts, J., Clingenpeel, J., McGuire, E., Byers, E. & Kireeva, Y. Most frequently missed fractures in the emergency department. Clin. Pediatr. 50, 183–186 (2011).

Erhan, E., Kara, P., Oyar, O. & Unluer, E. Overlooked extremity fractures in the emergency department. Ulus Travma Acil Cerrahi Derg 19, 25–8 (2013).

Adams, S. J., Henderson, R. D., Yi, X. & Babyn, P. Artificial intelligence solutions for analysis of x-ray images. Can. Assoc. Radiol. J. 72, 60–72 (2021).

Tanzi, L. et al. Hierarchical fracture classification of proximal femur x-ray images using a multistage deep learning approach. Eur. J. Radiol. 133, 109373 (2020).

Chung, S. W. et al. Automated detection and classification of the proximal humerus fracture by using deep learning algorithm. Acta Orthop. 89, 468–473 (2018).

Choi, J. W. et al. Using a dual-input convolutional neural network for automated detection of pediatric supracondylar fracture on conventional radiography. Invest. Radiol. 55, 101–110 (2020).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. Proc. of the IEEE conference on computer vision and pattern recognition, 580–587 (2014).

Ju, R.-Y., Lin, T.-Y., Jian, J.-H., Chiang, J.-S. & Yang, W.-B. Threshnet: An efficient densenet using threshold mechanism to reduce connections. IEEE Access 10, 82834–82843 (2022).

Ju, R.-Y., Lin, T.-Y., Jian, J.-H. & Chiang, J.-S. Efficient convolutional neural networks on raspberry pi for image classification. J. Real-Time Image Proc. 20, 21 (2023).

Gan, K. et al. Artificial intelligence detection of distal radius fractures: A comparison between the convolutional neural network and professional assessments. Acta Orthop. 90, 394–400 (2019).

Kim, D. & MacKinnon, T. Artificial intelligence in fracture detection: Transfer learning from deep convolutional neural networks. Clin. Radiol. 73, 439–445 (2018).

Lindsey, R. et al. Deep neural network improves fracture detection by clinicians. Proc. Natl. Acad. Sci. 115, 11591–11596 (2018).

Blüthgen, C. et al. Detection and localization of distal radius fractures: Deep learning system versus radiologists. Eur. J. Radiol. 126, 108925 (2020).

Girshick, R. Fast r-cnn. Proc. of the IEEE international conference on computer vision 1440–1448 (2015).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Proc. Advances in neural information processing systems 28 (2015).

Cai, Z. & Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. Proc. of the IEEE conference on computer vision and pattern recognition 6154–6162 (2018).

Lu, X., Li, B., Yue, Y., Li, Q. & Yan, J. Grid r-cnn. Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 7363–7372 (2019).

Pang, J. et al. Libra r-cnn: Towards balanced learning for object detection. Proc. of the IEEE/CVF conference on computer vision and pattern recognition 821–830 (2019).

Zhang, H., Chang, H., Ma, B., Wang, N. & Chen, X. Dynamic r-cnn: Towards high quality object detection via dynamic training. Proc. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, Aug 23–28, 2020, Part XV 16, 260–275 (Springer, 2020).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask r-cnn. Proc. of the IEEE international conference on computer vision 2961–2969 (2017).

Liu, W. et al. Ssd: Single shot multibox detector. Computer Vision–ECCV 2016: 14th European Conference Oct 11–14, 2016, Part I 14, 21–37 (Amsterdam, The Netherlands, Springer, 2016).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. Proc. of the IEEE international conference on computer vision 2980–2988 (2017).

Law, H. & Deng, J. Cornernet: Detecting objects as paired keypoints. Proc. of the European conference on computer vision (ECCV), 734–750 (2018).

Duan, K. et al. Centernet: Keypoint triplets for object detection. Proc. of the IEEE/CVF international conference on computer vision 6569–6578 (2019).

Dong, Z. et al. Centripetalnet: Pursuing high-quality keypoint pairs for object detection. Proc. of the IEEE/CVF conference on computer vision and pattern recognition 10519–10528 (2020).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv preprint arXiv:2207.02696 (2022).

Ge, Z., Liu, S., Wang, F., Li, Z. & Sun, J. Yolox: Exceeding yolo series in 2021. arXiv preprint arXiv:2107.08430 (2021).

Wang, C.-Y., Yeh, I.-H. & Liao, H.-Y. M. You only learn one representation: Unified network for multiple tasks. arXiv preprint arXiv:2105.04206 (2021).

Ju, R.-Y., Chen, C.-C., Chiang, J.-S., Lin, Y.-S. & Chen, W.-H. Resolution enhancement processing on low quality images using swin transformer based on interval dense connection strategy. Multimed. Tools Appl. 1–17 (2023).

Glenn, J. Ultralytics yolov8. https://github.com/ultralytics/ultralytics (2023).

Nagy, E., Janisch, M., Hržić, F., Sorantin, E. & Tschauner, S. A pediatric wrist trauma x-ray dataset (grazpedwri-dx) for machine learning. Sci. Data 9, 222 (2022).

Guan, B., Yao, J., Zhang, G. & Wang, X. Thigh fracture detection using deep learning method based on new dilated convolutional feature pyramid network. Pattern Recogn. Lett. 125, 521–526 (2019).

Wang, M. et al. Parallelnet: Multiple backbone network for detection tasks on thigh bone fracture. Multimed. Syst. 27, 1091–1100 (2021).

Guan, B., Zhang, G., Yao, J., Wang, X. & Wang, M. Arm fracture detection in x-rays based on improved deep convolutional neural network. Comput. Electric. Eng. 81, 106530 (2020).

Rajpurkar, P. et al. Mura dataset: Towards radiologist-level abnormality detection in musculoskeletal radiographs. In Medical Imaging with Deep Learning (2018).

Wu, H.-Z. et al. The feature ambiguity mitigate operator model helps improve bone fracture detection on x-ray radiograph. Sci. Rep. 11, 1–10 (2021).

Ma, Y. & Luo, Y. Bone fracture detection through the two-stage system of crack-sensitive convolutional neural network. Inf. Med. Unlocked 22, 100452 (2021).

Xue, L. et al. Detection and localization of hand fractures based on ga_faster r-cnn. Alex. Eng. J. 60, 4555–4562 (2021).

Sha, G., Wu, J. & Yu, B. Detection of spinal fracture lesions based on improved yolov2. Proc. 2020 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA) 235–238 (IEEE, 2020).

Sha, G., Wu, J. & Yu, B. Detection of spinal fracture lesions based on improved faster-rcnn. 2020 IEEE International Conference on Artificial Intelligence and Information Systems (ICAIIS) 29–32 (IEEE, 2020).

Xie, S., Girshick, R., Dollár, P., Tu, Z. & He, K. Aggregated residual transformations for deep neural networks. Proc. of the IEEE conference on computer vision and pattern recognition 1492–1500 (2017).

Lin, T.-Y. et al. Feature pyramid networks for object detection. Proc. of the IEEE conference on computer vision and pattern recognition 2117–2125 (2017).

Qi, Y. et al. Ground truth annotated femoral x-ray image dataset and object detection based method for fracture types classification. IEEE Access 8, 189436–189444 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. Proc. of the IEEE conference on computer vision and pattern recognition 770–778 (2016).

Redmon, J. & Farhadi, A. Yolo9000: better, faster, stronger. Proc. of the IEEE conference on computer vision and pattern recognition 7263–7271 (2017).

Hržić, F., Tschauner, S., Sorantin, E. & Štajduhar, I. Fracture recognition in paediatric wrist radiographs: An object detection approach. Mathematics 10, 2939 (2022).

Glenn, J. Ultralytics yolov5. https://github.com/ultralytics/yolov5 (2022).

Yuan, G., Liu, G., Wu, X. & Jiang, R. An improved yolov5 for skull fracture detection. Exploration of Novel Intelligent Optimization Algorithms: 12th International Symposium, ISICA 2021 Nov 20–21, 2021, Revised Selected Papers, 175–188 (Guangzhou, China, Springer, 2022).

Warin, K. et al. Maxillofacial fracture detection and classification in computed tomography images using convolutional neural network-based models. Sci. Rep. 13, 3434 (2023).

Tsai, H.-C. et al. Automatic rib fracture detection and localization from frontal and oblique chest x-rays. Proc. 2022 10th International Conference on Orange Technology (ICOT) 1–4 (IEEE, 2022).

Burkow, J. et al. Avalanche decision schemes to improve pediatric rib fracture detection. Proc. Medical Imaging 2022: Computer-Aided Diagnosis vol. 12033, 597–604 (SPIE, 2022).

Warin, K. et al. Assessment of deep convolutional neural network models for mandibular fracture detection in panoramic radiographs. Int. J. Oral Maxillofac. Surg. 51, 1488–1494 (2022).

Fatima, J., Mohsan, M., Jameel, A., Akram, M. U. & Muzaffar Syed, A. Vertebrae localization and spine segmentation on radiographic images for feature-based curvature classification for scoliosis. Concurr. Comput. Pract. Exp. 34, e7300 (2022).

Mushtaq, M., Akram, M. U., Alghamdi, N. S., Fatima, J. & Masood, R. F. Localization and edge-based segmentation of lumbar spine vertebrae to identify the deformities using deep learning models. Sensors 22, 1547 (2022).

Wang, C.-Y. et al. Cspnet: A new backbone that can enhance learning capability of cnn. Proc. of the IEEE/CVF conference on computer vision and pattern recognition workshops 390–391 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37, 1904–1916 (2015).

Liu, S., Qi, L., Qin, H., Shi, J. & Jia, J. Path aggregation network for instance segmentation. Proc. of the IEEE conference on computer vision and pattern recognition 8759–8768 (2018).

Tian, Z., Shen, C., Chen, H. & He, T. Fcos: A simple and strong anchor-free object detector. IEEE Trans. Pattern Anal. Mach. Intell. 44, 1922–1933 (2020).

Feng, C., Zhong, Y., Gao, Y., Scott, M. R. & Huang, W. Tood: Task-aligned one-stage object detection. Proc. 2021 IEEE/CVF International Conference on Computer Vision (ICCV) 3490–3499 (IEEE Computer Society, 2021).

Li, X. et al. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural. Inf. Process. Syst. 33, 21002–21012 (2020).

Zheng, Z. et al. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 52, 8574–8586 (2021).

Zheng, Z. et al. Distance-iou loss: Faster and better learning for bounding box regression. Proc. of the AAAI conference on artificial intelligence vol. 34, 12993–13000 (2020).

Boyd, K., Eng, K. H. & Page, C. D. Area under the precision-recall curve: point estimates and confidence intervals. Proc. Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2013 Sept 23–27, 2013, Part III 13, 451–466 (Prague, Czech Republic, Springer, 2013).

Woo, S., Park, J., Lee, J.-Y. & Kweon, I. S. Cbam: Convolutional block attention module. Proc. of the European conference on computer vision (ECCV) 3–19 (2018).

Liu, Y., Shao, Z. & Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv preprint arXiv:2112.05561 (2021).

Lin, T.-Y. et al. Microsoft coco: Common objects in context. Proc. Computer Vision–ECCV 2014: 13th European Conference Sept 6–12, 2014, Part V 13, 740–755 (Zurich, Switzerland, Springer, 2014).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Ruder, S. An overview of gradient descent optimization algorithms. arXiv preprint arXiv:1609.04747 (2016).

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Contributions

R.J. conceived and conducted the experiments, and W.C. provided knowledge about the fracture and analyzed the results. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ju, RY., Cai, W. Fracture detection in pediatric wrist trauma X-ray images using YOLOv8 algorithm. Sci Rep 13, 20077 (2023). https://doi.org/10.1038/s41598-023-47460-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-47460-7

This article is cited by

-

Statistical Analysis of Design Aspects of Various YOLO-Based Deep Learning Models for Object Detection

International Journal of Computational Intelligence Systems (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.