Abstract

We determined whether a convolutional neural network (CNN) deep learning model can accurately segment acute ischemic changes on non-contrast CT compared with neuroradiologists. Non-contrast CT (NCCT) examinations from 232 acute ischemic stroke patients who were enrolled in the DEFUSE 3 trial were included in this study. Three experienced neuroradiologists independently segmented hypodensity that reflected the ischemic core on each scan. The segmentations of the most experienced neuroradiologist (expert A) served as the ground truth for deep learning model training, and those of two additional neuroradiologists (experts B and C) were used for testing. The 232 studies were randomly split into training and test sets, and the training set was further randomly divided into 5 folds, each with training and validation subsets. A 3-dimensional CNN architecture was trained and optimized to predict the segmentations of expert A from NCCT. Model performance was assessed using a set of volume, overlap, and distance metrics with non-inferiority margins of 20%, 3 ml, and 3 mm, respectively. The optimized model trained on expert A was compared with test experts B and C, and a one-sided Wilcoxon signed-rank test was used to test the non-inferiority of the model-expert agreement relative to the inter-expert agreement. When trained on expert A, the final model reached a Surface Dice at a tolerance of 5 mm of 0.46 ± 0.09 and a Dice of 0.47 ± 0.13 for the ischemic core segmentation task. Compared with the two test neuroradiologists, the model-expert agreement was non-inferior to the inter-expert agreement (\(p < 0.05\)). Therefore, the CNN accurately delineates the hypodense ischemic core on NCCT in acute ischemic stroke patients with an accuracy comparable to that of neuroradiologists.


Introduction

Acute ischemic stroke (AIS) is the number one cause of disability and a leading cause of mortality in the United States and worldwide1,2. AIS due to large vessel occlusion (AIS-LVO) carries the worst prognosis, but timely endovascular thrombectomy treatment leads to reduced death and disability3. AIS-LVO patient treatment decisions are guided by the presence and severity of the acute ischemic core, which is considered to be irreversibly injured brain tissue4,5,6. The ischemic core is commonly assessed on computed tomography perfusion and diffusion-weighted imaging (DWI) magnetic resonance imaging (MRI). However, these imaging techniques are less widely available, and more generalizable means to identify and quantify the ischemic core on non-contrast head CT (NCCT) are needed. NCCT is the most commonly used imaging modality in AIS patients (>65%) given its widespread availability and low cost7,8,9.

Established semi-quantitative methods to assess ischemic stroke on NCCT include the European Cooperative Acute Stroke Study (ECASS) 1 and the Alberta Stroke Program Early CT Score (ASPECTS). ECASS defined a major infarct as involving more than 1/3 of the middle cerebral artery territory10, and ASPECTS evaluates 10 standardized regions within the middle cerebral artery territory, subtracting one point from a maximum score of 10 for each region that shows hypodensity. AIS-LVO patients with an ASPECTS \(\ge 6\) have been shown to benefit from thrombectomy in multiple studies11,12, and, more recently, AIS-LVO patients with an ASPECTS \(\ge 3\) have also been shown to benefit from thrombectomy13,14,15. ASPECTS is widely used, but it is limited by low inter-rater reproducibility and correlates only modestly with ischemic lesion volumes and symptom severity12,16,17. New imaging techniques that identify and segment the ischemic core on NCCT with more reliable inter-rater agreement could improve patient selection and identify AIS-LVO populations in need of further study to improve outcomes.
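The ASPECTS arithmetic described above can be illustrated with a short Python sketch. The region labels (C, L, IC, I, M1 to M6) are the standard ASPECTS regions, while the function and constant names are hypothetical; the sketch covers only the scoring rule, not the visual assessment of hypodensity that produces the set of affected regions.

```python
# Hypothetical helper illustrating the ASPECTS rule: start from 10 and
# subtract one point for each MCA-territory region that shows hypodensity.

ASPECTS_REGIONS = frozenset({
    "C",   # caudate
    "L",   # lentiform nucleus
    "IC",  # internal capsule
    "I",   # insular ribbon
    "M1", "M2", "M3",  # cortical regions at the basal ganglia level
    "M4", "M5", "M6",  # cortical regions at the supraganglionic level
})


def aspects_score(affected_regions):
    """Return the ASPECTS for a set of region labels showing early hypodensity."""
    affected = set(affected_regions)
    unknown = affected - ASPECTS_REGIONS
    if unknown:
        raise ValueError(f"unknown ASPECTS regions: {sorted(unknown)}")
    return 10 - len(affected)


print(aspects_score({"I", "M2"}))  # 8
```

Using a set means a region is counted at most once, no matter how many hypodense foci it contains, which mirrors the one-point-per-region rule.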

Supervised deep learning is a promising technique that has been successfully applied in medical image segmentation challenges, such as lesion segmentation on CT perfusion images of the brain18. Furthermore, benchmark deep learning models for out-of-the-box segmentation of diverse medical imaging datasets have been developed19, but they have been sparsely applied to ischemic stroke segmentation20. However, the low signal-to-noise ratio and ill-defined borders of the ischemic core on NCCT result in segmentation variability between experts21. This variability makes it difficult to define the ground truth and to evaluate deep learning model performance against the current reference method, manual segmentation by experts22,23.

We present a deep learning framework and evaluation process specifically designed for segmenting ischemic stroke lesions on NCCT scans. This framework allows us not only to compare the model's segmentation with the ground truth segmentation of the test set20,24,25,26, but also to evaluate non-inferiority against two test experts. In this way, we can assess whether the model segmentations generalize to experts the model was not trained on, that is, the degree to which the model is consistent with the ischemic core as a biomarker with inherent variability between experts.

We hypothesized that a deep learning model trained against an experienced neuroradiologist may accurately identify and segment hypodensity that represents the ischemic core on NCCT. We also hypothesized that this trained deep learning model would segment the ischemic core non-inferiorly when compared to other neuroradiologists. We tested these hypotheses in NCCT studies of AIS-LVO patients enrolled in the DEFUSE 3 trial.

Results

Patient characteristics

All randomized (n = 146) and non-randomized (n = 86) patients from the DEFUSE 3 trial with a baseline NCCT study were included. The time from symptom onset to NCCT image acquisition (10 (IQR: 8–12)h and 10 (IQR: 9–12)h), and ASPECTS (8 (IQR: 7–9) and 8 (IQR: 7–9)) were similar in the training and test set, respectively. Additional patient characteristics were similar between the training and test set (Table 1).

Ischemic core hypodensity ground truth determination

The median volume of the ground truth on NCCT as determined by Expert A was 12 (IQR: 5–30) ml in the training set and 13 (IQR: 5–35) ml in the test set. Similar ischemic core volumes were determined by CT perfusion in the training set and in the test set (11 (IQR: 0–39) ml and 12 (IQR: 4–32) ml, respectively).

Evaluation of model

On the test set, the final model trained on expert A achieved a Surface Dice at a tolerance of 5 mm of 0.46 ± 0.09, a Dice of 0.47 ± 0.13, and an absolute volume difference (AVD) of 7.43 ± 4.31 ml (Table 2, last column). We observed similar performance on the validation sets; further details, including weaker-performing models, are presented in Supplementary Table S.1.

To put the results of the final model into perspective, the predicted segmentations on the test set were then compared to the test experts B and C.

With the chosen metrics and non-inferiority margins, the model-expert agreement (model trained on expert A compared with experts B and C) is non-inferior to the inter-expert agreement (expert A compared with experts B and C) (Fig. 4). For expert B, the model-expert agreement is higher than the inter-expert agreement (Surface Dice at a tolerance of 5 mm: 0.63 ± 0.16 vs. 0.54 ± 0.09; Dice: 0.56 ± 0.18 vs. 0.47 ± 0.16). For expert C, the model-expert and inter-expert agreements are similar and within the non-inferiority margin (Table 2).

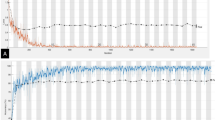

In addition, the volumetric inter-expert and model-expert agreements are visualized as scatter plots with Spearman's correlation coefficient (R) in Fig. 1. The correlation between the volumes predicted by the model and those of the test expert is higher than the correlation between experts (R = 0.75 vs. R = 0.74 for expert B (top row, blue lines); R = 0.79 vs. R = 0.63 for expert C (bottom row, yellow lines)).

Scatter plots of volume agreement between experts and model on the test set. Top row: inter-expert and model-expert agreement for expert B. Bottom row: inter-expert and model-expert agreement for expert C. R, Spearman's correlation coefficient; gray area, 95% confidence region; black dots, individual data points. The gray areas are smaller in the model-expert comparisons (rightmost column), indicating a lower variance of the predicted volumes.
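Spearman's R, as reported in Fig. 1, is the Pearson correlation of the ranked volumes, with tied values sharing their average rank. The minimal NumPy sketch below illustrates this definition; the function names are hypothetical and this is not the study's R code, where a library routine such as R's `cor(x, y, method = "spearman")` would normally be used.

```python
import numpy as np


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values), dtype=float)
    i = 0
    while i < len(values):
        j = i
        # Extend j over the run of values equal to sorted_vals[i].
        while j + 1 < len(values) and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def spearman_r(x, y):
    """Spearman's correlation: Pearson correlation of the average ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])
```

Because only ranks enter the computation, any monotonic relationship between model and expert volumes yields R = 1, regardless of a systematic offset or scaling between them.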

Figure 2 qualitatively compares the model's prediction with the annotations of experts A, B, and C in two patients with different image quality. The model prediction visually agrees with the training expert A (ground truth) as well as with the test experts B and C.

Qualitative analyses of experts A, B, and C and the prediction of the model. Patient 1 (left): higher-quality NCCT. Patient 2 (right): lower-quality NCCT. Experts A, B, and C agree on the location and volume of the stroke. The model prediction (last row) agrees as well with the test experts B and C as with the training expert A.

Analyses of models trained on each of the other experts are provided in Supplementary Tables S.2 and S.3.

Discussion

In this study, a 3D convolutional neural network (CNN) segmented the hypodense ischemic core on NCCT non-inferiorly to expert neuroradiologists. These results are notable because segmentation of acute ischemic stroke is a challenging task compared with less complex tasks in which deep learning methods have shown promise27.

In addition, the non-inferiority of the model across comparisons to multiple different expert neuroradiologists suggests that our results are generally applicable to the identification and quantification of the ischemic core on NCCT, and not limited to the evaluation of any particular physician.

These results have important implications for the care of patients with AIS-LVO.

Segmentation of the ischemic core on NCCT is challenging and suffers from high inter-expert variability, which complicates both the segmentation task itself and the definition of a gold standard. Our results therefore also have important implications for artificial intelligence approaches to detect and quantify ischemic brain injury on NCCT.

The detection of cerebral ischemia and the ischemic core differs between commonly used imaging modalities, such as MRI (DWI), NCCT, and CT perfusion, which vary in their ability to detect and localize the ischemic core. The sensitivity of NCCT for detecting cerebral ischemia may be as low as 30%28,29. These differences hamper consistent evaluation of deep learning models and their integration into clinical practice.

In order to create an optimal ground truth, prior work simulated hypodense ischemic core lesions on NCCT scans of healthy patients by co-registering ischemic core lesions from DWI studies of acute ischemic stroke patients30. Other studies have also chosen DWI lesions as ground truth and co-registered them to NCCT images from the same patient26,31. However, very few centers have large databases of patients with NCCT and DWI acquired within short time intervals, which would be needed to develop a CNN that uses the ischemic core on DWI as the ground truth. In addition, diffusion restriction is a phenomenon unique to DWI, especially in earlier time windows (\(<1\)h), when cytotoxic edema is the predominant abnormality imaged32. Hypodensity on NCCT, in contrast, is generally thought to reflect largely vasogenic edema, which normally develops \(> 1-4\)h after stroke onset33 and suggests irreversibly damaged brain tissue (ischemic core). We instead based the ground truth segmentation on the human reader with the most experience among the expert neuroradiologists. Compared with related research, we report advancements in model development (Supplementary Table S.4), and we demonstrate statistically significant non-inferiority through a comprehensive statistical analysis incorporating multiple performance metrics20.

Cell death in ischemic stroke is time-dependent and progresses on a continuous temporal scale, which makes segmentation very difficult even for experienced neuroradiologists in AIS-LVO patients. The CNN developed in this study demonstrated strong performance in the delineation of the ischemic core across multiple expert neuroradiologists, which suggests that this approach is likely to be generalizable in AIS-LVO patients. Future studies should test this hypothesis. In addition, our approach has the potential to increase the consistency and quality of stroke assessment on NCCT in the emergency setting at hospitals where expert neuroradiologists might not always be available.

This study has limitations. First, the dataset originates from the DEFUSE 3 trial, which randomized stroke patients presenting within 6-16 hours. However, to diversify the cohort, we also included non-randomized patients who did not meet the inclusion criteria (Table 1). Second, we included the manual segmentations of only three experts. Because an absolutely correct ground-truth segmentation of the ischemic core is not well defined, more experts might be necessary for a more accurate validation of the results21.

Conclusion

A CNN was non-inferior to expert neuroradiologists for the segmentation of the hypodense ischemic core on NCCT.

Methods

Study design and data

This post-hoc analysis of the DEFUSE 3 trial included 232 AIS-LVO patients with NCCT who were either enrolled in the study or screened but not enrolled5. This multi-center trial (38 U.S. centers, each with IRB approval) investigated thrombectomy eligibility for patients with acute ischemic stroke with an onset time within 6-16 hrs (https://clinicaltrials.gov/ct2/show/NCT02586415). The patient cohort includes patients who met the inclusion criteria (symptom onset within 6-16 h, anterior circulation, NIHSS \(\ge\) 6) and patients who were excluded from randomization because of exclusion criteria (no LVO, within 6h of symptom onset). Further scanning parameters and details of the patient cohort are described in the original publication of the DEFUSE 3 trial5. All patients or their legally authorized representatives provided informed consent. Institutional review board approval from the Administrative Panel on Human Subjects in Medical Research at Stanford University was obtained for this study. All methods were performed in accordance with the relevant guidelines and regulations.

Ischemic core hypodensity ground truth determination

Three experienced neuroradiologists from the USA and Belgium with 4, 4, and 9 years of clinical experience post-fellowship in diagnostic neuroradiology were instructed to outline abnormal hypodensity on the NCCT that was consistent with acute ischemic stroke within 6-16 hrs of symptom onset. Segmentation was performed with the drawing tool in Horos (Horosproject.org, version 4.0.0). Experts had the option to not segment any tissue if no abnormal hypodensity was appreciated. Experts were blinded to all imaging other than the NCCT. For detailed instructions see the original instruction sheet (Supplementary Fig. S.2).

Data preparation and partitions

The NCCT images and corresponding manual segmentation masks were resized to a common resolution of 22–56 × 512 × 512, resampled, and normalized using an existing preprocessing pipeline19. A mirrored, rigidly co-registered version of each input image was computed using SimpleITK (https://simpleitk.org/)30 to provide the model with symmetry information from the opposite hemisphere. Data augmentation was performed with the Python package “batchgenerators” (version 2.0.0) and included rotation, random cropping, gamma transformation, flipping, scaling, brightness adjustments, and elastic deformation19.
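The idea behind the mirrored input can be sketched in a few lines of NumPy: flip the volume along the left-right axis and stack it with the original as a second channel, so that each voxel can be compared with its contralateral counterpart. This is a simplified illustration under two assumptions: the array axes are ordered (z, y, x) with x as the left-right axis, and the midsagittal plane lies at the image centre. The study instead rigidly co-registered the mirrored image with SimpleITK, and that registration step is omitted here. The function names are hypothetical.

```python
import numpy as np


def mirror_left_right(volume: np.ndarray) -> np.ndarray:
    """Flip a (z, y, x) volume along x, assumed here to be the left-right axis."""
    return volume[:, :, ::-1].copy()


def two_channel_input(volume: np.ndarray) -> np.ndarray:
    """Stack an NCCT volume and its mirrored copy into a (2, z, y, x) input.

    Simplification: assumes the midsagittal plane lies at the image centre,
    so no rigid registration of the mirrored copy is performed.
    """
    return np.stack([volume, mirror_left_right(volume)], axis=0)


# Usage on a toy 1 x 2 x 4 "volume":
vol = np.arange(8, dtype=float).reshape(1, 2, 4)
x = two_channel_input(vol)
print(x.shape)     # (2, 1, 2, 4)
print(x[1, 0, 0])  # [3. 2. 1. 0.]
```

In real scans the head is rarely perfectly centred or straight, which is why a registration step matters: without it, the mirrored channel would compare each voxel with an anatomically mismatched location.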

The data were partitioned in three steps. First, the experts were divided into a training expert (expert A, ground truth) and test experts (experts B and C) according to their experience, to approximate the most accurate ground truth for the model. Second, the cohort of 232 patients was randomly partitioned into 200 training and 32 test patients. Third, the training set was further split into five folds for cross-validation, each with 160 training and 40 validation patients. Optimized model configurations were selected based on the results of fold 1 and further validated on folds 2 to 5 (Fig. 3, Table S.1). The highest-performing model from the 5-fold cross-validation, based on the Surface Dice at a tolerance of 5 mm, was then evaluated on the test set.
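The patient-level partitioning described above (232 patients into 32 test and 200 development patients, then 5 folds of 160/40) can be sketched in plain Python. The random seed and the function name are hypothetical, since the study's seed and splitting code are not reported; nnU-Net also generates its own cross-validation splits, which may differ from this one.

```python
import random


def split_patients(patient_ids, n_test=32, n_folds=5, seed=0):
    """Hold out a random test set, then split the rest into cross-validation folds.

    Returns (test_ids, folds), where folds is a list of (train_ids, val_ids) pairs.
    The seed is a hypothetical placeholder, not the one used in the study.
    """
    ids = list(patient_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    test_ids, dev_ids = ids[:n_test], ids[n_test:]
    fold_size = len(dev_ids) // n_folds
    folds = []
    for k in range(n_folds):
        start = k * fold_size
        # The last fold absorbs any remainder if len(dev_ids) is not divisible.
        stop = (k + 1) * fold_size if k < n_folds - 1 else len(dev_ids)
        val_ids = dev_ids[start:stop]
        train_ids = dev_ids[:start] + dev_ids[stop:]
        folds.append((train_ids, val_ids))
    return test_ids, folds


test_ids, folds = split_patients(range(232))
print(len(test_ids), [(len(tr), len(va)) for tr, va in folds])
```

Splitting at the patient level keeps every scan of a patient on one side of each split. Selecting the final model then amounts to taking the fold whose validation Surface Dice is highest, for example `max(range(5), key=lambda k: fold_scores[k])` with a hypothetical list `fold_scores`.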

Model architecture and training

An nnU-Net was trained on the NCCTs with the manual segmentations of training expert A as reference annotations (PyTorch 1.11.0, Python 3.8, CUDA 11.3). The model input comprised the brain NCCT together with a second NCCT of the same patient in which the ipsilateral hemisphere is replaced by a mirrored version of the contralateral hemisphere. The output of the model was a segmentation mask19. The final nnU-Net configuration includes a patch size of 28 × 256 × 256 and a spacing of (3.00, 0.45, 0.45), 7 stages with two convolutional layers per stage, leaky ReLU as the activation function, Soft Dice and Focal34 loss functions with equal weights (focal loss alpha of 0.5 and gamma of 2), a batch size of 2, a stochastic gradient descent optimizer, and He initialization (Supplementary Fig. S.1). We empirically set the number of epochs to 350. In addition, we applied further regularization techniques, namely L2 regularization and a dropout of 0.1, and used a momentum of 0.85. Data augmentation included rotation, random crop, re-scaling, elastic transformation, and flipping. Please see Supplementary Table S.4 for a detailed discussion and analysis of technical procedures.
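The combined loss can be written out explicitly. The NumPy sketch below assumes a binary (lesion vs. background) setting, reads "equal weights" as a weight of 1.0 on each term, and applies alpha to the foreground class as in Lin et al.'s focal loss. It is a forward-only illustration with hypothetical function names: nnU-Net's PyTorch implementation operates on tensors with gradients and differs in details such as the smoothing term.

```python
import numpy as np


def soft_dice_loss(probs, target, eps=1e-6):
    """1 - soft Dice between foreground probabilities and a binary mask."""
    intersection = np.sum(probs * target)
    denom = np.sum(probs) + np.sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


def focal_loss(probs, target, alpha=0.5, gamma=2.0, eps=1e-7):
    """Mean binary focal loss over all voxels: -alpha_t (1 - p_t)^gamma log(p_t)."""
    p = np.clip(probs, eps, 1.0 - eps)                    # avoid log(0)
    p_t = np.where(target == 1, p, 1.0 - p)               # probability of the true class
    alpha_t = np.where(target == 1, alpha, 1.0 - alpha)   # class weighting
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


def dice_focal_loss(probs, target, w_dice=1.0, w_focal=1.0):
    """Equally weighted sum of soft Dice loss and focal loss."""
    return w_dice * soft_dice_loss(probs, target) + w_focal * focal_loss(probs, target)
```

The two terms are complementary: the Dice term is computed over the whole mask and is therefore insensitive to how small the lesion is relative to the brain, while the focal term down-weights voxels that are already classified confidently (the factor (1 - p_t)^gamma) and concentrates the gradient on ambiguous voxels such as ill-defined lesion borders.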

Metrics

The models were evaluated using a set of volume, overlap and distance metrics (for definitions see Supplementary Table S.5):

- Volume-based metrics: volumetric similarity (VS) and absolute volume difference (AVD) [ml];
- Overlap metrics: Dice, precision, and recall; and
- Distance metrics: 95th-percentile Hausdorff distance (HD 95) [mm] and surface Dice at a tolerance of 5 mm.
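The volume and overlap metrics in this list follow from the voxel-wise true positives (TP), false positives (FP), and false negatives (FN): Dice = 2TP / (2TP + FP + FN), precision = TP / (TP + FP), recall = TP / (TP + FN), VS = 1 - |V_pred - V_ref| / (V_pred + V_ref), and AVD = |V_pred - V_ref|. A minimal NumPy sketch follows. The study's exact definitions are given in Supplementary Table S.5 and implemented in its published evaluator; the handling of empty masks below is an assumption, and the function names are hypothetical.

```python
import numpy as np


def voxel_volume_ml(spacing_mm):
    """Volume of one voxel in ml (1 ml = 1000 mm^3)."""
    return float(np.prod(spacing_mm)) / 1000.0


def overlap_and_volume_metrics(pred, ref, spacing_mm):
    """Dice, precision, recall, VS and AVD [ml] for two binary masks.

    Assumed convention: a ratio with a zero denominator is 1.0 when both
    masks are empty and 0.0 otherwise.
    """
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    tp = int(np.sum(pred & ref))
    fp = int(np.sum(pred & ~ref))
    fn = int(np.sum(~pred & ref))
    both_empty = tp + fp + fn == 0

    def ratio(num, den):
        if den == 0:
            return 1.0 if both_empty else 0.0
        return num / den

    v_pred = (tp + fp) * voxel_volume_ml(spacing_mm)
    v_ref = (tp + fn) * voxel_volume_ml(spacing_mm)
    vs = 1.0 if both_empty else 1.0 - abs(v_pred - v_ref) / (v_pred + v_ref)
    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "vs": vs,
        "avd_ml": abs(v_pred - v_ref),
    }
```

With the spacing used in this study, (3.00, 0.45, 0.45) mm, one voxel corresponds to about 0.0006 ml. A 12 ml lesion therefore spans roughly 20,000 voxels, which is why AVD is reported in ml rather than in voxels.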

The best configuration was selected based on the surface Dice at a tolerance of 5 mm.

The surface Dice at tolerance, also known as the normalized surface Dice, quantifies the agreement between the surfaces (boundaries) of the reference and predicted masks rather than their volumes. The tolerance sets the maximum distance at which a surface voxel of one mask may lie from the surface of the other mask and still be counted as a true positive. This metric is especially useful when there is more variability at the outer border than at the inner border, as is the case for ischemic stroke segmentation35,36,37. In this work, we chose a tolerance of 5 mm based on the average surface distance between experts.
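The surface Dice at tolerance and HD 95 can be illustrated with a brute-force NumPy sketch on small masks. Several simplifying assumptions apply. Surface voxels are foreground voxels with at least one background 6-neighbour. Surface points are counted per voxel, whereas Nikolov et al. weight them by surface-element area. HD 95 is taken as the 95th percentile of the pooled distances in both directions, while some toolkits use the maximum of the two directed 95th percentiles. The empty-mask convention is hypothetical. The pairwise-distance step needs memory proportional to the product of the two surface sizes, so real 512 × 512 volumes would call for distance transforms instead. The function names are hypothetical.

```python
import numpy as np


def surface_points_mm(mask, spacing_mm):
    """Physical coordinates (mm) of the surface voxels of a 3D binary mask."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    interior = padded[1:-1, 1:-1, 1:-1].copy()
    for axis in range(3):
        for shift in (-1, 1):
            # A voxel stays "interior" only if every 6-neighbour is foreground.
            interior &= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    surface = mask & ~interior
    return np.argwhere(surface) * np.asarray(spacing_mm, dtype=float)


def _min_distances(src, dst):
    """For each point in src, the Euclidean distance (mm) to the nearest point in dst."""
    diff = src[:, None, :] - dst[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)


def surface_dice_and_hd95(pred, ref, spacing_mm, tolerance_mm=5.0):
    """Return (surface Dice at tolerance, HD 95 in mm) for two binary masks."""
    sp = surface_points_mm(pred, spacing_mm)
    sr = surface_points_mm(ref, spacing_mm)
    if len(sp) == 0 or len(sr) == 0:
        both_empty = len(sp) == 0 and len(sr) == 0  # hypothetical convention
        return (1.0, 0.0) if both_empty else (0.0, float("inf"))
    d_pred_to_ref = _min_distances(sp, sr)
    d_ref_to_pred = _min_distances(sr, sp)
    within = np.sum(d_pred_to_ref <= tolerance_mm) + np.sum(d_ref_to_pred <= tolerance_mm)
    surface_dice = float(within / (len(sp) + len(sr)))
    hd95 = float(np.percentile(np.concatenate([d_pred_to_ref, d_ref_to_pred]), 95))
    return surface_dice, hd95
```

For two identical cubes, the result is (1.0, 0.0). Shifting one cube by a single 1 mm voxel still gives a surface Dice of 1.0 at a 5 mm tolerance. This illustrates why the metric tolerates small boundary disagreements, such as those between experts, that plain Dice penalizes.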

Statistical analysis

R (Version 2022.02.3) was used for statistical analysis. To evaluate how well the model generalizes to unseen data, we measured the degree to which the model segmentations on the test set are consistent with the ischemic core as a biomarker with inherent variability between experts. To this end, we compared the model segmentations with those of test experts B and C (Fig. 4), using each metric to evaluate how close the model segmentations were to the test experts.

Because the Shapiro-Wilk test rejected normality, we compared the median metric values with the one-sided Wilcoxon signed-rank test (\(\alpha\) = 0.05, n = 32). We chose the following non-inferiority margins:

- Relative metrics with values between 0 and 1: the model-expert agreement is worse than the inter-expert agreement by no more than 20% of the metric range.
- AVD: the model-expert absolute volume difference is at most 3 ml larger than the inter-expert absolute volume difference.
- HD 95: the model-expert 95th-percentile Hausdorff distance is at most 3 mm larger than the inter-expert HD 95.

We chose these margins based on the average difference in inter-expert agreement as a measure of variability (0.19 for metrics with a range of 0 to 1 and 2.53 for metrics in SI units). This identifies whether the model performance is comparable, within the normal bounds of variation, to that of experienced neuroradiologists24. Non-inferiority is therefore reached when the difference between the model-expert and inter-expert agreement is shown to be smaller than the average variability of agreement among experts.

All p-values were adjusted for the total number of statistical tests presented in the paper using the Holm-Bonferroni method. The significance threshold is p < 0.05.
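The Holm-Bonferroni adjustment is a step-down procedure. Sort the m raw p-values in ascending order, multiply the k-th smallest (0-based) by m - k, enforce monotonicity with a running maximum, and cap the result at 1. The plain-Python sketch below is intended to match the adjusted values returned by R's `p.adjust(p, method = "holm")`; the function name is hypothetical.

```python
def holm_bonferroni(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)  # adjusted p-values never decrease
        adjusted[i] = running_max
    return adjusted


adj = holm_bonferroni([0.01, 0.04, 0.03])
significant = [p < 0.05 for p in adj]  # [True, False, False]
```

Holm's procedure controls the family-wise error rate at the same level as the plain Bonferroni correction but is uniformly less conservative. In this example, the test with raw p = 0.04 would pass an unadjusted 0.05 threshold but not after adjustment.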

We report statistical analysis on the test set.

Data availability

To facilitate future studies, we have made the model evaluator tool and statistical tool freely available on GitHub (https://github.com/SophieOstmeier/UncertainSmallEmpty and https://github.com/SophieOstmeier/StrokeAnalyzer). The data set will be made available upon reasonable request. Please contact the corresponding author (Jeremy Heit).

References

Feigin, V. L. et al. Global regional and national burden of stroke and its risk factors, 1990–2019: A systematic analysis for the global burden of disease study 2019. Lancet Neurol. 20, 795–820. https://doi.org/10.1016/s1474-4422(21)00252-0 (2021).

Lakomkin, N. et al. Prevalence of large vessel occlusion in patients presenting with acute ischemic stroke: A 10-year systematic review of the literature. J. NeuroInterv. Surg. 11, 241–245. https://doi.org/10.1136/neurintsurg-2018-014239 (2019).

Campbell, B. C. et al. Endovascular thrombectomy for ischemic stroke increases disability-free survival, quality of life, and life expectancy and reduces cost. Front. Neurol. 8, 657 (2017).

Powers, W. J. et al. 2018 guidelines for the early management of patients with acute ischemic stroke: A guideline for healthcare professionals from the American Heart Association and American Stroke Association. Stroke 49, e46–e99 (2018).

Albers, G. W. et al. Thrombectomy for stroke at 6 to 16 hours with selection by perfusion imaging. N. Engl. J. Med. 378, 708–718. https://doi.org/10.1056/NEJMoa1713973 (2018).

Nogueira, R. G. et al. Thrombectomy 6 to 24 hours after stroke with a mismatch between deficit and infarct. N. Engl. J. Med. 378, 11–21. https://doi.org/10.1056/NEJMoa1706442 (2017).

Kim, Y. et al. Utilization and availability of advanced imaging in patients with acute ischemic stroke. Circ. Cardiovasc. Qual. Outcomes 14, e006989. https://doi.org/10.1161/CIRCOUTCOMES.120.006989 (2021).

McDonough, R., Ospel, J. & Goyal, M. State of the art stroke imaging: A current perspective. Can. Assoc. Radiol. J. https://doi.org/10.1177/08465371211028823 (2021).

Alexandrov, A. W. & Fassbender, K. Triage based on preclinical scores-low-cost strategy for accelerating time to thrombectomy. JAMA Neurol. 77, 681–682. https://doi.org/10.1001/jamaneurol.2020.0113 (2020).

Hacke, W. et al. Intravenous thrombolysis with recombinant tissue plasminogen activator for acute hemispheric stroke: The European Cooperative Acute Stroke Study (ECASS). JAMA 274, 1017–1025 (1995).

Jovin, T. G. et al. Thrombectomy within 8 hours after symptom onset in ischemic stroke. N. Engl. J. Med. 372, 2296–2306 (2015).

Goyal, M. et al. Endovascular thrombectomy after large-vessel ischaemic stroke: A meta-analysis of individual patient data from five randomised trials. Lancet 387, 1723–1731 (2016).

Huo, X. et al. Trial of endovascular therapy for acute ischemic stroke with large infarct. N. Engl. J. Med. 388, 1272–1283 (2023).

Sarraj, A. et al. Trial of endovascular thrombectomy for large ischemic strokes. N. Engl. J. Med. https://doi.org/10.1056/NEJMoa2214403 (2023).

Yoshimura, S. et al. Endovascular therapy for acute stroke with a large ischemic region. N. Engl. J. Med. 386, 1303–1313 (2022).

Barber, P. A., Demchuk, A. M., Zhang, J. & Buchan, A. M. Validity and reliability of a quantitative computed tomography score in predicting outcome of hyperacute stroke before thrombolytic therapy. Lancet 355, 1670–1674. https://doi.org/10.1016/S0140-6736(00)02237-6 (2000).

Schroeder, J. & Thomalla, G. A critical review of Alberta stroke program early CT score for evaluation of acute stroke imaging. Front. Neurol. https://doi.org/10.3389/fneur.2016.00245 (2017).

Hakim, A. et al. Predicting infarct core from computed tomography perfusion in acute ischemia with machine learning: Lessons from the ISLES challenge. Stroke 52, 2328–2337 (2021).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211. https://doi.org/10.1038/s41592-020-01008-z (2021).

El-Hariri, H. et al. Evaluating nnU-Net for early ischemic change segmentation on non-contrast computed tomography in patients with acute ischemic stroke. Comput. Biol. Med. 141, 105033 (2022).

Goyal, M. et al. Challenging the ischemic core concept in acute ischemic stroke imaging. Stroke 51, 3147–3155 (2020).

Lampert, T. A., Stumpf, A. & Gançarski, P. An empirical study into annotator agreement, ground truth estimation, and algorithm evaluation. IEEE Trans. Image Process. 25, 2557–2572. https://doi.org/10.1109/TIP.2016.2544703 (2016).

Warfield, S. K., Zou, K. H. & Wells, W. M. Validation of image segmentation by estimating rater bias and variance. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 366, 2361–2375 (2008).

Hirsch, L. et al. Radiologist-level performance by using deep learning for segmentation of breast cancers on MRI scans. Radiol. Artif. Intell. 4, e200231 (2021).

Liu, C.-F. et al. Deep learning-based detection and segmentation of diffusion abnormalities in acute ischemic stroke. Commun. Med. 1, 61. https://doi.org/10.1038/s43856-021-00062-8 (2021).

Kuang, H., Menon, B. K., Sohn, S. I. L. & Qiu, W. EIS-Net: Segmenting early infarct and scoring ASPECTS simultaneously on non-contrast CT of patients with acute ischemic stroke. Med. Image Anal. 70, 101984. https://doi.org/10.1016/j.media.2021.101984 (2021).

Havaei, M. et al. Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31 (2017).

Cereda, C. W. et al. A benchmarking tool to evaluate computer tomography perfusion infarct core predictions against DWI standard. J. Cereb. Blood Flow Metab. 36, 1780–1789 (2016).

Lansberg, M. G., Albers, G. W., Beaulieu, C. & Marks, M. P. Comparison of diffusion-weighted MRI and CT in acute stroke. Neurology 54, 1557–1561 (2000).

Christensen, S. et al. Optimizing deep learning algorithms for segmentation of acute infarcts on non-contrast material-enhanced CT scans of the brain using simulated lesions. Radiol. Artif. Intell. https://doi.org/10.1148/ryai.2021200127 (2021).

Qiu, W. et al. Machine learning for detecting early infarction in acute stroke with non-contrast-enhanced CT. Radiology 294, 638–644 (2020).

Albers, G. W. Diffusion-weighted MRI for evaluation of acute stroke. Neurology 51, S47–S49 (1998).

Almandoz, J. E. D., Pomerantz, S. R., González, R. G. & Lev, M. H. Imaging of acute ischemic stroke: Unenhanced computed tomography. In: Acute Ischemic Stroke, 43–56 (2011).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision, 2980–2988 (2017).

Nikolov, S. et al. Deep learning to achieve clinically applicable segmentation of head and neck anatomy for radiotherapy. Preprint at http://arxiv.org/abs/1809.04430 (2018).

Maier-Hein, L. et al. Metrics reloaded: Pitfalls and recommendations for image analysis validation. Preprint at http://arxiv.org/abs/2206.01653 (2022).

Ostmeier, S. et al. USE-evaluator: Performance metrics for medical image segmentation models supervised by uncertain, small or empty reference annotations in neuroimaging. Med. Image Anal. 90, 102927 (2023).

Author information


Contributions

Guarantors of the integrity of the entire study, S.O., J.J.H.; study concepts/study design or data acquisition or data analysis/interpretation, all authors; manuscript drafting or manuscript revision for important intellectual content, all authors; approval of final version of submitted manuscript, all authors; literature research, S.O., J.J.H.; clinical studies, S.O., J.J.H.; statistical analysis, S.O., B.A.; and manuscript editing, S.O., B.A., S.C., J.L., G.Z., J.J.H.


Ethics declarations

Competing interests

Sophie Ostmeier: none.
Brian Axelrod: none.
Benjamin F.J. Verhaaren: none.
Soren Christensen: none.
Abdelkader Mahammedi: none.
Yongkai Liu: none.
Benjamin Pulli: none.
Li-Jia Li: none.
Greg Zaharchuk: co-founder of and equity in Subtle Medical; funding support from GE Healthcare; consultant for Biogen.
Jeremy J. Heit: consultant for Medtronic and MicroVention; member of the medical and scientific advisory board for iSchemaView.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ostmeier, S., Axelrod, B., Verhaaren, B.F.J. et al. Non-inferiority of deep learning ischemic stroke segmentation on non-contrast CT within 16-hours compared to expert neuroradiologists. Sci Rep 13, 16153 (2023). https://doi.org/10.1038/s41598-023-42961-x


DOI: https://doi.org/10.1038/s41598-023-42961-x

