Abstract

Binary code similarity analysis is widely used in the field of vulnerability search where source code may not be available to detect whether two binary functions are similar or not. Based on deep learning and natural processing techniques, several approaches have been proposed to perform cross-platform binary code similarity analysis using control flow graphs. However, existing schemes suffer from the shortcomings of large differences in instruction syntaxes across different target platforms, inability to align control flow graph nodes, and less introduction of high-level semantics of stability, which pose challenges for identifying similar computations between binary functions of different platforms generated from the same source code. We argue that extracting stable, platform-independent semantics can improve model accuracy, and a cross-platform binary function similarity comparison model N_Match is proposed. The model elevates different platform instructions to the same semantic space to shield their underlying platform instruction differences, uses graph embedding technology to learn the stability semantics of neighbors, extracts high-level knowledge of naming function to alleviate the differences brought about by cross-platform and cross-optimization levels, and combines the stable graph structure as well as the stable, platform-independent API knowledge of naming function to represent the final semantics of functions. The experimental results show that the model accuracy of N_Match outperforms the baseline model in terms of cross-platform, cross-optimization level, and industrial scenarios. In the vulnerability search experiment, N_Match significantly improves hit@N, the mAP exceeds the current graph embedding model by 66%. In addition, we also give several interesting observations from the experiments. The code and model are publicly available at https://www.github.com/CSecurityZhongYuan/Binary-Name_Match.

Similar content being viewed by others

Introduction

Binary code similarity analysis is used to determine whether binary functions compiled from the same source code are similar or different. Due to code reuse, the same source code can be compiled with cross-optimization levels -O[0, 1, 2, 3] to generate binary code of cross-platform such as X86 (a standardized numbering abbreviation for a family of intel general-purpose computers), ARM (Advanced RISC Machine, ARM), MIPS (Million Instructions Per Second, MIPS), the same binary code will appear in multiple released programs, or even in multiple parts of a program. Once a bug is found in a binary code, the similarity analysis techniques can be used to find the same bug code or similar bug code, so as to quickly and timely find the risk or vulnerability1. Since cross-platform code syntax varies greatly, such as opcodes, operands, function calls, and memory accesses, semantic similarity is usually computed on the comparison, and the best practice is to compare the similarity between the known binary code semantic and the unknown binary code semantic. David and Yahav2 calculates the number of identical strand code fragments in the two sets as the similarity. Huang et al.3 computes the similarity between the longest execution paths on the CFG (Control Flow Graph, CFG).

Recent research results [125] pointed out and verified that similarity results obtained by utilizing graph neural networks based on CFG perform best in binary code similarity comparison. The idea is that similar codes with less structural variation, structural stability, and semantic information from graphs, therefore, many scholars have utilized the stability of the CFG structure and the graph-carrying annotation semantics to represent binary code semantics. Xu et al.4 proposes a Gemini scheme based on manual feature extraction to compute binary function semantics with the help of graph neural networks by manually analyzing statistical and platform-related basic block features. The UFEBS (Unsupervised Features Extraction for Binary Similarity, UFEBS) scheme proposed in5 utilizes RNN (recurrent neural network, RNN) to compute the basic block semantics and converts CFGs into graph embeddings with the help of graph neural networks. Alrabaee et al.6 incorporate the properties of graphs to propose a semantically integrated graph containing CFG, call graph, and register flow graph. Qiu et al.7 incorporates inline functions and library functions called by CFG to propose Execution Dependence Graph. Zhang et al.8 use Reductive Instruction Dependent Graph.

Problem definition

Given two binary functions from different platforms, our goal is to determine whether the two functions come from the same source code. Despite deep learning’s ability to capture rich code features and stable graph structure information, this problem still faces three technical challenges.

Challenge 1: Instruction difference

The instruction difference of cross-platform varies greatly. Not only there is a huge syntactic gap between the instruction sets of binary generated by compiling the same source code for different platforms, but there are also differences in the real-value features extracted by manual means such as Gemini (“Model accuracy”). Different optimization options for the same platform can also cause instruction differences.

Challenge 2: CFG difference

Taking the EVP-PKEY-decrypt() function in OpenSSL (Open Secure Sockets Layer, OpenSSL) as an example, which carries the CVE-2021-3711 vulnerability. It is found that the function using IDA Pro analyzed carries 15 basic blocks on ARM platforms, 16 basic blocks on X86 platforms, and 13 basic blocks on MIPS platforms. Not only the differences in the number of basic blocks in the CFG, but the graph neural network that updates the states of nodes through neighboring nodes also affects the similarity comparison results, resulting in a higher FPR (False Positive Rate, FPR) when vulnerability matching (“Vulnerability search”).

Challenge 3: Little knowledge coreference

Much high-level knowledge of functions that are stable is not considered. Current solutions generate function embedding from low-level instructions and graph structure perspective, without considering high-level semantics that do not change with the platform, such as API (Application Programming Interface) names carried by binary functions.

Insights

It is well known that software is a new tool for the perpetuation and exchange of human knowledge. Similar to a human written language describing a work, software is created by humans with specific programming languages and knowledge. The knowledge contained in software is as limited as the knowledge described by natural language to reality constraints9 and practical exigencies, so software knowledge is stable, repeatable, and predictable10. For debugging and calling convenience, experienced software developers often give functions a name that represents their meaning, such as SendData may be on behalf of the function to achieve sending data. Software developers usually call an API or an implemented function within software code to speed up software iteration. These naming functions which are linguistically empowered by the developer’s human working experience on the software body, express the high-level semantics of the function. For binary code that contains a lot of low-level semantics such as opcodes, registers, memory addresses, these naming functions more clearly express the high-level semantics of a binary code.

Binary functions are usually stripped, and the debug information containing function names is removed entirely. To assign meaningful names for stripped binary code, David et al.11 proposed the concept of ACSG (Augmented Call Sites Graph, ACSG), each node \({callsite = (st_1,\ldots ,st_k,arg_1,\ldots ,arg_s)}\) in the ACSG is an API called by the binary code, where st is a subtoken sliced by API name, and arg is the parameter used by the API, as shown in Fig. 1a. According to11, the sequence of \({(callsite_1,\ldots ,callsite_n)}\) carried on binary code can represent names of binary functions. However, the ACSG has two shortcomings, one is inaccurate parameter recognition such as some parameter values are unkonwn (Fig. 1a), and the other is that it only supports the amd64 platform.

Inspired by the ACSG proposed by11, we refer to the API sequences that can be extracted from the binary code as naming function (Fig. 1b). Compared to the CFG, API is platform-independent and is not changed by the compiler or optimization, so the API sequence does not change with cross-platform, cross-compiler, and cross-optimization. Highly stable advanced knowledge apply to binary code similarity has several advantages. The more stable the naming functions, the smaller the distance between functions that come from the original code. The greater the variation in the naming functions, the greater the comparison distance between functions. At the same time, these naming function helps to explain clearly why they are similar, alleviating the current lack of deep learning interpretability. Lastly, compared to low-level assembly instructions, the naming function can deal with code obfuscation11.

Our approach

Two binary functions from the same source code have small high-level semantic differences, despite the large differences in the syntax of the low-level instructions expressed across platforms. Based on this natural observation, we propose a new binary code similarity model N_Match, which shields the underlying instruction differences by the common vector space, learns the stability semantics of neighbors using graph embedding techniques, extracts naming function to shield the differences arising from cross-platform and cross-optimization levels, and is well suited to the three challenges mentioned above.

(a) ACSG using callsite sequence as naming function in11; (b) N_Match using API sequence as naming function.

Common vector space for cross-platform instructions. Embedding refers to mapping an input space object x to another output space object y, making comparison result easy to compute. Since word embedding techniques automatically learn a distributed representation of a word form dataset, we trained a word embedding model x2v that maps instructions from different platforms to the same space representation, which shields the instruction differences of cross-platform and facilitates binary code similarity computation (“Common vector space embedding”) to address the challenge 1.

Graph embedding

The structural information of graph stabilization helps to enhance the similarity. Based on the common vector space embedding, each instruction in the basic block is regarded as a word and the basic block as a sentence, and the basic block embedding is generated using the sequence representation learning with self-attention mechanism (“Basic block embedding”). Then basic block embedding and adjacency matrix of CFG are fed into the graph neural network, and finally, CFG generates a graph embedding (“Graph embedding”) to address the challenge 2.

Naming function embedding

An API sequence of binary code is used as naming function. Each API is sliced to obtain subtoken sequence, and a subtoken is treated as a word, and the naming function is treated as a sentence, then using the sequence representation learning with a self-attention mechanism to generate naming function embedding (“Naming function embedding”). As the naming function does not change with the platform, it can shield the differences between the underlying different platforms to address the challenge 3 and significantly improve the accuracy of the code similarity metric (“Cross-platform comparison between the same optimization levels5.5”), and the experimental results show that the vulnerability query mAP(mean Average Precision) of N_Match is higher than the previous solutions such as Gemini4 and UFEBS5 (“Vulnerability search”).

Main contribution

This paper makes the following contributions.

-

1.

We propose a new binary code similarity model N_Match. The model combines graph embedding and naming function embedding, stable naming function semantics shields the differences of cross-platform and significantly improve the code similarity results.

-

2.

We propose a naming function embedding method and build a pre-trained k2v dictionary. To adequately represent naming function embedding, we extract simple paths on the CFG and slice the API names carried on the paths as sentences. In this way not only can each subtoken learn the contextual semantics of the sequence, but also the sequence of subtokens on the simple path can reflect the dynamic properties of the function at a given execution moment, and can fully express the function of binary code, which significantly improved comparative accuracy.

-

3.

We conduct several experimental tasks, and the results show that the model accuracy of N_Match outperformed the current model in terms of cross-platform, cross-optimization levels, and vulnerability search. The code and model are publicly available at https://www.github.com/CSecurityZhongYuan/Binary-Name_Match.

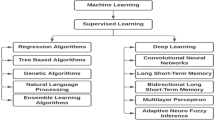

Background

In this section, we provide the necessary background for N_Match, including word embedding models, graph neural networks, recurrent neural networks, and self-attention mechanisms.

Word embeddings

Word2Vec is a neural networks language model proposed by Mikolov12, which also is a word embedding technique capable of converting a word into a vector representation. Word2Vec obtains word embedding \(\vec {w}\) based on the context context(w) of the current word, so the model training goal is to maximize p(\(w_i\) \(\mid \)context(\(w_i\))). Word2vec has CBOW and Skip-gram word representation strategies, in which CBOW predicts the current word based on context, and Skip-gram predicts the context based on the current word. Since Word2Vec can map words to the common vector space and the distance between word vectors reveals the semantic relationship, the joint deep learning model can explore the potential relationship between sentences, which is widely used in the field of natural language processing.

Graph neural networks

A binary function can be represented by a directed CFG = (V, E) equivalently, where V represents a set of vertices of basic blocks in the CFG, and edge (u,v)\(\in \)E denotes the TRUE-FALSE relationship between vertex u and vertex v. We do not distinguish such types of edges but treat all edges as simply a temporal sequential call relationship.

The graph neural network represented by Structure2Vec13 implements the update of the node v itself based on \(N_{(v)}\), where \(N_{(v)}\) representing the set of neighbors of node v. In each round of propagation operation, the network generates a new node representation based on the convergence of its own node features \(\vec {h}_v\) and neighbors features \(\vec {N_{(v)}}\). As shown in Fig. 2a, green nodes represent the neighbors of red nodes, and the red node feature value in the (k+1)-th round is a weighted average of red node feature value and green nodes feature values in the k-th round. Finally, after k rounds, all node features are aggregated as the final graph embedding result \(G_{out}\).

Recurrent neural networks

The RNN implements a sequence of input data to compute a fixed-length vector recursively. The input (\(x_1,\ldots ,x_n\)) represent a sentence, where \(x_i\) denotes i-th word in the sentence. As RNN requires a fixed sentence length n, long sentences over n need to be truncated and sentences not exceeding n need to be padded. To generate the sentence learning representation, RNN needs to obtain each word embedding from the pre-trained word embedding dictionary \(E^{d}_V\) via the embedding operation defined in (1), where d is word embedding size and V is the total number of words in the dictionary. Then a series of RNN14 units is used to encode the hidden states (\(h_1,\ldots ,h_n\)) .

The basic structure of RNN is shown in Fig. 2b, which consists of an initial state \(h_0\), an input time series \(x_t\) and an output time series \(h_t\). The final hidden state \(h_n\) is a sequence embedding result. When a sentence is received, \(h_n\) theoretically contains the meaning of a sentence. The LSTM14 (Long Short Term Memory, LSTM) model solves the problem of RNN short-term memory by adding a gates mechanism, allowing RNN to really make effective use of long-term information. An LSTM Cell at time t is shown in Fig. 2c, including the input data \(x_t\) at time t, the cell state \(C_t\), the hidden layer state \(h_t\), the forgetting gate \(f_t\), the memory gate \(i_t\) and the output gate \(o_t\). The implementation of each hidden state is to pass the remaining information obtained in the forgotten cell state, remember the new information, and compute the above information together with the current time input to generate the hidden layer state.

Self-attention mechanisms

The attention mechanism was first proposed in [111], followed by [112] which applies the attention mechanism to the field of NLP. Our implementation uses a self-attentive mechanism proposed in15. Initializes randomly a vector as the query vector and performs a similarity calculation with each word in a sentence, then uses softmax to generate the attention weight. A weighted summation of the hidden states (\(h_1,\ldots ,h_n\)) is performed and the result is the output of the attention mechanism.

Neural networks can be well integrated with attention mechanisms that focus on the key information. During the basic block embedding process, the attention mechanism captures important semantic features of the basic block by scoring to focus attention on important instructions.

Model overview

This section focuses on the overall framework of the N_Match model and the model evaluation criteria.

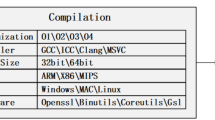

Framework

For clarity of interpretation, we summarize and give all the notations used in this paper in Table 1. Meanwhile, the relationships between instruction, basic block, function respectively, and embedding learning are described in Table 1. Let us first describe the similarity problem for two cross-platform binary functions. Two binary functions \(F_1\) and \(F_2\) are similar16 meaning that the results \(F^s_1\) and \(F^s_2\) generated by compiling the same original source s are similar, noted as \(F_1\simeq F_2\) . The compiler c (such as GCC, Clang) completes the conversion from source code s to binary function F. During the conversion process compiler c can be compiled to generate binary code for different platforms (e.g. X86, ARM, MIPS) and can carry different compilation levels -O[0,1,2,3]. In this way, a source code s can generate 12 binary functions on 3 platforms and 4 optimization levels.

We use the embedding model architecture to represent a binary function. As shown in Fig. 3, the overall framework of the model consists of naming function embedding and graph embedding. For a given binary function, naming function embedding online component and graph embeddings online component need to utilize pre-trained models offline component as dictionaries.

Graph embedding

To complete the process of converting a CFG into a graph embedding, it is first necessary to obtain the corresponding instruction vector by looking up the x2v dictionary (“Common vector space embedding”). Then utilizes the LSTM with a self-attention mechanism to complete the basic block embedding (“Basic block embedding”), and its embedding result is used as the initialized feature vector of the graph node, finally Structure2Vec is used to generate a graph embedding \(\vec {F^g}\) based on basic block embedding and adjacency matrix (“Graph embedding”).

Naming function embedding

Based on a pre-trained k2v dictionary, an LSTM carrying a self-attentive mechanism is used, which converts a naming function sequence into a naming function embedding \(\vec {F^k}\) (“Naming function embedding”). The LSTM is chosen for its ability to capture the long sequences of subtokens composing naming function, and the self-attentiveness mechanism is chosen both for its ability to capture the most important naming semantics. Our experiments have confirmed that these mechanisms can significantly improve performance.

The graph embedding \(\vec {F^g}\) and the naming function embedding \(\vec {F^k}\) are put together to represent the binary function embedding result \(\vec {F_{final}}\) defined in (2)

Evaluation criteria

Once a function F is represented by an embedding \(\vec {F}\) , the similarity between two binary functions can be converted into a comparison between vectors. We say \(F_1\simeq F_2\) is similar if and only if \(\vec {F_1}\simeq \vec {F_2}\) is similar. Thus, the key to binary code similarity is to find a suitable model \(\Psi \) to transform a binary function into a vector. Given the binary functions \(F_1^{s1}\),\(F_2^{s1}\)and \(F_3^{s2}\), cosine is used as the similarity measure such that \(cosine(\Psi (F_1^{s1}),\Psi (F_2^{s1}))\simeq 1\), and \(cosine(\Psi (F_1^{s1}),\Psi (F_3^{s2})) \simeq -1 \).

To generate the training dataset needed for model \(\Psi \), we define two binary functions compiled from the same source code as similar and set label = +1 indicates they are similar, otherwise set label = − 1. During each round of training, the model parameters are adjusted and the \(\Psi \) is updated continuously so that distance is minimized defined in (3), where \(\varepsilon = cosine(\Psi (F_1),\Psi (F_2))\). The smaller the distance, the more accurate the \(\Psi \) prediction, and vice versa. Similar to SAFE and UFEBS, accuracy was used as a model evaluation metric

To assess the quality of reproduction of the model \(\Psi \) transformation, the hits@N evaluation metric is introduced as search precision of binary functions cross-platform and cross-optimization levels. In the binary code search scenario, the search results are a ranked list of the functions with the highest similarity. Assuming that the total number of binary functions from the same source code is RightTotal. For N results returned by a query, if there is a result and query that are both compiled from the same source code, add 1 to RightCount. The result of comparing RightCount and RightTotal is hits@N defined in (4)

Take the vulnerability search as an example, suppose the source code carrying the vulnerability is compiled to generate 12 binary functions, using A2 as a query, after manual verification in the returned results top50, the RightCount value is 8, so the hits@50 = 66.67%. Therefore, for the same N, higher hits@N means better search results and search results obtained by artificial intelligence technology are more reliable.

The mAP (mean Average Precision) defined in (5) is introduced to evaluate the model’s average prediction results for binary functions

where q is the q-th prediction number, Q is the total number of predictions, and \(H_n(q)\) is the hits@N precision of the q-th prediction result.

N_Match implement

This section focuses on N_Match model implementations, respectively the common vector space embedding for cross-platform instructions (“Common vector space embedding”), basic block embedding (“Basic block embedding”), graph embedding (“Graph embedding”), naming function embedding (“Naming function embedding”) and Siamese network model (“Siamese network”*).

Common vector space embedding

Different platform binary functions compiled from the same source code show that low-level assembly instruction syntax features heterogeneous and high-level semantic correlation, in other words, they are completely different in syntax, but express the same semantics. To solve the similarity comparison problem, we pre-trained a x2v language model that maps different platform instructions to the common vector space. After lifting to the common vector space, the meanings represented by each instruction dimension are the same, so the embedding results obtained by the deep learning model are comparable and trustworthy.

Instructions consist of opcodes and operands. Due to the diversity of operands such as offset addresses and immediate numbers, not only does OOV (Out Of Vocabulary) occurs in the pre-trained language model but also degrades the quality of embedding if they are not normalized. Thus we do not consider the original instruction as a word, but a normalized representation of the instruction as a word using Algorithm 1.

Specifically, similar to5, the platform type \(i\_type\in \{A\_; X\_; M\_\}\) is first identified. Secondly, base address of the instruction is replaced by the special symbol MEM, e.g. an X86 instruction mov eax,[0x3456789] is replaced by X_mov_eax,_MEM. Immediate numbers over a certain range are replaced by the symbol HIMM, e.g. an ARM instruction b 0x4018c0 is replaced by A_b_HIMM. Immediate numbers in a certain range remain unchanged, e.g. a MIPS instruction daddiu t0, zero, 1 is replaced by M_daddiu_t0,_zero,_1. Finally, one unknown instruction is introduced for each instruction set, namely A_UNK, X_UNK, and M_UNK.

The input of Algorithm 1 is a basic block b, whose length is \(L^b\) and the platform type of instruction is i_type. Extract the opcode of each instruction (line 2), then traverse all the operands of instruction (line 3) and determine the operand type, if the operand type is immediate and above threshold (lines 4–6) replace it with the symbol HIMM. If the operand type is a base address then the symbol is replaced by MEM(lines 7–9). Finally, the instruction platform type, opcode, and operand are concatenated together and returned (lines 11–13).

Basic block embedding

Before completing the binary function graph embedding, the basic block nodes in the graph need to transform the feature vectors, and this work exists in the Gemini and UFEBS schemes as well. Since LSTM is more adapted to sequential data and has been proved in16 to have excellent results in basic block embedding, and also the attention mechanism can capture the important instructions of the basic block, we use LSTM carrying the attention mechanism to complete the basic block embedding. The basic block embedding results are used as feature vectors of nodes in the graph to provide node initialization for graph embedding. Similar implementations are included in previous works17,18. The basic block embedding is specified in Algorithm 2.

Lines 1–5, the basic blocks whose length exceeds seq_len are truncated, otherwise are padded. Next, each instruction embedding is looked up from the same space semantic x2v dictionary to generate a sequence of instruction embedding \((\vec x_1,\vec x_2,\ldots ,\vec x_n)\) (lines 6–8), then fed into the LSTM to obtain the hidden state \( H = (\vec h_1,\vec h_2,\ldots ,\vec h_n)\) of instruction (lines 9–10), where H is a \(seq\_len\times n\) matrix, n is the vector size of the hidden layer.

Subsequently, the self-attention weight matrix att is calculated (line 11). A fully connected layer fc1 is operated on after the transpose to achieve a linear transformation from one feature space to another, which can improve the classification effect, and then the attention is calculated using a two-layer neural network based on each instruction position. where \(W_1\) is a \(h \times h\) matrix, h is the dense size, and \(W_2\) is another \({h\times 1}\) matrix.

Finally, the weight matrix att is multiplied with H to obtain the basic block embedding and mapped to another feature space via a fully connected network fc2 (line 12), providing an initialize node feature representation for the graph embedding.

Graph embedding

Similar to the Gemini and UFEBS, based on the Structure2vec graph model inference algorithm, we update node representations with the aggregation results of neighborhood features. After K rounds of propagation, each graph node will generate a new real value vector that takes into account both graph characteristics and long-range interactions between nodes. Finally, we aggregate the embedding results of all graph nodes as the graph embedding. The CFG graph embedding is specified in Algorithm 3.

Make the number of basic blocks in the CFG equal to max_nodes by truncating or padding (lines 1–5). Lines 6–7 indicate initialization of nodes and message exchange, where node is a \(max\_nodes \times h\) matrix and \(W_{g1}\) is a \(h \times o\) weight matrix, h is dense size and o is graph size, adj is a \(max\_nodes \times max\_nodes\) matrix. The multiplication of adj and A is equivalent to a message exchange to achieve aggregation of neighboring nodes and represent it as \(A_{adj}\), however, \(A_{adj}\) does not contain the nodes themselves and does not implement node updates.

Lines 10–12 using the leaky_relu function to activate and map it to another semantic space by adding a T layer neural network. A variant of the Relu activation function, leaky_relu, is introduced here because the neuron cell cannot update the parameters when the Relu input is negative. In our implementation, T=2 is set.

Lines 14–15 update the nodes and message exchange. Neighboring feature B and node feature A are updated by an additive operation to generate a new node representation and a new message exchange matrix which is subsequently assigned to B for the next iteration (line 9).

After K iterative operations, the basic block embedding is aggregated to obtain the graph embedding \( \vec {F}^g \)(line 17), which is mapped to another feature space via a fully connected network to facilitate the code similarity calculation.

Naming function embedding

Inspired by11, we sequentially identify, extract and slice the API name carried on the binary code in linear address order to form a sequence of subtoken, then fed into an LSTM neural network with a self-attention mechanism to generate a naming function embedding. A binary naming function embedding is described in Algorithm 4.

Lines 1–7 traverse all the basic blocks on the CFG, extracting the API or called function name carried by the call instruction (line 3) and representing the result as name. If the call instruction does not carry any naming information, it is indicated by the special symbol noapi, ensuring that any binary function carries a name. The longer the naming function the more knowledge it carries. However, if a long name is treated as one word, OOV problems may occur during pre-training. So longer names are sliced (line 4) to produce the subtoken sequence (line 5).

For experienced software engineers, APIs or defined function names usually have certain rules such as camelcase or snake. Since the rules are relatively clear, we do not adopt the BPE (Byte Pair Encoding)19 used in the20, but analyze these rules to slice the longer name into multiple subtokens. Specifically, names satisfying the camelcase rule are sliced according to capital letters, e.g. flagWithResult is sliced into flag, with and result, and the snake rule are sliced according to underscores, e.g. Set_Flag_With_Result is sliced into set, flag, with and result. Finally, the subtoken sequence is fed into LSTM carrying the self-attention mechanism to generate naming function embedding (lines 8–12).

Siamese network

Siamese Network21 are widely used in binary code similarity computation4,5,16. We concatenate graph embedding and naming function embedding together as final binary function embedding, formally represented as \( \vec {F}_{final} = \vec {F}^g \bigoplus \vec {F}^k\). Then, Siamese Network architecture sharing the same network parameters and weights is placed on top of two binary functions embedding (Fig. 4).

First, for a given pair of functions \(F^1\) and \(F^1\) to be compared, the function embedding \(\vec {F}_{final}^1\) and \(\vec {F}_{final}^2\) are obtained using N_Match. Then similarity between function embedding is calculated and defined in (6). The \(W_{g1},W_a, W_b\) and \(W_j\) model parameters are continuously optimized by the stochastic gradient descent algorithm to minimize objective functions defined in (7)

Experiment and evaluation

This section evaluates and analyses the results of N_Match against the baseline model in terms of accuracy, cross-platform, cross-optimization, vulnerability detection, and real scenario search, and some interesting observations are given based on the study of binary code similarity representation learning.

Details

We implement N_Match with PyTorch which consists of data preprocessing, language pre-training models, and deep learning models.

Data preprocessing

We get the dataset in two ways. One is to download open-source software and compile it using a compiler with the -g option. The second is to use a publicly available binary dataset. Dataset preprocessing is performed using Radare2 to extract instructions and naming functions respectively. Pre-processing of the data showed large differences between the binary functions in terms of the number of basic blocks, the number of instructions, and the number of subtokens. Taking the OpenSSL dataset statistics as an example, excluding functions introduced by the compilation process in 610 binaries based on DWARF, it was found that on average each binary file contains about 8 functions, each function contains 8 basic blocks, with a maximum of 289 basic blocks, and basic blocks consist of 6 instructions, with a maximum of 5229 instructions. In terms of naming function, get an average of 29 subtokens per function, with a maximum of 807 subtokens.

Pre-training model

The language pre-training model uses the Word2Vec technique in gensim, including the x2v instruction pre-training model and the k2v naming function pre-training model.

-

1.

x2v Since the Skip-gram strategy outperforms the CBOW strategy in terms of accuracy and low-frequency words, we generate instruction embedding vectors based on Skip-gram’s Word2vec model for datasets from three platforms (ARM, X86, MIPS), trained with the same configuration parameters. We make use of the dataset provided in the22 and an i2v dictionary16 containing ARM and X86 instructions provided in the5. The 32-bit and 64-bit binaries on different platforms with different compilers and different optimization levels were compiled and generated, using the Radare2 tool to form the three datasets. The parameters of the model are as follows: frequency is 8, window size is 8, and word embedding size is 100. Lastly, the dictionaries from the three models are integrated to obtain a final x2v dictionary with a capacity of 696,779 \(\times \) 100.

-

2.

k2v Similar to x2v, Word2vec was used to generate a k2v dictionary. The dataset uses 464 binary files provided in the11, and function simple path extraction is performed using Algorithm 4 to generate a dataset containing 84 million lines fed into the Word2vec model for training. The parameters of the model are as follows: subtoken embedding size is 96, the window size is 5, and frequency is 5. Finally, a k2v dictionary containing 8756 \(\times \) 96 subtokens is obtained. The original naming function is seen as set A, and the subtoken is seen as set B. Statistical analysis of the dataset shows that the ratio of the number of elements in set A to set B is 36.3%. The reason for the decrease in proportion is that the snake and camelcase semantics are made up of subtoken that can reduce the dictionary size.

Deep learning model

N_Match deep learning model using PyTorch framework. Same to UFEBS5, the instruction length within the basic block \(L^b = 50\), the number of basic blocks \(L^g = 150\), the learning rate is 0.001, the optimizer is Adam, the loss function is MSE (Mean Square Error). The graph embedding and function named embedding size are 64, choosing the embedding size to be 64 is a good trade-off between the performance and efficiency4,5, so the final function embedding size is 128. In the Structure2vec model, the iterative number of rounds \(K = 2\), a number of layers \(T = 2\), and a single layer LSTM cell is used for the basic block embedding and naming function embedding.

Our experiments are conducted on the ubuntu16 operating system with two Intel Xeon E5-2650v4 CPUs (28 cores in total ) running at 2.20 GHz, 128 GB memory, and a Tesla P100 GPU card. During both training and evaluation, only 1 GPU card was used.

Baseline

Several previous works have been proposed for binary code similarity cross-platform search problems, such as17,23. Since Gemini4 and UFEBS5 achieve cross-platform similarity with the help of Structure2vec graph neural networks, which outperform other solutions in terms of accuracy and efficiency. Therefore, Gemini and UFEBS are used as baselines for model evaluation and comparison with N_Match. Our N_Match scheme is also compared with SAFE16 due to the inherently linear address sequence of binary, which it is more convenient to use RNN for function embedding.

Gemini4 uses a manual approach to extract basic block features which is a supervised feature extraction scheme. Each basic block is represented as a numeric feature vector. All the basic block features and an adjacency matrix of CFG are then fed into the Structure2vec to obtain the graph embedding. As the basic block feature is not modified by the training procedure of the neural network, it cannot be trained.

UFEBS5 uses Word2Vec to train instruction pre-training models for both X86 and ARM platforms respectively which is an unsupervised feature extraction scheme. GRU units carrying a self-attention mechanism are used to generate the basic block embedding. Subsequently, the basic block embedding and the adjacency matrix of CFG are fed into the Structure2vec to obtain the graph embedding.

SAFE16 uses the pre-trained i2v model and bidirectional GRU to generate a function embedding, then fed into a Siamese network with shared weights for model training. SAFE is also an unsupervised feature extraction scheme.

As UFEBS and SAFE utilize i2v to achieve similar computation between ARM and X86 only, we extend i2v to become x2v as a pre-trained model so that it supports MIPS, ARM, and X86.

DataSets

For descriptive convenience, the symbol Pn is introduced to denote a binary function at a certain platform with optimization level, where \(P \in \{X, A, M\}\) represents the platform environment on which the binary function runs, and n represents the optimization level, e.g. X3 represents the binary function generated using optimization level -O3 for X86 platform.

Model train datasets

Similar to the dataset used by Gemini and UFEBS, DataSet_A and DataSet_B are used for binary code similarity model training. Both DataSet_A and DataSet_B are compiled using the GCC compiler to generate binaries for different platforms, carrying the -O[0,1,2,3] optimization level. The training set, validation set, and test set are generated in the ratio of 80%:10%:10%.

DataSet_A: Compile the source code OpenSSL1.1 for two versions (k and m) to generate binary functions for X86, ARM, and MIPS platforms, resulting in a total of 730,458 pairs of functions.

DataSet_B: We compiled the open source code OpenSSL1.1.f to generate both X86 and ARM platforms. Here, we collect only functions with more than 5 basic blocks. In total, DataSet_B contains 126,786 pairs of functions.

Model evaluation datasets

DataSet_I, DataSet_II, DataSet_III, and DataSet_IV evaluate model accuracy at a granular level for cross-platforms and cross-optimization levels. Datasets are obtained from the22, including not only some files used by the training dataset, but also single file, individual software, and entire coreutils kernels program. The datasets are all generated binaries using the GCC8.2 compiler and carrying -O[0, 1, 2, 3] optimization levels for 64-bit X86, ARM, and MIPS platforms.

DataSet_I: This dataset is compiled for only one dir component of coreutils6.7, resulting in a total of 12 binaries.

DataSet_II: This dataset compiles the entire open source software coreutils6.7 consisting of 94 components such as dir and ls, resulting in a total of 1128 binaries.

DataSet_III: The dataset compiled by the open source software busybox1.21, resulting in a total of 12 binaries.

DataSet_IV: The dataset compiled by the open source software libssl, resulting in a total of 12 binaries.

Function search database

DataSet_V is a function search database for evaluating model search performance. The database is composed of which, time, tar, inetutils, gzip, findutils, coreutils etc. Compiled with CLANG (7.0/6.0/5.0/4.0) and GCC (8.2/7.3/6.4/5.5/4.9.4) compilers, carrying industry widely used -O[2,3] optimization level, to generate 2916 binary files on 64-bit ARM, MIPS, X86 platforms, then extracting 1,002,913 binary functions with the help of Radare2.

Vulnerability database

A source code with vulnerability compiled to generate 12 binary functions, which is used to evaluate the mAP.

Model accuracy

This experiment uses DataSet_A and DataSet_B to evaluate the accuracy of N_Match compared to the baseline model. We set epochs = 10, and shuffle the training dataset in each epoch. At the end of each epoch, the validation accuracy \(A_{val}\) is calculated on the validation dataset. if the current \(A_{val}\) is the highest, the test dataset calculation is performed to obtain the test accuracy \(A_{test}\). In this way, the best accuracy \(A_{val}\) and \(A_{test}\) are obtained for each model. The results of model training are shown in Table 2.

As found in Table 2, N_Match outperformed the SAFE and UFEBS by achieving \(A_{val}\) of 92.26% and \(A_{test}\) of 92.28% on the DataSet_A. On the DataSet_B, N_Match also performed well with a validation accuracy of 97.91% and a test accuracy of 97.93%. N_Match model is 19% more accurate than UFEBS and 30% more accurate than the Gemini. It is shown that the fusion of naming function embedding and graph embedding can improve model accuracy, indicating that stable and platform-independent features can greatly mitigate cross-platform variation.

It is found that Gemini performs the worst, with an accuracy rate of 69%. The reason is that the Gemini scheme4 is more adapted to function pairs with more than 20 basic blocks. On the DataSet_A, the SAFE accuracy is 9% higher than the UFEBS scheme. The reason may be that RNNs are good at learning representations of time-series instructions, while graph neural networks need to be improved in handling CFG composed of all possible paths.

In the following binary code analysis task, we will conduct experimental analysis around the model trained on the DataSet_A.

Cross-platform comparison between the same optimization levels

The task is to evaluate the accuracy of the same source code for binary functions on different platforms with the same compiler and same optimization level. Specifically, we extract A0, M0, and X0 function pairs to evaluate the model accuracy of N_Match, SAFE, and UFEBS in a real environment, and 3 groups of comparison results are obtained from evaluation datasets. The results of the comparisons are shown in Table 3.

The comparison in Table 3 shows that N_Match outperformed SAFE and UFEB, with an average accuracy of 90.85% for N_Match, 67.87% for SAFE and 87.25% for UFEBS. Although SAFE accuracy is 9% higher than UFEBS accuracy on the DataSet_A (Table 2), the average UFEBS accuracy is 20% higher than SAFE accuracy on the real evaluation dataset (Fig. 5a), show that stable nature of the graph structure improves the similarity results. We calculate the average of the accuracy results of the N_Match in four data sets. The results show that X86 and ARM are the most similar, and the accuracy of X0–A0 is 4% higher than that of X0–M0 (Fig. 5b).

(a) Cross-platform compare accuracy between N_Match, SAFE and UFEBS using O0 as an example; (b) cross-platform compare accuracy of N_Match using O0; (c) cross-optimization compare accuracy of N_Match; (d) cross-optimization compare accuracy between N_Match, SAFE and UFEBS; (e) comparison between optimized and unoptimized of N_Match; (f) optimized and unoptimized compare accuracy between N_Match, SAFE and UFEBS; (g) O2 vs O3 comparison accuracy between N_Match, SAFE and UFEBS in industrial scenarios; (h) O2 vs O3 comparison of N_Match.

Comparison of the same platform across optimization levels

This task is to evaluate the accuracy of functions with different optimization levels for the same compiler and the same platform using DataSet_III and DataSet_IV. Since each platform can generate 4 functions at different levels of optimization, each platform is 6 groups of comparison results for a total of 18 groups, and the results of the comparison are shown in Table 4.

The comparison in Fig. 5d shows that N_Match performed well on all 18 groups of comparison results with an average accuracy of N-Match 92.05%, that of SAFE is 87.81%, and that of UFEBS is 83.34%. The closely related optimization levels are more similar to each other, with O1 and O0 having the highest accuracy, followed by O1 and O2, and O0 and O3 being the least similar, as shown in Fig. 5c. This is because O1 is a basic optimization based on O0 and O2 is an upgraded version based on O1, thus presenting the above results.

In Table 4, it is found that the accuracy of SAFE is higher than that of N_Match in a few optimization levels, and also found that the accuracy of SAFE outperforms UFEBS in most optimization levels as shown in Fig. 5d, indicating that the sequence model outperforms the graph model for some optimization levels such as P2–P3. Overall, N_Match has the best model accuracy in comparisons of cross-optimization levels with the same platform.

Optimized and unoptimized

This task evaluates the accuracy of the model between unoptimized -O0 functions and optimized functions using DataSet_III and DataSet_IV. Each platform is 6 groups of results for a total of 18 groups, and the comparison results are shown in Table 5.

The comparison results show that the average accuracy of N_Match is 86.5%, SAFE is 76.4% and UFEBS is 79.77%. Except for the group of M0–X3, N_Match performs well on the remaining 17 groups of comparison results, as shown in Fig. 5f. Comparing the N_Match model for the same platform (Fig. 5c) and different platforms (Fig. 5e) shows that the similarity results of the optimization levels vary significantly cross-platforms. The top 5 ranking in Fig. 5e confirms that ARM and X86 are the most similar, and O1 and O0 have the highest similarity.

As seen in Fig. 5f, SAFE accuracy is lower than UFEBS accuracy at multiple optimization levels, indicating that graph structure stability helps to improve similar accuracy of cross-platforms and cross-optimization. The more stable the knowledge, the more similar the binary functions corresponding to the same source code on cross-optimization and cross-platform.

It is also found in Table 5 that N_Match is inferior to SAFE and UFEBS in comparing X0 with MIPS platforms, A0 with MIPS platforms, M0 with ARM platforms, and M0 with X86 platforms. Analyzing the reasons for this phenomenon, it is possible that the N_Match system does not fully recognize the MIPS call instructions and thus does not capture the semantics of the system calls contained in the functions.

Industrial scenarios

This task evaluates the accuracy of O2 and O3 comparisons in industrial scenarios using DataSet_III and DataSet_IV as datasets, for a total of 12 groups of comparison results, as shown in Table 6.

The comparisons show that the average accuracy of N_Match is 87.05%, SAFE is 78.49% and UFEBS is 81.98%. No solutions are able to outperform all 66 compared solutions across three platforms. As shown in Fig. 5g, on the X2–M2, X2–M3, X3–M2, and X3–M3 comparison results, N_Match accuracy is not as good as SAFE, which shows that the learning scheme using instruction sequence representation has an advantage in the similarity comparison of industrial scenarios. From Fig. 5h, it is clear that the N_Match model has the highest comparison accuracy in comparing the same optimization level for ARM and X86 platforms, such as X3–A3 and X2–A2. Indicating that optimization options carried by query have a greater impact on computational results. Therefore, identifying the optimization of a binary function can be used as a filtering condition for the binary code similarity task.

Vulnerability search

This task evaluates the hit@N of a query search using DataSet_III as the search database. Specifically, using a Pn as the query to return topN predictions from DataSet_III, which contains 33,286 binary functions.

We used CVE-2021-42374 as a query, which is located in the unpack_lzma_stream() of busybox software and contains 73 basic blocks (in the A2 binary function). As can be seen from the above that the same source code function generates 12 binary functions and therefore 12 known vulnerabilities in the Target database. For a query, RightTotal = 12 and RightCount is the true correct verified result manually in top N. Finally, hit@N and mAP are calculated based on (18) and (19).

Since SAFE and UFEBS have lower hit@N at N = 50, we only set N = 200, N = 100, and N = 50 for the experimental task and compared them, the results as shown in Table 8. Based on hit@N results, we compute the mAP metrics of the three models. The results show that N_Match far outperformed SAFE and UFEBS in terms of average mAP, with N_Match is 84.72%, SAFE is 12.73% and UFEBS is 18.29%. Even though UFEBS introduces a stable graph structure for similarity evaluation, the mAP of N_Match exceeds UFEBS by up to 66%. The vulnerability experiments illustrate that in the challenging task of binary code similarity comparison of cross-platform and cross-optimization levels, the current evaluation is only based on the model training accuracy, which still needs to be optimized and improved in the real industrial scene, additional evaluation metrics such as mAP should be introduced to assess the models comparison.

We continue to evaluate the N_Match model by setting N = 200, 100, 50, 20, 15, and 12 respectively, calculating the hit@N of the 12 queries, and the results are shown in Table 7. Even in top12, N_Match has a mAP value of 55.56%, and is able to find more than half of the binary functions that are compiled from the same source code but different platforms and different optimization. Also found in top12, optimization level O1 was able to find more than 80% of vulnerabilities on ARM and X86.

We also compared the mAP of 12 function searches (Fig. 6a). The statistical analysis found that A1 and X1 work best, and M0 and M1 work worst, with a 64% difference in mAP. On the same optimization level comparison, M2, X2, and A2 are smoother with a little gap of 80.56%, 81.94%, and 84.72% mAP respectively, however M3, X3, and A3 have a larger gap of 81.94%, 56.94% and 54.17% mAP respectively. This indicates that the O3 optimization level, which focuses on execution efficiency, may introduce more unpredictable program behavior such as errors and inline function, making the mAP unstable. Therefore O3 as query should be avoided when comparing similarities. We argue that reverse engineers should determine the optimization level and platform of query when searching for vulnerabilities. We also compare the search results of N_Match and Gemini (Fig. 6b). The N_Match outperforms the Gemini. Although Gemini and UFEBS also draw on graph structures, we argue that the graph structure may simply be stable semantics.

We performed a further comparison with the ComFU model proposed by us in24. ComFU does not utilize graph structure and employs data dependency analysis techniques to extract sequences of parameter slices around the APIs that trigger vulnerabilities within functions. The comparison reveals that ComFU filters out a large number of dissimilar functions, and the distance gap between real vulnerability functions and dissimilar functions is obvious. This suggests that vulnerability-oriented similarity detection, with functions as the comparison object of coarse granularity, is not as good as using the vulnerability key code fragments as the object.

Function search performance

This task evaluates the model search performance on DataSet_V by comparing model training time \(T_m\), function vector generation time \(T_g\), and function search time \(T_s\), which are used to guide the best industry practice of binary function search.

As shown in Table 9, in terms of model training time, the graph model costs far more than the sequence model. The sequence model takes the shortest training time, which is 15\(\times \) faster than the graph model. In terms of vector generation time, the sequence model is 20\(\times \) faster than the graph model. However, the vector search time of graph model and sequence model is the same, the difference comes from the time of dimension of vector computation. Although the N_Match vector size is 128 which is twice that of UFEBS and SAFE, a function search time from 1 million vector library is negligible.

Ablation study

In order to evaluate the contribution of the core components to our function representation, we compare the attention mechanism and graph neural network configurations.

In the evaluation of the attention mechanism, we compare GRU, RNN, LSTM, and Atttion+LSTM on sequences of instructions while the model was being trained, and the results are shown in Table 10. The comparison reveals that the accuracy of the model carrying the attention mechanism is 39% higher than that of the RNN model. This indicates that the attention mechanism can focus on the more critical information of the binary code similarity comparison task and improve the task accuracy. It is also found that Transformer is not as accurate as the LSTM that carries the attention mechanism. The reason for this may be analyzed because the Transformer lies in considering only attention, whereas instructions naturally have sequential meanings, or perhaps Transformer performs best when both encoding and decoding are employed simultaneously.

From the model comparison in Table 2, it is found that UFEBS with graph neural network is almost 9% lower than SAFE without graph neural network in terms of accuracy (see Table 2), but UFEBS is almost 6.5% higher than SAFE in terms of map metrics in terms of vulnerability matching (Table 8). This proves that graph neural network helps to reduce FPR.

Related work

This section presents work related to binary code similarity from the perspective of instruction sequence and CFG of binary function.

Similarity based on instruction sequences model

The natural linear address layout of binary function provides advantages for sequence-based analysis schemes. The simplest way to do this is to hash a fixed-length sequence of instructions, and if the hashes are the same the sequences are considered similar. Qiao et al.25 infers function similarity by counting similar basic blocks. Jin et al.26 computes the input–output behavior within the basic block. Hashing techniques retrieve matches efficiently and are able to sense changes in code. Differences in instruction syntax or graph structure will cause huge changes.

Several works extend the basic blocks. David and Yahav2 extracts continuous basic blocks from the CFG to form a trace, and uses the instruction alignment method and the longest common string matching to calculate the similarity between traces. Huang et al.3 extracts the longest execution path on the CFG, establishes the basic block mapping relationship between two binary functions, and computes the similarity value. The above solutions are less accurate on small code fragments, and do not solve the cross-platform similarity calculation.

Based on Word2Vec12 or Doc2Vec27, recent works utilize recurrent neural networks to obtain a function sequence learning representation. Redmond et al.17 treats instruction as a word and uses RNN to achieve similarity comparison between basic blocks. Ding et al.28 treats operand and operand as word, only the X86 platform similarity calculation is achieved. Zuo et al.18 implements similarity comparison between basic blocks through the machine translation NMT (Neural Machine Translation) technique, which only implements similarity computation between X86 and ARM platforms.

Similarity based on graph model

Current schemes based on CFG transform a CFG into a graph embedded with a graph neural network. Several works study code similarity based on CFG. Gao et al.29 constructs Labeled Semantic Flow Graphs and then extracts the feature vectors of each basic block to generate graph embedding. Yu et al.30 argues that the order of control flow graph nodes is important for graph similarity detection and using a BERT31 (Bidirectional Encoder Representations from Transformers) as a language per training model.

A number of schemes extend CFG. Alrabaee et al.6 combines CFG, function call graph, and register flow graph together into a semantic integrated graph. Zhang et al.8 argues that although the order of instructions has changed, the dependency graph between instructions remains the same and proposes a RIDG (Reductive Instruction Dependent Graph). Qiu et al.7 fuses inline functions and library functions called by CFG and proposes the EDG (Execution Dependence Graph).

Conclusions and future works

In this paper, we propose a binary code similarity model N_Match that fuses naming function and graph neural network, which goal is to find as many binary functions as possible from the same source code at different optimization levels on different platforms. The core idea of the model is to leverage platform-independent naming function, common vector space, and stable graph structure semantics. We evaluate and compare the model on multiple datasets and show that N_Match outperforms current models in terms of accuracy, cross-platform, cross-optimization level, and hit@N. Real-world vulnerability search experiments verify that N_Match is able to match more binary functions with a higher mAP. We give some comparative conclusions between cross-platform and cross-optimization based on deep learning. To this end, we make our dataset, code, and trained models publicly available at https://www.github.com/CSecurityZhongYuan/Binary-Name_Match.

Future works

In our future work, we can use the temporal and textual data of the nodes to compute the edge weights and then generate subgraphs with highly relevant nodes, and similarity comparisons are made through subgraphs32. Secondly, utilizing the abstract syntax tree of the pseudo-code which holds richer semantic information in each node, such as data types and statement execution order context information. Thirdly, variable names and their variable types can also express the semantics of the code. Based on big code10 and linguistic statistical models, with the help of neural network translation techniques, the recovered variable names and variable types33 will be used in code similarity. Finally, no matter how functionally identical code is changed, the core descriptions that determine the function of the code remain the same, and with the help of source code summarization34 generation techniques, a new means11 of comparing binary code naming descriptions is not lost.

Data availability

The data used to support the findings of the study are available at https://www.github.com/CSecurityZhongYuan/Binary-Name_Match.

References

Xia, B., Pang, J., Zhou, X. & Shan, Z. Survey on binary code similarity search. J. Comput. Appl. 42(4), 985–998, 4 (2022).

David , Y., & Yahav, E. Tracelet-based code search in executables. In ACM SIGPLAN Conference on Programming Language Design and Implementation, PLDI ’14, Edinburgh, United Kingdom-June 09–11 (O’Boyle, M. F. P. & Pingali, K. Eds.), 349–360 (2014). https://doi.org/10.1145/2594291.2594343.

Huang, H., Youssef, A. M., & Debbabi, M. Binsequence: Fast, accurate and scalable binary code reuse detection. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, AsiaCCS 2017, Abu Dhabi, United Arab Emirates, April 2–6 (Karri, R., Sinanoglu, O., Sadeghi, A. & Yi, X., Eds.), 155–166 (2017). https://doi.org/10.1145/3052973.3052974.

Xu, X., Liu, C., Feng, Q., Yin, H., Song, L., & Song, D. Neural network-based graph embedding for cross-platform binary code similarity detection. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS, Dallas, TX, USA, October 30–November 03 (Thuraisingham, B., Evans, D., Malkin, T. & Xu, D. Eds.), 363–376 (2017). https://doi.org/10.1145/3133956.3134018.

Baldoni, R., Luna, G. A. D., Massarelli, L., Petroni, F., & Querzoni, L.: Unsupervised features extraction for binary similarity using graph embedding neural networks (2018). arxiv:1810.09683 [CoRR].

Alrabaee, S., Shirani, P., Wang, L. & Debbabi, M. SIGMA: A semantic integrated graph matching approach for identifying reused functions in binary code. Digit. Investig. 12(Supplement 1), S61–S71 (2015).

Qiu, J., Su, X., & Ma, P. Library functions identification in binary code by using graph isomorphism testings. In 22nd IEEE International Conference on Software Analysis, Evolution, and Reengineering, SANER, Montreal, QC, Canada, March 2–6 (Guéhéneuc, Y., Adams, B. & Serebrenik, A. Eds.), 261–270 (2015). https://doi.org/10.1109/SANER.2015.7081836.

Zhang, X., Pang, J. & Liu, X. Common program similarity metric method for anti-obfuscation. IEEE Access 6(47), 557–565. https://doi.org/10.1109/ACCESS.2018.2867531 (2018).

Hindle, A., Barr, E. T., Gabel, M., Su, Z. & Devanbu, P. T. On the naturalness of software. Commun. ACM 59(5), 122–131. https://doi.org/10.1145/2902362 (2016).

Allamanis, M., Barr, E. T., Devanbu, P. & Sutton, C. A survey of machine learning for big code and naturalness. ACM Comput. Surv. 51(4), 1–37 (2018).

David, Y., Alon, U. & Yahav, E. Neural reverse engineering of stripped binaries using augmented control flow graphs. Proc. ACM Program. Lang. 4, 225:1-225:28. https://doi.org/10.1145/3428293 (2020).

Mikolov, T., Chen, K., Corrado, G., & Dean, J. Efficient estimation of word representations in vector space. In 1st International Conference on Learning Representations, ICLR , Scottsdale, Arizona, USA, May 2–4, Workshop Track Proceedings (Bengio, Y. & LeCun, Y. Eds.) (2013). arxiv:1301.3781.

Dai, H., Dai, B., & Song, L. Discriminative embeddings of latent variable models for structured data. In Proceedings of the 33nd International Conference on Machine Learning, ICML, New York City, NY, USA, June 19–24, ser. JMLR Workshop and Conference Proceedings (Balcan, M. & Weinberger, K. Q. Eds.), Vol. 48, 2702–2711 (2016).

Mikolov, T., Karafiát, M., Burget, L., Cernocký, J., & Khudanpur, S. Recurrent neural network based language model. In INTERSPEECH, 11th Annual Conference of the International Speech Communication Association, Makuhari, Chiba, Japan, September 26–30 (Kobayashi, T., Hirose, K. & Nakamura, S. Eds.), 1045–1048 (2010).

Wang, Z., & Yang, B. Attention-based bidirectional long short-term memory networks for relation classification using knowledge distillation from BERT. In IEEE International Conference on Dependable, Autonomic and Secure Computing, Calgary, AB, Canada, August 17–22, 562–568 (2020).

Massarelli, L., Luna, G. A. D., Petroni, F., Baldoni, R., & Querzoni, L. SAFE: Self-attentive function embeddings for binary similarity. In Detection of Intrusions and Malware, and Vulnerability Assessment-16th International Conference, DIMVA, Gothenburg, Sweden, June 19–20, Lecture Notes in Computer Science (Perdisci, R., Maurice, C., Giacinto, G. & Almgren, M. Eds.), Vol. 11543, 309–329 (2019). https://doi.org/10.1007/978-3-030-22038-9_15.

Redmond, K., Luo, L., & Zeng, Q. A cross-architecture instruction embedding model for natural language processing-inspired binary code analysis. arXiv:abs/1812.09652 [CoRR] (2018).

Zuo, F., Li, X., Young, P., Luo, L., Zeng, Q., & Zhang, Z. Neural machine translation inspired binary code similarity comparison beyond function pairs. In 26th Annual Network and Distributed System Security Symposium, NDSS, San Diego, California, USA, February 24–27 (2019).

Sennrich, R., Haddow, B., & Birch, A. Neural machine translation of rare words with subword units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, ACL , Berlin, Germany, August 7–12, Vol. 1 (2016). https://doi.org/10.18653/v1/p16-1162.

Chen, Q., Lacomis, J., Schwartz, E. J., Goues, C. L., Neubig, G., & Vasilescu, B. Augmenting decompiler output with learned variable names and types. In 31st USENIX Security Symposium, USENIX Security 2022, Boston, MA, USA, August 10–12 (Butler, K. R. B. & Thomas, K. Eds.), 4327–4343 (2022).

Chopra, S., Hadsell, R., & LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), 20–26 June 2005, San Diego, CA, USA, 539–546 (2005). https://doi.org/10.1109/CVPR.2005.202.

Kim, M., Kim, D., Kim, E., Kim, S., Jang, Y., & Kim, Y. Firmae: Towards large-scale emulation of iot firmware for dynamic analysis. In ACSAC ’20: Annual Computer Security Applications Conference, Virtual Event/Austin, TX, USA, 7–11 December, 733–745 (2020). https://doi.org/10.1145/3427228.3427294.

Shalev, N., Partush, N. Binary similarity detection using machine learning. PLAS ’18, 42–47 (Association for Computing Machinery, 2018). https://doi.org/10.1145/3264820.3264821.

Xia, B., Liu, W., He, Q., & Pang, J. Binary vulnerability similarity detection based on function parameter dependency, 1–19, 04 (2023). https://doi.org/10.4018/IJSWIS.322392.

Qiao, Y., Yun, X., & Zhang, Y. Fast reused function retrieval method based on simhash and inverted index. In 2016 IEEE Trustcom/BigDataSE/ISPA, Tianjin, China, August 23–26, 937–944 (2016). https://doi.org/10.1109/TrustCom.2016.0159.

Jin, W., Chaki, S., Cohen, C. F., Gurfinkel, A., Havrilla, J., Hines, C., & Narasimhan, P. Binary function clustering using semantic hashes. In 11th International Conference on Machine Learning and Applications, ICMLA, Boca Raton, FL, USA, December 12–15, Vol. 1, 386–391 (2012). https://doi.org/10.1109/ICMLA.2012.70.

Le, Q.V., & Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the 31th International Conference on Machine Learning, ICML, Beijing, China, 21–26 June, JMLR Workshop and Conference Proceedings, Vol. 32, 1188–1196 (2014).

Ding, S. H. H., Fung, B. C. M., & Charland, P. Asm2vec: Boosting static representation robustness for binary clone search against code obfuscation and compiler optimization. In 2019 IEEE Symposium on Security and Privacy, SP, San Francisco, CA, USA, May 19–23, 472–489 (2019). https://doi.org/10.1109/SP.2019.00003.

Gao, J., Yang, X., Fu, Y., Jiang, Y., & Sun, J. Vulseeker: A semantic learning based vulnerability seeker for cross-platform binary. In Proceedings of the 33rd ACM/IEEE International Conference on Automated Software Engineering, ASE, Montpellier, France, September 3–7 (Huchard, M., Kästner, C. & Fraser, G. Eds.), 896–899 (2018). https://doi.org/10.1145/3238147.3240480.

Yu, Z., Cao, R., Tang, Q., Nie, S., Huang, J., & Wu, S. Order matters: Semantic-aware neural networks for binary code similarity detection. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI , New York, NY, USA, February 7–12, 1145–1152 (2020).

Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv:1810.04805 (arXiv e-prints) (2018).

Hosseini, S., Najafipour, S., Cheung, N.-M., Kangavari, M., Zhou, X., & Elovici, Y. Teals: Time-aware text embedding approach to leverage subgraphs, 1136–1174 (2020). https://doi.org/10.1007/s10618-020-00688-7.

Chen, Q., Lacomis, J., Schwartz, E. J., Goues, C. L., Neubig, G., & Vasilescu, B. Augmenting decompiler output with learned variable names and types. In 31st USENIX Security Symposium, USENIX Security 2022, Boston, MA, USA, August 10–12, 4327–4343 (2022). https://www.usenix.org/conference/usenixsecurity22/presentation/chen-qibin.

Alon, U., Brody, S., Levy, O., & Yahav, E. code2seq: Generating sequences from structured representations of code. In 7th International Conference on Learning Representations, ICLR, New Orleans, LA, USA, May 6–9 (2019). https://openreview.net/forum?id=H1gKYo09tX.

Acknowledgements

This work was supported by the “National Natural Science Foundation of China” under Grants (61802433, 61472447) and “key scientific and technological projects of Henan Province” under Grant (232102211088). We thank the anonymous reviewers for their comments that helped us improve the paper.

Author information

Authors and Affiliations

Contributions

B.X., J.P. and X.Z. wrote the main manuscript, and Z.S., F.Y. and J.W. prepared experimental part. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xia, B., Pang, J., Zhou, X. et al. Binary code similarity analysis based on naming function and common vector space. Sci Rep 13, 15676 (2023). https://doi.org/10.1038/s41598-023-42769-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-42769-9

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.