Abstract

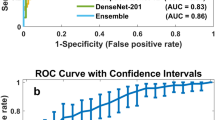

Mortality from breast cancer (BC) is among the top causes of cancer death in women. BC can be effectively treated when diagnosed early, improving the likelihood that a patient will survive. BC masses and calcification clusters must be identified by mammography in order to prevent disease effects and commence therapy at an early stage. A mammography misinterpretation may result in an unnecessary biopsy of the false-positive results, lowering the patient’s odds of survival. This study intends to improve breast mass detection and identification in order to provide better therapy and reduce mortality risk. A new deep-learning (DL) model based on a combination of transfer-learning (TL) and long short-term memory (LSTM) is proposed in this study to adequately facilitate the automatic detection and diagnosis of the BC suspicious region using the 80–20 method. Since DL designs are modelled to be problem-specific, TL applies the knowledge gained during the solution of one problem to another relevant problem. In the presented model, the learning features from the pre-trained networks such as the squeezeNet and DenseNet are extracted and transferred with the features that have been extracted from the INbreast dataset. To measure the proposed model performance, we selected accuracy, sensitivity, specificity, precision, and area under the ROC curve (AUC) as our metrics of choice. The classification of mammographic data using the suggested model yielded overall accuracy, sensitivity, specificity, precision, and AUC values of 99.236%, 98.8%, 99.1%, 96%, and 0.998, respectively, demonstrating the model’s efficacy in detecting breast tumors.

Similar content being viewed by others

Introduction

Cancer is a group of diseases that bring together cells in the body to form lumps called malignant tumors. In an uncontrolled way, these cells expand, and spread throughout neighbouring tissues, pushing out the normal cells. Cancer is considered to be one of the most significant diseases damaging human health, from the past to the present.

BC, lung cancer, and colorectal cancer will account for 52% of all new diagnoses in women in 2023, with BC alone accounting for 31% of female cancers. Since the mid-2000s, female BC incidence rates have been slowly increasing by roughly 0.5% per year, owing primarily to diagnoses of localized stage and hormone receptor-positive illness. This trend has been attributed, at least in part, to continued declines in fertility rates and increases in excess body weight, which may also contribute to an increase in uterine corpus cancer incidence of about 1% per year among women aged 50 and older since the mid-2000s and nearly 2% per year in younger women since at least the mid-1990s1,2,3. Figure 1 illustrates the estimated new cancer cases in 2023 for females in the United States.

One of the most important screening techniques for detecting BC is mammography, a special X-ray of a woman’s breast. Compared to mammography, a 3D mammogram is a powerful model. To create a 3D image of the breast, a 3D mammogram uses several breast X-rays. For women who have no signs or symptoms, a 3D mammogram is used to diagnose BC. It may also be used to look at other breast problems, such as breast mass, irritation, and nipple discharge4. The daily increase in the number of mammograms increases the radiologist’s workload, resulting in an increase in the misdiagnosis rate. However, regardless of the expertise of the clinicians studying mammography, extrinsic factors such as picture noise, fatigue, abstractions, and human delusion must be addressed, as the rate of breast mass misdiagnosis during early mammography screenings is more than 30%5.

Medical image processing algorithms for histopathology pictures are developing quickly, but it is still highly required to provide an automated methodology to achieve effective and highly accurate results. One of the Machine Learning (ML) applications is enhancement in health systems. In classical ML techniques, the dynamic nature of tasks such as pre-processing, feature extraction, etc. decreases the system’s accuracy and efficiency. The idea of DL has been implemented for extracting relevant knowledge from the raw images and using it effectively for classification processes in order to solve the problems of traditional ML techniques6.

Without the requirement for manual feature extraction, a convolutional neural network (CNN or ConvNet) model learns directly from the input. It’s powerful technique to detect objects, persons, and scenes by recognizing patterns in photographs. CNNs’ most impressive quality is their remarkable generalizability to different recognition tasks. In particular, CNNs learn to discriminate between different aspects of an input picture, and their architecture is designed to make use of the 2D nature of the image. Large annotated datasets, which are limited in the medical field, particularly for BC, are required to train deep CNNs7,8,9.

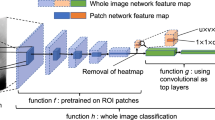

In DL applications, TL is widely used. In the medical sector, it has been very useful, where the amount of data is usually reduced. The aim of TL is to enhance learning by enhancing the target task by transferring information from the source task. Fig. 2 illustrates the TL process.

In this research, a novel DL-based model is created and used for breast tumor detection and classification. There are essentially two parts to the approach provided here. Firstly, five main steps after removing mammographic noise from the dataset (Normalization, segmentation, resizing, sampling, and data augmentation) are utilized to improve the image’s contrast. Next, the pre-trained SueezeNet and DenseNet CNNs are used to transfer the learned parameters to the breast tumor classification task. The full description of INbreast dataset can be founded at kaggle (https://www.kaggle.com/datasets/ramanathansp20/inbreast-dataset?resource=download). The main motivations for this paper have been outlined below:

-

1.

Improving the intensity of the images by applying six phases of data pre-processing.

-

2.

Reducing the training computation time by extracting only the affected regions using segmentation.

-

3.

Overcoming the overfitting problem which occurred due to the lack of medical images.

-

4.

Adapting the SqueezeNet and DenseNet architectures using LSTM and ADAM optimizer to improve the classification performance.

-

5.

Five various measures are applied to test the developed model namely: accuracy, sensitivity, specificity, precision, AUC, and F-score.

-

6.

Detecting and classifying the anomalies in breast mammography more precisely.

The structure of this paper is as follows: the associated work is detailed in “Related works”, whereas “Proposed model” presents a description of the suggested model for BC detection and classification utilizing TL approaches. “Results” presents the experimental outcomes compared to the actual data. Finally, the context is concluded in “Conclusion”.

Related works

Medical specialists sometimes make mistakes when recognizing a condition based on their experience. For the classification of BC, several approaches have been devised. To detect and diagnose the disease, ML and DL algorithms are being applied, with promising results in most situations. They analyze historical data acquired from previous patients to identify relationships between diseases, symptoms, and therapies for the patients.

The diagnosis can be 91.1% more accurate with the use of ML and DL technologies, compared to only 79.9% when performed by a qualified clinician6. However, there is still room to create and deploy a more effective BC diagnosis system using an acceptable method.

The innovative CNN for breast tumor classification was developed by Ting et al.10. This suggested CNN has only one input layer, twenty-eight hidden layers, and one output layer. The overfitting issue is fixed, and the data set is expanded through the rotation method. By using the BreastNet CNN, Toaçar et al.11 were able to extract the most useful information from their breast database. When compared to VGG16, VGG19, and AlexNet, the accuracy achieved by BreastNet (98.80 percent) is superior.

Breast mammography pictures may be classified as benign or malignant using a multi-layer DL model, which was first presented by Abbas12. Application to the MIAS dataset yielded values of 92% for sensitivity, 84.20% for specificity, 91.50% for accuracy, and 0.91 for AUC, while Mahmood et al.13. developed a novel DL model called ConvNet for diagnosing breast malignancy tissues and achieved an accuracy of 97%, sensitivity of 99%, and an AUC of 0.99.

Breast tumor categorization deep architecture was evaluated by Sha et al.14 using the MIAS. To improve the suggested CNN layers, the grasshopper optimization technique was used. In terms of sensitivity, specificity, and accuracy, the improved networks perform at 96%, 93%, and 92%, respectively.

The CNN suggested by Charan et al.15 has 13 layers (6 convolutions, 4 average-pooling, and 3 fully connected). Classification is performed using the softmax (SM) function on an input picture with dimensions of \(224 \times 224 \times 3. 65\%\) accuracy was achieved when compared to the MIAS database.

In16, Wahab et al. used the parameters learned from a pre-trained CNN to classify mitoses. Their approach yielded an accuracy of 0.50 and a recall of 0.80.

Lotter et al.17 fine-tuned the ResNet50 model to classify breast tumors. Their model could categorize breast images into five groups. For sensitivity, specificity, and AUC, their model achieved 96.2%, 90.9%, and 0.94, respectively.

The film mammography number 3 (BCDR-F03) dataset was utilized to fine-tune the GoogleNet and AlexNet models by Jiang et al.18. GoogleNet achieved 0.88 accuracy, while AlexNet achieved 0.83 accuracy.

In order to fine-tune GoogleNet, VGGNet, and ResNet models, Khan et al.19 used a common benchmark dataset. The accuracy of the model was 97.525 percent.

Cao et al.20 used random forest dissimilarity to improve TL performance on ResNet-125. After being put through its paces on the “ICIAR 2018” dataset, the model under test showed a classification accuracy of 82.90%.

Deniz et al.21 used the AlexNet and VGG16 CNN parameters learned on the BreaKHis mammographic dataset to train a target breast model. Overall, their model was accurate to the tune of 91.37 percent. Celik et al.22 used the DenseNet-161 CNN on the same dataset and got 91.57% accuracy.

The breast tumor categorization was enhanced by the application of the learned parameters from VGG-16, VGG-19, and Inception V3 by Abeer et al.23. The suggested model is tested on the MIAS dataset. In this study, we demonstrate that the VGG-16 can identify and categorize BC with an overall accuracy of 96.8%.

Abeer et al.24 proposed a DL model based on the TL technique. The proposed model contains two major parts. Their model is based on fine-tuned five of the most popular pre-trained CNNs (VGG16, VGG19, Inception-V3, Inception-ResNet, and ResNet-50). The extracted features, except the last three layers, are transferred to train the breast model. The MIAS data are used to train the last layers and to evaluate the presented model. The overall results are 98.96%, 97.83%, 99.13%, 97.35%, 97.66%, and 0.995 for accuracy, sensitivity, specificity, precision, F-score, and AUC.

Abeer et al.25 presented a DL methodology depending on the TL technique for breast tumor detection and classification. They used the INbreast dataset to fine-tune the VGG16 and VGG19 networks. The proposed model obtained 97.1%, 96.3%, 97.9%, and 0.988% for accuracy, sensitivity, specificity, and AUC, respectively.

Akselrod-Ballin et al.26 evaluated a DL model on the INbreast dataset for breast tumor classification based on a region-based CNN. The presented model achieved an accuracy rate of 78%.

Using GoogleNet, VGGNet, and ResNet, three pre-trained CNN architectures, Khan et al.19 constructed a TL model for breast tumor classification. On a common benchmark dataset, this model scored 97.525 percent accurately.

To categorize BC, Al-Antari et al.27 created a DL model using feedforward CNN, ResNet 50, and Inception ResNet-V2 networks. The accuracy of the shown model increased from 86.74% to 92.55% to 95.32% throughout the INbreast dataset while using the first, second, and third networks.

Lou et al.28 proposed a DL model based on fine-tuning the ResNet-50 pre-trained network for breast tumor classification. The presented model achieved 84.5%, 77.2%, 88.2%, and 0.931 for accuracy, sensitivity, specificity, and AUC, respectively, on the INbreast database.

El Houby et al.29 built a novel CNN to classify breast tumors as benign or malignant. The INbreast, MIAS, and DDSM datasets are used for network evaluation. The CLAHE algorithm is used to improve the image contract. On the INbreast database, the overall results are 96.5%, 96.6%, 96.5%, and 98.0 for accuracy, sensitivity, specificity, and AUC, respectively. Mahmood et al.5 implemented a hybrid DL model for improving mass recognition accuracy in mamographic images. First, the features are extracted using a deep CNN network and then used to train an SVM classifier for the best classification accuracy. The proposed model was evaluated using a combination of three breast datasets (MIAS, INbreast, and Private) and achieved an overall accuracy of 97.8%.

Singh et al.30 developed and implemented a ML framework for BC classification. The illustrated framework was evaluated using the INbreast dataset and achieved 90.4%, 92.0%, 88.0% for accuracy, sensitivity, and specificity, respectively.

Chakravarthy et al.31 presented a DL model based on the TL technique. The presented model transferred the learned parameters except the last three layers from AlexNet, GoogleNet, ResNet-50, and Dense-Net121 and fine-tuned these layers using the INbreast dataset. The support vector machine (SVM) classifier is used to classify the breast tumors classes. The presented model achieved an accuracy of 96.6%.

Proposed model

Data pre-processing

Median, Gaussian, and Bilateral filters are applied to remove any mammographic noises from the INbreast dataset. The proposed model in this paper contains two major components, as shown in Figs. 3 and 4. The first is for image preprocessing (IP) while the second is the updated architecture for the deep squeeze CNN for breast feature extraction and classification. The IP contains six phases to improve the image contrast, reduce the computation time, and improve the classification performance, as shown in Fig. 3.

-

1.

Normalization:

The process of normalizing modifies the range of pixel intensity levels. It is usually called contrast stretching. A group of data should have a consistent dynamic range to prevent mental fatigue or distraction.

-

2.

Morphological analysis:

The tumor is extracted to reduce the computation time using morphological analysis (MA) and segmentation techniques. The MA process is a very necessary step in which the non-breast regions have been removed using the structuring element (SE).

The mathematical morphological operation illustrated in Fig. 5. can be estimated as follows:

-

(a)

Image opening \((IM_O)\)

IM_O is important to remove the small object from the image.

$$\begin{aligned} IM_O=Input \ominus SE \oplus SE \end{aligned}$$(1) -

(b)

Image closing \((IM_C)\)

IM_C is useful for filling tiny holes in images while maintaining the shape and size of objects.

$$\begin{aligned} IM\_C=Input \oplus SE \ominus SE \end{aligned}$$(2) -

(c)

White Top-hat (WTH):

$$\begin{aligned} WTH=Input-IM_O \end{aligned}$$(3) -

(d)

Black Top-hat (BTH):

$$\begin{aligned} BTH=IM_C-Input \end{aligned}$$(4) -

(e)

Mathematical morphological \((M_M)\):

$$\begin{aligned} M\_M=Input+WTH-BTH \end{aligned}$$(5)

Where \(\ominus\) and \(\oplus\) refer to dilation and erosion operations, respectively.

-

(a)

-

3.

Segmentation:

The tumor tissues have been determined using a region-based segmentation method. Region-based interventions target pixels that share similar characteristics. These methods are simple to use and noise-resistant. Similarity criteria are used in an effective seed-pixel-based region-growing segmentation to assess and add neighboring pixels to a region. The method is repeated until no more pixels meet the requirements.

-

4.

Image resizing:

The input images are resized to 224 x 224 and translated to three channels to match the input size of the pre-trained Squeeze Net architecture.

-

5.

Data sampling:

The dataset is divided into 80% and 20% for training and testing, respectively.

-

6.

Data augmentation:

Data augmentation strategies involve adding slightly modified copies of current data or creating new synthetic data from existing data to enhance the amount of data. It functions as a regularizer and minimizes overfitting, while an ML model is being trained. Data analysis is closely associated with oversampling. Data augmentation is only performed after the data has been divided. For appropriate data augmentation execution, the generated data for training the model must be derived only from the training data.

The data augmentation methods include:

-

1.

Random cropping: Before splitting one image into several images, choose many correct corner points at random. This approach ensures that the cropped images are not duplicated.

-

2.

Rotation: The rotation of an image is based on an angle, such as 45 degrees, and it is repeated repeatedly.

-

3.

Color shifting: This approach adds or subtracts numbers from the red, green, and blue channels (RGB). It may aid in the creation of various color distortions.

-

4.

Flipping: The input images might be flipped vertically or horizontally.

-

5.

Intensity variation: To make the images brighter or darker.

-

6.

Translation: The pixels of the image can be modified with (tx, ty) pixels.

In this paper, the rotation and flipping methods are used to solve the scarcity of the data problem, as shown in Fig. 6.

Transferring the deep CNN parameters

In this paper, the pre-trained parameters are used to improve the BC classification results. The CNNs contain three main layers (convolution, pooling, and FCL). The squeeze net contains 18 layers and is trained over the ImageNet database. while, the term “Densely Connected Convolutional Networks” is abbreviated as “Densenet.” There is a block in the Densenet where various convolution layers are interconnected. The network complexity increases with each layer, allowing for the identification of larger sections of the images. It begins to identify larger elements of the object until it finally recognizes the desired object. The ImageNet can classify 1000 classes such as pens, trees, cats, dogs, animals, and others.

Next, the SqeezeNet and DenseNet networks are used to detect tumors in breast mammography and classify the detected tumors into benign or malignant using the TL technique. All layers except the last three layers on the squeeze net network are frozen. Then, the frozen layers are transferred, and the last three layers are trained using the pre-processed INbreast images to be able to classify the breast images as illustrated in Fig. 4. However, instead of sending the deep feature sequence directly to the fully connected layer for classification and delivers it to the LSTM layer. The CNN network extracts and recognizes the local and global structures of the image in the pixel series very effectively, whereas the LSTM network discovers long-short-term associations (temporal features). LSTM was utilized to improve the model’s sensitivity and the impact of crucial variables. CNN was implemented to boost the model’s capacity to perceive the sensitivity of the data in the features. As a result, the suggested CNN-LSTM model is better suited for classifying data more precisely.

SqueezeNet is intended to minimise the number of parameters in the network while retaining excellent picture classification accuracy. This is accomplished by combining 1x1 convolutional layers with pooling layers to minimize the spatial dimension of the input feature maps, followed by bigger convolutional layers to capture complicated information. SqueezeNet achieves great accuracy while being more computationally economical than other deep neural network architectures by minimizing the amount of parameters in the network32.

DenseNet is intended to address the issue of vanishing gradients in very deep neural networks. DenseNet does this by utilizing dense connections between layers, in which the output of each layer is concatenated with the output of all preceding layers in the network. This allows the gradient to flow more readily through the network, making very deep models easier to train. Furthermore, DenseNet has been proven to outperform other deep neural network architectures in terms of accuracy and parameter efficiency, making it a popular choice for image classification applications33. The SqueezeNet and DenseNet architectures are illustrated in Fig. 7.

For classification, the SM and multiclass SVM is employed. Using the mathematical formula SM, a vector of numbers is transformed into a vector of probabilities, with the probabilities of each value proportional to the vector’s relative scale. In a multi-class problem, SM assigns a decimal probability to each class. The sum of those decimal probabilities must equal 1.0. Just before the output layer, SM is implemented using a neural network layer. The output layer and the SM layer must both have the same number of nodes. The SM equation is computed, as follows:

Where \(\vec {V}\) is the input vector to the SM function, made up of \((V_0,... V_K),\) vi is the elements of the input vector to the SM function \(e ^{vi}\) The standard exponential function is applied to each element of the input vector.

\(\sum _{j = 1}^{N}e^{Vj}\) is the normalization term. It ensures that all the output values of the function will sum to 1 and each be in the range (0,1).

N is the number of classes.

SVM is a supervised ML technique that can be applied to solve problems like classification and regression. Its purpose is to find the most optimal boundary between the various outputs.

The goal of multi-class SVM classification is to map data points into a high-dimensional space so that two classes can be separated linearly. A One-to-One strategy separates the multiclass problem into numerous binary classification problems using this method.

Another strategy is to use the One-to-Rest approach. In this strategy, the breakdown is set to a binary classifier per class. This paper employs the One-to-One strategy, with the classifier employing (m (m − 1)) /2 SVMs, where m is the number of unique class labels.

Stochastic gradient distant with moment (SGDM) and Adam optimizers is used for fine-tuning using the same parameters before and after pre-processing34,35. The parameters for the SGDM and Adam optimizers are presented in Tables 1 and 2.

To ensure clarity and facilitate reproducibility, we have provided a detailed step-by-step description of our methods in the form of pseudo code. This can be found in Algorithm 1 of our revised manuscript. The pseudo code outlines the sequence of operations performed during our experimental process, including the initialization of models, data splitting, optimizer definition, model training, addition of an LSTM layer, and classification.

Results

Mammographic dataset

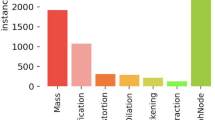

In this paper, the INbreast dataset is used to test the proposed model. INbreast is considered one of the most popular datasets in BC, as discussed in36. It contains 410 images for six classes in digital imaging and communications in medicine format. The dataset contains not only the image but also some related metadata The Breast Imaging-Reporting and Data System (BI-RADS) value is used to determine the type of tumor. Figure 8, shows the statistics of the most popular dataset usage in BC classification37. The detailed information of the INbreast dataset is illustrated in Table 3.

Experimental results

In this subsection, the experimental results of the suggested approach are presented in details. All experiments have been carried out using a dataset called INbreast. These images have been segmented into 20% for testing and 80% for training, with each class including either benign, malignant, or normal samples. SqueezeNet, as a Transfer-based (TL-based) method, is used in three different scenarios. The first experiment occurs before any pre-processing, the second experiment employs the SM classifier during pre-processing, and the last experiment employs the MSVM classifier.

To be able to have a fair comparison, all three experiments have been performed using Matlab 2021b on Windows 10 on Intel Corei7 machine, 2.67G CPU and 8.00 G of RAM.

The performance was measured using the evaluation metrics for three classes, as shown in Table 4. These metrics are Accuracy (Eq. 7), Sensitivity (Eq. 8), Specificity (Eq. 9), and Precision (Eq. 10).

Figure 9, shows the breast images after applying the pre-processing phases. The normalization and morphological operations improve the breast image for effective segmentation results while the segmentation results reduce the computation time.

The adapted pre-trained model is applied first using SGDM optimizer and then using the Adam optimizer. Using the SGDM optimizer, The SqueezeNet results before and after pre-processing per class are shown in Table 5. It’s obvious that from the pre mentioned table that using MSVM classifier gets the best results in accuracy, sensitivity, and AUC criteria. on the other hand, the classification results using the RF algorithm achieve the best results in specificity and precision criteria. The same network achieves best results in breast images classification using the ADAM optimizer as shown in Table 6. The results shown that the MSVM classifier is achieved the best results in almost all evaluation criteria as its results reached to 99.2%, 98.8%, 99.1%, 96%, and 0.998 for accuracy, sensitivity, specificity, precision, and AUC, respectively.

The results using the DenseNet architecture and SGDM optimizer before and after pre-processing are presented in Tables 7. The results from this table is shown that the MSVM classifier is achieved the best results in accuracy, sensitivity, precision, and AUC with 95.9%, 95.9%, 90.6%, and 0.998, respectively.While, the best specificity result is achieved using the RF classifier with 90.8%. The same network achieves the best results in breast images classification using the ADAM optimizer as shown in Table 8, its observed that the overall results are proved that the network optimized using the Adam optimizer is more accurate than the network optimized using the SGDM optimizer . The performance is compared with three other existing models. It can conclude that MSVM archives better results from SM and RF classifier in Adam in almost criteria i. e. accuracy, sensitivity, specificity, and AUC with 97.33%, 98%, 95.6%, and 99.8%, respectively.But, the best precision result is achieved using the RF classifier. The results analysis prove that the presented model performs better than other existing models in terms of accuracy, sensitivity, specificity, and precision.

Moreover, a comparison with different state-of-art approaches have been done with our proposed model as seen in Table 9. From this table, it can be seen that the developed model has better results comparing to recent studies existed in literature in terms of accuracy, sensitivity, specificity, precision, and AUC.

Conclusion

A DL model for breast tumor detection and classification in breast mammography was proposed in this paper. The goal of this model is to assist medical physicians in the detection and diagnosis of BC. The INbreast data were classified into three categories: benign, malignant, and normal. First, the mammographic dataset is preprocessed to improve the intensity of the images and reduce the computation time. Then, the data are increasingly augmented using data augmentation techniques. In the second phase, the SqueezeNet and DenseNet models are applied to enhance the breast features that are extracted from the input images. Finally, the SM, MSVM, and RF classifiers are employed for data classification. The experimental results using the MSVM classifier and Adam optimizer achieved the best results with accuracy, sensitivity, specificity, precision, and AUC values of 99.236%, 98.8%, 99.1%, 96%, and 0.998, respectively.

In future work, the Optimization algorithms can be used to enhance the developed algorithm38,39,40,41,42,43,44,45,46,47,48,49,50,51. For example, we can use Snake Optimizer52, Fick’s Law Algorithm (FLA)53, Dwarf Mongoose Optimization Algorithm (DMOA)54, Reptile Search Algorithm (RSA)55, Dandelion Optimizer56, and Aquila Optimizer (AO)57.

Data availability

Data is available from the authors upon reasonable request from crospending author. Data is available from the authors upon reasonable request.

References

Lowry, K. P. et al. Long-term outcomes and cost-effectiveness of breast cancer screening with digital breast tomosynthesis in the united states. JNCI J. Natl. Cancer Inst. 112, 582–589 (2020).

George, S. A. Barriers to breast cancer screening: An integrative review. Health Care Women Int. 21, 53–65 (2000).

Siegel, R. L., Miller, K. D., Wagle, N. S. & Jemal, A. Cancer statistics, 2023. CA Cancer J. Clin. 73, 17–48 (2023).

Saber, A., Sakr, M., Abo-Seida, O. M. & Keshk, A. Automated breast cancer detection and classification techniques—A survey. In 2021 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC). 200–207 (IEEE, 2021).

Mahmood, T., Li, J., Pei, Y. & Akhtar, F. An automated in-depth feature learning algorithm for breast abnormality prognosis and robust characterization from mammography images using deep transfer learning. Biology 10, 859 (2021).

Sakr, M., Saber, A., M Abo-Seida, O. & Keshk, A. Machine learning for breast cancer classification using k-star algorithm. Appl. Math. Inf. Sci. 14, 855–863 (2020).

Adnan, M. M. et al. An improved automatic image annotation approach using convolutional neural network-Slantlet transform. IEEE Access 10, 7520–7532 (2022).

Hassan, E., Shams, M. Y., Hikal, N. A. & Elmougy, S. Covid-19 diagnosis-based deep learning approaches for covidx dataset: A preliminary survey. Artif. Intell. Dis. Diagn. Prognosis Smart Healthc. 107 (2023).

Mahmoud, A. et al. Advanced deep learning approaches for accurate brain tumor classification in medical imaging. Symmetry 15, 571 (2023).

Ting, F. F., Tan, Y. J. & Sim, K. S. Convolutional neural network improvement for breast cancer classification. Exp. Syst. Appl. 120, 103–115 (2019).

Toğaçar, M., Özkurt, K. B., Ergen, B. & Cömert, Z. Breastnet: A novel convolutional neural network model through histopathological images for the diagnosis of breast cancer. Phys. A Stat. Mech. Appl. 545, 123592 (2020).

Abbas, Q. Deepcad: A computer-aided diagnosis system for mammographic masses using deep invariant features. Computers 5, 28 (2016).

Mahmood, T. et al. Breast lesions classifications of mammographic images using a deep convolutional neural network-based approach. Plos One 17, e0263126 (2022).

Sha, Z., Hu, L. & Rouyendegh, B. D. Deep learning and optimization algorithms for automatic breast cancer detection. Int. J. Imaging Syst. Technol. 30, 495–506 (2020).

Charan, S., Khan, M. J. & Khurshid, K. Breast cancer detection in mammograms using convolutional neural network. In 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET). 1–5 (IEEE, 2018).

Wahab, N., Khan, A. & Lee, Y. S. Transfer learning based deep CNN for segmentation and detection of mitoses in breast cancer histopathological images. Microscopy 68, 216–233 (2019).

Lotter, W. et al. Robust breast cancer detection in mammography and digital breast tomosynthesis using an annotation-efficient deep learning approach. Nat. Med. 27, 244–249 (2021).

Jiang, F., Liu, H., Yu, S. & Xie, Y. Breast mass lesion classification in mammograms by transfer learning. In Proceedings of the 5th International Conference on Bioinformatics and Computational Biology. 59–62 (2017).

Khan, S., Islam, N., Jan, Z., Din, I. U. & Rodrigues, J. J. C. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recogn. Lett. 125, 1–6 (2019).

Cao, H., Bernard, S., Heutte, L. & Sabourin, R. Improve the performance of transfer learning without fine-tuning using dissimilarity-based multi-view learning for breast cancer histology images. In International Conference Image Analysis and Recognition. 779–787 (Springer, 2018).

Deniz, E. et al. Transfer learning based histopathologic image classification for breast cancer detection. Health Inf. Sci. Syst. 6, 1–7 (2018).

Celik, Y., Talo, M., Yildirim, O., Karabatak, M. & Acharya, U. R. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recognit. Lett. 133, 232–239 (2020).

Saber, A., Sakr, M., Abou-Seida, O. & Keshk, A. A novel transfer-learning model for automatic detection and classification of breast cancer based deep CNN. Kafrelsheikh J. Inf. Sci. 2, 1–9 (2021).

Saber, A., Sakr, M., Abo-Seida, O. M., Keshk, A. & Chen, H. A novel deep-learning model for automatic detection and classification of breast cancer using the transfer-learning technique. IEEE Access 9, 71194–71209 (2021).

Ahmed, A. S., Keshk, A. E., Abo-Seida, M. O. & Sakr, M. Tumor detection and classification in breast mammography based on fine-tuned convolutional neural networks. IJCI Int. J. Comput. Inf. 9, 74–84 (2022).

Akselrod-Ballin, A. et al. A region based convolutional network for tumor detection and classification in breast mammography. In Deep Learning and Data Labeling for Medical Applications. 197–205 (Springer, 2016).

Al-Antari, M. A., Han, S.-M. & Kim, T.-S. Evaluation of deep learning detection and classification towards computer-aided diagnosis of breast lesions in digital x-ray mammograms. Comput. Methods Programs Biomed. 196, 105584 (2020).

Lou, M. et al. Mgbn: Convolutional neural networks for automated benign and malignant breast masses classification. Multimed. Tools Appl. 80, 26731–26750 (2021).

El Houby, E. M. & Yassin, N. I. Malignant and nonmalignant classification of breast lesions in mammograms using convolutional neural networks. Biomed. Signal Process. Control 70, 102954 (2021).

Singh, H., Sharma, V. & Singh, D. Comparative analysis of proficiencies of various textures and geometric features in breast mass classification using k-nearest neighbor. Vis. Comput. Ind. Biomed. Art 5, 1–19 (2022).

Sannasi Chakravarthy, S., Bharanidharan, N. & Rajaguru, H. Multi-deep CNN based experimentations for early diagnosis of breast cancer. IETE J. Res. 1–16 (2022).

Iandola, F. N. et al. Squeezenet: Alexnet-level accuracy with 50x fewer parameters and< 0.5 mb model size. arXiv preprint arXiv:1602.07360 (2016).

Iandola, F. et al. Densenet: Implementing efficient convnet descriptor pyramids. arXiv preprint arXiv:1404.1869 (2014).

Hassan, E., Shams, M. Y., Hikal, N. A. & Elmougy, S. The effect of choosing optimizer algorithms to improve computer vision tasks: A comparative study. Multimed. Tools Appl. 82, 16591–16633 (2023).

Hassan, E., Hikal, N. A., Elmougy, S. et al. Deep skin cancer model based on knowledge distillation technique for skin cancer classification. (2022).

Moreira, I. C. et al. Inbreast: Toward a full-field digital mammographic database. Acad. Radiol. 19, 236–248 (2012).

Yengec Tasdemir, S. B., Tasdemir, K. & Aydin, Z. A review of mammographic region of interest classification. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 10, e1357 (2020).

Hussien, A. G., Khurma, R. A., Alzaqebah, A., Amin, M. & Hashim, F. A. Novel memetic of beluga whale optimization with self-adaptive exploration–exploitation balance for global optimization and engineering problems. Soft Comput. 1–39 (2023).

Hu, G., Wang, J., Li, M., Hussien, A. G. & Abbas, M. EJS: Multi-strategy enhanced jellyfish search algorithm for engineering applications. Mathematics 11, 851 (2023).

Zheng, R. et al. A multi-strategy enhanced African vultures optimization algorithm for global optimization problems. J. Comput. Des. Eng. 10, 329–356 (2023).

Hassan, M. H., Kamel, S., Shaikh, M. S., Alquthami, T. & Hussien, A. G. Supply-Demand Optimizer for Economic Emission Dispatch Incorporating Price Penalty Factor and Variable Load Demand Levels (Transmission & Distribution, IET Generation, 2023).

Hussien, A., Liang, G., Chen, H. & Lin, H. A double adaptive random spare reinforced sine cosine algorithm. CMES-Comput. Model Eng. Sci. 136, 2267–2289 (2023).

Hashim, F. A., Khurma, R. A., Albashish, D., Amin, M. & Hussien, A. G. Novel hybrid of AOA-BSA with double adaptive and random spare for global optimization and engineering problems. Alex. Eng. J. 73, 543–577 (2023).

Hussien, A. G., Hashim, F. A., Qaddoura, R., Abualigah, L. & Pop, A. An enhanced evaporation rate water-cycle algorithm for global optimization. Processes 10, 2254 (2022).

Wang, S., Hussien, A. G., Jia, H., Abualigah, L. & Zheng, R. Enhanced remora optimization algorithm for solving constrained engineering optimization problems. Mathematics 10, 1696 (2022).

Zheng, R. et al. An improved wild horse optimizer for solving optimization problems. Mathematics 10, 1311 (2022).

Yu, H., Jia, H., Zhou, J. & Hussien, A. Enhanced aquila optimizer algorithm for global optimization and constrained engineering problems. Math. Biosci. Eng. 19, 14173–14211 (2022).

Abualigah, L. et al. Lightning search algorithm: A comprehensive survey. Appl. Intell. 51, 2353–2376 (2021).

Abualigah, L. et al. Nature-inspired optimization algorithms for text document clustering—A comprehensive analysis. Algorithms 13, 345 (2020).

Hussien, A. G., Amin, M. & Abd El Aziz, M. A. comprehensive review of moth-flame optimisation: Variants, hybrids, and applications. J. Exp. Theor. Artif. Intell. 32, 705–725 (2020).

Assiri, A. S., Hussien, A. G. & Amin, M. Ant lion optimization: Variants, hybrids, and applications. IEEE Access 8, 77746–77764 (2020).

Hashim, F. A. & Hussien, A. G. Snake optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 242, 108320 (2022).

Hashim, F. A., Mostafa, R. R., Hussien, A. G., Mirjalili, S. & Sallam, K. M. Fick’s law algorithm: A physical law-based algorithm for numerical optimization. Knowl.-Based Syst. 260, 110146 (2023).

Al-Shourbaji, I. et al. Artificial ecosystem-based optimization with dwarf mongoose optimization for feature selection and global optimization problems. Int. J. Comput. Intell. Syst. 16, 1–24 (2023).

Sasmal, B., Hussien, A. G., Das, A., Dhal, K. G. & Saha, R. Reptile search algorithm: Theory, variants, applications, and performance evaluation. Arch. Comput. Methods Eng. 1–29 (2023).

Hu, G., Zheng, Y., Abualigah, L. & Hussien, A. G. Detdo: An adaptive hybrid dandelion optimizer for engineering optimization. Adv. Eng. Inform. 57, 102004 (2023).

Sasmal, B., Hussien, A. G., Das, A. & Dhal, K. G. A comprehensive survey on aquila optimizer. Arch. Comput. Methods Eng. 1–28 (2023).

Funding

Open access funding provided by Linköping University.

Author information

Authors and Affiliations

Contributions

A. S introduce the model & run the experiments. A. M. write the paper, formal anakysis A. A: validation, investigation A. G. H. : revise the whole paper W A. A: : revise the whole paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saber, A., Hussien, A.G., Awad, W.A. et al. Adapting the pre-trained convolutional neural networks to improve the anomaly detection and classification in mammographic images. Sci Rep 13, 14877 (2023). https://doi.org/10.1038/s41598-023-41633-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-41633-0

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.