Abstract

In this work, we present a new approach to retrieve the optical phase map of an object onto which a single differentiated two-beam interference pattern is projected. The approach is based on differentiating the intensity equation of two-beam interference with respect to the carrier’s phase angle, which yields two interference patterns shifted by a very small phase angle. These two patterns are then projected onto the object. Using the definition of the mathematical derivative, the variations of the object’s optical phase are retrieved from the recorded intensity distributions of both projected patterns. In this method, the extracted optical phase angles are obtained through an inverse sine function, so the recovered wrapped phase angles are limited to the range between − π/2 and π/2; the unwrapping process for these unusually wrapped phase angles is therefore explained. The proposed method is applied to (a) two objects simulated by combinations of multiple Gaussian functions and (b) a real 3D object. It is found that the inclination of the interference pattern projected onto the object redistributes the intensity distribution according to Lambert’s cosine law of illumination, and this effect is taken into account when retrieving the object’s phase map. The limitations of the presented method are discussed, and the obtained results are found to be promising.


Introduction

Real-time three-dimensional (3D) measurement of surface shapes is extremely important in many fields, such as optics, engineering, industry and medicine1,2. Optically based profilometry techniques are commonly used for real-time 3D surface measurement because they are noncontact and nondestructive1,3,4,5. Consequently, several attempts have combined the projection of interference fringes with phase-shifting methods for this purpose1,2,6,7,8,9. This combination is preferable for two reasons. On the one hand, fringe projection relies on projecting predefined interference fringes onto an object so that their phase distribution is modulated by the object’s topography1,2,5,6,7,8. On the other hand, phase-shifting methods can extract the phase distribution of an interference pattern pixel by pixel with high resolution1,2,5,10,11,12.

By definition, the phase-shifting process requires more than one interference pattern: usually three or four2,5,9,10,11 (or even more13) phase-shifted patterns with known phase shifts. These shifted patterns can be used to extract the optical phase information with high accuracy. However, retrieving the phase values from multiple images is time-consuming, which hinders real-time measurement1,2. Recent work has therefore tried to overcome this problem; e.g., algorithms based on only two phase-shifting steps with a certain phase shift can speed up phase retrieval while keeping the same quality of the retrieved object’s shape1,2,12. Despite requiring more than one pattern, phase-shifting profilometry is still preferred over Fourier-transform profilometry, which requires only one image for phase extraction. The reason is that the accuracy of Fourier-transform profilometry is degraded by noise, by the filtering window and by boundary defects2.

For two-step phase-shifting methods, only two equations can be established. These are fewer than the three unknown parameters of each pixel in the interference pattern: (1) the background term (or carrier), (2) the intensity and (3) the phase1,2,7,14,15. Therefore, different approaches have been presented to extract the phase data with two-step phase-shifting methods, such as those based on a slowly varying background term and on a specific phase shift2,12,16,17. Moreover, Yang and He1 reported that the slowly varying background intensity of fringe patterns (i.e., carrier fringes) shifted by π/2 can be removed using an intensity derivative approach. In that approach, the differentiation was performed with respect to the position in the x-direction, on two images (containing the object) with a phase difference of π/2. We think that differentiating in this case shifts the position of the object in the recorded image. Additionally, that approach is only applicable when the phase varies in the y-direction, while variations in the x-direction cannot be evaluated correctly.

Recently, an approach was presented to retrieve the 3D surface map of an estimated object by projecting a couple of two-beam interference patterns5. In that approach, two two-beam interference patterns were shifted by a phase angle of π/2 and differentiated with respect to the carrier’s phase angle. Retrieving the phase object required projecting four interference patterns onto the object. Because of the π/2 shift between the patterns, the resultant phase map was extracted with the conventional unwrapping process, i.e., inverse-tangent unwrapping. No real object was used in that work.

In the present work, we propose an improved approach in which only a single two-beam intensity distribution is used. This distribution is differentiated with respect to the carrier’s phase angle before being projected onto the phase object. As a result, only two patterns, separated by a small phase shift, are projected. Two estimated 3D objects are used to evaluate the accuracy of the method by retrieving their phase maps. Additionally, the method is applied to a real 3D object to demonstrate its validity.

Theory

The simplest form of the 1D intensity distribution of two-beam interference (say, in the x-direction) can be given as follows:

\[ I_{c}(x) = I_{1} + I_{2} + 2\sqrt{I_{1} I_{2}}\,\cos\delta(x) \tag{1} \]

where \(I_c(x)\) is the carrier’s intensity distribution in the x-direction, and the constants \(I_1\) and \(I_2\) are the intensities of the first and second interfering beams, respectively. \(\delta(x)\) is the 1D spatial optical phase difference between the two interfering beams, and it can be regarded as the phase angle of the carrier fringes.

The values of δ(x) can be reconstructed by first differentiating Eq. (1) with respect to δ(x), which gives:

\[ \frac{\partial I_{c}(x)}{\partial \delta(x)} = -2\sqrt{I_{1} I_{2}}\,\sin\delta(x) \tag{2} \]

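Then, from the definition of the derivative, we can write:

\[ \frac{\partial I_{c}(x)}{\partial \delta(x)} = \lim_{\epsilon \to 0} \frac{I_{c,\epsilon}(x) - I_{c}(x)}{\epsilon} \tag{3} \]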

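where \(I_{c,\epsilon}(x)\) is the carrier’s intensity when δ(x) undergoes an infinitesimal variation ϵ, i.e.:

\[ I_{c,\epsilon}(x) = I_{1} + I_{2} + 2\sqrt{I_{1} I_{2}}\,\cos\left[\delta(x) + \epsilon\right] \tag{4} \]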

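Therefore, for a small but finite ϵ, Eq. (3) can be rewritten as follows:

\[ \delta(x) = \sin^{-1}\left[\frac{I_{c}(x) - I_{c,\epsilon}(x)}{2\epsilon\sqrt{I_{1} I_{2}}}\right] \tag{5} \]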

Equation (5) gives the phase-angle distribution in terms of the inverse sine. The recovered wrapped phase angles are therefore limited to the range between − π/2 and π/2, which differs from the usual wrapped range18,19,20. In this case, the phase angles can be unwrapped along the x-direction in two steps. First, they are divided into small intervals, each representing the phase difference \({\delta }_{j+1}\left(x\right)-{\delta }_{j}\left(x\right)\) between adjacent pixels along the x-direction. Then, these intervals are summed from j = 1 to j = M − 1, where M is the total number of pixels in the x-direction. The unwrapped phase angles of the carrier pattern Δc(x) can thus be obtained using the following formula:

where j is the pixel index along the x-direction.
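The NumPy sketch below illustrates the summation behind Eq. (6). Because Eq. (6) itself is not reproduced here, the code makes an assumption: each interval contributes the absolute step |δj+1(x) − δj(x)|. This holds when the true phase increases monotonically along x, as the carrier does. The function name `unwrap_arcsin` is hypothetical.

```python
import numpy as np


def unwrap_arcsin(wrapped: np.ndarray) -> np.ndarray:
    """Unwrap arcsin-wrapped phase rows by summing absolute pixel-to-pixel steps.

    ASSUMPTION: the true phase increases monotonically along the last axis (x),
    so every interval |delta_{j+1} - delta_j| adds to the accumulated phase.
    """
    steps = np.abs(np.diff(wrapped, axis=-1))   # intervals j = 1 .. M-1
    start = wrapped[..., :1]                    # first pixel taken as the reference
    zeros = np.zeros_like(start)
    return start + np.concatenate([zeros, np.cumsum(steps, axis=-1)], axis=-1)


# Usage: a carrier with f = 3 fringes over M = 2000 pixels
M, f = 2000, 3
delta = 2 * np.pi * f * np.arange(M) / M        # true carrier phase
wrapped = np.arcsin(np.sin(delta))              # wrapped to [-pi/2, pi/2], as by Eq. (5)
unwrapped = unwrap_arcsin(wrapped)
print(f"max deviation: {np.max(np.abs(unwrapped - delta)):.4f} rad")
```

The small residual deviation comes from the ± π/2 turning points. Under this assumption, it stays below one pixel step (2πf/M) per turning point.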

This simple mathematical treatment means that the values of δ(x) can be retrieved with Eq. (5) from the two intensity distributions described by Eqs. (1) and (4), which represent 1D phase variations. The corresponding 2D interference patterns are obtained by repeating these 1D distributions along the y-direction. The two resultant patterns form a pair of carrier fringes separated by the phase shift ϵ. The value of ϵ is taken as 2πf/M, where f is the number of fringes distributed over the image width of M pixels; i.e., ϵ corresponds to a shift of only one pixel. This phase shift is too small to be observed by the naked eye.
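The NumPy sketch below builds the pair of carrier patterns and applies the wrapped-phase retrieval of Eq. (5). It assumes I1 = I2 = 1 and only a few image rows, to keep the example light. The function names are illustrative. The clipping step is an added safeguard against noise and is not part of Eq. (5).

```python
import numpy as np


def carrier_pair(M=2000, rows=4, f=3.0, I1=1.0, I2=1.0):
    """Return I_c (Eq. 1), I_c,eps (Eq. 4), delta(x), eps and 2*sqrt(I1*I2)."""
    eps = 2 * np.pi * f / M                    # phase shift equivalent to one pixel
    delta = 2 * np.pi * f * np.arange(M) / M   # 1D carrier phase delta(x)
    amp = 2 * np.sqrt(I1 * I2)
    Ic = I1 + I2 + amp * np.cos(delta)
    Ic_eps = I1 + I2 + amp * np.cos(delta + eps)
    # Repeat the 1D profiles along y to form the 2D patterns
    return np.tile(Ic, (rows, 1)), np.tile(Ic_eps, (rows, 1)), delta, eps, amp


def wrapped_phase(I, I_eps, eps, amp):
    """Eq. (5): arcsin[(I - I_eps) / (2 eps sqrt(I1 I2))], wrapped to [-pi/2, pi/2]."""
    ratio = (I - I_eps) / (eps * amp)
    return np.arcsin(np.clip(ratio, -1.0, 1.0))


Ic, Ic_eps, delta, eps, amp = carrier_pair()
w = wrapped_phase(Ic, Ic_eps, eps, amp)
# The finite difference recovers the wrapped carrier to within about one eps
print(np.max(np.abs(w - np.arcsin(np.sin(delta)))), eps)
```

Because ϵ is finite, the ratio actually equals sin(δ + ϵ/2) scaled by a factor slightly below one. The retrieved map is therefore offset by roughly ϵ/2, and this offset cancels when the carrier is later subtracted from the object map.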

In the present study, we prepared three pairs of interference patterns with three different values of f (0.5, 3 and 12). For more details about these interference patterns, see Supplementary Appendix 1. The purpose of using different frequencies is clarified later. Each pair has its own value of ϵ, while M = 2000 pixels is kept fixed for all patterns. When a pair of these patterns is projected onto a plane containing an object, both patterns provide almost the same light intensity at each corresponding point on the object plane, because ϵ is small. Therefore, many problems related to significant changes in the intensity illuminating the object could be avoided1, e.g., reflectivity, color and the nonlinear response of the projector to the computer-generated signal.

Now, assume that the projection plane contains a 3D object that changes the optical phase distribution by the function φ(x,y). In this case, the intensity distribution in the presence of the object can be written as follows:

\[ I_{o}(x,y) = I_{1} + I_{2} + 2\sqrt{I_{1} I_{2}}\,\cos\left[\delta(x) + \varphi(x,y)\right] \tag{7} \]

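Similarly, when the phase angles δ(x) are shifted by the small value ϵ, the intensity distribution takes the following form:

\[ I_{o,\epsilon}(x,y) = I_{1} + I_{2} + 2\sqrt{I_{1} I_{2}}\,\cos\left[\delta(x) + \epsilon + \varphi(x,y)\right] \tag{8} \]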

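We follow the same procedure described above. Here, \(\varphi \left(x,y\right)\) does not depend on δ(x), because it is determined by the rigid shape of the object, so its derivative with respect to δ(x) vanishes. We thus obtain the modified carrier phase-angle distribution, affected by the phase object, as follows:

\[ \delta(x) + \varphi(x,y) = \sin^{-1}\left[\frac{I_{o}(x,y) - I_{o,\epsilon}(x,y)}{2\epsilon\sqrt{I_{1} I_{2}}}\right] \tag{9} \]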

Similarly, the unwrapping process described above is performed with the following summation, to which the same limitation of the inverse sine function also applies:

The object’s optical phase map φ(x,y) is then obtained by subtracting Eq. (6) from Eq. (10).
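The 1D chain of Eqs. (7)–(11) can be checked numerically with the sketch below. It uses a hypothetical Gaussian phase bump φ(x) that is gentle enough for δ(x) + φ(x) to increase monotonically. This is the same assumption as the absolute-step summation used here for Eqs. (6) and (10). The example also sets I1 = I2 = 1 and f = 0.5 over M = 2000 pixels.

```python
import numpy as np


def unwrap_arcsin(wrapped):
    """Absolute-step summation assumed for Eqs. (6) and (10) (monotonic phase)."""
    steps = np.abs(np.diff(wrapped, axis=-1))
    start = wrapped[..., :1]
    return start + np.concatenate([np.zeros_like(start), np.cumsum(steps, axis=-1)], axis=-1)


M, f, I1, I2 = 2000, 0.5, 1.0, 1.0
amp = 2 * np.sqrt(I1 * I2)
eps = 2 * np.pi * f / M                                # one-pixel shift
x = np.arange(M)
delta = 2 * np.pi * f * x / M                          # carrier phase
phi_true = 0.3 * np.exp(-((x - 1000) / 300.0) ** 2)    # hypothetical phase object


def intensity(phase):
    """Two-beam intensity of Eqs. (1), (4), (7) and (8) for a given total phase."""
    return I1 + I2 + amp * np.cos(phase)


def wrapped(I, I_eps):
    """Finite-difference inverse sine of Eqs. (5) and (9)."""
    return np.arcsin(np.clip((I - I_eps) / (eps * amp), -1.0, 1.0))


carrier = unwrap_arcsin(wrapped(intensity(delta), intensity(delta + eps)))   # Eq. (6)
with_obj = unwrap_arcsin(wrapped(intensity(delta + phi_true),
                                 intensity(delta + eps + phi_true)))         # Eq. (10)
phi = with_obj - carrier                                                     # Eq. (11)
print(f"max |phi - phi_true| = {np.max(np.abs(phi - phi_true)):.2e} rad")
```

If φ were steep enough to make δ + φ decrease locally, the absolute-step summation would fold those parts. This is one reason to keep the object phase slope below the carrier slope 2πf/M in this sketch.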

Estimation of 3D objects

At this stage, we apply the approach to two estimated (i.e., simulated) objects to evaluate the proposed method reliably before applying it to a real object. Each of these estimated objects consists of a combination of nine 3D Gaussian functions as follows:

where, \({\mathrm{G}}_{n}(x,y)\) is given as:

These 3D Gaussians are distributed on a plane of (2000 pixels × 2000 pixels) with different shape parameters, see Fig. 1. More information about the parameters of the surface objects can be found in Supplementary Appendix 2.
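The sketch below shows one possible way to build such a test surface. The exact form of Eq. (13) and the parameters of Supplementary Appendix 2 are not reproduced here. The code therefore assumes that each Gn is an elliptical Gaussian with amplitude A, centre (x0, y0) and widths (sx, sy), and that the combination is a plain sum. The nine parameter sets are purely hypothetical.

```python
import numpy as np


def gaussian_object(params, size=2000):
    """Sum of 2D Gaussians G_n(x, y) on a size x size pixel grid (heights in pixels)."""
    y, x = np.ogrid[0:size, 0:size]             # broadcastable row/column indices
    G_T = np.zeros((size, size))
    for A, x0, y0, sx, sy in params:
        G_T += A * np.exp(-((x - x0) ** 2 / (2.0 * sx ** 2)
                            + (y - y0) ** 2 / (2.0 * sy ** 2)))
    return G_T


# Hypothetical parameters: a 3 x 3 grid of bumps with increasing height and width
params = [(20 + 5 * n, 400 + 600 * (n % 3), 400 + 600 * (n // 3), 80 + 10 * n, 100)
          for n in range(9)]
G_T = gaussian_object(params)
print(G_T.shape, round(float(G_T.max()), 2))
```

Using `np.ogrid` instead of a full mesh keeps the coordinate arrays one-dimensional and lets broadcasting form each 2000 × 2000 Gaussian.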

Projecting an estimated object by a pair of two-beam patterns

Change of phase due to the inclination of the incident light on the object

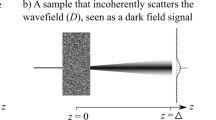

When a pair of two-beam interference images is projected onto the object with an inclination angle θ between the light direction and the x-axis, the recorded fringes are shifted according to the object’s height. This shift thus encodes the object’s topography. Here, we assume that the observer (recording camera) faces the plane containing the object; see Fig. 2a. The relation between the object’s height GT(x,y) and the resulting horizontal shift xs is depicted in Fig. 2b. The phase angles produced by the object are therefore given as follows:

where ξ = 2πf/M (Rad./pixel).

By substituting φ(x,y) into Eqs. (7) and (8), one can obtain the estimated recorded images of the two-beam interference, deformed by the presence of the object.
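Eq. (14) is not reproduced in this text, so the sketch below reads Fig. 2b as follows: a point of height GT is displaced horizontally by xs = GT/tan θ, so that φ = ξ·xs. This is an assumption. The code then substitutes φ into Eqs. (7) and (8) with I1 = I2 = 1 and ignores the Lambert redistribution discussed in the next subsection. The small single-bump object and the function name are hypothetical.

```python
import numpy as np


def deformed_pair(G_T, f=0.5, theta_deg=50.0, I1=1.0, I2=1.0):
    """Simulated recordings of Eqs. (7) and (8) for a height map G_T (pixels)."""
    M = G_T.shape[1]
    xi = 2 * np.pi * f / M                       # rad/pixel, as defined in the text
    eps = xi                                     # one-pixel carrier shift
    x_s = G_T / np.tan(np.radians(theta_deg))    # ASSUMED geometry of Fig. 2b
    phi = xi * x_s                               # object phase
    delta = xi * np.arange(M)[np.newaxis, :]     # carrier phase, 2*pi*f*x/M
    amp = 2 * np.sqrt(I1 * I2)
    I_o = I1 + I2 + amp * np.cos(delta + phi)             # Eq. (7)
    I_o_eps = I1 + I2 + amp * np.cos(delta + eps + phi)   # Eq. (8)
    return I_o, I_o_eps, phi


# Hypothetical object: a single Gaussian bump on a 200 x 400 grid
y, x = np.ogrid[0:200, 0:400]
G = 30.0 * np.exp(-((x - 200) ** 2 + (y - 100) ** 2) / (2 * 40.0 ** 2))
I_o, I_o_eps, phi = deformed_pair(G)
```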

Change of intensity due to the inclination of the incident light on the object

Up to this point, we have the equations describing the variation of the phase due to the presence of the object. However, the object not only deforms the phase distribution of the projected image but also redistributes the projected intensities. This is due to the inclination angle β between the incident light and the object’s surface at each point; see Fig. 2b.

where α is given by Eq. (16) and can be either positive or negative.

This inclination changes the intensity distribution of the fringes on the object according to Lambert’s cosine law of illumination21. The effect of Lambert’s law must therefore be taken into account in the intensities I1 and I2. I1 and I2 can be assumed to be homogeneous only when a plain plane is illuminated. When a plane containing an object is illuminated, I1 and I2 have to be projected directly onto the object, and their recorded images (which are no longer homogeneous) should be used instead of the constants I1 and I2. However, for estimated objects with known parameters and a controlled incidence angle, we can predict the intensity distributions I1(x,y) and I2(x,y) as follows:

Figure 3 illustrates how the two estimated objects appear when they are illuminated by the intensity distribution I2(x,y). The illumination is clearly not homogeneous; it is redistributed by the presence of the objects.

Illuminating the two estimated objects by the intensity distribution I2(x,y), given by Eq. (17).

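When the effect of the inclination angle is considered, Eqs. (7) and (8) can be rewritten as follows:

\[ I_{o}(x,y) = I_{1}(x,y) + I_{2}(x,y) + 2\sqrt{I_{1}(x,y)\, I_{2}(x,y)}\,\cos\left[\delta(x) + \varphi(x,y)\right] \tag{18} \]

and

\[ I_{o,\epsilon}(x,y) = I_{1}(x,y) + I_{2}(x,y) + 2\sqrt{I_{1}(x,y)\, I_{2}(x,y)}\,\cos\left[\delta(x) + \epsilon + \varphi(x,y)\right] \tag{19} \]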

Figure 4 illustrates the two estimated objects illuminated by the intensity distribution given by Eq. (18), for fringes with f = 0.5 and 12 and for θ = 50°.

The projection of the interference pattern (Eq. (18)) on the two estimated objects (A,B) for f = 0.5 and 12.

Thus, Eqs. (17)–(19) must be taken into account together with Eqs. (6) and (9)–(11) when recovering the object’s phase. Figure 5 shows the wrapped phase-angle maps of the carrier and of the two estimated objects (A, B) obtained with this procedure for f = 12.

To obtain the unwrapped phase distribution of the maps shown in Fig. 5, Eqs. (6) and (10) are applied. Figure 6 shows the unwrapped phase distributions of the wrapped phase maps illustrated in Fig. 5.

By subtracting the unwrapped carrier phase distribution (Fig. 6a) from the unwrapped maps of the two objects according to Eq. (11), the phase objects φ(x,y) are recovered. To express the recovered objects as heights in pixels, the object’s phase values are divided by ξ; see Eq. (14). The two reconstructed objects are illustrated in Fig. 7.

Limitations of the proposed method

Summations in Eqs. (6) and (10)

When a phase angle approaches − π/2 or π/2, an error arises at these turning points. This error increases with the number of fringes f in the field. It can be reduced by increasing the number of pixels M in the field, i.e., the image resolution; see Fig. 8. When the unwrapped phase is calculated with Eqs. (6) and (10), it can happen at these turning points that δj+1 = δj or (δ + φ)j+1 = (δ + φ)j. Very small phase angles are then missed by the end of the summation. The number of missed phase angles depends on the number of ± π/2 turning points in the phase angles, and the error introduced each time is inversely proportional to the number of pixels M. As demonstrated in Fig. 8, the error sources appear at phase angles of ± π/2.
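The turning-point error can be explored numerically. Under the same absolute-step assumption for Eqs. (6) and (10), the sketch below measures the largest deviation between the unwrapped and the true carrier phase for several fringe numbers f and widths M. The exact values depend on where the ± π/2 points fall relative to the pixel grid, but the trend with f and M matches the description above.

```python
import numpy as np


def unwrap_error(f, M):
    """Largest deviation of the arcsin-unwrapped carrier from the true carrier phase."""
    delta = 2 * np.pi * f * np.arange(M) / M
    wrapped = np.arcsin(np.sin(delta))          # wrapped to [-pi/2, pi/2]
    steps = np.abs(np.diff(wrapped))            # ASSUMED absolute-step summation
    unwrapped = wrapped[0] + np.concatenate(([0.0], np.cumsum(steps)))
    return np.max(np.abs(unwrapped - delta))


errors = {(f, M): unwrap_error(f, M) for f in (0.5, 3, 12) for M in (2000, 4000)}
for (f, M), err in errors.items():
    print(f"f = {f:>4}, M = {M}: max error = {err:.4f} rad")
```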

We therefore apply three estimated fringe patterns, with f = 0.5, 3 and 12 fringes, to each of the estimated objects. The corresponding shifts ϵ in the carrier phase are 1.57 × 10⁻³ Rad., 9.42 × 10⁻³ Rad. and 37.7 × 10⁻³ Rad., respectively. Each image is 2000 pixels × 2000 pixels. In each case, the reconstructed object is subtracted from the estimated one to calculate the difference between the simulated and reconstructed objects. These differences are illustrated in Fig. 9 for the two objects. For object (A), the error is reduced from ± 0.1 Rad. (f = 12) to ± 2.5 × 10⁻³ Rad. (f = 0.5). For object (B), the error is reduced from ± 0.3 Rad. (f = 12) to ± 8 × 10⁻³ Rad. (f = 0.5). This important result suggests minimizing the number of projected fringes (for a given pattern width in pixels) to reduce the error of the proposed approach.
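The shift values quoted above follow from ϵ = 2πf/M, and the short calculation below reproduces them. It also gives a rough upper bound on the accumulated carrier unwrapping loss, assuming that at most about one ϵ is lost at each ± π/2 turning point. This bound is only an illustration and is not the error measure used for Fig. 9.

```python
import math

M = 2000
eps_values, turning_points, loss_bound = {}, {}, {}
for f in (0.5, 3, 12):
    eps_values[f] = 2 * math.pi * f / M            # one-pixel phase shift
    # Number of (2k+1)*pi/2 values below 2*pi*f, i.e. +/- pi/2 turning points
    turning_points[f] = math.ceil(2 * f - 0.5)
    # ASSUMED worst case: at most one eps lost per turning point
    loss_bound[f] = turning_points[f] * eps_values[f]
    print(f"f = {f:>4}: eps = {eps_values[f]:.3e} rad, "
          f"{turning_points[f]} turning points, bound = {loss_bound[f]:.3e} rad")
```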

The boundaries of the incidence angle

The angle β, i.e., the incidence angle of the projected image given in Eq. (15), should be \(\le \frac{\pi }{2}\) to ensure that there is no discontinuity in the recorded fringes. This means that the following relation must always be satisfied:

Since the incidence angle θ must always be positive, θ is limited by the following condition:

When α is negative, inequality (21) is always fulfilled. When α is positive, however, it must not exceed θ; otherwise, a discontinuity appears in the recorded fringes. In this case, we recommend increasing the inclination angle θ of the projected light.
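Before projecting, a simulated surface can be screened for violations of this condition. Eq. (16) is not reproduced here, so the sketch assumes that α is the local surface tilt along x, α = arctan(∂GT/∂x), with a positive slope taken as positive α. The function names and this sign convention are hypothetical.

```python
import numpy as np


def alpha_map(G_T):
    """ASSUMED local tilt angle along x (radians): arctan of the height gradient."""
    return np.arctan(np.gradient(G_T, axis=1))


def violating_pixels(G_T, theta_deg):
    """Mask of pixels where a positive alpha exceeds theta (fringe discontinuity)."""
    return alpha_map(G_T) > np.radians(theta_deg)


def min_inclination_deg(G_T):
    """Smallest theta (degrees) satisfying alpha <= theta at every pixel."""
    return max(0.0, float(np.degrees(alpha_map(G_T).max())))


x = np.arange(400, dtype=float)
steep = np.tile(2.0 * x, (3, 1))    # slope 2   -> alpha of about 63.4 degrees
gentle = np.tile(0.5 * x, (3, 1))   # slope 0.5 -> alpha of about 26.6 degrees
print(violating_pixels(steep, 50).all(), violating_pixels(gentle, 50).any())
print(round(min_inclination_deg(steep), 1))
```

In this sketch, negative slopes never trigger the mask, which mirrors the statement that negative α always satisfies inequality (21).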

Experimental verification of the proposed method

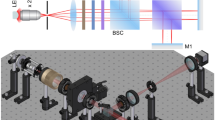

To verify the proposed method, we prepared the fringe projection setup shown in Fig. 10. A flower-like object is fixed on a flat background of ~ 39 cm × 29.5 cm. An image of homogeneous illumination is projected onto the object using a “BenQ smart 1080p” projector. The incidence angle of the light on the object ranges from 41° to 50° with respect to the x-axis. The image modulated by the object is recorded with a “Marlin F145B2” CCD camera (Allied Vision®) with a pixel pitch of 4.65 µm. As discussed in the “Change of intensity due to the inclination of the incident light on the object” section, an image of homogeneous intensity equal to \(2 \sqrt{{I}_{1 } {I}_{2}}\) is projected onto the object. The recorded image and its digitally filtered version are shown in Fig. 11a,b, respectively. Digital filtering is applied to minimize the noise in the recorded images. The homogeneous illumination is clearly affected by the angle β(x,y); see Eq. (17).

Two interference patterns (carrier fringes) with a spatial frequency f = 0.5 and \(\epsilon\) = 0.1 Rad. are prepared using Eqs. (1) and (4). These patterns are projected onto the object and recorded by the CCD camera. The first modulated carrier fringes are shown in Fig. 12a and their digitally filtered version in Fig. 12b. Similarly, the \(\epsilon\)-shifted carrier fringes modulated by the object are shown in Fig. 13a and their digitally filtered version in Fig. 13b.

The (a) recorded and (b) digitally filtered images of the flower-like object when it is projected by the carrier fringes prepared according to Eq. (1).

The (a) recorded and (b) digitally filtered images of the flower-like object when it is projected by the carrier fringes prepared according to Eq. (4).

Using Eq. (9), we can obtain the object phase \(\varphi (x,y)\) added to the carrier phase \(\delta \left(x\right)\) by substituting the values of both \({I}_{o}(x,y)\) and \({I}_{o,\epsilon }\left(x,y\right)\). The images in Figs. 12b and 13b represent \({I}_{o}(x,y)\) and \({I}_{o,\epsilon }\left(x,y\right)\), respectively. The denominator \(2 \epsilon \sqrt{{I}_{1 } {I}_{2}}\) is obtained by multiplying the image shown in Fig. 11b by the small phase shift \((\epsilon )\). The resultant phase map from Eq. (9) is illustrated in Fig. 14. Similarly, the phase angle \(\delta \left(x\right)\) of the background field is obtained from Eq. (5); see Fig. 15.
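The substitution described above can be written compactly, as in the sketch below. It assumes that the three recorded images are already registered, filtered floating-point arrays of equal shape. The filter used in the experiment is not specified, so none is applied here. `H` stands for the filtered homogeneous image of Fig. 11b. The small `floor` guarding against dark pixels is an added assumption.

```python
import numpy as np


def experimental_wrapped_phase(I_o, I_o_eps, H, eps, floor=1e-6):
    """Eq. (9) with the denominator 2*eps*sqrt(I1*I2) taken as eps * H pixel by pixel.

    I_o, I_o_eps : recorded images under the carrier and the eps-shifted carrier
    H            : recorded image of the homogeneous pattern 2*sqrt(I1*I2)
    """
    denom = eps * np.maximum(H, floor)        # avoid division by (near-)zero pixels
    return np.arcsin(np.clip((I_o - I_o_eps) / denom, -1.0, 1.0))


# Synthetic check with equal beams (I1 = I2), so the background equals H;
# the non-uniform illumination H cancels out of the ratio
eps = 0.1                                              # value used in the experiment
H = np.tile(np.linspace(0.5, 1.5, 300), (10, 1))
psi = np.tile(np.linspace(0.0, np.pi, 300), (10, 1))   # delta(x) + phi(x, y)
I_o = H + H * np.cos(psi)
I_o_eps = H + H * np.cos(psi + eps)
w = experimental_wrapped_phase(I_o, I_o_eps, H, eps)
```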

The phase maps of the object and of the carrier do not exceed one cycle. In this case, the object phase \(\varphi (x,y)\) can be obtained directly by subtracting the phase map shown in Fig. 15 from that shown in Fig. 14. This yields \(\varphi (x,y)\) as illustrated in Fig. 16.

Once the object phase map (in Rad.) is obtained, Eq. (14) can be used to convert the phase values into the object’s height (in mm), where:

where ξ = 2πf/M (Rad./pixel). With f = 0.5 and M = 1280 pixels, ξ = 24.5 × 10⁻⁴ Rad./pixel. Since the light from the projector is not parallel, \(\theta \left(x\right)\) ranges from 41° (at the farthest projected point) to 50° (at the nearest projected point). This variation is taken into account when calculating \({\text{tan}}(\theta (x))\). Considering the real metric dimensions, one pixel is found to be equivalent to 0.306 mm. Figure 17 shows the height (in mm) of the retrieved object.
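The calibration arithmetic can be reproduced with the sketch below. Eq. (14) is not reproduced in this text, so the sketch rests on three assumptions. First, the geometry is h = xs·tan θ(x), with xs = φ/ξ in pixels. Second, θ(x) varies linearly from 50° to 41° across the image columns; which side is nearer to the projector is also an assumption. Third, it uses the 0.306 mm/pixel scale reported above.

```python
import numpy as np

f, M = 0.5, 1280
xi = 2 * np.pi * f / M          # rad/pixel, about 24.5e-4
mm_per_pixel = 0.306            # scale reported for the experiment


def phase_to_height_mm(phi):
    """Convert an object phase map (rad) to height (mm) under the stated assumptions."""
    cols = phi.shape[-1]
    theta = np.radians(np.linspace(50.0, 41.0, cols))   # ASSUMED linear variation
    x_s = phi / xi                                      # horizontal shift in pixels
    return x_s * np.tan(theta) * mm_per_pixel           # ASSUMED h = x_s * tan(theta)


h = phase_to_height_mm(np.full((2, 1280), xi))          # a uniform one-pixel shift
print(f"xi = {xi:.4e} rad/pixel, height range {h.min():.3f} to {h.max():.3f} mm")
```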

We note that the above experimental work is our first attempt to verify the presented theory. The number of problems we faced surprised us, particularly the accompanying noise, whose source we could not identify exactly. This noise might be due to the sensitivity of the camera used and its fast response to the incident light. Nevertheless, as a first trial, we believe the result is reasonable. It demonstrates that the theory is valid and promising for use with better instruments to give more accurate results.

Conclusion

We presented an enhanced approach to recover the phase map of a three-dimensional object. The method is based on differentiating a single two-beam interference intensity distribution with respect to the phase angle. Only two estimated fringe patterns are projected onto two estimated objects with an inclination angle of 50°. A formula is proposed for unwrapping the phase values, which are obtained through an inverse sine. The method exhibits an error that grows with the number of fringes in the projected pattern. This error is shown to be effectively reduced by lowering the fringe frequency in the projected field. The difference between the reconstructed and estimated objects was determined: for 0.5 fringes in the projected pattern, it does not exceed 8 × 10⁻³ Rad., i.e., approximately π/393. A first experimental application of the proposed approach to a real 3D object is also presented. By following the steps of the proposed method, we were able to retrieve the projected object and, consequently, the topography of the real object. The method provides a highly sensitive way to recover the phase map at the pixel scale. The obtained results are promising despite the unexpected noise. Identifying the sources of this noise and eliminating it require further work, which is our outlook.

Data availability

All data generated or analyzed during this study are included in this published article and its Supplementary Information files.

References

1. Yang, F. & He, X. Two-step phase-shifting fringe projection profilometry: Intensity derivative approach. Appl. Opt. 46, 7172 (2007).

2. Yin, Z., Du, Y., She, P., He, X. & Yang, F. Generalized 2-step phase-shifting algorithm for fringe projection. Opt. Express 29, 13141 (2021).

3. Brown, G. M. Overview of three-dimensional shape measurement using optical methods. Opt. Eng. 39, 10 (2000).

4. Cheng, H., Liu, H., Zhang, Q. & Wei, S. Phase retrieval using the transport-of-intensity equation. In 2009 Fifth International Conference on Image and Graphics 417–421. https://doi.org/10.1109/ICIG.2009.32 (IEEE, 2009).

5. Ramadan, W., Wahba, H. & El Tawargy, A. Optical phase retrieving by employing a differentiation of a couple of two-beam π/2 phase-shifted projected interference patterns. J. Opt. Soc. Am. B. https://doi.org/10.1364/JOSAB.494388 (2023).

6. Quan, C., He, X. Y., Wang, C. F., Tay, C. J. & Shang, H. M. Shape measurement of small objects using LCD fringe projection with phase shifting. Opt. Commun. 189, 21–29 (2001).

7. Quan, C., Tay, C. J., Kang, X., He, X. Y. & Shang, H. M. Shape measurement by use of liquid-crystal display fringe projection with two-step phase shifting. Appl. Opt. 42, 2329 (2003).

8. Wang, Z., Du, H. & Bi, H. Out-of-plane shape determination in generalized fringe projection profilometry. Opt. Express 14, 12122 (2006).

9. Huang, P. S. & Zhang, S. Fast three-step phase-shifting algorithm. Appl. Opt. 45, 5086 (2006).

10. El-Din, M. A. S. & Wahba, H. H. Investigation of refractive index profile and mode field distribution of optical fibers using digital holographic phase shifting interferometric method. Opt. Commun. 284, 3846–3854 (2011).

11. Malacara, D. Handbook of Optical Engineering (Marcel Dekker, 2001).

12. Flores, V. H., Reyes-Figueroa, A., Carrillo-Delgado, C. & Rivera, M. Two-step phase shifting algorithms: Where are we? Opt. Laser Technol. 126, 106105 (2020).

13. Liu, C.-Y. & Wang, C.-Y. Investigation of phase pattern modulation for digital fringe projection profilometry. Meas. Sci. Rev. 20, 43–49 (2020).

14. Flores, V. H. & Rivera, M. Robust two-step phase estimation using the simplified Lissajous ellipse fitting method with Gabor filters bank preprocessing. Opt. Commun. 461, 125286 (2020).

15. Zhang, H., Zhao, H., Zhao, J., Zhao, Z. & Fan, C. Two-shot fringe pattern phase demodulation using the extreme value of interference with Hilbert-Huang per-filtering. In Optical Measurement Systems for Industrial Inspection XI Vol. 151 (eds Lehmann, P. et al.) (SPIE, 2019).

16. Yin, Y. et al. Two-step phase shifting in fringe projection: Modeling and analysis. In Optical Micro- and Nanometrology VII Vol. 32 (eds Gorecki, C. et al.) (SPIE, 2018).

17. Yin, Y. et al. A two-step phase-shifting algorithm dedicated to fringe projection profilometry. Opt. Lasers Eng. 137, 106372 (2021).

18. Deng, J. et al. Edge-preserved fringe-order correction strategy for code-based fringe projection profilometry. Signal Process. 182, 107959 (2021).

19. Wahba, H. Digital Holography and Interferometric Metrology of Optical Fibres Digital Holographic Phase Shifting and Interferometric Characterization of Optical Fibers (VDM Verlag Dr. Müller, 2011).

20. Wahba, H. H. & Kreis, T. Characterization of graded index optical fibers by digital holographic interferometry. Appl. Opt. 48, 1573 (2009).

21. Born, M. & Wolf, E. Principles of Optics: Electromagnetic Theory of Propagation, Interference and Diffraction of Light (Cambridge University Press, 1999).

Funding

The open access funding is provided by the Egyptian Science, Technology & Innovation Funding Authority (STDF) in cooperation with the Egyptian Knowledge Bank (EKB).

Author information


Contributions

W.A.R. presented the theory of this work. H.H.W. designed the programs of simulation and analyses. All authors contributed equally to writing and reviewing the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ramadan, W.A., El-Tawargy, A.S. & Wahba, H.H. Optical phase retrieving of a projected object by employing a differentiation of a single pattern of two-beam interference. Sci Rep 13, 14840 (2023). https://doi.org/10.1038/s41598-023-41627-y


