Abstract

Snoring, as a prevalent symptom, seriously interferes with life quality of patients with sleep disordered breathing only (simple snorers), patients with obstructive sleep apnea (OSA) and their bed partners. Researches have shown that snoring could be used for screening and diagnosis of OSA. Therefore, accurate detection of snoring sounds from sleep respiratory audio at night has been one of the most important parts. Considered that the snoring is somewhat dangerously overlooked around the world, an automatic and high-precision snoring detection algorithm is required. In this work, we designed a non-contact data acquire equipment to record nocturnal sleep respiratory audio of subjects in their private bedrooms, and proposed a hybrid convolutional neural network (CNN) model for the automatic snore detection. This model consists of a one-dimensional (1D) CNN processing the original signal and a two-dimensional (2D) CNN representing images mapped by the visibility graph method. In our experiment, our algorithm achieves an average classification accuracy of 89.3%, an average sensitivity of 89.7%, an average specificity of 88.5%, and an average AUC of 0.947, which surpasses some state-of-the-art models trained on our data. In conclusion, our results indicate that the proposed method in this study could be effective and significance for massive screening of OSA patients in daily life. And our work provides an alternative framework for time series analysis.

Similar content being viewed by others

Introduction

Snoring is a breathing-related event, and it is generated by the vibration of anatomical structures in the upper airway during sleep, such as pharyngeal walls, soft palate, uvula, and tonsils1. Epidemiologic studies have shown that habitual snoring affects 9–17% of the worldwide adult population including about 40% of men and 24% of women2,3. Snoring disrupts seriously sleep both quantity and quality of snorers and anyone who shares the same sleeping space. Consequently, it often leads to daytime sleepiness and increased inattentiveness elevating the risk of accidents, as well as certain health conditions such as sleep disturbance, systemic arterial hypertension and coronary artery disease4. However, due to subjective neglect and limited medical resources, there are approximately 80–90% of OSA population in the developed world remaining undiagnosed and untreated, while the severity of this issue is even more pronounced in the developing world5,6.

In general, snoring occurs when the person sleeps on the back. For a snorer without any other nocturnal respiratory pathology (simple snore), it could be relieved naturally by sleeping on the side7. So, some gadgets have been developed to prevent snoring by providing some kind of notification to snorers when snoring occurs8,9,10. In addition, as one of the earliest and most prevalent nocturnal symptoms, snoring appears in 70–90% of obstructive sleep apnea (OSA) patients11. And studies have shown that the characteristics of snoring sounds, such as intensity12, spectral13, pitch-related features14,15, time and frequency-related parameters16, regularity parameters17 and some other features18,19, could be used to describe the different between simple snorers and OSA patients, as well as the severity of OSA. In addition, compared with the Polysomnography (PSG), the equipment recording audio signal is convenient, low-cost and non-contacting. Therefore, snoring signal has been emerged as one of primary physiological indicators to assess the condition of snorers. As a result, automatic detection of snoring sounds has garnered significant attention in academic research, becoming a prominent area of interest.

In light of the increasing community prevalence of OSA and growing demand for medical care personnel and supplies20, researchers have dedicated to achieving automated detection of snoring events with the help of computer technologies over the past two decades21. In early time, artificial extraction of physical or mathematical features was performed on the raw audio signal, which were subsequently applied to train classifiers, such as hidden Markov model (HMM) based on 12 Mel-frequency cepstral coefficients (MFCCs)22, unsupervised clustering algorithms based on formant frequencies or low-dimensional features by principal component analysis (PCA)23,24,25, AdaBoost classifier based on features in time and spectral domains26, quadratic discriminant analysis for formant frequencies27, K nearest neighbors (KNN) model trained by MFCCs and empirical mode decomposition28. Gaussian mixture model (GMM) trained by 40 features in time, energy and frequency domain29, linear regression models based on average normalized energy in subband30, support vector machine (SVM) based on multi-features in time domain31 and artificial neural network (ANN) band on temporal and spectral features32,33. The results have demonstrated the effectiveness of these methods in snoring recognition. However, it remains challenging to determine optimal feature set due to the diversity and nonlinearity of snoring sound.

In order to tackle the challenges of feature extraction, researchers have successfully applied deep learning algorithms, showing remarkable performance in feature representation of images and natural language, to analyze various physiological signals, including electrocardiogram (ECG)34, ballistocardiogram (BCG)35, vectorcardiography (VCG)36, electroencephalograph (EEG)37, electromyography (EMG)38, among others. And these algorithms have yielded promising results in characterizing the nocturnal sleep respiratory audio signal subsequently. For instance, Nguyen et al.39 and Çavuşoğlu et al.40 respectively utilized multilayer perceptron neural networks (MLP) to differentiate between snore and non-snore sounds; Arsenali et al.41 applied a long-short term memory (LSTM) model to classify snoring and non-snoring sounds after extracting MFCCs; Sun et al.42 proposed SnoreNet, a one-dimensional CNN (1D CNN) that directly operates on raw sound signals without manually crafted features; Khan et al.8 developed a two-dimension CNN (2D CNN) to analyze MFCCs images for automatically detecting snoring and applied it into a wearable gadget; Jiang et al.43 found an optional combination of Mel-spectrogram and CNN-LSTM-DNN for snoring recognition; Xie et al.44 employed a CNN to extract features from the constant-Q transformation (CQT) spectrogram, and then a recurrent neural network (RNN) was utilized to process the sequential CNN output for classifying the audio signal to snore or non-snore events. The comprehensive experiment settings and results of these researches are provided in Table 1.

Although most of deep learning algorithms performed well in classification of snoring and non-snoring episodes, there still are some drawbacks in these studies. Firstly, the training data was limited in availability and lacked diversity. So far, the data acquisition in most of researches was conducted in sleep laboratory or hospitals, where the sleep environment tends to be relatively quieter compared to a private bedroom at home. It means the signal to noise ratio (SNR) of recorded audio signal might be higher. In a few studies where experiments were performed at home, there only was less than 10 participants42. It is negative to train a robust classifier when these data recorded from the same period and a small number of subjects was used. Secondly, a new representation for snoring sound is needed. Some researches have shown that the effectiveness of spectrograms, Mel-spectrum in particular, in snoring detection while almost the same accuracy was obtained for many classifiers including single CNN8, LSTM41 and hybrid models43,44. It could be inferred that one of challenge in snoring detection is to find a better feature representation for raw audio signals at present.

In this study, we put forth several solutions to the aforementioned issues. In order to increase the diversity of samples, we design an experiment involved more than eighty participants and lasted more than ten months. Moreover, the data acquisition in this experiment was conducted at subject’s habitual sleep environment. In addition, the visibility graph (VG) method is introduced to represent nonlinearity of snoring sounds using mapped images. And then, a novel hybrid model combined 1D CNN and 2D CNN architectures is proposed for snoring recognition. Therein, the 1D CNN is used to for raw signal analysis and 2D CNN for corresponding images mapped by VG. And features generated by 1D CNN and 2D CNN are concatenated and analyzed by the next fully connected layer.

The main contributions of this paper are shown as following:

-

(a)

A larger and more diverse data set of nocturnal sleep respiratory audio signals recorded in subjects’ private homes is built in this work.

-

(b)

The VG method is first introduced to represent snoring sounds using mapped images.

-

(c)

A hybrid 1D-2D CNN framework is proposed for snoring sounds recognition, which is more accurate and robust than state-of-the-art deep learning models on our dataset.

The remainder of this paper is organized as follow. “Materials and methods” section describes the corpus of respiratory audios, and presents our processing methods including the visibility graph for data transformation and CNN model for classification in this paper. And then in “Experimental results” section, experimental results show the performance of our model in snoring classification task. The final section of this paper discusses our results and make some concluding remarks.

Materials and methods

Data recording

Support by the Affiliated of Hangzhou Normal University (Zhejiang, China), we recorded 88 individuals between 12 and 81 years old including 23 females and 65 males in our experiment from March 2019 until December 2019. All of them or their guardians on their behalf signed informed consent forms prior to our study. In our experiments, all methods met the ethical principles of the Declaration of Helsinki, the guidelines of the relevant guidelines and regulations. And the protocol for this data analysis study was approved by the Zhejiang Natural Sciences Foundation Committee and Ethics Board of Hangzhou Dianzi University.

Based on the aim of our research, a portable PSG and a high-fidelity sound acquisition equipment were used to recording respiratory sounds during overnight sleep in home environment instead of sleep center in studies before. Therein, the sound acquisition equipment was designed by ourselves, including a control module taking the I.MX6ULL as a core, a high-resolution microphone (NIS-80V, FengHuo Electronic Technology Co., Ltd, GuangDong, China; 20–2000 Hz frequency range, − 45 dB sensitivity), a power supply module, and a transmission module allowing online and offline data storage and transmission. During the experiment, it was placed around the subject at a distance in the range of 20–150 cm, and recorded monophonic nocturnal breathing sounds with a sampling frequency of 16,000 Hz. Finally, these signals were saved as some wave format files where each of them was 100 M. On the other hand, the portable PSG recorded various physiological signals including oxygen saturation, sphygmic and respiratory effort at a 10 Hz sampling frequency, which were used to diagnose OSAHS of subjects by a medical professional. In our experiment, the subjects contained simple snorers and OSAHS patients with different severity. Detail information of subjects are summarized in Table 2. The average duration of a respiratory sound recorded was 7 h and 26 min.

The long breathing sound signal recorded by microphone captures both snoring events and normal respiratory sound. First, some alternative sound episodes were extracted from the raw respiratory sounds by a clustering algorithm45. And then, these sound episodes were manually annotated as snore or non-snore in visual and auditory inspection. In order to ensure the diversity of snoring, part of subjects from normal snorers, mild, moderate, and severe groups were chosen. In total, 5441 sound episodes including 3384 snoring segments and 2057 non-snore ones were chosen in our experiment. The average duration of snoring episodes is approximately 1000 ms, and non-snore one is about 3000 ms in duration.

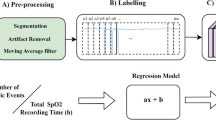

Preprocessing

In this paper, there are several preprocessing tasks performed for preparation. The methods involved and workflow of data processing is shown in Fig. 1.

Audio signal cropping

The audio signals in our database had various duration. It is a challenge in audio processing using the CNN for which the size of the input sample must be fixed and consistent. One of the most common methods to achieve this requirement of the CNN input layer is to split the audio signal into fixed-length fragments with the help of a sliding window.

For audio signals recorded in our experiments, we used a time window with appropriate width to capture continuous sound segments. In order to reserve information as much as possible, adjacent time windows may have a certain percentage of overlapping. The process of framing audio signals into frames was illustrated in Fig. 2. It is worth noting that the overlapping ratio of time windows varied across different groups of audio signals to prevent potential issues of data imbalance45 in our dataset. Meanwhile, the shorter frame of audio signal could keep the CNN model compact. In this study, based on the minimum duration of a snoring segment, length of the time window is set to be 0.3 s. And then, these sound clips were normalized by z-score standardization for improving precision and convergence speed of our deep learning model.

Visibility graph method

The visibility graph (VG) method, proposed by Lacasa et al.46, converts a time series into a graph based on a geometric principle of visibility. Researches have shown that the mapped visibility graph inherits several properties of the series in its structure: the periodic series is converted into regular graph, random series into random graphs and fractional series into scale-free graph46,47. So, it has been widely used to analyze time series in different fields including psychology, physics, medicine and economics48,49,50,51. In this paper, we regard the associated graph of time series as an image, which enables CNN-based analysis.

The criterion of image mapping by VG is established as follow: any arbitrary two data value \((t_{a} ,x_{a} )\) and \((t_{b} ,x_{b} )\) in the time series \(\{ t_{i} ,x_{i} \} (i{ = 1,2,} \cdots )\) will have visibility, and pixel value is 1 in corresponding position \((t_{a} ,t_{b} )\) of the mapped image, if any other data \((t_{c} ,x_{c} )\) with \(t_{a} < t_{c} < t_{b}\) satisfies

On the basis of this principle, the mapped image is a binary matrix where the value of corresponding element is 1 if two nodes is visible, otherwise it is 0. That is, the fluctuation of time series in time domain could be transformed as various geometric graph in time-time space. It provides a new perspective to explore complex dynamics of series more intuitively. In our study, each of sound clips were mapped into images with resolution of 4800 × 4800. Before transferred into the CNN model, these images were resized to 256 × 256 for simplified calculation.

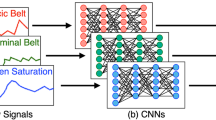

Convolutional neural networks

For the sound segment and its corresponding visibility graph, a deep neural network combined 1D CNN and 2D CNN was proposed to represent their characteristics, respectively. And then two fully connected layers were used to recognize these united features as snore or non-snore sound. The framework is shown in Fig. 3.

1D CNN topology

For the raw audio signals, a compact 1D CNN architecture with a reduced number of parameters is built in this paper as shown in Fig. 4. It is based on a 1D CNN architecture for environmental sound classification52. There are four trainable convolutional layers, interlaced with batch normalization layers53 and max pooling layers in this architecture. At last, the output of the last batch normalization layer is flattened by an average pooling layer, and they will be concatenated with features extracted by 2D CNN. Based on the assumption that the first layer in reflex arc has a more global view of the audio signal, the kernel size (called receptive field) in first convolutional layer is set to 1 × 64, and then decreases progressively to 1 × 8 for the next three convolutional layers. This architecture has been verified it is powerful enough to extract relevant low-level and high-level information from the small audio data set52.

2D CNN topology

So far, there have been lots of mature 2D CNN models widely used and done well in computer vision (CV) and natural language processing (NLP). Considering the sparse characteristics and multi-scale information carried by those nested quadrates of the mapped images generated by the VG method, we introduced an inception module in our 2D CNN model for snoring sound recognition54, as shown in Fig. 5a. The parallel multiscale convolutional layers could keep the computational budget constant while increasing the depth and width of the CNN models. It is helpful to overcome some problems such as computational expensiveness, over-fitting and vanishing gradient54. In our 2D CNN described by Fig. 5b, there are three convolutional layers, six inception modules and some pooling layers, which takes responsibility of feature representation. At last, two fully connected layers are applied to classify the concatenated features as snore or non-snore sounds.

In our model, the ReLU is chosen as the activation function for all convolutional layers, the ADAM algorithm and a binary cross entropy loss are applied to train optimal model based on error back propagation algorithm. In order to prevent over-fitting during training, we try our best to keep the CNN architecture simple and shallow as far as possible, L1, L2-norm with penalty coefficients of 0.001 were added to the loss function, and the dropout algorithm was applied to train our model. The learning rate had a 20-step gradual warmup from an initial value of 1e-6, and then it had a step-based decrement.

Postprocessing

In our database, there are a minimum of one sound frames and a maximum of about twenty frames extracted from a typical sound signal. And our CNN model is applied to process each sound clip. Therefore, for a sound event \(S\), its label \(C\) is decided by aggregating the CNN predictions of all split frames \(S_{1} ,S_{2} , \ldots ,S_{K}\) described as

where \(c_{i}\) is the prediction for the frame \(S_{i}\) (\(i = 1,2, \ldots ,K\)), which value is taken as 0 or 1 representing non-snore class and snore class respectively. The pseudocode of our 1D-2D CNN algorithm is given in Fig. 6.

System evaluation

The detection performance of our model is evaluated by confusion matrix. It described how many results were correctly classified and how many were incorrectly classified for each of categories. Further, accuracy, sensitivity, and specificity are calculated based on Eq. (3). Therein, accuracy evaluates the recognition capability overall of our model, which is a common assessment criterion in classification tasks. Sensitivity, also called as recall ratio, is the ratio of correct positive predictions in all true samples, and specificity is the ratio of correct negative predictions in all false ones. Both of them were supplemented to profile our model in more detail. In clinical medicine, the former is of great importance for preliminary screening of diseases and the later for their diagnosis. The possible values of above their indexes are from 0 to 1, and the higher their values, the better the performance of our classifier

where \(N_{TP,TN,FP,FN}\) represents the number of true positive (TP), true negative (TN), false positive (FP) and false negative (FN).

In addition, classification scores were obtained from a receiver-operating curve (ROC) and the area under this curve (AUC). The ROC is determined from the false positive rate and the true positive rates after applying different thresholds. The AUC score of 1.0 depicts perfect predictions, while a random classifier achieves an AUC score of 0.5. A higher AUC score means a better predictor. The characteristic of ROC and AUC is that their shape and score remain constant as the distribution of positive and negative samples while the samples change. So, it was a more objective index to assess our model performance.

Experimental results

We use the fivefold cross-validation strategy to train and test the proposed model. The 88 subjects were randomly divided into five groups including three eighteen-subject groups and two seventeen-subject groups. In each trial, four groups were used for training, while the remaining group was reserved for testing purposes. By ensuring independence at the subject level, we mitigate the risk of overvaluation caused by data from the same subject is both in training and testing sets.

Each snoring/non-snoring episode was divided into multiple fragments by methods in “Data recording” section. And its predictive label was decided by model outputs of all fragments based on Eq. (2). The performance of our model was evaluated by confusion matrices, indexes described in Eq. (3), and AUC. All preprocessing and analysis were performed using MATLAB R2019b (The MathWorks, Inc., Natick, MA, USA). The deep neural network, as shown in Figs. 3, 4 and 5, was constructed in Python 3.8 using Pytorch library 1.10. The model was trained on a desktop computer with Intel Core i9 (8 Cores) 3.5 GHz microprocessor, 64 GB BAM and NVIDIA GeForce RTX 3090 graphics processing unit (GPU).

In our experiment, a batch size of 150 samples was used for training our CNN model and it was trained up to 150 epochs. The learning rate followed a 20-step gradual warm-up starting from an initial value of 1e−6 at a rate of 1.6. Afterward, it was reduced to 10% of its original magnitude every 20 epochs. Figure 7 shows the variation of loss and accuracy of our model for snoring fragments recognition in a trail. It is obvious that our CNN model is converged gradually with the increasing of iterations and reaches stability after 70 iterations. In order to avoid over-fitting, the training was halted at 90 steps during iteration. After postprocessing, the results of the fivefold cross-validation for all 88 subjects are presented by confusion matrices in Table 3 and the calculated performance indices in Table 4. In our experiment, we achieved accuracy ranging from 86.4 to 91.2%, sensitivity between 88.1 and 91.6%, specificity between 83.0 and 91.8%, and AUC between 0.908 and 0.973.

Here, two snoring detection models including two proposed state-of-the-art models8,44 were chosen for comparison. And they were retained and their hyper-parameters were retuned based on our sound samples at subject level. At last, the results of these models on our dataset are summarized by Table 5, where the mean and standard deviation of indexes of all five runs are shown. All of these deep neural network algorithms extracted features automatically using CNN layers from raw audio signals or their transformations. Compared with datasets in these previous studies, our data is more diverse and lower signal-to-noise ratio at the case of little number of the event sounds. Affected by that, these three models trained by our data had poorer performances than that trained by their own dataset. By contrast, our method achieved better performances in terms of accuracy, sensitivity, specificity and AUC. And results show that the standard deviations of index values in five trials are smaller than other methods, which could be suggested that our algorithm is more robust. In addition, we trained a single 1D CNN with a same architecture shown in Fig. 4, and obtained a poor performance than our hybrid 1D-2D CNN model for snoring recognition. It could be inferred that images transformed by VG method provide some additional information for snore recognition.

Conclusion and discussion

In this paper, we build an audio database recorded by non-contact microphone in subject’s private bedroom and propose a new snoring detection algorithm based on the audio signal and a series of convolutional neural networks. Instead of manually engineered features, the proposed CNN learned information from the raw audio waveform and their VG maps, and was trained by audio data from 88 subjects. In our experiment, our algorithm achieves an average accuracy of 89.3%, which is higher than the accuracy obtained by two state-of-the-art methods in our dataset.

In addition, the VG maps transformed from the audio signal is first applied to snoring classification. The VG method can map the “visibility” relationship between features at different time points in time domain into real value at a particular location in two-dimensional space, and then the change of this relationship is visualized as specific geometrical shape. Experimental results shown in Table 5 indicate that the VG maps might provide higher-order and non-linear information as supplementary of raw audio signals. It is suggested that the VG maps could be an alternative method for time series classification.

Despite the superior performance of the proposed model compared to other state-of-the-art models on our dataset, it is not without its limitations. On one hand, the input size of model might be variable for different tasks. As a hyper-parameter, it should be chosen to balance the amount of information and computation. On the other hand, we assess the models' complexity in snoring detection by calculating the number of parameters and floating-point operations per second (FLOPS). The corresponding results are presented in Table 6. It is evident that the proposed model in this study exhibits a higher parameter count and FLOPS, indicating a greater demand for computational resources. And it is worth noting that this increased complexity does not necessarily confer an advantage in algorithm localization.

In addition, there are also shortcomings in our experimental settings. First, studies have shown that the sound characteristics of rare expiration snore sounds are different from the major dominant inspiration28,55. But we did not consider the phase of the respiratory cycle in which snore event occurs in our experiment. It makes it difficult for our method to recognize infrequency expiration snore sound. Secondly, the diversity of snore sounds due to different places of pharyngeal constriction56 is not taken into account in our study. If some kind of snore sounds is not involved in training, it could be missed by our algorithm.

Therefore, in future work, we will improve further the robustness and generalization of the current snore detection algorithm by adding more expiration snore sounds and considering more snore events due to distinct places of pharyngeal constriction. Furthermore, the original VG method could be replaced by other methods in VG family, which includes HVG, limited penetrable visibility graph (LPVG) and so on, for improvement of noise resistance and computation efficiency57. In addition, the VG map used in this study only contains the 0 and 1 elements representing whether the features in different points are visible. It could be improved by depicting degree of visibility between time points to inherit more information from raw time series, which might be meaningful for simplification of snore recognition.

Data availability

The data that support the findings of this study are available from The Affiliated Hospital of Hangzhou Normal University, but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of The Affiliated Hospital of Hangzhou Normal University.

References

Hoffstein, V. Snoring. Chest 109, 201–223 (1996).

Senaratna, C. V. et al. Prevalence of obstructive sleep apnea in the general population: A systematic review. Sleep Med. Rev. 34, 70–81 (2017).

Imran, S. & Seema, K. Snoring. Available online: http://sleepeducation.org/essentials-in-sleep/snoring/ (2022).

Norton, P. G. & Dunn, E. V. Snoring as a risk factor for disease: An epidemiological survey. Br. Med. J. (Clin. Res. Ed.) 291, 630–632 (1985).

de Silva, S., Abeyratne, U. R. & Hukins, C. A method to screen obstructive sleep apnea using multi-variable non-intrusive measurements. Physiol. Meas. 32, 445–465 (2011).

Elwali, A. & Moussavi, Z. Obstructive sleep apnea screening and airway structure characterization during wakefulness using tracheal breathing sounds. Ann. Biomed. Eng. 45, 839–850 (2017).

Melone, L. 7 Easy fixes for snoring. Available online: https://www.webmd.com/sleep-disorders/features/easy-snoring-remedies#1 (2022).

Khan, T. A deep learning model for snoring detection and vibration notification using a smart wearable gadget. Electronics 8, 987 (2019).

Theravent. Available online: https://www.theraventsnoring.com

Smartnora. Available online: https://www.smartnora.com/

Hoffstein, V., Mateika, S. & Anderson, D. Snoring: Is it in the ear of the beholder?. Sleep 17, 552–556 (1994).

van Brunt, D. L., Lichstein, K. L., Noe, S. L., Aguillard, R. N. & Lester, K. W. Intensity pattern of snoring sounds as a predictor for sleep-disordered breathing. Sleep 20, 1151–1156 (1997).

Ng, A. K. et al. Could formant frequencies of snore signals be an alternative means for the diagnosis of obstructive sleep apnea?. Sleep Med. 9, 894–898 (2008).

Sola-Soler, J., Jane, R., Fiz, J. A. & Morera, J. Pitch analysis in snoring signals from simple snorers and patients with obstructive sleep apnea. In Proceedings of the Second Joint 24th Annual Conference and the Annual Fall Meeting of the Biomedical Engineering Society, Engineering in Medicine and Biology, Vol. 2, 1527–1528 (2002).

Abeyratne, U. R., Wakwella, A. S. & Hukins, C. Pitch jump probability measures for the analysis of snoring sounds in apnea. Physiol. Meas. 26, 779–798 (2005).

Solà-Soler, J., Jané, R., Fiz, J. A. & Morera, J. Automatic classification of subjects with and without sleep apnea through snoring analysis. Annu. Int. Conf. IEEE EMBS 2007, 6094–6097 (2007).

Cavusoglu, M. et al. Investigation of sequential properties of snoring episodes for obstructive sleep apnoea identification. Physiol. Meas. 29, 879–898 (2008).

Ben-Israel, N., Tarasiuk, A. & Zigel, Y. Obstructive apnea hypopnea index estimation by analysis of nocturnal snoring signals in adults. Sleep 35, 1299–1305 (2012).

Hayashi, S. et al. A new feature with the potential to detect the severity of obstructive sleep apnea via snoring sound analysis. Int. J. Environ. Res. Public Health 17, 2951 (2020).

Romero, H. E., Ma, N., Brown, G. J., Beeston, A. V. & Hasan, M. Deep learning features for robust detection of acoustic events in sleep-disordered breathing. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019, 810–814 (2019).

Sun, J., Hu, X., Peng, S. & Ma, Y. A review on snore detection. World J. Sleep Med. 7, 552–554 (2020).

Duckitt, W. D., Tuomi, S. K. & Niesler, T. R. Automatic detection, segmentation and assessment of snoring from ambient acoustic data. Physiol. Meas. 27, 1047–1056 (2006).

Yadollahi, A. & Moussavi, Z. Formant analysis of breath and snore sounds. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2009, 2563–2566 (2009).

Azarbarzin, A. & Moussavi, Z. Unsupervised classification of respiratory sound signal into snore/no-snore classes. Annu. Int. Conf. IEEE EMBS 2010, 3666–3669 (2010).

Azarbarzin, A. & Moussavi, Z. Automatic and unsupervised snore sound extraction from respiratory sound signals. IEEE Trans. Biomed. Eng. 58, 1156–1162 (2010).

Dafna, E., Tarasiuk, A. & Zigel, Y. Automatic detection of whole night snoring events using non-contact microphone. PLoS ONE 8, e84139 (2013).

Shin, H. & Cho, J. Unconstrained snoring detection using a smartphone during ordinary sleep. Biomed. Eng. Online 13, 116 (2014).

Qian, K., Xu, Z., Xu, H., Wu, Y. & Zhao, Z. Automatic detection, segmentation and classification of snore related signals from overnight audio recording. IET Signal Process. 9, 21–29 (2015).

Dafna, E., Tarasiuk, A. & Zigel, Y. Automatic detection of snoring events using Gaussian mixture model. In International Workshop on Model and Analysis of Vocal Emissions for Biomedical Applications, 17–20 (2011).

Cavusoglu, C. et al. An efficient method for snore/nonsnore classification of sleep sounds. Physiol. Meas. 28, 841–853 (2007).

Wang, C., Peng, J., Song, L. & Zhang, X. Automatic snoring sounds detection from sleep sounds via multi-features analysis. Australas. Phys. Eng Sci Med. 40, 127–153 (2017).

Shokrollahi, M., Saha, S., Hadi, P., Rudzicz, F. & Yadollahi, A. Snoring sound classification from respiratory signal. Annu. Int. Conf. IEEE EMBS 2016, 3215–3218 (2016).

Swarnkar, V. R., Abeyratne, U. R. & Sharan, R. V. Automatic picking of snore events from overnight breath sound recordings. Annu. Int. Conf. IEEE EMBC 2017, 2822–2825 (2017).

Gupta, K., Bajaj, V. & Ansari, I. A. OSACN-Net: Automated classification of sleep apnea using deep learning model and smoothed gabor spectrograms of ECG signal. IEEE Trans. Instrum. Meas. 71, 1–9 (2022).

Gupta, K., Bajaj, V., Ansari, I. A. & Acharya, U. R. Hyp-Net: Automated detection of hypertension using deep convolutional neural network and Gabor transform techniques with ballistocardiogram signals. Biocybern. Biomed. Eng. 42, 784–796 (2022).

Gupta, K., Bajaj, V. & Ansari, I. A. An improved deep learning model for automated detection of BBB using S-T spectrograms of smoothed VCG signal. IEEE Sens. J. 22(9), 8830–8837 (2022).

Zhang, Y. Q. et al. An investigation of deep learning models for EEG-based emotion recognition. Frontiers 14, 622759 (2020).

Buongiorno, D. et al. Deep learning for processing electromyographic signals—A taxonomy-based survey. Neurocomputing 452, 549–565 (2021).

Nguyen, T. L. & Won, Y. Sleep snoring detection using multi-layer neural networks. Bio-Med. Mater. Eng. 26, S1749-1755 (2015).

Çavuşoğlu, M., Poets, C. F. & Urschitz, M. S. Acoustics of snoring and automatic snore sound detection in children. Physiol. Meas. 38, 1919–1938 (2017).

Arsenali, B. et al. Recurrent neural network for classification of snoring and non-snoring sound events. Annu. Int. Conf. IEEE EMBC 2018, 328–331 (2018).

Sun, J. et al. SnoreNet: Detecting snore events from raw sound recordings. Annu. Int. Conf. IEEE EMBC 2019, 4977–4981 (2019).

Jiang, Y., Peng, J. & Zhang, X. Automatic snoring sounds detection from sleep sounds based on deep learning. Phys. Eng. Sci. Med. 43, 679–689 (2020).

Xie, J. et al. Audio-based snore detection using deep neural networks. Comput. Methods Programs Biomed. 200, 105917 (2021).

Azarbarzin, A. & Moussavi, Z. Automatic and unsupervised snore sound extraction from respiratory sound signals. IEEE Trans. Biomed. Eng. 58, 1156–1162 (2011).

Lacasa, L., Luque, B., Ballesteros, F., Luque, J. & Nuño, J. C. From time series to complex networks: The visibility graph. Proc. Natl. Acad. Sci. 105, 4972–4975 (2008).

Li, R. et al. Fractal analysis of the short time series in is of the short time series in a visibility graph method. Physica A 450, 531–540 (2016).

Telesca, L., Pastén, D. & Muñoz, V. Analysis of time dynamical features in intraplate versus interplate seismicity: The case study of Iquique Area (Chile). Pure Appl. Geophys. 177, 4755–4773 (2020).

Acosta-Tripailao, B., Pastén, D. & Moya, P. S. Applying the horizontal visibility graph method to study irreversibility of electromagnetic turbulence in non-thermal plasmas. Entropy 23, 470 (2021).

Wang, N., Li, D. & Wang, Q. Visibility graph analysis on quarterly macroeconomic series of China based on complex network theory. Physica A 391, 6543–6555 (2012).

Zheng, M., Domanskyi, S., Piermarocchi, C. & Mias, G. I. Visibility graph based temporal community detection with applications in biological time series. Sci. Rep. 11, 5623 (2021).

Abdoli, S., Cardinal, P. & Koerich, A. L. End-to-end environmental sound classification using a 1D convolutional neural network. Expert Syst. Appl. 136, 252–263 (2019).

Loffe, S. & Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International Conference on Machine Learning, 448–456 (2015).

Szegedy, C. et al. Going deeper with convolutions. In 2015 IEEE Conference on Computer Vision and Pattern Recognition, 1–9.

Whitelaw, W. Characteristics of the snoring noise in patients with and without occlusive sleep apnea. Am. Rev. Respir. Dis. 147, 635–664 (1993).

Janott, C. et al. Snoring classified: The Munich–Passau snore sound corpus. Comput. Biol. Med. 94, 106–118 (2018).

Wen, T., Chen, H. & Cheong, K. H. Visibility graph for time series prediction and image classification: A review. Nonlinear Dyn. 110, 2979–2999 (2022).

Acknowledgements

We would like to thank the doctors from the Affiliated Hospital of Hangzhou Normal University for their collaboration and assistance in data recording. Meanwhile, we also express our gratitude to patients who participated in this study for their understanding and support. This work was supported by Zhejiang Key Research and Development Project (2022C01048) and Zhejiang Public Welfare Technology Application Research Project (LGG22F010012).

Author information

Authors and Affiliations

Contributions

R.L. wrote the main manuscript text. R.Z. participated in sound segmentation and labelling of training samples. W.L. and K.Y. funded this work and gave some suggestions about experiment design. Y.L. contributed to revise of paper. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, R., Li, W., Yue, K. et al. Automatic snoring detection using a hybrid 1D–2D convolutional neural network. Sci Rep 13, 14009 (2023). https://doi.org/10.1038/s41598-023-41170-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-41170-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.