Abstract

We test the quantumness of IBM’s quantum computer IBM Quantum System One in Ehningen, Germany. We generate generalised n-qubit GHZ states and test Bell inequalities to investigate their n-party entanglement. The implemented Bell inequalities are derived from non-adaptive measurement-based quantum computation (NMQC), a type of quantum computing that links the successful computation of a non-linear function to the violation of a multipartite Bell inequality. The goal is to compute a multivariate Boolean function that clearly differentiates non-local correlations from local hidden variables (LHVs). Since LHVs have been shown to compute only linear functions, whereas quantum correlations can output every possible Boolean function, the successful computation of a non-linear function serves as an indicator of multipartite entanglement. Here, we compute various non-linear functions with NMQC on IBM Quantum System One and thereby demonstrate that the presented method can be used to characterise quantum devices. We observe violations for up to seven qubits and compare our results to an existing photonic implementation of NMQC.


Introduction

Commercially available quantum computers (QCs) have arrived in the NISQ (noisy intermediate-scale quantum) era1. Equipped with tens to hundreds of noisy qubits, these devices already allow the implementation of quantum operations and thus of basic quantum algorithms2. Despite the lack of error correction, algorithms and techniques adapted to the strengths and shortcomings of these computers could enable non-classical computation in the near future. To compare the performance of the wide range of quantum devices and to find the QC best suited to a specific problem, benchmarking, i.e. reproducibly measuring the performance of quantum devices, is becoming especially important3.

To be independent of the architecture and to capture the complexity of quantum machines, benchmarking protocols go beyond comparing hardware characteristics4,5. The goal is to find protocols that provide maximal information about the performance of a quantum device2. Examples of such hardware benchmarks are randomised benchmarking6, cross-entropy benchmarks7 and the quantum volume4,8. In addition, application benchmarks test the performance of NISQ devices on specific applications or algorithms and help to understand how well QCs handle different tasks2,5.

One fundamental type of application that can be used to benchmark quantum devices is the generation and verification of entanglement9,10,11,12,13,14,15,16,17,18. To this end, various tests of multipartite entanglement have been implemented, e.g. using Mermin inequalities9,10,11,12 or multiparty Bell inequalities13,14, but also by measuring the entanglement between all connected qubits in a large graph state15,16. For Greenberger-Horne-Zeilinger (GHZ) states, a practical method to estimate the fidelity has been derived and used to verify the generation of GHZ states on large numbers of qubits17,18.

In this work, we make use of a method called non-adaptive measurement-based quantum computation (NMQC) to characterise an IBM QC with 27 superconducting qubits.

The goal in NMQC is to compute a multivariate function. While local hidden variables (LHVs) can only output linear functions, quantum correlations can compute all Boolean functions. The success of such a computation can be related to the violation of a (generalised) Bell inequality and thus demonstrates an advantage over classical resources19. So far, binary NMQC has been implemented with four-photon GHZ states20. Here, we use GHZ states on an IBM QC to implement NMQC with more than four qubits. This allows us to test the quantum correlations of the generated GHZ states and therefore the non-classicality of the IBM QC.

In particular, we implement NMQC for one two-bit function, three three-bit functions, and one four-bit, one five-bit and one six-bit function on the superconducting quantum computing system IBM Quantum System One (QSO) and demonstrate that it exhibits multipartite entanglement. For up to five qubits, we use quantum readout error mitigation (QREM)21 to reduce noise from local measurement errors. For larger qubit numbers, we use the error mitigation tools provided by Qiskit22. We demonstrate violations of the associated Bell inequalities for up to seven qubits, which indicates the non-classical properties of the quantum computing system.

Background

NMQC

First, let us briefly describe the general idea of NMQC (for a detailed overview of the procedure, see Fig. 1 and, e.g., Refs.19,23): starting from a classical n-bit input string \(x = (x_{0},x_{1},\ldots ,x_{n-1}) \in \{0,1\}^n\), which is sampled from a probability distribution \(\xi (x)\), the goal is to compute any multivariate Boolean function \(f: \{0,1\}^n \rightarrow \{0,1\}\). For this, one has access to a restricted classical computer limited to addition mod 2, which can be used for classical pre- and post-processing (see Fig. 1). The core of NMQC consists of non-adaptive measurements on an l-qubit resource state, with \(l\ge n\). It has been shown that if the measurement statistics are described by local hidden variables (LHVs)24, i.e. if one uses a classical resource state, the output of NMQC is restricted to linear functions. As the pre-processor can already output linear functions on its own, LHVs do not “boost” its computational power19.
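The restriction of LHVs to linear functions follows from a short argument, sketched below under the assumption of deterministic local strategies, in which each outcome \(m_i\) depends only on the local setting bit \(s_i\); general LHV models are probabilistic mixtures of such strategies. Here, linear is meant in the affine sense, and the symbols \(a_i\), \(b_i\) and c are our own notation.

```latex
% Every map {0,1} -> {0,1} is affine: m_i(s_i) = a_i \oplus b_i s_i with a_i, b_i \in \{0,1\}.
% Inserting the pre-processing s_i = \bigoplus_j P_{ij} x_j into the parity z:
\begin{aligned}
z &= \bigoplus_{i=0}^{l-1} m_i(s_i)
   = \Big(\bigoplus_{i} a_i\Big) \oplus \bigoplus_{i} b_i \Big(\bigoplus_{j} P_{ij} x_j\Big) \\
  &= c \oplus \bigoplus_{j=0}^{n-1} \Big(\bigoplus_{i} b_i P_{ij}\Big) x_j ,
  \qquad c \equiv \bigoplus_{i} a_i .
\end{aligned}
```

Each deterministic strategy therefore outputs an affine function of x, which the pre-processor could have computed on its own, and a mixture of strategies cannot succeed more often on average than the best single affine function.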

The figure shows the general scheme of NMQC. At the beginning, an input string \(x \in \{0,1\}^n\) is sent to the parity computer, which in turn computes the bit string \(s \in \{0,1\}^l\). This restricted computation can be seen as a matrix-vector multiplication \(s=(Px)_{\oplus }\), where P is an l-by-n binary matrix and \(\oplus\) denotes that the product is evaluated with mod 2 arithmetic. Each bit \(s_{i} \in \{0,1\}\) of s determines the setting for the measurement on the ith qubit of the l-qubit resource state: for each subsystem, one can choose between two measurement operators \(\hat{m}_{i}(s_{i})\) (here \(\hat{m}_{i}(0)\equiv X\) and \(\hat{m}_{i}(1)\equiv Y\), with X and Y denoting the Pauli operators). Each measurement yields one of two possible results \(M_i \in \{-1,1\}\), which can be mapped to bits \(m_i \in \{0,1\}\) via \(M_i = (-1)^{m_i}\). The measurements are performed on a correlated l-qubit resource, and the measurement results \(m_{i} \in \{0,1\}\) are summed modulo 2 by the parity computer: \(z \equiv \bigoplus _i m_i\). Finally, if \(z=f(x)\) for this input x, the computation was successful. If \(z = f(x)\) for every x, the NMQC scheme is called deterministic. The figure has been adapted from Ref.23.

In contrast, non-local quantum correlations can elevate the pre-processor to classical universality. The generalised l-qubit GHZ state

enables the computation of all functions \(f: \{0,1\}^n \rightarrow \{0,1\}\) with at most \(l=2^n-1\) qubits. The computation of a non-linear function requires non-locality25 and can be seen as a type of GHZ paradox19. This means that the successful execution of NMQC demonstrates non-locality. Note that in our case the non-locality is realised by single-qubit measurements on a multipartite entangled state and we thus use the two terms non-locality and multipartite entanglement interchangeably.
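The GHZ paradox behind this statement can be checked numerically. The following minimal NumPy sketch simulates the scheme of Fig. 1 on an ideal three-qubit GHZ state \((|000\rangle + |111\rangle)/\sqrt{2}\): it forms \(s=(Px)_{\oplus}\), rotates each qubit so that X or Y is measured, samples the outcomes \(m_i\) and outputs their parity z. The pre-processing matrix P and the target function (the OR of two bits, with \(l = 2^n - 1 = 3\)) are a textbook illustration assumed here, not the pre-processing used in this work; the helper names are hypothetical.

```python
import numpy as np
from functools import reduce

# Single-qubit basis changes: measure X via H, measure Y via S^dagger then H.
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S_DG = np.diag([1, -1j])
BASIS_CHANGE = {0: H, 1: H @ S_DG}  # setting s_i = 0 -> X, s_i = 1 -> Y


def ghz_state(l):
    """Ideal l-qubit GHZ state (|0...0> + |1...1>)/sqrt(2) as a state vector."""
    psi = np.zeros(2 ** l, dtype=complex)
    psi[0] = psi[-1] = 1 / np.sqrt(2)
    return psi


def nmqc_shot(psi, P, x, rng):
    """One NMQC shot: linear pre-processing, X/Y measurements, parity post-processing."""
    l = P.shape[0]
    s = (P @ np.asarray(x)) % 2                            # s = (Px) mod 2
    U = reduce(np.kron, [BASIS_CHANGE[int(si)] for si in s])
    probs = np.abs(U @ psi) ** 2                           # computational-basis statistics
    outcome = rng.choice(2 ** l, p=probs / probs.sum())
    m = [(outcome >> (l - 1 - i)) & 1 for i in range(l)]   # bit m_i of qubit i
    return sum(m) % 2                                      # z = m_0 xor ... xor m_{l-1}


# Hypothetical pre-processing: s = (x0, x1, x0 xor x1) on three qubits.
P = np.array([[1, 0], [0, 1], [1, 1]])
rng = np.random.default_rng(seed=1)
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    zs = {int(nmqc_shot(ghz_state(3), P, x, rng)) for _ in range(200)}
    print(x, zs)  # a single z value per input, equal to x0 OR x1
```

Every deterministic LHV strategy outputs an affine function of x, and no affine function agrees with \(x_0 \vee x_1\) on more than three of the four inputs, so the error-free outcome above has no LHV counterpart.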

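In general, it can be shown that the average success probability \(\bar{p}_{S}= p(z=f(x))\), i.e. the probability that the output z is identical to the value of the target function f(x), averaged over inputs sampled from \(\xi(x)\), is related to a normalised Bell inequality \(\beta\) with a classical (LHV) bound \(\beta _c\) and a quantum bound \(\beta _q\)19:

\[ \beta \equiv \sum_{x} \xi(x)\,(-1)^{f(x)}\,E_x = 2\bar{p}_S - 1, \qquad \beta \le \beta_c \ \text{(LHV)}, \quad \beta \le \beta_q \ \text{(quantum)}. \tag{2} \]

The expectation values are defined as

\[ E_x \equiv \langle (-1)^{z} \rangle_x = p(z=0|x) - p(z=1|x), \tag{3} \]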

where \(p(z=k|x)\) is the probability that z is equal to k for the input x.
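In practice, \(E_x\) and \(\beta\) are estimated from measured bit strings. The following sketch assumes that, for each input x, the counts are available as a dictionary mapping outcome bit strings to frequencies (the format of Qiskit's `get_counts`, where separate registers appear separated by spaces); the function names and the example numbers are illustrative only. Because z is a parity, the bit ordering of the strings does not matter.

```python
def parity_expectation(counts):
    """E_x = p(z=0|x) - p(z=1|x), where z is the XOR of all measured bits."""
    shots = sum(counts.values())
    signed = 0
    for bits, n in counts.items():
        z = bits.replace(" ", "").count("1") % 2  # parity of the outcome string
        signed += n if z == 0 else -n
    return signed / shots


def bell_value(counts_by_input, f, xi):
    """Normalised Bell value beta = sum_x xi(x) (-1)^f(x) E_x."""
    return sum(xi(x) * (-1) ** f(x) * parity_expectation(counts)
               for x, counts in counts_by_input.items())


# Illustrative (made-up) counts for f = OR of two bits with uniform xi(x) = 1/4.
f_or = lambda x: x[0] | x[1]
counts_by_input = {
    (0, 0): {"000": 850, "011": 50, "001": 100},  # 900 even-parity, 100 odd-parity shots
    (0, 1): {"111": 850, "100": 50, "110": 100},  # 900 odd-parity, 100 even-parity shots
    (1, 0): {"111": 850, "100": 50, "110": 100},
    (1, 1): {"111": 850, "100": 50, "110": 100},
}
beta = bell_value(counts_by_input, f_or, lambda x: 1 / 4)
p_success = (1 + beta) / 2
print(beta, p_success)
```

The last step uses \(\bar{p}_S = (1+\beta)/2\), which follows from averaging \(p(z=f(x)|x) = [1 + (-1)^{f(x)} E_x]/2\) over \(\xi(x)\).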

It has been shown that the GHZ state always maximally violates the given Bell inequalities and minimises the number of required qubits for a violation26,27. It is thus optimal for NMQC19 and we will use it as a resource in the following investigations on IBM QSO.

Tested functions and Bell inequalities

The NMQC computations for the four-qubit GHZ state presented in this work result from the two-variate function

\[ \text{NAND}_2(x) = 1 \oplus x_0 x_1 \tag{4} \]

and the three three-variate functions:

where \(\vee\) is the logical OR operator and \(\oplus\) denotes addition mod 2. Note that the same functions have been used to implement NMQC using a four-photon GHZ state in Ref.20.

In the case of the two-bit function \(\text{NAND}_2(x)\), we use a uniform probability distribution \(\xi (x)= \frac{1}{4}\), which yields the Bell inequality [see Eq. (2)]:

The relation between the measurement settings and the measurements, i.e., \(\hat{m}_{i}(s_{i}) = X/Y\) for \(s_{i}=0/1\) (\(i\in \{0,1,2,3\}\)) allows us to rewrite the Bell inequality (8) in terms of the four measurements:

where we additionally made use of the following relation between measurement settings and the input bits \(x_i\):

In the same manner one finds the Bell inequalities for the three three-variate functions given in Eqs. (5–7)20. The inequalities and the respective pre-processing implemented in this paper are shown in Table 1.

To perform NMQC for five- to seven-qubit GHZ states, we use the generalisation of \(h_3(x)\), namely the k-bit pairwise AND function \(h_k(x)\), for \(k=4,\,5\) and 6:

\[ h_k(x) = \bigoplus_{0 \le i < j \le k-1} x_i x_j \tag{11} \]

For any k the sampling distribution is uniform, i.e. \(\xi (x)=1/2^k\), and the pre-processing is given by:

The Bell inequality induced by \(h_k(x)\) and defined by the pre-processing (12) and the uniform sampling distribution has the quantum bound \(\beta_q=1\). This can be seen by explicitly computing all expectation values. The classical bounds can either be found numerically or inferred from the connection between NMQC and classical Reed–Muller error-correcting codes, as pointed out in Ref.28. They are equal to \(\beta_c=2^{\frac{-k}{2}}\) for even k and \(2^{-\left( \frac{k-1}{2}\right) }\) for odd k. We elaborate on this in the Supplementary Information and further show that in order to compute the k-bit function \(h_k(x)\) with NMQC, one only requires \(k+1\) qubits.
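The classical bounds can also be checked by brute force. Under uniform sampling, and using that every LHV strategy outputs an affine function of x, \(\beta_c\) equals the largest correlation \(|2^{-k}\sum_x (-1)^{f(x)\oplus a\cdot x}|\) over all masks a (a constant offset only flips the sign). The sketch below assumes the pairwise AND form \(h_k(x)=\bigoplus_{i<j}x_ix_j\) and reproduces the bounds quoted above, which coincide with \(2^{-\lfloor k/2 \rfloor}\); the function names are hypothetical.

```python
from itertools import combinations, product


def pairwise_and(x):
    """h_k(x): XOR over all pairs i < j of x_i AND x_j."""
    return sum(a & b for a, b in combinations(x, 2)) % 2


def classical_bound(f, k):
    """Max over affine g(x) = a.x xor c of sum_x 2^-k (-1)^(f(x) xor g(x))."""
    inputs = list(product((0, 1), repeat=k))
    best = 0.0
    for a in product((0, 1), repeat=k):
        corr = sum((-1) ** ((f(x) + sum(ai * xi for ai, xi in zip(a, x))) % 2)
                   for x in inputs) / 2 ** k
        best = max(best, abs(corr))  # c = 1 flips the sign of the correlation
    return best


for k in range(2, 7):
    print(k, classical_bound(pairwise_and, k), 2.0 ** -(k // 2))
```

The same routine gives \(\beta_c = 1/2\) for \(\text{NAND}_2\) under uniform sampling, since \(1 \oplus x_0x_1\) agrees with the constant 1 on three of the four inputs.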

NMQC on IBM Quantum System One

IBM QSO in Ehningen, Germany, is a 27-qubit QC, which we used to run NMQC for up to seven qubits. Testing a possible violation of Bell inequalities for different qubit configurations of the QC allows for a characterisation of the whole QC or a subset of qubits.

The QC’s architecture is shown in Fig. 2, where each qubit (vertex) is marked by its physical qubit number and edges denote physical connections between qubits. Here, physical connection means that two qubits are directly coupled, which allows for a direct implementation of two-qubit gates between those qubits. In the following, when mentioning the physical qubit numbers, we refer to the numbering depicted in Fig. 2. At the time of the experiment, the QC contained a Falcon r5.11 processor and its backend version was 3.1.9.

Architecture of IBM Quantum System One for different examples of l-qubit configurations. The nodes indicate qubits, marked by the physical qubit numbers and the edges denote which ones are physically connected. The qubit configurations are marked in red and the respective qubits are labelled by \(Q_k\), where k is the physical qubit number. (a) 4-qubit configuration 0–1–2–4. (b) 6-qubit configuration 8–9–11–14–16–19.

We perform two experiments on IBM QSO: (i) the physical qubits are chosen by Qiskit and the quantum circuit is optimised by Qiskit, and (ii) the physical qubits are chosen manually and the quantum circuit is optimised by our own method (see “Methods” section for details). In both experiments the goal is to generate generalised GHZ states [see Eq. (1)] as a resource to perform NMQC.

While in (i) we test only a single configuration, namely the one chosen by Qiskit, in (ii) we generate and test every possible l-qubit configuration, where l is the number of qubits. By “qubit configuration”, we mean a set of l physical qubits that are connected in the QC, i.e. that form a connected subgraph of the architecture in Fig. 2. For each Bell inequality tested in (ii), we then average the measured bounds over all configurations to obtain the measured bound of the whole QC.
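Enumerating every possible l-qubit configuration amounts to listing all connected l-vertex subsets of the coupling graph. A minimal sketch, assuming the coupling map is given as a list of undirected edges (the function name and the toy graph are hypothetical): it grows each connected set by one adjacent qubit at a time, which reaches every connected subset because any connected graph can be built up vertex by vertex while staying connected.

```python
from collections import defaultdict


def connected_configurations(edges, l):
    """All sets of l physically connected qubits, as sorted lists."""
    adj = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    configs = {frozenset([q]) for q in adj}
    for _ in range(l - 1):
        # extend every connected set by one qubit adjacent to it
        configs = {c | {n} for c in configs for q in c for n in adj[q] if n not in c}
    return sorted(sorted(c) for c in configs)


# Toy example: a star around qubit 1 (edges assumed for illustration).
star = [(0, 1), (1, 2), (1, 4)]
print(connected_configurations(star, 3))  # [[0, 1, 2], [0, 1, 4], [1, 2, 4]]
```

Using sets of frozensets removes duplicates that arise when the same subset is reached in different growth orders.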

Error mitigation

We post-process the measured data for up to five qubits using quantum readout error mitigation (QREM)21. This method has been used, for example, in Ref.18, where it led to considerable improvements in the fidelity of a generated multi-qubit GHZ state. It mitigates readout errors, i.e. errors in the measurement of the state of a single qubit, under the main assumption that these measurement errors are local. We explain the details in the “Methods” section.

For six and seven qubits, QREM proved insufficient: we in fact observed that it lowered the measured bounds, and we therefore switched to the measurement error mitigation (MEM) provided by Qiskit22. In contrast to QREM, Qiskit’s MEM does not assume measurement errors to be local but treats them globally. This means that instead of n \(2\times 2\) calibration matrices \(A_i\), one needs to determine a single \(2^{n}\times 2^{n}\) calibration matrix A by preparing and measuring all \(2^{n}\) basis states (see Ref.22 for details).
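The difference between the two models can be illustrated with a small NumPy sketch. It is not Qiskit's implementation; it only shows the idea of a global calibration matrix whose column j is the measured distribution after preparing basis state j, using a made-up two-qubit error in which \(|11\rangle\) is read out as \(|00\rangle\) with probability 0.1. Such a correlated error cannot be written as \(A_1 \otimes A_0\), but the full \(2^n \times 2^n\) matrix captures it; the function names are hypothetical.

```python
import numpy as np


def full_calibration_matrix(measured_given_prepared):
    """Stack the measured distribution for each prepared basis state j as column j."""
    return np.column_stack(measured_given_prepared)


def mitigate_full(A, p_measured):
    """Solve A p = p' in the least-squares sense (exact if A is invertible)."""
    p, *_ = np.linalg.lstsq(A, p_measured, rcond=None)
    return p


# Toy 2-qubit example (basis order 00, 01, 10, 11) with a correlated 11 -> 00 readout error.
A = full_calibration_matrix([
    [1.0, 0.0, 0.0, 0.0],  # prepared 00
    [0.0, 1.0, 0.0, 0.0],  # prepared 01
    [0.0, 0.0, 1.0, 0.0],  # prepared 10
    [0.1, 0.0, 0.0, 0.9],  # prepared 11
])
p_true = np.array([0.5, 0.0, 0.0, 0.5])
p_measured = A @ p_true  # approximately [0.55, 0, 0, 0.45]
print(mitigate_full(A, p_measured))
```

The price of this generality is \(2^n\) calibration circuits instead of the n \(2\times 2\) matrices of the local model, which limits the approach to small n.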

Measured bounds of the Bell inequalities, averaged over 70 runs with 1000 shots per circuit, induced by the functions \(\text{OR}_3(x)\), \(\text{OR}_3^{\oplus }(x)\), \(\text{NAND}_2(x)\) and \(h_k(x)\) for \(3 \le k \le 6\) and their standard deviations for (a) optimisation level 3 and the “dense” layout method and (b) optimisation level 3 and the “noise adaptive” layout method. The red (diagonally striped \\)/grey (dotted) bars denote the theoretically achievable quantum/classical bounds and the white (diagonally striped //) bars stand for the measured values. The exact values for all measured bounds are listed in Table 2.

Results

Here, we present the average violations of the Bell inequalities associated with the NMQC computations listed above. We start with the first experiment (i), in which the physical qubits are chosen by Qiskit. There, all NMQC circuits were transpiled with the option “optimisation level 3”, i.e. heavy optimisation including noise-adaptive qubit mapping and gate cancellation22. We distinguish two sub-experiments: in one, the circuits were transpiled with the option “layout_method=dense”, which chooses the most connected subset of qubits with the lowest noise; in the other, they were transpiled with the option “layout_method=noise_adaptive”, which tries to map the virtual to physical qubits in a way that reduces the noise22.

In the second experiment (ii) we choose the qubits manually, testing all possible qubit configurations to generate the l-qubit GHZ states and perform NMQC.

Experiment (i): transpilation optimisation level 3

Figure 3 shows the measured bounds of the Bell inequalities for optimisation level 3 and the two layout methods. Each Bell inequality was tested in 70 separate runs, where in each run every circuit induced by the respective function was executed with 1000 shots. For both methods, all measured values except that for \(h_6(x)\), i.e. seven qubits, lie above the classical bounds, even when the standard deviations determined from the 70 runs are taken into account; this translates into a quantum advantage in the associated NMQC games. In turn, the average success probability of the probabilistic NMQC games achieved with the quantum device is higher than the LHV one, indicating multipartite entanglement. In this experiment, the “dense” and “noise adaptive” layout methods provided by Qiskit performed similarly.

Experiment (ii): transpilation optimisation level 0 and error mitigation

Figure 4a shows the measured bounds of the Bell inequalities averaged over all possible qubit configurations for four (\(\text{OR}_3(x)\), \(\text{OR}_3^{\oplus }(x)\), \(\text{NAND}_2(x)\) and \(h_3(x)\)), five (\(h_4(x)\)), six (\(h_5(x)\)) and seven (\(h_6(x)\)) qubits, together with the bounds obtained with error mitigation. The error mitigation techniques applied are QREM (four and five qubits) and Qiskit’s integrated MEM (six and seven qubits). For every qubit configuration there is exactly one NMQC run with 1000 shots per circuit. Note that if the calibration data of the backend changed during an NMQC run, the data was discarded and the run repeated. Note further that the data needed for the error mitigation was generated before every run. In Fig. 4b we show the measured bounds of the qubit configuration which produced the highest violation (exact values and the physical qubit numbers are shown in Table 2).

(a) Measured average bounds of the Bell inequalities induced by the functions \(\text{OR}_3(x)\), \(\text{OR}_3^{\oplus }(x)\), \(\text{NAND}_2(x)\) and \(h_k(x)\) for \(3 \le k \le 6\) and their standard deviations for optimisation level 0 and the mitigated bounds. The red (diagonally striped \\)/grey (dotted) bars denote the theoretically achievable quantum/classical bounds and the white (diagonally striped //) bars stand for the measured values. The orange (plain) bars denote mitigated bounds. (b) Measured and mitigated bounds of the qubit configuration which produced the highest values, induced by the same functions for optimisation level 0. The exact values for all measured bounds as well as the qubit configurations are listed in Table 2.

One can see from the plots and the data (see Fig. 4a, b and Table 2) that not only the configurations which produced the highest values, but also the averaged results lie significantly above the classical bounds of the respective Bell inequalities for every tested function and number of qubits. Especially for more than three qubits, the averaged values are higher than when Qiskit chooses the qubit configuration (see Fig. 3 and Table 2). For the single configurations, one should keep in mind that these results represent only a single run (see Supplementary Information for details).

However, it is important to note that the performance of the QC strongly varies depending on the configuration and the time of execution (see Supplementary Information). This explains why performance differed across the four four-qubit experiments. For example, \(\text{OR}_3(x)\) performed significantly better than \(\text{OR}_3^{\oplus }(x)\).

The error margins shown correspond to the \(99\%\) confidence intervals of the measured values, obtained from 1000 bootstrap samples for each function except \(h_6(x)\), for which we used 100 bootstrap samples. We chose bootstrapping29 instead of sampling at different moments in time because the performance of the quantum processor varied considerably. We omit error margins for the mitigated bounds: the optimisation procedure used in error mitigation introduces a strong bias, so statistical errors are not a meaningful measure in this situation.
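The resampling unit is not specified above; the following minimal sketch assumes that the individual shots recorded for each input x are resampled with replacement and reports the 0.5% and 99.5% percentiles of the resampled \(\beta\) values as a 99% interval. The data are synthetic and the function names are hypothetical.

```python
import numpy as np


def beta_from_parities(z_by_input, f, xi):
    """beta = sum_x xi(x) (-1)^f(x) E_x with E_x = 1 - 2 * mean(z) over the shots for x."""
    return sum(xi(x) * (-1) ** f(x) * (1 - 2 * np.mean(z)) for x, z in z_by_input.items())


def bootstrap_ci(z_by_input, f, xi, n_boot=1000, level=0.99, seed=0):
    """Percentile bootstrap interval for beta, resampling shots independently per input."""
    rng = np.random.default_rng(seed)
    betas = []
    for _ in range(n_boot):
        resampled = {x: rng.choice(z, size=len(z), replace=True) for x, z in z_by_input.items()}
        betas.append(beta_from_parities(resampled, f, xi))
    tail = (1 - level) / 2
    low, high = np.quantile(betas, [tail, 1 - tail])
    return float(low), float(high)


# Synthetic data: 1000 parities per input, 90% of them equal to f(x) = x0 OR x1.
f_or = lambda x: x[0] | x[1]
data = {x: np.array([f_or(x)] * 900 + [1 - f_or(x)] * 100)
        for x in [(0, 0), (0, 1), (1, 0), (1, 1)]}
beta = beta_from_parities(data, f_or, lambda x: 1 / 4)
print(beta, bootstrap_ci(data, f_or, lambda x: 1 / 4))
```

Resampling per input keeps the number of shots for each x fixed, mirroring an experiment in which every input circuit is run with the same number of shots.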

Comparison to photonic NMQC

In Ref.20 binary NMQC has been implemented using four-photon GHZ states, testing the functions \(\text{OR}_3(x)\), \(\text{OR}_3^{\oplus }(x)\), \(\text{NAND}_2(x)\) and \(h_3(x)\). Here, we compare our results using IBM QSO to the photonic results.

In Fig. 5a we show the measured bounds from the photonic experiments and the respective standard deviations. For a better comparison, we calculate the difference between these values and the results of experiment (ii), with and without error mitigation, i.e. \(\Delta \beta _{\text {meas/max}} = \beta _{\text {meas/max}}(\text {photons}) - \beta _{\text {meas/max}}(\text {QSO})\) and \(\Delta \beta _{\text {meas/max}}^{\text {corr}} = \beta _{\text {meas/max}}^{\text {corr}}(\text {photons}) - \beta _{\text {meas/max}}^{\text {corr}}(\text {QSO})\). In Fig. 5b we plot the difference to the measured bounds averaged over all qubit configurations (see Fig. 4a) and in Fig. 5c we plot the difference to the measured bounds of the qubit configuration which produced the highest values (see Fig. 4b).

We find that the photonic values are higher than the uncorrected IBM QSO results, for both the averaged and the highest bounds (except \(\Delta \beta _{\text {max}}\) for \(\text{OR}_3(x)\)). With error mitigation, the averaged values come closer to the photonic results but exceed them only for \(\text{OR}_3(x)\). Only when error mitigation is applied to the highest values produced by a single qubit configuration are the photonic results exceeded for every function. Additionally, one has to take into account that the values measured on IBM QSO vary strongly with the configuration and the time of execution (see Supplementary Information for details), which explains the different behaviour for \(\text{OR}_3(x)\). The ability to scale to larger numbers of qubits remains a major advantage of IBM QSO.

(a) Measured average bounds of the Bell inequalities induced by the functions \(\text{OR}_3(x)\), \(\text{OR}_3^{\oplus }(x)\), \(\text{NAND}_2(x)\) and \(h_3(x)\) and their standard deviations for a photonic implementation of NMQC using four-photon GHZ states20. The red (diagonally striped \\)/grey (dotted) bars denote the theoretically achievable quantum/classical bounds and the white (diagonally striped //) bars stand for the measured values. (b) and (c) Difference between the photonic results and the results of experiment (ii), with and without error mitigation, i.e. \(\Delta \beta _{\text {meas/max}} = \beta _{\text {meas/max}}(\text {photons}) - \beta _{\text {meas/max}}(\text {QSO})\) [white (diagonally striped //) bars] and \(\Delta \beta _{\text {meas/max}}^{\text {corr}} = \beta _{\text {meas/max}}^{\text {corr}}(\text {photons}) - \beta _{\text {meas/max}}^{\text {corr}}(\text {QSO})\) [orange (plain) bars]. (b) Difference to the averaged measured bounds. Without error mitigation, the photonic values are always larger and \(\Delta \beta _{\text {meas}}\) is positive. With error mitigation, the differences become smaller, but only for \(\text{OR}_3\) does the mitigated result exceed the photonic value. (c) Difference to the highest values produced by a single configuration. Even without error mitigation, the differences \(\Delta \beta _{\text {max}}\) are small; however, only for \(\text{OR}_3\) is the photonic value smaller, so that \(\Delta \beta _{\text {max}}\) becomes negative. With error mitigation, the results produced by QSO exceed the photonic values and \(\Delta \beta _{\text {max}}^{\text {corr}}\) is always negative.

Discussion

On average, we observed violations of all measured Bell inequalities for all tested functions listed in the “Background” section on the 27-qubit IBM QSO in Ehningen, Germany. When Qiskit chose exactly one configuration of physical qubits for every experiment, violations were measured for all functions for up to six qubits. This contrasts with the cases in which we tested all possible l-qubit configurations one by one, where l is the number of qubits. There, the highest measured success probabilities of the NMQC computations translate into violations of the tested Bell inequalities (with and without error mitigation) for up to seven qubits. Note that we observe this not only for the best-performing qubit configuration but also for the success probabilities averaged over all possible qubit configurations. Since we investigated every possible qubit configuration and averaged over all results, we have thereby tested the quantumness of the device. This means that we have shown a computational advantage in terms of NMQC on IBM QSO and thus demonstrated its non-local behaviour for up to seven qubits. Furthermore, we compared our four-qubit results to an existing implementation of NMQC using four-photon GHZ states20.

An obvious question is whether NMQC can be generalised as an indicator of non-locality to higher qubit numbers. For the k-bit pairwise AND function \(h_{k}(x)\), the ratio between the quantum and classical bounds of the associated Bell inequality, \(\beta_q/\beta_c = 2^{\lfloor k/2 \rfloor}\), increases exponentially with the number k of input bits, and the deterministic computation of \(h_k(x)\) requires only \(k+1\) qubits19. The function is thus well suited for generalisation to larger numbers of input bits and therefore of qubits. To improve the results and carry out NMQC for \(h_k(x)\) with larger k, one could apply more sophisticated error mitigation or correction techniques18. It would also be interesting to find other functions that translate into convenient Bell inequalities for testing the non-classicality of QCs with this computational test. For this, one could use the relation between NMQC and Reed–Muller codes mentioned in the “Background” section. However, the performance of the qubits varies widely over time, and this variance should be taken into account in order to obtain higher GHZ state fidelities and thus better results. More sophisticated qubit mapping methods, such as that of Ref.30, are likely to be developed in the future and, in combination with error mitigation and error correction methods, could facilitate NMQC with large numbers of qubits.

Another way to reduce errors and noise in the generation of the GHZ states could be to minimise the depth of the quantum circuit. Ref.31 discusses a method in which GHZ states of arbitrary size are generated with constant circuit depth. Although additional ancilla qubits are needed, the advantage gained from the constant circuit depth might outweigh the problems caused by the increased number of qubits. From the generation of linear graph states on IBM QCs, which can also be done in constant depth, it is known that much larger entangled states can be generated14,16.

In conclusion, we have implemented NMQC for up to seven qubits using a 27-qubit IBM QC. We have shown that the calculation of non-linear Boolean functions and the simultaneous violation of multipartite Bell inequalities can be used to characterise quantum devices. This method can easily be extended to other qubit-based quantum computing systems, but also to higher-dimensional systems23.

Methods

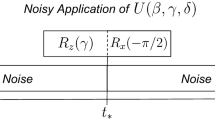

Creation of the GHZ state

The scheme used to generate the multi-qubit GHZ states in the first experiment (i) is easily scalable18 and consists of a single Hadamard (H) gate and \(n-1\) CNOT gates (see Fig. 6). Qiskit chooses the mapping of the virtual to the physical qubits and optimises the quantum circuit according to its highest optimisation level.

In the second experiment (ii), where we average over all possible qubit configurations, the Hadamard gate acts on the qubit with the largest number of neighbours in the configuration and, among these, the smallest readout-error rate. The readout-error rates are obtained from the backend’s calibration data, which is updated before every NMQC run. If the calibration data changed during an NMQC run, the measured data was discarded and the run repeated. The CNOT gates are arranged such that as many of them as possible can be carried out simultaneously, which minimises the circuit depth18. Note that CNOT gates are only applied between physically connected qubits.
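The construction described above can be sketched as a greedy, layered schedule. In this minimal sketch (the function name, data layout and tie-breaking rule are our assumptions), the root is the qubit with the most neighbours inside the configuration, ties being broken by the lower readout-error rate; in each layer, every qubit that is already part of the GHZ state controls at most one CNOT onto a not-yet-entangled neighbour. Greedy scheduling keeps the depth small but is not guaranteed to be optimal.

```python
from collections import defaultdict


def ghz_cnot_layers(qubits, edges, readout_error):
    """Return (root, layers): H on root, then parallel CNOT layers of (control, target)."""
    qs = set(qubits)
    adj = defaultdict(set)
    for a, b in edges:
        if a in qs and b in qs:  # only couplings inside the configuration
            adj[a].add(b)
            adj[b].add(a)
    # Most neighbours first, then lowest readout error.
    root = min(qs, key=lambda q: (-len(adj[q]), readout_error[q]))
    entangled, layers = {root}, []
    while entangled != qs:
        layer, new = [], set()
        for control in sorted(entangled):
            free = sorted(adj[control] - entangled - new)
            if free:  # each entangled qubit controls at most one CNOT per layer
                layer.append((control, free[0]))
                new.add(free[0])
        if not layer:
            raise ValueError("configuration is not connected")
        layers.append(layer)
        entangled |= new
    return root, layers


# Star-shaped 4-qubit configuration; edges and error rates are made up for illustration.
root, layers = ghz_cnot_layers([0, 1, 2, 4], [(0, 1), (1, 2), (1, 4)],
                               {0: 0.02, 1: 0.01, 2: 0.03, 4: 0.015})
print(root, layers)  # 1 [[(1, 0)], [(1, 2)], [(1, 4)]]
```

The schedule always contains \(n-1\) CNOTs; only their grouping into simultaneous layers depends on the connectivity.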

Quantum readout error mitigation

In this section, we explain the details of the quantum readout error mitigation (QREM) introduced in Ref.21. It aims to mitigate readout errors, i.e. errors that occur when measuring the state of a single qubit. For example, a qubit might actually be in the state \(|1\rangle\) while the measurement device reports \(|0\rangle\). The main assumption in QREM is that these measurement errors are local. This means that they act on the probability vector \(\vec {p}\equiv (p(0,0,0,\ldots ,0), p(0,0,0,\ldots ,1),\ldots ,p(1,1,1,\ldots ,1))^T\), where \(p(m_{n-1},m_{n-2},\ldots ,m_1,m_0)\) is the probability of obtaining the result \(m_i\) in the computational-basis measurement of the ith qubit \(q_i\) for every \(i\in \{0,1,\ldots ,n-1\}\), in the following way:

\[ \vec{p}\,' = \left(A_{n-1} \otimes \cdots \otimes A_1 \otimes A_0\right) \vec{p}, \qquad A_i = \begin{pmatrix} p_i(0|0) & p_i(0|1) \\ p_i(1|0) & p_i(1|1) \end{pmatrix}, \]

where \(\vec{p}\,'\) is the experimentally observed probability vector.

The \(A_i\) are called the calibration matrices and \(p_i(x|y)\) are the probabilities of measuring the state x given that the ith qubit was actually prepared in the state y.

In order to mitigate the readout errors, one first determines the calibration matrices by preparing the qubits in the various basis states and estimating the probabilities \(p_i(x|y)\) from the observed relative frequencies (law of large numbers). The corrected probability vector \(\vec {p}\) is then obtained from the experimental probability vector \(\vec {p}\,'\) by inverting the calibration matrices. However, since the estimates of the \(A_i\) are not exact, the resulting \(\vec {p}\) may not be a physical probability vector, i.e. some of its entries may be negative or they may not sum to 1. In that case, we use an optimisation method to find the physical probability vector \(\vec {p}\,^{*}\) closest to \(\vec {p}\). To be exact, \(\vec {p}\,^{*}\) is given by21

\[ \vec{p}\,^{*} = \mathop{\mathrm{arg\,min}}_{\vec{q}\,:\; q_j \ge 0,\ \sum_j q_j = 1} \Vert \vec{p} - \vec{q} \Vert, \]

where \(\Vert \cdot \Vert\) is the Euclidean norm.
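A minimal NumPy sketch of the two QREM steps, assuming the calibration matrices are supplied in the order \(A_{n-1}, \ldots, A_0\) that matches the ordering of \(\vec{p}\). Instead of a generic optimiser, the constrained step is implemented here with the standard sort-based Euclidean projection onto the probability simplex, which solves the same closest-vector problem; the function names and numbers are illustrative.

```python
import numpy as np
from functools import reduce


def calibration_matrix(p0_given_0, p1_given_1):
    """A_i with columns indexed by the prepared state y and rows by the reading x."""
    return np.array([[p0_given_0, 1 - p1_given_1],
                     [1 - p0_given_0, p1_given_1]])


def project_to_simplex(v):
    """Euclidean projection of v onto {q : q_j >= 0, sum_j q_j = 1}."""
    u = np.sort(v)[::-1]
    cumulative = np.cumsum(u)
    j = np.arange(1, len(v) + 1)
    rho = np.nonzero(u - (cumulative - 1) / j > 0)[0][-1]
    theta = (cumulative[rho] - 1) / (rho + 1)
    return np.maximum(v - theta, 0)


def qrem(p_measured, calibration):
    """Invert A_{n-1} x ... x A_0 and, if needed, map to the closest physical vector."""
    A = reduce(np.kron, calibration)
    p = np.linalg.solve(A, p_measured)
    return p if np.all(p >= 0) else project_to_simplex(p)


# Single qubit with 10% readout error: an observed (1, 0) inverts to roughly
# (1.125, -0.125), which the projection maps back to (1, 0).
A0 = calibration_matrix(0.9, 0.9)
print(np.linalg.solve(A0, [1.0, 0.0]), qrem(np.array([1.0, 0.0]), [A0]))
```

Because each \(A_i\) has columns summing to 1, the inversion preserves the total probability, so in this sketch only negative entries trigger the projection.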

Data availability

The data generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79. https://doi.org/10.22331/q-2018-08-06-79 (2018).

Bharti, K. et al. Noisy intermediate-scale quantum algorithms. Rev. Mod. Phys. 94, 015004. https://doi.org/10.1103/RevModPhys.94.015004 (2022).

Eisert, J. et al. Quantum certification and benchmarking. Nat. Rev. Phys. 2, 382–390. https://doi.org/10.1038/s42254-020-0186-4 (2020).

Moll, N. et al. Quantum optimization using variational algorithms on near-term quantum devices. Quantum Sci. Technol. 3, 030503. https://doi.org/10.1088/2058-9565/aab822 (2018).

Lubinski, T. et al. Application-oriented performance benchmarks for quantum computing. IEEE Trans. Quantum Eng. 4, 1–32. https://doi.org/10.48550/arXiv.2110.03137 (2023).

Helsen, J., Roth, I., Onorati, E., Werner, A. H. & Eisert, J. General framework for randomized benchmarking. PRX Quantum 3, 020357. https://doi.org/10.1103/PRXQuantum.3.020357 (2022).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510. https://doi.org/10.1038/s41586-019-1666-5 (2019).

Cross, A. W., Bishop, L. S., Sheldon, S., Nation, P. D. & Gambetta, J. M. Validating quantum computers using randomized model circuits. Phys. Rev. A 100, 032328. https://doi.org/10.1103/PhysRevA.100.032328 (2019).

Alsina, D. & Latorre, J. I. Experimental test of Mermin inequalities on a five-qubit quantum computer. Phys. Rev. A 94, 012314. https://doi.org/10.1103/PhysRevA.94.012314 (2016).

Swain, M., Rai, A., Behera, B. K. & Panigrahi, P. K. Experimental demonstration of the violations of Mermin’s and Svetlichny’s inequalities for W and GHZ states. Quantum Inf. Process. 18, 218. https://doi.org/10.1007/s11128-019-2331-5 (2019).

Huang, W.-J. et al. Mermin’s inequalities of multiple qubits with orthogonal measurements on IBM Q 53-qubit system. Quantum Eng. 2, e45. https://doi.org/10.1002/que2.45 (2020).

González, D., de la Pradilla, D. F. & González, G. Revisiting the Experimental Test of Mermin’s Inequalities at IBMQ. Int. J. Theor. Phys. 59, 3756–3768. https://doi.org/10.1007/s10773-020-04629-4 (2020).

Bäumer, E., Gisin, N. & Tavakoli, A. Demonstrating the power of quantum computers, certification of highly entangled measurements and scalable quantum nonlocality. npj Quantum Inf. 7, 117. https://doi.org/10.1038/s41534-021-00450-x (2021).

Yang, B., Raymond, R., Imai, H., Chang, H. & Hiraishi, H. Testing scalable Bell inequalities for quantum graph states on IBM quantum devices. IEEE J. Emerg. Sel. Top. Circuits Syst. 12, 638–647. https://doi.org/10.1109/JETCAS.2022.3201730 (2022).

Wang, Y., Li, Y., Yin, Z.-Q. & Zeng, B. 16-qubit IBM universal quantum computer can be fully entangled. npj Quantum Inf. 4, 46. https://doi.org/10.1038/s41534-018-0095-x (2018).

Mooney, G. J., White, G. A. L., Hill, C. D. & Hollenberg, L. C. L. Whole-device entanglement in a 65-qubit superconducting quantum computer. Adv. Quantum Technol. 4, 2100061. https://doi.org/10.1002/qute.202100061 (2021).

Wei, K. X. et al. Verifying multipartite entangled Greenberger–Horne–Zeilinger states via multiple quantum coherences. Phys. Rev. A 101, 032343. https://doi.org/10.1103/PhysRevA.101.032343 (2020).

Mooney, G. J., White, G. A. L., Hill, C. D. & Hollenberg, L. C. L. Generation and verification of 27-qubit Greenberger–Horne–Zeilinger states in a superconducting quantum computer. J. Phys. Commun. 5, 095004. https://doi.org/10.1088/2399-6528/ac1df7 (2021).

Hoban, M. J., Campbell, E. T., Loukopoulos, K. & Browne, D. E. Non-adaptive measurement-based quantum computation and multi-party Bell inequalities. New J. Phys. 13, 023014. https://doi.org/10.1088/1367-2630/13/2/023014 (2011).

Demirel, B., Weng, W., Thalacker, C., Hoban, M. & Barz, S. Correlations for computation and computation for correlations. npj Quantum Inf. 7, 29. https://doi.org/10.1038/s41534-020-00354-2 (2021).

Maciejewski, F. B., Zimborás, Z. & Oszmaniec, M. Mitigation of readout noise in near-term quantum devices by classical post-processing based on detector tomography. Quantum 4, 257. https://doi.org/10.22331/q-2020-04-24-257 (2020).

Qiskit contributors. Qiskit: An open-source framework for quantum computing. https://doi.org/10.5281/zenodo.2573505 (2023).

Mackeprang, J., Bhatti, D., Hoban, M. J. & Barz, S. The power of qutrits for non-adaptive measurement-based quantum computing. New J. Phys. 25, 073007. https://doi.org/10.1088/1367-2630/acdf77 (2023).

Bell, J. S. On the Einstein Podolsky Rosen paradox. Phys. Phys. Fizika 1, 195–200. https://doi.org/10.1103/PhysicsPhysiqueFizika.1.195 (1964).

Brunner, N., Cavalcanti, D., Pironio, S., Scarani, V. & Wehner, S. Bell nonlocality. Rev. Mod. Phys. 86, 419–478. https://doi.org/10.1103/RevModPhys.86.419 (2014).

Werner, R. F. & Wolf, M. M. All-multipartite Bell-correlation inequalities for two dichotomic observables per site. Phys. Rev. A 64, 032112. https://doi.org/10.1103/PhysRevA.64.032112 (2001).

Żukowski, M. & Brukner, Č. Bell’s theorem for general N-qubit states. Phys. Rev. Lett. 88, 210401. https://doi.org/10.1103/PhysRevLett.88.210401 (2002).

Raussendorf, R. Contextuality in measurement-based quantum computation. Phys. Rev. A 88, 022322. https://doi.org/10.1103/PhysRevA.88.022322 (2013).

Efron, B. & Tibshirani, R. An introduction to the bootstrap. In Chapman & Hall/CRC Monographs on Statistics & Applied Probability (Taylor & Francis, 1994).

Gerard, B. & Kong, M. String Abstractions for Qubit Mapping. Preprint at https://doi.org/10.48550/arXiv.2111.03716 (2021).

Piroli, L., Styliaris, G. & Cirac, J. I. Quantum circuits assisted by local operations and classical communication: Transformations and phases of matter. Phys. Rev. Lett. 127, 220503. https://doi.org/10.1103/PhysRevLett.127.220503 (2021).

Acknowledgements

We thank Jonas Helsen for the useful suggestions and explanations, Alexandra R. van den Berg for the fruitful discussions, Roeland Wiersema for the helpful tips and Chewon Cho (조채원) for the in-depth explanations. We thank Lukas Rückle and Christopher Thalacker for the explanations. We acknowledge support from the Carl Zeiss Foundation, the Centre for Integrated Quantum Science and Technology (IQST), the Federal Ministry of Education and Research (BMBF, projects SiSiQ and PhotonQ), the Federal Ministry for Economic Affairs and Climate Action (BMWK, project PlanQK), and the Competence Center Quantum Computing Baden-Württemberg (funded by the Ministerium für Wirtschaft, Arbeit und Tourismus Baden-Württemberg, project QORA). We acknowledge the use of IBM Quantum services for this work. The views expressed are those of the authors and do not reflect the official policy or position of IBM or the IBM Quantum team.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

J.M., D.B. designed the experiments; J.M. performed the experiments and acquired the experimental data. J.M. carried out theoretical derivations and wrote all the code; J.M., D.B., S.B. carried out theoretical calculations and the data analysis. J.M., D.B., S.B. wrote the manuscript. S.B. supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mackeprang, J., Bhatti, D. & Barz, S. Non-adaptive measurement-based quantum computation on IBM Q. Sci Rep 13, 15428 (2023). https://doi.org/10.1038/s41598-023-41025-4

DOI: https://doi.org/10.1038/s41598-023-41025-4
