Abstract

There have been significant advances in biosignal extraction techniques to drive external biomechatronic devices or to use as inputs to sophisticated human machine interfaces. The control signals are typically derived from biological signals such as myoelectric measurements made either from the surface of the skin or subcutaneously. Other biosignal sensing modalities are emerging. With improvements in sensing modalities and control algorithms, it is becoming possible to robustly control the target position of an end-effector. It remains largely unknown to what extent these improvements can lead to naturalistic human-like movement. In this paper, we sought to answer this question. We utilized a sensing paradigm called sonomyography based on continuous ultrasound imaging of forearm muscles. Unlike myoelectric control strategies, which measure electrical activation and use the extracted signals to determine the velocity of an end-effector, sonomyography measures muscle deformation directly with ultrasound and uses the extracted signals to proportionally control the position of an end-effector. Previously, we showed that users were able to accurately and precisely perform a virtual target acquisition task using sonomyography. In this work, we investigate the time course of the control trajectories derived from sonomyography. We show that the time course of the sonomyography-derived trajectories that users take to reach virtual targets reflects the kinematic characteristics typically observed in biological limbs. Specifically, during a target acquisition task, the velocity profiles followed a minimum jerk trajectory shown for point-to-point arm reaching movements, with similar time to target. In addition, the trajectories based on ultrasound imaging result in a systematic delay and scaling of peak movement velocity as the movement distance increased.
We believe this is the first evaluation of similarities in control policies in coordinated movements in jointed limbs, and those based on position control signals extracted at the individual muscle level. These results have strong implications for the future development of control paradigms for assistive technologies.

Introduction

In recent years, there have been significant advances in the control of biomechatronic devices driven by biological signals derived from the user1,2,3,4,5,6,7,8,9. Various biological signals have been used for gesture intent recognition to drive such biomechatronic devices, including electrical signals such as surface electromyography (sEMG)10,11,12, electroencephalography13,14, electrocorticography15,16, as well as mechanical signals such as mechanomyography17 and sonomyography18,19,20,21,22,23,24. These signal extraction techniques have found broad applications in rehabilitation engineering25,26,27. However, these techniques have their own limitations. In some of these techniques, the users have control over only a few degrees of freedom, while in others, the user can proportionally control movement velocities, one gesture at a time, and need to use an ad hoc triggering mechanism to cycle through different gestures26,28,29. Clinically, there continues to be a significant unmet need to improve the control of biomechatronic devices. Prosthetic users can often reliably control only a small number of degrees of freedom of advanced multiarticulated prosthetic hands30. Nearly all of the individuals affected by stroke, cerebral palsy, or Parkinson’s disease often fail to attain normal function and activity levels even with the help of modern rehabilitation practices and assistive devices31. Exoskeletons have been designed to achieve better joint function32,33,34, but effective control over the exoskeleton is a critical limiting factor35.

To address these limitations, there is extensive ongoing work on developing sophisticated machine learning algorithms to decode motor intent from non-invasive sensing modalities, as well as research on acquiring signals with increased signal-to-noise ratio and better specificity. Examples of ongoing algorithmic research include pattern recognition36,37,38,39, artificial neural networks40 and regression-based techniques41,42,43,44,45,46. Examples of techniques being pursued to overcome the low signal-to-noise ratio47,48 and low specificity49,50 of conventional sEMG include subcutaneously implanted electrodes51,52 and specialized surgical procedures like targeted muscle reinnervation53, as well as emerging noninvasive sensing modalities such as sonomyography18,19,20,21,22,23,24.

We chose to evaluate a human machine interface controller based on sonomyography, an emerging control method that uses real-time dynamic ultrasound imaging of deep-lying musculature to infer motor intent. While the methods proposed in this paper can be extended to any human machine interface controller, we chose to use sonomyography because of its potential to achieve a high signal-to-noise ratio for proportional positional control. Prior work from our group18,19,20,21 and others22,23,24 has shown that real-time ultrasound imaging of forearm muscle deformations during volitional motor action can be used to decode motor intent during proportional isotonic movement54. In our previous work18, we showed that able-bodied individuals as well as persons with limb deficiency were able to accurately and precisely control a virtual cursor using sonomyography.

In this paper, we investigate whether the concept of minimum jerk trajectory can be utilized to evaluate the performance of a human machine interface controller based on sonomyography. It has been shown that humans follow typical minimum jerk trajectories during a variety of tasks such as arm reaching55,56,57, catching58, drawing movements59, vertical arm movements60, head movements61, saccadic eye movements62, chewing63, and several other motor skills64. Showing such dynamic behavior in the task trajectories would be valuable for assessing task performance from a motor control perspective. Although the minimum jerk trajectory has been shown for multi-joint coordinated movements such as reaching tasks, these studies predominantly measure the trajectory of some kinematic displacement. It has not been shown to exist during target acquisition tasks where the control signals are derived from an internal measurement using a sensor that directly tracks muscle activation (i.e., not the resulting movement of the end-effector). Our objective in this paper is to evaluate the extent to which control signals derived at the muscle level follow the time course of minimum jerk trajectories.

To examine the extent to which sonomyography-based control signals mimic those of the intact limb, we developed two experiments. In Experiment 1, subjects controlled a virtual cursor position based on the position of a hand-held manipulandum. These data were collected to characterize typical baseline multi-joint coordinated movements. In Experiment 2, the same subjects moved a virtual cursor based on the control signals derived from sonomyography of the forearm muscles during wrist flexion-extension. Our research objective was to characterize the trajectories derived from the activation of the lower level muscle groups of the forearm (Experiment 2) and determine the extent to which they reflected the trajectories derived from multi-joint coordinated movement of the upper limb (Experiment 1). We hypothesized that, similar to the point-to-point reaching movements performed using a hand-held manipulandum, the trajectories resulting from sonomyography would follow a minimum jerk trajectory, resulting in a systematic scaling in peak movement velocity magnitude and delay in time for the peak to occur as the movement distance increased. Such an analysis of movement trajectories provides not only all the standard metrics such as accuracy and path length, but also valuable time-domain information regarding the dynamics of the movement.

Methods

Participants

The same ten able-bodied individuals (mean age: 30 ± 5 years, 5 female) were recruited for both the experiments. Eight participants reported being right-hand dominant. All experiments described in this work were approved by the George Mason University Institutional Review Board and performed in accordance with relevant guidelines and regulations. All participants provided written, informed consent prior to participating in the study, and were also compensated for their participation.

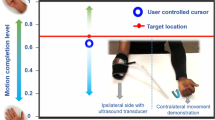

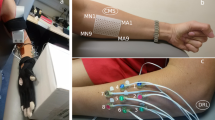

Figure 1. (a) Setup for Experiment 1. Subjects were seated in front of a digitizing tablet to capture the movement trajectories as they moved a manipulandum. An LCD monitor was placed above the tablet that showed the target location and the subjects’ manipulandum position. The subjects were asked to achieve the target positions displayed on the screen. (b) Setup for Experiment 2. Subjects were instrumented with an ultrasound transducer that recorded cross-sectional ultrasound images of their forearm, which resulted in a proportional signal correlating to their extent of flexion. The position of the subject-controlled cursor on the screen in front of them was driven by the proportional signal derived from sonomyography. Subjects were asked to achieve the target positions displayed on the screen. (c) Diagram showing the target acquisition task performed in Experiment 1. The dark circle on the left shows the starting position, while all the possible target locations are shown as faint circles on the screen. (d) Diagram showing the target acquisition task performed in Experiment 2. All possible targets are given by faint red lines. The ‘X’ indicates the user-controlled cursor. For a right-handed person, flexing the wrist moves the cursor left and extending the wrist moves the cursor right; the directions are reversed for a left-handed person. For a right-handed person, a fully extended wrist corresponds to the cursor being at the right-most edge of the screen and a fully flexed wrist corresponds to the cursor being at the left-most edge of the screen.

Experimental setup and procedure

Experiment 1

Participants were asked to sit upright in front of a waist-high table. They sat in front of a horizontal 27-inch LCD monitor. The chair height was adjusted for each subject so that they could comfortably perform the task and view the screen. The experimental system included a monitor, a digitizing tablet, and a Windows PC to run the experimental paradigm and collect the behavioral data. The LCD monitor was mounted horizontally in front of subjects, displaying the target locations to achieve during each trial. The monitor was 10 inches above the digitizing tablet (Intuos4, Wacom) that tracked and recorded hand position at 60 Hz. With their right hand, subjects grasped a cylindrical handle, 2.5 cm in diameter, that contained the tablet stylus. The hand/stylus moved on the tablet below the monitor. The position of the LCD monitor blocked the subjects' view of the tablet and of their own arm movements. This setup is referred to as the ‘manipulandum’ setup (Fig. 1a,c).

The task was designed in PsychoPy and the data was collected and stored after being de-identified, with only a subject ID number. The participants were asked to move the manipulandum such that they were within a small circle (diameter 5 cm) to the left edge of the screen. Once they arrived at this point and held their position for 3 s, a target location was shown to the right of this position on the screen and they were prompted to acquire this newly presented target (diameter 5 cm). Once they moved the manipulandum to the correct target position, and held this new target position for 3 s, they were asked to move back to the original position to the left edge of the screen. This describes one trial. Seven such targets were displayed in each block of trials, and each block was repeated 5 times. This resulted in each participant performing the task 35 times (7 targets presented 5 times each). All the subjects performed the task with their right hand, with targets being presented to the right of the user and the user always starting from the left edge of the screen. The movement trajectory taken by the subject on each trial was sampled at 60 Hz and stored in separate tables. This data was analyzed post-hoc using custom-developed scripts in MATLAB.

Experiment 2

Participants were asked to sit upright with their elbow below their shoulder and the forearm comfortably secured to a platform on the armrest of the chair. Participants were instrumented with a clinical ultrasound system (Terason uSmart 3200T) connected to a low-profile, high frequency, linear, 16HL7 transducer. The imaging depth was set to 4 cm and the gain was set to 60. The transducer was manually positioned on the volar aspect of the forearm (dominant arm), in order to access the deep and superficial flexor muscles of the forearm. Subjects performed the task with their dominant limb. Left-handed users started with their left wrist fully extended and the cursor at the left-most edge of the screen, with targets being presented to the right. Right-handed users started with their right wrist fully extended and the cursor at the right-most edge of the screen, with targets being presented to the left. The transducer was secured in a custom designed probe holder and held in place with a stretchable cuff. In order to prevent direct observation of the wrist and hand movements, participants placed their hand in an opaque enclosure. A USB-based video grabber (DVI2USB 3.0, Epiphan Systems, Inc.) was used to transfer ultrasound image sequences in real time to a PC (Dell XPS 15 9560). The acquired image frames were processed in MATLAB (The MathWorks, Inc.) using custom-developed algorithms18. The participants had a computer screen in front of them where they could see a real-time plot of their derived proportional position, along with the virtual targets. This setup is referred to as the ‘sonomyography’ setup (Fig. 1b,d).

Participants underwent a training procedure in which they performed repeated wrist extension and flexion. These movements were timed to a metronome such that the participant was cued to transition from full extension to full flexion within one second, hold the position for one second, return to full extension within one second, and hold the position for one second. This process was repeated five times. The ultrasound images corresponding with the full extension and flexion phases were averaged into a single representative image each, and added to a training database with a corresponding ‘extension’ or ‘flexion’ label. This formed the reference data set. The training captured the users’ flexion/extension end-states, and mapped those two end-states onto the two edges of the screen, such that all partial states would have a corresponding position on the screen for the cursor. Our training method has been described in detail in our previous work18. We only asked the users to move as accurately as possible, and told them that they had 10 s to do this, before the trial timed out. We asked the users to perform comfortable levels of flexion/extension during training, so that they would be able to perform these motions repeatedly.

During the actual trials, cross-sectional ultrasound images of the subject’s forearm were compared to this reference data set to derive a proportional signal as described in our prior work18. This derived proportional signal was sent to the computer, where the participant controlled an on-screen cursor that could move horizontally in proportion to the degree of muscle activation in the forearm (i.e., the cursor moved left in response to wrist flexion and right in response to wrist extension for right-handed subjects and opposite for left-handed subjects). The objective was to reach a vertical target line as accurately as possible before the trial ended, and retain the cursor at the target line until the trial ended. The interface presented a target position at random from a set of seven predefined, equidistant positions, which were identical to the target distances presented in Experiment 1. The target remained at each position for 10 s and then moved to the next position until all seven target points were presented. For each target position, the participant was prompted, via an automated audio cue, to move the cursor to rest position before the task began. After the seven targets were presented, the participant would rest for one minute and then repeat the task. They completed six blocks, with the first block for practice, and the following five blocks were used for analysis (7 targets, presented 5 times each).
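The mapping from the derived proportional signal to the horizontal cursor position can be sketched as follows. This is only an illustration and not the processing described in the prior work18: the function name, the assumption that the signal is already normalized to the range 0 (full extension) to 1 (full flexion), and the clamping step are all hypothetical. The handedness convention follows Fig. 1d.

```python
def cursor_position(signal: float, right_handed: bool = True) -> float:
    """Map a proportional signal to a horizontal cursor position.

    signal: assumed (hypothetically) to be 0.0 at full wrist extension
            and 1.0 at full wrist flexion.
    Returns the cursor position as a fraction of screen width measured
    from the left edge of the screen.
    """
    # Clamp so that noise outside the trained end-states stays on screen.
    s = min(1.0, max(0.0, signal))
    # Right-handed: extension -> right edge, flexion -> left edge.
    # Left-handed: the directions are mirrored.
    return 1.0 - s if right_handed else s


# A right-handed user at full extension starts at the right-most edge.
start = cursor_position(0.0, right_handed=True)
# A left-handed user at 25% flexion sits a quarter of the way from the left.
quarter = cursor_position(0.25, right_handed=False)
```

Any partial flexion state then has a unique on-screen position between the two edges, which is what makes the control proportional in position rather than in velocity.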

Computation of movement parameters

For both the experiments, movement trajectories were recorded along with the associated timestamp and imported into MATLAB. No trials were discarded. The following metrics were computed.

Movement distance

Seven targets were presented at \(12.5\%\), \(25\%\), \(37.5\%\), \(50\%\), \(62.5\%\), \(75\%\), and \(87.5\%\) of screen width. For Experiment 1, the width of the manipulandum tablet was the same as the workspace shown on the 27-inch LCD screen above it (see Fig. 1a). For Experiment 2, a 27-inch computer monitor showed the cursor and target locations. The distance between the start point (always at 0% of screen width) and the target was the movement distance. The movement of the cursor in Experiment 2 was a virtual movement proportional to the range of motion, but scaled to the size of the screen, meaning that a fully extended state of the hand made the cursor go to one extreme edge of the screen whereas a fully flexed state made it go to the other extreme. For example, 50% movement distance would require moving the cursor 13.5 inches on the screen. In this work, all movement distances and movement velocities have been expressed in terms of percentage of screen width. The target in Experiment 1 was a filled grey circle with a fixed radius, and the users were asked to reach a black dot at the center of the target as accurately as possible. In Experiment 2, the target was a filled red vertical band with a fixed width, and the users were asked to reach a one-pixel wide black line at the center of the target as accurately as possible.

Trial start and end point

The start point of each trajectory was set to the first sample where the movement velocity was positive. This was defined as the movement onset. The trial was marked as finished at the first sample where the position of the user-driven cursor exceeded \(95\%\) of the target distance.

Time to target

The time taken by the cursor to move from the start point to the end point was defined as the time to target for that trial. For every movement distance, we computed an average time to target and used it to compute the minimum jerk trajectory for that target.
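The onset, end-point, and time-to-target definitions above can be sketched as follows. This is a minimal illustration rather than the MATLAB scripts used in the study. The function names, the forward-difference velocity estimate, and the default 60 Hz sampling rate (stated only for Experiment 1) are assumptions. Positions are taken to be in percent of screen width, starting near 0 and increasing toward the target.

```python
def velocity(position, fs=60.0):
    """Forward-difference velocity (position units per second)."""
    return [(b - a) * fs for a, b in zip(position, position[1:])]


def movement_window(position, target, fs=60.0, threshold=0.95):
    """Return (onset_index, end_index, time_to_target_s), or None.

    onset: first sample at which the velocity is positive.
    end:   first sample at or after onset whose position exceeds
           threshold * target (95% of the target distance by default).
    """
    vel = velocity(position, fs)
    onset = next((i for i, v in enumerate(vel) if v > 0), None)
    if onset is None:
        return None  # the cursor never moved toward the target
    end = next(
        (i for i in range(onset, len(position))
         if position[i] > threshold * target),
        None,
    )
    if end is None:
        return None  # the target was never reached
    return onset, end, (end - onset) / fs


# Illustrative (made-up) trace toward a target at 50% of screen width.
trace = [0.0, 0.0, 0.0, 10.0, 30.0, 48.0, 50.0, 50.0]
window = movement_window(trace, target=50.0)
```

Averaging the third element of the result over all trials at one movement distance gives the mean time to target used to build the reference trajectory for that distance.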

Minimum jerk trajectory

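The minimum jerk trajectory (MJT) minimizes the rate of change of acceleration (jerk) over the duration of the movement. A wide variety of human movements have been shown to follow it. In its standard form, the MJT is the trajectory \(x(t)\) that minimizes the cost

\[ J = \frac{1}{2}\int_{0}^{T}\left(\frac{d^{3}x}{dt^{3}}\right)^{2}\,dt \qquad (1) \]

The position and velocity profiles resulting from Eq. (1) as functions of time (t) are given65 by

\[ x(t) = x_{0} + (x_{f}-x_{0})\left(10\tau^{3} - 15\tau^{4} + 6\tau^{5}\right) \qquad (2) \]

\[ v(t) = \frac{x_{f}-x_{0}}{T}\left(30\tau^{2} - 60\tau^{3} + 30\tau^{4}\right) \qquad (3) \]

where \(\tau = t/T\) is the normalized time, \(x_{0}\) and \(x_{f}\) are the start and target positions, and \(T\) is the time to target.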

Position error

The average root mean square error between the cursor position and the minimum jerk trajectory over the duration of the movement was defined as the position error.
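The position error can be sketched as below, assuming the standard fifth-order minimum jerk polynomial for a movement that starts and ends at rest. The function names and the 60 Hz sampling rate are illustrative assumptions, and the trace is assumed to begin at movement onset.

```python
import math


def mjt_position(t, duration, distance, start=0.0):
    """Minimum jerk position at time t for a rest-to-rest movement."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time in [0, 1]
    return start + distance * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)


def mjt_velocity(t, duration, distance):
    """Minimum jerk velocity at time t (zero outside the movement)."""
    tau = t / duration
    if tau < 0.0 or tau > 1.0:
        return 0.0
    return distance / duration * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)


def position_error(trace, duration, distance, fs=60.0):
    """RMSE between a sampled position trace and the MJT."""
    squared = [
        (x - mjt_position(i / fs, duration, distance)) ** 2
        for i, x in enumerate(trace)
    ]
    return math.sqrt(sum(squared) / len(squared))


# A trace sampled from the MJT itself has zero position error.
ideal = [mjt_position(i / 60.0, 1.0, 50.0) for i in range(61)]
err = position_error(ideal, duration=1.0, distance=50.0)
```

Under this polynomial the velocity profile is bell-shaped and symmetric, peaking at the movement mid-point with magnitude 1.875 times the mean velocity (distance divided by duration). For a fixed duration the peak velocity therefore scales linearly with movement distance.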

Path Efficiency

Path efficiency is the ratio of the ideal path length to the actual path length, expressed as a percentage. The actual path length was calculated by measuring the total distance traversed by the user to settle at the target. The ideal path length was defined as the distance between the starting point and the center of the target. For example, if for a given movement distance, the user-cursor trajectory started at 0 and traversed through points A-B-C to finally reach the target, then the actual path length would be 0A+AB+BC.
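A minimal sketch of the path efficiency computation for a one-dimensional cursor trace follows. Efficiency is computed here as ideal length over actual length, so that a path shorter than the ideal one yields a value above 100%, consistent with the values reported in the Results. The function names and example traces are illustrative assumptions.

```python
def path_length(trace):
    """Total distance traversed, e.g. 0A + AB + BC for points 0, A, B, C."""
    return sum(abs(b - a) for a, b in zip(trace, trace[1:]))


def path_efficiency(trace, target_center):
    """Ideal path length over actual path length, in percent."""
    ideal = abs(target_center - trace[0])
    actual = path_length(trace)
    if actual == 0:
        raise ValueError("the cursor did not move")
    return 100.0 * ideal / actual


# Overshoot and correction: the path is longer than ideal (below 100%).
overshoot = path_efficiency([0.0, 30.0, 55.0, 50.0], target_center=50.0)
# Stopping at the near edge of the target: shorter than ideal (above 100%).
undershoot = path_efficiency([0.0, 25.0, 48.0], target_center=50.0)
```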

Statistical analysis

We employed a two-stage approach to assess the effects of control modality, movement distance, and their interaction. We utilized the Scheirer–Ray–Hare (SRH) test, an extension of the Kruskal–Wallis test to experimental designs with multiple factors66,67. In other words, the SRH test is a non-parametric analogue of multiple-factor analysis of variance (ANOVA).

In the first stage, we ran four total SRH tests corresponding to each response variable - peak velocity achieved during the trial, time taken to achieve the target, position error between target and cursor, and the time to velocity peak. The factors included control modality (Manipulandum and Sonomyography), movement distance (12.5%, 25%, 37.5%, 50%, 62.5%, 75%, and 87.5% of screen width), and an interaction effect between control modality and movement distance. The type of analysis performed in the second stage depended on whether the interaction term was significant in the first stage.

In the second stage, for response variables that did not have a significant effect with respect to the interaction term, we performed an updated set of SRH tests omitting the interaction term. For the response variables that had a significant interaction effect between control modality and movement distance, we performed two separate Kruskal–Wallis (KW) tests for subsets of the trials. The first subset consists of trials performed at the seven movement distances corresponding to the manipulandum modality, and the second subset includes the remaining trials performed at the seven movement distances obtained via sonomyography. Note that after subsetting, there is just a single factor (movement distance) for each KW test. We elected to fix control modality for the Kruskal–Wallis tests to minimize the total number of tests. However, one may choose to fix movement distance in future studies.
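The first-stage SRH test can be sketched as below. This is a minimal pure-Python illustration, not the analysis code used in the study. It assumes a balanced design (equal trial counts in every modality-by-distance cell), ranks all observations jointly, and divides each rank sum of squares by the total rank mean square to obtain H. The function names are hypothetical, and the helper `chi2_sf` only covers 1 or an even number of degrees of freedom, which is enough for a two-by-seven design (1, 6, and 6).

```python
import math
from collections import defaultdict


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Chi-square upper-tail probability (df == 1 or even df only)."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2))
    if df % 2 == 0:
        h = x / 2
        return math.exp(-h) * sum(h**i / math.factorial(i) for i in range(df // 2))
    raise NotImplementedError("odd df > 1 is not handled in this sketch")


def srh_test(values, factor_a, factor_b):
    """Return {effect: (H, df, p)} for A, B and the A x B interaction."""
    ranks = average_ranks(values)
    n = len(ranks)
    grand = sum(ranks) / n
    ms_total = sum((r - grand) ** 2 for r in ranks) / (n - 1)

    def ss_between(labels):
        groups = defaultdict(list)
        for label, r in zip(labels, ranks):
            groups[label].append(r)
        ss = sum(len(g) * (sum(g) / len(g) - grand) ** 2 for g in groups.values())
        return ss, len(groups)

    ss_a, levels_a = ss_between(factor_a)
    ss_b, levels_b = ss_between(factor_b)
    ss_cells, _ = ss_between(list(zip(factor_a, factor_b)))
    ss_ab = ss_cells - ss_a - ss_b  # valid for balanced designs only
    effects = (
        ("A", ss_a, levels_a - 1),
        ("B", ss_b, levels_b - 1),
        ("AxB", ss_ab, (levels_a - 1) * (levels_b - 1)),
    )
    result = {}
    for name, ss, df in effects:
        h = max(ss / ms_total, 0.0)  # guard against tiny negative round-off
        result[name] = (h, df, chi2_sf(h, df))
    return result
```

If the interaction p-value is above the significance level, the second stage reruns the test without the interaction term. Otherwise the trials are split by modality and movement distance is tested alone within each subset.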

Two Brown-Forsythe tests were performed to test the effect of control modality on the variance of time to target and peak velocity across modalities. Two Brown-Forsythe tests were also performed to test the effect of movement distance on variance in path efficiency for the same control modality. A statistical significance level of p \(=\) 0.05 was used throughout this work.
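The Brown-Forsythe statistic can be sketched as a one-way ANOVA F statistic computed on the absolute deviations of each observation from its group median. The function name and example values below are illustrative assumptions. The p-value, which requires the F distribution with the returned degrees of freedom, is not computed in this sketch.

```python
from statistics import fmean, median


def brown_forsythe_statistic(groups):
    """Return (F, (df_between, df_within)) for equality of variances."""
    # Absolute deviation of every observation from its own group median.
    z = [[abs(x - median(g)) for x in g] for g in groups]
    k = len(z)
    n = sum(len(g) for g in z)
    grand = fmean([x for g in z for x in g])
    between = sum(len(g) * (fmean(g) - grand) ** 2 for g in z) / (k - 1)
    within = sum((x - fmean(g)) ** 2 for g in z for x in g) / (n - k)
    return between / within, (k - 1, n - k)


# Illustrative (made-up) values: the second group is three times as spread out.
f_stat, dfs = brown_forsythe_statistic([[1, 2, 3, 4, 5], [0, 3, 6, 9, 12]])
```

Using the median rather than the mean as the center makes the statistic more robust to skewed data, which suits a non-parametric analysis.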

Figure 2. Position traces versus time. The red line shows the mean position trace across all subjects, and the shaded yellow region shows one standard deviation. The horizontal axis represents time (seconds) from movement onset at time zero, and the vertical axis represents the distance to the target as a percentage of the workspace. The black line represents the minimum jerk trajectory based on the time to reach the target.

Figure 3. Velocity traces versus time. The red line shows the mean velocity trace across all subjects, and the shaded yellow region shows one standard deviation. The horizontal axis represents time (seconds) from movement onset at time zero, and the vertical axis represents the movement velocity as a percentage of the screen covered per second. The black line represents the minimum jerk trajectory based on the time to reach the target.

Results

In Experiment 1, we quantified the movement parameters while subjects made reaching movements of different amplitudes. Subjects moved a virtual cursor to one of seven different target positions. The cursor location reflected the location of the manipulandum moved by the subject. We analyzed performance by examining the trajectories of the cursor for each movement distance. As shown in prior literature55,68,69, the trajectories of the movement had a single velocity peak at approximately their mid-point, and a bell-shaped velocity profile (Figs. 2a and 3a).

In Experiment 2, we characterized performance when the cursor was driven using sonomyography. The movement distances were the same as those used in the arm reaching task with the manipulandum. In this case subjects moved the cursor to the required target by flexing their forearm muscles to the appropriate level. As above, we analyzed performance by examining the trajectories of the cursor for each movement distance. As was the case for the reaching movements, the trajectories taken by the subjects had a single velocity peak at approximately their mid-point, and a bell-shaped velocity profile (Figs. 2b and 3b).

In the first stage, a two-way Scheirer–Ray–Hare test was performed to test the effect of the control modality and the movement distance on the peak velocity achieved during a trial. The peak velocity increased significantly with respect to the movement distance across both control modalities (Fig. 4, p < 0.05, degrees of freedom = 6, H = 578.13). The peak velocity also differed significantly between control modalities (p < 0.05, degrees of freedom = 1, H = 57.27, higher for Experiment 2 than Experiment 1), but did not change significantly with the interaction term (p \(=\) 0.08, degrees of freedom = 6, H = 11.12). In the second stage, an updated SRH test omitting the interaction term showed that the peak velocity increased significantly with respect to movement distance (p < 0.05) and differed significantly between control modalities (p < 0.05, higher for Experiment 2 than Experiment 1). A linear regression showed that the peak velocity increased linearly with respect to movement distance for Experiment 1 (slope = 1.08, \(R^2=0.96\)) as well as Experiment 2 (slope = 1.68, \(R^2=0.94\)).

In the first stage, a two-way Scheirer–Ray–Hare test was also performed to test the effect of the control modality and the movement distance on the time to target. The time taken by subjects to acquire the target increased significantly as the movement distance increased, for both control modalities (Fig. 5, p < 0.05, degrees of freedom = 6, H = 110.15). The time to target differed significantly between control modalities (p < 0.05, degrees of freedom = 1, H = 506.28, higher for Experiment 1 than for Experiment 2), but did not change significantly with the interaction term (p \(=\) 0.398, degrees of freedom = 6, H = 6.22). In the second stage, an updated SRH test omitting the interaction term showed that the time to target increased significantly with respect to movement distance (p < 0.05) and differed significantly between control modalities (p < 0.05, higher for Experiment 1 than Experiment 2). A linear regression showed that the time to target increased linearly with respect to movement distance for Experiment 1 (slope = 0.01, \(R^2=0.96\)) as well as Experiment 2 (slope = 0.01, \(R^2=0.53\)). These results showed that the peak velocity magnitudes and the time to target scaled with respect to the movement distance for the same control modality, but were significantly different when compared across control modalities.
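The slope and \(R^2\) values reported for these regressions correspond to an ordinary least-squares line fit, which can be sketched as follows. The function name is hypothetical and the y-values in the usage example are synthetic, chosen only to show the call. Whether the study fit per-distance means or individual trials is not stated, so the sketch accepts either.

```python
def linear_fit(x, y):
    """Ordinary least squares: return (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Movement distances in percent of screen width, as used in both experiments.
distances = [12.5, 25.0, 37.5, 50.0, 62.5, 75.0, 87.5]
# Synthetic, perfectly linear response used only to demonstrate the function.
synthetic = [2.0 * d + 1.0 for d in distances]
slope, intercept, r2 = linear_fit(distances, synthetic)
```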

Seven independent F-tests were conducted to test the difference in variance of peak velocity between the two experiments for the same movement distances. For example, peak velocities measured for a target distance of 50% from Experiment 1 were tested against peak velocities measured for a target distance of 50% from Experiment 2, and so on. All F-tests showed that the variance in peak velocities for all target distances was greater for Experiment 2 than the peak velocities for the corresponding target distances for Experiment 1 (p < 0.05, see Fig. 4).

Seven independent F-tests were also conducted to test the difference in variance of time to target between the two experiments at the same movement distances. All F-tests showed that the variance in time to target for all target distances was greater for Experiment 2 than the time to target for the corresponding target distances for Experiment 1 (p < 0.05, see Fig. 5).

Figure 6. Normalized time to target versus normalized peak velocity. Every trial’s peak velocity and time to target are normalized with respect to the average peak velocity and average time to target for the smallest movement distance. Each color represents a different movement amplitude. The ellipse is centered at the normalized mean of the distribution and the size of the major/minor axis represents the standard deviation along that axis. As the movement amplitude increases (from blue to magenta), the time to target (vertical location of each ellipse) does not change as much as the peak velocity (horizontal location of each ellipse).

Figure 9. Percentage of trials completed versus time. The data is grouped by movement distance. The percentage of trials completed within a certain time period was inversely proportional to the movement distance. Each color shows a different movement distance, whereas the black line shows the average number of trials across all movement distances. These figures also show that almost all the trials were completed within 2 s.

Each trial’s peak velocity was plotted against its time to target, with both values normalized to the average peak velocity and average time to target for the smallest movement distance (see Fig. 6). Ellipses were then drawn such that the size of the axes corresponded to the standard deviations of the normalized peak velocity and normalized time to target, and the center of each ellipse was located at the mean normalized peak velocity and mean normalized time to target. From the shortest to the longest movement distance, the normalized peak velocity increased to 4.24 times for the manipulandum and 4.93 times for sonomyography. However, the normalized time to target increased to only 2.46 times for the manipulandum and 1.83 times for sonomyography over the same range. For both control modalities, the relative difference in peak velocity (difference in the horizontal location) was more than the relative difference in time to target (difference in the vertical location).
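The normalization and ellipse parameters can be sketched as below. The function name, the dictionary layout, and the example numbers are illustrative assumptions, not the study data. Each ellipse center is the mean normalized (peak velocity, time to target) pair and each axis length is the sample standard deviation along that axis.

```python
from statistics import fmean, stdev


def normalized_ellipses(trials):
    """trials maps movement distance -> list of (peak_velocity, time_to_target).

    Values are normalized to the averages at the smallest movement distance.
    Returns distance -> {"center": (v, t), "axes": (sd_v, sd_t)}.
    """
    smallest = min(trials)
    ref_v = fmean([v for v, _ in trials[smallest]])
    ref_t = fmean([t for _, t in trials[smallest]])
    ellipses = {}
    for distance, samples in trials.items():
        v = [pv / ref_v for pv, _ in samples]
        t = [tt / ref_t for _, tt in samples]
        ellipses[distance] = {
            "center": (fmean(v), fmean(t)),
            "axes": (stdev(v), stdev(t)),
        }
    return ellipses


# Made-up values for two movement distances (percent of screen width).
example = normalized_ellipses({
    12.5: [(10.0, 0.5), (14.0, 0.7)],
    87.5: [(50.0, 1.0), (58.0, 1.4)],
})
```

By construction the ellipse for the smallest distance is centered at (1, 1), so the centers of the other ellipses read directly as multiples of the smallest-distance values.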

Mean velocity traces were plotted against time to show scaling of peak velocities on average for every target, as well as the systematic shift in time to achieve peak velocity, which is a feature of the minimum jerk trajectory model (see Fig. 7). For Experiment 1, the time taken to achieve the peak velocity for the farthest target increased by 1.69 times compared to the smallest target, while it increased 0.97 times for Experiment 2.

In the first stage, a two-way Scheirer–Ray–Hare test was conducted to test the effect of control modality and movement distance on the time taken to achieve velocity peak. It increased significantly with respect to movement distance, for both control modalities (Fig. 8, p < 0.05, degrees of freedom = 6, H = 26.16). The time taken to achieve peak velocity also differed significantly between control modalities (p < 0.05, degrees of freedom = 1, H = 518.56, higher for Experiment 2 than Experiment 1), and also changed significantly with the interaction term (p < 0.05, degrees of freedom = 6, H = 26.18). Since the interaction effect was significant for time to velocity peak, in the second stage, two separate Kruskal–Wallis (KW) tests were conducted for subsets of the trials. The first subset consisted of trials performed at the seven movement distances corresponding to the manipulandum modality, and the second subset included the remaining trials performed at the seven movement distances obtained via sonomyography. Note that after subsetting, there is just a single factor (movement distance) for each KW test. The test results indicated that movement distance was statistically significant for both levels of control modality (p < 0.05). We elected to fix control modality for the Kruskal–Wallis tests to minimize the total number of tests.

To assess the performance of subjects, the trajectory of each trial was compared to the minimum jerk trajectory. For every target position, we computed the average time to target and used this time to compute the minimum jerk trajectory for that target position. The time series root mean square error was computed between the average position trace and the minimum jerk trajectory, and termed the position error. In the first stage, a two-way Scheirer–Ray–Hare test was conducted to test the effect of control modality and movement distance on the position error. It increased significantly with respect to movement distance, for both control modalities (Fig. 8, p < 0.05, degrees of freedom = 6, H = 59.74). The position error differed significantly between control modalities (p < 0.05, degrees of freedom = 1, H = 37.11, higher for Experiment 2 than Experiment 1), and also changed significantly with the interaction term (p < 0.05, degrees of freedom = 6, H = 24.002, higher for Experiment 2 than Experiment 1). Since the interaction effect was significant for position error, in the second stage, two separate Kruskal–Wallis (KW) tests were conducted for subsets of the trials. The first subset consisted of trials performed at the seven movement distances corresponding to the manipulandum modality, and the second subset included the remaining trials performed at the seven movement distances obtained via sonomyography. Note that after subsetting, there is just a single factor (movement distance) for each KW test. The test results indicated that movement distance was statistically significant for both levels of control modality (p < 0.05).

A linear regression showed that the position error increased linearly with movement distance for Experiment 1 (\(R^2=0.90\)) as well as Experiment 2 (\(R^2=0.52\)). The position error was not predominantly negative or positive, meaning that, on average, the user trajectory was not strictly above or below the minimum jerk trajectory. For Experiment 1, the average root mean square error between the users’ cursor position and the minimum jerk trajectory was between 1.2 and 5.78% of the screen width, increasing with target distance. For Experiment 2, it was between 4.45 and 6.22% of the screen width, also increasing with target distance. All the position and velocity traces were tightly packed, with the standard deviation across trials ranging from 0.53 to 2.36% for Experiment 1 and from 4.27 to 5.82% for Experiment 2, both increasing with target distance. These results show that both control modalities had low variation across subjects.
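The linear fits reported above can be reproduced in outline with an ordinary least-squares regression of position error on movement distance. The sketch below is a minimal illustration, not the authors' analysis code; the function name and the example numbers are hypothetical.

```python
def linear_fit_r2(x, y):
    """Ordinary least-squares fit y = slope * x + intercept.

    Returns (slope, intercept, r_squared), where r_squared is the
    coefficient of determination 1 - SS_res / SS_tot.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Made-up movement distances and position errors (% of screen width).
    distances = [10, 20, 30, 40, 50, 60, 70]
    errors = [1.3, 1.9, 2.8, 3.1, 4.2, 5.0, 5.6]
    print(linear_fit_r2(distances, errors))
```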

All the trials for Experiment 1, and 96.57% of the trials for Experiment 2, were completed within 2 s (see Fig. 9). The average path efficiency was \(101.03\% \pm 2.2\%\) for Experiment 1 and \(84.06\% \pm 16.81\%\) for Experiment 2. Seven independent F-tests were conducted to test the difference in the variance of path efficiencies between the two experiments at the same movement distances. All F-tests showed that, at every target distance, the variance in path efficiencies was greater for Experiment 2 than for Experiment 1 (p < 0.05, see Fig. 10). The ideal path length was defined as the distance between the starting position and the center of the target position. However, during certain trials, subjects appear to have stopped moving after reaching the outer edge/radius of the target. This meant that the target was “achieved”, but with a path length shorter than the ideal path length, making some path efficiencies greater than 100%.
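To make the path efficiency metric concrete, the sketch below computes it for a one-dimensional cursor trace as the ratio of the ideal path length to the path length actually travelled. That ratio is an assumption consistent with the description above, under which stopping at the target edge yields values above 100%. The function names and example traces are hypothetical. The variance ratio is the statistic underlying a two-sample F-test; obtaining a p-value would additionally require the F distribution (for example `scipy.stats.f.sf`).

```python
from statistics import variance


def path_length(positions):
    """Total distance travelled along a 1-D cursor trace."""
    return sum(abs(b - a) for a, b in zip(positions, positions[1:]))


def path_efficiency(positions, target_center):
    """Path efficiency in percent: ideal path length / travelled path length.

    The ideal path length is the distance from the starting position to
    the center of the target (assumed definition, see lead-in).
    """
    travelled = path_length(positions)
    if travelled == 0:
        raise ValueError("cursor did not move")
    ideal = abs(target_center - positions[0])
    return 100.0 * ideal / travelled


def variance_ratio(sample_a, sample_b):
    """F statistic var(sample_b) / var(sample_a) with its degrees of freedom."""
    f_stat = variance(sample_b) / variance(sample_a)
    return f_stat, len(sample_b) - 1, len(sample_a) - 1


if __name__ == "__main__":
    # A trace that stops short of the target center, at the target edge.
    print(path_efficiency([0.0, 0.3, 0.6, 0.9], target_center=1.0))
    # A trace that overshoots and then corrects.
    print(path_efficiency([0.0, 0.7, 1.2, 1.0], target_center=1.0))
```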

Discussion

In this work, we used sonomyography to investigate the time course of control signals derived from imaging forearm musculature during a virtual target acquisition task, and compared it to control signals derived during cursor movements based on physical arm reaching movements. For both control modalities, the peak velocity and the time to target increased linearly with respect to movement distance. The change in time to target for the different movement distances was smaller for control signals derived from sonomyography than for the control signal derived from the manipulandum. Once the target was acquired, there was a greater deceleration phase for the manipulandum than for sonomyography (Fig. 7). The position traces of the cursor controlled by both modalities exhibited a trajectory close to the minimum jerk trajectory (Fig. 2), and the velocity profiles had a single bell-shaped peak at approximately the movement mid-point (Fig. 3). These results were consistent across subjects and across trials within each subject. Thus, the sonomyography-based movement trajectories derived from the users’ forearm muscles during wrist flexion/extension were consistent with behavior previously documented for coordinated multi-joint movements of the upper limb. This is novel since we report the existence of the minimum jerk trajectories using only internal measurement of muscle activation (sonomyography), rather than kinematic or external tracking of the effector (e.g., an accelerometer on the arm).

Characterizing movement quality in control tasks

The virtual cursor control task is often used to evaluate the performance of human machine interfaces70,71,72,73,74,75. Traditionally, surface electromyography has been used to decode motor intent and drive the cursor during a virtual target achievement control task76,77,78,79,80. Various algorithms have been used to generate the control signal, including pattern recognition and linear regression, and the derived signal can be used to control either the velocity or the position of the cursor. The performance of the control paradigm is characterized using standard metrics such as movement time, path length, path efficiency, completion rate, accuracy, and precision81. However, these metrics describe user performance without characterizing the time course of the signal, i.e., the evolution of the signal with respect to time. Therefore, with these standard metrics it is not possible to evaluate the movement quality in terms of its similarity to naturalistic human movements.

The minimum jerk trajectory during human movement

Hogan82 proposed that the central nervous system reflects the minimum jerk trajectory during the path-planning stage when a target is pre-selected. Others56,64,83,84,85,86,87 have shown that similar behavior is displayed during a variety of tasks such as arm reaching55,56,57, catching58, drawing movements59, vertical arm movements60, head movements61, saccadic eye movements62, chewing63, and several other motor skills64. Highly coordinated multi-joint upper limb movement has been shown to exhibit minimum jerk trajectories55,56,60,88, but to the best of our knowledge, these results have not been shown with respect to movement trajectories derived directly from imaging the musculature controlling a single joint. That is, the majority of the previous studies have documented the minimum jerk trajectory by tracking the kinematics of the moving body part, but not shown this to hold true when the control was derived using an internal measurement like ultrasound imaging of forearm muscles. Similar to the prior work described above, here we demonstrated that virtual end-point trajectories derived from sonomyography also follow a minimum jerk trajectory.

Typical point to point movement trajectories show a scaling of peak velocities and time to acquire targets based on target distance89,90. Neurologically healthy subjects follow typical movement trajectories based on distance to the target, and have very minimal abrupt changes due to error correction as they move closer to the target. During point to point reaching tasks, as the movement amplitude increases, the peak velocity (height of the bell-shaped velocity curve) increases proportionally. We have shown this behavior in the sonomyography trajectories as well (Fig. 3b). In prior work54 we have demonstrated that sonomyographic signals are proportional to motion completion level and here we offer evidence that the minimum jerk trajectory is followed at the single joint level. These two results suggest that it may be possible to use sonomyography to investigate how other motor control policies based on muscle activation apply at the single joint level. For example, sonomyography could also be used to investigate the properties of motor control at the single joint level in individuals with a limb deficiency or movement disorders.
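For reference, the scaling of peak velocity with movement amplitude follows directly from the ideal minimum jerk profile. The short derivation below is the standard result for a point-to-point movement of amplitude \(D\) and duration \(T\) that starts and ends at rest; the symbols \(D\), \(T\), \(x_0\) and \(\tau\) are introduced here for illustration only.

\[ x(t) = x_0 + D\left(10\tau^3 - 15\tau^4 + 6\tau^5\right), \qquad \tau = t/T \]

\[ \dot{x}(t) = \frac{D}{T}\left(30\tau^2 - 60\tau^3 + 30\tau^4\right) = \frac{30D}{T}\,\tau^2(1-\tau)^2 \]

\[ \dot{x}_{\max} = \dot{x}(T/2) = \frac{30D}{T}\cdot\frac{1}{16} = \frac{15}{8}\,\frac{D}{T} = 1.875\,\frac{D}{T} \]

Under this idealized model the velocity profile is bell-shaped and symmetric, the peak occurs at \(t = T/2\), and the peak velocity is proportional to \(D\) for a fixed \(T\). When \(T\) itself grows with distance, the peak occurs later and the peak velocity grows more slowly than \(D\).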

Common motor control principles have been found to hold for various tasks under many different environmental and task performance conditions55,56,57,58,59,60,61,62,63,64,91. However, there is some debate over whether the typical motor behaviors are the result of internal properties of the motor system or they are simply the result of responses to visual and perceptual information. For certain periodic bimanual tasks, it has been argued that the motor system is subordinate to the visual/perceptual constraints while performing visuospatial motor tasks92. In the current work, users had complete access to visual feedback, and we did not perturb the visual representation of the task in real-time during the task. However, we have tested the effect of removing visual feedback of the cursor on task performance (errors, time to target, etc)93. In future work, we plan to test the effect of removing visual feedback on the dynamics of the performance reported here.

Applications in rehabilitation engineering

Our results demonstrate that it is possible to achieve naturalistic control using control signals derived from muscle. Sonomyography is an emerging modality that is being used for controlling upper and lower limb prosthetic devices94, but can also be used to control biomechatronic devices such as exoskeletons95,96,97 and prosthetic hands18,54,93. The results presented in this work have direct relevance to designing control strategies for such devices that reflect the natural movements of the human body. Surface electromyography has been the predominant method of decoding motor intent in persons with movement disabilities using electrical signals from the surface of the skin26. This has proved to be a valuable tool, but it faces some challenges in terms of low signal to noise ratio and specificity due to the measurements being made at the surface level. In contrast, techniques based on ultrasound imaging track the spatiotemporal patterns of deep lying musculature, giving access to information beyond surface level measurements.

Other applications of positional control

Sonomyography, as well as other modalities that enable robust positional control, could also be used to closely examine feedforward and feedback control mechanisms during grasping movements. These mechanisms are efficiently combined during grasping tasks98,99,100,101,102,103. The action usually starts with feedforward control until some form of haptic feedback is available from the environment. When such haptic feedback is available, there is a trade-off between energy efficiency and slip prevention that allows the user to maintain a force that is higher than the minimum necessary force for just grasping the object104. Users often exhibit higher grip force while using only a feedforward control strategy in the absence of visual feedback105,106. Prosthetic users rely heavily on feedforward mechanisms107 as visual information is often the only type of feedback available to them. These control paradigms could be further studied using sonomyography, by comparing muscle deformation following onset of movement but before object interaction, with muscle deformation after object interaction has taken place. The magnitude and timing of these changes could be studied alongside electrical measurements preceding the muscle activation, to further inform our understanding of how the motor system directs object manipulation in the real world. The current results show that it is possible to study the time course of such interactions and characterize how the movement quality is affected after the onset of a movement disorder or sensory loss. Several studies have shown that adaptation to errors induced by external forces is very natural for able bodied individuals108,109,110,111,112, but this mechanism is affected in persons with neuromuscular disorders113,114,115,116,117. Sonomyography could be another tool to study motor learning and adaptation by characterizing the time course of muscle movement as a reaction to external forces during certain tasks.

In our current study, the sonomyographic control signals did not directly track individual muscle boundaries or landmarks in the musculature. We aim to develop more advanced signal extraction techniques that will track individual muscle compartments in the future. In addition, the current studies were conducted on able bodied subjects. It would be very informative to investigate how these results compare to the movement trajectories exhibited by persons with limb deficiency as well as other neuromusculoskeletal impairments under the same protocol. In this work, although we placed the ultrasound transducer in an approximately similar spot for all participants as described in the methods section, we did not standardize the probe placement perfectly. Since our signal extraction algorithm is trained separately for every user, this was not a major problem, but if we move towards tracking physical muscle/bone landmarks then we will also need to standardize probe placement. In this study, we showed all the results using only one hand motion (wrist flexion/extension). However, in our previous work18,54 we showed that the sonomyography signal tracks motion completion level accurately for a range of hand motions. We therefore believe that similar trajectory tracking could be explored using other motions in future work. Additionally, there is a slight difference in how the targets were presented for the two experiments, in terms of the mirroring of the hand used for control. For Experiment 2, we do not believe that this mirroring had a significant effect on the results, as the sonomyography signal has been shown previously to have an average stability error of 6.51% for able bodied subjects and persons with amputation. Our current study was not powered to evaluate differences in limb dominance. Future studies could investigate the impact of limb dominance on control trajectory performance.

Conclusions

We have shown in this work that (1) sonomyography is a tool capable of investigating the time course of muscle deformation when users engage in isotonic movements, and (2) subjects demonstrate comparable planning and execution of a virtual cursor control task using a hand-held manipulandum or imaging of the forearm muscles. Movement trajectories based on isotonic activation of the limb muscles sensed through sonomyography, and those resulting from arm movement, show similar characteristics: single bell-shaped velocity profiles, scaling of peak velocity with movement distance, a shift in the time to peak velocity, and an increase in time to target with movement distance. These results have been shown previously with respect to kinematics of whole limb movements, but we have shown that the same control relationships are reflected in control signals derived at the muscle level. In the future, these findings could enable the use of sonomyography and other robust position control signals to study the extent to which motor control relationships are preserved in individuals with neuromusculoskeletal impairments, and how these relationships are affected by multisensory feedback modalities.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Birbaumer, N. Breaking the silence: Brain-computer interfaces (BCI) for communication and motor control. Psychophysiology 43, 517–532 (2006).

Zhu, M., He, T. & Lee, C. Technologies toward next generation human machine interfaces: From machine learning enhanced tactile sensing to neuromorphic sensory systems. Appl. Phys. Rev. 7, 031305 (2020).

Vuletic, T. et al. Systematic literature review of hand gestures used in human computer interaction interfaces. Int. J. Hum Comput Stud. 129, 74–94 (2019).

Hramov, A. E., Maksimenko, V. A. & Pisarchik, A. N. Physical principles of brain-computer interfaces and their applications for rehabilitation, robotics and control of human brain states. Phys. Rep. 918, 1–133 (2021).

Gu, X. et al. EEG-based brain-computer interfaces (BCIs): A survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications. IEEE/ACM Trans. Comput. Biol. Bioinf. 18, 1645–1666 (2021).

Qi, J., Jiang, G., Li, G., Sun, Y. & Tao, B. Intelligent human-computer interaction based on surface EMG gesture recognition. IEEE Access 7, 61378–61387 (2019).

Mueller, F. F. et al. Next steps for human-computer integration. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems 1–15 (2020).

Graimann, B., Allison, B. Z. & Pfurtscheller, G. Brain-Computer Interfaces: Revolutionizing Human-Computer Interaction (Springer Science & Business Media, 2010).

Zander, T. O., Brönstrup, J., Lorenz, R. & Krol, L. R. Towards BCI-based implicit control in human-computer interaction. In Advances in Physiological Computing 67–90 (Springer, 2014).

Saponas, T. S., Tan, D. S., Morris, D. & Balakrishnan, R. Demonstrating the feasibility of using forearm electromyography for muscle-computer interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems 515–524 (2008).

Asai, Y., Tateyama, S. & Nomura, T. Learning an intermittent control strategy for postural balancing using an EMG-based human-computer interface. PLoS ONE 8, e62956 (2013).

Zhu, B. et al. Face-computer interface (FCI): Intent recognition based on facial electromyography (fEMG) and online human-computer interface with audiovisual feedback. Front. Neurorobot. 15, 692562 (2021).

Hwang, H.-J., Kim, S., Choi, S. & Im, C.-H. EEG-based brain-computer interfaces: A thorough literature survey. Int. J. Hum. Comput. Interact. 29, 814–826 (2013).

Zander, T. O. et al. A dry EEG-system for scientific research and brain-computer interfaces. Front. Neurosci. 5, 53 (2011).

Miller, K. J., Hermes, D. & Staff, N. P. The current state of electrocorticography-based brain-computer interfaces. Neurosurg. Focus 49, E2 (2020).

Schalk, G. & Leuthardt, E. C. Brain-computer interfaces using electrocorticographic signals. IEEE Rev. Biomed. Eng. 4, 140–154 (2011).

Castillo, C. S. M., Wilson, S., Vaidyanathan, R. & Atashzar, S. F. Wearable MMG-plus-one armband: Evaluation of normal force on mechanomyography (MMG) to enhance human-machine interfacing. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 196–205 (2020).

Dhawan, A. S. et al. Proprioceptive sonomyographic control: A novel method for intuitive and proportional control of multiple degrees-of-freedom for individuals with upper extremity limb loss. Sci. Rep. 9, 9499 (2019).

Akhlaghi, N. et al. Real-time classification of hand motions using ultrasound imaging of forearm muscles. IEEE Trans. Biomed. Eng. 63, 1687–1698 (2016).

Sikdar, S. et al. Novel method for predicting dexterous individual finger movements by imaging muscle activity using a wearable ultrasonic system. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 69–76 (2014).

Baker, C. A., Akhlaghi, N., Rangwala, H., Kosecka, J. & Sikdar, S. Real-time, ultrasound-based control of a virtual hand by a trans-radial amputee. In Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th Annual International Conference of the 3219–3222 (IEEE, 2016).

Castellini, C., Passig, G. & Zarka, E. Using ultrasound images of the forearm to predict finger positions. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 788–797 (2012).

Ortenzi, V., Tarantino, S., Castellini, C. & Cipriani, C. Ultrasound imaging for hand prosthesis control: a comparative study of features and classification methods. In 2015 IEEE International Conference on Rehabilitation Robotics (ICORR) 1–6 (IEEE, 2015).

Yin, Z. et al. A wearable ultrasound interface for prosthetic hand control. IEEE J. Biomed. Health Inform. 26(11), 5384–5393 (2022).

Castellini, C. & Van Der Smagt, P. Surface EMG in advanced hand prosthetics. Biol. Cybern. 100, 35–47 (2009).

Farina, D. et al. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: Emerging avenues and challenges. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 797–809 (2014).

Sun, Y. et al. From sensing to control of lower limb exoskeleton: A systematic review. Annu. Rev. Control. 53, 83–96 (2022).

Berger, N. & Huppert, C. R. The use of electrical and mechanical muscular forces for the control of an electrical prosthesis. Am. J. Occup. Ther. Off. Publ. Am. Occup. Ther. Assoc. 6, 110 (1952).

Amsuess, S., Goebel, P., Graimann, B. & Farina, D. Extending mode switching to multiple degrees of freedom in hand prosthesis control is not efficient. In 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society 658–661 (IEEE, 2014).

Simon, A. M. et al. User performance with a transradial multi-articulating hand prosthesis during pattern recognition and direct control home use. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 271–281 (2022).

Bjornson, K. F., Belza, B., Kartin, D., Logsdon, R. & McLaughlin, J. F. Ambulatory physical activity performance in youth with cerebral palsy and youth who are developing typically. Phys. Ther. 87, 248–257 (2007).

Dollar, A. M. & Herr, H. Lower extremity exoskeletons and active orthoses: Challenges and state-of-the-art. IEEE Trans. Rob. 24, 144–158 (2008).

Chen, B. et al. Recent developments and challenges of lower extremity exoskeletons. J. Orthop. Transl. 5, 26–37 (2016).

Ferris, D. P. The exoskeletons are here. J. Neuroeng. Rehabil. 6, 1–3 (2009).

Gasparri, G. M., Luque, J. & Lerner, Z. F. Proportional joint-moment control for instantaneously adaptive ankle exoskeleton assistance. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 751–759 (2019).

Scheme, E. & Englehart, K. Electromyogram pattern recognition for control of powered upper-limb prostheses: State of the art and challenges for clinical use. J. Rehabil. Res. Dev. 48, 643–659 (2011).

Fougner, A., Stavdahl, Ø., Kyberd, P. J., Losier, Y. G. & Parker, P. A. Control of upper limb prostheses: Terminology and proportional myoelectric control—A review. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 663–677. https://doi.org/10.1109/TNSRE.2012.2196711 (2012).

Chu, J.-U., Moon, I. & Mun, M.-S. A real-time EMG pattern recognition system based on linear-nonlinear feature projection for a multifunction myoelectric hand. IEEE Trans. Biomed. Eng. 53, 2232–2239 (2006).

Hargrove, L. J., Scheme, E. J., Englehart, K. B. & Hudgins, B. S. Multiple binary classifications via linear discriminant analysis for improved controllability of a powered prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 18, 49–57 (2010).

Ameri, A., Akhaee, M. A., Scheme, E. & Englehart, K. Real-time, simultaneous myoelectric control using a convolutional neural network. PLoS ONE 13, e0203835 (2018).

Hahne, J. M. et al. Linear and nonlinear regression techniques for simultaneous and proportional myoelectric control. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 269–279 (2014).

Igual, C., Igual, J., Hahne, J. M. & Parra, L. C. Adaptive auto-regressive proportional myoelectric control. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 314–322 (2019).

Smith, L. H., Kuiken, T. A. & Hargrove, L. J. Use of probabilistic weights to enhance linear regression myoelectric control. J. Neural Eng. 12, 066030 (2015).

Amsuess, S., Goebel, P., Graimann, B. & Farina, D. A multi-class proportional myocontrol algorithm for upper limb prosthesis control: Validation in real-life scenarios on amputees. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 827–836 (2015).

Stango, A., Negro, F. & Farina, D. Spatial correlation of high density EMG signals provides features robust to electrode number and shift in pattern recognition for myocontrol. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 189–198 (2015).

Vujaklija, I. Novel control strategies for upper limb prosthetics. In International Conference on NeuroRehabilitation 171–174 (Springer, 2018).

Clancy, E. A., Morin, E. L. & Merletti, R. Sampling, noise-reduction and amplitude estimation issues in surface electromyography. J. Electromyogr. Kinesiol. 12, 1–16 (2002).

Fillauer, C. E., Pritham, C. H. & Fillauer, K. D. Evolution and development of the silicone suction socket (3S) for below-knee prostheses. J. Prosthet. Orthot. 1, 92–103 (1989).

Kong, Y. K., Hallbeck, M. S. & Jung, M. C. Crosstalk effect on surface electromyogram of the forearm flexors during a static grip task. J. Electromyogr. Kinesiol. 20, 1223–1229 (2010).

van Duinen, H., Gandevia, S. C. & Taylor, J. L. Voluntary activation of the different compartments of the flexor digitorum profundus. J. Neurophysiol. 104, 3213–3221 (2010).

Pasquina, P. F. et al. First-in-man demonstration of a fully implanted myoelectric sensors system to control an advanced electromechanical prosthetic hand. J. Neurosci. Methods 244, 85–93 (2015).

Weir, R. F., Troyk, P. R., DeMichele, G. A., Kerns, D. A. & Schorsch, J. F. Implantable myoelectric sensors for intramuscular EMG recording. IEEE Trans. Biomed. Eng. 56, 2009 (2009).

Kuiken, T. A. et al. Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms. JAMA 301, 619–628 (2009).

Patwardhan, S., Schofield, J., Joiner, W. M. & Sikdar, S. Sonomyography shows feasibility as a tool to quantify joint movement at the muscle level. In 2022 International Conference on Rehabilitation Robotics (ICORR) 1–5 (IEEE, 2022).

Flash, T. & Hogan, N. The coordination of arm movements: An experimentally confirmed mathematical model. J. Neurosci. 5, 1688–1703 (1985).

Morasso, P. Spatial control of arm movements. Exp. Brain Res. 42, 223–227 (1981).

Abend, W., Bizzi, E. & Morasso, P. Human arm trajectory formation. Brain J. Neurol. 105, 331–348 (1982).

Fligge, N., McIntyre, J. & van der Smagt, P. Minimum jerk for human catching movements in 3d. In 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob) 581–586 (IEEE, 2012).

Lacquaniti, F., Terzuolo, C. & Viviani, P. The law relating the kinematic and figural aspects of drawing movements. Acta Physiol. (Oxf) 54, 115–130 (1983).

Atkeson, C. G. & Hollerbach, J. M. Kinematic features of unrestrained vertical arm movements. J. Neurosci. 5, 2318–2330 (1985).

Chen, L. L., Lee, D., Fukushima, K. & Fukushima, J. Submovement composition of head movement. PLoS ONE 7, e47565 (2012).

Clark, M. & Stark, L. Time optimal behavior of human saccadic eye movement. IEEE Trans. Autom. Control 20, 345–348 (1975).

Yashiro, K., Yamauchi, T., Fujii, M. & Takada, K. Smoothness of human jaw movement during chewing. J. Dent. Res. 78, 1662–1668 (1999).

Viviani, P. & Terzuolo, C. 32 space-time invariance in learned motor skills. In Advances in psychology, vol. 1, 525–533 (Elsevier, 1980).

Shadmehr, R. & Wise, S. P. The Computational Neurobiology of Reaching and Pointing: A Foundation for Motor Learning (MIT Press, 2004).

Scheirer, C. J., Ray, W. S. & Hare, N. The analysis of ranked data derived from completely randomized factorial designs. Biometrics 32, 429–434 (1976).

Sinha, N. Non-parametric alternative of 2-way ANOVA (ScheirerRayHare). https://www.mathworks.com/matlabcentral/fileexchange/96399-non-parametric-alternative-of-2-way-anova-scheirerrayhare. MATLAB Central File Exchange, Accessed 17 July 2023.

Todorov, E. & Jordan, M. I. Smoothness maximization along a predefined path accurately predicts the speed profiles of complex arm movements. J. Neurophysiol. 80, 696–714 (1998).

Viviani, P. & Flash, T. Minimum-jerk, two-thirds power law, and isochrony: Converging approaches to movement planning. J. Exp. Psychol. Hum. Percept. Perform. 21, 32 (1995).

Daly, J. J. & Wolpaw, J. R. Brain-computer interfaces in neurological rehabilitation. Lancet Neurol. 7, 1032–1043 (2008).

Kim, S.-P. et al. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 19, 193–203 (2011).

Wolpaw, J. R., McFarland, D. J., Neat, G. W. & Forneris, C. A. An EEG-based brain-computer interface for cursor control. Electroencephalogr. Clin. Neurophysiol. 78, 252–259 (1991).

Trejo, L. J., Rosipal, R. & Matthews, B. Brain-computer interfaces for 1-d and 2-d cursor control: Designs using volitional control of the EEG spectrum or steady-state visual evoked potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 14, 225–229 (2006).

Felton, E., Radwin, R., Wilson, J. & Williams, J. Evaluation of a modified Fitts law brain-computer interface target acquisition task in able and motor disabled individuals. J. Neural Eng. 6, 056002 (2009).

Abiri, R. et al. A usability study of low-cost wireless brain-computer interface for cursor control using online linear model. IEEE Trans. Hum. Mach. Syst. 50, 287–297 (2020).

Lu, Z. & Zhou, P. Hands-free human-computer interface based on facial myoelectric pattern recognition. Front. Neurol. 10, 444 (2019).

Yin, Y. H., Fan, Y. J. & Xu, L. D. EMG and EPP-integrated human-machine interface between the paralyzed and rehabilitation exoskeleton. IEEE Trans. Inf Technol. Biomed. 16, 542–549 (2012).

Han, J.-S., Bien, Z. Z., Kim, D.-J., Lee, H.-E. & Kim, J.-S. Human-machine interface for wheelchair control with EMG and its evaluation. In Proceedings of the 25th annual international conference of the IEEE engineering in medicine and biology society (IEEE cat. no. 03ch37439), Vol. 2 1602–1605 (IEEE, 2003).

Leerskov, K., Rehman, M., Niazi, I., Cremoux, S. & Jochumsen, M. Investigating the feasibility of combining EEG and EMG for controlling a hybrid human computer interface in patients with spinal cord injury. In 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE) 403–410 (IEEE, 2020).

Rodríguez-Tapia, B., Soto, I., Martínez, D. M. & Arballo, N. C. Myoelectric interfaces and related applications: Current state of EMG signal processing-a systematic review. IEEE Access 8, 7792–7805 (2020).

Nowak, M., Vujaklija, I., Sturma, A., Castellini, C. & Farina, D. Simultaneous and proportional real-time myocontrol of up to three degrees of freedom of the wrist and hand. IEEE Trans. Biomed. Eng. 70(2), 459–469 (2022).

Hogan, N. An organizing principle for a class of voluntary movements. J. Neurosci. 4, 2745–2754 (1984).

Lacquaniti, F. & Soechting, J. F. Coordination of arm and wrist motion during a reaching task. J. Neurosci. 2, 399–408 (1982).

Morasso, P. & Ivaldi, F. M. Trajectory formation and handwriting: A computational model. Biol. Cybern. 45, 131–142 (1982).

Morasso, P., Ivaldi, F. M. & Ruggiero, C. How a discontinuous mechanism can produce continuous patterns in trajectory formation and handwriting. Acta Physiol. (Oxf) 54, 83–98 (1983).

Morasso, P. & Tagliasco, V. Analysis of human movements: Spatial localisation with multiple perspective views. Med. Biol. Eng. Comput. 21, 74–82 (1983).

Prablanc, C., Echallier, J., Komilis, E. & Jeannerod, M. Optimal response of eye and hand motor systems in pointing at a visual target. Biol. Cybern. 35, 113–124 (1979).

Georgopoulos, A. P., Kalaska, J. F. & Massey, J. T. Spatial trajectories and reaction times of aimed movements: Effects of practice, uncertainty, and change in target location. J. Neurophysiol. 46, 725–743 (1981).

Messier, J. & Kalaska, J. F. Comparison of variability of initial kinematics and endpoints of reaching movements. Exp. Brain Res. 125, 139–152 (1999).

Soechting, J. F. Effect of target size on spatial and temporal characteristics of a pointing movement in man. Exp. Brain Res. 54, 121–132 (1984).

Kerr, R. Diving, adaptation, and Fitts law. J. Mot. Behav. 10, 255–260 (1978).

Mechsner, F., Kerzel, D., Knoblich, G. & Prinz, W. Perceptual basis of bimanual coordination. Nature 414, 69–73 (2001).

Patwardhan, S. et al. Evaluation of the role of proprioception during proportional position control using sonomyography: Applications in prosthetic control. In 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR) 830–836 (IEEE, 2019).

Engdahl, S. M., Acuña, S. A., King, E. L., Bashatah, A. & Sikdar, S. First demonstration of functional task performance using a sonomyographic prosthesis: A case study. Front. Bioeng. Biotechnol. 10, 876836 (2022).

Bulea, T. C., Sharma, N., Sikdar, S. & Su, H. Next generation user-adaptive wearable robots. Front. Robot. AI 9, 920655 (2022).

Zhang, Q. et al. Evaluation of a fused sonomyography and electromyography-based control on a cable-driven ankle exoskeleton. IEEE Trans. Robot. https://doi.org/10.1109/TRO.2023.3236958 (2023).

Xue, X. et al. Development of a wearable ultrasound transducer for sensing muscle activities in assistive robotics applications. Biosensors 13, 134 (2023).

Johansson, R. S. & Flanagan, J. R. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 10, 345–359 (2009).

Flanagan, J. R. & Beltzner, M. A. Independence of perceptual and sensorimotor predictions in the size-weight illusion. Nat. Neurosci. 3, 737–741 (2000).

Westling, G. & Johansson, R. S. Factors influencing the force control during precision grip. Exp. Brain Res. 53, 277–284 (1984).

Graziano, M. S. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc. Natl. Acad. Sci. 96, 10418–10421 (1999).

Sober, S. J. & Sabes, P. N. Flexible strategies for sensory integration during motor planning. Nat. Neurosci. 8, 490–497 (2005).

Štrbac, M. et al. Short- and long-term learning of feedforward control of a myoelectric prosthesis with sensory feedback by amputees. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 2133–2145 (2017).

Johansson, R. S. & Westling, G. Roles of glabrous skin receptors and sensorimotor memory in automatic control of precision grip when lifting rougher or more slippery objects. Exp. Brain Res. 56, 550–564 (1984).

Bagesteiro, L. B. & Sainburg, R. L. Handedness: Dominant arm advantages in control of limb dynamics. J. Neurophysiol. 88, 2408–2421 (2002).

Patwardhan, S. et al. Sonomyography combined with vibrotactile feedback enables precise target acquisition without visual feedback. In 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) 4955–4958 (IEEE, 2020).

Dromerick, A. W., Schabowsky, C. N., Holley, R. J. & Monroe, B. Feedforward control strategies of subjects with transradial amputation in planar reaching. J. Rehabil. Res. Dev. 47, 201 (2010).

Melendez-Calderon, A., Masia, L., Gassert, R., Sandini, G. & Burdet, E. Force field adaptation can be learned using vision in the absence of proprioceptive error. IEEE Trans. Neural Syst. Rehabil. Eng. 19, 298–306 (2011).

Shadmehr, R. & Mussa-Ivaldi, F. A. Adaptive representation of dynamics during learning of a motor task. J. Neurosci. 14, 3208–3224 (1994).

Lackner, J. R. & Dizio, P. Rapid adaptation to Coriolis force perturbations of arm trajectory. J. Neurophysiol. 72, 299–313 (1994).

Kawato, M. & Gomi, H. A computational model of four regions of the cerebellum based on feedback-error learning. Biol. Cybern. 68, 95–103 (1992).

Karniel, A. Open questions in computational motor control. J. Integr. Neurosci. 10, 385–411 (2011).

Kitago, T. & Krakauer, J. W. Motor learning principles for neurorehabilitation. Handb. Clin. Neurol. 110, 93–103 (2013).

Shadmehr, R. & Krakauer, J. W. A computational neuroanatomy for motor control. Exp. Brain Res. 185, 359–381 (2008).

Nieuwboer, A., Rochester, L., Müncks, L. & Swinnen, S. P. Motor learning in Parkinson’s disease: Limitations and potential for rehabilitation. Parkinsonism Relat. Disord. 15, S53–S58 (2009).

Patton, J. L., Stoykov, M. E., Kovic, M. & Mussa-Ivaldi, F. A. Evaluation of robotic training forces that either enhance or reduce error in chronic hemiparetic stroke survivors. Exp. Brain Res. 168, 368–383 (2006).

Johnson, R. E., Kording, K. P., Hargrove, L. J. & Sensinger, J. W. Adaptation to random and systematic errors: Comparison of amputee and non-amputee control interfaces with varying levels of process noise. PLoS ONE 12, e0170473 (2017).

Acknowledgements

This work was supported by the National Science Foundation under Award No. 1646204, by the Department of Defense under Award No. W81XWH-16-1-0722, and by the National Institutes of Health under Award No. U01EB027601.

Author information

Contributions

S.P., W.M.J., and S.S. designed the experiments. K.A.G. created the data collection interface for Experiment 1. S.P. collected and analyzed the data. S.P., J.S.S., W.M.J., and S.S. interpreted the results. S.P. drafted the manuscript. B.S.L. reviewed and revised the statistical analyses. S.P., J.S.S., W.M.J., and S.S. revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Patwardhan, S., Gladhill, K.A., Joiner, W.M. et al. Using principles of motor control to analyze performance of human machine interfaces. Sci Rep 13, 13273 (2023). https://doi.org/10.1038/s41598-023-40446-5

DOI: https://doi.org/10.1038/s41598-023-40446-5
