Abstract

Wave-based analog computing has recently emerged as a promising computing paradigm owing to its potential for high computational efficiency and minimal crosstalk. Although low-frequency acoustic analog computing systems exist, their bulky size makes them difficult to integrate into chips compatible with complementary metal-oxide-semiconductor (CMOS) technology. This paper addresses this issue by introducing a compact analog computing system (ACS) that leverages the interactions between ultrasonic waves and metasurfaces to solve ordinary and partial differential equations. Wave propagation simulations conducted in MATLAB demonstrate that the ACS solves such differential equations with high accuracy. The proposed device could strengthen the prospects of wave-based analog computing systems as the supercomputers of tomorrow.


Introduction

A plethora of electronic and mechanical analog computers have been developed over the past two millennia to solve mathematical equations and perform mathematical operations efficiently1,2,3, but they were later superseded by digital computers2,3. With recent advances in metamaterials, interest in analog computing has been revived, with the focus now on wave-based analog computing1,3,8. These new computing systems exploit the properties of waves and metasurfaces to solve equations and perform mathematical operations, meeting the need for ever-greater computational efficiency and capacity6,7 at a time when further scaling of digital computers looks increasingly difficult as Moore’s law approaches its physical limits2,4,5.

Owing to their powerful parallel processing, high computational efficiency, and minimal crosstalk, wave-based analog computing systems have been hailed as a potential future of computing1,8,9. The pioneering work of Silva et al.10 on computational metamaterials set the stage for subsequent research into analog computing systems that perform mathematical operations and solve equations1,2,3,4,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,37, a subset of which use the Fourier transform (FT) to do so3,6,9,10,26,27,28,37. More recently, Zangeneh-Nejad et al. provided a comprehensive review of the field as a whole1.

In acoustics, Zuo et al. designed and tested an acoustic analog computing system based on labyrinthine metasurfaces to solve \(n\)th-order inhomogeneous ordinary differential equations9. Many other acoustic analog computing systems have been studied, but all of them operate in the kilohertz (kHz) frequency range3,9,26,27,28. Even with thin planar metasurfaces, analog computing at such low frequencies (long wavelengths) requires a physically bulky system. In this paper, we propose a solution to this problem: a compact ultrasonic analog computing system (ACS) operating in the gigahertz (GHz) range. Because GHz ultrasonic waves have much shorter wavelengths, the proposed ACS is far less bulky and can therefore be integrated more readily into CMOS-compatible chips.

This paper is organized as follows. First, we present the ACS’s architecture and working principle. Next, we demonstrate the ability of the ACS to solve differential equations, including an error analysis and an accuracy optimization analysis for each type of differential equation and each type of function. We then discuss the study’s key findings and conclusions in the wider context of wave-based analog computing. Finally, we describe our methodology in detail, including the design process for the ultrasonic metalens, the simulation of wave propagation through the ACS, and the selection of simulation parameters.

Architecture and working principle of the analog computing system (ACS)

Architecture

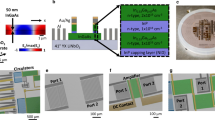

Our proposed ACS (Fig. 1a) consists of three key components: an Ultrasonic Fourier Transform (UFT) block, a spatial filtering metasurface (SFM), and a second UFT block. The pressure fields at the input and output planes of the ACS are \(P_{I} \left( {x,y} \right)\) and \(P_{O} \left( {x,y} \right)\), respectively, and the side length of the ACS’s square cross-section is \(L\).

Schematic of the ACS and the UFT block. (a) The proposed ACS, which consists of three main parts: a UFT block, an SFM, and a second UFT block. (b) The UFT block, which consists of three key components: a substrate layer, the ultrasonic metalens, and a second substrate layer.

As shown in Fig. 1b, each UFT block consists of three parts: a fused silica substrate layer, the ultrasonic metalens, and a second fused silica substrate layer. If an ultrasonic wave with input pressure field \(P\) passes through the UFT block, the output pressure field \(P_{FT}\) obtained at the other end of the block has been shown to be proportional to the Fourier transform of \(P\)37. A key condition for obtaining the UFT with this block is that the thickness of each substrate layer and the focal length of the metalens must both equal \(f\)37. The metalens thickness is \(t_{m}\), as shown in Fig. 1b.

The proposed ACS is designed to operate at \(f_{wave} = 1.7\) GHz, a high ultrasonic frequency that allows a more compact device and hence easier integration into CMOS-compatible chips. Each substrate layer has a thickness of \(f = 1.0886\) mm and is made of fused silica, which we chose for its isotropy. The speed of ultrasonic waves in fused silica is \(v_{wave} = 5880\) m s\(^{-1}\), which gives a wavelength of \(\lambda = v_{wave} /f_{wave} = 3.46\) µm.
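The wavelength arithmetic above can be checked with a few lines of Python. The airborne-sound comparison uses an assumed speed of 343 m/s and a hypothetical 3 kHz operating frequency; it only illustrates the size argument made in the Introduction and is not taken from the cited kHz systems.

```python
# Wavelength of a longitudinal ultrasonic wave: lambda = v / f.
V_FUSED_SILICA = 5880.0   # m/s, value used in the text
F_WAVE = 1.7e9            # Hz, ACS operating frequency


def wavelength(speed_m_per_s: float, freq_hz: float) -> float:
    """Return the wavelength in metres for a given wave speed and frequency."""
    return speed_m_per_s / freq_hz


lam = wavelength(V_FUSED_SILICA, F_WAVE)
print(f"lambda in fused silica = {lam * 1e6:.2f} um")

# Hypothetical comparison (not from the paper): airborne sound at an
# assumed 343 m/s and an assumed 3 kHz operating frequency.
lam_air = wavelength(343.0, 3e3)
print(f"kHz airborne lambda = {lam_air * 1e2:.1f} cm")
print(f"wavelength ratio = {lam_air / lam:.0f}")
```

Since the metasurface features and propagation distances scale with the wavelength, a ratio of this order indicates why the GHz device can be far smaller.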

As shown in Fig. 2a, the metalens consists of multiple unit cells, each with a thickness of \(t_{m} = 16\) µm and a square cross-section of side length 3 µm (a subwavelength feature, since \(\lambda \approx 3.46\) µm). Each unit cell (Fig. 2b) is a square Si cuboid with an embedded cylindrical SiO\(_{2}\) post. According to the theoretical working principle of the ACS, the ultrasonic metalens should follow a paraboloidal phase profile

such that the pressure field \(P_{FT}\) is proportional to the FT of \(P\). Because only a limited number of distinct unit cells is available, however, the phase profile must be discretized: the cylindrical post radius of each unit cell must correspond to the interpolated phase shift at that point. Interpolation transforms the ideal phase map (Fig. 2c) into the discretized phase map (Fig. 2d), which is then used for phase-to-radius mapping.
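A minimal sketch of the discretization step follows. It assumes the standard thin-lens form \(\phi(x,y) = -\pi(x^{2}+y^{2})/(\lambda f)\) for the paraboloidal profile (the sign depends on the time convention) and a hypothetical library of eight unit cells whose phases are equally spaced over \(2\pi\); the real unit-cell phases come from the unit cell simulations described in Methods and need not be uniform.

```python
import numpy as np

LAM = 5880.0 / 1.7e9   # wavelength in fused silica (m)
FOCAL = 1.0886e-3      # focal length f (m)
PITCH = 3e-6           # unit-cell side length (m)


def ideal_phase_map(n: int) -> np.ndarray:
    """Paraboloidal lens phase -pi*(x^2 + y^2)/(lambda*f), wrapped to [0, 2*pi),
    on an n x n grid of unit-cell centres (sign convention assumed)."""
    coords = (np.arange(n) - (n - 1) / 2) * PITCH
    x, y = np.meshgrid(coords, coords, indexing="xy")
    return np.mod(-np.pi * (x**2 + y**2) / (LAM * FOCAL), 2 * np.pi)


def discretize_uniform(phase: np.ndarray, levels: int) -> np.ndarray:
    """Snap each phase to the nearest of `levels` equally spaced values in
    [0, 2*pi); values just below 2*pi wrap around to 0."""
    step = 2 * np.pi / levels
    idx = np.round(phase / step).astype(int) % levels
    return idx * step


ideal = ideal_phase_map(64)
disc = discretize_uniform(ideal, 8)                   # hypothetical 8 cell types
err = np.abs(np.angle(np.exp(1j * (ideal - disc))))   # wrapped phase error
print(err.max() <= np.pi / 8 + 1e-12)
```

With equally spaced levels the wrapped phase error is bounded by half a step (\(\pi/8\) here), which is one way to see why the resulting metalens aberration is small.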

In addition, the transmission coefficient function \(T\left( {x,y} \right)\) of the SFM must correspond to the transfer function (TF) \(H\left( {k_{x} ,k_{y} } \right)\) of the particular ordinary or partial differential equation to be solved.

Working principle

Uy and Bui37 have previously shown that, under the approximations listed in Table 1, the input \(P\) and the output \(P_{FT}\) of the UFT block are related by

where \(j\) is the imaginary unit, \(\lambda\) is the wavelength, \(k\) is the wavenumber, and the operator \({\mathcal{F}}\) denotes the FT.

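Now, let \(f\left( {x,y} \right)\) and \(g\left( {x,y} \right)\) be the input and output, respectively, of a particular ODE or PDE. It can be shown that \(f\left( {x,y} \right)\) and \(g\left( {x,y} \right)\) are related by

\[ g\left( {x,y} \right) = {\mathcal{F}}^{ - 1} \left\{ {H\left( {k_{x} ,k_{y} } \right){\mathcal{F}}\left\{ {f\left( {x,y} \right)} \right\}} \right\} \qquad (3) \]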

where the operator \({\mathcal{F}}^{ - 1}\) denotes the inverse FT and \(H\left( {k_{x} ,k_{y} } \right)\) is the transfer function for a certain ODE or PDE.

At first glance, Eqs. (2) and (3) might suggest that the ACS cannot compute the correct result. First, the UFT block yields an output \(P_{FT}\) that is only proportional to, not equal to, the FT of the input \(P\). Second, the correct output is obtained by taking the inverse FT of \(H\left( {k_{x} ,k_{y} } \right){\mathcal{F}}\left\{ {f\left( {x,y} \right)} \right\}\), whereas the second UFT block computes the forward FT, not the inverse FT. Neither concern, however, prevents the ACS from yielding the desired output, because applying the forward FT twice returns a spatially inverted (mirrored) copy of the original function, up to a constant factor. In fact, the mirror image of the correct output \(g\left( {x,y} \right)\) is given by the equation

which is the additive inverse of the magnitude of the ACS’s output \(P_{O} \left( {x,y} \right)\). Therefore, to obtain the desired result \(g\left( {x,y} \right)\), we simply take the mirror image of the ACS’s output \(P_{O} \left( {x,y} \right)\) and then its additive inverse.
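The mirror-image argument can be checked with an idealized discrete model in which each UFT block is replaced by an exact forward DFT and the constant prefactors of Eq. (2) are dropped, so any overall sign is ignored. Two forward DFTs return the input reversed in index and multiplied by \(N\), the discrete counterpart of the mirror image. The function names below are illustrative only.

```python
import numpy as np


def ideal_acs_1d(f: np.ndarray, transfer: np.ndarray) -> np.ndarray:
    """Idealized 1-D ACS: forward DFT (first UFT), mask (SFM), forward DFT
    (second UFT). Physical prefactors such as 1/(j*lambda*f) are ignored."""
    return np.fft.fft(transfer * np.fft.fft(f))


def recover(output: np.ndarray) -> np.ndarray:
    """Undo the mirror image and the DFT scaling: g[n] = output[-n mod N] / N."""
    n = output.size
    return output[(-np.arange(n)) % n] / n


rng = np.random.default_rng(1)
f = rng.standard_normal(16)
H = np.exp(-np.fft.fftfreq(16) ** 2)          # arbitrary example transfer function
g_direct = np.fft.ifft(H * np.fft.fft(f))     # discrete analogue of Eq. (3)
g_acs = recover(ideal_acs_1d(f, H))
print(np.allclose(g_acs, g_direct))
```

The check works because `np.fft.fft(X)[n]` equals `N * np.fft.ifft(X)[-n mod N]`, so reading the output backwards turns a second forward transform into an inverse one.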

Note also that the amplitude of the transmission coefficient cannot exceed 1. If \(H\left( {k_{x} ,k_{y} } \right)\) satisfies this condition, it can serve directly as the transmission coefficient function. Not all transfer functions satisfy it, however; in such cases, the transfer function must be normalized so that it does. The ACS then yields a scaled version of \(g\left( {x,y} \right)\) rather than \(g\left( {x,y} \right)\) itself, and the desired result can be recovered by rescaling the output accordingly.
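One simple normalization, assumed here rather than taken from the paper, divides the sampled transfer function by its peak magnitude whenever that peak exceeds 1; the same factor then rescales the output. The example TF belongs to the hypothetical ODE \(g'' + g' + g = f\), whose peak magnitude \(2/\sqrt{3}\) exceeds 1.

```python
import numpy as np


def normalize_transfer(h: np.ndarray) -> tuple[np.ndarray, float]:
    """Scale a sampled transfer function so that |T| <= 1.
    Returns the transmission array T and the scale factor s with T = H / s."""
    peak = float(np.max(np.abs(h)))
    scale = peak if peak > 1.0 else 1.0   # leave H unchanged if already passive
    return h / scale, scale


k = np.linspace(-3, 3, 601)
H = 1.0 / (1.0 - k**2 + 1j * k)           # example TF with peak |H| > 1
T, s = normalize_transfer(H)
# With T in place of H, the ACS produces g / s; multiplying by s recovers g.
print(round(s, 3), np.max(np.abs(T)))
```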

Results

A space-limited function has non-zero values only within a finite region of the space domain, whereas a bandlimited function has a finite spectral width that contains all of its non-zero spatial frequency components. No function other than the zero function can be both space-limited and bandlimited38. Furthermore, functions that are space-limited but not bandlimited, such as the rect function, are not of particular interest for solving differential equations. We therefore consider two kinds of functions: (1) bandlimited but not space-limited and (2) neither space-limited nor bandlimited.

In the wave propagation simulations, we used Sinc and Gaussian functions as archetypal examples of each kind. The Sinc functions take the form \(f\left( x \right) = {\text{sinc}}\left( {x/w} \right)\), where the parameter \(w \in {\mathbb{R}}^{ + }\) indicates the function’s geometric spread. The Gaussian functions take the form \(f\left( x \right) = \exp \left( { - \pi x^{2} /\gamma^{2} } \right)\), where the parameter \(\gamma \in {\mathbb{R}}^{ + }\) likewise measures the function’s geometric spread. We used the root-mean-square error (RMSE) after normalization as the metric for the accuracy of the ACS’s output relative to the analytical solution.
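The test functions and the error metric can be sketched as follows. Two details are assumptions: sinc is taken as the normalized \(\sin(\pi u)/(\pi u)\) (NumPy's `np.sinc`), and "RMSE after normalization" is read as the RMSE between magnitude profiles that have each been scaled to unit peak.

```python
import numpy as np


def sinc_fn(x: np.ndarray, w: float) -> np.ndarray:
    """sinc(x / w), using the normalized convention sin(pi u) / (pi u) (assumed)."""
    return np.sinc(x / w)


def gaussian_fn(x: np.ndarray, gamma: float) -> np.ndarray:
    """exp(-pi x^2 / gamma^2), as defined in the text."""
    return np.exp(-np.pi * x**2 / gamma**2)


def normalized_rmse(simulated: np.ndarray, analytical: np.ndarray) -> float:
    """RMSE after scaling each magnitude profile to a peak of 1 (assumed
    normalization)."""
    a = np.abs(simulated) / np.max(np.abs(simulated))
    b = np.abs(analytical) / np.max(np.abs(analytical))
    return float(np.sqrt(np.mean((a - b) ** 2)))


x = np.arange(-256, 256, dtype=float)   # hypothetical sample grid
g = gaussian_fn(x, 48.0)
print(normalized_rmse(3.0 * g, g))      # approximately 0: rescaling is not penalized
print(normalized_rmse(sinc_fn(x, 18.0), g))
```

Normalizing before comparing makes the metric insensitive to the overall scale factors discussed in the Working principle section.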

Ordinary differential equations (ODE)

Mathematical basis

Generalizing the derivative property of the FT39,

Consider a general \(n\)th-order inhomogeneous ODE

Taking the FT of both sides of Eq. (6), we obtain

from which we can deduce that

Note that Eq. (8) can be re-expressed as

By definition, the spatial frequencies are \(k_{x} = - 2\pi x/(\lambda f)\) and \(k_{y} = - 2\pi y/(\lambda f)\), so \(dk_{x} = - \left( {2\pi /(\lambda f)} \right)dx\) and \(dk_{y} = - \left( {2\pi /(\lambda f)} \right)dy\). Using these substitutions and then replacing \(x\) with \(- x\) and \(y\) with \(- y\), we obtain

It is apparent from Eq. (10) that \(f\left( x \right)\) is the input and

is the transfer function needed to solve nth-order inhomogeneous ODEs of the form given in Eq. (6)9.
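As a numerical check of the transfer-function approach, the sketch below assumes that Eq. (6) has the form \(\sum_{n} a_{n}\, d^{n}g/dx^{n} = f(x)\), so that \(H(k_x) = 1/\sum_{n} a_{n} (jk_x)^{n}\) under NumPy's FFT convention (forward transform with \(e^{-jk_x x}\)). The paper's own sign convention and the \(x \to -x\) substitution in Eq. (10) may flip the sign of \(k_x\). The coefficients \(a_0 = a_1 = a_2 = 1\) are hypothetical and are not the ODE of Eq. (12).

```python
import numpy as np


def ode_transfer(k: np.ndarray, coeffs: list[float]) -> np.ndarray:
    """H(k) = 1 / sum_n a_n (j k)^n for sum_n a_n g^(n)(x) = f(x) (assumed form)."""
    return 1.0 / sum(a * (1j * k) ** n for n, a in enumerate(coeffs))


def solve_ode_spectral(f: np.ndarray, dx: float, coeffs: list[float]) -> np.ndarray:
    """g = F^{-1}{H F{f}} on a periodic grid (valid when f and g decay to ~0)."""
    k = 2 * np.pi * np.fft.fftfreq(f.size, d=dx)   # angular spatial frequencies
    return np.fft.ifft(ode_transfer(k, coeffs) * np.fft.fft(f)).real


# Example: g'' + g' + g = f with a Gaussian solution g = exp(-pi x^2 / gamma^2).
gamma = 4.0
x = np.linspace(-20, 20, 512, endpoint=False)
g = np.exp(-np.pi * x**2 / gamma**2)
dg = -2 * np.pi * x / gamma**2 * g
d2g = ((2 * np.pi * x / gamma**2) ** 2 - 2 * np.pi / gamma**2) * g
f = d2g + dg + g                                   # forcing term built analytically
g_num = solve_ode_spectral(f, x[1] - x[0], [1.0, 1.0, 1.0])
print(np.max(np.abs(g_num - g)))
```

Building \(f\) from a known \(g\) and then recovering \(g\) isolates the transfer-function step from the physical effects (aberration, paraxial error, sampling) that the full wave simulations include.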

Simulation results

In the simulations, we used the ODE

For the first type of function, the solution \(g\left( x \right)\) is a Sinc function with \(w = 18\). The simulated RMSE after normalization was 0.00737. Figure 3a shows that the analytical solution and the ACS’s output are in excellent agreement, especially near the center. The minor discrepancies towards the edges are expected, because the FT is obtained only in the paraxial region (see the approximations listed in Table 1). Metalens aberration arising from discretization of the metalens phase profile also contributes, albeit minimally, to the deviations. In addition, there is some undersampling due to truncation of the input function \(f\left( x \right)\), as well as aliasing arising from bandlimiting of the output function \(g\left( x \right)\).

Ordinary Differential Equation (ODE) Simulations. (a) Sinc function: Input (left) and output (right) magnitude profiles for \(w = 18\). The blue line with circled data points indicates the simulated output of the ACS, whereas the orange line shows the analytical solution. (b) Gaussian function: Input (left) and output (right) magnitude profiles for \(\gamma = 48\). The blue line with circled data points indicates the simulated output of the ACS, whereas the orange line shows the analytical solution. (c) Relationship between the RMSE and the parameter \(w\) for the Sinc function. (d) Relationship between the RMSE and the parameter \(\gamma\) for the Gaussian function.

The simulation for the second type of function used a Gaussian function with \(\gamma = 48\) as the solution \(g\left( x \right)\). In this case, the RMSE after normalization was 0.00975. Figure 3b shows that the ACS’s output and the analytical solution agree very well. There are small lobes towards the edges, caused largely by metalens aberration and by the paraxial approximation not being satisfied there. Undersampling of the input and bandlimiting of the output also introduce minor errors. Nonetheless, the output matches the analytical solution very well.

Optimization of accuracy

Figures 3c and 3d show the relationship between the RMSE and the geometric spread parameters \(w\) and \(\gamma\), respectively, for solving ODEs. The RMSE first decreases and then increases as \(w\) or \(\gamma\) increases. This two-part trend is caused mainly by two factors: (1) the level of aliasing and undersampling and (2) the validity of the paraxial approximations.

First, as \(w\) or \(\gamma\) initially increases, aliasing in the output plane of the first UFT block and undersampling in the input plane of the second UFT block decrease, so the RMSE falls. Beyond a certain threshold, however, further increases in \(w\) or \(\gamma\) increase undersampling in the input plane of the first UFT block and aliasing in the output plane of the second UFT block, driving the observed rise in the RMSE.

Second, the paraxial approximations first become more valid and then less valid as \(w\) or \(\gamma\) increases. As \(w\) or \(\gamma\) initially increases, the energy becomes less highly concentrated near the center of the first UFT block’s input plane and less extensively spread out in its output plane; it also becomes less extensively spread out in the second UFT block’s input plane and less highly concentrated near the center of its output plane. As \(w\) or \(\gamma\) increases further, the energy becomes extensively spread out in the first UFT block’s input plane and highly concentrated near the center of its output plane, while it becomes highly concentrated near the center of the second UFT block’s input plane and extensively spread out in its output plane.

Therefore, to optimize accuracy, the input function \(f\left( x \right)\) can be scaled along \(x\) so that the geometric spread parameter \(w\) or \(\gamma\) takes a moderate value. The ACS’s output \(P_{O} \left( {x,y} \right)\) can then be rescaled accordingly to obtain the desired solution \(g\left( x \right)\).
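A short derivation shows what this rescaling implies for the transfer function, assuming a generic ODE form \(\sum_{n} a_{n}\, g^{(n)}(x) = f(x)\) and the \((jk_x)^n\) derivative convention (the paper's Eq. (6) is not reproduced here). Stretch the coordinate by a factor \(s > 0\), with \(x = su\), \(\tilde{f}(u) = f(su)\), and \(\tilde{g}(u) = g(su)\):

```latex
\frac{d^{n}\tilde{g}}{du^{n}}(u) = s^{n}\, g^{(n)}(su)
\;\Longrightarrow\;
\sum_{n} a_{n}\, s^{-n}\, \frac{d^{n}\tilde{g}}{du^{n}}(u) = \tilde{f}(u),
\qquad
\tilde{H}(k_{x}) = \Bigl[\, \sum_{n} a_{n}\, s^{-n} (j k_{x})^{n} \Bigr]^{-1}
```

Under this assumption, the SFM for the rescaled problem implements \(\tilde{H}\) (coefficients \(a_{n} s^{-n}\)), and the solution in the original coordinate is recovered as \(g(x) = \tilde{g}(x/s)\). For instance, a solution \(\mathrm{sinc}(x/w)\) becomes \(\mathrm{sinc}(u/(w/s))\) in \(u\), so the choice of \(s\) sets the effective spread \(w/s\).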

Refer to Supplementary Figs. S2 to S5 for additional diagrams in support of the above explanation.

Partial differential equations (PDE)

Mathematical basis

Consider the partial differential equation

Taking the FT of both sides of Eq. (13),

which can be re-arranged to get

In its integral form, Eq. (15) can be re-written as

Substituting \(dk_{x} = - \left( {2\pi /(\lambda f)} \right)dx\) and \(dk_{y} = - \left( {2\pi /(\lambda f)} \right)dy\) and replacing \(x\) with \(- x\) and \(y\) with \(- y\) then yields

Therefore, \(f\left( {x,y} \right)\) is the input and

is the transfer function needed to solve PDEs of the specified form28.
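Because the PDE of Eq. (13) is not reproduced here, the sketch below uses a hypothetical example, the screened Poisson equation \(\nabla^{2} g - c\,g = f\) with \(c > 0\), whose transfer function is \(H(k_x,k_y) = -1/(k_x^{2} + k_y^{2} + c)\). It solves the equation with 2-D FFTs and checks the result against a known 2-D Gaussian solution.

```python
import numpy as np


def solve_screened_poisson(f: np.ndarray, dx: float, c: float) -> np.ndarray:
    """Solve lap(g) - c*g = f on a periodic square grid using
    H(kx, ky) = -1 / (kx^2 + ky^2 + c)  (hypothetical example PDE, c > 0)."""
    k = 2 * np.pi * np.fft.fftfreq(f.shape[0], d=dx)
    kx, ky = np.meshgrid(k, k, indexing="ij")
    H = -1.0 / (kx**2 + ky**2 + c)
    return np.fft.ifft2(H * np.fft.fft2(f)).real


gamma, c = 4.0, 1.0
x = np.linspace(-20, 20, 256, endpoint=False)
X, Y = np.meshgrid(x, x, indexing="ij")
r2 = X**2 + Y**2
g = np.exp(-np.pi * r2 / gamma**2)          # 2-D Gaussian solution
a = np.pi / gamma**2
lap_g = (4 * a**2 * r2 - 4 * a) * g         # analytic 2-D Laplacian of g
f = lap_g - c * g                           # forcing term built analytically
g_num = solve_screened_poisson(f, x[1] - x[0], c)
print(np.max(np.abs(g_num - g)))
```

Here \(|H| \le 1/c = 1\), so this particular TF would not need normalization before being used as a transmission coefficient.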

Simulation results

In the simulations, we used the PDE

The simulation for the first type of function used a two-dimensional Sinc function with \(w = 18\) as the solution \(g\left( x,y \right)\). The simulated RMSE after normalization was 0.00643. Figure 4a shows that the ACS’s output and the analytical solution are in excellent agreement, particularly near the center. The small deviations towards the edges can be attributed to the paraxial approximation not being satisfied there, with metalens aberration also contributing. There is also some undersampling due to truncation of the input function \(f\left( x,y \right)\), as well as aliasing arising from bandlimiting of the output function \(g\left( x,y \right)\).

Partial Differential Equation (PDE) Simulations. (a) Sinc function: Input (left) and output (right) magnitude profiles for \(w=18\). The blue line with circled data points indicates the simulated output of the ACS, whereas the orange line shows the analytical solution. (b) Gaussian function: Input (left) and output (right) magnitude profiles for \(\gamma =18\). The blue line with circled data points indicates the simulated output of the ACS, whereas the orange line shows the analytical solution. (c) Relationship between the RMSE and the parameter \(w\) for the Sinc function. (d) Relationship between the RMSE and the parameter \(\gamma\) for the Gaussian function.

For the second type of function, the solution \(g\left( x,y \right)\) is a two-dimensional Gaussian function with \(\gamma = 18\). In this case, the RMSE after normalization was 0.00266. Figure 4b shows that the ACS’s output and the analytical solution agree very well, especially in the paraxial region, for the same reason as above. Metalens aberration and the paraxial approximation not being satisfied away from the axis produce some small lobes towards the edges. In addition, undersampling of the input \(f\left( x,y \right)\) and aliasing of the output function \(g\left( x,y \right)\) contribute to the observed discrepancies. Nonetheless, the output matches the analytical solution very well wherever the magnitude is significant.

Optimization of accuracy

Figures 4c and 4d show the relationship between the RMSE and the geometric spread parameters \(w\) and \(\gamma\), respectively, for solving PDEs. As \(w\) or \(\gamma\) increases, the RMSE first falls and then rises. This trend can be ascribed largely to two key factors: (1) the level of undersampling and aliasing and (2) the validity of the paraxial approximations.

Firstly, as \(w\) or \(\gamma\) is initially increased, there is less aliasing in the first UFT block’s output plane and less undersampling in the second UFT block’s input plane, so the RMSE decreases. However, as \(w\) or \(\gamma\) continues to increase beyond a certain value, there is increased undersampling in the first UFT block’s input plane and increased aliasing in the second UFT block’s output plane, resulting in the observed increase in the RMSE.

Secondly, the paraxial approximations first become more valid and then less valid as \(w\) or \(\gamma\) increases. Initially, as \(w\) or \(\gamma\) increases, the energy becomes less highly concentrated near the center of the input plane of the first UFT block and less extensively spread out in the output plane of the first UFT block. The energy also becomes less extensively spread out in the input plane of the second UFT block and less highly concentrated near the center of the output plane of the second UFT block. As \(w\) or \(\gamma\) increases further, the energy becomes extensively spread out in the input plane of the first UFT block and highly concentrated near the center of the output plane of the first UFT block. Additionally, the energy becomes highly concentrated near the center of the input plane of the second UFT block and extensively spread out in the output plane of the second UFT block.

Thus, accuracy can be optimized by scaling the input \(f\left( {x,y} \right)\) along \(x\) and \(y\) so that the geometric spread parameter takes a moderate value. The ACS’s output \(P_{O} \left( {x,y} \right)\) can then be rescaled accordingly to obtain the desired solution.

Refer to Supplementary Figs. S6 to S9 for additional diagrams supporting the above explanation.

Conclusion

This paper introduces an analog computing system (ACS) that uses ultrasonic waves and metasurfaces to solve ordinary and partial differential equations. Our simulations demonstrate that the proposed ACS yields highly accurate results for both types of differential equations and both types of functions. Unlike previous studies, this paper also examines how the accuracy of the ACS’s output can be optimized by selecting an appropriate (moderate) value of the geometric spread parameter \(w\) or \(\gamma\).

We anticipate that these findings will advance the development of wave-based analog computing systems that could overcome some of the constraints of digital computers. This could have far-reaching implications for computing and signal processing and may lay the foundation for future technological breakthroughs.

Methods

Ultrasonic metalens designing process

The process of designing the ultrasonic metalens is detailed below. Refer to Supplementary Fig. S1 for a flowchart summarizing the method.

First, unit cell simulations are conducted to establish the relationship between the radius of the cylindrical post and the phase shift of the corresponding unit cell. This relationship (the phase-to-radius mapping) serves as a reference for the subsequent steps. Next, the Ideal Phase Map is generated: an array of phase values at the sampled points, following the paraboloidal phase profile

required to achieve \(P_{FT} \propto {\mathcal{F}}\left\{ P \right\}\) in theory37. The MATLAB function interp1 is then used to interpolate the nearest available phase value from the unit cell simulations, which yields the Discretized Phase Map, whose phase values each have a corresponding radius from the unit cell simulations. Next, the phase-to-radius mapping is used to create the Radius Map, an array of radius values at each sampled point. Finally, the MATLAB function viscircles is used to draw the metalens, comprising unit cells whose cylindrical posts have the radii given by the Radius Map.
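The lookup steps can be mirrored in Python as a rough sketch. The unit-cell table below is a hypothetical placeholder (the real radius-phase pairs come from the unit cell simulations), and nearest matching is done on the circle so that phases near \(2\pi\) map to phases near 0, a wrap-aware variant of MATLAB's `interp1` with the `'nearest'` method. Drawing the layout, as `viscircles` does, is not reproduced.

```python
import numpy as np

# Hypothetical unit-cell library (placeholder numbers, NOT the paper's data):
# post radius in micrometres and the simulated transmission phase in radians.
RADII_UM = np.array([0.3, 0.5, 0.7, 0.9, 1.1, 1.3])
PHASES = np.array([0.0, 1.0, 2.1, 3.2, 4.3, 5.4])


def nearest_index(phase_map: np.ndarray) -> np.ndarray:
    """Index of the library entry whose phase is nearest on the circle."""
    diff = np.angle(np.exp(1j * (phase_map[..., None] - PHASES)))
    return np.argmin(np.abs(diff), axis=-1)


def design_maps(ideal_phase: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (Discretized Phase Map, Radius Map) for an ideal phase map."""
    idx = nearest_index(np.mod(ideal_phase, 2 * np.pi))
    return PHASES[idx], RADII_UM[idx]


ideal = np.array([[0.2, 6.2], [2.5, 4.0]])
disc, radius = design_maps(ideal)
print(disc)
print(radius)
```

In this toy example, 6.2 rad is assigned to the 0 rad cell rather than the 5.4 rad cell, because the two phases differ by less than 0.1 rad modulo \(2\pi\).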

Semi-analytical wave propagation simulations

Using the exact solutions of the Kirchhoff-Helmholtz integral (that is, before applying the Fresnel and paraxial approximations listed in Table 1), we conducted semi-analytical simulations of ultrasonic wave propagation through the proposed ACS. The output of each simulation was then compared with the analytical solution.

We implemented these simulations in MATLAB because this approach is far less computationally costly than Finite Element Method (FEM) software such as COMSOL Multiphysics. It allowed us to simulate significantly larger arrays, which is key to understanding the true capabilities of the proposed ACS.

Propagation within each UFT block

Using FFT-based convolution, we obtain the pressure field \(\overline{P}_{M - } \left( {x,y} \right)\) at the plane just before the ultrasonic metalens. This involves convolving the zero-padded input pressure field array \(\overline{P}_{S} \left( {\xi ,\eta } \right)\) with the convolution kernel

To avoid circular convolution errors, the \(N \times N\) array \(\overline{P}_{S} \left( {\xi ,\eta } \right)\) must be padded with at least \(N - 1\) zeros along each axis37,43. By convention, the convolution kernel array and the zero-padded pressure field array have the same size37,43. The \(N \times N\) subarray at the center of the larger array produced by FFT-based convolution is \(\overline{P}_{M - } \left( {x,y} \right)\).
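A sketch of zero-padded FFT convolution in NumPy follows, assuming the kernel of Eq. (21) has already been sampled at the offsets \(-(N-1)\Delta, \dots, (N-1)\Delta\) along each axis, giving a \((2N-1)\times(2N-1)\) array. The kernel itself is not reproduced here, so a random array stands in for it in the check. Placing the field in the first \(N\times N\) block of the padded array is an implementation choice: with this layout the wanted samples also come out in the first \(N\times N\) block, whereas the convention described above extracts a central subarray.

```python
import numpy as np


def fft_linear_convolve(field: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Linear (non-circular) convolution of an N x N field with a kernel sampled
    at offsets -(N-1)..(N-1) per axis, i.e. a (2N-1) x (2N-1) array whose
    centre element is the zero-offset sample. Returns the N x N result."""
    n = field.shape[0]
    m = 2 * n - 1                                  # N - 1 zeros of padding per axis
    if field.shape != (n, n) or kernel.shape != (m, m):
        raise ValueError("expected an N x N field and a (2N-1) x (2N-1) kernel")
    padded = np.zeros((m, m), dtype=complex)
    padded[:n, :n] = field
    k0 = np.fft.ifftshift(kernel)                  # zero-offset sample to index [0, 0]
    full = np.fft.ifft2(np.fft.fft2(padded) * np.fft.fft2(k0))
    # For a, b < N: full[a, b] = sum_{p,q} field[p, q] * kernel_at_offset(a-p, b-q).
    return full[:n, :n]


# Check against a direct double sum on a small random example.
rng = np.random.default_rng(0)
n = 5
field = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
kernel = rng.standard_normal((2 * n - 1, 2 * n - 1))
direct = np.array([[sum(field[p, q] * kernel[a - p + n - 1, b - q + n - 1]
                        for p in range(n) for q in range(n))
                    for b in range(n)] for a in range(n)])
print(np.allclose(fft_linear_convolve(field, kernel), direct))
```

Without the padding, offsets larger than \(N-1\) would wrap around and contaminate the result, which is the circular convolution error mentioned above.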

Subsequently, we apply the phase shift due to the discretized metalens to obtain \(N \times N\) array \(\overline{P}_{M + } \left( {x,y} \right)\), which represents the pressure field at the plane after the metalens. This can be done by performing an element-wise multiplication of the \(N \times N\) array \(\overline{P}_{M - } \left( {x,y} \right)\) and the \(N \times N\) array \(\exp \left( {i\overline{\phi }_{discretized} } \right)\). Note that \(\overline{\phi }_{discretized}\) is the metalens’ discretized phase profile after the interpolation step in the ultrasonic metalens designing process.

Following this, we use FFT-based convolution to convolve the zero-padded array \(\overline{P}_{M + } \left( {x,y} \right)\) with the convolution kernel

in order to obtain the \(N \times N\) output pressure field array \(\overline{P}_{O} \left( {u,v} \right)\).

The convolution kernels in Eqs. (21) and (22) were derived from exact solutions to the Kirchhoff-Helmholtz Integral37.

Propagation through the SFM

The SFM theoretically applies the transfer function (TF) needed to solve a particular ODE or PDE. To simulate ultrasonic wave propagation through the SFM, we perform element-wise multiplication of the output array of the first UFT block and the transmission coefficient array \(T\left( {x,y} \right)\) of the SFM. The result is then the input array for the second UFT block. We subsequently repeat the same process described above for the simulation of wave propagation through a UFT block, which ultimately produces the output \(P_{O} \left( {x,y} \right)\) of the proposed ACS.
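A minimal sketch of how the SFM step could be set up on the simulation grid follows. It assumes the transmission array is sampled from \(H\) through the mapping \(k_x = -2\pi x/(\lambda f)\), \(k_y = -2\pi y/(\lambda f)\) given earlier, with a 3 µm sample spacing. The transfer function used here, \(1/(1 + (k_x^2+k_y^2)/k_0^2)\) for the hypothetical PDE \(g - \nabla^2 g/k_0^2 = f\) with an arbitrary \(k_0 = 10^5\) rad/m, is illustrative only, and the first-UFT output is a placeholder.

```python
import numpy as np

LAM = 5880.0 / 1.7e9   # wavelength in fused silica (m)
FOCAL = 1.0886e-3      # focal length f (m)
DELTA = 3e-6           # sample spacing in the SFM plane (m)


def sfm_transmission(n, transfer):
    """Sample T(x, y) = H(kx, ky) on an n x n grid centred on the axis,
    using kx = -2*pi*x/(lambda*f) and ky = -2*pi*y/(lambda*f)."""
    coords = (np.arange(n) - n // 2) * DELTA
    x, y = np.meshgrid(coords, coords, indexing="xy")
    scale = -2 * np.pi / (LAM * FOCAL)
    t = transfer(scale * x, scale * y)
    if np.max(np.abs(t)) > 1 + 1e-12:
        raise ValueError("|T| must not exceed 1; normalize H first")
    return t


def example_transfer(kx, ky, k0=1e5):
    """Hypothetical TF of g - lap(g)/k0^2 = f, i.e. 1 / (1 + (kx^2 + ky^2)/k0^2)."""
    return 1.0 / (1.0 + (kx**2 + ky**2) / k0**2)


T = sfm_transmission(64, example_transfer)
first_uft_output = np.ones((64, 64), dtype=complex)   # placeholder field
second_uft_input = first_uft_output * T               # element-wise SFM step
```

The element-wise product is the whole SFM model in this picture; the resulting array is then propagated through the second UFT block exactly like the first.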

Simulation parameters

The selection of the most appropriate simulation parameters involves three key considerations. First, the convolution requires the spacing \({\Delta }\) between adjacent metalens unit cells to equal the spacing between the sampled points of the pressure fields37,43. Second, an appropriate side length \(L\) for the cross-section of the UFT block should be chosen, bearing in mind that the geometric spread parameter \(w\) or \(\gamma\) must take a moderate value so that the significant space and spatial-frequency components lie within the bounds of the sampled array (appropriate truncation and bandlimiting). Finally, the focal length \(f\) should satisfy the condition

which can be derived by considering the sampling requirements of the convolution kernels’ exponential phase term36,37,40,41,42,43,44.
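A related relation follows from the mapping \(k_x = -2\pi x/(\lambda f)\); it is sketched here as an illustration, not as a reconstruction of the condition above. For an \(N\)-point input sampled at spacing \(\Delta\), the DFT frequency step \(2\pi/(N\Delta)\) maps to an output-plane spacing \(\lambda f/(N\Delta)\), which equals \(\Delta\) when \(N = \lambda f/\Delta^{2}\). The values of \(N\) below are illustrative; the array size actually used is given in Table 2.

```python
import numpy as np

LAM = 5880.0 / 1.7e9   # wavelength in fused silica (m)
FOCAL = 1.0886e-3      # focal length f (m)
DELTA = 3e-6           # unit-cell pitch = sample spacing (m)


def output_plane_coords(kx: np.ndarray) -> np.ndarray:
    """Invert k_x = -2*pi*x / (lambda*f) to get output-plane positions x."""
    return -LAM * FOCAL * kx / (2 * np.pi)


def output_spacing(n: int) -> float:
    """Output-plane spacing of the N DFT frequencies of an N-sample input."""
    dk = 2 * np.pi / (n * DELTA)                  # DFT angular-frequency step
    return float(abs(output_plane_coords(np.array([dk]))[0]))


n_match = LAM * FOCAL / DELTA**2                  # N giving equal spacings
print(f"N for matched spacing ~ {n_match:.1f}")
print(f"output spacing at N = 418: {output_spacing(418) * 1e6:.3f} um")
```

If the input and output spacings did not match, the Fourier-plane samples would no longer line up with the SFM unit cells, which is one way to read the requirement that all arrays share the spacing \(\Delta\).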

With these considerations in mind, the values of the parameters used in the simulations are presented in Table 2.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Zangeneh-Nejad, F., Sounas, D. L., Alù, A. & Fleury, R. Analogue computing with metamaterials. Nat. Rev. Mater. 6, 207–225. https://doi.org/10.1038/s41578-020-00243-2 (2020).

Cheng, K. et al. Optical realization of wave-based analog computing with metamaterials. Appl. Sci. 11, 141. https://doi.org/10.3390/app11010141 (2020).

Zangeneh-Nejad, F. & Fleury, R. Performing mathematical operations using high-index acoustic metamaterials. New J. Phys. 20, 073001. https://doi.org/10.1088/1367-2630/aacba1 (2018).

Hwang, J., Davaji, B., Kuo, J. & Lal, A. Focusing Profiles of Planar Si-SiO2 Metamaterial GHz Frequency Ultrasonic Lens. in 2021 IEEE International Ultrasonics Symposium (IUS) 1–4 (IEEE, 2021). https://doi.org/10.1109/IUS52206.2021.9593577.

MacLennan, B. J. The promise of analog computation. Int. J. Gen Syst 43, 682–696. https://doi.org/10.1080/03081079.2014.920997 (2014).

Cordaro, A. et al. High-index dielectric metasurfaces performing mathematical operations. Nano Lett. 19, 8418–8423. https://doi.org/10.1021/acs.nanolett.9b02477 (2019).

Abdollahramezani, S., Chizari, A., Dorche, A. E., Jamali, M. V. & Salehi, J. A. Dielectric metasurfaces solve differential and integro-differential equations. Opt. Lett. 42, 1197. https://doi.org/10.1364/OL.42.001197 (2017).

Rajabalipanah, H., Momeni, A., Rahmanzadeh, M., Abdolali, A. & Fleury, R. A single metagrating metastructure for wave-based parallel analog computing. arXiv:2110.07473 [physics] (2021).

Zuo, S., Wei, Q., Tian, Y., Cheng, Y. & Liu, X. Acoustic analog computing system based on labyrinthine metasurfaces. Sci. Rep. https://doi.org/10.1038/s41598-018-27741-2 (2018).

Silva, A. et al. Performing mathematical operations with metamaterials. Science 343, 160–163. https://doi.org/10.1126/science.1242818 (2014).

Youssefi, A., Zangeneh-Nejad, F., Abdollahramezani, S. & Khavasi, A. Analog computing by Brewster effect. Opt. Lett. 41, 3467. https://doi.org/10.1364/OL.41.003467 (2016).

Barrios, G. A., Retamal, J. C., Solano, E. & Sanz, M. Analog simulator of integro-differential equations with classical memristors. Sci. Rep. https://doi.org/10.1038/s41598-019-49204-y (2019).

AbdollahRamezani, S., Arik, K., Khavasi, A. & Kavehvash, Z. Analog computing using graphene-based metalines. Opt. Lett. 40, 5239. https://doi.org/10.1364/OL.40.005239 (2015).

Sihvola, A. Enabling optical analog computing with metamaterials. Science 343, 144–145. https://doi.org/10.1126/science.1248659 (2014).

Zhou, Y. et al. Analog optical spatial differentiators based on dielectric metasurfaces. Adv. Opt. Mater. 8, 1901523. https://doi.org/10.1002/adom.201901523 (2019).

Kou, S. S. et al. On-chip photonic Fourier transform with surface plasmon polaritons. Light Sci. Appl. 5, e16034. https://doi.org/10.1038/lsa.2016.34 (2016).

Caulfield, H. J. & Dolev, S. Why future supercomputing requires optics. Nat. Photon. 4, 261–263. https://doi.org/10.1038/nphoton.2010.94 (2010).

Zhou, Y., Zheng, H., Kravchenko, I. I. & Valentine, J. Flat optics for image differentiation. Nat. Photon. 14, 316–323. https://doi.org/10.1038/s41566-020-0591-3 (2020).

Bykov, D. A., Doskolovich, L. L., Bezus, E. A. & Soifer, V. A. Optical computation of the Laplace operator using phase-shifted Bragg grating. Opt. Express 22, 25084. https://doi.org/10.1364/OE.22.025084 (2014).

Karimi, P., Khavasi, A. & Mousavi Khaleghi, S. S. Fundamental limit for gain and resolution in analog optical edge detection. Opt. Express 28, 898. https://doi.org/10.1364/OE.379492 (2020).

Lv, Z., Ding, Y. & Pei, Y. Acoustic computational metamaterials for dispersion Fourier transform in time domain. J. Appl. Phys. 127, 123101. https://doi.org/10.1063/1.5141057 (2020).

Liu, Y., Kuo, J., Abdelmejeed, M. & Lal, A. Optical measurement of ultrasonic Fourier transforms. in 2018 IEEE International Ultrasonics Symposium (IUS) 1–9. https://doi.org/10.1109/ULTSYM.2018.8579938 (2018).

Hwang, J., Kuo, J. & Lal, A. Planar GHz ultrasonic lens for Fourier ultrasonics. in 2019 IEEE International Ultrasonics Symposium (IUS) 1735–1738. https://doi.org/10.1109/ULTSYM.2019.8925662 (2019).

Hwang, J., Davaji, B., Kuo, J. & Lal, A. Planar lens for GHz Fourier ultrasonics. in 2020 IEEE International Ultrasonics Symposium (IUS) 1–4. https://doi.org/10.1109/IUS46767.2020.9251614 (2020).

Kwon, H., Sounas, D., Cordaro, A., Polman, A. & Alù, A. Nonlocal metasurfaces for optical signal processing. Phys. Rev. Lett. 121, 173004. https://doi.org/10.1103/PhysRevLett.121.173004 (2018).

Zuo, S.-Y., Wei, Q., Cheng, Y. & Liu, X.-J. Mathematical operations for acoustic signals based on layered labyrinthine metasurfaces. Appl. Phys. Lett. 110, 011904. https://doi.org/10.1063/1.4973705 (2017).

Zuo, S.-Y., Tian, Y., Wei, Q., Cheng, Y. & Liu, X.-J. Acoustic analog computing based on a reflective metasurface with decoupled modulation of phase and amplitude. J. Appl. Phys. 123, 091704. https://doi.org/10.1063/1.5004617 (2018).

Lv, Z., Liu, P., Ding, Y., Li, H. & Pei, Y. Implementing fractional Fourier transform and solving partial differential equations using acoustic computational metamaterials in space domain. Acta Mech. Sin. https://doi.org/10.1007/s10409-021-01139-2 (2021).

Mohammadi Estakhri, N., Edwards, B. & Engheta, N. Inverse-designed metastructures that solve equations. Science 363, 1333–1338. https://doi.org/10.1126/science.aaw2498 (2019).

Zhu, T. et al. Plasmonic computing of spatial differentiation. Nat. Commun. 8, 15391. https://doi.org/10.1038/ncomms15391 (2017).

Zangeneh-Nejad, F. & Fleury, R. Topological analog signal processing. Nat. Commun. 10, 2058. https://doi.org/10.1038/s41467-019-10086-3 (2019).

Abdollahramezani, S., Hemmatyar, O. & Adibi, A. Meta-optics for spatial optical analog computing. Nanophotonics 9, 4075–4095. https://doi.org/10.1515/nanoph-2020-0285 (2020).

Solli, D. R. & Jalali, B. Analog optical computing. Nat. Photon. 9, 704–706. https://doi.org/10.1038/nphoton.2015.208 (2015).

Guo, C., Xiao, M., Minkov, M., Shi, Y. & Fan, S. Photonic crystal slab Laplace operator for image differentiation. Optica 5, 251–256. https://doi.org/10.1364/OPTICA.5.000251 (2018).

Macfaden, A. J., Gordon, G. S. D. & Wilkinson, T. D. An optical Fourier transform coprocessor with direct phase determination. Sci. Rep. 7, 13667. https://doi.org/10.1038/s41598-017-13733-1 (2017).

Goodman, J. W. Introduction to Fourier Optics (W. H. Freeman and Company, 2017).

Uy, R. F. & Bui, V. P. A metalens-based analog computing system for ultrasonic Fourier transform calculations. Sci. Rep. 12, 17124. https://doi.org/10.1038/s41598-022-21753-9 (2022).

Stark, H. Applications of Optical Fourier Transforms (Academic Press, 1982).

James, J. F. A Student’s Guide to Fourier Transforms: With Applications in Physics and Engineering (Cambridge University Press, 2015).

Voelz, D. G. Computational Fourier Optics: A MATLAB Tutorial (SPIE Press, 2010).

Voelz, D. G. & Roggemann, M. C. Digital simulation of scalar optical diffraction: Revisiting chirp function sampling criteria and consequences. Appl. Opt. 48, 6132. https://doi.org/10.1364/AO.48.006132 (2009).

Zhang, H., Zhang, W. & Jin, G. Adaptive-sampling angular spectrum method with full utilization of space-bandwidth product. Opt. Lett. 45, 4416–4419. https://doi.org/10.1364/OL.393111 (2020).

Zhang, W., Zhang, H., Sheppard, C. J. R. & Jin, G. Analysis of numerical diffraction calculation methods: From the perspective of phase space optics and the sampling theorem. J. Opt. Soc. Am. A 37, 1748. https://doi.org/10.1364/JOSAA.401908 (2020).

Zhang, W., Zhang, H. & Jin, G. Frequency sampling strategy for numerical diffraction calculations. Opt. Express 28, 39916. https://doi.org/10.1364/OE.413636 (2020).

Acknowledgements

This work was supported by the A*STAR RIE2020 Advanced Manufacturing and Engineering (AME) Programmatic Fund [A19E8b0102].

Author information

Contributions

R.F.U. planned the research project, formulated the mathematical model for the ultrasonic Fourier transform, conducted the simulations, analyzed the resulting data, wrote the manuscript, and digitally created the diagrams. V.P.B. initiated the project and supervised R.F.U. Both authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Uy, R.F., Bui, V.P. Solving ordinary and partial differential equations using an analog computing system based on ultrasonic metasurfaces. Sci Rep 13, 13471 (2023). https://doi.org/10.1038/s41598-023-38718-1

DOI: https://doi.org/10.1038/s41598-023-38718-1