Abstract

Constructing an efficient and accurate epilepsy detection system is an urgent research task. In this paper, we developed an EEG-based multi-frequency multilayer brain network (MMBN) and an attentional mechanism based convolutional neural network (AM-CNN) model to study epilepsy detection. Specifically, based on the multi-frequency characteristics of the brain, we first use wavelet packet decomposition and reconstruction methods to divide the original EEG signals into eight frequency bands, and then construct MMBN through correlation analysis between brain regions, where each layer corresponds to a specific frequency band. The time, frequency and channel related information of EEG signals are mapped into the multilayer network topology. On this basis, a multi-branch AM-CNN model is designed, which completely matches the multilayer structure of the proposed brain network. The experimental results on public CHB-MIT datasets show that eight frequency bands divided in this work are all helpful for epilepsy detection, and the fusion of multi-frequency information can effectively decode the epileptic brain state, achieving accurate detection of epilepsy with an average accuracy of 99.75%, sensitivity of 99.43%, and specificity of 99.83%. All of these provide reliable technical solutions for EEG-based neurological disease detection, especially for epilepsy detection.

Similar content being viewed by others

Introduction

Epilepsy is a worldwide nervous system disease caused by sudden abnormal discharge of neurons. According to statistics, 50 million people worldwide are suffering from epilepsy. In recent years, the research on timely, accurate, and automatic epilepsy detection methods has attracted the attention of a large number of scholars. Taking the opportunity of studying epilepsy detection methods, this study aims to explore effective methods to depict brain states, design CNN architecture to enhance feature extraction, and provide valuable reference schemes for timely and accurate EEG-based nervous system disease detection.

So far, many epilepsy detection technologies have been developed. The most commonly used method of epilepsy detection is to analyze scalp electroencephalogram (EEG) signals, which are records of brain electrical activity. For example, Bhattacharyya and pachori1 combined multivariate empirical wavelet transform and well-known classifier to classify epileptic signals based on EEG. Zhang et al.2 used the FPE-complexity of EEG signals as input, and utilized extreme machine learning and support vector machine to detect epilepsy. Radman et al.3 combined the Dempster-Shafer Evidence Theory (DSET) and Ensemble Decision Tree (EDT) to conduct EEG-based epilepsy detection. In addition, brain is an extremely complex time series system with obvious nonlinearity and instability. The information from EEG signals constantly changes in both the time and frequency domains, and has strong correlation between brain electrodes (channels). The frequency and spatiotemporal information of EEG signals codetermine the brain state. Therefore, exploring effective fusion methods of multiple information (frequency, time, and brain regions) is the key to analyzing brain features in epileptic states and improving the accuracy of epilepsy detection4,5,6.

In recent years, the theory of complex networks has made significant progress7,8,9. Complex network is composed of nodes and edges connecting two nodes. It has been proven that complex networks are effective tools for analyzing complex systems from the perspective of network topology, which abstractly describes the specific behaviors of the studied system. Time series complex networks are an important branch of complex network theory. By constructing a network of time series, the complex features contained therein can be mapped to the network topology, thereby enabling the characterization of complex systems. Constructing time series complex network (brain network) related to brain behavior is one of the important ways to analyze the state of the brain 10,11,12. Specifically, the brain network can be constructed from multi-channel EEG signals, and then the brain state can be explored through network topology analysis. In general, the brain network can be inferred by setting the brain electrodes (channels) as nodes, and then the edges between the nodes can be determined by various related metrics. Moreover, multilayer network are the latest development of complex network theory13,14,15. The multilayer network has multiple layers and can describe different aspects of the studied system. The multilayer structure makes it possible to describe complex systems more comprehensively and accurately. Some successful applications of multilayer networks can be found in the fields of chemical systems16, EEG signal analysis17, 18 and traffic network analysis19.

The human brain has obvious multi-frequency characteristics. When multilayer network is introduced into brain research, spatiotemporal characteristics in each frequency band can be mapped into a single layer. MMBN considers the specific information of multiple frequency bands and can be used as an effective feature for epilepsy detection. However, it should be noted that in the analysis process, multilayer network are usually represented as a series of adjacency matrices. Each adjacency matrix corresponds to a single layer. In the face of such multidimensional samples, traditional classifiers, such as support vector machines, cannot be directly used for classification. As the most advanced theory in machine learning, deep learning20,21,22,23 has received extensive and continuous attention. Specifically, deep learning is an end-to-end learning framework that can extract deeper internal representations from the input itself24,25,26,27. So far, deep learning has shown great potential in epilepsy research. For instance, Li et al.28 proposed a deep learning method combining the fully convolution network and the long short-term memory (LSTM) to automatically detect epilepsy. Specifically, the full connection layer network and LSTM are used to extract EEG-based epileptic features and explore the inherent temporal correlation in EEG signals. Kemal et al.29 developed a stacking ensemble method to detect epilepsy using five deep neural network (DNN) models. Gao et al.30 used approximate entropy and recursive quantitative analysis to extract the features of EEG signals, and then establishes convolutional neural networks to detect epilepsy. Zhao et al.31 developed a novel one-dimensional CNN model to detect epilepsy with raw EEG signals. The model used three convolution blocks including BN layer and dropout layer for feature extraction. Naseem et al.32 employed a method integrating CWT and CNN to classify EEG data and detect seizures caused by epilepsy and brain tumors. The results show that the deep learning model is conducive to EEG classification and timely prediction of seizures to avoid damage caused by repeated seizures. Some researchers tried to add the attention mechanism module to the CNN model33,34,35. The attention mechanism introduces weight on the basis of the original model, and can help the new model focus on informative and important features.

Motivated by the above-described background and progress, we propose an epilepsy detection method combining multilayer brain network and deep learning. In detail, the EEG signals of each channel are decomposed into multiple frequency bands through wavelet packet decomposition and reconstruction. Then, constructing MMBN, where each layer is a spatiotemporal feature topology of EEG signals in a specific frequency band. Compared with single-layer network, this multilayer brain network integrates the information from multiple frequency bands, helping to provide a more comprehensive description of brain states. In addition, considering the strong ability of deep learning to learn structural features, we carefully developed a CNN model based on attention mechanism (AM-CNN) with MMBN as input. Through evaluation on the CHB-MIT dataset, this method achieved excellent performance with an accuracy of 99.75%. The results indicate that this study can effectively characterize the brain state during seizures, extract essential features for precise classification, and is expected to provide reference for other EEG signal based neurological disease detection. The overall structure of our work is shown in Fig. 1.

Results

MMBN analysis of brain-topological characteristic of epileptic

We randomly select eight subjects and calculate the measurement statistics of the MMBN obtained from the normal state and the seizure state respectively, and then t-test was performed on them. Four network measures are introduced, including average clustering coefficient \(\overline{C}\), clustering coefficient entropy \(E_{C}\), spectral radius \(R\) and graph energy \(E\). These statistical measures are defined as the following equations:

where \(N\) is the total number of nodes (channels) in the network, \(C\left( \nu \right)\) means clustering coefficient of node \(\nu\), the mathematical expression is

where \(t_{\nu }\) is the total number of closed triangles containing node \(\nu\), \(k_{\nu }\) is the degree of node \(\nu\), \(w_{\nu \kappa }\) represents the edge weight between nodes \(\kappa\) and \(\nu\), \(w_{\nu \alpha }\) represents the edge weight between nodes \(\alpha\) and \(\nu\), \(w_{\kappa \alpha }\) represents the edge weight between nodes \(\kappa\) and \(\alpha\).

where \(P_{C} \left( \nu \right)\) is expressed as

where \(\lambda_{\nu }\) denotes the \(\nu {\text{-th}}\) eigenvalue of the adjacency matrix(single-layer network).

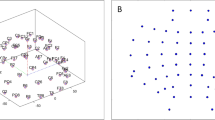

It can be seen from Table 1 that 93.75% of p-value values are less than 0.001, and 100% of p-value values are less than 0.05. Figure 2 shows two randomly selected 8-layer brain networks. One sub-graph corresponds to one layer of the MMBN and is related to a specific frequency band. The MMBN in the normal state is located in the upper row, and the MMBN in the seizure state is located in the lower row. As can be seen, the network topology shows obvious differences in different frequency bands. All the above indicates that the proposed multilayer brain network can effectively characterize the differences in the topology of brain networks between seizure and normal, and confirms the importance of frequency and electrodes (channels) in epilepsy detection research.

Attention mechanism-based epilepsy detection

Taking MMBN that integrates spatiotemporal features across eight frequency bands as input, the AM-CNN model was trained using Keras through a fully supervised process and used to perform epilepsy detection on 18 selected subjects. The final results indicated that the epilepsy detection scheme combining multilayer brain network and AM-CNN model can effectively distinguish between normal state and epileptic state, with a classification accuracy of 99.75% sensitivity of 99.43%, and specificity of 99.83%. Some existing research results on the CHB-MIT dataset are also listed in Table 2. The proposed method is better than them in terms of accuracy and sensitivity, and is extremely close to Dang's work in terms of specificity but superior to other works. All of these provide new ideas for the characterization of EEG signals, and also provide technical support for the construction of an efficient and accurate epileptic state detection system.

Discussions

Effectiveness of MMBN

The proposed method in this paper achieves an excellent epilepsy detection performance with an average accuracy of 99.75%. This reflects the overall effectiveness of using multilayer brain networks as new feature inputs. At the same time, we also analyze the effectiveness and contribution of each frequency band to epilepsy detection. Specifically, taking a single-layer network as input, epilepsy detection is performed on 18 subjects using the same AM-CNN architecture. The results are shown in Fig. 3. The average detection accuracy ranges from 79.06% to 92.97%. This indicates that all eight frequency bands divided in this study are helpful for epilepsy detection, but the contribution of each frequency band is different. Meanwhile, the contributions of each frequency band are also influenced by individual differences among the subjects. Taking frequency band \(F_{4}^{4,3}\) as an example, the detection results of different subjects fluctuate between 70.9% and 94.2%. This further confirms that brain function has multi-frequency characteristics, and MMBN fused with information from multiple frequency bands can more effectively decode epileptic brain states.

Effect of attention mechanism

In the methods section, AM-CNN architecture is designed to conduct feature extraction from multilayer brain networks. To further emphasize and illustrate the role of attention mechanism, we construct a comparison model to validate the effectiveness of the attention mechanism using the same inputs. The architecture and test results of comparative model is shown in Table 3. The performance of comparative model with accuracy of 93.54%, sensitivity of 93.18%, and specificity of 92.76% is significantly lower than those of AM-CNN. The complete AM-CNN does achieve the better performance. This indicates that attention mechanisms play a crucial role in enhancing feature extraction processes in this study.

Rationality of frequency band division

In order to further prove the rationality of frequency band division in this study, we provide classification results of no band division, two bands (\(F_{p,2}^{2,0}\) (0–32 Hz), \(F_{p,2}^{2,1}\) (32–64 Hz)), four bands (\(F_{p,3}^{3,0}\) (0–16 Hz), \(F_{p,3}^{3,1}\) (16–32 Hz), \(F_{p,3}^{3,2}\) (32–48 Hz), \(F_{p,3}^{3,3}\) (48–64 Hz)) and eight bands. The relevant results are shown in Fig. 4. As can be seen, the average detection accuracy of multiple frequency bands exceeds 90%. Especially for eight frequency bands, the accuracy reaches 99.75%. It is worth mentioning that when no band division is performed, the classification accuracy is only 82.16%. This is because features in different frequency bands cannot be used specifically, resulting in information confusion. In summary, considering multiple frequency bands, the proposed method achieves excellent results in epilepsy detection and is expected to provide valuable reference for other EEG-based neurological disease detection.

Methods

Experiment validation

Taking the designed MMBN as input, the proposed AM-CNN model is trained and tested on the CHB-MIT dataset which was created and provided by Boston Children's Hospital (CHB) and the Massachusetts Institute of Technology (MIT) and included 24 subjects (5 males, 3–22 years old; 19 females, 1.5–19 years old).

All scalp electroencephalograms in the CHB-MIT dataset were collected using the international 10–20 system electrode placement method. The electrode positions used in the dataset were FP1, FP2, F7, F3, FZ, F4, F8, FT9, FT10, T7, C3, CZ, C4, T8, P7, P3, PZ, P4, P8, O1, O2, and the sampling frequency was 256 Hz. The dataset adopts a bipolar measurement method, where the collected EEG signals are recorded in the form of voltage differences between adjacent electrodes in a longitudinal direction. The number of electrode pairs (channels) contained in different subsets and different signal segments in the same subset varies from 18 to 23. In this paper, considering data integrity, we used 18 subjects, all of whom had 23 channels of EEG signals (involving frontal lobe: F3, F4, F7, F8, FZ; frontal lobe: FP1, FP2; temporal lobe: T7, T8; occipital lobe: O1, O2; parietal lobe: P3, P4, P7, P8; central lobe: C3, C4, CZ; ft9, ft10). Samples were segmented using a sliding window with a length of 1 s. The sliding step is 0.5 s. In this paper, we obtained 11,150 samples in total, including 5410 normal state samples and 5740 seizure state samples. Aim to avoid contingency, ten-fold cross validation is conducted. For one fold, 90% of the whole samples were selected as the training set and the remaining 10% as the test set. 10% of the training samples as the validation set. The model is trained via Adam optimizer with cross-entropy loss function. The best model is recorded when the loss on the validation set reaches a minimum value. The number of epochs is set as 200. Batch size is 64. Learning rate is set as 0.001. Three performance metrics, including accuracy, sensitivity, and specificity, are introduced to evaluate the model’s performance. All experiments are completed on workstation which is equipped with Intel CPU (i9-10920X, 3.5 GHz), NVIDIA GPU (RTX 3090), and 32 GB RAM.

Multi-frequency multilayer brain network

We establish a multilayer brain network based on EEG signals to study epilepsy related brain states, where each layer corresponds to a specific frequency band. Taking p-channel EEG signals \(\left\{ {x_{p,l} } \right\}_{{l{ = }1}}^{L} p = 1,2, \ldots ,N\), with a length of L as an example, MMBN is constructed as follows. Firstly, we perform 4-layer wavelet packet decomposition on the EEG signals of each channel to obtain sixteen frequency bands. The mathematical expression of wavelet packet decomposition is defined as:

where \(F_{p,n}^{j,i}\) represents the sub-frequency band after n-layer wavelet packet decomposition of p-channel EEG signal. (j, i) is the node order of wavelet packet tree. \(h\left( \cdot \right)\) is a low pass filter. \(g\left( \cdot \right)\) is a high pass filter. m and n are the number of decomposition layers. The bandwidth of each frequency band is \({{\left( {{{f_{s} } \mathord{\left/ {\vphantom {{f_{s} } 2}} \right. \kern-0pt} 2}} \right)} \mathord{\left/ {\vphantom {{\left( {{{f_{s} } \mathord{\left/ {\vphantom {{f_{s} } 2}} \right. \kern-0pt} 2}} \right)} {2^{4} }}} \right. \kern-0pt} {2^{4} }} = 8\;{\text{Hz}}\), where \(f_{s} = 256\;{\text{Hz}}\) means sampling frequency. Due to the fact that the frequency of EEG signals reflecting epileptic brain state is mainly distributed within 70Hz42,43,44, this study used eight frequency bands, including \(F_{p,4}^{4,0}\) (0–8 Hz), \(F_{p,4}^{4,1}\) (8–16 Hz), \(F_{p,4}^{4,2}\) (16–24 Hz), \(F_{p,4}^{4,3}\) (2432 Hz), \(F_{p,4}^{4,4}\) (32–40 Hz), \(F_{p,4}^{4,5}\) (40–48 Hz), \(F_{p,4}^{4,6}\) (48–56 Hz), \(F_{p,4}^{4,7}\) (56–64 Hz). The selected wavelet base is dbN, which has been proven by existing research to be able to decompose EEG signals and has fast computational speed45, 46.

Secondly, we use the function \(wprcoef\left( \cdot \right)\) to reconstruct an approximation to raw EEG signals from selected nodes in the wavelet packet tree T. The signals for the reconstruction of sub-frequency band \(F_{p,n}^{4,i}\) is

Finally, in each frequency band, we define brain electrodes (or channels) as network nodes. The weight of edge between nodes \(\kappa\) and \(\nu\) is determined via the Spearman rank correlation coefficient. The mathematical expression is

where \({\text{rg}}\left( {\left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x}_{\kappa ,l}^{4,i} } \right\}} \right)\) and \({\text{rg}}\left( {\left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x}_{\nu ,l}^{4,i} } \right\}} \right)\) respectively represent the position of the \(l{\text{-th}}\) element in the sequence \(\left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x}_{\kappa ,l}^{4,i} } \right\}\) and \(\left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x}_{\nu ,l}^{4,i} } \right\}\) after sorting.

Through determining the edge between each channel pair (or node pair) via the above method, the network under this frequency band can be obtained. In different frequency bands, the correlation characteristics between channels are significantly different, making it possible to obtain different frequency-dependent brain networks. By repeating the above process at eight frequency bands, a multilayer brain network can be constructed, which has eight layers with \(N\) nodes per layer. For each layer, 30% of the edges with larger weights are reserved for subsequent analysis.

Convolutional neural network model based on attention mechanism

Here, take the obtained multilayer brain network as input, a convolutional neural network model based on attention mechanism (AM-CNN) is carefully designed for epilepsy detection. Figure 5 shows the detailed architecture. Table 4 lists the corresponding parameters.

The AM-CNN model consists of two blocks. The function of the first block is a feature extraction based on the attention mechanism (AM-FE module), which is used to acquire multilayer network features from eight frequency bands. Note that, each layer of the multilayer brain network can be represented as an adjacency matrix, which is a grid like data. Elements at positions \(\left( {\nu ,\kappa } \right)\) in the adjacency matrix represent weights \(w_{\nu ,\kappa }\). The first module has eight branches, and it exactly matches the structure of the multilayer brain network. Each branch shares the same structure. Specifically, each branch is provided with two convolution layers (layer 1 and layer 2). Convolutional layer realizes feature extraction by designing a certain number of convolution kernels, which shows obvious advantages in processing grid-like data, such as the adjacency matrix here. In the following description, Layer 1 is simplified to L1, and others similarly. L1 and L2 can be described by the following formula:

where \({\mathbf{A}}^{0}\) is the input data (i.e., adjacency matrix), \({{\varvec{\upsigma}}}_{k}^{1}\) and \({{\varvec{\upsigma}}}_{k}^{2}\) are \(k{\text{-th}}\) output characteristic maps of L1 and L2, respectively. \({\mathbf{w}}_{k}\) and \(b_{k}\) represent the weight matrix and deviation term of the \(k{\text{-th}}\) convolution kernel, and \(conv\left( \cdot \right)\) represents convolution operation. In each layer,\(K = 16\) convolution kernels are designed, and the size is set to \(3 \times 3\).

Due to the fact that convolution operations mainly handle local information of features. Directly processing the convolutional output features cannot effectively model the interrelationships between channels in the output features. To address this issue, we introduced channel-based attention mechanism after L2 to exploit the channel dependencies in outputs features \({{\varvec{\upsigma}}}^{2}\).

where \({{\varvec{\upsigma}}}_{k}^{2} \in {\mathbf{\mathbb{R}}}^{H \times W}\) is the feature maps corresponding to the k-th convolutional kernel with the heights of H and widths of W. Specifically, in L3, channel-wise statistic \({{\varvec{\upsigma}}}^{3} = \left[ {\sigma_{1}^{3} ,\sigma_{2}^{3} , \ldots ,\sigma_{K}^{3} } \right]\) is generated by using global average pooling, and the k-th element \(\sigma_{k}^{3}\) of \({{\varvec{\upsigma}}}^{3}\) is defined by

where \(\sigma_{k}^{2} \left( {\kappa ,\nu } \right)\) is an element at the position \(\left( {\nu ,\kappa } \right)\). In order to fully capture the dependencies between channels, we introduce two dense layers (L4 and L5) to form a bottleneck structure. The outputs of L5 is

where \({\mathbf{W}}_{1} \in {\mathbf{\mathbb{R}}}^{{\frac{K}{r} \times K}}\) and \({\mathbf{W}}_{2} \in {\mathbf{\mathbb{R}}}^{{K \times \frac{K}{r}}}\) are the weight matrix of L4 and L5, respectively.r represents the reduction ratio, and the value of r is 2 in this study via trade-off between performance and computational cost. In L6, the output of L5 is used to weight each feature map of L2. The mathematical expression is

In general, layers 3–6 constitute attention paths, which can enhance the effective features of L2.

L7 is a batch normalization (BN) layer that can mitigate overfitting and accelerate the training process. The implementation of BN is as follows:

where \(\theta\) and \(\pi\) are learnable parameters. In order to ensure the distribution consistency of \({{\varvec{\upsigma}}}_{k}^{7}\) and \({{\varvec{\upsigma}}}_{k}^{6}\), \({\hat{\mathbf{\sigma }}}_{k}^{6}\) is the normalized input data having the following form:

where \(mean\left( \cdot \right)\) and \(std\left( \cdot \right)\) represent the expected value and standard deviation of \({{\varvec{\upsigma}}}_{k}^{7}\).

The task of the second block is the feature fusion, which integrates the network features of multiple frequency bands, and then realizes the detection of epilepsy. Firstly, outputs \({{\varvec{\upsigma}}}_{k}^{1}\), \({{\varvec{\upsigma}}}_{k}^{2}\) and \({{\varvec{\upsigma}}}_{k}^{7}\) from eight frequency bands are concatenated together (L8). Then, we set a convolution layer (L9) with 32 kernels for feature fusion. The kernel size is \(3 \times 3\). The 32 feature maps of L9 are learned through another convolution layer (L10), and the kernel size is \(1 \times 1\). The outputs of L10 are flattened in L11. Finally, all learned features are input into a dense layer (L12) for epilepsy detection, using the softmax activation function.

Data availability

The data that support the findings of this study are openly available in CHB-MIT dataset at https://physionet.org/content/chbmit/1.0.0/.

References

Bhattacharyya, A. & Pachori, R. B. A multivariate approach for patient-specific EEG seizure detection usingempirical wavelet transform. IEEE Trans. Biomed. Eng. 64(9), 2003–2015 (2017).

Zhang, S. et al. A novel EEG-complexity-based feature and its application on the epileptic seizure detection. Int. J. Mach. Learn. Cybern. 10(12), 3339–3348 (2019).

Radman, M. et al. Multi-feature fusion approach for epileptic seizure detection from EEG signals. IEEE Sens. J. 21(3), 3533–3543 (2021).

Mumtaz, W. et al. A wavelet-based technique to predict treatment outcome for major depressive disorder. PLoS ONE 12(2), e0171409 (2017).

Yuan, S. et al. The earth mover’s distance and Bayesian linear discriminant analysis for epileptic seizure detection in scalp EEG. Biomed. Eng. Lett. 8(4), 373–382 (2018).

Ozcan, A. R. & Erturk, S. Seizure prediction in scalp EEG using 3D convolutional neural networks with an image-based approach. IEEE Trans. Neural Syst. Rehabil. Eng. 27(11), 2284–2293 (2019).

Gao, Z. et al. Multivariate multiscale complex network analysis of vertical upward oil-water two-phase flow in a small diameter pipe. Sci. Rep. 6, 20052 (2016).

Wang, W. et al. Coevolution spreading in complex networks. Phys. Rep. 820, 1–51 (2019).

Gao, Z. et al. Classification of EEG signals on VEP-based BCI systems with broad learning. IEEE Trans. Syst. Man. Cybern. Syst. 51, 7143–7151 (2021).

Avena-Koenigsberger, A. et al. Communication dynamics in complex brain networks. Nat. Rev. Neurosci. 19(1), 17–33 (2017).

Fathian, A. et al. The trend of disruption in the functional brain network topology of Alzheimer’s disease. Sci. Rep. 12(1), 14998 (2022).

Brodka, P. et al. Sequential seeding in multilayer networks. Chaos 31(3), 033130 (2021).

Ding, R. et al. Application of complex networks theory in urban traffic network researches. Netw. Spat. Econ. 19(4), 1281–1317 (2019).

Vaknin, D. et al. Spreading of localized attacks in spatial multiplex networks. New J. Phys. 19, 073037 (2017).

Yuvaraj, M. et al. Topological clustering of multilayer networks. Proc. Natl. Acad. Sci. U S A. 118(21), e2019994118 (2021).

Martinez-Amezaga, M. et al. Engineering multilayer chemical networks. Chem. Sci. 10(36), 8338–8347 (2019).

Dang, W. et al. Rhythm-dependent multilayer brain network for the detection of driving fatigue. IEEE J. Biomed. Health 25, 693 (2021).

Gao, Z. et al. Complex network analysis of time series. EPL 119, 50008 (2017).

Fan, L. et al. Robustness evaluation for real traffic network from complex network perspective. Int. J. Mod. Phys. C 32(08), 2150102 (2021).

Ditthapron, A. et al. Universal joint feature extraction for P300 EEG classification using multi-task autoencoder. IEEE Access 7, 68415–68428 (2019).

Sarigul, M. et al. Differential convolutional neural network. Neural Netw. 116, 279–287 (2019).

Gomez, C. et al. Automatic seizure detection based on imaged-EEG signals through fully convolutional networks. Sci. Rep. 10(1), 21833 (2020).

Liu, T. et al. Convolution neural network with batch normalization and inception-residual modules for Android malware classification. Sci. Rep. 12(1), 13996 (2022).

Lin, E. et al. A deep learning approach for predicting antidepressant response in major depression using clinical and genetic biomarkers. Front. Psychiatry 9, 30034349 (2018).

Ejaz, F. et al. Convolutional neural networks for approximating electrical and thermal conductivities of Cu-CNT composites. Sci. Rep. 12(1), 13614 (2022).

Jia, G. Y. et al. Variable weight algorithm for convolutional neural networks and its applications to classification of seizure phases and types. Pattern Recognit. 121, 108226 (2022).

Gramacki, A. & Gramacki, J. A deep learning framework for epileptic seizure detection based on neonatal EEG signals. Sci. Rep. 12(1), 13010 (2022).

Li, Y. et al. Automatic seizure detection using fully convolutional nested LSTM. Int. J. Neural Syst. 30(4), 2050019 (2020).

Akyol, K. Stacking ensemble based deep neural networks modeling for effective epileptic seizure detection. Expert Syst. Appl. 148, 113239 (2020).

Gao, X. et al. Automatic detection of epileptic seizure based on approximate entropy, recurrence quantification analysis and convolutional neural networks. Artif. Intell. Med. 102, 101711 (2020).

Zhao, W. et al. A novel deep neural network for robust detection of seizures using EEG signals. Comput. Math. Methods Med. 2020, 9689821 (2020).

Naseem, S. et al. Integrated CWT-CNN for epilepsy detection using multiclass EEG dataset. Comput. Mater. Contin. 69(1), 471–486 (2021).

Li, J. et al. Attention mechanism-based CNN for facial expression recognition. Neurocomputing 411, 340–350 (2020).

Yan, S. Y. et al. Image captioning via hierarchical attention mechanism and policy gradient optimization. Signal Process. 167, 107329 (2020).

Peng, Y. et al. Topic-enhanced emotional conversation generation with attention mechanism. Knowl.-Based Syst. 163, 429–437 (2019).

Zabihi, M. et al. Analysis of high-dimensional phase space via poincare section for patient-specific seizure detection. IEEE Trans. Neural Syst. Rehabil. Eng. 24(3), 386–398 (2016).

Fergus, P. et al. A machine learning system for automated whole-brain seizure detection. Appl. Comput. Inform. 12(1), 70–89 (2016).

Kaleem, M. et al. Patient-specific seizure detection in long-term EEG using signal-derived empirical mode decomposition (EMD)-based dictionary approach. J. Neural Eng. 15(5), 056004 (2018).

Ke, H. et al. Towards brain big data classification: epileptic EEG identification with a lightweight VGGNet on global MIC. IEEE Access 6, 14722–14733 (2018).

Chen, Z. et al. A unified framework and method for EEG-based early epileptic seizure detection and epilepsy diagnosis. IEEE Access 8, 20080–20092 (2020).

Dang, W. et al. Studying multi-frequency multilayer brain network via deep learning for EEG-based epilepsydetection. IEEE Sens. J. 21(24), 27651–27658 (2021).

Hopfengartner, R. et al. Automatic seizure detection in long-term scalp EEG using an adaptive thresholding technique: A validationstudy for clinical routine. Clin. Neurophysiol. 125, 1289–1290 (2014).

BalaKrishnan, M. et al. A multi-channel fusion based new-born seizure detection. Biomed. Sci. Eng. 7, 533–545 (2014).

Besio, W. G. et al. High-frequency oscillations recorded on the scalp of patients with epilepsy using tripolar concentric ring electrodes. IEEE Transl. Eng. Health Med. 2, 1–11 (2014).

Akerstedt, T. et al. Manifest sleepiness and the spectral content of the EEG during shift work. Sleep 14(3), 221–225 (1991).

Gurudath, N. & Riley, H. B. Drowsy driving detection by EEG analysis using wavelet transform and K-means clustering. Procedia Comput. Sci. 34, 400–409 (2014).

Funding

The funding was provided by The National Natural Science Foundation of China (Grant No. 61772249, 61772249), The Youth project of Liaoning Provincial Department of Education (Grant No. LJ2019QL024).

Author information

Authors and Affiliations

Contributions

H.-S.Y. and X.-F.M. devised the research project. H.-S.Y. analyzed the results and wrote the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, HS., Meng, XF. Characteristic analysis of epileptic brain network based on attention mechanism. Sci Rep 13, 10742 (2023). https://doi.org/10.1038/s41598-023-38012-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-38012-0

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.