Abstract

Although we are currently in the era of noisy intermediate-scale quantum devices, several studies are being conducted with the aim of bringing machine learning to the quantum domain. Currently, quantum variational circuits are one of the main strategies used to build such models. However, despite their widespread use, we still do not know the minimum resources needed to create a quantum machine learning model. In this article, we analyze how the expressiveness of the parametrization affects the cost function. We analytically show that the more expressive the parametrization is, the more the cost function will tend to concentrate around a value that depends both on the chosen observable and on the number of qubits used. To this end, we first obtain a relationship between the expressiveness of the parametrization and the mean value of the cost function. Afterwards, we relate the expressivity of the parametrization to the variance of the cost function. Finally, we show some numerical simulation results that confirm our analytical predictions. To the best of our knowledge, this is the first time that these two important aspects of quantum neural networks are explicitly connected.

Introduction

In recent years, interest in quantum computing has grown considerably due to its possible applications in solving problems such as the simulation of quantum systems [1], the development of new drugs [2], and the solution of systems of linear equations [3]. Quantum machine learning, an interdisciplinary area of study at the interface between machine learning and quantum computing, is another possible application that should benefit from the computational power of these devices. In this sense, several models have already been proposed, such as the Quantum Multilayer Perceptron [4], Quantum Convolutional Neural Networks [5, 6, 7], Quantum Generative Adversarial Neural Networks [8, 9], the Quantum Kernel Method [10], and Quantum-Classical Hybrid Neural Networks [11, 12, 13, 14]. However, in the era of noisy intermediate-scale quantum (NISQ) devices, variational quantum algorithms (VQAs) [15] are the main strategy used to build such models.

Variational quantum algorithms are models that use a classical optimizer to minimize a cost function by optimizing the parameters of a parametrization U. Several optimization strategies have already been proposed [16, 17, 18, 19], although this remains an open area of study. In fact, despite their widespread use, our understanding of VQAs is limited and some problems still need to be solved, such as the disappearance of the gradient [20, 21, 22, 23, 24, 25, 26, 27], methods to mitigate the barren plateaus issue [28, 29, 30, 31, 32], how to build a parameterization U [33, 34], and how to correct errors [35].

In this article we aim to analyze how the expressivity of the parametrization U affects its associated cost function. We will show that the more expressive the parametrization U is, the more the average value of the cost function will concentrate around a fixed value. In addition, we will show that the probability of the cost function deviating from its average also depends on the quantum circuit expressivity.

The remainder of this article is organized as follows. In Section “Variational quantum algorithms”, we give a short introduction to VQAs. In Section “Expressivity”, we comment on how expressiveness can be quantified and what it means. In the following section, Section “Main theorems”, we present our main results in the form of two theorems. In Theorem 1, we obtain a relationship between the concentration of the cost function and the expressiveness of the parametrization. In Theorem 2, we bound the probability for the cost function to deviate from its average value via a function of the quantum circuit expressivity. Then, in Section “Simulation results”, we present some numerical simulation results to confirm our analytical predictions. Finally, Section “Conclusion” presents our conclusions.

Variational quantum algorithms

Variational quantum algorithms are models where a classical optimizer is used to minimize a cost function, which is usually written as the average value of an observable O:

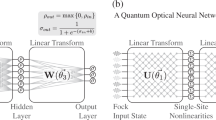

where \(|\psi \rangle := V(\pmb {x})|0 \rangle \). To minimize it, the optimizer updates the parameters \(\pmb {\theta }\) of the parameterization U. Figure 1 shows a schematic representation of how a VQA works. In the first stage, Fig. 1A, a quantum circuit runs on a quantum computer. In general, this circuit is divided into three parts. First, a parametrization V encodes the data in a quantum state. In quantum machine learning, this parametrization is used to bring our data, such as data from the MNIST [36] dataset, into a quantum state. Next, we have the parametrization U, which depends on the parameters \(\pmb {\theta }\) that we must optimize. Finally, we have the measurements that are used to calculate the cost function. In the second stage, Fig. 1B, a classical computer optimizes the parameters of the parametrization. In general, the gradient of the cost function is used for this task. However, as shown in Refs. [20, 21, 22, 23, 24, 25, 26, 27], this method suffers from the problem of gradient disappearance: the derivative of the cost function tends to zero as the circuit size increases. Furthermore, Ref. [24] showed that this problem of gradient disappearance is also associated with highly expressive parameterizations.
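To make the hybrid loop of Fig. 1 concrete, the following NumPy sketch evaluates a cost of the form of Eq. (1) for a small layered circuit and updates the parameters by gradient descent. It is an illustration under stated assumptions, not the code used for the results of this article: the encoding V is taken to be the identity (so \(|\psi \rangle = |0 \ldots 0\rangle \)), the observable is \(O = |0 \ldots 0\rangle \langle 0 \ldots 0|\), each layer applies \(R_{Y}\) rotations followed by a chain of CNOT gates chosen only for illustration, and all function names, the step size, and the number of steps are hypothetical.

```python
import numpy as np

def ry(theta):
    """R_Y(theta) = exp(-i * theta * Y / 2) as a real 2x2 matrix."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def apply_1q(state, gate, qubit, n):
    """Apply a single-qubit gate to `qubit` of an n-qubit state vector."""
    psi = state.reshape([2] * n)
    psi = np.tensordot(gate, psi, axes=([1], [qubit]))
    return np.moveaxis(psi, 0, qubit).reshape(-1)

def apply_cnot(state, control, target, n):
    """Flip `target` on the part of the state where `control` is 1."""
    psi = state.reshape([2] * n).copy()
    idx = [slice(None)] * n
    idx[control] = 1
    axis = target if target < control else target - 1
    psi[tuple(idx)] = np.flip(psi[tuple(idx)], axis=axis).copy()
    return psi.reshape(-1)

def cost(thetas):
    """C = <psi|U^dag O U|psi> with |psi> = |0...0> and O = |0...0><0...0|."""
    num_layers, n = thetas.shape
    state = np.zeros(2 ** n)
    state[0] = 1.0
    for l in range(num_layers):
        for i in range(n):          # parametrized part of the layer
            state = apply_1q(state, ry(thetas[l, i]), i, n)
        for i in range(n - 1):      # fixed part of the layer (assumed CNOT chain)
            state = apply_cnot(state, i, i + 1, n)
    return float(state[0] ** 2)

def parameter_shift_grad(thetas):
    """Gradient of the cost via the parameter-shift rule for R_Y gates."""
    grad = np.zeros_like(thetas)
    for idx in np.ndindex(*thetas.shape):
        shift = np.zeros_like(thetas)
        shift[idx] = np.pi / 2
        grad[idx] = 0.5 * (cost(thetas + shift) - cost(thetas - shift))
    return grad

rng = np.random.default_rng(0)
thetas = rng.uniform(0, 2 * np.pi, size=(3, 4))   # 3 layers, 4 qubits
for step in range(50):                             # plain gradient descent
    thetas = thetas - 0.1 * parameter_shift_grad(thetas)
print(cost(thetas))
```

The parameter-shift rule is used here because every parameter enters through a single rotation of the form \(e^{-i\theta Y/2}\); any other gradient estimator, or a gradient-free optimizer, could be substituted in the classical stage.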

In this article, the parametrization will be given by

\[ U(\pmb {\theta }) = \prod _{l=1}^{L} U'_{l}(\pmb {\theta })\, W_{l}, \tag{2} \]

where L is the number of layers, \(U'_{l}\) is a layer that depends on the parameters \(\pmb {\theta }\) and \(W_{l}\) is a layer that does not depend on the parameters \( \pmb {\theta }\). The construction of parametrizations is still an open area of study and, due to the complexity involved, some works have proposed automating this process [33, 37]. Furthermore, for problems such as quantum machine learning, where a parameterization V is used to encode data in a quantum state, the choice of V is also extremely important [38], and several possible encodings have been proposed [39].

Expressivity

Following Ref. [40], here we define expressivity as the ability of a quantum circuit to generate (pure) states that are well representative of the Hilbert space. In the case of a qubit, this comes down to the quantum circuit’s ability to explore the Bloch sphere. To quantify the expressiveness of a quantum circuit, we can compare the uniform distribution of unitaries obtained from the set \({\mathbb {U}}\) with the maximally expressive (Haar) uniform distribution of unitaries of \({\mathcal {U}}(d)\). For this, we use the following super-operator [24]:

\[ A_{{\mathbb {U}}}^{t}(\cdot ) := \int _{{\mathcal {U}}(d)} d\mu (V)\, V^{\otimes t} (\cdot ) (V^{\dagger })^{\otimes t} - \int _{{\mathbb {U}}} dU\, U^{\otimes t} (\cdot ) (U^{\dagger })^{\otimes t}, \tag{3} \]

where \(d\mu (V)\) is a volume element of the Haar measure and dU is a volume element corresponding to the uniform distribution over \({\mathbb {U}}\). The uniform distribution over \({\mathbb {U}}\) is obtained by fixing the parameterization U, where for each vector of parameters \(\pmb {\theta }\) we obtain a unitary \(U(\pmb {\theta })\). Thus, given the set of parameters \(\{ \pmb {\theta }^{1},\pmb {\theta }^{2},\ldots , \pmb {\theta }^{m} \}\), we obtain the corresponding set of unitary operators:

\[ {\mathbb {U}} = \{ U(\pmb {\theta }^{1}), U(\pmb {\theta }^{2}), \ldots , U(\pmb {\theta }^{m}) \}. \tag{4} \]

The expressivity of a parametrization U is then given by the norm of the super-operator defined above:

\[ \Vert A_{{\mathbb {U}}}^{t}(X) \Vert _{2}. \tag{5} \]

Here we use the Schatten 2-norm (Hilbert-Schmidt norm), \(||A||_2^2 = Tr(A^\dagger A)\). So, for any operator X, from Eq. (3), we have that the smaller \(\Vert A_{{\mathbb {U}}}^{t}(X)\Vert _{2}\) is, the more expressive the parametrization U is.
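For the case t = 1 and a pure input state, this norm can be written in closed form, which makes its link to expressivity explicit. The short derivation below is a sketch that assumes the super-operator of Eq. (3) has the form used in Ref. 24, namely the Haar average minus the average over \({\mathbb {U}}\); the symbol \(\sigma \) for the ensemble-averaged state is introduced here only for illustration.

```latex
% Assumed form of Eq. (3) for t = 1, acting on a pure state \rho = |\psi><\psi|.
% The Haar first moment gives \int d\mu(V) V \rho V^\dagger = Tr[\rho] I / d = I / d.
A_{\mathbb{U}}(\rho)
  = \int_{\mathcal{U}(d)} d\mu(V)\, V \rho V^{\dagger}
  - \int_{\mathbb{U}} dU\, U \rho U^{\dagger}
  = \frac{I}{d} - \sigma ,
\qquad
\sigma := \int_{\mathbb{U}} dU\, U \rho U^{\dagger} .

% A is Hermitian and Tr[\sigma] = 1, so
\Vert A_{\mathbb{U}}(\rho) \Vert_{2}^{2}
  = Tr\left[ \left( \frac{I}{d} - \sigma \right)^{2} \right]
  = \frac{1}{d} - \frac{2}{d} + Tr[\sigma^{2}]
  = Tr[\sigma^{2}] - \frac{1}{d} .
```

Under these assumptions the norm vanishes exactly when the ensemble-averaged state is maximally mixed (purity 1/d), and it reaches its largest value, \(\sqrt{1 - 1/d}\), when the ensemble contains a single state.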

Main theorems

In this section, we present our main results. First, we obtain a relationship between the average value of the cost function, Eq. (1), and the expressivity of the parametrization U, Eq. (2). Afterwards, we obtain a relationship between the variance of the cost function and the expressiveness of the parametrization. To do so, we start by writing the average of the cost function over \({\mathbb {U}}\), denoted here by \(E_{{\mathbb {U}}}[C]\), as

\[ E_{{\mathbb {U}}}[C] = \int _{{\mathbb {U}}} dU\, Tr[ O U \rho U^{\dagger } ]. \tag{6} \]

Therefore, using Eq. (3) in Eq. (6), we obtain the following relationship between the mean of the cost function and the expressivity of the parametrization, Theorem 1.

Theorem 1

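(Concentration of the cost function). Let the cost function be defined as in Eq. (1), with observable O, parameterization U, Eq. (2), and encoding quantum state \(\rho := |\psi \rangle \langle \psi |\). Then it follows that

\[ \left| E_{{\mathbb {U}}}[C] - \frac{Tr[O]}{d} \right| \leqslant \Vert O \Vert _{2}\, \Vert A_{{\mathbb {U}}}(\rho ) \Vert _{2}, \tag{7} \]

where \(E_{{\mathbb {U}}}[C]\) is the average of the cost function over \({\mathbb {U}}\).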

The proof of this theorem is presented in the first section of the Supplementary information. Therefore, Theorem 1 implies that the greater the expressiveness of the parameterization U, the more the cost function average will tend to have the value Tr[O]/d.
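The origin of the value Tr[O]/d can be seen in a few lines. The following is only a sketch of the argument (the full proof is in the Supplementary information), assuming that the t = 1 super-operator of Eq. (3) is the Haar average minus the average over \({\mathbb {U}}\), and writing \(E_{{\mathbb {U}}}[C]\) for the average of the cost function over \({\mathbb {U}}\), a notation adopted here for convenience.

```latex
% Average over the ensemble, rewritten with the super-operator (t = 1):
E_{\mathbb{U}}[C]
  = \int_{\mathbb{U}} dU\, Tr[ O U \rho U^{\dagger} ]
  = Tr\left[ O \int_{\mathcal{U}(d)} d\mu(V)\, V \rho V^{\dagger} \right]
  - Tr[ O A_{\mathbb{U}}(\rho) ] .

% First-moment Haar integral: \int d\mu(V) V \rho V^\dagger = Tr[\rho] I / d = I / d, hence
E_{\mathbb{U}}[C] - \frac{Tr[O]}{d} = - Tr[ O A_{\mathbb{U}}(\rho) ] .

% Cauchy-Schwarz inequality for the Hilbert-Schmidt inner product:
\left| E_{\mathbb{U}}[C] - \frac{Tr[O]}{d} \right|
  \leqslant \Vert O \Vert_{2}\, \Vert A_{\mathbb{U}}(\rho) \Vert_{2} .
```

The deviation of the mean from Tr[O]/d is thus controlled by the product of a factor that depends only on the observable and a factor that depends only on the expressivity.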

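Although Theorem 1 implies that the mean value of the cost function tends to approach a fixed value, the cost function may deviate from its mean when the VQA is executed. To quantify this deviation we use the Chebyshev inequality,

\[ P\left( | C - E_{{\mathbb {U}}}[C] | \geqslant \delta \right) \leqslant \frac{Var[C]}{\delta ^{2}}, \tag{8} \]

which bounds the probability for the cost function to deviate from its mean value \(E_{{\mathbb {U}}}[C]\) by at least \(\delta > 0\).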

Next, we present Theorem 2, which relates the modulus of the variance of the cost function to the expressiveness of the parametrization.

Theorem 2

Let us consider the cost function defined in Eq. (1) and the parameterization U defined in Eq. (2). The variance of the cost function can be constrained as follows:

with \( \beta := \frac{ Tr[O]^{2} + Tr[O^{2}] }{d^{2}-1}\bigg ( 1 - \frac{1}{d} \bigg )-\frac{Tr[O]^{2}}{d^{2}}\) and \(\alpha := \frac{2Tr[O]}{d}.\) Here \(d=2^{n}\), where n is the number of qubits in the variational quantum circuit.

The proof of this theorem is presented in the second section of the Supplementary information. Since the variance is a non-negative real number, we can use Theorem 2 to analyze the probability that the cost function deviates from its mean, Eq. (8). From Theorem 2, we see that once the observable O and the size of the system (that is, the number of qubits used) are fixed, the probability of the cost function deviating from its mean decreases as the expressivity increases. Furthermore, it also follows, from Theorem 1, that for maximally expressive parameterizations, i.e., for \(\Vert A_{{\mathbb {U}}}^{t}(X)\Vert _{2} = 0 \), the average of the cost function is fixed at the value Tr[O]/d.
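The maximally expressive limit can be checked numerically. The sketch below samples Haar-random unitaries with NumPy, evaluates \(C = Tr[O U \rho U^{\dagger }]\) for \(O = \rho = |0 \ldots 0\rangle \langle 0 \ldots 0|\) (an illustrative choice), and compares the sample mean with Tr[O]/d and the sample variance with \(\beta \). For a pure input state, \(\beta \) is the variance of C under the Haar measure, a standard second-moment result, so the two should agree up to sampling error. The script also evaluates both sides of the Chebyshev inequality, Eq. (8). The function names, the sample size, and the threshold `delta` are assumptions made for this example.

```python
import numpy as np

def haar_unitary(d, rng):
    """Sample a d x d Haar-random unitary via QR of a complex Gaussian matrix."""
    z = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases   # rescale columns so that the result is Haar distributed

def beta(obs):
    """The constant beta of Theorem 2 for an observable given as a d x d matrix."""
    d = obs.shape[0]
    tr, tr_sq = np.trace(obs).real, np.trace(obs @ obs).real
    return (tr ** 2 + tr_sq) / (d ** 2 - 1) * (1 - 1 / d) - tr ** 2 / d ** 2

n = 3                       # number of qubits
d = 2 ** n
obs = np.zeros((d, d))
obs[0, 0] = 1.0             # O = |0...0><0...0|
rho = obs.copy()            # rho = |0...0><0...0|

rng = np.random.default_rng(0)
costs = np.array([
    np.trace(obs @ u @ rho @ u.conj().T).real
    for u in (haar_unitary(d, rng) for _ in range(5000))
])

mean, var = costs.mean(), costs.var()
delta = 0.3
empirical = np.mean(np.abs(costs - mean) >= delta)

print("mean:", mean, "Tr[O]/d:", np.trace(obs).real / d)
print("variance:", var, "beta:", beta(obs))
print("P(|C - mean| >= delta):", empirical, "Chebyshev bound:", var / delta ** 2)
```

The Chebyshev bound is generally loose: it only uses the variance, so the observed fraction of samples beyond `delta` can be much smaller than the bound.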

Simulation results

In this section, we present some numerical simulation results. For this, we use twelve different parametrizations, which we call, respectively, Model 1, Model 2, …, Model 12. The quantum circuits corresponding to these parametrizations are shown in Figs. S1 to S12 in the third section of the Supplementary information. As we saw in Eq. (2), the parametrization is obtained from the product of L layers \(U_{l}\), where each layer \(U_{l}\) can be distinct from the others, that is, the gates and sequences we use in one layer may be different from those of another. However, in general, they are the same. For the results shown here, the \(U_{l}\) layers are the same, the only difference being the \(\pmb {\theta }\) parameters used in each layer.

For these results we define each \(U'_{l}\) as

\[ U'_{l} = \bigotimes _{i=1}^{n} R_{Y}(\theta _{i,l}), \tag{10} \]

where the index l indicates the layer and the index i the qubit. Also, we use \(R_{Y}(\theta _{i,l}) = e^{-i \theta _{i,l} Y/2} \) in all models. In the parametrizations of Model 3, Model 4, Model 6, Model 9, Model 10, and Model 12 (Figs. S3, S4, S6, S9, S10, and S12, respectively, in the Supplementary information), we apply the Hadamard gate to all the qubits before each \(U'_{l}\). Furthermore, in Model 2, Model 3, Model 8, and Model 9 (Figs. S2, S3, S8, and S9, respectively, in the Supplementary information), we use the controlled \(R_{Y}\) gate, or CRY. Finally, for the results obtained here, we used the PennyLane [41] library. The codes used to obtain these results are available at Ref. [42].

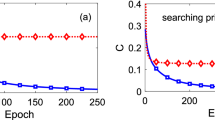

Initially, we numerically analyze Eq. (7) of Theorem 1. For this, we performed an initial set of simulations, Figs. 2, 3, and 4, where we fixed the number of qubits and varied the number of layers L. For the results of Figs. 2, 3, and 4, we used four, five, and six qubits, respectively. Furthermore, for these simulations we consider the particular case \(O = |0 \rangle \langle 0|\) and \(\rho = |0 \rangle \langle 0|\).

We calculate the value of \( \Vert A(\rho ) \Vert _{2} \) analytically, obtaining [24]

\[ \Vert A(\rho ) \Vert _{2} = \sqrt{\mu (\rho ) - \frac{1}{d}}, \tag{11} \]

with \(\mu (\rho ) = \int _{\pmb {\theta }}\int _{\pmb {\phi }} | \langle \psi _{\pmb {\theta }} | \psi _{\pmb {\phi }} \rangle |^{2} d\pmb {\theta } d\pmb {\phi }.\) Or, from Ref. [40], we obtain

To estimate \(\Vert A(\rho ) \Vert _{2}\) by simulations, we generated 5000 pairs of state vectors. Although this is a large number of state vectors, it is still a small sample of the entire Hilbert space, so the value we obtained for \(\mu (\rho )\) is an approximation. As a consequence, in some simulations the estimated quantity under the square root in Eq. (11) was negative and we obtained a complex value for \( \Vert A(\rho ) \Vert _{2}\). Whenever this occurred, we restarted the simulation. Furthermore, we also used 5000 unitaries to average the cost function.
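The sampling procedure described above can be sketched as follows. The estimator draws pairs of states, averages their fidelities to approximate \(\mu (\rho )\), and then evaluates \(\sqrt{\mu (\rho ) - 1/d}\), the closed form that follows from the purity of the ensemble-averaged state. Because the sample mean can fall below 1/d, the radicand may be negative, which is the origin of the complex values mentioned in the text; here the function returns NaN in that case instead of rerunning the simulation. The two samplers (Haar-random states, and a product of \(R_{Y}\) rotations with angles restricted to an interval [0, width]) and all function names are illustrative assumptions; they are not the Models 1 to 12 used in this article.

```python
import numpy as np

def haar_state(n, rng):
    """Haar-random pure state on n qubits (normalized complex Gaussian vector)."""
    v = rng.normal(size=2 ** n) + 1j * rng.normal(size=2 ** n)
    return v / np.linalg.norm(v)

def ry_product_state(n, width, rng):
    """R_Y(theta_i)|0> on each qubit, with theta_i uniform in [0, width]."""
    state = np.ones(1)
    for theta in rng.uniform(0, width, size=n):
        state = np.kron(state, [np.cos(theta / 2), np.sin(theta / 2)])
    return state

def estimate_a_norm(sampler, n, num_pairs, rng):
    """Monte Carlo estimate of mu(rho) and of sqrt(mu(rho) - 1/d)."""
    fidelities = [
        abs(np.vdot(sampler(rng), sampler(rng))) ** 2 for _ in range(num_pairs)
    ]
    mu = float(np.mean(fidelities))
    radicand = mu - 1 / 2 ** n
    # A negative radicand is a finite-sampling artifact; flag it with NaN.
    norm = float(np.sqrt(radicand)) if radicand >= 0 else float("nan")
    return mu, norm

n, num_pairs = 2, 5000
rng = np.random.default_rng(0)

# Maximally expressive reference: mu should be close to 1/d.
mu_haar, norm_haar = estimate_a_norm(lambda r: haar_state(n, r), n, num_pairs, rng)

# Weakly expressive ensemble: angles confined to [0, 1] radian.
mu_ry, norm_ry = estimate_a_norm(
    lambda r: ry_product_state(n, 1.0, r), n, num_pairs, rng
)

print("Haar states:      mu =", mu_haar, " norm =", norm_haar)
print("restricted R_Y:   mu =", mu_ry, " norm =", norm_ry)
```

For the Haar sampler the estimate of \(\mu (\rho )\) fluctuates around 1/d, so a NaN result is expected in a sizeable fraction of runs; this is the situation in which the simulations in the text were restarted.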

Figures 2, 3, and 4 show the behavior of the right-hand side of Eq. (7), related to the expressivity, and of the average cost function term, the left-hand side of Eq. (7). To produce these figures, quantum circuits with four, five, and six qubits were used, respectively.

In Figs. 5, 6, and 7, we show the behavior of the numerically calculated variance, Var s, the left-hand side of Eq. (9), and of the theoretical value, Var t, the right-hand side of Eq. (9), where we again used four, five, and six qubits, respectively. Here we also used 5000 unitaries to compute the averages.

Conclusion

In this article, we analyzed how the expressiveness of the parametrization affects the cost function. As we observed, the deviation of the average value of the cost function from the fixed value Tr[O]/d has an upper limit that depends on the expressiveness of the parametrization: the more expressive the parametrization is, the more the average of the cost function is concentrated around Tr[O]/d, as stated in Theorem 1. Furthermore, the probability for the cost function to deviate from its mean also depends on the expressiveness of the parametrization, as stated by Theorem 2.

A possible implication of these results is related to the training of VQAs with highly expressive parametrizations. The more expressive the parametrization is, the more the average value of the cost function will be concentrated around Tr[O]/d, and the lower the probability of the cost function deviating from this average; in the limiting case where \( \Vert A(\rho )^{t} \Vert _{2} = 0\), the cost function will be stuck at the value Tr[O]/d. This result is in agreement with the one obtained in Ref. [24], where it was shown that the phenomenon of gradient disappearance is related to parametrizations with high expressivity. However, our results also imply that even if we manage to find an optimization method that does not suffer from the gradient disappearance induced by expressivity, the training of such models would still be impaired by expressivity, since the cost function itself would be concentrated around a fixed value.

Another possible implication of our results is related to quantum machine learning models. In Ref. [43], the authors reported a correlation between expressiveness and accuracy, quantified with Pearson’s correlation coefficient: in general, the greater the expressiveness, the greater the accuracy. However, our results imply that not only is the training of highly expressive parametrized quantum machine learning models difficult, as it will suffer more from the problem of gradient disappearance, as indicated in Ref. [24], but also the cost function itself will become stuck in a region close to the value Tr[O]/d.

To exemplify this statement, let us consider the following scenario. We use a quantum machine learning model (QMLM) to classify handwritten digits, where, given the image of any digit as input \(x_{i}\), we want the model to learn to return the corresponding output \(y_{i}\). To do so, we use as a cost function \(L = \sum _{i=1}^{N}( C_{i}-y_{i} )^{2}\), where \(C_{i}\) is the output of the quantum circuit, described by Eq. (1), given the input \(x_{i}\). The goal when training the QMLM is to make the cost function L zero or as close to zero as possible. However, if we choose the observable such that \(Tr[O]= 0\) and if \( \Vert A(\rho )^{t} \Vert _{2} = 0\), then, from Theorems 1 and 2, we have that \(C_{i} = 0\ \forall i\). So, if, for example, \(y_{i}=1 \) for a given input \(x_{i}\), the model will not be able to learn this output.

Data availability

The numerical data generated in this work are available from the corresponding author upon reasonable request. The associated code is available at https://github.com/lucasfriedrich97/quantum-expressibility-vs-cost-function.

References

1. Lloyd, S. Universal quantum simulators. Science 273, 1073 (1996).

2. Cao, Y., Romero, J. & Aspuru-Guzik, A. Potential of quantum computing for drug discovery. IBM J. Res. Dev. 62, 6 (2018).

3. Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 103, 150502 (2009).

4. Shao, C. A Quantum Model for Multilayer Perceptron. arXiv:1808.10561 (2018).

5. Wei, S. J., Chen, Y. H., Zhou, Z. R. & Long, G. L. A quantum convolutional neural network on NISQ devices. AAPPS Bull. 32, 2 (2022).

6. Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15, 1273 (2019).

7. Deng, D.-L. Quantum enhanced convolutional neural networks for NISQ computers. Sci. China Phys. Mech. Astron. 64, 100331 (2021).

8. Lloyd, S. & Weedbrook, C. Quantum generative adversarial learning. Phys. Rev. Lett. 121, 040502 (2018).

9. Huang, H.-L. et al. Experimental quantum generative adversarial networks for image generation. Phys. Rev. Appl. 16, 024051 (2021).

10. Schuld, M. Supervised Quantum Machine Learning Models are Kernel Methods. arXiv:2101.11020 (2021).

11. Liu, J. et al. Hybrid quantum-classical convolutional neural networks. Sci. China Phys. Mech. Astron. 64, 290311 (2021).

12. Liang, Y., Peng, W., Zheng, Z.-J., Silvén, O. & Zhao, G. A hybrid quantum-classical neural network with deep residual learning. Neural Netw. 143, 133 (2021).

13. Xia, R. & Kais, S. Hybrid quantum-classical neural network for calculating ground state energies of molecules. Entropy 22, 828 (2020).

14. Houssein, E. H., Abohashima, Z., Elhoseny, M. & Mohamed, W. M. Hybrid quantum convolutional neural networks model for COVID-19 prediction using chest X-Ray images. J. Comput. Des. Eng. 9, 343 (2022).

15. Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625 (2021).

16. Friedrich, L. & Maziero, J. Evolution strategies: application in hybrid quantum-classical neural networks. Quantum Inf. Process. 22, 132 (2023).

17. Anand, A., Degroote, M. & Aspuru-Guzik, A. Natural evolutionary strategies for variational quantum computation. Mach. Learn. Sci. Technol. 2, 045012 (2021).

18. Zhou, L. et al. Quantum approximate optimization algorithm: Performance, mechanism, and implementation on near-term devices. Phys. Rev. X 10, 021067 (2020).

19. Fösel, T. et al. Quantum Circuit Optimization with Deep Reinforcement Learning. arXiv:2103.07585 (2021).

20. McClean, J. R. et al. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 4812 (2018).

21. Cerezo, M., Sone, A., Volkoff, T., Cincio, L. & Coles, P. J. Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat. Commun. 12, 1791 (2021).

22. Patti, T. L., Najafi, K., Gao, X. & Yelin, S. F. Entanglement devised barren plateau mitigation. Phys. Rev. Res. 3, 033090 (2021).

23. Marrero, C. O., Kieferová, M. & Wiebe, N. Entanglement-induced barren plateaus. PRX Quantum 2, 040316 (2021).

24. Holmes, Z., Sharma, K., Cerezo, M. & Coles, P. J. Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quantum 3, 010313 (2022).

25. Uvarov, A. V. & Biamonte, J. D. On barren plateaus and cost function locality in variational quantum algorithms. J. Phys. A 54, 245301 (2021).

26. Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nat. Commun. 12, 6961 (2021).

27. Arrasmith, A., Cerezo, M., Czarnik, P., Cincio, L. & Coles, P. J. Effect of barren plateaus on gradient-free optimization. Quantum 5, 558 (2021).

28. Friedrich, L. & Maziero, J. Avoiding barren plateaus with classical deep neural networks. Phys. Rev. A 106, 042433 (2022).

29. Grant, E., Wossnig, L., Ostaszewski, M. & Benedetti, M. An initialization strategy for addressing barren plateaus in parametrized quantum circuits. Quantum 3, 214 (2019).

30. Volkoff, T. & Coles, P. J. Large gradients via correlation in random parameterized quantum circuits. Quantum Sci. Technol. 6, 025008 (2021).

31. Verdon, G. et al. Learning to Learn with Quantum Neural Networks via Classical Neural Networks. https://doi.org/10.48550/arXiv.1907.05415 (2019).

32. Skolik, A., McClean, J. R., Mohseni, M., van der Smagt, P. & Leib, M. Layerwise learning for quantum neural networks. Quantum Mach. Intell. 3, 5 (2021).

33. Kuo, E.-J., Fang, Y.-L. L. & Chen, S. Y.-C. Quantum Architecture Search Via Deep Reinforcement Learning. arXiv:2104.07715 (2021).

34. Friedrich, L. & Maziero, J. Restricting to the Chip Architecture Maintains the Quantum Neural Network Accuracy, If the Parameterization is a 2-Design. arXiv:2212.14426 (2022).

35. Zhenyu, Z. et al. Quantum Error Mitigation. arXiv:2210.00921 (2022).

36. LeCun, Y. The MNIST Database of Handwritten Digits. http://yann.lecun.com/exdb/mnist/ (1998).

37. Zhang, S.-X., Hsieh, C.-Y., Zhang, S. & Yao, H. Differentiable quantum architecture search. Quantum Sci. Technol. 7, 045023 (2022).

38. Schuld, M., Sweke, R. & Meyer, J. J. Effect of data encoding on the expressive power of variational quantum-machine-learning models. Phys. Rev. A 103, 032430 (2021).

39. LaRose, R. & Coyle, B. Robust data encodings for quantum classifiers. Phys. Rev. A 102, 032420 (2020).

40. Sim, S., Johnson, P. D. & Aspuru-Guzik, A. Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv. Quantum Technol. 2, 1900070 (2019).

41. Bergholm, V. et al. PennyLane: Automatic Differentiation of Hybrid Quantum-classical Computations. arXiv:1811.04968 (2018).

42. https://github.com/lucasfriedrich97/quantum-expressibility-vs-cost-function.

43. Hubregtsen, T., Pichlmeier, J., Stecher, P. & Bertels, K. Evaluation of parameterized quantum circuits: On the relation between classification accuracy, expressibility, and entangling capability. Quantum Mach. Intell. 3, 9 (2021).

44. Collins, B. & Śniady, P. Integration with respect to the Haar measure on unitary, orthogonal and symplectic group. Commun. Math. Phys. 264, 773 (2006).

45. Puchała, Z. & Miszczak, J. A. Symbolic integration with respect to the Haar measure on the unitary group. Bull. Pol. Acad. Sci.-Tech. Sci. 65, 1 (2017).

Acknowledgements

This work was supported by the National Institute for the Science and Technology of Quantum Information (INCT-IQ), process 465469/2014-0, and by the National Council for Scientific and Technological Development (CNPq), processes 309862/2021-3 and 409673/2022-6.

Contributions

The project was conceived by L.F. and J.M. The manuscript was written by L.F. and J.M. L.F. proved the main results and made the simulations, with J.M. reviewing them.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Friedrich, L., Maziero, J. Quantum neural network cost function concentration dependency on the parametrization expressivity. Sci Rep 13, 9978 (2023). https://doi.org/10.1038/s41598-023-37003-5

DOI: https://doi.org/10.1038/s41598-023-37003-5
