Abstract

This paper proposes a new gradient-descent algorithm for complex independent component analysis and presents its application to the Multiple-Input Multiple-Output communication systems. Algorithm uses the Lie structure of optimization landscape and toral decomposition of gradient matrix. The theoretical results are validated by computer simulation and compared to several classes of algorithms, gradient descent, quasi-Newton as well as complex JADE. The simulations performed showed excellent results of the algorithm in terms of speed, stability of operation and the quality of separation. A characteristic feature of gradient methods is their quick response to changes in the input signal. The good results of the proposed algorithm indicate potential use in on-line applications.

Similar content being viewed by others

Introduction

The area of application of the complex data is currently very wide. It is commonly used in physics, biomedical sciences, wireless communication, sensor array and signal processing etc. For example, most practical modulation methods as phase-shift keying (PSK) and quadrature amplitude modulation (QAM) are of complex type. These types of digital modulation techniques are used in various wireless communication systems. One technique used in this area of communication is the multi-antenna technique, which is used to improve the performance and robustness of wireless links. The Multiple-Input Multiple-Output (MIMO) system uses the multi-antenna technique on both the transmitting and receiving side. The modulated complex signal reaching the receiving antennas is a mixed signal from the transmitting antennas. The recovery of the useful signal requires use of the mixed signal separation techniques. Blind Source Separation (BSS) is a wide range of techniques for recovering the unobserved sources signals from the observed channel output without any quantitative information of the channel system. The BSS methods have solid theoretical foundations and extensive potential applications1. An excellent overview of the BSS techniques for interference cancellation in communication systems can be found in2. Constant modulus (CM) criterion is probably the best known and most studied higher order statistics based criterion in blind equalization3 and signal separation4,5 areas. It exploits the idea that certain communication signals have the constant modulus property. The Constant Modulus Algorithm (CMA) was initially designed for PSK signals6. In the case of constant modulus signals CMA has proven reasonable performances and desired convergence requirements. On the other hand using the CMA for nonconstant modulus signals such as QAM signals yields degraded performance because the CMA projects all signals points onto a single modulus. In order to improve the performance of CMA for QAM signals a number of modified methods have been introduced such as the algorithm known as MMA7 and constant norm algorithm (CNA) 8 whose CMA represents a particular case. The CMA can also be used in MIMO systems9. However this case is susceptible to the one-to-many problem. The CMA uses the CM feature and it may not identify the desired signal individually when several constant modulus signals coexists and often yield the same signal on the output channel. In order to omit this drawback a lot of extensions for CMA have been introduced. For example Least-squares multi-user CMA10, Recursive least-squares CMA11, kurtosis-based method (MUT)12, CMA using Givens rotation13 and Succesive interference cancellation least- squares CMA14.

A typical CMA algorithm is to minimize the cost function of the form \({J}_{CM}=E\left\{{\left({\left|y\right|}^{2}-\gamma \right)}^{2}\right\}\), where \(\gamma\) is a constellation-dependent parameter. These algorithms are therefore designed for specific digital communication signals, i.e. signals with the same constant modulus \(\gamma\). Therefore, in the available literature, the presented simulation experiments are designed for a small number of signals of the same type, e.g. MIMO 2 × 3 system with transmission of only BPSK signals15, 4 × 2 system with only PSK(4)16 or PSK(8)17, QAM(4)14,18, QAM(16)17 and QAM(64) signals8. In our experiment presented in Chapter 8, we used, among others, the MIMO 5 × 8 system with the transmission of the five above-mentioned digital modulation signals at once. The positive results of the experiment testify high flexibility of the proposed algorithm and the cost function used.

In this paper we deal with another very common technique in BSS known as Independent Component Analysis (ICA). This method and its related Independent Vector Analysis (IVA) are widely used in all kinds of areas requiring signal extraction19. The ICA method depends on the separation of independent components (i.e., sources) and relies on the assumption of their statistical independence. There are many methods for solving the ICA problem. The earlier methods include algebraic methods in which the demixing matrix is derived algebraically from different matrices containing specific signal statistics. In the FOBI20 method, it is the covariance matrix and the matrix based on fourth-order moments, and in the JADE21 method (Joint Approximate Diagonalization of the Eigenvalue matrices) it is cumulative matrices. The alternative approach to the ICA problem is based on the search of the extreme of a specially constructed real cost function in the complex domain. The essence of the construction of the cost function consists in its respective non-linearity generating higher-order statistics. The following approaches are used, i.e., as: Maximum Likelihood, Maximization of Mutual Information, Maximization of non-Gausianity and Information-Maximization framework. All these approaches are closely related to each other22. The ICA algorithms are primarily optimization-iterative algorithms, the speed of which depends to a large extent on the adopted optimization strategy. The gradient algorithms, also known as the steepest descent, use first-order information about the cost function and are characterized by a linear degree of convergence. In Newton or quasi-Newton algorithms where, in addition to first-order information about the cost function, second-order information is used and the degree of convergence is square or even cubic. These algorithms, due to the need to use elements of the Hessian matrix, are characterized by high computational complexity, but due to the speed of convergence, they are much faster than gradient algorithms. A typical example of this type of algorithm is the so-called a fixed point algorithm called FastICA, which was introduced in the complex version in23. The algorithm assumes circular nature of the source signals, i.e., their pdf are symmetrical in the complex plane. A variation of this algorithm for noncircular signals was introduced in24.

On the other hand, unlike Newton algorithms, gradient algorithms are characterized by a high speed of response to changes in the input signal, which means that they have a great potential for use in on-line applications. In classical gradient algorithms, the gradient of the cost function is determined in the Euclidean space. In the case of imposing a constraint on the optimization space, as in the case of the classic ICA (unitarity of the demixing matrix), the Lagrangian type of optimization methods by adding extra-penalty term is used, and the gradient is also determined in the Euclidean space. Such an approach causes that in each iteration the constraint condition is lost and the boundary surface is dropped (the demixing matrix loses the unitarity property). After each iteration, a re-unitarization operation is required to restore the constraint condition and return to the optimization surface. This classic approach does not take into account the special structure of the optimization parameter space. If this space is treated as a differential manifold then this constrained optimization problem can be seen from the point of view of Riemann geometry. In this case, the gradient is defined as the vector tangent to the constrain surface. In this case, also in each iteration step the boundary surface is exited, however the optimization movement always takes place in the tangent direction to the constraint surface which affects the speed and stability of the algorithms. Taking into account the algebraic structure (i.e., group structure) of the ICA model parameter space, the constraint condition is maintained in each iteration step. The classical complex ICA model has the structure of the unitary group \(\mathrm{U}(n)\), i.e., the optimization space is a group of matrices satisfying the condition \(W{W}^{H}={W}^{H}W=I\). This group is closed under the matrix multiplication operation. Thus, when using the multiplicative iteration procedure, the condition of the unitarity of the demixing matrix \(W\) is met. The unitary group \(\mathrm{U}(n)\) with its smooth differential structure forms the Lie group. The curve that minimizes the path between two points on a Riemannian manifold is called a geodesic carve and is equivalent to a straight line in an ordinary flat Euclidean space. Optimization methods based on this convenient optimization space structure are called geodesic methods and can be found in25,26. The optimization motion in this case follows the geodesic curve in each iteration step.

This paper is organized as follows. In section "Background on ICA", a basic model of complex ICA is provided. Various approaches to the ICA problem are presented as well. Section "Lie group and their Lie algebra" is devoted to the general concept of Lie groups and Lie algebras. Riemannian and Lie structure of unitary group, Riemannian gradient, geodesic motion in Lie group of unitary matrices are presented in section "Optimization on unitary group ". The toral geometry in optimization landscape is established in section "Toral geometry in optimization landscape". The computational complexity is studied in section "Computational complexity". The MIMO telecommunication system is described in section "Multiple-Input Multiple-Output systems". The application of the proposed algorithm in the MIMO system and comparison with other ICA algorithms is presented in Chapter 8.

Background on ICA

The classical linear model of ICA is defined as

and in noisy case

where \(x={({x}_{1},\dots ,{x}_{n})}^{T}\in {\mathbb{C}}^{n}\) are only known observed signals, \(A\in {\mathbb{C}}^{n\times n}\) is an \(n\times n\) complex-valued, unknown, invertible mixing matrix, \(s={({s}_{1},\dots ,{s}_{n})}^{T}\in {\mathbb{C}}^{n}\) are unknown complex-valued source signals, i.e., independent components, \(\vartheta \sim CN(0,{I}_{n})\) is circular zero-mean Gaussian noise with covariance matrix \({I}_{n}\) and \({\sigma }^{2}\) is the variance of added noise to the mixed signals. Sources \({s}_{i}\) are assumed to be mutually statistically independent. Sources estimates \(\widehat{s}={({\widehat{s}}_{1},\dots ,{\widehat{s}}_{n})}^{T}\) are given by inverse model (in the noiseless case)

where \(W\cong {A}^{-1}\) is called demixing matrix.

The aim of ICA is to find a demixing matrix \(W\) having only \(N\) realizations of the observed signal \(x\). Above systems can be solved up to complex scale accuracy (i.e., up to phase and modulus) and permutations of \({s}_{\mathrm{i}}\) provides that at most one sources is Gaussian. The classical ICA model assumes that the number of source signals \({s}_{\mathrm{i}}\) is known and is equal to the number of observed signals \({x}_{\mathrm{i}}\). In the general ICA model, this condition does not have to be met and a case in which the number of source signals is different from the number of observed signals is considered. In the extreme case, when a single observed signal is given, the problem is called Single Channel Source Separation, which is also possible to solve27,28,29.

In order to reduce the computational complexity, the whitening (or sphering) process is usually performed on observed signal in the preprocessing stage. In this stage observed signals are decorrelated using eigendecomposition of correlation matrix. It is assumed, without losing generality, that the sources have a mean value of zero and a unit variance, i.e., \(E\left\{s{s}^{H}\right\}=I\). Whitening process is given by \(y={\Lambda }^{-1/2}{U}^{H}\mathrm{x}\), where \(U\) is matrix of eigenvectors and \(\Lambda\) is diagonal matrix with eigenvalues on main diagonal. It is easy to check that whitened signals are decorrelated, i.e., \(E\left\{y{y}^{H}\right\}=E\{{\Lambda }^{-1/2}{U}^{H}x{x}^{H}U{\Lambda }^{-1/2}\}={\Lambda }^{-1/2}{U}^{H}E\{x{x}^{H}\}U{\Lambda }^{-1/2}=I\). Denoting \(V=U{\Lambda }^{-1/2}\) as the whitening transformation matrix it can be written \(y=Vx=VAs=\widetilde{A}s\), where \(\widetilde{A}\) is a new mixing matrix with unitary properties \(E\left\{y{y}^{H}\right\}=\widetilde{A}E\left\{s{s}^{H}\right\}{\widetilde{A}}^{\mathrm{H}}=\widetilde{A}{\widetilde{A}}^{H}=I\). It can be seen that whitening process simplifies the ICA problem from optimization on general linear group \(\mathrm{Gl}\left(n\right)\) (matrix \(W\) satisfies only invertibility property \(\mathrm{det}(W)\ne 0\)) to optimization on unitary group \(\mathrm{U}\left(n\right).\)

There are different approaches to solve ICA problem. Main two of them are optimization of cost function and algebraic methods. The first class includes methods like: Maximum Likelihood estimation (ML)30, Mutual Information Minimization or Information Maximization31 and Negentropy Maximization23. The second class includes, for example, the JADE and FOBI algorithms 20. First class of methods uses nonlinear cost function to archive signal independence. In ML approach the negative log-likelihood function is used as the cost function, which takes the form

where \({w}_{i}\) denotes i-th column of \(W\), \({p}_{i}(\cdot )\) is the pdf function of i-th source random variable \({s}_{i}\). Function \(\ell\left(\cdot \right)\) and \({p}_{i}(\cdot )\) are expressed in dual notation as \(\ell\left(W,{W}^{*}\right)\) and \({p}_{i}({s}_{i},{s}_{i}^{*})\). Using Wirtinger calculus (see Appendix) and the related matrix differential roles it can be shown32 that the gradient matrix takes the form

where \(\varphi \left(Wx\right)\equiv \varphi \left(s,{s}^{*}\right)={\left({\varphi }_{1}\left({s}_{1}, {s}_{1}^{*}\right),\dots ,{\varphi }_{n}\left({{s}_{n},s}_{n}^{*}\right)\right)}^{T}\) and

is the score function or nonlinearity of the i-th source variable. Stochastic gradient update rule for \(W\) can be written as in (Appendix A6)

Its relative (or natural) version introduced by Amari33 takes the form

Assuming \(\varphi \left( s \right) = 2\tanh\left( s \right)\), then this update rule is known as the complex Infomax algotithm31.

Another very important approach to ICA problem uses some non-Gaussianity measure as the cost function22. The natural cost function in that class is negentropy that measure the entropic distance of pdf of the source estimation from Gaussian distribution and can be written as

where \(H\left(\cdot \right)\triangleq -E\{\mathrm{log} p\left(\cdot \right)\}\) is the differential entropy of the given distribution and \(\nu ={\nu }_{R}+i{\nu }_{I}\) is the Gaussian-distributed complex variables with the same variance as source estimate \(s\). Since \(H\left(\nu \right)\) in (9) has a constant value for a given covariance matrix, maximizing negentropy is equivalent to minimizing the entropy of \(H(s)\). The main problem with this approach is that negentropy is computationally very difficult. Definition of negentropy requires knowledge of unknown distribution \(p\left(\cdot \right)\). In practical applications, some negentropy approximations based on the idea of Taylor expansion and maximum entropy methods are used. The Gram–Charlier and the Edgeworth expansions are used usually in this context. They lead to similar approximation based on Chebyshev-Hermite polynomials and higher order cumulants. In22 there is an excellent introduction to this topic. However, this approach leads sometimes, to rather poor approximation due to the fact that sample estimator of higher-order cumulants are very sensitive to outliers and cumulants themselves measure mainly the tails of the distribution and are not very sensitive to structure near the center of the distribution. To overcome this problem, the maximum entropy and nonpolynomial expansion are used, which in real case leads to approximation of negentropy of the form22

where \({G}^{i}, i=1,\dots ,n\) is any set of orthonormal nonquadratic function. Nonquadratic assumption results from the need for integrability of the pdf function obtained in the maximum entropy method and more robustness against outliers. A simple special case of this approximation can be obtained using only one nonquadratic function, which in the complex case takes the form

where \(G: {\mathbb{C}}\to {\mathbb{C}}\) is nonquadratic function which matches the source pdf by rather symbolic relation \({p}_{S}\left(s\right)={p}_{S}\left({s}_{R},{s}_{I}\right)=K\mathrm{exp}\left({\left|G\left(s\right)\right|}^{2}\right)\), where \(K\) is normalizing constant. In classical complex FastICA (cFastICA) algorithm23 cost function takes the form

where in this case nonlinear function \(G: {\mathbb{R}}\to {\mathbb{R}}\) is chosen as a smooth even function, i.e., \(G\left(u\right)=\frac{1}{2}{u}^{2}\) or \(G\left(u\right)=\mathrm{log}(0.1+u)\). It is worth noting that nonlinear function \(G: {\mathbb{C}}\to {\mathbb{C}}\) in (11) uses full complex nature of signals, i.e., phase and magnitude. While nonlinear function in (12) does not preserve phase information and uses only signal’s magnitude. Therefore, cFastICA assumes that the sources have a circular distribution and fails in the case of noncircular sources34.

Expressing cost function in (11) in dual form as

where \(s={W}^{H}x\) and using Wirtinger calculus, it is easy to derive the gradient of this cost function in the form

where \({G}^{^{\prime}}\left(s\right)=\frac{dG}{ds}\) is the derivative of \(G\), so the gradient descent update rule can be expressed as

where \(\mu\) denotes the step size.

More efficient algorithms use second-order information about cost function. In23 cFastICA algorithm was derived using constrained optimization approach and quasi-Newton technique. The cFastICA algorithm update rule takes the form

and \({W}_{n+1}\) must be orthogonalized in every iteration.

In algebraic complex ICA methods as FOBI the demixing matrix is determined algebraically by the simultaneous diagonalization of a covariance matrix and the matrix based on fourth-order moments. The JADE algorithm is a generalization of FOBI. Algorithm uses Givens rotations to transform the set of \({n}^{2}\) fourth-order moments to more diagonal form—two rows and two columns at a time. Unfortunately, it is well known that computational cost of JADE grows quickly with the number of components \(n\). For a comparison of these two methods see35.

Lie group and their Lie algebra

A Lie group is a topological group \(G\) which also has got the properties of a smooth differential manifold of class \({\mathrm{C}}^{\infty }\) where the algebraic and differential structures are compatible, i.e., the mapping \(G\times G\) into \(G\) given by the formula36

is also of class \({\mathrm{C}}^{\infty }\).

A Lie algebra is the linear space \(L\) over the commutative field \(K\), for which a bilinear operation is defined

called Lie bracket, satisfying the conditions

-

(a)

\(\left[a,b\right]=-[b,a]\) (antisymmetry)

-

(b)

\(\left[a,\left[b,c\right]\right]+\left[c,\left[a,b\right]\right]+\left[b,\left[c,a\right]\right]=0\) (Jacobi identity)

The important class of homeomorphic mapping called left and right translation by \(w\in G\) are associated with Lie groups and are defined as

Those homeomorphisms are diffeomorphisms inducing tangent mapping

where \({\Gamma }^{\infty }\left(G\right)\) is the vector field tangent to \(G\). Any vector field \({X\in \Gamma }^{\infty }\left(G\right)\) with property \({\left({L}_{w}\right)}_{*}X=X\) or \({\left({R}_{w}\right)}_{*}X=X\) for any \(w\in G\) is called left or right invariant. The left and right invariant fields are Lie algebra \(\mathfrak{g}\) and this algebra is isomorphic to tangent space \({T}_{e}G\) in the identity element \(e\) of the group \(G\)36. A space \({T}_{e}G\) with this Lie structure is called the Lie algebra of the Lie group. This algebra can be used to parameterize the neighborhood of identity element \(e\) via application of homeomorphism known widely as the exponential map or simply as exponential denoted as \(\mathrm{exp }: \mathfrak{g}\to G\) and given by the expression

where \({\gamma }_{X,e}\) is the integral carve of the left or right invariant field \(X\) on Lie group \(G\) passing through the identity element \(e\) and is called as one-parameter subgroup in \(G\). The inverse of the exponentiation is called the logarithmic mapping \(\mathrm{Log }:\mathrm{G}\to \mathfrak{g}\) and they are locally bijective. Figure 1 shows geometrical relationship between a Lie group and its Lie algebra.

Optimization on unitary group \(U\left( n \right)\)

Complex ICA problem can be effectively considered as the optimization on the unitary group \(\mathrm{U}(n)\) and applying the tools of Riemannian geometry. Matrix representation of the group \(\mathrm{U}\left(n\right)\) is defined as

with identity element \(e=I\).

The one-parameter subgroup \({\gamma }_{X,I}\left(t\right):t\to \mathrm{U}\left(n\right)\) of matrix representation of \(\mathrm{U}\left(n\right)\) satisfies the condition \({{\gamma }_{X,I}{\left(t\right)}^{H}\gamma }_{X,I}\left(t\right)=I\). By differentiating this equation with respect to \(t\), the condition defining the tangent space \({T}_{W}\mathrm{U}(n)\) at the point \(W\) is obtained and takes the form

The Lie algebra \(\mathfrak{u}\left(n\right)\) (of a Lie group \(\mathrm{U}\left(n\right)\)) is obtained by substituting \(W=I\) in (25)

This algebra is a set of skew-Hermitian matrices.

By defining the inner product, i.e., the metric, the Riemann structure is established on the group \(\mathrm{U}\left(n\right)\). Inner product induced from ambient Euclidean space \(\mathcal{A}={\mathbb{C}}^{n\times n}\) and its inner product \(\langle X,Y{\rangle }_{A}=\mathrm{tr}\left({X}^{H}Y\right)\) obtains the form37

and determines the canonical bi-invariant metric on \(\mathrm{U}\left(n\right)\).

Let \(f:\mathrm{U}\left(n\right)\to {\mathbb{R}}\) be a differentiable real-valued function defined on \(\mathrm{U}\left(n\right)\). The maximum rate of change \(f\) is obtained in the direction of the negative conjugate gradient of the function expressed by rather symbolic form as

where \({B}_{W}\) is the Hessian term. In gradient descent algorithms \({B}_{W}\) is the identity matrix \(I\), while in Newton or quasi-Newton methods \({B}_{W}\) is exact or some approximation of Hessian tensor. In the Riemannian manifold, a contravariant version of the gradient vector is considered. The conjugate gradient of the cost function \({\nabla }_{{W}^{*}}f\) in this version can be derived from

where \({\widehat{\nabla }}_{{W}^{*}}f\in {T}_{W}\mathrm{U}\left(n\right)\) is the contravariant version of the sought conjugate gradient \({\nabla }_{{W}^{*}}f\in {T}_{W}\mathcal{A}\) and \(X\in {T}_{W}\mathrm{U}\left(n\right)\) is any tangent vector. Taking into account the inner product on an ambient Euclidean space \(\mathcal{A}={\mathbb{C}}^{n\times n}\) and on an embedded manifold \(\mathrm{U}\left(n\right)\) defined above, it can be shown38 that the contravariant gradient of the cost function on Lie group \(\mathrm{U}\left(n\right)\) at the point \(W\) can be expressed as

In the Riemann manifold, the curve minimizing length between two points is called geodesic. Geodesic on unitary group of matrices emanating from \(I\) in \(X\) direction can be expressed using exponential map36

Exponentiation of \(n\times n\) matrices is given here by convergent power series \(\mathrm{exp}\left(X\right)={\sum }_{n=1}^{\infty }\frac{{X}^{n}}{n!}\). The left and right translation in (19) and (20) maps geodesic in point \(W\) to geodesic in identity \(I\) by the relation \({\gamma }_{X,I}\left(t\right)={W}^{-1}{\gamma }_{X,W}\left(t\right)={W}^{H}{\gamma }_{X,W}\left(t\right)\) and \({\gamma }_{X,I}\left(t\right)={\gamma }_{X,W}\left(t\right){W}^{-1}={\gamma }_{X,W}\left(t\right){W}^{H}\), therefore, the geodesic emanating from \(W\) has the form

and

using left and right translation, respectively.

In the case of matrix representation of unitary group \(\mathrm{U}(n)\) relationship (32) and (33) can be expressed as

and

where \(R\) is a complex rotation matrix and establishes a local parameterization. Gradient descent algorithms using this type of parameterization are called geodesic (or geodesic flow) methods. In this method the Lie algebra element is mapped exactly to the Lie group elements. The most popular geodesic parameterization used to describe a small neighborhood of the point in \(\mathrm{U}(n)\) is, the previously mentioned, exponential that can be expressed using Taylor expansion

In practice some approximation of exponential are used. An overview of the different types of approximations can be found in39. The most efficient of them are: diagonal Páde, Generalized Polar Decomposition, truncated Taylor series. Another popular parameterization method is Cayley transform of a form \(R=(I-tX{)}^{-1}(I+tX)\). Using Taylor expansion of \(R\) it can be shown that up to the second-order term Cayley transform is equivalent to the matrix exponential37.

The idea of the standard geodesic flow minimization procedure is to perform an optimization motion (in the Lie algebra \(\mathfrak{u}\left(n\right))\) in the direction of the negative gradient \(-{\widehat{\nabla }}_{{W}^{*}}f\) defined in (30) and then map to the Lie group \(\mathrm{U}\left(n\right)\) using some kind of local parameterization. The search direction is obtained by translating the contravariant gradient \({\widehat{\nabla }}_{{W}^{*}}f\in {T}_{W}\mathrm{U}\left(n\right)\) to the identity \(I\), i.e., into the Lie algebra \(\mathfrak{u}\left(n\right)\). Using expression (30) and tangent map (21) the search direction takes the form

which is obviously a skew-Hermitian matrix.

A set of skew-Hermitian matrices \({\mathfrak{u}}_{\Omega }\left(n\right)=\{t\Omega \left|\Omega \in \mathfrak{u}\left(n\right), t\in {\mathbb{R}}\}\right.\) also forms a Lie algebra known as the one-parameter subalgebra of the Lie algebra \(\mathfrak{u}\left(\mathrm{n}\right)\). In this case, however, it is the abelian (commutative) algebra associated with one-parameter subgroup \({R}_{\Omega }(t)=\mathrm{exp}(t\Omega )\). The one-parameter optimization methods consist of searching for a minimum of the cost function along the subalgebra \({\mathfrak{u}}_{\Omega }\left(n\right)\), i.e., for a chosen search direction \({\Omega }_{0}\) which corresponds to the search along the subgroup \({R\Omega }_{0}(t)\). After finding the \({t}_{opt}\), i.e., the value of the \(t\) parameter for which \(f\) reaches the minimum in the \({{W}_{0}R\Omega }_{0}(t)\) subgroup, where \({W}_{0}\) is the starting point, a new search direction \({\Omega }_{1}\) is calculated and the procedure is repeated until convergence. Optimization scheme has the form.

Toral geometry in optimization landscape

The procedure described above requires the use of computationally expensive matrix exponentiation at each step. The decomposition of the optimization movement in the \(\mathrm{U}\left(n\right)\) group into commutating rotations in complex orthogonal planes can be performed by any techniques leading to the diagonalization of the gradient matrix. The choice of Schur decomposition is dictated by the very structure of this type of decomposition. Schur decomposition produces a triangular matrix, which is assumed to be less computationally expensive than decomposition producing a diagonal matrix. The skew-Hermity of the gradient matrix (37) causes the Schur decomposition to produce, in this case, also a diagonal matrix, but with a lower computational cost than strict diagonalization techniques.

A well known fact is40

Theorem 1

If \(A={\mathbb{C}}^{n\times n}\) is a normal matrix, then there exists a unitary matrix \(Q={\mathbb{C}}^{n\times n}\) such that

is diagonal and each \({\lambda }_{i}\) are complex eigenvalues of \(A\).

Corollary 1

Unitary, Hermitian and skew-Hermitian matrices are normal and therefore diagonalizable.

Due to, that all skew-Hermitian matrices have purely imaginary eigenvalues \({\lambda }_{i}=i{\varphi }_{i}\), the gradient matrix \(\Omega\) represented in (37) can be decomposed in the form

where \(B=\mathrm{diag}(i{\varphi }_{1},\dots ,i{\varphi }_{m})\) is a matrix with imaginary eigenvalues on the main diagonal and zeros elsewhere and \(U\in \mathrm{U}(n)\). Because the matrix \(B\) can be decomposed in the form \(B={B}_{1}+\dots +{B}_{n}\) where \({B}_{i}=\mathrm{diag}(0,\dots ,i{\varphi }_{i},\dots ,0)\), therefore, \(W=\mathrm{exp}\Omega\) can be decomposed into the commutative mutually orthogonal rotations \({W}_{i}\)

where \({R}_{i}={e}^{i{\varphi }_{i}}=\mathit{cos}{\varphi }_{i}+i\mathit{si}n{\varphi }_{i}\) and \({W}_{i}=U\mathrm{diag}(1,\dots ,1,{R}_{i},1,\dots ,1){U}^{H}.\)

In this case, exponential of the gradient matrix \(\Omega\) is reduced to a simple and inexpensive calculation of function \(sin{\varphi }_{i}\) and \(cos{\varphi }_{i}\), which reduces the complexity of the optimization algorithm. The rotation matrices \({W}_{i}\) commute \({[W}_{i},{W}_{j}]=0\) for \(i\ne j\), so the optimization process can be performed in Lie algebra \(\mathfrak{u}\left(n\right)\).

In the special case of \(\mathrm{U}(2)\), a diagonal form of the skew-Hermitian matrix can be expressed as

Hence, the unitary matrix \(W\) has the form

This matrix represents the rotations in two mutually orthogonal complex plane and can be viewed as the geometry of the familiar doughnut shape, i.e., the toral geometry (Fig. 2).

This concept can be naturally transferred to the case of \(\mathrm{U}(n)\) for \(n>2\). The rotation matrix \(W\) expressed in the form (41) represents optimization motion in one-parameter subgroup \(\mathrm{exp}(t\Omega )\) as the rotation in n-mutually orthogonal complex plane. The commuting matrices \({W}_{i}\) represent this independent complex plane of rotations and it geometrically coincides with n-dimensional (maximal) torus in \(\mathrm{U}(n)\). The optimization procedure based on this concept consists of translating a skew-Hermitian gradient matrix \(\Omega \in \mathfrak{u}\left(n\right)\) to the diagonal form, thereby establishing the toral subalgebra. The new rotation matrix \(W\) is evaluated by determining n values of \(sin\) and \(cos\) function which correspond to n planes of rotations. Using some line-search procedure, the optimal step size \({t}_{opt}\) is found which minimizes the cost function and then the new skew-Hermitian gradient matrix \(\Omega^{\prime}\) is determined and again translated to the diagonal form which establishes the new toral subalgebra. This procedure is repeated until convergence.

Computational complexity

In this section, the computational complexity of the proposed ICA algorithm is examined and compared with the complexity of other popular ICA algorithms in the case of \(n\times m\) MIMO system. Most ICA algorithms consist of two main steps: the pre-processing stage including centering and whitening and a second stage being an optimization loop. Whitening stage includes the covariance matrix calculation, the eigenvalue decomposition (ED) and the computation of whitened signals, where the ED dominates the computational complexity through the entire pre-processing stage. Computational complexity of complex whitened process with ED calculation is equal to \(2N{m}^{2}+\frac{16}{3}{m}^{3}+4Nnm\) flops, where in the estimation we made the assumption that 1 complex multiplication is equal to 4 real floating point operations41. The optimization stage is primarily an iterative loop consisting mainly of calculating the gradient matrix of the cost function and updating the separation matrix via matrix exponential. There are many alternative methods to accurately and approximately matrix exponential39. In the simulation the Matlab's expm function was used that uses one of the most efficient methods, i.e., the diagonal \((q,q)\)-Padé approximation with scaling and squaring42. This approximation, in real case requires about \(\left(q+j+\frac{1}{3}\right){m}^{3}\) flops to evaluate, where \(j\) denotes the scaling factor39. In typical applications and in general matrix it is estimated between \(20 {m}^{3}\) and \(30{\mathrm{ m}}^{3}\) and for skew-symmetric matrices around \(10 {m}^{3}\)43,44. Moler and Van Loan in39 estimate the complexity of real Schur decomposition to be around \({5m}^{3}\) based on the complexity of the QR decomposition used to create the similarity eigenvectors matrix. By using the most computationally efficient and numerically stable approach for QR decomposition, i.e., the modified Gram-Schmidt procedure the complexity cost can be reduced to \({2m}^{3}\)45. The computational complexity of the individual stages of the proposed algorithm is presented in Table 1, where we estimate the complexity of the matrix exponential as \(4\cdot 2{m}^{3}\).

Based on this analysis, the computational cost of geodesic complex ICA algorithm with diagonal \((q,q)\)-Padé approximation using Matlab's expm function can be estimated as \({\mathrm{C}}_{\mathrm{w}}+\left(16N{m}^{2}+12{m}^{3}+4\left(q+j+\frac{1}{3}\right){m}^{3}\right)it\) flops, where \({\mathrm{C}}_{\mathrm{w}}\) is the complexity of preprocessing stage, and \(it\) is the number of iterations of optimization loop. The computational cost of the proposed geodesic complex ICA algorithm with Schur decomposition can be estimated as \({\mathrm{C}}_{\mathrm{w}}+\left(16N{m}^{2}+12{m}^{3}+{8m}^{3}\right)it\) flops.

The computational complexity of the classic Infomax algorithm used in our experiment (section "MIMO systems' simulation results") is equal to \({\mathrm{C}}_{\mathrm{w}}+4\left({m}^{2}+{m}^{3}+4m+5Nm\right)it\), where \(N\) is the number of data samples. The computational cost of fixed-point process (24) is \(12{m}^{2}N\) so the numerical complexity of symmetric complex FastICA algorithm is equal to \({\mathrm{C}}_{\mathrm{w}}+4\left({n}^{3}+\left(\frac{16{m}^{3}}{3}+ {m}^{2}+3N{m}^{2}\right)it\right)\) flops41. In Jacobi-like algorithms such as JADE the second stage is usually joint orthogonal diagonalization of the set of \(M\) matrices of cummulants of the size \(m\times m\). Its numerical complexity is equal to \(2Im\left(m-1\right)(4Mm+17M+4m+75)\) flops, where \(I\) stands for the number of executed sweeps and \(M\) is the number of matrices to be jointly diagonalized. Computational complexity of JADE algorithm requiring computation of \(2q\)-th order cumulant of \(m\)-dimensional random process which is equal to \({\mathrm{C}}_{\mathrm{w}}+12N{f}_{4}\left(m\right)+12N{m}^{2}+min\left(\frac{16{m}^{6}}{3},24{m}^{3}\left({m}^{2}+3\right)+2Im\left(m-1\right)(75+21m+4{m}^{2})\right)\) flops (in complex case) where \({f}_{4}\left(m\right)\) denotes the number of free entries of the cumulant array and is given as a function of \(m\). A detailed analysis of the computational complexity of popular ICA algorithms can be found in41.

The results of the computational complexity analysis of the algorithms used in the simulation are presented in Table 2.

Assuming that \(N>>m\) the leading component (per iteration) in the estimates shown in Table 2 is of the order of \(O(N{m}^{2})\). In the case of the proposed ICA algorithm, this component is \(16N{m}^{2}\) using both (in the exponentiation step) the Schur decomposition and the expm function. The difference is only in the computational cost of the exponentiation operation, which is \(8{m}^{3}\) in the former case and about \(40{m}^{3}\) in the latter. In the case of the Infomax algorithm, the leading component is \(20Nm\), however, it should be noted that the convergence of this type of classical gradient algorithms is achieved with a much higher number of iterations \(it\), so the total computational cost is higher than for the other algorithms. In the case of the JADE algorithm, the leading component is \(12N{m}^{2}\) and it is smaller than in the proposed algorithm however, the second leading component is even up to the order of \(O\left({m}^{6}\right)\), therefore, its computational complexity strongly depends on the number of transmitted signals \(m\), which is a well-known drawback of algebraic ICA algorithms. The computational cost of the cFastICA algorithm is less than the proposed one and its two leading components are \(12N{m}^{2}+(48{m}^{3})/3\) however, as shown in 46, this algorithm with the number of transmitted signals \(m\ge 6\) is characterized by instability and non-convergence, which were not observed in the case of the proposed approach.

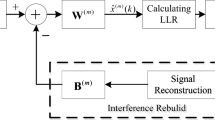

Multiple-Input Multiple-Output Systems

Multiple antenna techniques are used to improve the performance and robustness of wireless links in the telecommunication field. The Multiple-Input Multiple-Output system uses an array of antennas for both transmitting and receiving end. This system gives higher data rate, higher transmit and receive diversity through spatial multiplexing in wireless communication systems47. Wireless communication is currently facing a lot of problems due to increasing levels of interference. Multipath is a propagation phenomenon in which radio signals reach the receiving antenna along two or more paths. The main causes of multipath are atmospheric, dispersion, reflection from water bodies and ground objects such as mountains and buildings. Delayed signals are the results of reflection from terrain features such as trees, buildings or hills. These delayed waves interfere with the direct wave and causes interference that degrades network performance. In radio communication systems, i.e. GSM multipath can cause errors for example inter-symbol interference and affect the quality of communication. Copies of the signal coming via different paths can undergo different attenuation, distortions, delays and phase shift. This causes harmful interference which can in turn significantly reduce the strength of the received signal. This phenomenon is called fading. Fading is usually modeled as a time-varying random change in the amplitude and phase of the transmitted signal. There are several basic fading models such as Weibull, Nakagami, Rician, Log-Normal Shadowing or Rayleigh fading model48. The last model is quite reasonable for scattering mechanisms where there are many small reflections.

The time invariant MIMO channel can be described similarly to the noisy ICA model (2)

where \(x\in {\mathbb{C}}^{{n}_{t}}\), \(y\in {\mathbb{C}}^{{m}_{r}}\) and \(\vartheta \sim CN(0,{{\sigma }^{2}I}_{{n}_{t}})\) denoted the transmitted signal, received signal and circular symmetric Gaussian noise vector with zero mean and covariance matrix \({{\sigma }^{2}I}_{{n}_{t}}\), respectively. The \({n}_{t}\) and \({m}_{r}\) denoted the number of transmitting and receiving antennas and \(H\in {\mathbb{C}}^{{n}_{t}\times {m}_{r}}\) is the channel matrix. Element \({h}_{ij}\) of the channel matrix \(H\) is the channel gain from transmitting antenna \(j\) to receiving antenna \(i.\)

A very common MIMO fading model is the independent identically distribute (i.i.d.) Rayleigh fading model where entries of the channel gain matrix \(H\) are i.i.d. and circular symmetric complex Gaussian. Channel taps are independent random coefficients with power distributed according to Rayleigh distribution.

MIMO systems' simulation results

In this section the proposed algorithm was used for separation independent signals in MIMO system. The choice of the research facility in the form of the MIMO system was dictated by the ease of interpretation and evaluation of the results of the cICA algorithms. The graphical presentation of the results as in Fig. 3 allows for a quick evaluation of the signal separation results, which in other cases is not always easy and possible. In addition, the experiment was to show an example of the use of the proposed cICA algorithm in on-line applications where the speed of algorithms is of key issues. In the case of MIMO systems, other solutions such as coherent decomposition are used with great success. However, it should be emphasized that the purpose of the experiment was not to compare the proposed separation algorithm with the solutions used in MIMO systems, but only to compare it with other cICA-type algorithms in the context of mixed complex signals from a real system.

The proposed algorithm (denoted as Schur) was compared with the same algorithm but using exponential map (denoted as Exp) and with the classical Euclidean gradient descent algorithm (denoted as Euclid) which enforces unitarity of \(W\) after every iterations and with the Euclidean gradient algorithm with extra penalty term (denoted as EuclidPen). In addition, the cFastICA and complex JADE algorithms were tested, as representatives of quasi-Newton and algebraic ICA algorithms, respectively. The standard Matlab expm function was used in the Exp algorithm to map from Lie algebra \(\mathfrak{u}\left(n\right)\) to Lie group \(\mathrm{U}\left(n\right)\).

The simulation uses the classic form of the cost function based on the negentropy approximation expressed in (12) and presented in Section "Lie group and their Lie algebra". The nonlinear function was chosen in the form \(\mathrm{G}\left(u\right)=\frac{1}{2}{u}^{2}, u={\left|Wx\right|}^{2}\). The choice of such non-linearity was made on the basis of46, where the high efficiency of the application of such non-linearity in the algorithms of blind separation of digitally modulated signals was demonstrated.

A number of \({n}_{t}\) independent zero-mean signals are sent by using \({n}_{t}\) antennas and they are received by \({m}_{r}\) receiving antennas. In the experiment a frequency flat MIMO channel matrix \(H\) with the Rayleigh fading and AWGN model was used. The case of a symmetrical MIMO channel with \({n}_{t}={m}_{r}=n\) sources and a non-symmetrical MIMO channel with \({n}_{t}<{m}_{r}\) was simulated. In the first case, the operation of the algorithms was tested with the use of a different number of transmitted signals from \(n=2\) to \(n=8\). In the case of a non-symmetrical MIMO channel, the cases \({n}_{t}\times {m}_{r}=2 \times 3\), \(3 \times 5\) and \(5 \times 8\) were tested. The \(N=5000\) samples of the phase shifted keying signals, binary BPSK and PSK(8), quadrature amplitude modulated signals QAM(4), QAM(16) and QAM(64) were used. Figure 3a and b show the transmitted and received signals, respectively.

The software was implemented in Matlab 7.9 on a PC (Intel i7 2.8 GHz CPU, 8 GB RAM). An example of the simulation result using proposed algorithm is shown in Fig. 3c. In the line search stage an adaptive step-size selection procedure was used with bracketing and selection phases (B-S) as in49 with complex strong Wolf conditions and quadratic interpolation of the cost function. The experiment showed, however, despite of the small number of iterations, large convergence times of the algorithm which proves the high computational cost of multiple evaluation of cost function in B-S stage. So, the optimal step size \(\mu\) was chosen experimentally, as in46, and the rest of the experiment was carried out for the fixed \(\mu =0.5\). The convergence criterion was used as in the cFastICA algorithm23 denoted as F criterion. The M criterion which additionally takes into account the convergence of the cost function was also alternatively used. The F criterion is a measure of \(W\) deviation from unitarity, while in M criterion an additional condition of cost function convergence has been introduced in the form: if \({J}_{k}-{J}_{k-1}<{\varepsilon =10}^{-4}\) break.

In performance analysis, performance indicators, both classic for ICA methods and those with the application context, were taken into account. In the first part of the experiment, low-power noise was used, i.e. the signal to noise ratio was SNR = 30 dB. Performance of separation was measured by classical parameter more suitable for the noiseless ICA model, i.e. Amari Performace Index (API) defined as33

where \({p}_{ij}\) is \(\left(i,j\right)\)-th element of the global system matrix \(P=W{A}^{-1}\). In the second part of the experiment, an analysis of the separation quality at higher and various noise powers was carried out. In this case, an adequate performance indicator is the signal-to-interference-plus-noise ratio (SINR), for the k-th estimated signal with unit variance, defined by50

Traditionally, performance gain in MIMO systems is measured by bit error rate (BER). Performance analysis was therefore supplemented with an analysis of the impact of the SNR on the BER parameter in the case of AWGN and Rayleigh fading channel. The analysis of SINR and BER required removing the ambiguity of order, scale and sign of separated signals, which is inherent in all ICA methods. In addition, the BER analysis also required removing the phase ambiguity of the separated signals.

The results of running time of the algorithms and API were averaged over 100 trials. Examples of evolution of the cost function and API versus the number of iterations are presented in Fig. 4a and b, respectively. The results of the obtained averaged convergence times and API relative to the number of transmitted signals for tested proposed algorithm and of comparison with classic SD, cFastICA and complex JADE in application to symmetric MIMO system are presented in Fig. 5a and b for M and F criterion, respectively.

First of all, it should be noted that in general the convergence times of ICA algorithms in the case of the simulated MIMO channel are longer than in the case of the linear complex ICA problem presented in46, which proves the greater complexity of the simulated problem.

For the symmetrical MIMO channel, shorter convergence times of the algorithms while maintaining good separation quality (i.e., API) were obtained using the M convergence criterion (Fig. 5a). In the case of a symmetrical MIMO channel and for \(n<5\), the highest convergence speed was achieved for the JADE algorithm. The proposed Schur algorithm had the highest convergence speed among the tested algorithms for \(n\ge 7\) signals (speed increase of about 5.3% over JADE algorithm). The cFastICA algorithm gave good results in terms of convergence speed and separation quality for \(n<6\). For the number of transmitted signals \(n\ge 6\), cFastICA did not converge in the set maximum number of iterations, i.e., 200 iterations. The Euclid and EuclidPen algorithms do not operate in the appropriate parameter space, so the convergence speed was lower compared to the other tested algorithms.

In the case of a non-symmetrical MIMO channel, the separation quality using the M criterion with the parameter \({\varepsilon =10}^{-4}\) was not sufficient. Therefore, the value of this parameter was reduced to the level \({\varepsilon =10}^{-5}\), which ensured a similar quality of signal separation as in the symmetrical case. The results of the obtained averaged convergence times relative to the type of MIMO channel for tested algorithms in application to non-symmetric MIMO system are presented in Fig. 6a and b for M and F criterion, respectively. It should be noted that in the non-symmetrical case, the JADE algorithm completely failed in each of the tested MIMO channel types. The cFastICA algorithm worked correctly and the convergence speed was the highest when using the F criterion for the \(5 \times 8\) MIMO channel. In the case of \(2 \times 3\) and \(3 \times 5\), the fastest convergence was noted for the proposed Schur algorithm. When using the M criterion, the convergence speed of all tested algorithms was higher than that of cFastICA, and the highest speed in each of the tested non-symmetrical MIMO channel types was achieved by the proposed Schur algorithm (Fig. 6a).

Figures 7 show a comparative analysis of the SINR vs SNR parameter for all tested algorithms for the symmetrical 3 × 3 and non-symmetrical 5 × 8 MIMO system with AWGN and Rayleigh fading channel. The figures do not show the results for the Exp algorithm due to the fact that the results were identical to those obtained for the proposed Schur algorithm. Figure 8 shows the obtained BER values for each of the extracted signals separately for the case of the non-symmetrical 5 × 8 system. As could be expected, the largest error was obtained for signals with the most complicated constellation scheme, i.e. for QAM(16) and QAM(64) signals, which was also reflected in the results of the average BER value presented in Figs. 9.

Figure 9a was obtained for AWGN channel and the symmetrical 3 × 3 system where the BPSK, QAM(4) and PSK(8) signals were used, while Fig. 9b for the non-symmetrical 5 × 8 system where the QAM(16) and QAM(64) signals were additionally transmitted. As can be seen from the presented analysis of SINR and BER parameters, the proposed method is characterized by the highest separation quality parameters among the tested ICA algorithms for both symmetrical and non-symmetrical MIMO systems.

Based on the results of the experiment, it was found that the convergence speed of the proposed Schur algorithm in the case of a symmetrical MIMO channel is the highest among the tested algorithms in the case of a larger number of transmitted signals (\(n\ge 7\)). The quality of separation (API, BER and SINR) in this case of all tested algorithms was similar as shown in Figs. 5, 7 and 9. The proposed algorithm performed particularly well in the non-symmetric case. In each of the tested cases with M criterion the greatest convergence speed was achieved. The highest SINR result (Fig. 7) and the lowest BER result (Fig. 9). The average increase in convergence speed compared to the cFastICA algorithm was 56% and 25% compared to the other gradient algorithms (Fig. 6). It should be noted that the experiment also showed an increase in the speed of the exponentiation operation using toral decomposition of the gradient matrix compared to the standard Matlab expm procedure. The average increase in the speed of the Schur algorithm was 7% (6,6% for symmetrical and 7,8% for non-symmetrical case), which proves the lower computational cost of the proposed exponentiation procedure.

An important observation is that the classical representatives of ICA algorithms from the Newton and algebraic groups, i.e. cFastICA and complex JADE, which worked well in the case of linearly mixed signals46, failed in the case of the symmetrical (and large number of signals) and non-symmetrical MIMO systems, respectively. The gradient flow algorithms Exp and the proposed Schur in each of the tested cases gave good results both in terms of separation quality and speed, which proves the high universality of the proposed method and potential practical use in on-line applications.

Conclusion

In this paper, geodesic flow optimization algorithm with toral decomposition have been introduced and tested in the MIMO telecommunication system. This type of decomposition establishes in Lie algebra \(\mathfrak{u}\left(n\right)\) a toral subalgebra \(\mathfrak{t}(p)\), which allows for low computationally-expensive mapping to Lie group \(\mathrm{U}\left(n\right)\). The theoretical part of this paper presents Riemann and Lie structure of the group \(\mathrm{U}\left(n\right)\). The method of introducing the toral subalgebra \(\mathfrak{t}(p)\) using the diagonalization of skew-Hermitian gradient matrix is also presented. In the experimental part, the proposed algorithm was tested in the MIMO system and its operation was compared with other SD algorithms, as well as with the classic cFastICA and JADE algorithms. The simulation showed good results in terms of speed of operation and quality of separation, especially in an nonsymmetrical MIMO system. The experiment showed that the proposed method is a high-performance, low-cost, and therefore, fast algorithm that enables the solution of the complex ICA problem in practical application.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Luo, Z., Li, C. & Zhu, L. A comprehensive survey on blind source separation for wireless adaptive processing: Principles, perspectives, challenges and new research directions. IEEE Access 6, 66685–66708 (2018).

Raju, K. et al. Blind Source Separation for Interference Cancellation in CDMA Systems (Helsinki University of Technology, 2006).

Asharif, F. et al. Performance improvement of constant modulus algorithm blind equalizer for 16 QAM modulation. Int. J. Innov. Comput. Inf. Control 7(4), 1377–1384 (2013).

Li, C., Zhu, L., Luo, Z., Zhang, Z. & Yang, Y. Effective methods and performance analysis on data transmission security with blind source separation in space-based AIS. China Commun. 19(4), 154–165 (2022).

Khosravy, M. et al. Probabilistic Stone’s Blind Source Separation with application to channel estimation and multi-node identification in MIMO IoT green communication and multimedia systems. Comput. Commun. 157(1), 423–433 (2020).

Godard, D. Self-recovering equalization and carrier tracking in two-dimensional data communication systems. IEEE Trans Commun. 28(11), 1867–1875 (1980).

Oh, K. N., & Chin, Y. O. Modified constant modulus algorithm: blind equalization and carrier phase recovery algorithm. In Proceedings IEEE International Conference on Communications ICC'95. IEEE. pp. 498–502 (1995).

Ikhlef, A., Le Guennec, D., & Palicot, J. Constant norm algorithms for MIMO communication systems. In 2005 13th European Signal Processing Conference (pp. 1–4). IEEE (2005).

Sansrimahachai, P., Ward, D. B., & Constantinides, A.G. Multiple-input multiple-output least-squares constant modulus algorithms. In GLOBECOM'03. IEEE Global Telecommunications Conference (IEEE Cat. No. 03CH37489). IEEE. pp. 2084–2088 (2003).

Leary, J. Least-squares multi-user CMArray: A new algorithm for blind adaptive beamforming. In Conference Record of the Thirty-First Asilomar Conference on Signals, Systems and Computers (Cat. No. 97CB36136). IEEE. pp. 902–905 (1997)

Kang, X. F., Yang, J. W. & Shuo, M. Blind adaptive receiver based on RLS-CMA for TH-UWB system. J. China Univ. Posts Telecommun. 18(6), 38–43 (2011).

Papadias, C. B. Globally convergent blind source separation based on a multiuser kurtosis maximization criterion. IEEE Trans. Signal Process. 48(12), 3508–3519 (2000).

Ikhlef, A. et al. Constant modulus algorithms using hyperbolic Givens rotations. Signal Process. 104, 412–423 (2014).

Sansrimahachai, P., Ward, D. B. & Constantinides, A. G. Blind source separation of instantaneous MIMO systems based on the least-squares constant modulus algorithm. IEE Proc. Vis. Image Signal Process. 152(5), 616–622 (2005).

Shi, K. & Zhang, X. Blind equalization of MIMO systems based on orthogonal constant modulus algorithm. J. Electron. 23, 181–183 (2006).

Xiang, Y. Blind source separation based on constant modulus criterion and signal mutual information. Comput. Electr. Eng. 34(5), 416–422 (2008).

Ikhlef, A. & Le Guennec, D. A simplified constant modulus algorithm for blind recovery of MIMO QAM and PSK signals: A criterion with convergence analysis. EURASIP J. Wirel. Commun. Netw. 2007(1), 090401 (2007).

Castedo, L., Escudero, C. J. & Dapena, A. A blind signal separation method for multiuser communications. IEEE Trans. Signal Process. 45(5), 1343–1348 (1997).

Guo, R., Luo, Z. & Li, M. A survey of optimization methods for independent vector analysis in audio source separation. Sensors 23(1), 493 (2023).

Cardoso, J.- F. Source separation using higher order moments. In International Conference on Acoustics, Speech, and Signal Processing. IEEE. pp. 2109–2112 (1989).

Cardoso, J. –F., & Souloumiac, A. Blind beamforming for non Gaussian signals. In IEE proceedings F (radar and signal processing). IET Digital Library. pp. 362–370 (1993).

Aapo, H., Juha, K. & Erkki, O. Independent Component Analysis (Wiley, 2001).

Bingham, E. & Hyvärinen, A. A fast fixed-point algorithm for independent component analysis of complex valued signals. Int. J. Neural Syst. 10(1), 1–8 (2000).

Novey, M. & Adali, T. On extending the complex FastICA algorithm to noncircular sources. IEEE Trans. Signal Process. 56(5), 2148–2154 (2008).

Mika, D. & Jozwik, J. Lie group methods in blind signal processing. Sensors 20(2), 440 (2020).

Plumbley, M. D. Geometrical methods for non-negative ICA: Manifolds, Lie groups and toral subalgebras. Neurocomputing 67, 161–197 (2005).

Dariusz, M. I. K. A. & Kleczkowski, P. ICA-based single channel audio separation: new bases and measures of distance. Arch. Acoust. 36(2), 311–331 (2011).

Mika, D., Budzik, G. & Jozwik, J. Single channel source separation with ICA-based time-frequency decomposition. Sensors 20(7), 2019 (2020).

Mika, D., & Kleczkowski, P. Automatic clustering of components for single channel ICA-based signal demixing. Lisbon, Portugal. s.n., pp. 5350–5359 (2010)

Cardoso, J. -F., & Adali, T. The maximum likelihood approach to complex ICA. In 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings. IEEE. pp. V–V (2006).

Calhoun, V., & Adali, T. Complex infomax: Convergence and approximation of infomax with complex nonlinearities. In Proceedings of the 12th IEEE Workshop on Neural Networks for Signal Processing. IEEE. pp. 307–316 (2002).

Li, H. & Adali, T. T. Algorithms for complex ML ICA and their stability analysis using Wirtinger calculus. IEEE Trans. Signal Process. 58(12), 6156–6167 (2010).

Amari, S. et al. A new learning algorithm for blind signal separation. In Advances in Neural Information Processing Systems (eds Touretzky, D. et al.) (Morgan Kaufmann Publishers, 1996).

Novey, M. & Adali, T. Complex ICA by negentropy maximization. IEEE Trans. Neural Netw. 19(4), 596–609 (2008).

Miettinen, J. et al. Fourth moments and independent component analysis. Stat. Sci. 30(3), 372–390 (2015).

Wojtyński, W. Grupy i algebry Liego. Warszawa: PWN, 1986. Vol. Biblioteka matematyczna t.60.

Abrudan, T. E., Eriksson, J. & Koivunen, V. Steepest descent algorithms for optimization under unitary matrix constraint. IEEE Trans. Signal Process. 56(3), 1134–1147 (2008).

Absil, P. A., Mahony, R. & Sepulchre, R. Optimization Algorithms on Matrix Manifolds (Princeton University Press, 2009).

Moler, C. & Van Loan, C. Nineteen dubious ways to compute the exponential of a matrix, twenty-five years later. SIAM Rev. 45(1), 3–49 (2003).

Serre, D. Matrices: Theory and Applications (Springer, 2002).

Albera, L. et al. ICA-based EEG denoising: A comparative analysis of fifteen methods. Bull. Polish Acad. Sci. Techn. Sci. 60(3), 407–418 (2012).

Higham, N. J. The scaling and squaring method for the matrix exponential revisited. SIAM J. Matrix Anal. Appl. 26(4), 1179–1193 (2005).

Del Buono, N., Lopez, L. & Peluso, R. Computation of the exponential of large sparse skew-symmetric matrices. SIAM J. Sci. Comput. 27(1), 278–293 (2005).

Del Buono, N., & Lopez, L., A survey on methods for computing matrix exponentials in numerical schemes for ODEs. InInternational Conference on Computational Science. pp. 111–120 (2003).

Golub, G. H. & Van Loan, C. F. Matrix Computations (JHU Press, 2013).

Mika, D. Fast gradient algorithm with toral decomposition for complex ICA. Mech. Syst. Signal Process. 178, 109266 (2022).

Gesbert, D., et al. MIMO wireless channels: Capacity and performance prediction. In Globecom'00-IEEE. Global Telecommunications Conference. Conference Record (Cat. No. 00CH37137). IEEE, pp. 1083–1088 (2000).

Tse, D. & Viswanath, P. Fundamentals of Wireless Communication (Cambridge University Press, 2005).

Wright, J. N. S. J. Numerical Optimization (Springer, 1999).

Koldovsky, Z., & Tichavsky, P. Methods of fair comparison of performance of linear ICA techniques in presence of additive noise. In 2006 IEEE international conference on acoustics speech and signal processing proceedings. IEEE. pp. V-V (2006).

Walter, R. Real and Complex Analysis. (1987).

Ollila, E., Koivunen, V., & Poor, H. V. Complex-valued signal processing—essential models, tools and statistics. In 2011 Information Theory and Applications Workshop (pp. 1–10). IEEE (2011).

Wirtinger, W. Zur formalen theorie der funktionen von mehr komplexen veränderlichen. Mathematische Annalen 97(1), 357–375 (1927).

Author information

Authors and Affiliations

Contributions

D.M. is the author of the full text of the manuscript and all related elements.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mika, D. Fast gradient algorithm for complex ICA and its application to the MIMO systems. Sci Rep 13, 11633 (2023). https://doi.org/10.1038/s41598-023-36628-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-36628-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.