Abstract

This paper describes an image processing-based technique used to measure the volume of residual water in the drinking water bottle for the laboratory mouse. This technique uses a camera to capture the bottle's image and then processes the image to calculate the volume of water in the bottle. Firstly, the Grabcut method separates the foreground and background to avoid the influence of background on image feature extraction. Then Canny operator was used to detect the edge of the water bottle and the edge of the liquid surface. The cumulative probability Hough detection identified the water bottle edge line segment and the liquid surface line segment from the edge image. Finally, the spatial coordinate system is constructed, and the length of each line segment on the water bottle is calculated by using plane analytical geometry. Then the volume of water is calculated. By comparing image processing time, the pixel number of liquid level, and other indexes, the optimal illuminance and water bottle color were obtained. The experimental results show that the average deviation rate of this method is less than 5%, which significantly improves the accuracy and efficiency of measurement compared with traditional manual measurement.

Similar content being viewed by others

Introduction

With the development of medicine and biology, medical animal experiments have become essential to clinical medicine1,2,3. The demand for laboratory animal samples is also increasing. Therefore, more and more medical institutions and animal breeding institutions choose to raise experimental animals on a large scale. In breeding experimental animals, the most important thing is to meet the needs of animals for water and food. Experimental animals are usually kept in special cages, where they drink water from water bottles placed in the cages. When the remaining water in the water bottle in the feeding cage is insufficient, it is necessary to supplement it on time. Accurate and timely detection of residual water in water bottles is a prerequisite for ensuring the drinking water needs of experimental animals.

Designing measurement schemes through various sensors is the most commonly used method for measuring the volume of water in containers. However, the narrow cage space and the frequent activity of animals in the cage will limit the installation of sensors, making it difficult to measure the remaining amount of water and food by installing sensors. In the existing feeding mode, the method of visually observing the water bottle by the breeder is usually selected to estimate the water amount roughly. Artificial methods require a lot of energy and time for breeders, but frequent personnel entry and exit may also damage the sterile growth environment necessary for experimental animals. In addition, artificial methods also limit the improvement of automation in the feeding process. Therefore, proposing a non-contact detection method for water residue in water bottles is significant.

Scholars in various fields have researched automated measurement methods for container liquid volume and proposed many solutions. Singh et al.4 proposed a liquid volume measurement method based on optical fiber sensors. Since the deformation degree of the fiber ring in the sensor is proportional to the liquid volume in the container, the liquid volume in the container can be measured by detecting the data fed back by the sensor. O. Alonso Hernandez et al.5 proposed a measurement system for residual oil volume in a fuel tank based on an infrared transmitter and receiver. This method measures the distance between the liquid level in the container and the receiver based on the intensity of the infrared signal received by the receiver by installing a float in the liquid container and then calculating the volume of the liquid. Zhang Jingyue et al.6 proposed a liquid level measurement method based on laser and machine vision technology to measure liquid volume in glass gauges. By analyzing the refraction phenomenon of laser light in the liquid to be measured, the corresponding liquid volume value can be obtained by calculating the liquid level height. Parisa Esmaili et al.7 proposed a detection scheme for container liquid levels based on pressure sensors. This scheme collects the pressure generated by the liquid in the container through sensors installed inside the container and then calculates the volume of the liquid. Kazuyuki Kobayashi et al.8 proposed a Doppler module-based method for container liquid level detection. This method judges the position of the liquid level line based on the different reflection characteristics of microwave waves on various materials. It can then obtain the volume of water in the container. Z. Zakaria et al.9 proposed a detection method based on ultrasonic sensors, which judges whether there is liquid at the detection location based on the differences in the propagation of ultrasonic waves in different media, and then infers the position of the liquid level line. Shaheen Ahmad et al.10 proposed a liquid level detection method based on capacitive sensors, which can reflect the changes in liquid level in the form of electrical capacity changes, thereby realizing the detection of liquid level position. Analyze the principles of the above methods and summarize the advantages and disadvantages of each method in Table 1.

After thoroughly studying the feeding environment and living habits of medical animals, it is concluded that the following characteristics are required for the measurement method of liquid volume in bottles used in this scenario: 1. The measurement method needs to be timely in order to determine whether the water in the bottle needs to be replenished on time; The measurement method should avoid affecting the expected growth of medical animals; Due to the small space of the feeding cage, the measurement method should have a relatively simple hardware structure; 4. The measurement method should have a good economy while ensuring accuracy.Summarizing the principles, advantages, and disadvantages of the above liquid volume measurement algorithms, the current mainstream detection schemes are mainly based on various sensors with relatively complex structures and high costs. They are not suitable for application in medical animal feeding scenarios. In addition, the installation location of the sensor too close to the animal being raised can also pose a potential hazard.

Machine vision is a technology involving sensors that simulate the human eyes collecting and processing images. By detecting the target image's position, shape, texture, and other characteristics, parameters such as object size can be quantified. Therefore, machine vision technology can replace manual measurement of residual water in water bottles11,12,13,14,15,16,17. Based on the specific needs of medical animal feeding scenarios, this paper proposes a method for detecting the remaining water volume in water bottles based on machine vision. In our research, edge detection algorithms, image segmentation algorithms, and line detection algorithms are used to process the captured water bottle image to obtain key feature parameters and calculate the volume of water in the water bottle. The experiment used the most common mice-feeding cage in medical animal feeding environments as an example. This method can achieve real-time non-contact detection of the volume of water in the bottle, avoiding adverse effects on the growth of medical animals. This detection method only requires light sources, cameras, and computers, without adding additional sensors. It has a simple structure and is easy to install, ensuring high measurement accuracy and good economy.

Materials and methods

Experimental setup and data collection

Experiments were conducted in December 2019 at Shandong University of Science and Technology, Qingdao, Shandong Province, China. The bottles were purchased from the breeding grounds of laboratory mice. The experiment was carried out at room temperature of 25℃. During the experiment, in order to reduce the error generated during the transfer of water from the measuring cylinder to the water bottle, the mass of water in each water bottle is determined by weighing with a calibrated electronic scale, and the volume in the bottle is calculated using the previously measured density. The image processing algorithms involved in this paper are all based on opencv 4.4.0.

Figure 1 shows the image acquisition system composed of a CMOS camera, light source, support frame, and computer. The resolution of the camera is 1920 pixels × 1080 pixels. The camera captured the water bottle at a horizontal angle of 45° from a high angle of 45°. The light source was in line with the camera and the water bottle to illuminate and enhance the contrast between the liquid level and the background. Sixty-eight water groups of different quality were weighed and bottled separately and placed in a laboratory mice incubator for images collection. The resolutions of the acquired images were adjusted to be 1344 pixels × 756 pixels to meet the actual detection speed.

Image acquisition system

The imaging system consisted of an HD camera (SY003-V01, WeiXinShiJie, Wuhan, China) mounted on a metal stand at 0.3 m(within the field of view) above the box shown in Fig. 1. The HD camera was connected via a USB port to a computer installed with an Intel i5- 7200U CPU, 2.5 GHz, 8 GB physical memory, Microsoft Windows 10 operation system, and Software Development Kit (SDK). Images were acquired from the HD camera using Visual Studio 2017 software with the image acquisition Toolkit. The images involved in this paper are all RGB images. The images were transferred to a 1 TB hard drive for subsequent analysis. Images are captured from each water bottle and shown in Figs. 2–5.

There are two mainstream laboratory mice drinking bottles on the market, as shown in Fig. 3 and 4. Figure 3 is white translucent bottles, and Fig. 4 is brown transparent bottles. Two different color water bottles were made for the experiment more rigorous to determine the influence of varying color bottles on the measurement speed and light source on the judgment of liquid level position of different color bottles. Figure 5 is green translucent bottles, and Fig. 6 is purple.

Foreground extraction

The Grabcut image segmentation algorithm was mainly used for the water bottle foreground extraction. The Grabcut algorithm is an improved algorithm based on the Graphcut algorithm.18,19,20,21,22,23,24,25 Through the Grabcut algorithm, the water bottle can be separated from the background to obtain a foreground target and processed.

In the foreground extraction process, the foreground area of the water bottle image is first annotated with a rectangular box. Due to the fixed shooting position of the image, the position of the water bottle as the foreground in the image is also fixed, so the position of the rectangular box is a predetermined unified value.

Create a K-dimensional full-covariance Gaussian Mixture Model (GMM) to process the pixels of the foreground and the background of the water bottle, These models will be used to determine the foreground or background probability of each pixel in the image. Using classification information for iterative segmentation, in each iteration, the algorithm updates the energy function using the classification results from the GMM, and performs segmentation by maximizing the energy function. The energy function involved in iterative segmentation is as follow:

In the formula, E is the Grabcut energy function, and the smaller its value, the better the image segmentation effect; U is the region term, indicating the probability of assigning pixels to the foreground or background; V is the smoothing term, indicating the similarity between adjacent pixels; α is opacity, α \(\in\) [0,1], 0 is the image's background, and 1 is in the foreground of the image; k represents the k-th Gaussian component in the GMM model; x is the input image. θ is a parameter in GMM, which is a set of mean vectors, covariance matrices, and weights, θ can be defined as:

where π (α, k) is the combined weight of each Gaussian component; μ (α, k) is the mean of each Gaussian model; \(\sum \left(\alpha ,k\right)\) is the covariance matrix. The parametric model performs an iterative operation. After each iteration, the current segmentation result is converted into a new image and used as the initial state of the next iteration until the iterative process converges and reaches an optimal state. After the iteration is completed, set the pixel value of the background part of the image to 0 and output the extraction result of the water bottle in the image. Take the white water bottle as an example, Fig. 6a is the original image, and Fig. 6b is the segmented image.

Edge detection

The surface of the water bottle has irregular textures, which will affect the detection effect of the algorithm on the edge line and liquid level line of the water bottle. Use Canny edge detection operator to eliminate the influence of bottle texture and improve features such as level and edge26,27,28,29,30.a. Gaussian filtering

The noise of the image affects the processing of the image, so the noise is preferentially filtered to prevent false detections. The image is convolved using a Gaussian filter to smooth the image, reducing the apparent noise effects on the edges.b. Calculate the gradient amplitude and direction

The edges can point in different directions. Use the edge difference operator to calculate the horizontal difference \({G}_{x}\) and the vertical difference \({G}_{y}\) as:

where \(M\left(i,j\right)\) is the gray value of the pixel of the gray image.

The modulus \(G\left(i,j\right)\) of the gradient is:

The gradient direction \(\theta \left(i,j\right)\) is:

c. Non-maximum suppression:

The gradient intensity of the current point is compared to that of the positive and negative gradient direction points. Retain the gradient strength of the current point if it is significant enough. Otherwise, the suppression is set to 0. The effect of non-maximum suppression is to refine the edges of the original blur.d. Double threshold:

The Canny algorithm detects the gradient value of boundary points and applies high and low thresholds to distinguish edge pixels. Set a high threshold H with a low threshold L. If \(G(i,j)\) > H, it means that \(G(i,j)\) is regarded as a strong edge point; if H ≤ \(G(i,j)\) ≤ L, it means that \(G(i,j)\) is regarded as a weak edge point; If \(G(i,j)\)< L, it means that the point \(G(i,j)\) is suppressed. After edge detection, strong edge points are determined to be the edge part of the image, while weak edge points are determined to be the edge part of the image based on whether they are adjacent to the strong edge. Because the position of the liquid level in the image belongs to the weak edge, to ensure that the liquid level position can be recognized in the later image processing, this paper reduces the threshold in the aspect of edge detection to ensure the accuracy of the liquid level position. In this paper, the high threshold is set to 120, the low threshold is set to 80, and the core size is set to 3 × 3. Both vertical and horizontal standard deviations are 0.8.Fig. 7a is the segmented image, and Fig. 7b is the image obtained by the Canny operator.

Because the position of the liquid level in the image belongs to the weak edge, to ensure that the liquid level position can be recognized in the later image processing, this paper reduces the threshold in the aspect of edge detection to ensure the accuracy of the liquid level position. Figure 7a is the segmented image, and Fig. 7b is the image obtained by the Canny operator.

Line detection

It is necessary to obtain parameters such as the edge of the water bottle, the edge of the liquid surface, and the inclination angle of the water bottle to calculate the volume of water in the water bottle. In this paper, the cumulative probability Hough transform31,32,33,34 is used to obtain key line segment's expression and the line segment's endpoint. Mark the pixel points in the extracted edge image, generate a set of lines passing through the point at different angles, and represent the set in polar coordinates. The mathematical expression is as follows:

In the equation, \(\left(x,\mathrm{y}\right)\) is the coordinate of a point on the line, \(r\) is the shortest distance from the origin to the line where the point \(\left(x,\mathrm{y}\right)\) is located; θ is the angle between the line equation of r and the x-axis.

The minimum voting threshold in this paper is set to 100. When the intersection points between curves exceed the minimum voting threshold, it is determined that the points forming these curves belong to the same line in the Cartesian coordinate system, and all lines in the image can be extracted by traversing all curves in the polar coordinate system. By filtering the extracted straight lines through a fixed slope range, the liquid level line and key contour line of the water bottle can be obtained. In order to more intuitively understand the effect of the algorithm, the detected straight line is marked on the water bottle. As shown in Fig. 8, according to needs, this paper selects straight lines representing the water bottle container's length, width, height, and liquid level respectively for calculation. The straight-line extracted by this method is relatively complete and has no fracture, which can meet the requirements of the following analysis.

Balance calculation

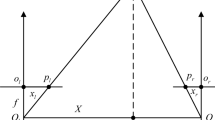

In the actual situation, the relative position of the water bottle and the camera remains the same, so projection and perspective are used to obtain the data of the water bottle. Use the projection length \({L}_{x}\) of each straight line of the water bottle body and the proportional relations \(\alpha\) of the perspective to calculate the volume of liquid. Because different sides of the water bottle have different positions, the projections’ length and perspective ratio are also different. In this research, take one of the edges as an example. The schematic is shown in Fig. 9. o-\({x}_{w}\)-\({y}_{w}\)-\({z}_{w}\) and o-\({x}_{c}\)-\({y}_{c}\)-\({z}_{c}\) are the world coordinate and camera coordinate systems, respectively. \(FC\) is a sideline of the water bottle, \({F}^{\mathrm{^{\prime}}\mathrm{^{\prime}}}{C}^{\mathrm{^{\prime}}\mathrm{^{\prime}}}\) is the projection of \(FC\) in the camera coordinate system o-\({x}_{c}\)-\({y}_{c}\)-\({z}_{c}\), and \(f\) is the camera focal length.

According to the projection principle, the projection of the line segment \(FC\) is \({{F}^{\mathrm{^{\prime}}}C}^{\mathrm{^{\prime}}}\), as shown in Fig. 10. The ratio \({\alpha }_{FC}\) of the length of line segment \(FC\) to line segment \({{F}^{\mathrm{^{\prime}}}C}^{\mathrm{^{\prime}}}\) is

As shown in Fig. 11, The proportional relationship between the projection plane and the imaging plane is obtained by the perspective principle

The setting of the world coordinate system is shown in Fig. 12. The measurement algorithm proposed in this paper can calculate the volume of liquid in the bottle based on the edge line and the position of the liquid level line of the water bottle in the image. On the premise of ensuring the integrity of the liquid level line on the bottle in the captured image, the camera position and shooting angle will not change the principle and results of the detection algorithm. In this paper, the camera coordinates are \(\left({x}_{s},0,{z}_{s}\right)\), The shooting angle is 45 degrees in the horizontal direction and 45 degrees in the vertical direction. In this case, the expression of the plane S where the camera is located is as follows:

Then the normal vector of the plane \(S\) is \(\overrightarrow{n}=\left(1,-\mathrm{1,1}\right)\). The coordinates of each point on the image and the expression of each edge in the bottle body can be obtained by the advance measurement.

Take one of the edges \(FC\) as an example, and set the vector \(\overrightarrow{FC}=\left({x}_{C}-{x}_{F},{{y}_{C}-y}_{F},{{z}_{C}-z}_{F}\right)\), the cosine of the vector \(\overrightarrow{FC}\) and the normal vector \(\overrightarrow{n}\) is:

Then the projection of the vector \(\overrightarrow{FC}\) on the plane is:

where \({L}_{FC}\) is the measured length of the known line segment.

Therefore, the ratio \(\alpha\) between the actual length of the line segment and the projected length is:

The ratio of the projection length of each line segment to the length of the image \(\beta\) is:

where \({L}_{{F}^{\mathrm{^{\prime}}\mathrm{^{\prime}}}{C}^{\mathrm{^{\prime}}\mathrm{^{\prime}}}}\) is the length of the line segment on the graph.

Then the formula for changing the length of the line segment on the image to the length of the corresponding actual line segment is:

where \(x\) is the corresponding line segment.

To facilitate the calculation, extract and complete the edge lines, as shown in Fig. 13. Since the water bottle is inserted obliquely on the laboratory mice incubator, and the bottleneck is irregular, the total volume of the water bottle minus the volume of the air can be used to obtain the volume of water in the water bottle. Since the existing inclination angle is known as \(\gamma\), the length of each line on the image is:

The air volume calculation formula is different for water bottles containing different amounts of water. By detecting the position of the liquid level line \(MN\), select the appropriate calculation formula. As shown in Fig. 14, when there is a large amount of water in the bottle, the formula for calculating the volume of air in the bottle can be obtained.

As shown in Fig. 15, when there is only a small amount of water in the bottle, the formula for calculating the air volume in the bottle can be obtained.

Then the remaining volume of water \({V}_{w}\) is:

In the formula: \(V\) is the fixed capacity of a bottle.

Results and discussion

Influence of light conditions and water bottle color

In the experiment, both the light intensity and the color of the bottle will affect the extraction of the liquid level line. Therefore, in this paper, four water bottles of different colors are selected and backlit by the auxiliary light source. The influence of water bottle color and light intensity on the algorithm is tested by adjusting the illuminance. Such as Figs. 16–19

The liquid level lines of four different color bottles were extracted under 10 illumination conditions. It is evident from the image that the liquid level position is not easy to recognize in the case of natural light. After the light source is involved, the liquid level position in the image becomes apparent due to color difference.However, with the increase of light source brightness, the liquid level position becomes blurred.

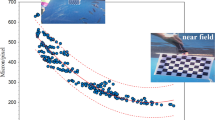

This paper takes the number of liquid level pixels detected by the Hough line as the quantitative index to quantify the detection effect. The larger the number of pixels, the better the detection effect is. Take 150 ml water and put it into a water bottle to test the number of pixels at the liquid level under four different illuminances. After the experiment, ten groups of data were obtained, as shown in Fig. 20.

When the color of the detected water bottle is different from the surrounding illuminances, the detection speed will change. Therefore, the detection speed in different cases is summarized. In Table 2, time is measured in milliseconds (ms).

It can be seen from Table 2, the Bottles of different colors have a specific impact on the detection speed . And the illuminance does not affect the detection speed.

According to Fig. 20, there is a specific relationship between illuminance and detection accuracy. Under the condition of specific illumination assistance, the accuracy of the detected liquid level position is relatively clear. However, with the increase of brightness, the detection accuracy decreases gradually. Because the size of the brown bottle is different from that of the other three-color bottles, the length of the liquid level will change under the same volume of water, which will lead to a great change in the number of pixels on the liquid level. The experimental results show that the effect of the liquid level line extraction algorithm is affected by the color of the bottle and the illumination intensity. The algorithm performs best under the medium illumination condition. In different applications, the effect of the liquid level line extraction algorithm can be enhanced by changing the color of the water bottle.

Algorithm performance evaluation

In order to objectively evaluate the performance of the algorithm, the volume of 276 samples was measured by this algorithm, and were compared with the actual measurements with digital weighing balance, respectively.

The experiment involved four different colors of water bottles: white, brown, green, and purple. Based on the different colors of the water bottles, the experiment was divided into four groups, each consisting of 24 samples (a large amount of water), 24 samples (a medium amount of water), and 21 samples (a small amount of water). The experimental results are shown in Table 3.

The experimental data was evaluated using two evaluation indicators. The coefficient of determination (R2) and Mean Absolute Percentage Error (MAPE) are used to determine how close the measured value is to the actual value. The value of R2 indicates closeness between the estimated and the measured volumes where R2 = 1 indicates a perfect correlation and R2 value close to zero indicates poor correlation. The value of MAPE represents the error between the Estimated volume and the actual volume. The lower the value, the closer the Estimated volume is to the actual volume.

The experimental results show that the algorithm has the best measurement accuracy for the volume of water in white water bottles, while the accuracy for the volume of water in brown water bottles is the worst. This is because the color of brown water bottles has the most significant interference on the linear detection effect, which affects the accuracy of subsequent algorithms. The following text takes the white water bottle with the best algorithm measurement effect as an example to analyze the impact of water volume on detection accuracy.

It can be seen from Figs. 21, 22, 23 and Table 3 that R2 = 0.9872 and MAPE = 1.24% in case of large water volume and R2 = 0.9932 and MAPE = 4.09% in case of medium water volume. The measured value is very close to the actual value, and the algorithm performs well. When the water volume is low, R2 = 0.9996, MAPE = 12.73%. Under these circumstances, the difference between the measured and actual values is significant. The accuracy of prediction results under low water volume is improved by establishing a linear regression model. Forty-eight samples were used for training purposes, and the regression equation with measured volume as the dependent variable and estimated volume as the independent variable was obtained.

The developed model is used to test the data of 10 samples under low water volume, and the results are shown in Fig. 24, Fig. 25, and Table 4. R2 = 0.9996 and MAPE = 0.53% in case of small water volume. The model has a good prediction effect under low water conditions.

The experimental results show that the average measurement accuracy of the algorithm is above 95% under the condition of high and medium water volume. Moreover, the difference between measured volume and actual volume is relatively significant under the condition of low water volume. The reason may be that when the amount of water is small, the shape of the liquid level line at the bottle mouth is irregular. The volume of liquid at the mouth of the bottle cannot be measured, which has a particular impact on the accuracy of straight-line detection. After using linear regression model correction, the algorithm performs well in low water conditions. On the whole, the measurement accuracy of the algorithm can meet the requirements of practical use.

Limitation

There may be some possible limitations in this study. Although this paper determined the optimal lighting intensity in the experiment, there was no specific study on the possible impact of lighting intensity on the accuracy of the algorithm. In addition, different shapes of water bottles can also affect the actual measurement performance of the algorithm. Further research can be conducted on the impact of different lighting intensities and water bottle shapes on the algorithm.

Conclusion

This paper describes an image processing-based technique used to measure the volume of residual water in the drinking water bottle for the laboratory mouse. First, the Grabcut algorithm was used to separate the foreground from the background, and the image of the drinking bottle was extracted. The Canny edge and Hough probability line detection were then used to detect the water bottle edge and liquid level positions. Based on the projection principle, the actual length of the upper line segment of the picture is calculated, and then the volume of water is calculated. By comparing the light sources at different positions, the illuminance and the bottle with different colors, and by detecting the speed and the number of pixels of liquid level, the optimal position, the optimal illuminance, and the bottle with the best color were obtained. Finally, a linear regression model corrects the volume measurement results under low water volume to ensure that the algorithm has excellent performance under different water volumes.

It can be seen from the experimental results that according to the comparison between the actual value and the measured value, the average deviation rate is less than 5%. It means that the algorithm proposed in this paper has high accuracy in estimating the residual water volume in a square water bottle. Compared with traditional manual measurement, the method proposed in this paper has the advantage of less work and improved feeding efficiency. It can accurately estimate the amount of water in the bottle with high accuracy, improve breeding efficiency, and can meet the detection needs of large-scale breeding of laboratory mice.

Data availability

The data used to support the findings of this study are included within the paper.

References

Uchikoshi, A. & Kasai, N. Survey report on public awareness concerning the use of animals in scientific research in Japan. J. Exp. Anim. 68(3), 307–318 (2019).

Shapiro, K. Human-animal studies: Remembering the past, celebrating the present, troubling the future. J. Soc. Anim. 28(7), 797–833 (2020).

Bernard, M., Jubeli, E., Pungente, M. D. & Yagoubi, N. Biocompatibility of polymer-based biomaterials and medical devices–regulations, in vitro screening and risk-management. J. Biomater. Sci. 6(8), 2025–2053 (2018).

Singh, H. K., Basumatary, T., Chetia, D. & Bezboruah, T. Fiber Optic sensor for liquid volume measurement. J. IEEE Sens. J. 14(4), 935–936 (2013).

Alonso-Hernández, O. et al. Fuzzy infrared sensor for liquid level measurement: A multi-model approach. J. Flow Meas. Instrum.. 72, 101696 (2020).

Jingyue, Z. et al. Volume metrology method for micro liquid based on laser and machine vision. J. Acta Metrol. Sin. 39, 504–509 (2018).

Esmaili, P., Cavedo, F. & Norgia, M. Characterization of pressure sensor for liquidlevel measurement in sloshing condition. J. Trans. Instrum. Meas. 69(7), 4379–4386 (2019).

Kobayashi, K., Watanabe, K., Yoshida, T., Hokari, M. & Tada, M. Level detection of materials in nonmetallic tank by the DC component of a microwave Doppler module. J. IEEE Sens. J. 19(4), 1554–1562 (2018).

Zakaria, Z., Idroas, M., Samsuri, A. & Adam, A. A. Ultrasonic instrumentation system for liquefied petroleum gas level monitoring. J. Nat. Gas Sci. Eng. 45, 428–435 (2017).

Ahmad, S., Khosravi, R., Iyer, A. K. & Mirzavand, R. Wireless capacitive liquid-level detection sensor based on zero-power RFID-sensing architecture. J. Sens. 23(1), 209 (2022).

Wenting, L., Zhongyi, L., Lin, L., Hong, G. & Guo, J. Automated lane marking identification based on improved canny edge detection algorithm. J. Southwest Jiaotong Univ. 53, 1253–1260 (2018).

Keqiang, R. & Jingran, Z. Extraction of plant leaf vein edges based on fuzzy enhancement and improved Canny. J. Optoelectron. Laser 29, 1251–1258 (2018).

Yan, D., Chenke, W., Hua, L. & Bijiao, W. Line detection optimization algorithm based on improved probabilistic hough transform. J. Acta Optica Sinica. 38, 170–178 (2018).

Siyi, G., Panfeng, H., Zhenyu, L. & Jia, C. A satellite solar panel support detection algorithm based on region growing Hough transform. J. Northwest. Polytech. Univ. 32, 220–226 (2014).

Liu, Y., Noguchi, N. & Liang, L. Development of a positioning system using UAV-based computer vision for an airboat navigation in paddy field. J. Comput. Electron. Agric. 162, 126–133 (2019).

Bietresato, M., Carabin, G., Vidoni, R., Gasparetto, A. & Mazzetto, F. Evaluation of a LiDAR-based 3D-stereoscopic vision system for crop-monitoring applications. J. Comput. Electron. Agric. 124, 1–13 (2016).

Gao, Y. et al. A contactless measuring speed system of belt conveyor based on machine vision and machine learning. J. Meas. 139, 127–133 (2019).

Ren, D., Jia, Z., Yang, J. & Kasabov, N. K. A practical Grabcut color image segmentation based on Bayes classification and simple linear iterative clustering. IEEE Access. 5, 18480–18487 (2017).

He, K., Wang, D., Tong, M. & Zhu, Z. An improved GrabCut on multiscale features. J. Pattern Recognit. 103, 107292 (2020).

Cheng, M. M., Mitra, N. J., Huang, X., Torr, P. H. & Hu, S. M. Global contrast based salient region detection. J. Trans. Pattern Anal. Mach. Intell. 37(3), 569–582 (2015).

Yu, S. et al. Efficient segmentation of a breast in B-mode ultrasound tomography using three-dimensional GrabCut (GC3D). J. Sens. 17(8), 1827 (2017).

Hernandez-Vela, A., Reyes, M., Ponce, V. & Escalera, S. GrabCut-based human segmentation in video sequences. Sensors 12(11), 15376–15393 (2012).

Nguyen, T. T. N. & Liu, C. C. A new approach using AHP to generate landslide susceptibility maps in the Chen-Yu-Lan watershed Taiwan. J. Sens. 19(3), 505 (2019).

Snyder, K. A. et al. Extracting plant phenology metrics in a great basin watershed: Methods and considerations for quantifying phenophases in a cold desert. J. Sens. 16(11), 1948 (2016).

Dieu Tien, B. et al. A novel ensemble artificial intelligence approach for gully erosion mapping in a semi-arid watershed (Iran). J. Sens. 19, 2444 (2019).

Ren, H., Zhao, S. & Gruska, J. Edge detection based on single-pixel imaging. J. Optics express. 26(5), 5501–5511 (2018).

Yuan, W., Zhang, W., Lai, Z. & Zhang, J. Extraction of Yardang characteristics using object-based image analysis and canny edge detection methods. J. Remote Sens. 12(4), 726 (2020).

Zhou, R. G. & Liu, D. Q. Quantum image edge extraction based on improved Sobel operator. J. Int. J. Theor. Phys. 58, 2969–2985 (2019).

Wang, W., Wang, L., Ge, X., Li, J. & Yin, B. Pedestrian detection based on two-stream UDN. J Appl. Sci. Basel 10(5), 1866 (2020).

Bharathiraja, S. & Kanna, R. B. Anti-forensics contrast enhancement detection (AFCED) technique in images based on Laplace derivative histogram. J. Mobile Netw. Appl. 24(4), 1174–1180 (2019).

Xin, Y., Wong, H. C., Lo, S. L. & Li, J. Progressive full data convolutional neural networks for line extraction from anime-style illustrations. J. Appl. Sci. Basel 10(1), 41 (2020).

Wang, J., Peilun, Fu. & Gao, R. X. Machine vision intelligence for product defect inspection based on deep learning and Hough transform. J. J. Manuf. Syst. 51, 52–60 (2019).

Lin, G., Tang, Y., Zou, X., Cheng, J. & Xiong, J. Fruit detection in natural environment using partial shape matching and probabilistic Hough transform. J. Precis. Agric. 21, 160–177 (2020).

Park, H. C., Lee, S. W. & Jeong, H. Image-based gimbal control in a drone for centering photovoltaic modules in a thermal image dagger. J. Appl. Sci. Basel 10(13), 4646 (2020).

Acknowledgements

This research was funded by the Key R & D plan of Shandong Province, China [grant number 2019GGX104048] and the projects of the Shandong Provincial Key Research and Development Project [grant number 2019SDZY01].

Author information

Authors and Affiliations

Contributions

Conceptualization: all authors; methodology: Z.H.L., X.Y., F.Y.L. and Q.L.Z.; Sofware:X.Y., F.Y.L.; Validation: Z.H.L. and Y.Y.; Formal analysis and investigation: Z.H.L., X.Y. and Y.Y.; Resources: Z.H.L. and Q.L.Z.;Data curation: X.Y. and F.Y.L.; Writing—original draf preparation: Z.H.L. and F.Y.L; Writing—review and editing: Z.H.L., Q.L.Z., F.Y.L. and Y.Y.; Project administration: Z.H.L.; Funding acquisition: Y.Y.; Supervision:Q.L.Z. and Y.Y. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, Z., Liu, F., Zeng, Q. et al. Estimation of drinking water volume of laboratory animals based on image processing. Sci Rep 13, 8602 (2023). https://doi.org/10.1038/s41598-023-34460-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-34460-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.