Abstract

The negative, mood-congruent cognitive bias described in depression, as well as excessive rumination, has been found to interfere with emotional processing. This study focuses on the assessment of facial recognition of emotions in patients with depression through a new set of dynamic virtual faces (DVFs). The sample consisted of 54 stable patients compared to 54 healthy controls. The experiment consisted of an emotion recognition task using non-immersive virtual reality (VR) with DVFs of six basic emotions and neutral expression. Patients with depression showed worse performance in facial affect recognition than healthy controls. Age of onset was negatively correlated with emotion recognition, and no correlation was observed for duration of illness or number of lifetime hospitalizations. In the depression group, there was no correlation between emotion recognition and degree of psychopathology, excessive rumination, degree of functioning, or quality of life. Hence, it is important to improve and validate VR tools for emotion recognition to achieve greater methodological homogeneity across studies and to be able to establish more conclusive results.

Introduction

Depressive disorders are one of the most prevalent causes of disability globally1, impacting over 300 million individuals with a prevalence in the general population of around 20% at least once in a lifetime2. The Diagnostic and Statistical Manual of Mental Disorders (DSM-5) defines depressive disorders as a diagnostic category characterized by common features such as affective, cognitive, and neurovegetative changes, with the primary symptoms being a sad, empty, or irritable mood and somatic and cognitive alterations that significantly affect an individual’s functioning3. Beck’s cognitive theory4, which emphasizes the role of an individual’s thoughts, interpretations, and attitudes in the pathogenesis of depression, is the most commonly accepted explanatory model of depression. This theory posits that the cognitive triad of depression, which includes a negative view of the self, the future, and the world, underlies the disorder5.

Social cognition, which encompasses the mental operations involved in identifying, perceiving, and interpreting social information and facilitating interpersonal interaction, is composed of four domains: emotional processing, theory of mind, social perception, and attributional style6. It is precisely the negative, mood-congruent, and therefore cognitively biased thinking found in depression that appears to interfere with social cognition7, particularly with attributional style but also emotional processing and theory of mind8,9,10,11. These deficits in social cognition can lead to isolation, which is a risk factor for the onset and maintenance of the illness12, as well as its long-term severity, since it clearly interferes with social support (perceived and real), which, if low, can become a high risk factor for developing a first major depressive episode and its subsequent recurrence13,14.

This article focuses on emotional processing, which is defined as the ability to identify, facilitate, regulate, understand, and manage emotions15. Emotional processing is divided into three subdomains: emotional understanding and emotional management, which are classified as higher perceptual level processes, and emotional facial recognition, which is considered a lower level process. Emotional facial recognition, or the ability to identify and categorize emotional states through facial expressions and non-facial signals such as the voice16, is considered the initial and most basic step in the whole process involving social cognition. In depression, there is a negative and mood-congruent cognitive bias that manifests as a preference for negative emotional information in memory encoding and recall tasks, slower reaction times when naming negative emotional words in the emotional Stroop task or responding to happy targets in the emotional Go/No-Go task, and a tendency to perceive and categorize ambiguous facial expressions as “negative emotions” in tests such as the Facial Emotions Recognition Test (FERT).

Additionally, certain cognitive styles, such as excessive rumination, have been associated with depression17. Excessive rumination is characterized as a type of maladaptive cognitive processing with depressive self-focus, that is, a tendency toward repetitive thinking about one’s own depressive symptoms and interpersonal problems, and their possible causes, meanings, and implications18. This has been linked to deficits in social cognition19, and even in healthy people it has been related to a negative cognitive bias and negative evaluation of faces20. Research on social cognition in depression has primarily focused on facial emotion recognition, finding an inverse association with the level of psychopathological severity of depression. This association has been found in both the decompensation and euthymia phases21,22. Traditionally, a deficit in the ability to recognize facial emotions has been commonly observed in individuals with depression. This deficit is typically characterized by a preserved ability to correctly identify sadness, but difficulty in identifying neutral and ambiguous expressions, which are often perceived as sadder or less happy. These observations are frequently attributed to the negative cognitive bias present in depression, and have been observed in both behavioral data23 and neural activity during emotion recognition tasks24.

The increased interest in social cognition, particularly facial emotion recognition, has prompted researchers to develop various evaluation tools and methods of classification. These include three main approaches: (1) non-behavior-dependent methodologies such as MRI and electroencephalography, (2) behavioral instruments, and (3) instruments or methods used to assess facial recognition impairment in health conditions25. Behavioral instruments have been the most widely used and developed. Initially, most behavioral tools were created in a natural format, using images or photographs. Later, videos were also used to present more realistic and genuine facial expressions, in an attempt to increase the validity of static stimuli26. However, while some videos have become standardized measures, most have not been validated and present various limitations in terms of format27. In recent years, behavioral instruments have focused on virtual reality (VR), which allows for the creation of controlled and practically real environments and situations28,29,30,31. This has led to the development of new computerized interventions and assessment tools for mental disorders with increased accessibility and ecological validity, making VR an attractive tool for clinical practice32,33,34.

Our multidisciplinary research team is working on developing a psychological intervention using VR to improve social cognition in individuals with major depressive disorder (MDD). However, for this work, it was decided to use a non-immersive desktop VR application due to concerns that patients would experience an overly uncomfortable moment and/or mild adverse events (e.g., headache, giddiness, and VR misuse behavior), as described in previous studies35,36. Although immersion is one of the most important aspects of VR technology, less immersive VR such as desktop VR has also received considerable attention37,38,39, especially in cases where higher levels of immersion are not recommended or possible. Currently, we are working on therapies with virtual humans that will involve the use of immersive VR equipment. However, this will be done in a step-wise manner. This means that patients will start by facing avatars on a computer screen, while increasing the level of immersion over time using head-mounted displays.

To achieve the aforementioned goal, several methodological steps have been taken. Firstly, the six basic emotions were represented by a new set of dynamic virtual faces (DVFs) designed using the action units of the FACS system26. Secondly, these faces were shown to 204 healthy individuals, and it was found that the DVFs were valid for accurately recreating facial expressions of human emotions40. In line with this, the current study focuses on examining facial emotion recognition scores through DVFs in 54 stable patients diagnosed with MDD, and comparing these scores to those of a group of healthy controls from the same demographic area. This is done in order to identify specific difficulties and develop more effective targeted interventions. The following hypotheses have been established:

- Hypothesis 1 (H1): Patients with MDD will demonstrate a lower rate of emotional recognition compared to healthy controls.
- Hypothesis 2 (H2): The MDD group will demonstrate a higher rate of recognition for sadness compared to the other emotions.
- Hypothesis 3 (H3): For the MDD group, no association will be observed between emotional recognition and age of onset, duration of illness, and number of hospitalizations throughout life.
- Hypothesis 4 (H4): For the MDD group, an association will be observed between emotional recognition and degree of psychopathology, excessive rumination, degree of functioning, and quality of life.

Results

Comparison of recognition scores between depression and healthy groups in emotion recognition (H1)

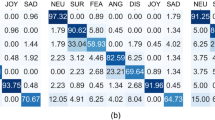

Regarding recognition scores, differences were found between the two groups. The depression group presented a lower rate of emotional recognition than the healthy group, as shown by the Mann–Whitney U test (\(U=\) 555.0, \(p<\) 0.001). The recognition scores for healthy controls and patients are summarized in Table 1.

The average score was 88.19% for healthy controls and 75.17% for the depression group. The greatest differences were in fear, with a score of 48.4% for the depression group and 77.3% for healthy controls; sadness, 63.0% and 83.4%; and disgust, 66.9% and 85.0%. In all cases, the score was higher for the control group. The differences in hits between the two groups cannot be attributed to a bias in the recognition of the 52 DVFs: no individual DVF produced a particularly high number of recognition errors, and no specific DVF showed obvious recognition differences between the two groups.
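As an illustration of the between-group comparison above, the following minimal sketch computes the Mann–Whitney U statistic from rank sums using only the Python standard library. The hit counts are hypothetical values chosen for the example, not the study's data, and the function names are assumptions introduced here; the authors' actual statistical software is not stated in this section. The sketch does not compute a p-value.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b); the reported U is conventionally the smaller one."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical hit counts out of 52 DVFs (NOT the study's data).
patients = [35, 38, 40, 41, 44]
controls = [43, 45, 46, 47, 49]
u_patients, u_controls = mann_whitney_u(patients, controls)
print(min(u_patients, u_controls))
```

A small U relative to its maximum (the product of the two group sizes) indicates that one group's scores tend to rank below the other's, which is the pattern reported for the depression group.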

Recognition scores for depressed and healthy groups in relation to age, gender and education level

The Kruskal–Wallis test revealed significant differences (\(\chi ^2_{(2)}\) = 15.487, \(p<\) 0.001) in hits among the three age groups of the depression group: young (\(n=\) 9), middle-aged (\(n=\) 27) and elderly (\(n=\) 18). The recognition score was lower for the elderly than for the young (\(p=\) 0.002) and the middle-aged (\(p=\) 0.004). Spearman’s rank correlation coefficient was also statistically significant (\(r= -\) 0.628, \(p<\) 0.001), with the negative correlation indicating that the older the participant, the fewer the hits. For the healthy group, with the same three categories, young (\(n=\) 10), middle-aged (\(n=\) 27) and elderly (\(n=\) 17), no significant differences were found (\(\chi ^2_{(2)}\) = 0.135, \(p=\) 0.935). In this case, the Spearman test showed a negative but non-significant correlation with age (\(r=-\) 0.050, \(p=\) 0.722).

Regarding gender (34 females, 20 males), no statistically significant differences were found using the Mann–Whitney U test, either for the MDD group (\(U=\) 311.5, \(p=\) 0.609) or for the healthy group (\(U=\) 367.5, \(p=\) 0.620). For education level, based on the categories basic (\(n=\) 17), medium (\(n=\) 21) and high (\(n=\) 16), no statistically significant differences were found for the depression group using the Kruskal–Wallis test (\(\chi ^2_{(2)}\) = 3.274, \(p=\) 0.195). However, significant differences were found for the healthy group (\(\chi ^2_{(2)}\) = 10.923, \(p=\) 0.004). There were more hits for the medium education level than for the basic (\(p=\) 0.09) and high (\(p=\) 0.026) levels.

Recognition scores for the depression group (H2)

Recognition scores for the individual emotions in the depression group were analyzed with the Friedman test, and significant differences were found (\(\chi ^2_{(6)}\) = 167.671, \(p<\) 0.001). Specifically, the recognition of sadness was compared with that of the remaining expressions, and sadness was found to be significantly worse recognized than neutral (\(p<\) 0.001), anger (\(p<\) 0.001), joy (\(p<\) 0.001) and surprise (\(p<\) 0.001). No significant differences were obtained between sadness and fear (\(p=\) 0.249), or between sadness and disgust (\(p=\) 1.000).

Influence of age of onset, duration of illness and the number of hospitalizations on emotion recognition for the depression group (H3)

For age of onset, a statistically significant correlation with emotion recognition was found using Spearman’s rank correlation coefficient (\(r= -\) 0.384, \(p=\) 0.004); the correlation was negative, indicating that the higher the age of onset, the fewer the hits. The duration of illness, measured in years of disease evolution, did not show a significant correlation with emotion recognition according to Spearman’s rank correlation coefficient (\(r= -\) 0.133, \(p=\) 0.339).

For the number of hospitalizations, no statistically significant correlation with emotion recognition was found using Spearman’s rank correlation coefficient (\(r=-\) 0.224, \(p=\) 0.103). The number of hospitalizations therefore did not appear to influence emotion recognition performance.
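To make the correlation analyses in this section more concrete, the sketch below computes Spearman's rank correlation with the classical formula based on squared rank differences. It is a simplified illustration: it assumes there are no tied values (the general case requires average ranks), the paired values are hypothetical rather than taken from the study, and the function names are introduced here for the example only.

```python
def ranks_without_ties(values):
    """Rank from 1 (smallest) to n; this sketch requires distinct values."""
    if len(set(values)) != len(values):
        raise ValueError("this simplified sketch does not handle ties")
    ordered = sorted(values)
    return [ordered.index(v) + 1 for v in values]


def spearman_rho(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)), no ties."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    rank_x = ranks_without_ties(x)
    rank_y = ranks_without_ties(y)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical paired observations (NOT the study's data):
# age of onset in years and number of hits out of 52 DVFs.
age_of_onset = [22, 30, 35, 41, 48, 55]
hits = [46, 47, 44, 40, 41, 36]
rho = spearman_rho(age_of_onset, hits)
print(round(rho, 3))
```

A negative coefficient, as in this toy example, corresponds to the direction described for age of onset: later onset going together with fewer hits.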

Influence of the results of different psychometric scales on emotion recognition for the depression group (H4)

The results of the psychometric scales assessing degree of psychopathology, excessive rumination, degree of functioning and quality of life in the depression group are summarized below in relation to emotion recognition.

Psychopathology

Spearman’s rank correlation coefficient showed no significant relationship between PANAS results and emotion recognition, either for Positive PANAS (\(r=\) 0.075, \(p=\) 0.592) or for Negative PANAS (\(r=\) 0.009, \(p=\) 0.949). The kind of affectivity (positive or negative) experienced over the last two weeks was therefore not relevant to emotion recognition in patients.

The influence of the degree of depression measured by the BDI-II on emotion recognition was studied through two different analyses. First, Spearman’s rank correlation coefficient was computed treating BDI-II results as a continuous variable, and no significant relationship was found (\(r= -\) 0.044, \(p=\) 0.752). Second, a Kruskal–Wallis test was carried out treating BDI-II results as a categorical variable with three categories: minimal (\(n=\) 25), mild and moderate (\(n=\) 17), and severe (\(n=\) 12) depression. No significant differences among groups were found (\(\chi ^2_{(2)}\) = 2.573, \(p=\) 0.276). In this sample, BDI-II results were therefore not associated with emotion recognition.
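The categorical analysis can be illustrated with a short sketch that maps a BDI-II total score to the severity bands given in the Methods (0–13, 14–19, 20–28, 29–63) and then collapses them into the three groups used in the Kruskal–Wallis test. The function names are assumptions introduced for this example; the authors' own scoring code is not described.

```python
def bdi_ii_severity(score):
    """Map a BDI-II total score (0-63) to the severity band from the Methods."""
    if not 0 <= score <= 63:
        raise ValueError("BDI-II total score must be between 0 and 63")
    if score <= 13:
        return "minimal"
    if score <= 19:
        return "mild"
    if score <= 28:
        return "moderate"
    return "severe"


def analysis_group(score):
    """Collapse the four bands into the three groups used in the analysis."""
    severity = bdi_ii_severity(score)
    if severity in ("mild", "moderate"):
        return "mild and moderate"
    return severity


for example_score in (5, 16, 25, 40):
    print(example_score, bdi_ii_severity(example_score), analysis_group(example_score))
```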

The degree of depression measured by the HRSD had no influence on emotion recognition; Spearman’s rank correlation coefficient showed no significant relationship between the variables (\(r= -\) 0.106, \(p=\) 0.452). Likewise, the relationship between STAI-T results and emotion recognition was not significant according to Spearman’s rank correlation coefficient (\(r= -\) 0.048, \(p=\) 0.732).

Excessive rumination and related

Statistically significant results could not be found between depressive rumination measured by the SDRS and emotion recognition using the Spearman’s rank correlation coefficient (\(r=\) 0.124, \(p=\) 0.372).

The influence of IUS-12 results on emotion recognition was analyzed by treating the scale both as a continuous and as a categorical variable. As a continuous variable, Spearman’s rank correlation coefficient showed no significant relationship for the Total IUS-12 (\(r= -\) 0.133, \(p=\) 0.340), the PIU (\(r= -\) 0.131, \(p=\) 0.344) or the IIU (\(r= -\) 0.124, \(p=\) 0.373). To convert IUS-12 results into a categorical variable, they were classified into two groups, 0 or 1, depending on whether they exceeded the cut-off point of the clinical reference sample63; the Mann–Whitney U test showed no influence for Total IUS-12 (\(U=\) 334.0, \(p=\) 0.620; cases 0, n = 25, cases 1, n = 29), PIU (\(U=\) 311.0, \(p=\) 0.393; cases 0, n = 24, cases 1, n = 30), or IIU (\(U=\) 355.5, \(p=\) 0.986; cases 0, n = 23, cases 1, n = 31).

Spearman’s rank correlation coefficient showed no significant relationship between Mini-CERTS results and emotion recognition, either for CET or constructive thinking (\(r=\) 0.098, \(p=\) 0.483), or for AAT or nonconstructive thinking (\(r= -\) 0.029, \(p=\) 0.834).

Functioning: functional assessment short test (FAST)

The FAST scale yields a total score ranging from 0 to 72, where 0 indicates the best functioning of the patient and 72 the worst. No statistically significant correlation was found with Spearman’s rank correlation coefficient between FAST and the recognition rates (\(r= -\) 0.205, \(p=\) 0.137). Therefore, the FAST score was not associated with the number of emotions recognized.
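The 0 to 72 range follows directly from the structure of the scale described in the Methods: 24 items, each rated from 0 (no difficulty) to 3 (severe difficulty). The sketch below shows that arithmetic under the assumption that the total is the plain sum of the item ratings; the function name and the example ratings are hypothetical.

```python
FAST_ITEM_COUNT = 24
FAST_ITEM_RANGE = (0, 1, 2, 3)  # 0 = no difficulty, 3 = severe difficulty


def fast_total(item_scores):
    """Sum the 24 FAST item ratings; the result lies between 0 and 72."""
    if len(item_scores) != FAST_ITEM_COUNT:
        raise ValueError("FAST requires exactly 24 item ratings")
    if any(score not in FAST_ITEM_RANGE for score in item_scores):
        raise ValueError("each FAST item must be rated 0, 1, 2 or 3")
    return sum(item_scores)


# Hypothetical ratings for one participant (NOT the study's data).
example_ratings = [1, 0, 2, 1] * 6
print(fast_total(example_ratings))
```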

Quality of life: World Health Organization Quality of Life Field Trial Version (WHOQOL-BREF)

Using the Spearman’s rank correlation coefficient, no statistical significance could be found in emotion recognition for any of the four domains that compose the scale: Physical health (\(r=\) 0.190, \(p=\) 0.170), Psychological (\(r= -\) 0.023, \(p=\) 0.871), Social relationships (\(r=\) 0.118, \(p=\) 0.396), and Environment (\(r= -\) 0.004, \(p=\) 0.975).

Discussion

The present work has focused on the evaluation of facial recognition of emotions in 54 stable patients with a diagnosis of major depression, compared with 54 healthy controls matched by age, gender and educational level. To carry out the evaluation, a tool previously validated by the research team was administered, using non-immersive virtual reality with DVFs. The hypotheses raised are discussed below in light of the results obtained.

As reported in previous studies41,42, patients with MDD showed a lower rate of emotional recognition on virtual emotional expressions than healthy controls for all emotions included, which supports hypothesis H1. The differences between groups were more pronounced for some negative emotions (fear, sadness and disgust). Within the depressed group, the worst recognized emotions were three of the four negative emotions presented, namely fear, sadness and disgust (with success rates of 48.9%, 63.0% and 66.9%, respectively), while the best recognized expressions were neutral (90.3%), surprise (89.6%) and joy (84.5%). Despite the growing interest in recent years in facial recognition of emotions with DVFs, a recent review on the recognition of emotions through VR revealed that there is still not enough research on the topic to have established standardized tools, and that it is difficult to find studies with conclusive results on all basic emotions43. Comparing our results with previous similar studies is therefore not straightforward, as most previous studies have been conducted with behavioral instruments using static stimuli. Nevertheless, worse facial emotion recognition in depression appears to be a robust finding, consistent with previous studies41. Although it is difficult to know the causes of these differences, some authors have suggested that this poorer performance may be explained by the tendency toward motor slowness and indecision that is a symptom of MDD3, and by a relationship between emotion recognition and alexithymia, arguing that the difficulties in recognizing and categorizing emotions may be mediated by a prior impairment in identifying and naming even one’s own emotions44. Regarding the recognition scores of the MDD and healthy groups in relation to age, it was observed, consistent with previous results, that the ability to recognize emotions decreases after the age of 60. In this study, the decrease in hits with increasing age was particularly marked in the MDD group.

Regarding intragroup emotion recognition in depression, contrary to what was expected under H2, sadness did not obtain the highest rate of emotional recognition compared to the other emotions. In fact, sadness was the second worst recognized emotion after fear. Some studies found similar results45,46, showing better recognition rates mainly for joy and also for anger, and thus ruling out the traditionally described bias for sad faces. In any case, reviews of this issue show considerable variability among studies42,47. To explain it, authors have suggested that an attenuation of the positive protective bias typical of healthy people could be more influential than the negative bias in depressive patients when emotional recognition is required48, especially when ambiguous and neutral stimuli are presented49.

In our study, the emotions that required the longest identification time for the depression group were neutral expression and sadness. The result of neutral expression was similar in the two groups (healthy controls and patients with depression) and probably had to do with the participant waiting until the emotional transition was complete to be able to respond. The case of sadness is different. In healthy controls it was the most rapidly recognized emotion [2.16 (0.77)], while in patients with depression it was the second emotion that took the longest to recognize [5.41 (2.75)], which supports the results of previous studies.

As expected, the duration of illness and the number of hospitalizations throughout life were not correlated with successful emotional recognition. Nevertheless, contrary to what was hypothesized under H3, a significant negative relationship was observed between age of onset and emotional recognition: the older the age of onset, the fewer the hits. In research, the age of onset of depression is usually collected as a descriptive variable of the sample, and thus its possible influence on emotion recognition has not been studied. Future studies on this issue would be valuable in order to increase the available data and allow results to be compared.

Regarding hypothesis H4, the results obtained did not confirm expectations. No relationship was found between the degree of psychopathology and emotion recognition, in contrast to previous findings of worse performance associated with the severity of depression42,50,51, or of attentional biases only at high levels of depressive mood52. The expected relationships between emotion recognition and excessive rumination (SDRS, IUS-12, Mini-CERTS), degree of functioning (FAST) or quality of life (WHOQOL-BREF) were not confirmed either46. The endogenous factor of depression may be worth keeping in mind when interpreting these unexpected results. The manual of mental disorders most used by clinicians today, on which our diagnoses are based (DSM-5), allows clinicians to diagnose major depressive disorder by meeting established criteria under which several types of depression can be included. Although some specifiers are available to refine the diagnosis, they are rarely considered in research. In addition, it should be noted that all patients in the depressed sample were being medicated, which could have influenced the results, as suggested by previous literature45,52,53,54.

The present study also has some limitations. First, antidepressants could interfere with emotional recognition in patients with depression. All the patients in the depression group were being treated with psychotropic drugs at the time of the assessment, which is therefore a limitation of this study. In the future, it would be interesting to compare the results of depressed samples with and without pharmacological interventions, so that neurocognitive deficits and medication effects could be reported. On the other hand, although the inclusion of the six basic emotions can be understood as a strength of our study, it could also be considered a limiting aspect because it makes it more difficult for participants to decide among the various options. Lastly, in line with the present study, future research in this field could analyze the content of the wrong responses in order to investigate possible response patterns and thus identify possible emotional biases.

Methods

Participants

The recruitment of participants, comprising individuals with MDD and healthy controls, was conducted over a period of 6 months (June–November 2021) at the Mental Health Service of the Complejo Hospitalario Universitario de Albacete, which serves a population of approximately 300,000 inhabitants. The authors state that all procedures contributing to this study comply with the ethical standards set by relevant national and institutional committees on human experimentation and the Helsinki Declaration of 1975, as revised in 2008. The study was granted ethical approval by the Clinical Research Ethics Committee of the hospital on April 26, 2022, with the reference number 2020/12/141.

The sample consisted of 108 participants, comprising 54 stable patients diagnosed with MDD and 54 healthy controls from the same demographic area. Participants were deemed stable when they had achieved complete remission of depression, having been without significant symptoms for a minimum of 2 months, scoring \(\le\) 6 on the Hamilton Rating Scale for Depression (HRSD), and having undergone no changes in medication in the last 3 months. The sample size was determined by the number of eligible stable patients available for inclusion in the study. A sensitivity analysis was performed using the G*Power program (version 3.1.9.6) to ensure that the sample size would provide an adequate level of statistical power, resulting in an effect size d = 0.640 for \(\alpha\) = 0.05, power = 0.95 (\(1 - \beta\)), two sample groups with n = 54, a non-centrality parameter \(\delta\) = 3.311 and a critical value t = 1.66. In line with our previous work40, participants were divided into three age groups (20–39, 40–59 and 60–79 years) and three educational levels (basic, medium and high), according to the Spanish National Institute of Statistics (INE).
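The logic of the sensitivity analysis can be approximated with a few lines of standard-library Python. This is a rough sketch, not a reproduction of G*Power: it replaces the noncentral t distribution with a normal approximation, and it assumes a one-tailed test, which appears consistent with the reported critical value of t = 1.66 for two groups of 54. Under those assumptions the smallest detectable effect size is d = (z for 1 − α plus z for the target power) × √(2/n).

```python
from math import sqrt
from statistics import NormalDist


def minimal_detectable_d(n_per_group, alpha=0.05, power=0.95):
    """Normal-approximation sensitivity analysis for two equal groups.

    Assumes a one-tailed test; returns (d, delta), where delta is the
    approximate non-centrality parameter and d the detectable effect size.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha)
    z_power = standard_normal.inv_cdf(power)
    delta = z_alpha + z_power
    d = delta * sqrt(2 / n_per_group)
    return d, delta


d, delta = minimal_detectable_d(54)
print(f"d = {d:.3f}, delta = {delta:.3f}")
```

The approximation gives values of roughly 0.63 for d and 3.29 for δ, slightly below the reported d = 0.640 and δ = 3.311, as expected when the normal distribution stands in for the t distribution.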

Table 2 presents the sociodemographic data for both the patient and control groups. The gender and level of education were matched between the two groups, resulting in similar ages for both samples.

For the group of patients diagnosed with MDD, the inclusion criteria were as follows:

- (a) A diagnosis of depressive disorder as determined by the Structured Clinical Interview for DSM-5 (SCID).
- (b) Clinical stability for at least 3 months prior to completing the SCID.
- (c) Being an outpatient.
- (d) An age between 20 and 79 years old.
- (e) Adequate proficiency in and comprehension of the Spanish language.
- (f) Signing an informed consent form.

The criteria for exclusion were as follows:

- (a) Meeting diagnostic criteria for another major mental disorder on Axis I of DSM-5, with the exception of nicotine dependence.
- (b) Presence of intellectual disability.
- (c) Presence of a medical condition that may affect the ability to recognize facial expressions.

The inclusion criteria for healthy controls were criteria (d), (e) and (f) described for the depression group. The exclusion criteria were criteria (b) and (c) described for the depression group, as well as having a personal history of mental illness.

Data collection procedure

Each patient’s eligibility for the study was determined through a screening evaluation conducted by the referring psychiatrist during a clinical appointment. Information on sociodemographic and clinical characteristics was collected during the baseline visit, including personal and family psychiatric history, comorbid medical conditions, substance use (current and/or past), current treatment (pharmacological and psychotherapeutic), previous hospitalizations and emergency department visits.

Data collected for sociodemographic variables included patient age, gender, race, marital status, place of residence (rural/urban), education level, employment status (active, unemployed, temporary incapacity for work, pensioner, housekeeper, student), and profession. Clinical data collected included personal somatic history (including neurological), toxic personal history, psychiatric history (including diagnosis, duration of illness, number of exacerbations and hospitalizations), current treatment regimen and relevant family history. The variables and measurement instruments used in the study were as follows:

-

Affectivity:

-

Severity of depressive symptoms:

-

Spanish version57 of the Beck Depression Inventory II (BDIII)58. It is a 21-item auto-administered scale with multiple choice answers. The obtained punctuation allows clinicians to determine the severity of depression: minimum (0–13), mild (14–19), moderate (20–28) and severe depression (29–63).

-

Spanish version59 of the 17-item Hamilton Rating Scale of Depression (HRSD)60. This scale was designed for patients previously diagnosed with depression in order to quantitatively assess the severity of symptoms and assess patient changes. It is composed of 17 items, which in turns have between three and five possible answers, scored from 0 to 2 or 0 to 4, respectively.

-

-

Anxiety trait:

-

Spanish version61 of the Trait subscale of State-Trait Anxiety Inventory (STAIA/STAI-T)62, which assesses self-reported anxious symptoms, referring to general or chronic experience of anxiety. We used the 7 items with a Likert-response format (0–3) of the STAIT63, since these items were identified through a factor analysis to be more strongly correlated with trait anxiety than depression64.

-

-

Rumination and related:

-

Spanish version65 of the Short Depressive Rumination Scale (SDRS) from the Leuven Adaptation of the Rumination on Sadness Scale (LARRS)66. It is an auto-administered scale formed by 4 items about rumination trends when depressed, in which frequency is rated from 1 to 10.

-

Spanish adaptation of the Intolerance of Uncertainty Scale, IUS-1267. This is a short scale of 12-item that assesses the level of intolerance of uncertainty using a Likert-response format (1–5). IUS-12 allows clinicians not only to evaluate the intolerance of uncertainty, but also to evaluate two more constructs: prospective anxiety (PIU) and inhibitory anxiety (IIU).

-

Spanish adaptation of the Mini-Cambridge-Exeter Repetitive Thinking Scale (Mini-CERTS)68, a 15-item scale with a Likert-response format (1–4) that classifies rumination into two dimensions: Concrete and Experiential Thinking (CET) or constructive thinking, and Analytic and Abstract Thinking (AAT) or nonconstructive thinking.

-

-

Functionality and quality of life:

-

The Functioning Assessment Short Test, FAST69. FAST is a 24-item scale designed to assess an individual’s functioning in six specific areas: autonomy, occupational functioning, cognitive functioning, financial issues, interpersonal relationships, and leisure time. The scale is quick and easy to administer, and responses are recorded using a Likert-response scale ranging from 0 to 3, with 0 indicating no difficulty and 3 indicating severe difficulty.

-

Spanish version70 of the WHOQOL-BREF Brief World Health Organization Quality Of Life, WHOQOL-BREF71. It is composed of 26 items: 24 are referred to the 24 aspects of the original WHOQOL-100, and the remaining 2 are global items about quality of life and general health. The answer format is a Likert-response scale (1–5), which correction gives rise to the evaluation of 4 domains: Physical, Psychological, Social relationships and Environment health.

Healthy controls were recruited in the same demographic area as the patients and primarily from similar cultural and social groups. The research team designed a data collection notebook that included both sociodemographic and clinical information. The sociodemographic data collected were identical to those collected from the patients with depression. In addition, clinical data such as personal somatic history (including neurological history), personal history of substance use and psychiatric disorders, and relevant family history were also collected. The data collection was conducted in a single individual session that lasted 30 min. Participants who met the study’s inclusion criteria were then presented with the facial stimuli.

All participants gave informed consent after receiving a detailed explanation of the study and before participating in the experiment. The acquired data were stored anonymously using dissociated databases.

Experimental procedure

The experiment was conducted in a single session lasting 40–50 min, which included the administration of both sociodemographic and clinical scales. To begin, participants were given a brief tutorial to familiarize themselves with the task. Throughout the session, all participants were presented with 52 DVFs on a 27-in. computer screen (see samples in Fig. 1). Each DVF began with a neutral expression, then transitioned to one of the six basic emotions (happiness, sadness, anger, fear, disgust, and surprise) or remained neutral, and ended on a neutral expression.

Figure 1. A few DVF samples created by the authors using Unity version 2020.2.2f1 (https://unity.com/).

The total presentation time for each DVF was 2 s. After viewing each DVF, participants were required to identify the emotion depicted by selecting one of the seven options provided on the screen below the DVF. Of the 52 faces presented, 50% showed less dynamic movement (only the most characteristic facial features of each emotion moved) and 50% showed more dynamic movement (facial features moved together with the neck and shoulders); the two types were interspersed.
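The response format described above (one of seven options per DVF) lends itself to a simple hit-rate tally. The sketch below is a minimal illustration, not the authors' scoring procedure. The function name `hit_rates` and the trial format, pairs of displayed and selected emotion, are assumptions, and the example trials are made up.

```python
from collections import defaultdict

# The seven response options: six basic emotions plus neutral.
OPTIONS = ("neutral", "happiness", "sadness", "anger",
           "fear", "disgust", "surprise")


def hit_rates(trials):
    """Return the proportion of correct identifications per displayed emotion.

    `trials` is an iterable of (displayed, selected) pairs (hypothetical format).
    """
    shown = defaultdict(int)
    hits = defaultdict(int)
    for displayed, selected in trials:
        if displayed not in OPTIONS or selected not in OPTIONS:
            raise ValueError(f"unknown option in trial: {(displayed, selected)}")
        shown[displayed] += 1
        if displayed == selected:
            hits[displayed] += 1
    return {emotion: hits[emotion] / shown[emotion] for emotion in shown}


if __name__ == "__main__":
    # Made-up trials for illustration only.
    example = [("happiness", "happiness"), ("fear", "surprise"),
               ("fear", "fear"), ("neutral", "neutral")]
    print(hit_rates(example))
```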

The DVFs employed in the study were presented from three different perspectives: 50% were displayed in a frontal view, 25% from the right profile, and 25% from the left profile. Other physical characteristics were also taken into consideration in the selection of the avatars. Specifically, the DVFs included two white avatars, both approximately 30 years of age, with varying eye color, skin tone, and hair characteristics, as well as two black avatars and two older avatars. Of the 52 DVFs presented, 8 showed black avatars and 8 showed older avatars. The complete characterization of the DVFs used in the study can be found in our previous experiment, in which they were validated with a sample of 204 healthy individuals26.
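The stated proportions can be turned into trial counts with a few lines of arithmetic. The sketch below does so for the 52 DVFs. It assumes that the black and older avatar presentations do not overlap, which the text does not state explicitly, and the helper name `split` is hypothetical.

```python
from fractions import Fraction

TOTAL_DVFS = 52


def split(total, shares):
    """Convert exact fractional shares of `total` into whole counts."""
    counts = {}
    for label, share in shares.items():
        count = total * share
        if count.denominator != 1:
            raise ValueError(f"{label}: {share} of {total} is not a whole number")
        counts[label] = int(count)
    return counts


# Proportions as stated in the text.
dynamism = split(TOTAL_DVFS, {"less dynamic": Fraction(1, 2),
                              "more dynamic": Fraction(1, 2)})
views = split(TOTAL_DVFS, {"frontal": Fraction(1, 2),
                           "right profile": Fraction(1, 4),
                           "left profile": Fraction(1, 4)})

# Assumption: the 8 black-avatar and 8 older-avatar presentations are disjoint,
# so the remainder corresponds to the two white avatars of about 30 years.
remaining_presentations = TOTAL_DVFS - 8 - 8

if __name__ == "__main__":
    print(dynamism)
    print(views)
    print(remaining_presentations)
```

Under these assumptions, the set contains 26 less dynamic and 26 more dynamic DVFs, 26 frontal and 13 per profile, and 36 presentations of the two younger white avatars.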

Statistical analysis

The statistical analyses were conducted using IBM SPSS Statistics (version 24) and Microsoft Excel. Quantitative variables were described by their mean and standard deviation, and qualitative variables by percentages. As the distribution of hits was not normal, non-parametric tests were primarily used to test the hypotheses, with statistical significance set at p < 0.05. Comparisons were performed using the Mann–Whitney U test for two groups and the Kruskal–Wallis test for three or more groups. Correlations between variables were examined using Spearman’s rank correlation coefficient.
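For readers unfamiliar with the rank-based statistics named above, the following sketch computes the Mann–Whitney U statistic and Spearman's rank correlation coefficient in plain Python. It is not the SPSS procedure used in the study, and it omits p-values and the Kruskal–Wallis test. The function names are hypothetical and the example values are made up for illustration.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value is tied with this one.
        while (end + 1 < len(order)
               and values[order[end + 1]] == values[order[start]]):
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return the U statistic of group_a (rank-sum formulation)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a = len(group_a)
    rank_sum_a = sum(ranks[:n_a])
    return rank_sum_a - n_a * (n_a + 1) / 2


def spearman_rho(x, y):
    """Return the Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(list(x)), average_ranks(list(y))
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


if __name__ == "__main__":
    # Made-up hit counts and ages, for illustration only.
    patients_hits = [38, 41, 35, 44]
    controls_hits = [45, 47, 43, 49]
    print(mann_whitney_u(patients_hits, controls_hits))
    print(spearman_rho([19, 25, 31, 40], patients_hits))
```

A small U for one group indicates that its values tend to rank below those of the other group. In practice, a statistics package would also supply the corresponding significance test.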

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

World Health Organization. Depression. https://www.who.int/news-room/fact-sheets/detail/depression (2021). Accessed 30 Mar 2022.

Ferrari, A. J. et al. Burden of depressive disorders by country, sex, age, and year: Findings from the global burden of disease study 2010. PLoS Med. 10, 1–12. https://doi.org/10.1371/journal.pmed.1001547 (2013).

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th Edition: DSM-5 (American Psychiatric Association, 2013).

Beck, A. T. Thinking and depression: I. Idiosyncratic content and cognitive distortions. Arch. Gen. Psychiatry 9, 324–333. https://doi.org/10.1001/archpsyc.1963.01720160014002 (1963).

Beck, A. T., Rush, A. J., Shaw, B. F. & Emery, G. Cognitive Therapy of Depression (Guilford Press, 1987).

Fernández-Sotos, P. et al. Social cognition remediation interventions: A systematic mapping review. PLoS One 14, 1–20. https://doi.org/10.1371/journal.pone.0218720 (2019).

Stubberud, J., Huster, R., Hoorelbeke, K., Hammar, A. & Hagen, B. Improved emotion regulation in depression following cognitive remediation: A randomized controlled trial. Behav. Res. Ther. 147, 103991. https://doi.org/10.1016/j.brat.2021.103991 (2021).

Cusi, A. M., Nazarov, A., MacQueen, G. M. & McKinnon, M. C. Theory of mind deficits in patients with mild symptoms of major depressive disorder. Psychiatry Res. 210, 672–674. https://doi.org/10.1016/j.psychres.2013.06.018 (2013).

Hilland, E., Landrø, N. I., Harmer, C. J., Maglanoc, L. A. & Jonassen, R. Within-network connectivity in the salience network after attention bias modification training in residual depression: Report from a preregistered clinical trial. Front. Human Neurosci. https://doi.org/10.3389/fnhum.2018.00508 (2018).

Inoue, Y., Yamada, K. & Kanba, S. Deficit in theory of mind is a risk for relapse of major depression. J. Affect. Disord. 95, 125–127. https://doi.org/10.1016/j.jad.2006.04.018 (2006).

Mattern, M. et al. Behavioral evidence for an impairment of affective theory of mind capabilities in chronic depression. Psychopathology 48, 240–250. https://doi.org/10.1159/000430450 (2015).

Cheeta, S., Beevers, J., Chambers, S., Szameitat, A. & Chandler, C. Seeing sadness: Comorbid effects of loneliness and depression on emotional face processing. Brain Behav. 11, e02189. https://doi.org/10.1002/brb3.2189 (2021).

Bora, E. & Berk, M. Theory of mind in major depressive disorder: A meta-analysis. J. Affect. Disord. 191, 49–55. https://doi.org/10.1016/j.jad.2015.11.023 (2016).

Disner, S. G., Beevers, C. G., Haigh, E. A. & Beck, A. T. Neural mechanisms of the cognitive model of depression. Nat. Rev. Neurosci. 12, 467–477. https://doi.org/10.1038/nrn3027 (2011).

Mayer, J. D., Salovey, P., Caruso, D. R. & Sitarenios, G. Emotional intelligence as a standard intelligence. Emotion 1, 232–242. https://doi.org/10.1037/1528-3542.1.3.232 (2001).

Pinkham, A. E. et al. The social cognition psychometric evaluation study: Results of the expert survey and RAND panel. Schizophr. Bull. 40, 813–823. https://doi.org/10.1093/schbul/sbt081 (2013).

Zhang, R. et al. Rumination network dysfunction in major depression: A brain connectome study. Prog. Neuropsychopharmacol. Biol. Psychiatry 98, 109819. https://doi.org/10.1016/j.pnpbp.2019.109819 (2020).

Segal, Z. V., Williams, J. M. G. & Teasdale, J. D. Mindfulness-Based Cognitive Therapy for Depression: A New Approach to Preventing Relapse (Guilford Press, 2002).

Raes, F., Hermans, D. & Williams, J. Negative bias in the perception of others’ facial emotional expressions in major depression: The role of depressive rumination. J. Nerv. Ment. Dis. 194, 796–799. https://doi.org/10.1097/01.nmd.0000240187.80270.bb (2006).

Suslow, T., Wildenauer, K. & Günther, V. Ruminative response style is associated with a negative bias in the perception of emotional facial expressions in healthy women without a history of clinical depression. J. Behav. Ther. Exp. Psychiatry 62, 125–132. https://doi.org/10.1016/j.jbtep.2018.10.004 (2019).

Inoue, Y., Tonooka, Y., Yamada, K. & Kanba, S. Deficiency of theory of mind in patients with remitted mood disorder. J. Affect. Disord. 82, 403–409. https://doi.org/10.1016/j.jad.2004.04.004 (2004).

Weightman, M. J., Air, T. M. & Baune, B. T. A review of the role of social cognition in major depressive disorder. Front. Psychiatry https://doi.org/10.3389/fpsyt.2014.00179 (2014).

Münkler, P., Rothkirch, M., Dalati, Y., Schmack, K. & Sterzer, P. Biased recognition of facial affect in patients with major depressive disorder reflects clinical state. PLoS One 10, 1–16. https://doi.org/10.1371/journal.pone.0129863 (2015).

Hamilton, J. P. et al. Default-mode and task-positive network activity in major depressive disorder: Implications for adaptive and maladaptive rumination. Biol. Psychiatry 70, 327–333. https://doi.org/10.1016/j.biopsych.2011.02.003 (2011).

de Paiva-Silva, A. I., Pontes, M. K., Aguiar, J. S. R. & de Souza, W. C. How do we evaluate facial emotion recognition?. Psychol. Neurosci. 9, 153–175. https://doi.org/10.1037/pne0000047 (2016).

García, A. S. et al. Design of reliable virtual human facial expressions and validation by healthy people. Integrat. Comput. Aided Eng. 27, 287–299. https://doi.org/10.3233/ICA-200623 (2020).

Dyck, M. et al. Recognition profile of emotions in natural and virtual faces. PLoS One 3, 1–8. https://doi.org/10.1371/journal.pone.0003628 (2008).

Gutiérrez-Maldonado, J., Rus-Calafell, M. & González-Conde, J. Creation of a new set of dynamic virtual reality faces for the assessment and training of facial emotion recognition ability. Virtual Reality 18, 61–71. https://doi.org/10.1007/s10055-013-0236-7 (2014).

Maples-Keller, J. L., Bunnell, B. E., Kim, S.-J. & Rothbaum, B. O. The use of virtual reality technology in the treatment of anxiety and other psychiatric disorders. Harv. Rev. Psychiatry 25, 103–113. https://doi.org/10.1097/HRP.0000000000000138 (2017).

Eggleston, A., Tsantani, M., Over, H. & Cook, R. Preferential looking studies of trustworthiness detection confound structural and expressive cues to facial trustworthiness. Sci. Rep. 12, 17709. https://doi.org/10.1038/s41598-022-21586-6 (2022).

Witkower, Z., Hill, A. K., Koster, J. & Tracy, J. L. Is a downwards head tilt a cross-cultural signal of dominance? Evidence for a universal visual illusion. Sci. Rep. 12, 365. https://doi.org/10.1038/s41598-021-04370-w (2022).

Riva, G., Malighetti, C. & Serino, S. Virtual reality in the treatment of eating disorders. Clin. Psychol. Psychother. 28, 477–488. https://doi.org/10.1002/cpp.2622 (2021).

Lott, L. L., Spengler, F. B., Stächele, T., Schiller, B. & Heinrichs, M. EmBody/EmFace as a new open tool to assess emotion recognition from body and face expressions. Sci. Rep. 12, 14165. https://doi.org/10.1038/s41598-022-17866-w (2022).

Fu, G. et al. A method for diagnosing depression: Facial expression mimicry is evaluated by facial expression recognition. J. Affect. Disord. 323, 809–818. https://doi.org/10.1016/j.jad.2022.12.029 (2023).

Brimelow, R. E., Dawe, B. & Dissanayaka, N. N. Preliminary research: Virtual reality in residential aged care to reduce apathy and improve mood. Cyberpsychol. Behav. Soc. Netw. 23, 165–170. https://doi.org/10.1089/cyber.2019.0286 (2020).

Brimelow, R. E., Thangavelu, K., Beattie, R. & Dissanayaka, N. N. Feasibility of group-based multiple virtual reality sessions to reduce behavioral and psychological symptoms in persons living in residential aged care. J. Am. Med. Dir. Assoc. 23, 831-837.e2. https://doi.org/10.1016/j.jamda.2021.07.026 (2022).

Wang, Q. & Li, J. A desktop VR prototype for industrial training applications. Virtual Reality 7, 187–197. https://doi.org/10.1007/s10055-004-0127-z (2004).

Dubovi, I., Levy, S. T. & Dagan, E. Now I know how! The learning process of medication administration among nursing students with non-immersive desktop virtual reality simulation. Comput. Educ. 113, 16–27. https://doi.org/10.1016/j.compedu.2017.05.009 (2017).

de Mello Monteiro, C. B. et al. Short-term motor learning through non-immersive virtual reality task in individuals with Down syndrome. BMC Neurol. 17, 71. https://doi.org/10.1186/s12883-017-0852-z (2017).

Fernández-Sotos, P. et al. Validation of dynamic virtual faces for facial affect recognition. PLoS One 16, 1–15. https://doi.org/10.1371/journal.pone.0246001 (2021).

Dalili, M. N., Penton-Voak, I. S., Harmer, C. J. & Munafò, M. R. Meta-analysis of emotion recognition deficits in major depressive disorder. Psychol. Med. 45, 1135–1144. https://doi.org/10.1017/S0033291714002591 (2015).

Krause, F. C., Linardatos, E., Fresco, D. M. & Moore, M. T. Facial emotion recognition in major depressive disorder: A meta-analytic review. J. Affect. Disord. 293, 320–328. https://doi.org/10.1016/j.jad.2021.06.053 (2021).

Marín-Morales, J., Llinares, C., Guixeres, J. & Alcañiz, M. Emotion recognition in immersive virtual reality: From statistics to affective computing. Sensors https://doi.org/10.3390/s20185163 (2020).

Senior, C., Hassel, S., Waheed, A. & Ridout, N. Naming emotions in motion: Alexithymic traits impact the perception of implied motion in facial displays of affect. Emotion 20, 311–316. https://doi.org/10.1037/emo0000546 (2020).

Akhapkin, R. V., Volel, B. A., Shishorin, R. M., Ustyuzhanin, D. V. & Petelin, D. S. Recognition of facial emotion expressions in patients with depressive disorders: A prospective, observational study. Neurol. Ther. 10, 225–234. https://doi.org/10.1007/s40120-021-00231-w (2021).

Ronold, E. H., Joormann, J. & Hammar, A. Facing recovery: Emotional bias in working memory, rumination, relapse, and recurrence of major depression; an experimental paradigm conducted five years after first episode of major depression. Appl. Neuropsychol. Adult 27, 299–310. https://doi.org/10.1080/23279095.2018.1550406 (2020).

de Lima Bomfim, A. J., dos Santos Ribeiro, R. A. & Chagas, M. H. N. Recognition of dynamic and static facial expressions of emotion among older adults with major depression. Trends Psychiatry Psychother. 41, 159–166. https://doi.org/10.1590/2237-6089-2018-0054 (2019).

Duque, A. & Vázquez, C. Double attention bias for positive and negative emotional faces in clinical depression: Evidence from an eye-tracking study. J. Behav. Ther. Exp. Psychiatry 46, 107–114. https://doi.org/10.1016/j.jbtep.2014.09.005 (2015).

Bone, J. K. et al. Variation in recognition of happy and sad facial expressions and self-reported depressive symptom severity: A prospective cohort study. J. Affect. Disord. 257, 461–469. https://doi.org/10.1016/j.jad.2019.06.025 (2019).

Blanco, I. et al. Attentional biases in dysphoria when happy and sad faces are simultaneously presented. J. Behav. Ther. Exp. Psychiatry 65, 101499. https://doi.org/10.1016/j.jbtep.2019.101499 (2019).

Hümmer, K., Coenen, J., Konstantinidis, I., Lausberg, H. & Helmich, I. Impaired recognition of nonverbal expressions of emotions in depressed individuals. Psychiatry Res. 302, 114031. https://doi.org/10.1016/j.psychres.2021.114031 (2021).

Dawson, G. R., Post, A., Smart, T. S., Browning, M. & Harmer, C. J. Accuracy in recognising happy facial expressions is associated with antidepressant response to a NOP receptor antagonist but not placebo treatment. J. Psychopharmacol. 35, 1473–1478. https://doi.org/10.1177/02698811211044684 (2021).

Pringle, A., McCabe, C., Cowen, P. & Harmer, C. Antidepressant treatment and emotional processing: Can we dissociate the roles of serotonin and noradrenaline?. J. Psychopharmacol. 27, 719–731. https://doi.org/10.1177/0269881112474523 (2013).

Shiroma, P. R., Thuras, P., Johns, B. & Lim, K. O. Emotion recognition processing as early predictor of response to 8-week citalopram treatment in late-life depression. Int. J. Geriatr. Psychiatry 29, 1132–1139. https://doi.org/10.1002/gps.4104 (2014).

Sandín, B. et al. Escalas PANAS de afecto positivo y negativo: Validación factorial y convergencia transcultural. Psicothema 11, 37–51 (1999).

Watson, D., Clark, L. A. & Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070. https://doi.org/10.1037/0022-3514.54.6.1063 (1988).

Sanz, J., Perdigón, A. L. & Vázquez, C. The Spanish adaptation of Beck’s Depression Inventory-II (BDI-II): 2. Psychometric properties in the general population. Clin. Salud 14, 249–280 (2003).

Beck, A. T., Steer, R. A. & Brown, G. K. Manual for the Beck Depression Inventory-II (Psychological Corporation, 1998).

Ramos-Brieva, J. A. Validation of the Castilian version of the Hamilton rating scale for depression. Actas Luso-Espanolas Neurol. Psiquiatria Cie. Afines 14, 324–334 (1986).

Hamilton, M. A rating scale for depression. J. Neurol. Neurosurg. Psychiatry 23, 56–62. https://doi.org/10.1136/jnnp.23.1.56 (1960).

Buela-Casal, G., Guillén-Riquelme, A. & Seisdedos-Cubero, N. Manual del Cuestionario de Ansiedad Estado-Rasgo (STAI) (TEA Ediciones, 1982).

Spielberger, C. D., Gorsuch, R. L., Lushene, R., Vagg, P. R. & Jacobs, G. A. Manual for the State-Trait Anxiety Inventory (Consulting Psychologists Press, 1983).

Jensen, D., Cohen, J. N., Mennin, D. S., Fresco, D. M. & Heimberg, R. G. Clarifying the unique associations among intolerance of uncertainty, anxiety, and depression. Cogn. Behav. Ther. 45, 431–444. https://doi.org/10.1080/16506073.2016.1197308 (2016).

Bieling, P. J., Antony, M. M. & Swinson, R. P. The State-Trait Anxiety Inventory, Trait version: Structure and content re-examined. Behav. Res. Ther. 36, 777–788. https://doi.org/10.1016/S0005-7967(98)00023-0 (1998).

Ricarte, J. J., Aizpurúa, E., Ros, L., Latorre, J. M. & Raes, F. Psychometric properties of the Spanish short depressive rumination scale in a nonclinical sample. Span. J. Psychol. 21, E34. https://doi.org/10.1017/sjp.2018.35 (2018).

Raes, F., Hermans, D., Williams, J., Bijttebier, P. & Eelen, P. A “triple W”-model of rumination on sadness: Why am I feeling sad, what’s the meaning of my sadness, and wish I could stop thinking about my sadness (but I can’t!). Cogn. Ther. Res. 32, 526–541. https://doi.org/10.1007/s10608-007-9137-y (2008).

Carleton, R. N. et al. Increasingly certain about uncertainty: Intolerance of uncertainty across anxiety and depression. J. Anxiety Disord. 26, 468–479. https://doi.org/10.1016/j.janxdis.2012.01.011 (2012).

Douilliez, C., Heeren, A., Lefèvre, N., Watkins, E., Barnard, P. & Philippot, P. Validation de la version française d’un questionnaire évaluant les pensées répétitives constructives et non constructives. Can. J. Behav. Sci. 46, 185. https://doi.org/10.1037/a0033185 (2014).

Rosa, A. R. et al. Validity and reliability of the functioning assessment short test (FAST) in bipolar disorder. Clin. Pract. Epidemiol. Ment. Health 3, 5. https://doi.org/10.1186/1745-0179-3-5 (2007).

Espinoza, I., Osorio, P., Torrejón, M. J., Lucas-Carrasco, R. & Bunout, D. Validation of the WHOQOL-BREF quality of life questionnaire among Chilean older people. Rev. Med. Chil. 139, 579–586. https://doi.org/10.4067/S0034-98872011000500003 (2011).

The WHOQOL Group. Development of the World Health Organization WHOQOL-BREF Quality of Life Assessment. Psychol. Med. 28, 551–558. https://doi.org/10.1017/S0033291798006667 (1998).

Acknowledgements

Grants PID2020-115220RB-C21 and EQC2019-006063-P funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way to make Europe”. This work was also partially funded by CIBERSAM-ISCIII and co-funded by “ERDF A way to make Europe”.

Author information

Contributions

A.F.-C. and P.F.-S. conceived the experiment, M.M. and A.S.G. conducted the experiment, and J.J.R. and M.J.M. analyzed the results. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Monferrer, M., García, A.S., Ricarte, J.J. et al. Facial emotion recognition in patients with depression compared to healthy controls when using human avatars. Sci Rep 13, 6007 (2023). https://doi.org/10.1038/s41598-023-31277-5

DOI: https://doi.org/10.1038/s41598-023-31277-5
