Abstract

This work proposes a stochastic variational deep kernel learning method for the data-driven discovery of low-dimensional dynamical models from high-dimensional noisy data. The framework is composed of an encoder that compresses high-dimensional measurements into low-dimensional state variables, and a latent dynamical model for the state variables that predicts the system evolution over time. The training of the proposed model is carried out in an unsupervised manner, i.e., not relying on labeled data. Our learning method is evaluated on the motion of a pendulum (a well-studied baseline for nonlinear model identification and control with continuous states and control inputs) measured via high-dimensional noisy RGB images. Results show that the method can effectively denoise measurements, learn compact state representations and latent dynamical models, as well as identify and quantify modeling uncertainties.

Introduction

Understanding the evolution of dynamical systems over time by discovering their governing laws is essential for science and engineering1. Traditionally, governing equations are derived from physical principles, such as conservation laws and symmetries. However, the governing laws are often difficult to unveil for many systems exhibiting strongly nonlinear behaviors. These complex behaviors are typically captured by high-dimensional noisy measurements, which makes it especially hard to identify the underlying principles. On the other hand, while measurement data are often abundant for many dynamical systems, physical equations, if known, may not exactly govern the actual system evolution due to various uncertainties.

The progress of Machine Learning2 and Deep Learning3, combined with the availability of large amounts of data, has paved the road for new paradigms for the analysis and understanding of dynamical systems1. These new paradigms are not limited to the discovery of governing laws for system evolution, and have brought revolutionary advancements to the field of dynamical system control. In particular, Reinforcement Learning4 (RL) has opened the door to model-free control directly from high-dimensional noisy measurements, in contrast to the traditional control techniques that rely on accurate physical models. RL has found its success in the nature-inspired learning paradigm through interaction with the world, in which the control law is solely a function of the measurements and learned by iteratively evaluating its performance a posteriori, i.e., after being applied to the system. RL stands out especially in the control of complex dynamical systems5. However, RL algorithms may suffer from high computational cost and data inefficiency as a result of disregarding any prior knowledge about the world.

While data are often high-dimensional, many physical systems exhibit low-dimensional behaviors, effectively described by a limited number of latent state variables that can capture the principal properties of the systems. The process of encoding high-dimensional measurements into a low-dimensional latent space and extracting the predominant state variables is called, in the context of RL and Computer Science, State Representation Learning6,7. At the same time, its counterpart in Computational Science and Engineering is often referred to as Model Order Reduction8.

Reducing the data dimensionality and extracting the latent state variables is often the first step to explicitly represent a reduced model describing the system evolution. Due to their low dimensionality, such reduced models are often computationally lightweight and can be efficiently queried for making predictions of the dynamics9 and for model-based control, e.g., Model Predictive Control10 and Model-based RL4. The problem of dimensionality reduction and reduced-order modeling is traditionally tackled by the Singular Value Decomposition11 (SVD) (depending on the context, the SVD is often referred to as Principal Component Analysis12 or Proper Orthogonal Decomposition13). Examples include the Dynamic Mode Decomposition14,15, sparse identification of latent dynamics16 (SINDy), operator inference17,18,19, and Gaussian process surrogate modeling20. More recently, Deep Learning3, especially a specific type of neural network (NN) termed Autoencoder3 (AE), has been employed to learn compact state representations successfully. Unlike the SVD, an AE learns a nonlinear mapping from the high-dimensional data space to a low-dimensional latent space through an NN called encoder, as well as an inverse mapping through a decoder. AEs can be viewed as a nonlinear generalization of the SVD, enabling more powerful information compression and better expressivity. AEs have been used for manifold learning21, in combination with SINDy for latent coordinate discovery22, and in combination with NN-based surrogate models for latent representation learning towards control23,24.

Whether we aim to identify parameters of a physical equation or learn the entire system evolution from data, we may face an unavoidable challenge stemming from data noise. Inferring complex dynamics from noisy data is not straightforward, as the identification, understanding and quantification of various uncertainties is often required. For example, uncertainties may derive from noise-corrupted sensor measurements, system parameters (e.g., uncertain mass, geometry, or initial conditions), modeling and/or approximation processes, and uncertain system behaviors that may be chaotic (e.g., in the motion of a double pendulum) or affected by unknown disturbances (e.g., uncertain external forces or inaccurate actuation). When data-driven methods consider stochasticity and uncertainty quantification, AEs are often replaced with Variational AEs25 (VAEs) for learning low-dimensional states as probabilistic distributions. Samples from these distributions can be used for the construction of latent state models via Gaussian models26,27,28,29,30 and nonlinear NN-based models31,32. However, NN-based latent models often disregard the distinction among uncertainty sources, especially between the data noise in the measurements and the modeling uncertainties stemming from the learning process, and only estimate the overall uncertainty on the latent state space through the encoder of a VAE model. We argue, however, that disentangling the uncertainty sources is critical for identifying the governing laws and discovering the latent reduced-order dynamics.

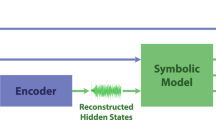

In this work, we propose a data-driven framework for the dimensionality reduction, latent-state model learning, and uncertainty quantification based on high-dimensional noisy measurements generated by unknown dynamical systems (see Fig. 1). In particular, we introduce a Deep Kernel Learning33 (DKL) encoder, which combines the highly expressive NN with a kernel-based probabilistic model of Gaussian process34 (GP) to reduce the dimensionality and quantify the uncertainty in the noisy measurements simultaneously, followed by a DKL latent-state forward model that predicts the system dynamics with quantifiable modeling uncertainty, and an NN-based decoder designed to enable reconstruction, prevent representation-collapsing, and improve interpretability. Endowed with quantified uncertainties, such a widely applicable and computationally efficient method for manifold and latent model learning is essential for data-driven physical modeling, control, and digital twinning.

Preliminaries

In scalar-valued supervised learning, we have a set of M d-dimensional input samples \({\textbf{X}}=[{\textbf{x}}_1, \dots , {\textbf{x}}_M] \in {\mathscr {X}} \subset {\mathbb {R}}^d\) and the corresponding set of target data \({\textbf{y}}=[{y}_1, \dots , {y}_M]^T\) \(\in {\mathscr {Y}} \subset {\mathbb {R}}\) related by some unknown function \(f^{\#}: {\mathscr {X}} \rightarrow {\mathscr {Y}}\). The goal is to find a function f that best approximates \(f^{\#}\). Many function approximators can be used to learn f, but here we introduce Gaussian process regression (GPR)34—a non-parametric method for data-driven surrogate modeling and uncertainty quantification (UQ), deep NNs—a popular class of parametric function approximators of Deep Learning, and the Deep Kernel Learning33 (DKL) that combines the nonlinear expressive power of deep NNs with the advantages of kernel methods in UQ.

Gaussian process regression

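A GP is a collection of random variables, any finite number of which follow a joint Gaussian distribution34:

\[ f({\textbf{x}}) \sim \mathcal{GP}\big(\mu ({\textbf{x}}), k({\textbf{x}},{\textbf{x}}';\varvec{\gamma })\big), \qquad y = f({\textbf{x}}) + \varepsilon , \qquad \varepsilon \sim {\mathscr {N}}(0, \sigma _\varepsilon ^2), \tag{1} \]

where the GP is characterized by its mean function \(\mu ({\textbf{x}}) = {\mathbb {E}}[f({\textbf{x}})]\) and covariance/kernel function \(k({\textbf{x}},{\textbf{x}}';\varvec{\gamma })=k_{\varvec{\gamma }}({\textbf{x}},{\textbf{x}}')={\mathbb {E}}[(f({\textbf{x}})-\mu ({\textbf{x}}))(f({\textbf{x}}')-\mu ({\textbf{x}}'))]\) with hyperparameters \(\varvec{\gamma }\), \({\textbf{x}}\) and \({\textbf{x}}'\) are two input locations, and \(\varepsilon\) is an independently added Gaussian noise term with variance \(\sigma _\varepsilon ^2\). A popular choice of the kernel is the automatic relevance determination (ARD) squared exponential (SE) kernel:

\[ k_{\varvec{\gamma }}({\textbf{x}},{\textbf{x}}') = \sigma _f^2 \exp \Big ( -\frac{1}{2} \sum _{j=1}^{d} \frac{(x_j - x'_j)^2}{l_j^2} \Big ), \tag{2} \]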

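where \(\sigma _f\) is the standard deviation hyperparameter and \(l_j\) (\(1\le j\le d\)) is the lengthscale along each individual input direction. The optimal values of GP hyperparameters \([\varvec{\gamma },\sigma _\varepsilon ^2]=[\sigma _f^2, l_1, \dots , l_d,\sigma _\varepsilon ^2]\) can be estimated via maximum marginal likelihood given the training targets \({\textbf{y}}\)34:

\[ [\varvec{\gamma }^*, \sigma _\varepsilon ^{2*}] = \arg \max _{\varvec{\gamma }, \sigma _\varepsilon ^2} \log p({\textbf{y}}|{\textbf{X}}) = \arg \max _{\varvec{\gamma }, \sigma _\varepsilon ^2} \Big \{ -\frac{1}{2} ({\textbf{y}}-\mu ({\textbf{X}}))^T \big (k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}})+\sigma _\varepsilon ^2{\textbf{I}}\big )^{-1} ({\textbf{y}}-\mu ({\textbf{X}})) - \frac{1}{2} \log \big |k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}})+\sigma _\varepsilon ^2{\textbf{I}}\big | - \frac{M}{2} \log (2\pi ) \Big \}. \tag{3} \]

Optimizing the GP hyperparameters through Eq. (3) requires repeatedly inverting the covariance matrix \(k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}})+\sigma _\varepsilon ^2{\textbf{I}}\), which can be very expensive or even intractable in the cases of high-dimensional inputs (e.g., images with thousands of pixels) or big datasets (\(M\gg 1\)).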

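Given the training data of input-output pairs \(({\textbf{X}},{\textbf{y}})\), Bayes’ rule gives a Gaussian posterior distribution of the noise-free outputs \({\textbf{f}}^*\) at unseen test inputs \({\textbf{X}}^*\):

\[ {\textbf{f}}^*|{\textbf{X}}^*,{\textbf{X}},{\textbf{y}} \sim {\mathscr {N}}(\varvec{\mu }^*, \varvec{\Sigma }^*), \quad \varvec{\mu }^* = \mu ({\textbf{X}}^*) + k_{\varvec{\gamma }}({\textbf{X}}^*,{\textbf{X}})\big (k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}})+\sigma _\varepsilon ^2{\textbf{I}}\big )^{-1}({\textbf{y}}-\mu ({\textbf{X}})), \quad \varvec{\Sigma }^* = k_{\varvec{\gamma }}({\textbf{X}}^*,{\textbf{X}}^*) - k_{\varvec{\gamma }}({\textbf{X}}^*,{\textbf{X}})\big (k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}})+\sigma _\varepsilon ^2{\textbf{I}}\big )^{-1}k_{\varvec{\gamma }}({\textbf{X}},{\textbf{X}}^*). \tag{4} \]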
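As an illustration of GPR with the ARD SE kernel, the following is a minimal sketch in plain Python, assuming a zero-mean prior and a single test input. The helper names (`ard_se_kernel`, `cholesky`, `gp_posterior`) are hypothetical and chosen for this example only. A Cholesky factorization replaces the explicit matrix inverse, which is the usual numerically stable choice.

```python
import math

def ard_se_kernel(x, y, sigma_f, lengthscales):
    """ARD squared-exponential kernel between two input vectors."""
    sq = sum(((a - b) / l) ** 2 for a, b, l in zip(x, y, lengthscales))
    return sigma_f ** 2 * math.exp(-0.5 * sq)

def cholesky(A):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def solve_lower(L, b):
    """Solve L v = b by forward substitution."""
    v = []
    for i in range(len(b)):
        v.append((b[i] - sum(L[i][k] * v[k] for k in range(i))) / L[i][i])
    return v

def solve_lower_transposed(L, b):
    """Solve L^T v = b by back substitution."""
    n = len(b)
    v = [0.0] * n
    for i in reversed(range(n)):
        v[i] = (b[i] - sum(L[k][i] * v[k] for k in range(i + 1, n))) / L[i][i]
    return v

def gp_posterior(X, y, x_star, sigma_f, lengthscales, noise_var):
    """Posterior mean and variance of the noise-free output at one test input
    (zero-mean prior assumed)."""
    n = len(X)
    K = [[ard_se_kernel(X[i], X[j], sigma_f, lengthscales)
          + (noise_var if i == j else 0.0) for j in range(n)] for i in range(n)]
    L = cholesky(K)
    k_star = [ard_se_kernel(x_star, xi, sigma_f, lengthscales) for xi in X]
    alpha = solve_lower_transposed(L, solve_lower(L, y))  # (K + noise_var * I)^{-1} y
    v = solve_lower(L, k_star)
    mean = sum(ks * a for ks, a in zip(k_star, alpha))
    var = ard_se_kernel(x_star, x_star, sigma_f, lengthscales) - sum(vi * vi for vi in v)
    return mean, var

# Toy one-dimensional data set, for illustration only.
X_train = [[0.0], [1.0], [2.0]]
y_train = [0.0, 1.0, 0.0]
mean, var = gp_posterior(X_train, y_train, [1.5], 1.0, [1.0], 1e-2)
```

The factorization costs grow cubically with the number of training points M, which is the scalability issue that motivates the variational treatment discussed later.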

Deep neural networks

NNs are parametric universal function approximators35 composed of multiple layers sequentially stacked together. Each layer contains a set of learnable parameters known as weights and biases. Collected in a vector \(\theta\), these NN parameters are optimized via backpropagation3 for a function f that best approximates \(f^{\#}\):

where \(g({\textbf{x}};\theta )\) denotes an NN with input \({\textbf{x}}\) and parameters \(\theta\). There are three prominent types of NN layers3: fully-connected, convolutional, and recurrent. In practice, the three types of layers are often combined to deal with different characteristics of data and increase the expressivity of the NN model.

Deep kernel learning

To mitigate the limited scalability of GPs to high-dimensional inputs, often referred to as the curse of dimensionality, Deep Kernel Learning33,36,37 was developed to exploit the nonlinear expressive power of deep NNs to learn compact data representations while maintaining the probabilistic features of kernel-based GP models for UQ. The key idea of DKL is to embed a deep NN, representing a nonlinear mapping from the data to the feature space, into the kernel function for GPR as follows:

\[ k_{\text{DKL}}({\textbf{x}},{\textbf{x}}';\varvec{\gamma },\varvec{\theta }) = k\big (g({\textbf{x}};\varvec{\theta }), g({\textbf{x}}';\varvec{\theta });\varvec{\gamma }\big ), \tag{6} \]

where \(g({\textbf{x}};\varvec{\theta })\) is an NN with input \({\textbf{x}}\) and parameters (weights and biases) \(\varvec{\theta }\). Similar to conventional GPs, different kernel functions can be chosen. The GP hyperparameters and the NN parameters are jointly trained by maximizing the marginal likelihood as in Eq. (3).
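The deep kernel construction can be sketched in a few lines of plain Python. This is a minimal illustration only: the two-layer network, its fixed weights in `theta`, and the function names are assumptions made for the example, and no training is performed.

```python
import math

def mlp_features(x, W1, b1, W2, b2):
    """Tiny two-layer network g(x; theta) with a tanh hidden layer."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    return [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(W2, b2)]

def se_kernel(a, b, sigma_f=1.0, lengthscale=1.0):
    """Isotropic squared-exponential base kernel on feature vectors."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return sigma_f ** 2 * math.exp(-0.5 * sq / lengthscale ** 2)

def deep_kernel(x, x_prime, theta, sigma_f=1.0, lengthscale=1.0):
    """Base kernel evaluated on network features instead of raw inputs."""
    return se_kernel(mlp_features(x, *theta), mlp_features(x_prime, *theta),
                     sigma_f, lengthscale)

# Hypothetical fixed weights mapping 4-dimensional inputs to 2 features.
theta = (
    [[0.5, -0.2, 0.1, 0.0], [0.3, 0.8, -0.5, 0.2], [-0.4, 0.1, 0.9, -0.3]],  # W1
    [0.0, 0.1, -0.1],                                                        # b1
    [[1.0, -1.0, 0.5], [0.2, 0.4, -0.6]],                                    # W2
    [0.0, 0.0],                                                              # b2
)

k_value = deep_kernel([1.0, 0.0, 0.5, -1.0], [0.9, 0.1, 0.4, -1.2], theta)
```

Because the base kernel only ever sees the low-dimensional features, the GP part of the model is shielded from the dimensionality of the raw inputs.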

Thanks to its strong expressive power and versatility, DKL has gained attention in many fields of scientific computing, such as computer vision33,38,39, natural language processing40, robotics36, and meta-learning41. However, DKL still suffers from computational inefficiency due to the need for repeatedly inverting the \(M\times M\) covariance matrix in Eq. (3) when the dataset is large (\(M\gg 1\)). In addition, the posterior will be intractable if we change to non-Gaussian likelihoods, and there is no efficient stochastic training3 (e.g., stochastic gradient descent) that is available for DKL models. All these facts make DKL unable to handle large datasets. To overcome these three limitations, stochastic variational DKL42 (SVDKL) was introduced. SVDKL utilizes variational inference34 to approximate the posterior distribution with the best fitting Gaussian to a set of inducing data points sampled from the posterior. Our framework is built upon the SVDKL model.

SVDKL is chosen over other popular deep learning tools for three main reasons: (i) compared with deterministic NN-based models, GPs, being kernel-based models, offer better quantification of uncertainties33,34, (ii) compared with Bayesian NNs43, SVDKL is computationally cheaper and can be integrated with any deep NN architecture, and (iii) compared with ensemble NNs, SVDKL is memory efficient as only a single model needs to be trained.

Methods

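In our work, we consider nonlinear dynamical systems generally written in the following form:

\[ \frac{d}{dt}{\textbf{s}}(t) = {\mathscr {F}}({\textbf{s}}(t), {\textbf{u}}(t)), \quad t \in [t_0, t_f], \quad {\textbf{s}}(t_0) = {\textbf{s}}_0, \tag{7} \]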

where \({\textbf{s}}(t) \in {\mathscr {S}} \subset {\mathbb {R}}^n\) is the state vector at time t, \({\textbf{u}}(t) \in {\mathscr {U}} \subset {\mathbb {R}}^m\) is the control input at time t, \({\mathscr {F}}: {\mathscr {S}} \times {\mathscr {U}} \rightarrow {\mathscr {S}}\) is a nonlinear function determining the evolution of the system given the current state \({\textbf{s}}(t)\) and control input \({\textbf{u}}(t)\), \({\textbf{s}}_0\) is the initial condition, and \(t_0\) and \(t_f\) are the initial and final time, respectively. In many real-world applications, the state \({\textbf{s}}(t)\) is not directly accessible and the function \({\mathscr {F}}\) is unknown. In spite of this, we can obtain indirect information about the systems through measurements from different sensor devices (measurements can derive, for example, from cameras, laser scanners, or inertial measurement units). Due to the time-discrete nature of the measurements, we indicate with \({\textbf{x}}_t\) the measurement vector at a generic time-step t, and \({\textbf{x}}_{t+1}\) the measurement at time-step \(t+1\).

Given a set of M d-dimensional measurements \({\textbf{X}}=[{\textbf{x}}_1, \dots , {\textbf{x}}_M] \in {\mathscr {X}} \subset {\mathbb {R}}^d\) with \(d \gg 1\) and control inputs \({\textbf{U}}=[{\textbf{u}}_1, \dots , {\textbf{u}}_{M-1}] \in {\mathscr {U}}\), we consider the problem of learning: (a) a meaningful representation of the unknown states, and (b) a surrogate model for \({\mathscr {F}}\). However, the high dimensionality and noise corruption of the measurement data make this two-task learning problem extremely challenging.

Learning latent state representation from measurements

To begin with, we introduce an SVDKL encoder \(E: {\mathscr {X}} \rightarrow {\mathscr {Z}}\) used to compress the measurements into a low-dimensional latent space \({\mathscr {Z}}\). Due to the measurement noise, rather than being deterministic, E should map each measurement to a distribution over the latent state space \({\mathscr {Z}}\). The SVDKL encoder is depicted in Fig. 2. A latent state sample can be obtained as:

where \(z_{i,t}\) is the sample from the \(i\)th GP with kernel k and mean m, \(g_E({\textbf{x}}_t; \varvec{\theta }_E)\) is the feature vector output of the NN part of the SVDKL encoder E, \(\varepsilon _E\) is an independently added noise, and \(|{\textbf{z}}|\) indicates the dimension of \({\textbf{z}}\).

Because we have no access to the actual state values, we cannot directly use supervised learning techniques to optimize the parameters \([\varvec{\theta }_E,\varvec{\gamma }_E, \varvec{\sigma }^2_E]\) of the SVDKL encoder. Therefore, we utilize a decoder neural network D to reconstruct the measurements given the latent state samples. These reconstructions, denoted by \(\hat{{\textbf{x}}}_t\), are also used to generate trainable gradients for the SVDKL encoder, which is a common practice for training VAEs. Similar to VAEs, an important aspect of the architecture is the bottleneck created by the low dimensionality of the learned state space \({\mathscr {Z}}\). While the SVDKL encoder E learns \(p({\textbf{z}}_t|{\textbf{x}}_t)\), the decoder D learns the inverse mapping \(p(\hat{{\textbf{x}}}_t|{\textbf{z}}_t)\) in which \(\hat{{\textbf{x}}}_t\) is the reconstruction of \({\textbf{x}}_t\). We call this autoencoding architecture SVDKL-AE. To the best of our knowledge, this is the first attempt at training a DKL model without labeled data, i.e., in an unsupervised manner.

Given a randomly sampled minibatch of measurements, we can define the loss function for an SVDKL-AE as follows:

where \(\hat{{\textbf{x}}}_t|{\textbf{z}}_t\sim {\mathscr {N}}(\varvec{\mu }_{\hat{{\textbf{x}}}_t},\varvec{\Sigma }_{\hat{{\textbf{x}}}_t})\) is obtained by decoding the samples of \({\textbf{z}}_t|{\textbf{x}}_t\) through D. By minimizing the loss function in Eq. (9) with respect to the encoder and decoder parameters, as analogously practiced with VAEs, we can obtain a compact representation of the measurements.
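Since the decoder output is described as a Gaussian distribution over reconstructions, one common way to score a reconstruction is the Gaussian negative log-likelihood. The sketch below assumes a diagonal covariance and averages over a minibatch. Whether the loss in Eq. (9) takes exactly this form is an assumption, and the function names are hypothetical.

```python
import math

def gaussian_nll(x, mu, var):
    """Negative log-likelihood of x under a diagonal Gaussian N(mu, diag(var))."""
    return sum(0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mu, var))

def minibatch_loss(batch_x, batch_mu, batch_var):
    """Average negative log-likelihood over a minibatch of measurements
    and the corresponding decoder means and variances."""
    n = len(batch_x)
    return sum(gaussian_nll(x, m, v)
               for x, m, v in zip(batch_x, batch_mu, batch_var)) / n

# Toy minibatch of two 3-pixel "measurements", for illustration only.
batch_x = [[0.1, 0.5, 0.9], [0.0, 0.2, 0.4]]
batch_mu = [[0.1, 0.4, 1.0], [0.1, 0.2, 0.3]]
batch_var = [[0.01, 0.01, 0.01], [0.01, 0.01, 0.01]]
loss = minibatch_loss(batch_x, batch_mu, batch_var)
```

With a fixed variance, minimizing this quantity reduces to minimizing a scaled squared reconstruction error.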

Though our SVDKL-AE resembles a VAE in terms of network architecture and training strategy, we highlight two major advantages of the SVDKL-AE, which have motivated its use in this work:

- The SVDKL encoder explicitly learns the full distribution \(p({\textbf{z}}|{\textbf{x}})\) from which we can sample the latent states \({\textbf{z}}\) reduced from the full-order states \({\textbf{x}}\). A VAE only learns the mean vector and covariance matrix (often chosen to be diagonal) of an assumed joint Gaussian distribution. SVDKL-AE is therefore expected to handle different types of complex distributions more effectively.

- SVDKL-AE can exploit the kernel structure of a Gaussian process to quantify uncertainties effectively, even in low-data regimes38,40,42. The kernel choice can be tailored to incorporate prior knowledge into the data-driven modeling.

Learning latent dynamical model

We aim to learn a surrogate dynamical model F predicting the system evolution given the latent state variables sampled from \({\mathscr {Z}}\) and the control inputs in \({\mathscr {U}}\). Due to the uncertainties present in the system, we learn a stochastic model \(F:{\mathscr {Z}} \times {\mathscr {U}} \rightarrow {\mathscr {Z}}\). Similar to E, the dynamical model F is constructed using an SVDKL architecture. The next latent states \({\textbf{z}}_{t+1}\) can be sampled with F:

where \(z_{i,t+1}\) is sampled from the \(i\)th GP, \(g_F({\textbf{z}}_t,{\textbf{u}}_t; \varvec{\theta }_F)\) is the feature vector output of the NN part of the SVDKL dynamical model F, and \(\varepsilon _F\) is a noise term.

Again, we do not have access to the true state values obtained by applying the (unknown) control law, but only the sequence of measurements at different time-steps. Here we employ a commonly used strategy in State Representation Learning31,32,44, which encodes the measurement \({\textbf{x}}_{t+1}\) into the distribution \(p({\textbf{z}}_{t+1}| {\textbf{x}}_{t+1})\) through the SVDKL encoder E, and uses such a distribution as the target for \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) given by the dynamical model F. Therefore, the dynamical model F is trained by minimizing the Kullback-Leibler divergence between the distributions \(p({\textbf{z}}_{t+1}| {\textbf{x}}_{t+1})\) and \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) (more details in the Appendix). The loss for training F is formulated as follows:

where \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) is obtained by feeding a sample from \(p({\textbf{z}}_t|{\textbf{x}}_t)\) and a control input \({\textbf{u}}_t\) to F.
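When both distributions are Gaussian with diagonal covariance, the Kullback-Leibler divergence has a closed form. The sketch below illustrates that case only. The diagonal-Gaussian assumption, the direction of the divergence (encoder target first, model prediction second), and the function name are assumptions made for this example rather than details taken from the Appendix.

```python
import math

def kl_diag_gaussians(mu_p, var_p, mu_q, var_q):
    """KL( N(mu_p, diag(var_p)) || N(mu_q, diag(var_q)) ) in closed form."""
    return 0.5 * sum(math.log(vq / vp) + (vp + (mp - mq) ** 2) / vq - 1.0
                     for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q))

# Target from the encoder applied to x_{t+1} (toy numbers).
mu_target, var_target = [0.2, -0.1], [0.05, 0.05]
# Prediction from the dynamical model given (z_t, u_t) (toy numbers).
mu_pred, var_pred = [0.25, -0.05], [0.08, 0.06]

loss_dynamics = kl_diag_gaussians(mu_target, var_target, mu_pred, var_pred)
```

The divergence is zero only when the predicted mean and variance match the target exactly, so the term penalizes both biased predictions and miscalibrated uncertainty.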

Joint training of models

Instead of training E and F separately, we train them jointly by allowing the gradients of the dynamical model F to flow through the encoder E as well. The overall loss function is

in which \(\beta =1.0\) is used to scale the contribution of the two loss terms.

Variational inference

The two SVDKL models in this work utilize variational inference to approximate the posterior distributions in Eqs. (8) and (10) with a known family of candidate distributions (e.g., joint Gaussian distributions). The need for variational inference stems from the stochastic gradient descent optimization procedure used for model training42. Therefore, we add two extra terms to the loss function in Eq. (12), one for each SVDKL model, in the following form:

in which \(p({\textbf{v}})\) is the posterior to be approximated over a collection of sampled locations \({\textbf{v}}\) termed inducing points, and \(q({\textbf{v}})\) represents an approximating candidate distribution. Similar to the original SVDKL work42, the inducing points are placed on a grid.
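Placing inducing points on a grid can be illustrated with a short helper. This is a sketch under stated assumptions: the grid bounds, the number of points per dimension, and the name `grid_inducing_points` are hypothetical and not taken from the experimental setup.

```python
import itertools

def grid_inducing_points(lower, upper, points_per_dim):
    """Regular grid over the box [lower, upper] in each feature dimension.

    Requires points_per_dim >= 2 so that both end points are included.
    """
    axes = []
    for lo, hi in zip(lower, upper):
        step = (hi - lo) / (points_per_dim - 1)
        axes.append([lo + k * step for k in range(points_per_dim)])
    # Cartesian product of the per-dimension axes gives the full grid.
    return [list(p) for p in itertools.product(*axes)]

# A 3 x 3 grid over a two-dimensional feature space (9 inducing points).
inducing = grid_inducing_points([-1.0, -1.0], [1.0, 1.0], 3)
```

The number of grid points grows exponentially with the feature dimension, which is one reason a grid is typically placed on low-dimensional NN features rather than on raw inputs.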

Results

Numerical example

For our experiments, we consider the pendulum described by the following equation:

where \(\phi\) is the angle of the pendulum, \(\ddot{\phi }\) is the angular acceleration, m is the mass, l is the length, and g denotes the gravitational acceleration. We assume no access to \(\phi\) or its derivatives, and the measurements are RGB images of size \(84 \times 84 \times 3\) obtained through an RGB camera. Examples of high-dimensional and noisy measurements are shown in Fig. 3.
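For orientation, a torque-driven pendulum can be simulated with a few lines of Python. The sketch assumes the textbook undamped form \(\ddot{\phi } = -(g/l)\sin \phi + u/(ml^2)\), which may differ from Eq. (14) in sign convention or damping. The step size, the parameter values, and the function names are likewise assumptions for illustration.

```python
import math

def pendulum_step(phi, phi_dot, u, dt=0.05, m=1.0, l=1.0, g=9.81):
    """One semi-implicit Euler step of an assumed torque-driven pendulum."""
    phi_ddot = -(g / l) * math.sin(phi) + u / (m * l ** 2)
    phi_dot = phi_dot + dt * phi_ddot   # update velocity first
    phi = phi + dt * phi_dot            # then position with the new velocity
    return phi, phi_dot

def rollout(phi0, phi_dot0, controls, **kwargs):
    """Simulate a trajectory of (angle, angular velocity) pairs."""
    states = [(phi0, phi_dot0)]
    for u in controls:
        states.append(pendulum_step(*states[-1], u, **kwargs))
    return states

# Constant small torque applied for 20 steps from the resting position.
trajectory = rollout(0.0, 0.0, [0.5] * 20)
```

In the setting of this work the angle itself is hidden, and each simulated state would only be observed through a rendered image.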

The measurements are collected by applying torque values u sampled from a random control law with different initial conditions. The training set is composed of 15,000 data tuples \(({\textbf{x}}_t,{\textbf{u}}_t, {\textbf{x}}_{t+1})\), while the test set is composed of 2,000 data tuples. Different random seeds are used for collecting the training and test sets. The complete list of hyperparameters used in our experiments is given in the Appendix.

Denoising

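In Fig. 4, we show the denoising capability of the proposed framework by visualizing the reconstructions of the high-dimensional noisy measurements. The measurements are corrupted by additive Gaussian noise \({\mathscr {N}}(0, \sigma ^2_x)\):

\[ \tilde{{\textbf{x}}}_t = {\textbf{x}}_t + \varepsilon _{\textbf{x}}, \qquad \varepsilon _{\textbf{x}} \sim {\mathscr {N}}(0, \sigma ^2_x). \tag{15} \]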

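Moreover, in Fig. 5, we show the reconstructions of the next latent states \({\textbf{z}}_{t+1}\) sampled from the dynamical model distribution \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) when the control input \({\textbf{u}}_t\) is corrupted by Gaussian noise \({\mathscr {N}}(0, \sigma ^2_u)\):

\[ \tilde{{\textbf{u}}}_t = {\textbf{u}}_t + \varepsilon _{\textbf{u}}, \qquad \varepsilon _{\textbf{u}} \sim {\mathscr {N}}(0, \sigma ^2_u). \tag{16} \]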
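The additive corruption of measurements and control inputs can be sketched as follows. The helper name `corrupt`, the toy values, and the fixed seed are assumptions for illustration. Note that the noise level is specified as a variance, so the standard deviation passed to the sampler is its square root.

```python
import random

def corrupt(values, sigma2, rng):
    """Add independent zero-mean Gaussian noise with variance sigma2 to each entry."""
    std = sigma2 ** 0.5
    return [v + rng.gauss(0.0, std) for v in values]

rng = random.Random(0)          # fixed seed for reproducibility
x_t = [0.2, 0.5, 0.9]           # stand-in for flattened pixel intensities
u_t = [0.3]                     # stand-in for a torque value

x_noisy = corrupt(x_t, 0.5, rng)   # measurement noise with variance 0.5
u_noisy = corrupt(u_t, 0.7, rng)   # control noise with variance 0.7
```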

Figure 5. Reconstructions \(\hat{{\textbf{x}}}_{t+1}\) with different noise levels in the control inputs \({\textbf{u}}_t\). As shown by the sharp reconstructions of \({\textbf{z}}_{t+1}\), the SVDKL forward model can denoise the corrupted control inputs \({\textbf{u}}_t\) and predict the dynamics accurately.

Finally, in Fig. 6, we show the reconstructions when \({\textbf{x}}_t\) and \({\textbf{u}}_t\) are simultaneously corrupted by Gaussian noise \({\mathscr {N}}(0, \sigma ^2_x)\) and \({\mathscr {N}}(0, \sigma ^2_u)\), respectively.

Figure 6. Reconstructions \(\hat{{\textbf{x}}}_{t+1}\) with different noise levels in both the measurements \(\tilde{{\textbf{x}}}_t\) and the control inputs \(\tilde{{\textbf{u}}}_t\). With both the measurements and control inputs corrupted by significant noise, the proposed model shows good performance in denoising.

In all three cases, our framework can properly denoise the input measurements by encoding the predominant features into the latent space. To support this claim, we show, in Fig. 7, the means of the current and next latent state distributions with different noise corruptions. It is worth noting that the means of such distributions are a high-quality representation of the actual dynamics of the pendulum. Due to the dimensionality (\(>2\)) of the latent state space, we use t-SNE45 to visualize the results in two-dimensional figures with the color bar representing the actual angle of the pendulum. The smooth change of the representation with respect to the true angle indicates its high quality. Moreover, even as the level of noise in the measurements and control inputs is increased dramatically, the changes in the means of the learned distributions remain insignificant because of the denoising capability of the proposed model.

Figure 7. t-SNE visualization for the means of current (top) and next (bottom) latent state distributions with different noise levels in the measurements and control inputs. The color bar represents the true angle of the pendulum. As expected for a good denoising scheme, the change in the means of the latent states is negligible even as the level of noise in the measurements and control inputs is increased significantly.

Prediction of dynamics

To better demonstrate how well the framework performs in prediction under uncertainties, we modify the pendulum dynamics in Eq. (14) to account for stochasticity due to, for example, external disturbances:

Again, we include an independently added Gaussian noise. While \(\varepsilon _{\textbf{x}}\) and \(\varepsilon _{\textbf{u}}\) are noise terms added to the noise-free measurements \({\textbf{x}}_t\) and control inputs \({\textbf{u}}_t\) to model, for example, the noise deriving from the sensor devices, \(\varepsilon _{dyn}\) approximates an unknown disturbance on the actual pendulum dynamics.

In Fig. 8, we show the means of \(p({\textbf{z}}_t|{\textbf{x}}_t)\) and \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) with different noise levels, and the corresponding (decoded) reconstructions of \({\textbf{z}}_{t+1}\) samples from \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\). From the mean of \(p({\textbf{z}}_t|{\textbf{x}}_t)\), we can notice that the SVDKL encoder properly denoises the measurements and extracts the latent state variables when both measurement noise and disturbance on the actual pendulum dynamics exist. The SVDKL dynamical model recovers the mean of \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) when the dynamical evolution of the pendulum is affected by an unknown stochastic disturbance. Even with a high level of disturbance, though the system evolution over time becomes stochastic and more difficult to predict, \(p({\textbf{z}}_{t+1}|{\textbf{z}}_t, {\textbf{u}}_t)\) can still capture and predict the evolution. Finally, we can visualize the overall uncertainty in the dynamics reflected by the reconstruction of \({\textbf{z}}_{t+1}\) via the decoder D. Note that the reconstructions in Fig. 8 are obtained by averaging 10 independent samples per data point.

Uncertainty quantification

In this subsection, we show that the proposed SVDKL-AE enables the quantification of uncertainties in model predictions. It is worth mentioning that visualizing UQ properly is widely recognized as a challenging task in unsupervised learning.

The learned latent state vector \({\textbf{z}}\) is 20-dimensional. To visualize the UQ capability of the proposed model, we select the ith component (\(i=12\) and \(i=13\) in Figs. 9 and 10, respectively) of the state vector that is correlated with the physical states, and depict its predictive uncertainty bounds for different noise levels (\(\sigma _x^2=0.0, \sigma _u^2=0.7\) and \(\sigma _x^2=0.5, \sigma _u^2=0.5\), respectively). Because we are investigating an unsupervised learning problem, the latent variables may not have a direct physical interpretation. However, a good latent representation should present strong correlation with the physical states, and proper UQ should reflect the existence of noise in the measurements \({\textbf{x}}\) and/or control inputs \({\textbf{u}}\).

Figure 9. Estimated uncertainties over the 12th component of the latent state vectors \({\textbf{z}}_t\) (a) and \({\textbf{z}}_{t+1}\) (b) predicted by the proposed model for a sampled trajectory. The y-position of the pendulum is given in (c), and the corresponding high-dimensional noisy measurements (\(\sigma _x^2=0.0, \sigma _u^2=0.7\)) are shown in (d). The uncertainty bands are given by ± two standard deviations of the predictive distributions.

Figure 10. Estimated uncertainties over the 13th component of the latent state vectors \({\textbf{z}}_t\) (a) and \({\textbf{z}}_{t+1}\) (b) predicted by the proposed model for a sampled trajectory. The corresponding high-dimensional noisy measurements (\(\sigma _x^2=0.5, \sigma _u^2=0.5\)) are shown in (c). The uncertainty bands are given by ± two standard deviations of the predictive distributions.

As seen in the figures, the proposed framework achieves a good system representation with latent variables that are highly correlated with physical quantities of interest (see Figs. 9a–c and 10a,b), accompanied by uncertainty bands reflecting data noise and modeling errors (compare Fig. 9a,b with Fig. 10a,b).
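Bands of plus or minus two standard deviations follow directly from the predictive means and variances of a latent component. The sketch below uses toy numbers, and the helper names are hypothetical. The coverage check at the end is an optional diagnostic added for illustration and is not part of the reported experiments.

```python
def two_sigma_band(means, variances, n_std=2.0):
    """Lower and upper bounds mean -/+ n_std standard deviations per time step."""
    return [(m - n_std * v ** 0.5, m + n_std * v ** 0.5)
            for m, v in zip(means, variances)]

def coverage(band, reference):
    """Fraction of reference values that fall inside the band."""
    inside = sum(1 for (lo, hi), r in zip(band, reference) if lo <= r <= hi)
    return inside / len(reference)

# Toy predictive means and variances of one latent component over 4 time steps.
means = [0.0, 0.1, 0.3, 0.2]
variances = [0.04, 0.04, 0.09, 0.01]
band = two_sigma_band(means, variances)
```

Wider bands in time steps with larger predictive variance are what the figures display as shaded regions around the mean.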

Discussion and future work

Though well researched in supervised learning46, uncertainty quantification is still an understudied topic in unsupervised dimensionality reduction and latent model learning. However, the combination of these two tasks has the potential to open new doors to the discovery of governing principles of complex dynamical systems from high-dimensional noisy data. Our proposed method provides convincing indications that combining deep NNs with kernel-based models is promising for the analysis of high-dimensional noisy data. Our general framework relies only on the observations of measurements and control inputs, making it applicable to areas such as physical modeling, digital twinning, weather forecasting, and patient-specific medical analysis.

Learning compact state representations and latent dynamical models from high-dimensional noisy observations is a critical element of Optimal Control and Model-based RL. In both, the disentanglement of measurement and modeling uncertainties will play a crucial role in optimizing control laws, as well as in devising efficient exploration of the latent state space to aid the collection of new, informative samples for model improvement. The quantified uncertainties can be exploited for Active Learning47 to steer the data sampling48.

Conclusions

SVDKL models are integrated into a novel general workflow of unsupervised dimensionality reduction and latent dynamics learning, combining the expressive power of deep NNs with the uncertainty quantification abilities of GPs. The proposed method has shown good capability of generating interpretable latent representations and denoised reconstructions of high-dimensional, noise-corrupted measurements (see Figs. 4, 5, 6 and 7). It has also been demonstrated that this method can deal with stochastic dynamical systems by identifying the source of stochasticity.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Brunton, S. L. & Kutz, J. N. Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control (Cambridge University Press, 2022).

Mitchell, T. M. Machine Learning Vol. 1 (McGraw-Hill, New York, 1997).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, 2018).

Arulkumaran, K., Deisenroth, M. P., Brundage, M. & Bharath, A. A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 34, 26–38 (2017).

Lesort, T., Díaz-Rodríguez, N., Goudou, J.-F. & Filliat, D. State representation learning for control: An overview. Neural Netw. 108, 379–392 (2018).

Botteghi, N., Poel, M. & Brune, C. Unsupervised representation learning in deep reinforcement learning: A review. arXiv preprint arXiv:2208.14226 (2022).

Quarteroni, A. et al. Reduced Order Methods for Modeling and Computational Reduction Vol. 9 (Springer, Berlin, 2014).

Hesthaven, J. S., Pagliantini, C. & Rozza, G. Reduced basis methods for time-dependent problems. Acta Numer. 31, 265–345 (2022).

Camacho, E. F. & Alba, C. B. Model Predictive Control (Springer, Berlin, 2013).

Wall, M. E., Rechtsteiner, A. & Rocha, L. M. Singular value decomposition and principal component analysis. In A Practical Approach to Microarray Data Analysis, pp. 91–109 (Springer, 2003).

Wold, S., Esbensen, K. & Geladi, P. Principal component analysis. Chemomet. Intell. Lab. Syst. 2, 37–52 (1987).

Berkooz, G., Holmes, P. & Lumley, J. L. The proper orthogonal decomposition in the analysis of turbulent flows. Ann. Rev. Fluid Mech. 25, 539–575 (1993).

Schmid, P. J. Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 656, 5–28 (2010).

Proctor, J. L., Brunton, S. L. & Kutz, J. N. Dynamic mode decomposition with control. SIAM J. Appl. Dyn. Syst. 15, 142–161 (2016).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 113, 3932–3937 (2016).

Ghattas, O. & Willcox, K. Learning physics-based models from data: Perspectives from inverse problems and model reduction. Acta Numer. 30, 445–554 (2021).

Guo, M., McQuarrie, S. A. & Willcox, K. E. Bayesian operator inference for data-driven reduced-order modeling. Comput. Methods Appl. Mech. Eng. 402, 115336 (2022).

Peherstorfer, B. & Willcox, K. Data-driven operator inference for nonintrusive projection-based model reduction. Comput. Methods Appl. Mech. Eng. 306, 196–215 (2016).

Guo, M. & Hesthaven, J. S. Data-driven reduced order modeling for time-dependent problems. Comput. Methods Appl. Mech. Eng. 345, 75–99 (2019).

Lee, K. & Carlberg, K. T. Model reduction of dynamical systems on nonlinear manifolds using deep convolutional autoencoders. J. Comput. Phys. 404, 108973 (2020).

Champion, K., Lusch, B., Kutz, J. N. & Brunton, S. L. Data-driven discovery of coordinates and governing equations. Proc. Natl. Acad. Sci. 116, 22445–22451 (2019).

Wahlström, N., Schön, T. B. & Deisenroth, M. P. From pixels to torques: Policy learning with deep dynamical models. arXiv preprint arXiv:1502.02251 (2015).

Assael, J.-A. M., Wahlström, N., Schön, T. B. & Deisenroth, M. P. Data-efficient learning of feedback policies from image pixels using deep dynamical models. arXiv preprint arXiv:1510.02173 (2015).

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114 (2013).

Fraccaro, M., Kamronn, S., Paquet, U. & Winther, O. A disentangled recognition and nonlinear dynamics model for unsupervised learning. Adv. Neural Inf. Process. Syst. 30, 1 (2017).

Krishnan, R. G., Shalit, U. & Sontag, D. Deep kalman filters. arXiv preprint arXiv:1511.05121 (2015).

Karl, M., Soelch, M., Bayer, J. & Van der Smagt, P. Deep variational bayes filters: Unsupervised learning of state space models from raw data. arXiv preprint arXiv:1605.06432 (2016).

Buesing, L. et al. Learning and querying fast generative models for reinforcement learning. arXiv preprint arXiv:1802.03006 (2018).

Doerr, A. et al. Probabilistic recurrent state-space models. In International Conference on Machine Learning, pp. 1280–1289 (PMLR, 2018).

Hafner, D. et al. Learning latent dynamics for planning from pixels. In International Conference on Machine Learning, pp. 2555–2565 (PMLR, 2019).

Hafner, D., Lillicrap, T., Ba, J. & Norouzi, M. Dream to control: Learning behaviors by latent imagination. arXiv preprint arXiv:1912.01603 (2019).

Wilson, A. G., Hu, Z., Salakhutdinov, R. & Xing, E. P. Deep kernel learning. In Artificial Intelligence and Statistics, pp. 370–378 (PMLR, 2016).

Williams, C. K. & Rasmussen, C. E. Gaussian Processes for Machine Learning (MIT Press, Cambridge, MA, 2006).

Chen, T. & Chen, H. Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Trans. Neural Networks 6, 911–917 (1995).

Calandra, R., Peters, J., Rasmussen, C. E. & Deisenroth, M. P. Manifold gaussian processes for regression. In 2016 International Joint Conference on Neural Networks (IJCNN), pp. 3338–3345 (IEEE, 2016).

Bradshaw, J., Matthews, A. G. d. G. & Ghahramani, Z. Adversarial examples, uncertainty, and transfer testing robustness in gaussian process hybrid deep networks. arXiv preprint arXiv:1707.02476 (2017).

Chen, X., Peng, X., Li, J.-B. & Peng, Y. Overview of deep kernel learning based techniques and applications. J. Netw. Intell. 1, 83–98 (2016).

Ober, S. W., Rasmussen, C. E. & van der Wilk, M. The promises and pitfalls of deep kernel learning. In Uncertainty in Artificial Intelligence, pp. 1206–1216 (PMLR, 2021).

Belanche Muñoz, L. A. & Ruiz Costa-Jussà, M. Bridging deep and kernel methods. In ESANN2017: 25th European Symposium on Artificial Neural Networks: Bruges, Belgium, April 26-27-28, 1–10 (2017).

Tossou, P., Dura, B., Laviolette, F., Marchand, M. & Lacoste, A. Adaptive deep kernel learning. arXiv preprint arXiv:1905.12131 (2019).

Wilson, A. G., Hu, Z., Salakhutdinov, R. R. & Xing, E. P. Stochastic variational deep kernel learning. Adv. Neural Inf. Process. Syst. 29, 1 (2016).

Kononenko, I. Bayesian neural networks. Biol. Cybern. 61, 361–370 (1989).

Hafner, D., Lillicrap, T., Norouzi, M. & Ba, J. Mastering atari with discrete world models. arXiv preprint arXiv:2010.02193 (2020).

Van der Maaten, L. & Hinton, G. Visualizing data using t-sne. J. Mach. Learn. Res. 9, 1 (2008).

Abdar, M. et al. A review of uncertainty quantification in deep learning: Techniques, applications and challenges. Inf. Fus. 76, 243–297 (2021).

Settles, B. Active learning. Synth. Lect. Artif. Intell. Mach. Learn. 6, 1–114 (2012).

Fasel, U., Kutz, J. N., Brunton, B. W. & Brunton, S. L. Ensemble-sindy: Robust sparse model discovery in the low-data, high-noise limit, with active learning and control. Proc. R. Soc. A 478, 20210904 (2022).

Acknowledgements

The second author acknowledges the support from Sectorplan Bèta (NL) under the focus area Mathematics of Computational Science.

Author information

Contributions

N.B. and M.G. conceived the mathematical models, N.B. implemented the methods and designed the numerical experiments, N.B. and M.G. interpreted the results, N.B. wrote the first draft, and M.G. and C.B. reviewed the manuscript. All authors gave approval for the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Botteghi, N., Guo, M. & Brune, C. Deep kernel learning of dynamical models from high-dimensional noisy data. Sci Rep 12, 21530 (2022). https://doi.org/10.1038/s41598-022-25362-4

