Abstract

Despite great advances in explaining synaptic plasticity and neuron function, a complete understanding of the brain’s learning algorithms is still missing. Artificial neural networks provide a powerful learning paradigm through the backpropagation algorithm, which modifies synaptic weights by using feedback connections. Backpropagation requires extensive communication of information back through the layers of a network. This has been argued to be biologically implausible, and it is not clear whether backpropagation can be realized in the brain. Here we suggest that biophotons guided by axons provide a potential channel for the backward transmission of information in the brain. Biophotons have been shown experimentally to be produced in the brain, yet their purpose is not understood. We propose that biophotons can propagate from each post-synaptic neuron to its pre-synaptic one to carry the required information backward. To reflect the stochastic character of biophoton emission, our model includes stochastic backward transmission of teaching signals. We demonstrate that a three-layered network of neurons can learn the MNIST handwritten digit classification task using our proposed backpropagation-like algorithm with stochastic photonic feedback. We model realistic restrictions and show that our system still learns the task for low rates of biophoton emission, information-limited (one bit per photon) backward transmission, and in the presence of noise photons. Our results suggest a new functionality for biophotons and provide an alternative mechanism for backward transmission in the brain.


Introduction

Learning is the process of gaining or improving knowledge or behavior by observing or interacting with the environment1,2,3. In the brain, learning depends on synaptic modifications between neurons4,5,6,7. However, how learning is realized in the brain is not completely understood8. Existing theories mainly focus on electrochemical neural signals and study their capacity to act as the brain’s information carriers9.

Backpropagation is central to our current understanding of learning in artificial neural networks and is the most common method for training deep neural networks10. Inspired by the fact that the brain learns by modifying the synaptic connections between neurons, backpropagation feeds error signals back to inner layers to update synaptic weights11,12. The broad applications and success of backpropagation and backpropagation-like algorithms, as well as their core idea of using feedback connections to adjust synapses, encouraged us to investigate whether the brain’s learning process is based on a backward flow of information7,13,14,15,16,17,18,19. However, it is not clear if and how backpropagation is implemented by the brain. Some of its main assumptions have been argued to be biologically unrealistic20, such as requiring each feedback connection to have exactly the same weight as its feedforward counterpart, and requiring distinct forward and backward pathways of information. Recent works suggest that symmetric weights are not necessary for effective learning21,22,23,24,25,26; however, they implicitly assume a separate feedback pathway17,20. In this paper, we suggest a new potential photonic mechanism for the backward flow of information that avoids the above-mentioned assumptions.

Biophotons are spontaneously emitted by living cells in the near-IR to near-UV range (350–1300 nm wavelength) at low rates and low intensity, on the order of \(1{-}10^3\) photons/(s cm\(^{2}\))27. These photons have been observed from microorganisms including yeast cells and bacteria28,29, from plants and animals30, and from different biological tissues31,32 including brain slices33,34,35, yet it is unknown whether they have a biological function. In 1999, Kobayashi et al. performed the first in vivo imaging of biophotons from a rat’s brain33. Using electroencephalographic techniques, they demonstrated a correlation between biophoton emission intensity and neuronal activity, and suggested that biophoton emission from the brain originates from mitochondrial activity through the production of reactive oxygen species33. Moreover, several experiments have studied the response of neurons, and of the brain more generally, to external light36,37,38,39,40 and shown that the brain has photosensitive properties.

The existence of these biophotons, together with the evidence that opsin molecules deep in the brain respond to light40, raises the question of whether biophotons could serve as communication signals guided through the brain41. Axons have been proposed as potential photonic waveguides for such optical communication42,43,44. Detailed theoretical modeling of myelinated axons shows that optical propagation is possible in either direction along the axon42. Recent experimental evidence for light guidance by the myelin sheath supports this theoretical model45. There is also some older, indirect experimental evidence supporting light conduction by axons34,43. Given the advantages that optical communication provides in terms of precision and speed in technical contexts, and the growing evidence that photons are practical carriers of information, one may wonder whether biological systems also exploit this modality.

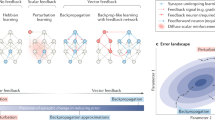

In this study, we propose that if the brain employs any backpropagation-like algorithm, biophotons guided through axons are a plausible carrier of the backward information, in addition to the well-known electrochemical feedforward signaling. To support our hypothesis, we model the backward path of information as a communication channel (see Fig. 1). The stochastically emitted biophotons update a random subset of synaptic weights in each training trial, meaning that only a fraction of the neurons transmit backward information at any given time. We consider realistic conditions and evaluate the learning efficacy of the mechanism. We demonstrate that even when only a small proportion (a few percent) of neurons send stochastic biophotons backward to their upstream neurons, a network with one hidden layer and photonic feedback can still learn a complex task. We then examine our model for the case in which each photon carries only one bit of information. We further incorporate noise (e.g. due to ambient light) in our model. Our results show that the network can still learn the MNIST digit recognition task despite these realistic imperfections.

Figure 1. Schematic of a simplified network of neurons trained by backpropagation with stochastic photonic feedback. Three sets of neurons are represented as input, hidden, and output layers. For clarity, only three neurons are shown in the hidden layer. The connection between the dendrite of the post-synaptic neuron (blue) and the axonal terminal of the pre-synaptic neuron (yellow) at the synaptic cleft is enlarged (top right). The strength of the synapse (the synaptic weight) is greater as a result of more working ion channels (orange oval-shaped gates) in the post-synaptic neuron46. This is consistent with greater usage of ATP (adenosine triphosphate, the energy-carrier molecule) in the post-synaptic neuron47, which results in more biophoton production by the post-synaptic mitochondria; these biophotons can transfer backward information to the pre-synaptic neuron. The myelinated axon (top left, enlarged) can guide the received backward photons along the axon. These biophotons might later be absorbed by opsin molecules in the brain40,48 (bottom left, enlarged; gray molecules in the yellow neuron), which might trigger the emission of further biophotons, thus relaying the backward information across the network (see also main text).

Results

Here we describe our proposed mechanism for the backward flow of information in the brain (Fig. 1). Biophotons are produced stochastically at fairly low rates, as expected from experimental observations of biophotons from the brain33,34. In a neural network, post-synaptic neurons consume more ATP molecules than pre-synaptic ones, which results in more working ion channels in the post-synaptic neurons47. As more ion channels are working and the synaptic weight increases46, more biophotons are produced by the post-synaptic mitochondria (see “Discussion” section for more explanation). Guided through the myelinated axon, these stochastically generated biophotons can carry information about synaptic weight changes backward from the post-synaptic neuron to the pre-synaptic one. The biophotons can be absorbed by photosensitive molecules in the brain, such as opsins, which are known to be present in deep brain tissues40,48. Opsin-mediated suppression of thermogenesis (heat production) in response to detected light40, together with the balance between ATP production by mitochondria and thermogenesis49, may result in more biophoton production (see “Discussion” section for more explanation). This could explain how biophotons might relay information backward across the different layers of the neural network in the brain.

In our (computational) experiments, we consider a network with three layers: input, hidden, and output (Fig. 1). The hidden layer consists of 500 units (neurons), and the number of neurons in the other two layers depends on the task. The backpropagation learning algorithm calculates the gradient of an error function with respect to each synaptic weight and propagates error signals backward through the network, from each layer to the upstream one. The goal of training is to reduce the loss function (Eq. (4)), which measures the distance between the target output and the calculated output of the network. The synaptic weights are updated stochastically to mimic the random emission and propagation of the biophotons that carry the information backward. We train the network on the MNIST digit recognition task50,51 in an online fashion. The mathematical details of the model are described in the “Methods” section.

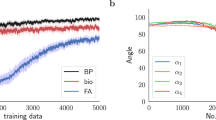

Neurons are trained with stochastically backpropagated photons

Here we provide numerical evidence that our model is trainable, even with partial backpropagation of errors (teaching signals), by testing it on the classification of MNIST digits. The MNIST dataset of handwritten digits consists of 60,000 training examples and 10,000 test examples. Each example is a grey-scale image of 28 by 28 pixels showing a single handwritten digit between 0 and 9, and the task is to classify a given image into one of the 10 classes (see Fig. 2a). After the network is evaluated and the weight updates are calculated, a random proportion q of the neurons releases photons. Each photon travels backward to transmit the error signal to its pre-synaptic neuron (see Fig. 1), where it updates the pre-synaptic weights (see Eqs. (7, 8) and the discussion of stochastic photonic updates in “Methods”). For small values of q, photon emission is sparse; as q approaches 1, more photons travel backward (see Fig. 2b) and the model performs closer to the original backpropagation algorithm, in which all weights are updated (see “Methods”, stochastic photonic updates). For stability, we keep the learning rate \(\epsilon \) small, of the order of 0.001 in most of the simulations (the learning rate is a tunable parameter between 0 and 1 that controls the step size of each weight update during training). We show that the training error converges to a small constant value after \(6\times 10^4\) trials for reasonable values of q. Here, the training error is the average error over the last 100 samples. While training is in progress, we use a validation dataset to estimate how accurately the model performs and to avoid overfitting; the validation error is computed every 500 steps. Figure 2c shows that we can still train a network for small values of q, of the order of \(10^{-4}\). This shows that even with low biophoton emission rates, the network can still learn the task through the backpropagation-like channel. We also compare the output of the trained model with the expected output on the test dataset, which is independent of the training dataset, and calculate the test error to check the performance of the trained model (Fig. 2d). Next, we restrict the amount of information that each photon transmits back in the model.
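The sparsity implied by small q can be made concrete with a quick count. The sketch below assumes the update rule described in “Methods” (a random \(q\cdot (N_h N_i)\) input-to-hidden and \(q\cdot (N_h N_o)\) hidden-to-output weights are adjusted per trial) and a 784-500-10 network (28 × 28 = 784 input pixels, 500 hidden units, 10 classes); the helper name is hypothetical.

```python
def updated_weights_per_trial(q, n_in=784, n_hid=500, n_out=10):
    """Expected number of weights updated in one trial (hypothetical helper).

    Assumes a random fraction q of the n_hid * n_in input-to-hidden weights
    and of the n_hid * n_out hidden-to-output weights is updated per trial.
    """
    return q * n_hid * n_in, q * n_hid * n_out


for q in (1.0, 0.1, 1e-4):
    w_hi, w_oh = updated_weights_per_trial(q)
    print(f"q={q:g}: {w_hi:.1f} input-to-hidden, {w_oh:.1f} hidden-to-output updates")
```

At \(q=10^{-4}\) this gives about 39 input-to-hidden updates and, on average, only 0.5 hidden-to-output updates per trial, compared with 392,000 and 5,000 at \(q=1\), which is consistent with convergence requiring more trials at small q.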

Figure 2. Training of a 3-layered artificial neural network with 500 neurons in the hidden layer by stochastic photonic updates. (a) The network is trained on an MNIST handwritten digit recognition task. For each training trial, the network receives handwritten digits as input and their corresponding digit classes as the target output. (b) The network computes its output with the feedforward weights and updates those weights with stochastic photonic feedback. The parameter q is the probability of transmitting one photon per neuron; as it gets larger (closer to 1), the network sends more backward photons and behaves more like the conventional backpropagation algorithm. (c) As the trial number grows, the error rate (the moving average of the past 100 trial errors, see Eq. (11)) converges to a small value and training completes. This convergence happens even for small values of q, but after greater numbers of trials. Here, the learning rate, \(\epsilon =0.01\), has been kept small for the stability of the network. (d) The test error, which measures the mismatch between the target and the output of the trained model, is averaged over 10 repetitions of the test experiment for each value of q and \(\epsilon \) (see “Methods” for details).

Neurons can learn even if each stochastically backpropagated photon carries only one bit of information

As it may not be realistic to assume that a single photon can carry arbitrarily detailed information, we investigated whether photons carrying more limited information could still lead to learning. To limit the amount of information carried by each photon, we discretize the gradient information into binary increases (\(+\epsilon \)) and decreases (\(-\epsilon \)), shown in Fig. 3a as blue and red signals; see “Methods” Eqs. (12, 13) for the implementation of this weight update. Depending on its type (e.g. one of two polarizations or frequencies), a photon might increase or decrease the weight by a fixed amount \(\epsilon \). Figure 3 summarizes the results under this restriction and shows that the network can still learn. In Fig. 3b, we increase the size of the network from 500 units in the hidden layer to 1000 and 5000 units. As the size of the network increases, the error rate converges to smaller values. In Fig. 3c, the test error at each mesh point is averaged over 10 independently trained networks. The standard deviation across these networks is shown in Fig. 3d.

Figure 3. Training of a 3-layered artificial neural network by stochastic photonic updates that carry one bit of information. (a) Stochastic weight updates transmit binary information, and each update either increases or decreases the weight by a fixed amount, \(\epsilon \). For more details see Eqs. (12) and (13) in “Methods”. (b) Here, \(q=0.1\) and \(\epsilon =0.1\). With stochastic binary updates, training on the MNIST classification task is still successful. As the number of hidden units increases, the error rate converges to smaller values. (c) To obtain the mean test error, for each value of \(\epsilon \) and q, 10 different networks with 500 hidden units were evaluated and the test error was averaged. (d) Standard deviation of the test error per mesh point.

The network can be trained even in the presence of uncorrelated random photonic updates

Because biophoton emission rates are low, the impact of ambient light as noise should be considered52. Although waveguiding of biophotons by axons would mitigate the effect of such noise42, it is still important to consider. Other possible sources of noise include uncorrelated photon emissions and detector dark counts. We investigated biophoton-assisted learning in the presence of noise, modeling the noise as uncorrelated random photons that impair the photonic updates. As shown in Fig. 4a, noise photons (dashed) are also backpropagated. They disturb the weight adjustment and increase the error rate (see Fig. 4b). The model was simulated for a 3-layered network with 500 units in the hidden layer and \(q=0.1\) for different values of \(n_p\), the proportion of neurons that emit noise (meaningless) photons. As long as \(n_p\) is smaller than q (i.e. the signal-to-noise ratio \(q/n_p\) exceeds one), the training error converges (Fig. 4b). Comparing Figs. 3c and 4c shows that, for some regions of the parameter space, learning still works in the presence of noise, with a very low standard deviation of the test error (see Fig. 4d).

Figure 4. Training of a 3-layered artificial neural network by noisy stochastic photonic updates with the binary signal limit. (a) After evaluation of the network, binary photonic signals are transmitted back to update the weights, in the presence of uncorrelated noise photons. (b) Here, the signal photon emission rate, \(q=0.1\), and the learning rate, \(\epsilon =0.1\), are given for a network with 500 units in the hidden layer. The error rate depends on the noise photon rate, \(n_p\), which defines the probability of a noise photon update. (c) Mean test error for \(n_p=0.01\): the error has increased due to the noise in the system, but there are still regions of the graph where learning happens. The figure was generated by training 10 different networks for each mesh point and averaging the test error. (d) Standard deviation of the test error over the 10 networks.

Discussion

We have shown that backpropagation-like learning is possible with stochastic photonic feedback, inspired by the idea that axons can serve as photonic waveguides and taking into account the stochastic nature of biophoton emission in the brain. Considering realistic imperfections in biophoton emission, we showed that the network still learns when each photon carries only one bit of information. We also examined learning in the presence of background noise and found that it still succeeds in part of the parameter space. Here we discuss the biological inspiration for our suggested mechanism, address a few related questions, and propose experiments to test our hypothesis.

A synaptic weight quantifies the influence that the firing of a pre-synaptic neuron has on the post-synaptic one4,5,53. This is directly related to the number of ion channels affected in the post-synaptic neuron54,55. The greater the synaptic weight, the more ion channels are working in the post-synaptic neuron, which requires more metabolic activity46,47. This in turn increases ATP usage, resulting in more active post-synaptic mitochondria56. As mitochondria in the post-synaptic neuron work harder and consume more energy, more reactive oxygen species (ROS) are produced57,58,59,60. The emission of biophotons has been linked to the production of reactive species such as ROS and carbonyl in mitochondria33,57,61,62. Thus, a higher production rate of ROS leads to a higher production rate of biophotons in the post-synaptic neuron. This directly relates the production of biophotons in the post-synaptic neuron to synaptic weight changes. Proportionality of photon emission to the weights is part of what is required for backpropagation (see Eq. (10) in “Methods”). In addition, neurons may have evolved to encode the error signals in the photonic flux, e.g. by modulating biophoton emission as a function of the incoming biophotons they receive.

An important question is how photonic information could be relayed across multiple network layers. Opsins are well known for detecting light in the retina63 and skin64 of mammals. However, they also exist in deep brain tissues of mammals65 and are highly conserved over evolution. The existence of such light-absorbing proteins in deep brain tissues suggests that they might serve as biophoton detectors. Moreover, a biological effect of external light mediated by opsins deep in the brain has recently been demonstrated, namely the opsin-mediated suppression of thermogenesis (heat production) in response to light40. Meanwhile, mitochondria always balance ATP production against thermogenesis49. Suppression of thermogenesis via opsin-mediated photon detection could therefore lead to more ATP production by mitochondria, which results in more photon production. Thus, it could constitute a relay across the neuron in the photonic backpropagation channel.

In this proposal, we address one of the main biologically problematic assumptions of backpropagation: the need for a separate backward network of neurons that mirrors the forward one. The forward flow of information is carried by action potentials, which propagate in one direction along neural pathways66. Our suggested mechanism for backward communication does not require a separate network of neurons and has the attractive feature that the error signals do not interfere with the forward flow of information. This is because the electrochemical signaling pathway is unlikely to be affected by biophotons on short time scales.

In our model, we have taken into account that the amount of information carried by each photon may be limited, for example to one bit. This information could be encoded in the polarization or the wavelength of the photons67,68,69. The amount of information that can be transmitted successfully also depends on the detection mechanism; e.g. there could be different opsins responding to different wavelengths.

Although low rates of biophoton emission might be a concern52, guidance by axons could be part of the solution, because it would limit the loss of signal photons and reduce the impact of background light42. The biophoton emission rate from a mouse brain slice has been roughly estimated at one photon per neuron per minute34. This rate is one to two orders of magnitude lower than the electrochemical signaling rate in the brain70. Moreover, if biophotons are guided through axons, the measured emission rates reflect only the scattered photons, and more light could be propagating in guided form than is observed from the outside.

To verify the role of biophotons in learning in the brain, we propose several in vivo experimental approaches. One type of test is to genetically modify possible photon detectors in the brain, such as opsins, using well-studied optogenetic methods71,72,73 in order to impair biophoton reception by the network and observe the effects on the learning process. Another type of test could use RNA interference74,75,76 in non-genetically engineered animals to silence specific gene sequences involved in the generation or reception of biophotons, which could affect learning. One could also introduce background light into the neural network in vivo, or add noise into the axon, to see whether that affects learning. We have modeled noise by adding uncorrelated photons into the network. One could implement such extra uncorrelated photons by introducing luciferase and luciferin (whose reaction produces bioluminescence without requiring an external light source) into the brain of a living animal using optogenetic tools77,78.

It has been suggested that biophotons in the brain could transmit not only classical but also quantum information42,79,80, but this still requires experimental confirmation. In the context of the present work, which focuses on a potential role for photons in learning, the possibility of transmitting quantum information by biophotons could be connected to the field of quantum machine learning81,82,83, which studies potential advantages of quantum approaches to learning.

If the brain’s biophotons are involved in learning by transmitting information backward through axons, this would reveal a new feature of the brain and could help answer some fundamental questions about the learning process. Our stochastic backpropagation-like algorithm might also be of interest beyond the biophotonic context, with potential applications in other fields such as neuromorphic computing84,85,86 and photonic reservoir computing87,88,89,90,91.

Methods

Network equations

We consider a basic 3-layer artificial neural network with \(N_i\) input nodes, \(N_h\) hidden nodes, and \(N_o\) output nodes. We label the output (activity) of each node with the variable \(a^\mu _k\), where \(\mu = i,h,o\) stands for the input, hidden, and output layers, respectively. Neurons of the hidden and output layers apply a non-linearity, \(\sigma (x)\), to their inputs. We introduce non-linearity into the network using the logistic function, a differentiable activation function \(\sigma (x)=\frac{1}{1+e^{-x}}\) with the convenient derivative \({\frac{d\sigma (x)}{dx}}=\sigma (x)(1-\sigma (x))\). In an artificial neural network, the non-linear activation function produces a new representation of the original data that ultimately allows non-linear decision boundaries. The network equations then are

where \(b^h_k\) is a commonly included “bias term”.
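As a minimal sketch, assuming the standard forward equations \(a^h_k=\sigma\big(\sum_j w^{h,i}_{k,j}x_j+b^h_k\big)\) and \(a^o_l=\sigma\big(\sum_k w^{o,h}_{l,k}a^h_k\big)\) (only the hidden-layer bias \(b^h_k\) is mentioned, so no output bias is included), the forward pass and the derivative identity for the logistic function can be written in plain Python as follows. The function names and the tiny layer sizes are illustrative, not taken from the authors' code.

```python
import math
import random


def sigma(x):
    """Logistic activation sigma(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigma_prime(x):
    """Derivative of the logistic function: sigma(x) * (1 - sigma(x))."""
    s = sigma(x)
    return s * (1.0 - s)


def forward(x, w_hi, b_h, w_oh):
    """Forward pass of the assumed 3-layer network.

    x: N_i inputs; w_hi: N_h rows of N_i weights; b_h: N_h hidden biases;
    w_oh: N_o rows of N_h weights. Returns hidden and output activities.
    """
    a_h = [sigma(sum(w * xj for w, xj in zip(row, x)) + b) for row, b in zip(w_hi, b_h)]
    a_o = [sigma(sum(w * ak for w, ak in zip(row, a_h))) for row in w_oh]
    return a_h, a_o


# The derivative identity agrees with a central finite difference.
step = 1e-6
for x0 in (-2.0, 0.0, 3.0):
    numeric = (sigma(x0 + step) - sigma(x0 - step)) / (2 * step)
    assert abs(numeric - sigma_prime(x0)) < 1e-8

# Tiny example: 4 inputs, 3 hidden units, 2 outputs.
rng = random.Random(0)
w_hi = [[rng.gauss(0, 0.5) for _ in range(4)] for _ in range(3)]
b_h = [0.0] * 3
w_oh = [[rng.gauss(0, 0.5) for _ in range(3)] for _ in range(2)]
a_h, a_o = forward([1.0, 0.0, 0.5, 0.2], w_hi, b_h, w_oh)
print(a_o)  # two activities strictly between 0 and 1
```

For the MNIST task, the same function would take 784 inputs and produce 10 output activities, with 500 hidden units.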

Standard backpropagation

Suppose we consider a finite sequence of inputs \(\{x_{[1]},\ldots ,x_{[m]} \}\) with a matched sequence of outputs \(\{y_{[1]},\ldots ,y_{[m]} \}\) as the training data set, and we want to train the network such that the network output \(a^o_{[n]}\) approximates the target output \(y_{[n]}\) as n grows. The subscript [n] denotes the corresponding vector values at iteration \(n=1,2,\ldots \). In the online learning approach, the backpropagation algorithm iteratively updates the weights \(w^{h,i}, w^{o,h}\) to minimize the loss (error function) at each trial. For each trial, the error function \(L_n\) is

After each forward pass of information, the weights should be updated such that the network output gets closer to the target output. Thus, for the next training trial (\([n+1]\)), the weights \(w^{h,i}\) and \(w^{o,h}\) are updated as

where \(\epsilon \) is the learning rate. After evaluating the requisite derivatives, we have the following:

where \((\delta _l^o)_{[n]}\) denotes the error signal of the output layer and \((\delta _l^h)_{[n]}\) denotes the error signal of the hidden layer, that are given by:

In order to update the weights, the error signal \((\delta _l^o)_{[n]}\) is transmitted back to the hidden layer and \((\delta _l^h)_{[n]}\) is transmitted back to the input layer.
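As a minimal sketch, assuming the squared-error loss \(L_n=\tfrac{1}{2}\sum_l \big((a^o_l)_{[n]}-(y_l)_{[n]}\big)^2\) (one common reading of the “distance” between target and output) and reading \(\sigma'\big((a^h_k)_{[n]}\big)\) as the logistic derivative written in terms of the activity, \(a^h_k(1-a^h_k)\), the error signals become \(\delta^o_l=(a^o_l-y_l)\,a^o_l(1-a^o_l)\) and \(\delta^h_k=\big(\sum_l \delta^o_l w^{o,h}_{l,k}\big)\,a^h_k(1-a^h_k)\). The code below implements one online update with these signals and uses a finite-difference check to confirm that \(\delta^o_l a^h_k\) and \(\delta^h_k x_j\) are the gradients of this assumed loss. The function names are illustrative and not taken from the authors' repository.

```python
import copy
import math
import random


def sigma(x):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hi, b_h, w_oh):
    """Assumed forward pass: hidden bias only, no output bias."""
    a_h = [sigma(sum(w * xj for w, xj in zip(row, x)) + b) for row, b in zip(w_hi, b_h)]
    a_o = [sigma(sum(w * ak for w, ak in zip(row, a_h))) for row in w_oh]
    return a_h, a_o


def loss(x, y, w_hi, b_h, w_oh):
    """Assumed squared-error loss L = 1/2 * sum_l (a_o_l - y_l)^2."""
    _, a_o = forward(x, w_hi, b_h, w_oh)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(a_o, y))


def error_signals(x, y, w_hi, b_h, w_oh):
    """Return hidden activities and the error signals delta_o, delta_h."""
    a_h, a_o = forward(x, w_hi, b_h, w_oh)
    delta_o = [(a - t) * a * (1.0 - a) for a, t in zip(a_o, y)]
    # delta_h_k = (sum_l delta_o_l * w_oh[l][k]) * a_h_k * (1 - a_h_k)
    delta_h = [
        sum(d * row[k] for d, row in zip(delta_o, w_oh)) * a_h[k] * (1.0 - a_h[k])
        for k in range(len(a_h))
    ]
    return a_h, delta_o, delta_h


def backprop_step(x, y, w_hi, b_h, w_oh, eps):
    """One online update of both weight matrices, in place."""
    a_h, delta_o, delta_h = error_signals(x, y, w_hi, b_h, w_oh)  # pre-update weights
    for d, row in zip(delta_o, w_oh):
        for k in range(len(row)):
            row[k] -= eps * d * a_h[k]
    for d, row in zip(delta_h, w_hi):
        for j in range(len(row)):
            row[j] -= eps * d * x[j]


# Finite-difference check of dL/dw for one weight in each layer.
rng = random.Random(1)
x, y = [0.3, -0.7, 1.0], [1.0, 0.0]
w_hi = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(4)]
b_h = [0.1] * 4
w_oh = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2)]
a_h, delta_o, delta_h = error_signals(x, y, w_hi, b_h, w_oh)

h = 1e-6
wp, wm = copy.deepcopy(w_oh), copy.deepcopy(w_oh)
wp[1][2] += h
wm[1][2] -= h
num = (loss(x, y, w_hi, b_h, wp) - loss(x, y, w_hi, b_h, wm)) / (2 * h)
assert abs(num - delta_o[1] * a_h[2]) < 1e-7

wp, wm = copy.deepcopy(w_hi), copy.deepcopy(w_hi)
wp[2][0] += h
wm[2][0] -= h
num = (loss(x, y, wp, b_h, w_oh) - loss(x, y, wm, b_h, w_oh)) / (2 * h)
assert abs(num - delta_h[2] * x[0]) < 1e-7
```

All error signals are computed before any weight changes, so \(\delta^h\) uses the pre-update \(w^{o,h}\), as in the standard algorithm.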

Training error rate and test error

To evaluate the performance of the training trials, we calculate the error of each trial, which is a function of the error signal of the output layer, given by

where

The training error simply indicates whether the network output matches the target data. The training error rate is calculated as the moving average of the training errors over the past 100 trials.

If training is successful, the error rate converges to a negligible value. To make sure the network has truly learned the task, we evaluate its performance on a new set of data, the test data set. The test error for each test experiment is the fraction of test examples for which the network output does not match the target output.
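The training error rate and test error described above can be sketched as follows. The per-trial error is an assumed stand-in for Eq. (11): the prediction is taken to be the output unit with the largest activity, the target is a one-hot vector, and a trial counts as an error when the two classes differ. All names are hypothetical.

```python
from collections import deque


def predicted_class(values):
    """Index of the largest entry (assumed decision rule)."""
    return max(range(len(values)), key=values.__getitem__)


def trial_error(output, target):
    """Assumed per-trial error: 0 if predicted and target classes match, else 1."""
    return 0 if predicted_class(output) == predicted_class(target) else 1


class ErrorRate:
    """Moving average of the trial errors over the most recent `window` trials."""

    def __init__(self, window=100):
        self.errors = deque(maxlen=window)  # old errors drop out automatically

    def update(self, err):
        self.errors.append(err)
        return sum(self.errors) / len(self.errors)


def compute_test_error(outputs, targets):
    """Fraction of test examples whose predicted class differs from the target."""
    wrong = sum(trial_error(o, t) for o, t in zip(outputs, targets))
    return wrong / len(targets)
```

A bounded `deque` keeps exactly the last 100 trial errors, so feeding `ErrorRate(window=100)` 100 errors followed by 100 correct trials yields a rate of 0.0.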

Proposed photonic feedback

We model the photonic backward propagation of error signals under three main realistic limitations, each building on the previous one.

1. Stochastic photonic updates. To model the stochasticity of biophoton emission in the brain, instead of updating all the weights, we adjust only a random subset of \(q\cdot (N_h N_i)\) weights of \(w^{h,i}\) and \(q\cdot (N_h N_o)\) weights of \(w^{o,h}\), where q is the proportion of neurons that release photons. As q gets larger (closer to 1), more photons are transmitted backward; for \(q=1\), the update is identical to the original backpropagation algorithm.

2. Stochastic photonic updates carrying only one bit of information. When photons transmit only one bit of backward information, instead of Eqs. (7) and (8), the updates for the randomly selected \(q\cdot (N_h N_i)\) or \(q\cdot (N_h N_o)\) weights are given by:

$$\left( w^{o,h}_{l,k}\right) _{[n+1]} = \left( w^{o,h}_{l,k}\right) _{[n]} - \epsilon \cdot \textsf{Sgn}\left( (\delta _l^o)_{[n]} \cdot (a_k^h)_{[n]} \right) , \tag{12}$$

$$\left( w^{h,i}_{k,j} \right) _{[n+1]} = \left( w^{h,i}_{k,j} \right) _{[n]} - \epsilon \cdot \textsf{Sgn}\left( \left( \sum _{l=1}^{N_o} (\delta _l^o)_{[n]} \cdot w^{o,h}_{l,k} \right) \cdot \sigma '\left( (a_k^h)_{[n]} \right) \cdot (x_j)_{[n]} \right) , \tag{13}$$

where \(\textsf{Sgn}\) is the sign function defined as:

$$\textsf{Sgn}(x) = \begin{cases} 1 & \text{if } x>0, \\ 0 & \text{if } x=0, \\ -1 & \text{if } x<0. \end{cases}$$

3. Stochastic photonic updates carrying only one bit of information in the presence of noise. To model noise in the feedback updates, the weights are first updated according to Eqs. (12) and (13). Then we select a random subset of \(n_p\cdot (N_h N_i)\) weights of \(w^{h,i}\) and \(n_p\cdot (N_h N_o)\) weights of \(w^{o,h}\), where \(n_p\) is the proportion of neurons that release uncorrelated noise photons. These weights are updated as

$$\left( w^{o,h}_{l,k}\right) _{[n+1]} = \left( w^{o,h}_{l,k}\right) _{[n]} - \epsilon \cdot (\eta ^o_{l,k})_{[n]}, \tag{14}$$

$$\left( w^{h,i}_{k,j} \right) _{[n+1]} = \left( w^{h,i}_{k,j} \right) _{[n]} - \epsilon \cdot (\eta ^h_{k,j})_{[n]}, \tag{15}$$

where \(\eta ^o_{l,k}\) and \(\eta ^h_{k,j}\) are independent random variables taking values in \(\{-1, +1\}\).
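The three update rules above can be summarized in one routine. The sketch below is a plain-Python illustration, not the authors' code (their implementation is in the repository listed under Data availability): the function names are hypothetical, rounding \(q\cdot N\) and \(n_p\cdot N\) to the nearest integer is our assumption, and the noise values \(\eta \) are assumed equally likely to be \(-1\) or \(+1\). One call updates one weight matrix, so it is applied separately to \(w^{o,h}\) and \(w^{h,i}\).

```python
import random


def sgn(x):
    """Sign function with Sgn(0) = 0, matching the definition above."""
    return (x > 0) - (x < 0)


def random_positions(rows, cols, fraction, rng):
    """Draw round(fraction * rows * cols) distinct (row, col) positions."""
    n = round(fraction * rows * cols)
    return [divmod(i, cols) for i in rng.sample(range(rows * cols), n)]


def photonic_update(w, grad, eps, q, rng, one_bit=False, n_p=0.0):
    """Apply a stochastic photonic update to weight matrix w in place.

    grad[r][c] is the backpropagation term for w[r][c], e.g.
    delta_o[l] * a_h[k] for w^{o,h} or delta_h[k] * x[j] for w^{h,i}.
    - q: fraction of weights that receive a signal update (item 1).
    - one_bit: use -eps * Sgn(grad) instead of -eps * grad (item 2).
    - n_p: fraction of weights that then receive a noise update
      -eps * eta, with eta drawn uniformly from {-1, +1} (item 3).
    """
    rows, cols = len(w), len(w[0])
    for r, c in random_positions(rows, cols, q, rng):
        step = sgn(grad[r][c]) if one_bit else grad[r][c]
        w[r][c] -= eps * step
    for r, c in random_positions(rows, cols, n_p, rng):
        w[r][c] -= eps * rng.choice((-1, 1))
    return w


rng = random.Random(0)
w = [[0.0] * 4 for _ in range(5)]  # 20 weights
grad = [[0.3] * 4 for _ in range(5)]
photonic_update(w, grad, eps=0.1, q=0.25, rng=rng, one_bit=True)
print(sum(v != 0.0 for row in w for v in row))  # round(0.25 * 20) = 5 weights changed
```

With `one_bit=False` and `q=1.0` the routine reduces to the full gradient step, matching the statement that \(q=1\) recovers standard backpropagation. Following the wording of item 1, the random selection here is made per weight.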

Data availability

The datasets analyzed during the current study, as well as our machine learning code, are available on GitHub at https://github.com/pzarkeshian/Photonic-backprop.

References

Marton, F. & Booth, S. Learning and Awareness (Routledge, 2013).

Gross, R. Psychology: The Science of Mind and Behaviour 7th edn. (Hodder Education, 2015).

Rogers, A. & Horrocks, N. Teaching Adults (McGraw-Hill Education, 2010).

Hebb, D. O. The Organization of Behavior: A Neuropsychological Theory (Psychology Press, 2005).

Markram, H., Lübke, J., Frotscher, M. & Sakmann, B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215 (1997).

Bi, G.-Q. & Poo, M.-M. Synaptic modifications in cultured hippocampal neurons: Dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18, 10464–10472 (1998).

Payeur, A., Guerguiev, J., Zenke, F., Richards, B. A. & Naud, R. Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits. Nat. Neurosci. 1–10 (2021).

Humeau, Y. & Choquet, D. The next generation of approaches to investigate the link between synaptic plasticity and learning. Nat. Neurosci. 22, 1536–1543 (2019).

Dayan, P. & Abbott, L. F. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (MIT Press, 2001).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Hecht-Nielsen, R. Theory of the backpropagation neural network. In Neural Networks for Perception, 65–93 (Elsevier, 1992).

Zipser, D. & Andersen, R. A. A back-propagation programmed network that simulates response properties of a subset of posterior parietal neurons. Nature 331, 679–684 (1988).

Lillicrap, T. P. & Scott, S. H. Preference distributions of primary motor cortex neurons reflect control solutions optimized for limb biomechanics. Neuron 77, 168–179 (2013).

Cadieu, C. F. et al. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput. Biol. 10, e1003963 (2014).

Khaligh-Razavi, S.-M. & Kriegeskorte, N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput. Biol. 10, e1003915 (2014).

Lillicrap, T. P., Santoro, A., Marris, L., Akerman, C. J. & Hinton, G. Backpropagation and the brain. Nat. Rev. Neurosci. 21, 335–346 (2020).

Sacramento, J., Costa, R. P., Bengio, Y. & Senn, W. Dendritic cortical microcircuits approximate the backpropagation algorithm. arXiv preprint arXiv:1810.11393 (2018).

Whittington, J. C. & Bogacz, R. Theories of error back-propagation in the brain. Trends Cogn. Sci. 23, 235–250 (2019).

Guerguiev, J., Lillicrap, T. P. & Richards, B. A. Towards deep learning with segregated dendrites. Elife 6, e22901 (2017).

Koščak, J., Jakša, R. & Sinčák, P. Stochastic weight update in the backpropagation algorithm on feed-forward neural networks. In The 2010 International Joint Conference on Neural Networks (IJCNN), 1–4 (IEEE, 2010).

Lillicrap, T. P., Cownden, D., Tweed, D. B. & Akerman, C. J. Random synaptic feedback weights support error backpropagation for deep learning. Nat. Commun. 7, 1–10 (2016).

Lee, D.-H., Zhang, S., Fischer, A. & Bengio, Y. Difference target propagation. In Machine Learning and Knowledge Discovery in Databases, (eds Appice, A. et al.) 498–515 (Springer International Publishing, 2015).

Liao, Q., Leibo, J. & Poggio, T. How important is weight symmetry in backpropagation? In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30 (2016).

Samadi, A., Lillicrap, T. P. & Tweed, D. B. Deep learning with dynamic spiking neurons and fixed feedback weights. Neural Comput. 29, 578–602 (2017).

Moskovitz, T. H., Litwin-Kumar, A. & Abbott, L. F. Feedback alignment in deep convolutional networks. arXiv preprint arXiv:1812.06488 (2019).

Cifra, M. & Pospíšil, P. Ultra-weak photon emission from biological samples: Definition, mechanisms, properties, detection and applications. J. Photochem. Photobiol. B Biol. 139, 2–10 (2014).

Konev, S., Lyskova, T. & Nisenbaum, G. Very weak bioluminescence of cells in the ultraviolet region of the spectrum and its biological role. Biophysics 11, 410–413 (1966).

Vogel, R. & Süssmuth, R. Weak light emission patterns from lactic acid bacteria. Luminescence 14, 99–105 (1999).

Prasad, A. & Pospíšil, P. Towards the two-dimensional imaging of spontaneous ultra-weak photon emission from microbial, plant and animal cells. Sci. Rep. 3, 1–8 (2013).

Kobayashi, M., Kikuchi, D. & Okamura, H. Imaging of ultraweak spontaneous photon emission from human body displaying diurnal rhythm. PLoS ONE 4, e6256 (2009).

Prasad, A. & Pospíšil, P. Two-dimensional imaging of spontaneous ultra-weak photon emission from the human skin: Role of reactive oxygen species. J. Biophotonics 4, 840–849 (2011).

Kobayashi, M. et al. In vivo imaging of spontaneous ultraweak photon emission from a rat’s brain correlated with cerebral energy metabolism and oxidative stress. Neurosci. Res. 34, 103–113 (1999).

Tang, R. & Dai, J. Spatiotemporal imaging of glutamate-induced biophotonic activities and transmission in neural circuits. PLoS ONE 9, e85643 (2014).

Wang, C., Bókkon, I., Dai, J. & Antal, I. Spontaneous and visible light-induced ultraweak photon emission from rat eyes. Brain Res. 1369, 1–9 (2011).

Leszkiewicz, D. N., Kandler, K. & Aizenman, E. Enhancement of NMDA receptor-mediated currents by light in rat neurones in vitro. J. Physiol. 524, 365–374 (2000).

Wade, P. D., Taylor, J. & Siekevitz, P. Mammalian cerebral cortical tissue responds to low-intensity visible light. Proc. Natl. Acad. Sci. 85, 9322–9326 (1988).

Vandewalle, G., Maquet, P. & Dijk, D.-J. Light as a modulator of cognitive brain function. Trends Cogn. Sci. 13, 429–438 (2009).

Starck, T. & Nissilä, J. Stimulating brain tissue with bright light alters functional connectivity in brain at the resting state. World J. Neurosci. 2 (2012).

Zhang, K. X. et al. Violet-light suppression of thermogenesis by opsin 5 hypothalamic neurons. Nature 585, 420–425 (2020).

Zarkeshian, P., Kumar, S., Tuszynski, J., Barclay, P. & Simon, C. Are there optical communication channels in the brain?. Front. Biosci. 23, 1407–1421 (2018).

Kumar, S., Boone, K., Tuszyński, J., Barclay, P. & Simon, C. Possible existence of optical communication channels in the brain. Sci. Rep. 6, 1–13 (2016).

Sun, Y., Wang, C. & Dai, J. Biophotons as neural communication signals demonstrated by in situ biophoton autography. Photochem. Photobiol. Sci. 9, 315–322 (2010).

Zangari, A., Micheli, D., Galeazzi, R. & Tozzi, A. Node of Ranvier as an array of bio-nanoantennas for infrared communication in nerve tissue. Sci. Rep. 8, 1–19 (2018).

DePaoli, D. et al. Anisotropic light scattering from myelinated axons in the spinal cord. Neurophotonics 7, 015011 (2020).

Voglis, G. & Tavernarakis, N. The role of synaptic ion channels in synaptic plasticity. EMBO Rep. 7, 1104–1110 (2006).

Harris, J. J., Jolivet, R. & Attwell, D. Synaptic energy use and supply. Neuron 75, 762–777 (2012).

Porter, M. L. et al. Shedding new light on opsin evolution. Proc. R. Soc. B Biol. Sci. 279, 3–14 (2012).

Li, Y. et al. MFSD7C switches mitochondrial ATP synthesis to thermogenesis in response to heme. Nat. Commun. 11, 1–14 (2020).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Grother, P. J. NIST Special Database 19: Handprinted Forms and Characters Database (National Institute of Standards and Technology, 1995).

Kučera, O. & Cifra, M. Cell-to-cell signaling through light: Just a ghost of chance?. Cell Commun. Signal. 11, 1–8 (2013).

Fröhlich, F. Chapter 4: Synaptic plasticity. In Network Neuroscience, (ed Fröhlich, F.) 47–58, https://doi.org/10.1016/B978-0-12-801560-5.00004-5 (Academic Press, 2016).

Debanne, D., Daoudal, G., Sourdet, V. & Russier, M. Brain plasticity and ion channels. J. Physiol. 97, 403–414 (2003).

Meriney, S. D. & Fanselow, E. Synaptic Transmission (Academic Press, 2019).

Stoler, O. et al. Mitochondria decode firing frequency and coincidences of postsynaptic APs and EPSPs. bioRxiv (2021).

Pospíšil, P., Prasad, A. & Rác, M. Role of reactive oxygen species in ultra-weak photon emission in biological systems. J. Photochem. Photobiol. B Biol. 139, 11–23 (2014).

Turrens, J. F. Mitochondrial formation of reactive oxygen species. J. Physiol. 552, 335–344 (2003).

Murphy, M. P. How mitochondria produce reactive oxygen species. Biochem. J. 417, 1–13 (2009).

Lambert, A. J. & Brand, M. D. Reactive oxygen species production by mitochondria. Mitochondrial DNA 165–181 (2009).

Miyamoto, S., Martinez, G. R., Medeiros, M. H. & Di Mascio, P. Singlet molecular oxygen generated by biological hydroperoxides. J. Photochem. Photobiol. B Biol. 139, 24–33 (2014).

Pospíšil, P., Prasad, A. & Rác, M. Mechanism of the formation of electronically excited species by oxidative metabolic processes: Role of reactive oxygen species. Biomolecules 9, 258 (2019).

Buhr, E. D. et al. Neuropsin (OPN5)-mediated photoentrainment of local circadian oscillators in mammalian retina and cornea. Proc. Natl. Acad. Sci. 112, 13093–13098 (2015).

Buhr, E. D., Vemaraju, S., Diaz, N., Lang, R. A. & Van Gelder, R. N. Neuropsin (OPN5) mediates local light-dependent induction of circadian clock genes and circadian photoentrainment in exposed murine skin. Curr. Biol. 29, 3478–3487 (2019).

Yamashita, T. et al. Evolution of mammalian Opn5 as a specialized UV-absorbing pigment by a single amino acid mutation. J. Biol. Chem. 289, 3991–4000 (2014).

Purves, D. et al. Neuroscience 4th edn. (Sinauer Associates, 2008).

Senior, J. M. & Jamro, M. Y. Optical Fiber Communications: Principles and Practice (Pearson Education, 2009).

Hui, R. Introduction to Fiber-Optic Communications (Academic Press, 2019).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information: 10th Anniversary Edition (Cambridge University Press, 2010).

Buzsáki, G. & Mizuseki, K. The log-dynamic brain: How skewed distributions affect network operations. Nat. Rev. Neurosci. 15, 264–278 (2014).

Deisseroth, K. Optogenetics: 10 years of microbial opsins in neuroscience. Nat. Neurosci. 18, 1213–1225 (2015).

Adamantidis, A. R., Zhang, F., de Lecea, L. & Deisseroth, K. Optogenetics: Opsins and optical interfaces in neuroscience. Cold Spring Harbor Protocols 2014, pdb-top083329 (2014).

Beyer, H. M., Naumann, S., Weber, W. & Radziwill, G. Optogenetic control of signaling in mammalian cells. Biotechnol. J. 10, 273–283 (2015).

Hannon, G. J. RNA interference. Nature 418, 244–251 (2002).

Summerton, J. E. Morpholino, siRNA, and S-DNA compared: Impact of structure and mechanism of action on off-target effects and sequence specificity. Curr. Top. Med. Chem. 7, 651–660 (2007).

Gao, K. et al. Active RNA interference in mitochondria. Cell Res. 31, 219–228 (2021).

Land, B., Brayton, C., Furman, K., LaPalombara, Z. & DiLeone, R. Optogenetic inhibition of neurons by internal light production. Front. Behav. Neurosci. 8, 108 (2014).

Park, S. Y. et al. Novel luciferase-opsin combinations for improved luminopsins. J. Neurosci. Res. 98, 410–421 (2020).

Simon, C. Can quantum physics help solve the hard problem of consciousness?. J. Consciousness Stud. 26, 204–218 (2019).

Smith, J., Zadeh Haghighi, H., Salahub, D. & Simon, C. Radical pairs may play a role in xenon-induced general anesthesia. Sci. Rep. 11, 1–13 (2021).

Paparo, G. D., Dunjko, V., Makmal, A., Martin-Delgado, M. A. & Briegel, H. J. Quantum speedup for active learning agents. Phys. Rev. X 4, 031002 (2014).

Crawford, D., Levit, A., Ghadermarzy, N., Oberoi, J. S. & Ronagh, P. Reinforcement learning using quantum Boltzmann machines. arXiv preprint arXiv:1612.05695 (2016).

Xia, Y., Li, W., Zhuang, Q. & Zhang, Z. Quantum-enhanced data classification with a variational entangled sensor network. Phys. Rev. X 11, 021047 (2021).

Esser, S. K., Appuswamy, R., Merolla, P., Arthur, J. V. & Modha, D. S. Backpropagation for energy-efficient neuromorphic computing. Adv. Neural Inf. Process. Syst. 28 (2015).

Torrejon, J. et al. Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428–431 (2017).

Marković, D., Mizrahi, A., Querlioz, D. & Grollier, J. Physics for neuromorphic computing. Nat. Rev. Phys. 2, 499–510 (2020).

Paquot, Y. et al. Optoelectronic reservoir computing. Sci. Rep. 2, 1–6 (2012).

Duport, F., Schneider, B., Smerieri, A., Haelterman, M. & Massar, S. All-optical reservoir computing. Opt. Express 20, 22783–22795 (2012).

Tanaka, G. et al. Recent advances in physical reservoir computing: A review. Neural Netw. 115, 100–123 (2019).

Argyris, A. Photonic neuromorphic technologies in optical communications. Nanophotonics 11, 897–916 (2022).

Davies, M. et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Acknowledgements

This work was supported by the Natural Sciences and Engineering Research Council (NSERC) through its Discovery Grant program as well as the CREATE grant ‘Quanta’. Partial funding was also provided by the Mitacs Accelerate program. The authors would like to thank Sourabh Kumar, Daniel Oblak, and Rana Zibakhsh Shabgahi for useful discussions.

Author information

Contributions

C.S. and W.N. conceived the project. The theoretical approach was developed by P.Z., R.G., C.S., and W.N. The numerical simulations were performed by P.Z. and T.K. with guidance from W.N. The paper was written by P.Z. with feedback from the other authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zarkeshian, P., Kergan, T., Ghobadi, R. et al. Photons guided by axons may enable backpropagation-based learning in the brain. Sci Rep 12, 20720 (2022). https://doi.org/10.1038/s41598-022-24871-6

DOI: https://doi.org/10.1038/s41598-022-24871-6
