Abstract

With the rise of machine learning, a lot of excellent algorithms are used for settlement prediction. Backpropagation (BP) and Elman are two typical algorithms based on gradient descent, but their performance is greatly affected by the random selection of initial weights and thresholds, so this paper chooses Sparrow Search Algorithm (SSA) to build joint model. Then, two sets of land subsidence monitoring data generated during the excavation of a foundation pit in South China are used for analysis and verification. The results show that the optimization effect of SSA on the gradient descent model is remarkable and the stability of the model is improved to a certain extent. After that, SSA is compared with GA and PSO algorithms, and the comparison shows that SSA has higher optimization efficiency. Finally, select SSA-KELM, SSA-LSSVM and SSA-BP for further comparison and it proves that the optimization efficiency of SSA for BP is higher than other kind of neural network. At the same time, it also shows that the seven influencing factors selected in this paper are feasible as the input variables of the model, which is consistent with the conclusion drawn by the grey relational analysis.

Similar content being viewed by others

Introduction

Considering that the utilization of surface space is close to saturation, an increasing number of functional buildings are developing in the direction of super large and high, so deep foundation pit projects are inevitable1,2,3. During construction, land subsidence has a huge impact on the construction safety and surrounding buildings, simultaneously, because of various sources of uncertainties, it’s hard to predict accurately4,5. However, many studies show that the settlement around the foundation pit is affected by multiple factors which including physical parameters of soil and external conditions during construction6,7. Then, it is of great significance to establish a model that can map the potential nonlinear relationship and provide reference for subsequent safe construction8,9. In the past ten years, the prediction of land subsidence has attracted the attention of many scholars in geotechnical engineering. In 2014, Su.et al. used Kalman filter in a subsidence monitoring method and analyzed it by means of forward modeling, the result shows that it is feasible to predict the settlement of the subsequent construction by training the data the previous stage10. In 2017, Nejad and Jaksa proposed a supervised learning algorithm that uses the CPT data for the load settlement simulation, however, excessive input variables will seriously affect the speed and accuracy of the network training and decrease the generalization ability11. In 2017, Cao et al. explored the influence of different input variables on settlement through the parameter sensitivity analysis formula, and the study proved that a single variable cannot well explain the settlement results12. And related research shows that when linear loading conditions are met, the subsidence and excavation time can be fitted to an S-shaped curve13, in view of the non-linear characteristics of foundation pit settlement, so it’s crucial to select suitable nonlinear mapping models14. Some researchers choose BP, Support Vector Machine (SVM) and gray Verhulst models14,15,16 to predict the foundation settlement. Compared with traditional methods, these nonlinear prediction models have better performance. However, due to the lack of self-learning and error correction capabilities, when the short-term settlement data fluctuates greatly, the gray Verhulst model is not stable in the prediction17. For the limitation of insufficient sample size and weak linear feature performance, the SVM model can exert its unique advantages, but it also has some shortcomings that are sensitive to the choice of parameters and kernel functions18. Since BP was proposed in 1986, it has been successfully used in various engineering fields with its powerful self-learning, nonlinear mapping and error feedback adjustment capabilities19. However, like most neural networks that use gradient descent to optimize parameters in a negative feedback process, the random selection of initial weights and thresholds greatly affects the prediction performance of BP. This also makes it difficult for the BP algorithm to achieve the overall optimum, but tends to converge to a local minimum point, and the convergence speed is also slow20,21. In 1991, J. L. Elman established the Elman model to solve some speech processing problems22. Guo et al. applied Elman to the deformation prediction of foundation pit, and it showed that the prediction accuracy was high, but the sample size was too small and it is difficult to jump out of the local optimal solution23.

SSA is a swarm intelligence optimization algorithm that was proposed in 2020, which can optimize the mapping relationship between the input and output variables of the prediction model24. SSA can efficiently optimize the weights and thresholds of BP and Elman, and improve the prediction accuracy of the model. That's why it was chosen.

Prior knowledge needed to know

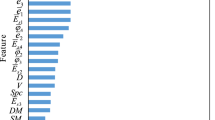

In the previous research, only a single influencing factor of time was usually considered, and the unit was weeks or months. However, setting a larger time unit will lose the engineering significance of prediction, and has little guiding significance. Therefore, this paper selects two groups of continuous monitoring data whose time unit is day, and each group has 170 pieces of data. These data are arranged strictly according to the time of excavation, so they cannot be disrupted during machine learning training, this is different from the random shuffling of the data set in general machine learning. Feng et al. show that factors including excavation depth, the number of internal supports, etc. can affect the settlement of the foundation pit25. And during excavation, when the soil geological conditions are good, the land subsidence is relatively small, while the subsidence may be relatively large when it’s poor26. The reason that the previous studies seldom consider the soil’s mechanical parameters is that it’s impractical to record the daily excavation depth and the daily soil type. But, in this paper, first, timely and accurately record the specific time and corresponding level information of each support when it is arranged. Then, the daily excavation depth can be measured by the support elevation, or the two adjacent support elevations and their excavation time spans can be recorded first and then interpolated. Finally, according to the geological data obtained from on-site drilling and the above-mentioned data of excavation time and depth recorded accurately every day, the soil type and parameters for real-time excavation can be determined. Obviously, the supports also have a certain influence on the settlement, so the number of supports is used as an input variable in this paper. In addition, there also attempts to use the groundwater level, soil permeability, internal friction angle, gravity and cohesion as input variables. In order to make the settlement prediction of the model more accurate, the input variables of the model should take into account the potential factors affecting settlement as much as possible. Therefore, this paper conducts Grey relation analysis on the original data set. The basic idea of grey relational analysis is to determine whether the relation between each column of data to be evaluated and the parent sequence is close by determining the relational value. The analysis results are shown in Fig. 1.

After the grey relation analysis, factors with the correlation value greater than 0.6, indicating higher correlation degree, were selected as the input variables. In Fig. 1, d, s, φ, γ, w, k and c represent the excavation depth, the number of internal supports, the internal friction angle, soil gravity, the groundwater level, the permeability coefficient and cohesion, respectively.

Method

Introduction to BP and Elman neural network

BP is multi-layer feed-forward network, error back propagation is the meaning of the name of BP. In effect, it is a linear or non-linear mapping of the relationship between input variables and output variables. Mathematical derivation proves that a three-layer neural network structure can approximate any continuous function within acceptable accuracy. The first stage of the BP is that the training samples are propagated through the input layer and the hidden layer, then output layer gets the corresponding output, the second stage is the back propagation of the error, and the third stage is the weights and thresholds update until the end condition is met27,28,29. Figure 2 shows its basic structure. Equation (1) is its mathematical calculation expression:

According to Eq. (1), the error E can be reduced by updating the weights and thresholds, and this is where SSA comes into play.

Elman is a typical dynamic neural network, compared with BP, it adds an undertaking layer with feedback function to the hidden layer, and it also has better prediction accuracy, so it’s more suitable for the prediction of foundation pit30. However, like BP, Elman is also based on gradient descent to reduce the error, so the training of the model tends to fall into local optimum but not global optimum31. Figure 3 is its basic structure.

The Elman learning indicator function also uses the error sum of squares function:

where \(Y_{t} \left( \omega \right)\) and \(Y_{t}^{^{\prime}} \left( \omega \right)\) represent the output value and the expected value, respectively.

Introduction to Sparrow Search Algorithm

Inspired by the foraging and anti-predation behaviours of sparrow populations in nature, in 2020, Xue and Shen proposed SSA, idealizing the following behaviours of sparrows and briefly formulate corresponding rules to understand the process of sparrow optimization clearly32.

-

Rule (1). Discoverers usually has the ability to expand the scope of the food search and provide real-time regional location information to all other joiners, The higher the fitness value in the model, the higher the energy reserve of the sparrow.

-

Ruler (2). When sparrows find danger, they will send out an alarm signal, and when the alarm value exceeds the safety threshold, the discoverers will take joiners to other areas.

-

Rule (3). The ratio of discoverers to joiners in the entire population is constant, but as long as a richer source of food can be found, every sparrow can become a discover, but if one sparrow becomes a discoverer, another sparrow must become a joiner.

-

Rule (4). Joiners with poor fitness have poorer foraging positions in the population, and naturally, they are more likely to fly away from these places.

-

Rule (5). During foraging, discoverers with better food resources will always attract those discoverers to grab food from them or to forage around them.

-

Rule (6). When the feeding area in which the sparrows are no longer safe, they will quickly move away from the danger area, and sparrows that do not feel danger will walk randomly to get close to other sparrows.

Establishment of the joint models

Based on the above idealized model, the SSA optimization process is as follows: (1) Eliminate abnormal data in the data set, and then select variables that have a greater impact on settlement through grey correlation analysis. (2) Establish the initial network structure and select the appropriate transfer function. (3) Select SSA parameters, including fitness function, population size, proportion of sparrows and maximum number of iterations. (4–5) Calculate and find the best fitness value of the sparrow and its corresponding global best position, and then update the positions of the three kinds of sparrows in the population (6) Determine whether the end condition is met, if not, go back to (4–5), if satisfied, go to (7). (7) The optimal thresholds and weights obtained by SSA are assigned to the initial model.

Figures 4 and 5 are the simple flow charts of SSA optimizing BP and Elman, respectively.

Engineering examples and parameter selection

Brief description of the project

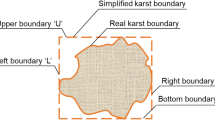

The foundation pit is located in a city in South China, and as shown in Fig. 6, the settlement monitoring point P1 is close to the road and P2 is close to the bridge. Excessive settlement will affect driving and building safety therefore, so it is of great importance to predict settlement. At the same time, the foundation pit is located in an area with heavy rainfall. Therefore, the influence of the groundwater level around the foundation pit cannot be ignored. SW1, SW2, SW3, SW4 represent four groundwater level monitoring points, in this paper, the water level values near the monitoring points P1 and P2 are taken respectively.

The soil layer distribution and elevation of the supporting structure are shown in Fig. 7. There are five layers in total until the excavation depth, which are plain fill, muddy soil, silt sand, muddy soil and fine sand, respectively.

By analyzing the samples collected along different drilling depths, combined with geological exploration and drilling data, the thickness of each soil layer and the physical properties such as the internal friction angle, weight and cohesion of the soil can be obtained. Table 1 lists the relevant parameters of each soil layer. The survey data also show that the foundation pit and surrounding soil layers are relatively uniform, and there are no active faults in the affected area. Therefore, the inhomogeneity of the geological structure and the anisotropy of the foundation pit structure are not considered when selecting the input parameters.

Model dataset creation

The subsidence data of the two monitoring points P1 and P2 were selected, each group has 170 sets of data, the time unit is days, and both of datasets are 8-dimensional, the first 7 dimensions are input variables, the last dimension is output variables. The dataset cannot be shuffled in this paper, selecting data with this rule reduces the prediction accuracy of the model to a certain extent, because it cannot fully learn the characteristics of the test set, but it must be considered that these settlement data are sorted in time series. Therefore, the 150 pieces of data in the training set and the 20 pieces of data in the test set are chosen strictly in order.

Model parameter settings

The models are trained in MATLAB in this paper. In BP model, the input layer, hidden layer, and output layer select "tansig", "logsig", and "purelin" function, respectively. The nodes number n of hidden layer can be roughly determined according to empirical Eq. (3).

where d is the dimension of the input layer, d = 7; l is the number of nodes in the output layer, and l = 1, \(\sigma\) is a natural number from 0 to 10.

The Elman model is a four-layer structure, compared with BP, it adds a layer of undertake layer, the number of nodes in the input and output layers is also the same as BP, the number of hidden layer nodes can also refer to Eq. (3).

The population size and evolution number of sparrows were chosen to be 20 and 30, respectively, and the number of discoverers was set to 20% of the total.

Model performance comparison and analysis

Performance analysis of the joint model under the P1 monitoring point

The solution of the evolutionary computing method will be different each time, so this paper will make multiple consecutive predictions of a model at the same monitoring point, and then calculate the mean predicted value, MSE and predicted value variance.

Figure 8 shows the comparison of predicted and measured values for BP, SSA-BP models. It can be seen from Fig. 8 that before day 157, the prediction accuracy of the optimized model was slightly higher than that of BP. After the 157th day, the predicted value of the BP model gradually deviates from the measured value, while the predicted value of SSA-BP closely matches the measured value.

In order to compare the prediction stability of the models, the variances of the predicted values of BP and SSA-BP models were calculated, and the results are shown in Table 2. BP model has a total of 20 predicted values, the first row of Table 2 represents the variance of the 1st to 10th predicted values, and the second row represents the variance of the 11th to 20th predicted values. The same is true for SSA-BP. By comparing the variance values, it can be seen that the stability of SSA-BP model is higher than that of BP model.

For further comparison, the evaluation index values and average running time (The number of iterations in the paper is set to 1000) of the two models are listed in Table 3.

From the data in Table 3, it can be seen that the five evaluation indicators of the optimized model have been improved to varying degrees after optimization, which proves that SSA has indeed exerted its optimization ability. The running time of SSA-BP has been greatly increased compared with BP, and the long solution time is indeed a major disadvantage of the optimization algorithm. But this runtime is acceptable relative to a dataset where the time unit is days. In summary, it can be seen that SSA can exert its excellent global search and local optimization capabilities to generate optimal weights and thresholds, thereby improving the prediction accuracy and generalization ability of the BP model.

Next, Elman and SSA-Elman models are selected to further demonstrate the optimization capability of SSA. The comparison between the predicted values and the measured value is shown in Fig. 9.

From Fig. 9, the prediction accuracy of the two models before the 161st day is similar, but after the 161st day, the predicted value curve of SSA-Elman fits the measured value curve better than that of Elman. Furthermore, the variance of the predicted values of the two models and related evaluation indicators are listed in Tables 4 and 5.

From the comparison results in Table 4 that the variance of predicted value of SSA-Elman is lower than that of Elman, indicating that the stability of the model has been improved. From Table 5, it can be seen that the running time of the optimized model becomes longer, indicating that the solution time has increased significantly, while the changes of other evaluation indicators indicate that the optimized model has higher prediction accuracy and generalization ability.

Verify the effectiveness of the joint model on the P2 monitoring point

In order to verify the validity of joint model, the data of P2 monitoring points were selected for verification again. From Fig. 10, it can be seen that the predicted value curve of the optimized model is obviously better than the initial model in terms of prediction accuracy and curve fit. From the data comparison analysis in Tables 6 and 7, it can be concluded that the optimized model is better than the initial model in terms of stability and prediction performance.

At point P2, the Elman model's predictive performance is similar to that of BP. As shown in Fig. 11, its predicted value curve deviates significantly from the measured value curve, which indicates that its generalization ability needs to be enhanced. While, the predicted value of the SSA-Elman model is relatively more fitting to the measured value.

The data in Tables 8 and 9 again demonstrate that the optimized model has better predictive performance.

Performance comparison between SSA and other optimization algorithms

To further verify the superiority of SSA, other algorithms including Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) were selected to optimize BP, and then the predicted values of P1 and P2 monitoring points were compared respectively. The predicted values of the three models at P1 are shown in Fig. 12. It can be seen that the predicted value curve of PSO-BP deviates significantly from the measured value curve after the 158th day. The predicted value curve of GA-BP deviates not much, but it is still not as good as that of SSA-BP.

To more directly compare the prediction performance of the three models, the variance of the predicted value of the models, the five evaluation indicators and the average running time are listed in Tables 10 and 11, respectively.

A comprehensive comparative analysis of the data in Tables 10 and 11 shows that the variance of predicted values of the three models is ranked from low to high as SSA-BP, GA-BP and PSO-BP. From the perspective of the five evaluation indicators of the model, SSA-BP is also the most Excellent, followed by GA-BP, and finally PSO-BP. In terms of average solution time, PSO-BP is much less time-consuming than the other models. But overall, the optimization efficiency of SSA is better than the other algorithms.

Select the data of the P2 monitoring point to verify again and the predicted results are plotted in Fig. 13. It can be seen that the predicted value curve of the GA-BP model started to fluctuate after day 162, whereas the predicted value curve of the PSO-BP model performed poorly before day 160. In conclusion, the fitness of the SSA-BP model is better than that of other models.

At point P2, the variance of the predicted values of the three models and the model evaluation index are shown in Tables 12 and 13 respectively.

Comprehensively comparing and analyzing the data in Tables 12 and 13, at the P2 monitoring point, it can still be concluded that the optimization efficiency of SSA is better than other algorithms.

Comparison of optimization performance of SSA for other kind of neural network

A Kernel Extreme Learning Machine (KELM) and Least Squares Support Vector Machine (LSSVM) were selected and their parameters were optimized using SSA Among them, the two parameters that KELM needs to optimize are the regularization coefficient and the kernel function parameter, and the two parameters that LSSVM need to optimize are the penalty factor and the kernel parameter. The data of P1 and P2 monitoring points are selected for prediction and comparison data are presented in Table 14.

Judging from the data of the P2 monitoring point in Table 14, it can be concluded that when the basic model itself does not perform well, the optimization effect of SSA is more significant; from the data of the P1 monitoring point, when the basic model itself has a good performance, SSA can still be able to achieve a small improvement of its prediction performance.

Figures 14 and 15 are the comparisons between the predicted and measured values of the three models at points P1 and P2, respectively. From Fig. 14, the SSA-LSSVM performs significantly worse than SSA-BP model from day 155 to day 161, while the SSA-KELM performs worse than SSA-BP model before day 153 and after day 167. Overall, the prediction performance of SSA-BP at the P1 monitoring point is slightly better than the other two optimization models. From Fig. 15, it is still the same conclusion at the P2 monitoring point.

Table 15 shows the evaluation index values of the three models at monitoring points P1 and P2 and their average solution time. At the two monitoring points P1 and P2, from the data in the table, although the solution time of SSA-BP is longer than the other models, the evaluation index of the SSA-BP model is slightly better than the other optimization models. Since the time unit of the dataset is day, the increase degree in runtime is perfectly acceptable by comparison. Therefore, comprehensive analysis shows that the performance of the joint model constructed by SSA and BP neural network is slightly better than the joint model constructed by SSA and other kind of neural network.

Conclusion

In summary, the following conclusions can be drawn from this paper:

-

1.

SSA has a significant effect on the optimization of gradient descent neural networks. After the BP and Elman neural networks selected in this paper are optimized by SSA, the prediction performance of the model is greatly improved, and its stability is also improved. Although the solution time of the optimization model is significantly increased, which is also a disadvantage of evolutionary algorithms, however, relative to the time unit of the dataset, this is acceptable.

-

2.

This paper first selects the data of the P1 monitoring point for predictive analysis, and draws the conclusion in (1), and then selects the data of the P2 monitoring point again for prediction to verify its effectiveness. The result analysis shows that the optimization model established in this paper is effective and reliable.

-

3.

In this paper, by conducting predictive analysis at point P1 and verifying its effectiveness at point P2, it can be concluded that SSA has more efficient optimization performance than GA and PSO algorithms. In general, the predictive performance of SSA-Gradient descent models outperforms other types of optimization models.

-

4.

In this paper, in addition to the BP model, the Elman, KELM and LSSVM models are also selected for optimization with SSA, and then the prediction analysis and verification are carried out at the P1 and P2 monitoring points respectively. The data show that SSA can effectively optimize the above model and improve its prediction performance, and it also shows that the optimization efficiency of SSA for BP and Elman neural networks is slightly better than other kind of neural network.

-

5.

The seven factors considered in this paper are feasible as input variables of the model. However, it is not certain that 7 is the optimal number of input parameters. Because, there are many factors that may affect the settlement around the foundation pit. Subsequent work will consider potential influencing factors in more detail and comprehensively, so as to make more reasonable and accurate predictions.

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Yang, L. L., Xu, W. Y., Meng, Q. X. & Wang, R. B. Investigation on jointed rock strength based on fractal theory. J. Centr. South Univ. https://doi.org/10.1007/s11771-017-3567-9 (2017).

Xu, Q., Bao, Z., Lu, T., Gao, H. & Song, J. Numerical simulation and optimization design of end-suspended pile support for soil-rock composite foundation Pit. Adv. Civ. Eng. 2021(2), 1–15. https://doi.org/10.1155/2021/5593639 (2021).

Zhang, C. M. Applications of soil nailed wall in foundation pit support. Appl. Mech. Mater. 353–356, 969–73. https://doi.org/10.4028/www.scientific.net/AMM.353-356.969 (2013).

Tao, Y., Sun, H. & Cai, Y. Predicting soil settlement with quantified uncertainties by using ensemble Kalman filtering. Eng. Geol.. 276(6), 105753. https://doi.org/10.1016/j.enggeo.2020.105753 (2020).

Zhou, N., Vermeer, P. A., Lou, R., Tang, Y. & Jiang, S. Numerical simulation of deep foundation pit dewatering and optimization of controlling land subsidence. J. Eng. Geol. 114(3–4), 251–260. https://doi.org/10.1016/j.enggeo.2010.05.002 (2010).

Shreyas, S. K. & Dey, A. Application of soft computing techniques in tunnelling and underground excavations: State of the art and future prospects. Innov. Infrastruct. Solut. 4, 46.1-46.15. https://doi.org/10.1007/s41062-019-0234-z (2019).

Zhu, R., Gao, Q. & Qi, G. Settlement of buildings nearing a foundation pit under the condition of deep mixing pile retaining. J. Univ. Sci. Technol. Beijing 28(8), 721–4. https://doi.org/10.1016/S1005-8885(07)60042-9 (2006).

Yang, H. X. The prediction model of foundation settlement in linear loading condition. J. Appl. Mech. Mater. 256–259, 477–80. https://doi.org/10.4028/www.scientific.net/AMM.256-259.477 (2013).

Liu, H. M., Zhou, X. G., Wang, Z. W., Huang, D. & Yang, H. H. Prediction of subgrade settlement using PMIGM(1,1) model based on particle swarm optimization and Markov optimization. J. Chin. J. Geotech. Eng. 41(S1), 205–8. https://doi.org/10.11779/CJGE2019S1052 (2019).

Su, J. Z., Xia, Y., Xu, Y. L., Zhao, X. & Zhang, Q. L. Settlement monitoring of a supertall building using the Kalman filtering technique and forward construction stage analysis. J. Adv. Struct. Eng.. 17(6), 881–893. https://doi.org/10.1260/1369-4332.17.6.881 (2014).

Nejad, F. P. & Jaksa, M. B. Load-settlement behavior modeling of single piles using artificial neural networks and CPT data. Comput. Geotech. 89(Sep.), 9–21. https://doi.org/10.1016/j.compgeo.2017.04.003 (2017).

Cao, M. S. et al. Neural network ensemble-based parameter sensitivity analysis in civil engineering systems. J. Neural Comput. Appl. 28, 1–8. https://doi.org/10.1007/s00521-015-2132-4 (2017).

Mei, G. & Zai, J. Proof and application of s-shape settlement-time curve for linear or nearly linear loadings. China Civ. Eng. J. https://doi.org/10.1007/s11769-005-0030-x (2005).

Yu, H. & Shangguan, Y. Settlement prediction of road soft foundation using a support vector machine (SVM) based on measured data. J. MATEC Web Conf. 67, 07001. https://doi.org/10.1051/matecconf/20166707001 (2016).

Lv, Y., Liu, T., Ma, J., Wei, S. & Gao, C. Study on settlement prediction model of deep foundation pit in sand and pebble strata based on grey theory and BP neural network. Arab. J. Geosci. 13(23), 1238. https://doi.org/10.1007/s12517-020-06232-7 (2020).

Song, Y. H. & Nie, D. X. Verhulst mode for predicting foundation settlement. J. Rock Soil Mech. https://doi.org/10.1142/S0252959903000104 (2003).

Zhang, C., Li, J. Z. & Yong, H. E. Application of optimized grey discrete Verhulst–BP neural network model in settlement prediction of foundation pit. J. Environ. Earth Sci. https://doi.org/10.1007/s12665-019-8458-y (2019).

Fan, Z.D., Cui, W.J., Feng, S.R. & Feng, X. Influence of SVM Kernel function and parameters selection on prediction accuracy of dam monitoring model. J. Water Resour. Power 33(2), 78–80 (2015).

Basheer, I. A. & Hajmeer, M. Artificial neural networks: Fundamentals, computing, design, and application. J. Microbiol. Methods 43(1), 3–31. https://doi.org/10.1016/S0167-7012(00)00201-3 (2000).

Song, Y., Huang, H. & Chen, Y. The method of BP algorithm for genetic simulated annealing algorithm in fault line selection. J. Phys. Conf. Ser. 1650(3), 032187. https://doi.org/10.1088/1742-6596/1650/3/032187 (2020).

Zhang, M., Zhang, Y., Gao, Z. & He, X. An improved DDPG and its application based on the double-layer BP neural network. J. IEEE Access. 8(99), 177734–177744. https://doi.org/10.1109/ACCESS.2020.3020590 (2020).

Elman, J. L. Distributed representations, simple recurrent networks, and grammatical structure. J. Mach. Learn. https://doi.org/10.1007/BF00114844 (1991).

Guo, Q., Jia, Z. & Yinbo, J. Deformation prediction of foundation pit based on finite element and Elman neural network. J. China Sciencepaper. 14(10), 6 (2019).

Liao, G. C. Fusion of improved Sparrow Search Algorithm and long short-term memory neural network application in load forecasting. Energies https://doi.org/10.3390/en15010130 (2021).

Feng, T., Wang, C., Zhang, J., Zhou, K. & Qiao, G. Prediction of stratum deformation during the excavation of a foundation pit in composite formation based on the artificial bee colony–back-propagation model. Eng. Optim. https://doi.org/10.1080/0305215X.2021.1919100 (2021).

Liu, J. T. & Chen, X. The design and construction of foundation pit dewatering under poor geological conditions. Adv. Mater. Res. 926–930, 665–668. https://doi.org/10.4028/www.scientific.net/AMR.926-930.665 (2014).

Fukumizu, K. Chapter 17 Geometry of neural networks: Natural gradient for learning. J. Handb. Biol. Phys. 4, 731–69. https://doi.org/10.1016/S1383-8121(01)80020-8 (2001).

Yang, L., Li, Z., Wang, D. S., Miao, H. & Wang, Z. B. Software defects prediction based on hybrid particle swarm optimization and Sparrow Search Algorithm. J. IEEE Access. 9(99), 60865–60879. https://doi.org/10.1109/ACCESS.2021.3072993 (2021).

Ms, A., Yl, A., Lq, B., Zhen, Z. A. & Zh, A. Prediction method of ball valve internal leakage rate based on acoustic emission technology. Flow Meas. Instrum. https://doi.org/10.1016/j.flowmeasinst.2021.102036 (2021).

Chen, S. Z. Application of a refined BP algorithm based Elman network to settlement prediction of soft soil ground. J. Eng. Geol. https://doi.org/10.1016/S1872-2040(06)60039-X (2006).

Zhang, Z., Tang, Z. & Vairappan, C. A novel learning method for Elman neural network using local search. J. Neural Inf. Process. Lett. Rev. 11, 181–188 (2007).

Xue, J. K. & Shen, B. A novel swarm intelligence optimization approach: sparrow search algorithm. Syst. Sci. Control Eng. 8(1), 22–34. https://doi.org/10.1080/21642583.2019.1708830 (2020).

Acknowledgements

This study was financially supported by the Natural Science Foundation of Jilin Province (Grant No.20220101172JC).

Author information

Authors and Affiliations

Contributions

L.Z.D. proposed ideas and implementation steps. Z.C.L. designed the experiments and drafted the manuscript. Data were preprocessed and analyzed by X.M.H. and C.C. C.Y.L. and Y.L.H. made several revisions to the manuscript. Y.F.Y. provided initial data and approved submission.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Z., Hu, X., Chen, C. et al. Multi-factor settlement prediction around foundation pit based on SSA-gradient descent model. Sci Rep 12, 19778 (2022). https://doi.org/10.1038/s41598-022-24232-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-24232-3

This article is cited by

-

ANN deformation prediction model for deep foundation pit with considering the influence of rainfall

Scientific Reports (2023)

-

A novel combined intelligent algorithm prediction model for the tunnel surface settlement

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.