Abstract

Adaptive block-size processing of different image regions gives block-matching and 3D filtering (BM3D) very good image denoising performance. Based on this observation, we improve BM3D in three aspects: adaptive noise variance estimation, domain transformation filtering and nonlinear filtering. First, we improve the principal component analysis (PCA) noise variance estimation method using multilayer wavelet decomposition. Second, we propose a Gaussian sequence Hartley domain transform filtering method, based on compressive sensing, to reduce noise. Finally, we perform edge-preserving smoothing on the preprocessed image using guided filtering based on total variation. Experimental results show that the proposed denoising method is competitive with many representative denoising methods in terms of PSNR. However, the visual quality of the denoised images requires further research.


Introduction

Among the many methods of image denoising, domain transformation filtering is one of the most important research directions1. The idea of domain transform filtering is to transform the noisy image from the spatial domain into a transform domain, process the transform coefficients according to the inherent characteristics of that domain to reduce noise, and finally reconstruct the output image by the inverse transform2,3. Many excellent denoising methods based on domain transformation filtering have been proposed over the past decades, such as the wavelet transform4, multiscale geometric analysis (MGA)5–8, block-matching and 3D collaborative filtering (BM3D)9 and principal component analysis with local pixel grouping (LPG-PCA)10. There are also methods that perform the domain transformation using dictionary learning11–13. These methods achieve excellent denoising performance.

Among these methods, BM3D effectively combines the non-local similarity of images with domain transform filtering and achieves good denoising performance. Through block matching, similar blocks in an image are grouped and stacked into 3D groups. Each group then undergoes a domain transformation (sparse representation), and individual block estimates are obtained through collaborative filtering. Finally, the denoised image is obtained by aggregation. Its well-designed structure and strong denoising performance make BM3D one of the best denoising methods and a standard benchmark for evaluating denoising, so it is essential reading in image denoising research. Moreover, BM3D merits further research and improvement. For example, Zhong et al. modified the BM3D algorithm with a nonlocal centralization prior14, and Feng et al. improved BM3D in terms of the Gaussian threshold and the angular distance15. In addition, various studies have aimed to improve the denoising effect, retain more image detail, extend the application modes of BM3D, and so on16–19.

In this paper, to make BM3D more practical and improve its denoising effect, we improve it in three aspects, adaptive noise variance estimation, domain transformation filtering and nonlinear filtering, each addressing one limitation. First, unlike experimental images with a known noise variance, the noise variance of real noisy images is unknown (blind noise images), yet the noise variance is a prerequisite for applying BM3D and plays a very important role in collaborative filtering and weight calculation. Second, the wavelet transform cannot fully exploit the geometric features of the image, so it cannot represent the image sparsely enough, which limits the denoising effect of domain transformation filtering. Third, the Wiener filter used in the second stage of BM3D is a linear filter, which damages image edges during filtering.

In noise variance estimation, the error of almost all estimation methods grows with the noise variance. To obtain a more accurate estimate, we improve the principal component analysis (PCA) noise variance estimation method with multilayer wavelet decomposition. We found that after multilayer wavelet decomposition, the low-frequency wavelet subbands provide multiple approximate representations of the image, which filter out contour and texture information. Moreover, the image and the noise have different feature distributions in the wavelet subbands, while the noise in a wavelet subband keeps the same distribution as in the image. Based on these observations, we estimate the noise variance more accurately as follows: we first perform multilayer wavelet decomposition on the image, then select appropriate layers and estimate the noise variance of each layer, and finally combine the per-layer results to obtain the noise variance estimate.

To represent images more sparsely, and inspired by compressed sensing (CS)20, we propose a domain transformation filtering method. Following the construction of the sensing matrix in CS, we combine the radial basis function (RBF) kernel of a Gaussian process with the discrete Hartley transform (DHT) to construct the Gaussian sequence Hartley transform (GHT), which realizes the domain transformation21. Then, a modified threshold shrinkage based on basis pursuit denoising (BPDN) filters the noise coefficients22. Finally, the preliminary denoised image, defined as the basic estimate, is obtained by inverse transformation and restoration of the filtered coefficients.

To preserve image edges as much as possible while denoising, we use a guided filter (GF) instead of the Wiener filter to remove the residual noise in the preliminary denoised image23. The guided filter is an excellent edge-preserving filter, but the quality of the guided image strongly affects its performance. To obtain a good denoising effect, we improve the guided filter by optimizing the relationship between the guided image and the input image with a total variation (TV) regularization term24.

The adaptive noise variance estimation makes the proposed method more practical and enables blind noise image denoising. Block-matching domain transform filtering and improved guided filtering are then applied in succession. Experimental results show that the proposed method achieves better visual quality and higher PSNR than BM3D, and it is also highly competitive with other benchmark and representative image denoising methods.

The remainder of this paper is organized as follows. In Sect. “Related works”, we briefly introduce the related works. In Sect. “The proposed method”, we present the proposed method. Experimental results are shown in Sect. “Experimental results” and conclusion is given in Sect. “Conclusion”.

Related works

Noise variance estimation

In image denoising, noise variance estimation is one of the most fundamental problems and an important factor in most denoising methods. Many noise variance estimation methods have been proposed over the years, among which PCA-based estimation is particularly outstanding25. PCA-based estimation assumes that the mean of the image eigenvalues converges to the noise variance:

$$\overline{\uplambda } = \sigma^{2} + O\left(\frac{\sigma^{2}}{\sqrt{N}}\right) \tag{1}$$

where \(\overline{\uplambda }\) is the average value of the image eigenvalues, \({\sigma }^{2}\) is the noise variance of the image, and big \(O\) notation means that \(\exists C\), \(\exists {N}_{0}\) such that \(\forall N\ge {N}_{0}\), \(\mathrm{E}\left(\left|\overline{\uplambda }-{\sigma }^{2}\right|\right)\le C{\sigma }^{2}/\sqrt{N}\), where \(C\) does not depend on the distribution of the image or the noise.
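The convergence in (1) can be checked numerically in its simplest setting. The sketch below is a minimal illustration assuming patches that contain only i.i.d. Gaussian noise; it uses the fact that the eigenvalues of a covariance matrix sum to its trace, so their mean is the trace divided by the patch dimension and no eigendecomposition is needed. The function names and sizes are hypothetical, and on real image patches the image structure also contributes to the eigenvalues, which is where estimation errors arise.

```python
import random


def noise_patches(n, d, sigma, seed=0):
    """n patches of dimension d containing only i.i.d. Gaussian noise (hypothetical helper)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, sigma) for _ in range(d)] for _ in range(n)]


def mean_eigenvalue(patches):
    """Mean eigenvalue of the sample covariance matrix of the patches.

    The eigenvalues sum to the trace, so their mean is trace / d.
    """
    n, d = len(patches), len(patches[0])
    means = [sum(p[j] for p in patches) / n for j in range(d)]
    trace = sum(
        sum((p[j] - means[j]) ** 2 for p in patches) / (n - 1)  # diagonal entry C[j][j]
        for j in range(d)
    )
    return trace / d


if __name__ == "__main__":
    sigma, d = 20.0, 16
    for n in (100, 1000, 10000):
        lam = mean_eigenvalue(noise_patches(n, d, sigma))
        # the relative error should shrink roughly like 1 / sqrt(N)
        print(n, round(lam, 2), round(abs(lam - sigma ** 2) / sigma ** 2, 4))
```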

However, the estimation error increases with the noise variance, as shown in Fig. 1. This is because the difference between the distributions of the noise and the image features becomes smaller, especially for the high-frequency information in the image, so it is difficult to distinguish noise from image texture.

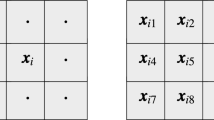

BM3D and wavelet transform filtering

BM3D reduces noise in two stages: basic estimation and final estimation. Each stage performs grouping, collaborative filtering and aggregation in sequence. In grouping, similar blocks are stacked into 3D groups. These 3D groups are then transformed from the spatial domain to the wavelet domain by a 2D wavelet transform, and a Hadamard transform is applied along the third dimension. Threshold shrinkage (first stage) and Wiener filtering (second stage) then attenuate the noise coefficients; this step is called collaborative filtering. After all blocks have been processed, the estimates of the overlapping blocks are combined by weighted averaging (aggregation) to obtain the estimate of the image. The flow chart is shown in Fig. 2.
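The grouping step can be sketched as follows. This is a minimal illustration, not BM3D's implementation: it assumes a grayscale image stored as a list of rows, searches the whole image rather than a local search window, and uses the squared L2 block distance normalized by the block size. The names `match_blocks`, `build_group` and the parameters `size`, `tau` and `max_group` are hypothetical.

```python
def extract_block(img, y, x, size):
    """size x size block whose top-left corner is (y, x)."""
    return [row[x:x + size] for row in img[y:y + size]]


def block_distance(a, b):
    """Squared L2 distance between two blocks, normalized by the pixel count."""
    n_pixels = len(a) * len(a[0])
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)) / n_pixels


def match_blocks(img, ref_y, ref_x, size=4, tau=1.0, max_group=16):
    """Top-left corners of blocks within distance tau of the reference block, best first.

    The whole image is searched here; BM3D restricts the search to a window
    around the reference block.
    """
    h, w = len(img), len(img[0])
    ref = extract_block(img, ref_y, ref_x, size)
    matches = []
    for y in range(h - size + 1):
        for x in range(w - size + 1):
            dist = block_distance(ref, extract_block(img, y, x, size))
            if dist <= tau:
                matches.append((dist, y, x))
    matches.sort()
    return [(y, x) for _, y, x in matches[:max_group]]


def build_group(img, coords, size=4):
    """Stack the matched blocks into a 3D group (a list of 2D blocks)."""
    return [extract_block(img, y, x, size) for y, x in coords]


if __name__ == "__main__":
    # 8 x 12 toy image: columns 0-5 are 0, columns 6-11 are 100
    img = [[0.0] * 6 + [100.0] * 6 for _ in range(8)]
    coords = match_blocks(img, 0, 0)
    print(len(coords), coords[:3])
```

In the toy image, only blocks lying entirely in the dark region match the reference block, so the group never mixes the two regions.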

An important reason for the success of wavelet-based signal processing in many research fields is that wavelets can sparsely represent signals of bounded variation. After the wavelet transform, the coefficients corresponding to the signal carry the important information and are few in number but large in amplitude, whereas the coefficients corresponding to the noise are spread evenly, small in amplitude and large in number4. Since the signal can be represented by a few coefficients, the domain transformation can generally be regarded as a sparse representation, and threshold shrinkage can then remove noise while preserving as much image information as possible. However, the 2D wavelet transform, extended from the 1D discrete wavelet transform, has limited directionality; it cannot fully exploit the geometric features of images and therefore does not represent them particularly sparsely. As a result, the feature distributions of noise and image cannot be separated effectively in the conventional wavelet domain, and threshold shrinkage does not denoise very well.
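The transform, threshold and inverse-transform cycle described above can be sketched in one dimension. This is a minimal illustration assuming a single level of the orthonormal Haar wavelet (chosen for brevity, not the wavelet BM3D uses) and hard thresholding of the detail coefficients only; the function names are hypothetical.

```python
import math


def haar_forward(x):
    """One level of the orthonormal 1-D Haar transform (len(x) must be even)."""
    s = 1 / math.sqrt(2)
    approx = [(x[2 * i] + x[2 * i + 1]) * s for i in range(len(x) // 2)]
    detail = [(x[2 * i] - x[2 * i + 1]) * s for i in range(len(x) // 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Exact inverse of haar_forward."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out


def hard_threshold(coeffs, t):
    """Keep coefficients larger than t in magnitude, set the rest to zero."""
    return [c if abs(c) > t else 0.0 for c in coeffs]


def wavelet_denoise(x, t):
    """Transform, shrink the small (noise-dominated) detail coefficients, transform back."""
    approx, detail = haar_forward(x)
    return haar_inverse(approx, hard_threshold(detail, t))


if __name__ == "__main__":
    print(wavelet_denoise([5.0, 5.2, 5.0, 4.8], 0.5))  # small fluctuations removed
```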

Wiener filtering and guided filtering

After domain transformation filtering, noise with large coefficients still remains in the image. To filter the remaining noise, BM3D applies Wiener filtering in its second stage. If the noisy image model is assumed to be \(y\left(u,v\right)=H\left(u,v\right)*x\left(u,v\right)+n(u,v)\), Wiener filtering can be expressed as follows:

where \(x\left(u,v\right)\) is the clean image, \(H\left(u,v\right)\) is the degradation function, \(y(u,v)\) is the noisy image, \(*\) denotes convolution and \(n(u,v)\) is the additive noise. \({\left|H\left(u,v\right)\right|}^{2}={H}^{*}\left(u,v\right)H\left(u,v\right)\), where \({(\cdot )}^{*}\) is the complex conjugate; \({S}_{\sigma }\left(u,v\right)={|n\left(u,v\right)|}^{2}\) and \({S}_{x}\left(u,v\right)={|x\left(u,v\right)|}^{2}\), so \({S}_{\sigma }\left(u,v\right)/{S}_{x}\left(u,v\right)\) is the noise-to-signal power ratio; and \(\widehat{y}(u,v)\) is the Wiener-filtered image. Unfortunately, as a linear filter, the Wiener filter attenuates image information along with the noise.

The idea of guided filtering is to use a guided image to generate the filter weights with which the input image is processed. This process can be expressed as

$$q_{i} = \sum_{j} W_{ij}(I)\, p_{j} \tag{3}$$

where \(q\) is the output image, \(I\) is the guided image, \(p\) is the input image, and \(i\) and \(j\) are pixel indices. A key assumption of guided filtering is that, in a local window \({w}_{k}\), the output image is a linear transform of the guided image:

$$q_{i} = a_{k} I_{i} + b_{k}, \quad \forall i \in w_{k} \tag{4}$$

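where \({a}_{k}\) and \({b}_{k}\) are linear coefficients assumed to be constant in \({w}_{k}\). The coefficients \({a}_{k}\) and \({b}_{k}\) should minimize the difference between \(p\) and \(q\), so the cost function is defined as

$$E(a_{k}, b_{k}) = \sum_{i \in w_{k}} \left( \left(a_{k} I_{i} + b_{k} - p_{i}\right)^{2} + \upepsilon a_{k}^{2} \right) \tag{5}$$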

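where \(\upepsilon\) is a regularization parameter that prevents \({a}_{k}\) from becoming too large. Accordingly, the coefficients \({a}_{k}\) and \({b}_{k}\) are computed as

$$a_{k} = \frac{\frac{1}{|w|}\sum_{i \in w_{k}} I_{i} p_{i} - \mu_{k} \overline{p_{k}}}{\sigma_{k}^{2} + \upepsilon} \tag{6}$$

$$b_{k} = \overline{p_{k}} - a_{k} \mu_{k} \tag{7}$$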

where \({\mu }_{k}\) and \({\sigma }_{k}^{2}\) are the mean and variance of the guided image in \({w}_{k}\), \(|w|\) is the number of pixels in the window, and \(\overline{{p }_{k}}\) is the mean of the input image in \({w}_{k}\). Because the windows overlap, a pixel is covered by several windows, each giving a different output value. Therefore, the values of \({a}_{k}\) and \({b}_{k}\) are averaged over all windows that contain the pixel:

$$q_{i} = \overline{a_{i}}\, I_{i} + \overline{b_{i}}, \quad \overline{a_{i}} = \frac{1}{|w|}\sum_{k:\, i \in w_{k}} a_{k}, \quad \overline{b_{i}} = \frac{1}{|w|}\sum_{k:\, i \in w_{k}} b_{k} \tag{8}$$

As can be seen, the output pixel values depend mainly on the guided image, so the quality of the guided image determines the filtering effect. If the noisy image is used as the guided image, its noise produces wrong weights and inaccurate gradients, and some noise coefficients are amplified.
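The guided filter equations above can be sketched in one dimension. This is a minimal illustration assuming a 1-D signal, windows of radius `r` that are clipped at the borders (so border windows hold fewer samples), and hypothetical function names; it computes the per-window coefficients \(a_k\) and \(b_k\), averages them over the windows covering each pixel, and applies the local linear model.

```python
def box_mean(values, r):
    """Mean over the window [i - r, i + r], clipped at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def guided_filter_1d(I, p, r, eps):
    """1-D guided filter with guide I, input p, window radius r and regularization eps."""
    mean_I = box_mean(I, r)
    mean_p = box_mean(p, r)
    corr_Ip = box_mean([i * q for i, q in zip(I, p)], r)
    corr_II = box_mean([i * i for i in I], r)
    # a_k = cov(I, p) / (var(I) + eps) and b_k = mean(p) - a_k * mean(I) in each window
    a = [(cip - mi * mp) / (cii - mi * mi + eps)
         for cip, cii, mi, mp in zip(corr_Ip, corr_II, mean_I, mean_p)]
    b = [mp - ak * mi for mp, ak, mi in zip(mean_p, a, mean_I)]
    # average the coefficients of all windows covering each pixel, then apply q = a I + b
    mean_a = box_mean(a, r)
    mean_b = box_mean(b, r)
    return [ma * i + mb for ma, i, mb in zip(mean_a, I, mean_b)]


if __name__ == "__main__":
    step = [0.0] * 10 + [1.0] * 10
    print([round(v, 3) for v in guided_filter_1d(step, step, r=2, eps=1e-4)])
    print([round(v, 3) for v in box_mean(step, 2)])  # plain box filter blurs the edge
```

With the step signal as its own guide, windows that straddle the edge have a large variance, so \(a_k\) is close to 1 and the edge survives, whereas the plain box filter smears it.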

The proposed method

Adaptive noise variance estimation

According to Donoho’s theory4, when a noisy image is decomposed by the wavelet transform, the wavelet coefficients corresponding to the noise are evenly distributed at each scale, and the coefficient amplitude decreases as the scale increases. Moreover, the noise in the wavelet subbands has the same distribution characteristics as in the image. Based on the above, we hypothesize that the noise variance can be estimated more accurately from the approximate representations in the low-frequency subbands of the first n layers. To test this hypothesis, we conducted a large number of simulation experiments, computing the noise variance of the approximate representation at different scales for noisy images with known variance. An example is presented in Table 1 (image ‘Boat’, noise variance set to 20).

The improved estimation method is as follows: the noisy image is decomposed by a multilayer wavelet transform, the noise variance of each layer is estimated by the PCA-based method, and the estimates of multiple layers are finally combined into the final estimate25. However, Table 1 shows that only the first few layers give valid data, and the number of valid layers differs between images, so an appropriate number of layers must be chosen in practice.

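In Donoho’s robust noise variance estimation method5, the noise coefficients are concentrated in the high-frequency subbands after wavelet decomposition, so the noise standard deviation is estimated from the coefficients \(Y_{HH_{1}}\) of the finest diagonal (HH) subband as

$$\hat{\sigma} = \frac{\mathrm{median}\left(\left|Y_{HH_{1}}\right|\right)}{0.6745} \tag{9}$$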

where the value 0.6745 (the median absolute value of a standard normal variable) is regarded as a highly robust attenuation value of the noise coefficient amplitude. The proposed estimation method takes this robust attenuation value into account: when the coefficient scaling ratio between adjacent layers exceeds this value, the data in the current layer are considered distorted, and the previous layer is selected as the last layer used. To avoid layer-selection errors caused by relying on the PCA-based estimate alone, we also use local image block variance statistics to compute the coefficient amplitudes at different wavelet decomposition scales and to select the appropriate layer. The flow chart of the improved estimation method is shown in Fig. 3. Two issues in the improved PCA-based noise variance estimation method need attention:

(1) In some wavelet subbands the coefficient amplitude varies greatly between layers, which makes accurate estimation difficult. To select an appropriate wavelet basis, we compared the orthogonality, compact support, support width, vanishing moments and other properties of various wavelet bases, and selected the ‘Symlets’ wavelet.

(2) Texture, contours and other details in the image greatly reduce the estimation accuracy. In smooth regions the influence of image details is smaller, so the noise variance can be estimated better there26, 27.
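The layer-selection idea can be sketched in one dimension. This is a minimal, hypothetical illustration rather than the proposed estimator: it swaps in the Haar wavelet for Symlets, applies the robust median estimate to the detail coefficients of each level instead of PCA on the approximations, and reads the 0.6745 rule as "stop when the estimate of a new level differs from the previous one by more than that factor in either direction". The names `multilevel_sigma`, `max_levels` and `ratio` are assumptions.

```python
import math
import random
import statistics


def haar_level(x):
    """One level of the orthonormal 1-D Haar transform (a trailing odd sample is dropped)."""
    s = 1 / math.sqrt(2)
    half = len(x) // 2
    approx = [(x[2 * i] + x[2 * i + 1]) * s for i in range(half)]
    detail = [(x[2 * i] - x[2 * i + 1]) * s for i in range(half)]
    return approx, detail


def mad_sigma(detail):
    """Robust noise standard deviation: median(|d|) / 0.6745."""
    return statistics.median([abs(d) for d in detail]) / 0.6745


def multilevel_sigma(x, max_levels=4, ratio=0.6745):
    """Hypothetical layer selection: go one level deeper while the new estimate stays
    within a factor `ratio` of the previous one, then average the kept levels."""
    estimates = []
    approx = list(x)
    for _ in range(max_levels):
        if len(approx) < 2:
            break
        approx, detail = haar_level(approx)
        est = mad_sigma(detail)
        if estimates and (estimates[-1] == 0 or not ratio <= est / estimates[-1] <= 1 / ratio):
            break  # treat this level as distorted and keep only the previous ones
        estimates.append(est)
    return sum(estimates) / len(estimates), len(estimates)


if __name__ == "__main__":
    rng = random.Random(1)
    noise = [rng.gauss(0.0, 3.0) for _ in range(4096)]
    print(multilevel_sigma(noise))  # (estimate close to 3, number of levels kept)
```

For pure noise every level of an orthonormal transform gives a similar estimate, so several levels are kept; for a strongly structured signal such as a steep ramp the coarser detail coefficients grow quickly and the rule stops after the first level.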

Block-matching domain transformation filtering

In this section, we introduce block-matching domain transformation filtering based on compressed sensing (CS). Compressed sensing searches for sparse solutions of underdetermined linear systems and is used to acquire and reconstruct sparse or compressible signals. Compared with Nyquist sampling, compressed sensing can recover the whole original signal from fewer measurements by exploiting the sparsity of the signal. Note that compressed sensing does not violate the Nyquist limit: in compressed sensing, sampling and compression are carried out simultaneously, rather than sampling first and compressing afterwards as in Nyquist sampling.

In compressed sensing, if a signal can be sparsely represented by the transformation matrix of a domain transformation, the signal is sparse in that transform domain, and it can be projected from a high-dimensional space to a low-dimensional space by a measurement matrix that is independent of the transformation matrix. The original signal can then be reconstructed from these projections with high probability by solving an optimization problem. The measurement process can be expressed as

$$y = \Phi x \tag{10}$$

where \({\Phi }\) is the measurement matrix, \(x\) is the signal and \(y\) is the measurement vector. However, \(x\) is usually not sparse itself and must be represented with a sparse basis

$$x = \Psi \alpha \tag{11}$$

where \({\Psi }\) is the transformation matrix and \(\alpha\) is the sparse coefficient vector, so (10) can be written as

$$y = \Phi \Psi \alpha \tag{12}$$

where the product of \(\Phi\) and \(\Psi\) forms the sensing matrix in compressed sensing.

It can be concluded from the above that there are two conditions for compressed sensing, which are also the conditions for constructing domain transformation:

(1) Sparsity. When the signal is sparse or approximately sparse, compressed sensing can be applied and the signal can be restored from fewer measurements.

(2) Incoherence. To ensure convergence, the measurement matrix should satisfy the Restricted Isometry Property (RIP)28, that is, for any strictly k-sparse vector \(c\), the following constraint must hold:

$$1 - \varepsilon \le \frac{\left\| \Phi c \right\|_{2}}{\left\| c \right\|_{2}} \le 1 + \varepsilon, \quad \varepsilon > 0 \tag{13}$$

Baraniuk proved that an equivalent condition of the RIP is that the measurement matrix be incoherent with (independent of) the sparse basis29. Candès and Tao proved that an independent and identically distributed Gaussian random measurement matrix can serve as a universal measurement matrix.
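The behaviour of a Gaussian measurement matrix can be illustrated empirically. The sketch below is a minimal illustration, assuming entries drawn i.i.d. from N(0, 1/m) and hypothetical sizes m = 128, n = 256, k = 8; it measures the ratio in (13) for random k-sparse vectors. Sampling random vectors only shows that the ratio concentrates near 1; it does not certify the RIP, which must hold uniformly over all k-sparse vectors.

```python
import math
import random


def gaussian_matrix(m, n, rng):
    """m x n matrix with i.i.d. N(0, 1/m) entries, so E||Phi c||^2 = ||c||^2."""
    scale = 1 / math.sqrt(m)
    return [[rng.gauss(0.0, scale) for _ in range(n)] for _ in range(m)]


def random_sparse(n, k, rng):
    """k-sparse vector stored as {index: value}."""
    return {idx: rng.gauss(0.0, 1.0) for idx in rng.sample(range(n), k)}


def norm_ratio(phi, c):
    """||Phi c||_2 / ||c||_2 for a sparse vector c."""
    phi_c = [sum(row[j] * v for j, v in c.items()) for row in phi]
    num = math.sqrt(sum(t * t for t in phi_c))
    den = math.sqrt(sum(v * v for v in c.values()))
    return num / den


def empirical_ratio_range(m=128, n=256, k=8, trials=200, seed=0):
    """Smallest and largest observed ratio over random k-sparse vectors."""
    rng = random.Random(seed)
    phi = gaussian_matrix(m, n, rng)
    ratios = [norm_ratio(phi, random_sparse(n, k, rng)) for _ in range(trials)]
    return min(ratios), max(ratios)


if __name__ == "__main__":
    print(empirical_ratio_range())
```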

In the sparse representation of a signal, an appropriate sparse basis minimizes the number of nonzero coefficients, which improves the signal acquisition rate and reduces the resources needed for storage and transmission. We choose the discrete Hartley transform (DHT) for sparse representation in the proposed domain transformation, because the DHT involves no complex arithmetic and has a low computational cost. Its forward and inverse transforms have the same form (up to the factor 1/N), forming a strictly reciprocal pair of real-valued transforms. These advantages make the DHT well suited to spectral analysis and convolution of real data, and also to constructing the sensing matrix for the domain transformation. The DHT is defined as

$$H(k) = \sum_{n=0}^{N-1} x(n)\, cas\left(\frac{2\pi}{N}nk\right) \tag{14}$$

where \(cas\left( {\frac{2\pi }{N}nk} \right) = \cos \left( {\frac{2\pi }{N}nk} \right) + \sin \left( {\frac{2\pi }{N}nk} \right)\), \(k = 0,1,2 \ldots ,N - 1\).
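The DHT and its self-inverse property can be sketched directly from the definition. This is a minimal O(N^2) illustration assuming the unnormalized forward transform and an inverse that divides by N; the function names are hypothetical. For a real signal, the DHT equals the real part minus the imaginary part of the discrete Fourier transform, so no complex arithmetic is needed.

```python
import math


def cas(t):
    """cas(t) = cos(t) + sin(t)."""
    return math.cos(t) + math.sin(t)


def dht(x):
    """Discrete Hartley transform: H(k) = sum_n x(n) cas(2*pi*n*k/N)."""
    n_len = len(x)
    return [sum(x[n] * cas(2 * math.pi * n * k / n_len) for n in range(n_len))
            for k in range(n_len)]


def idht(h):
    """The DHT is its own inverse up to the factor 1/N."""
    n_len = len(h)
    return [v / n_len for v in dht(h)]


if __name__ == "__main__":
    x = [1.0, 2.0, 0.5, -1.0, 3.0]
    print([round(v, 6) for v in idht(dht(x))])  # recovers x up to rounding
```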

Following the structure of the sensing matrix, the proposed domain transformation is constructed by combining a Gaussian process with the sparse basis. A Gaussian process is determined by its mean function and its covariance function, and its properties are closely tied to the covariance function, which is a kernel function. The mean of a stationary Gaussian process is constant, so the process is completely defined by its kernel. Constructing the proposed domain transformation therefore reduces to finding a suitable kernel function and combining it with the sparse basis. We choose the radial basis function (RBF) kernel:

$$k(r) = \exp\left(-\frac{r^{2}}{2l^{2}}\right) \tag{15}$$

where \(r\) is the distance between two feature vectors and \(l\) is the width (length-scale) parameter of the kernel.

Accordingly, the forward transformation of the proposed domain transformation is obtained as

where \(cas\left( {\frac{2\pi }{N}nk} \right) = \cos \left( {\frac{2\pi }{N}nk} \right) + \sin \left( {\frac{2\pi }{N}nk} \right),k = 0,1,2 \ldots ,N - 1\). The inverse transformation can be derived as

and \(cas\left( {\frac{2\pi }{N}nk} \right) = \cos \left( {\frac{2\pi }{N}nk} \right) + \sin \left( {\frac{2\pi }{N}nk} \right),n = 0,1,2 \ldots ,N - 1\).

This pair of forward and inverse transformations constitutes the Gaussian sequence Hartley transform (GHT). Because the feature distributions of noise and signal differ between transform domains, the GHT requires a matching threshold shrinkage method to filter the noise coefficients. In compressed sensing, the basis pursuit denoising (BPDN) model was proposed to reduce the influence of noise on the signal22. Assume the noise model

$$y = x + \sigma z \tag{18}$$

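where \(x\) is the clean image, \(y\) is the noisy image and \(\sigma z\) is the noise, with \(z\) standard Gaussian noise scaled by the noise level \(\sigma\). The BPDN model is

$$\min_{a} \frac{1}{2}\left\| y - \Phi a \right\|_{2}^{2} + \lambda \left\| a \right\|_{1} \tag{19}$$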

The solution \(a^{\left( \lambda \right)}\) is a function of the parameter \(\lambda\) and yields a signal-plus-residual decomposition:

$$y = \Phi a^{(\lambda)} + r^{(\lambda)} \tag{20}$$

where \(\lambda\) controls the size of the residual. The variable \(a\) can be split into its positive and negative parts, \(a=u-v\), \(u\ge 0\), \(v\ge 0\), with \({u}_{i}={({a}_{i})}_{+}\) and \({v}_{i}={({-a}_{i})}_{+}\) for all \(i = 1,2,\ldots,n\), where \({(\cdot )}_{+}\) denotes the positive-part operator \({(a)}_{+}=\max\{0,a\}\). Then \({\|a\|}_{1}={1}_{n}^{T}u+{1}_{n}^{T}v\), where \(1_{n} = \left[1,1,\ldots,1\right]^{T}\) is the vector of \(n\) ones. Writing \(A\) for \(\Phi\) and \(\tau\) for \(\lambda\), (19) can be rewritten as the following bound-constrained quadratic program (BCQP):

$$\min_{u,v} \frac{1}{2}\left\| y - A(u - v) \right\|_{2}^{2} + \tau 1_{n}^{T} u + \tau 1_{n}^{T} v \quad \text{s.t. } u \ge 0,\ v \ge 0 \tag{21}$$

which can be written in the more standard BCQP form

$$\min_{z} \; c^{T} z + \frac{1}{2} z^{T} B z \quad \text{s.t. } z \ge 0 \tag{22}$$

where \(z = \left[ {u,v} \right]^{T} ,{\text{b}} = A^{T} y, c = \tau 1_{2n} + \left[ { - b,b} \right]^{T}\), and \(B = \left[ {\begin{array}{*{20}c} {A^{T} A} & { - A^{T} A} \\ { - A^{T} A} & {A^{T} A} \\ \end{array} } \right]\).
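The BCQP form lends itself to a simple projected-gradient solver. The sketch below is a minimal illustration, not the solver used in this paper: it assumes a dense matrix `A` given as a list of rows, a fixed step size derived from the Frobenius norm, and a fixed iteration count. It uses the fact that the gradient of \(c^{T}z + \frac{1}{2}z^{T}Bz\) with respect to \((u, v)\) is \((\tau - g, \tau + g)\) with \(g = A^{T}(y - A(u - v))\). All names are hypothetical.

```python
def mat_vec(A, x):
    """A @ x for a dense matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def mat_t_vec(A, y):
    """A^T @ y."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def bpdn_projected_gradient(A, y, tau, iters=2000):
    """Minimize 0.5 * ||y - A a||^2 + tau * ||a||_1 through the BCQP split a = u - v.

    Plain projected gradient on z = [u, v] >= 0 with a fixed step size.
    """
    n = len(A[0])
    u, v = [0.0] * n, [0.0] * n
    # ||A^T A||_2 <= ||A||_F^2, so this step is below 1 / ||A^T A||_2 = 2 / ||B||_2
    alpha = 0.5 / sum(a * a for row in A for a in row)
    for _ in range(iters):
        fit = mat_vec(A, [ui - vi for ui, vi in zip(u, v)])
        g = mat_t_vec(A, [yi - fi for yi, fi in zip(y, fit)])  # A^T (y - A(u - v))
        # gradient step on u and v, then projection onto the constraint z >= 0
        u = [max(0.0, ui - alpha * (tau - gi)) for ui, gi in zip(u, g)]
        v = [max(0.0, vi - alpha * (tau + gi)) for vi, gi in zip(v, g)]
    return [ui - vi for ui, vi in zip(u, v)]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(bpdn_projected_gradient(identity, [3.0, -0.5, -2.0], tau=1.0))
```

With an orthonormal matrix such as the identity, BPDN reduces to soft thresholding, so the example should return approximately [2, 0, -1], which gives a quick sanity check of the split.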

Following the BCQP form, BPDN can also be transformed into a perturbed linear programming problem

where \(A = (\Phi, -\Phi)\), \(b = y\) and \(c = 1\). A perturbed linear program is a quadratic programming problem, but it retains a structure similar to that of a linear program. Denoising with BPDN then amounts to minimizing the least-squares fitting error plus a penalty term22:

The penalty parameter \(\lambda\) in the BPDN optimization model can be set to

$$\lambda = \sigma \sqrt{2\log(p)} \tag{25}$$

where \(p\) is the cardinality of the dictionary, which is assumed to be normalized. The threshold shrinkage function can then be derived as

Using hard thresholding for the shrinkage retains image details better in image denoising. The proposed domain transformation filtering replaces wavelet transform filtering; the flow chart of the proposed block-matching domain transformation filtering is shown in Fig. 4.
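The penalty value and the two thresholding rules can be compared directly. This is a minimal sketch assuming the natural logarithm in \(\sigma\sqrt{2\log(p)}\) and hypothetical values \(\sigma = 2\) and \(p = 100\); hard thresholding keeps surviving coefficients unchanged, while soft thresholding also shrinks them by \(\lambda\), which is why the hard rule retains more detail.

```python
import math


def bpdn_lambda(sigma, p):
    """Penalty sigma * sqrt(2 * log(p)) for a normalized dictionary with p atoms."""
    return sigma * math.sqrt(2.0 * math.log(p))


def hard_threshold(coeffs, lam):
    """Keep coefficients whose magnitude exceeds lam, zero the rest (no shrinkage)."""
    return [c if abs(c) > lam else 0.0 for c in coeffs]


def soft_threshold(coeffs, lam):
    """Zero small coefficients and shrink the others toward zero by lam."""
    return [math.copysign(max(abs(c) - lam, 0.0), c) for c in coeffs]


if __name__ == "__main__":
    lam = bpdn_lambda(2.0, 100)  # about 6.07
    coeffs = [7.0, -3.0, -8.0, 6.0]
    print(hard_threshold(coeffs, lam))
    print(soft_threshold(coeffs, lam))
```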

Improved guided filtering

As mentioned above, the deficiency of Wiener filtering degrades the denoising result, which is confirmed by the results in Table 2: the PSNR of the final estimate is generally lower than that of the basic estimate. This is consistent with the analysis of linear filtering above, which damages image information while removing noise. The Wiener filtering function in BM3D can be expressed as

where \(\theta\) is the coefficient of the noisy image, \(\widehat{\theta }\) is the coefficient of the basic estimate and \({\sigma }^{2}\) is the noise variance. After grouping and domain transformation filtering, Wiener filtering is applied to the array of noisy image coefficients. However, during collaborative filtering it shrinks both the noise coefficients and the image coefficients, degrading image quality.
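The shrinkage effect described above can be seen in a short sketch. It assumes the standard empirical Wiener shrinkage of BM3D, in which each noisy coefficient \(\theta\) is multiplied by \(|\widehat{\theta}|^{2}/(|\widehat{\theta}|^{2}+\sigma^{2})\) with \(\sigma > 0\); the function name is hypothetical. Every gain is strictly below 1, so even strong image coefficients are attenuated.

```python
def wiener_shrink(noisy_coeffs, basic_coeffs, sigma):
    """Empirical Wiener shrinkage (sigma > 0).

    Each noisy coefficient theta is scaled by |theta_hat|^2 / (|theta_hat|^2 + sigma^2),
    where theta_hat is the matching coefficient of the basic estimate.
    """
    out = []
    for theta, theta_hat in zip(noisy_coeffs, basic_coeffs):
        gain = theta_hat ** 2 / (theta_hat ** 2 + sigma ** 2)
        out.append(gain * theta)
    return out


if __name__ == "__main__":
    # a strong coefficient (10) is still reduced to 8 when sigma = 5
    print(wiener_shrink([10.0, 3.0, -4.0], [10.0, 0.0, -4.0], 5.0))
```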

To remedy this deficiency, we use guided filtering to remove the remaining noise. Guided filtering is an edge-preserving filter that preserves as much image information as possible while denoising. Compared with other edge-preserving filters, it avoids gradient reversal artifacts and is computationally efficient. The structure of guided filtering is shown in Fig. 5. However, in image denoising only the noisy image is available as the guided image, which seriously affects the performance of guided filtering. We therefore propose an improved guided filter to obtain a better denoising effect.

Taking the gradient of both sides of (4) gives

$$\nabla q = a_{k} \nabla I \tag{28}$$

This shows that wherever the gradient of the guided image changes locally, the gradient of the output image changes at the corresponding position, and \({a}_{k}\) is the decisive factor in the output gradient of the guided filter. In noisy images, noise creates many spurious gradients. Gradient-prior denoising methods assume that the gradient (total variation) of an image is bounded, so image denoising can be cast as a total variation optimization problem30. The mathematical definition of total variation (TV) is

where \(p\) is the input image, and \(i\) and \(j\) are pixel indices. On the other hand, (6) for \({a}_{k}\) can be written as

where \({\mu }_{k}\) and \(\overline{{p }_{k}}\) are the means of the guided image and the input image respectively, which can be regarded as \(I({\mu }_{k})\) and \(p(\overline{{p }_{k}})\), i.e. terms related to the guided image and the input image. \(\left|w\right|\cdot ({\sigma }_{k}^{2}+\upepsilon )\) is a scaling factor that is constant within each window; therefore, \({a}_{k}\) can be regarded as an optimization model of the relationship between the input image \(p\) and the guided image \(I\). This optimization model can then be written as

Based on the above, we believe that noise adversely affects the relationship model in (31), abnormally increasing the gradients and reducing the output image quality. We therefore propose an improved guided filter that uses total variation to optimize the relationship in \(a_{k}\):

where \({\lambda }_{sf}\) is the smoothing factor of TV regularization term. Since guided filtering is a windowed operation, \({\lambda }_{sf}\) needs to be normalized, \({\lambda }_{sf}={\lambda }_{TV}/(size(image))\), and \({\lambda }_{TV}\) is a negative value to reduce the impact of noise in the guided image. In the improved guided filtering, the solutions of linear coefficients \({a}_{k}\) and \({b}_{k}\) are

where \({\lambda }_{sf}={\lambda }_{TV}/(size(image))\), \({\lambda }_{TV}<0\).
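The TV term and the normalized smoothing factor can be evaluated with a few lines of code. The sketch below assumes the anisotropic discrete form of TV (the sum of absolute differences between horizontally and vertically neighboring pixels), which is one common definition, and interprets size(image) as the number of pixels; both are assumptions, the function names are hypothetical, and the sketch does not implement the improved solutions for \(a_{k}\) and \(b_{k}\).

```python
def total_variation(img):
    """Anisotropic discrete TV: sum of absolute horizontal and vertical neighbor differences."""
    h, w = len(img), len(img[0])
    tv = 0.0
    for i in range(h):
        for j in range(w):
            if i + 1 < h:
                tv += abs(img[i + 1][j] - img[i][j])
            if j + 1 < w:
                tv += abs(img[i][j + 1] - img[i][j])
    return tv


def normalized_smoothing_factor(lambda_tv, img):
    """lambda_sf = lambda_TV / size(image), with size taken as the pixel count."""
    return lambda_tv / (len(img) * len(img[0]))


if __name__ == "__main__":
    step = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]
    print(total_variation(step))  # one unit jump in each of the two rows
    print(normalized_smoothing_factor(-0.1, [[0.0] * 4 for _ in range(4)]))
```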

Adaptive blind-noise image denoising

The proposed adaptive blind noise image denoising method consists of three parts: noise estimation, basic estimation and final denoising. To better understand the proposed method, we draw the flow chart, as shown in Fig. 6.

First, the noise variance is estimated by the improved PCA-based noise variance estimation method, which is required for the subsequent denoising. Then, in the basic estimation, similar blocks in the image are grouped and stacked into 3D arrays, which undergo the GHT-based domain transformation and BPDN-based threshold shrinkage to filter the noise coefficients. After block-matching domain transformation filtering, the processed coefficients are restored to a preliminary denoised image by inverse transformation and aggregation. Finally, the improved guided filter based on total variation removes the remaining noise, using the basic estimate as the input image and the noisy image as the guided image. After this second filtering stage, the denoised image is obtained.

Experimental results

To verify the performance of the proposed blind-noise image denoising method, we carry out denoising experiments on standard images from the USC-SIPI Image Database; some test images are shown in Fig. 7. Several representative denoising methods are compared with the proposed method, with PSNR as the evaluation metric. The proposed method consists of two parts, noise variance estimation and image denoising, so our experiments evaluate each part separately. All simulations are performed in MATLAB 2018b on an Intel(R) Core(TM) i7-8750H CPU @ 2.20 GHz with 16 GB RAM. The test images are corrupted by additive Gaussian noise of various levels using the MATLAB function ‘imnoise’.
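For reference, the evaluation metric can be sketched as follows. This is a minimal illustration assuming 8-bit grayscale images stored as lists of rows (peak value 255); the function names are hypothetical. The helper also assumes that the Gaussian noise variance passed to ‘imnoise’ is specified on the normalized [0, 1] intensity scale, so it is converted to a standard deviation on the [0, 255] scale.

```python
import math


def psnr(reference, test, peak=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    sq_errors = [(r - t) ** 2 for rr, tr in zip(reference, test) for r, t in zip(rr, tr)]
    mse = sum(sq_errors) / len(sq_errors)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


def imnoise_variance_to_sigma(v, peak=255.0):
    """Convert a variance on the [0, 1] scale to a standard deviation on the [0, peak] scale."""
    return peak * math.sqrt(v)


if __name__ == "__main__":
    print(psnr([[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]))  # MSE = 1
    print(imnoise_variance_to_sigma(0.01))
```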

Results of noise variance estimation

For decades, many noise variance estimation methods have been proposed. Among them, the representative ones are Donoho robustness estimation31, local image block noise variance distribution analysis32, PCA-based noise variance estimation33, Laplace-based noise variance estimation34, and noise level estimation using weak textured patches (WTPS)35, which are used for comparative experiments. The comparison results are shown in Table 3, and the following conclusions are drawn from the experimental results:

(1) Among the estimation methods compared, the PCA-based method and the proposed method perform well in various situations. Although the proposed method improves estimation accuracy, errors remain, and the estimation method needs further research.

(2) Since image gray levels are bounded, when the noise variance is very large, noisy pixel values exceed the maximum gray level and are clipped, so part of the noise does not appear in the image. Therefore, in simulation experiments the noise is sometimes not fully manifested, and this part of the noise is difficult to estimate accurately.

(3) For an image with many contours and texture details and few flat areas, when the noise level is low, multilayer wavelet decomposition does not separate the high-frequency image information from the noise well, and only the first-layer approximation is usable, so the result is the same as that of the PCA-based estimation method.

The PCA-based estimation method performs well when the noise variance is not high. To improve the efficiency of the proposed estimation method, we directly use the PCA-based estimate when it is below a threshold (derived from the robust attenuation value 0.6745, the threshold is 14.8).

Results of block-matching domain transformation filtering

In this section, we compare the denoising results of the proposed method and BM3D, including both the basic and the final estimates. The basic estimates show the performance of block-matching domain transformation filtering, and the final estimates show the performance of the Wiener filter and of the improved guided filter. For convenience, the smoothing factor \({\lambda }_{TV}\) of the improved guided filter is set to −0.1. We also compare the results obtained with the exact noise variance and with the estimated noise variance to show the impact of estimation errors. This section mainly discusses block-matching domain transformation filtering. The experimental results are shown in Table 4, and the following conclusions are drawn:

(1) The proposed method achieves better denoising performance in both the basic and the final estimates, and the final estimates indicate that the improved guided filtering effectively preserves image information while removing noise.

(2) Noise is easily confused with texture, contours and other image details, so it is difficult to remove in these regions. The improved guided filtering also inevitably damages some image information while denoising.
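The basic and final estimates compared above come from BM3D's two collaborative-filtering stages: hard thresholding of the 3-D transform of each group of matched blocks, then empirical Wiener shrinkage that uses the basic estimate as a pilot. The sketch below shows both shrinkage rules on a single group. Assumptions: a unitary 3-D FFT replaces BM3D's separable 2-D/1-D transforms (and the proposed compressive-sensing Hartley-domain filter), block matching and aggregation are omitted, and λ = 2.7 is the commonly used BM3D hard-threshold factor.

```python
import numpy as np


def hard_threshold_group(group: np.ndarray, sigma: float, lam: float = 2.7) -> np.ndarray:
    """Basic-estimate style shrinkage: zero 3-D coefficients with magnitude below lam*sigma."""
    coeffs = np.fft.fftn(group, norm="ortho")  # unitary, so noise std stays sigma
    coeffs[np.abs(coeffs) < lam * sigma] = 0.0
    # Conjugate-symmetric coefficients share a magnitude, so the result stays real.
    return np.real(np.fft.ifftn(coeffs, norm="ortho"))


def wiener_group(noisy_group: np.ndarray, pilot_group: np.ndarray,
                 sigma: float) -> np.ndarray:
    """Final-estimate style shrinkage: empirical Wiener weights from a pilot estimate."""
    noisy_c = np.fft.fftn(noisy_group, norm="ortho")
    pilot_power = np.abs(np.fft.fftn(pilot_group, norm="ortho")) ** 2
    weights = pilot_power / (pilot_power + sigma ** 2)
    return np.real(np.fft.ifftn(weights * noisy_c, norm="ortho"))


# A toy group of 8 identical 8x8 blocks (shape: blocks x rows x cols).
x = np.arange(8)
block = 128.0 + 60.0 * np.cos(2 * np.pi * x / 8)[None, :] * np.ones((8, 1))
clean = np.stack([block] * 8)
noisy = clean + 25.0 * np.random.default_rng(0).standard_normal(clean.shape)
basic = hard_threshold_group(noisy, 25.0)
final = wiener_group(noisy, basic, 25.0)
```

Because the matched blocks are similar, the group's energy concentrates in a few coefficients, and both rules remove most of the noise while keeping those coefficients.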

Parts of the denoised images are shown in Fig. 8. BM3D produces halo artifacts. The proposed method reduces these halo artifacts but over-smooths the image, an issue that deserves further research and improvement.

Results of the improved guided filtering

In this section, we compare the denoising effect of the guided filtering and the improved guided filtering. In both filters, the guidance image is the input image itself. The improved method based on total variation requires multiple iterations to reach its best result, so we show the effect of different smoothing factors in Table 5 and Fig. 9; in each row, the highest PSNR is shown in bold. The experimental results lead to the following conclusions:

(1) The improved guided filtering clearly improves denoising, even with a smoothing factor of small magnitude (\({\lambda }_{TV}\) = −0.1).

(2) In most cases, a smoothing factor that maximizes PSNR exists but is not unique: small changes in its value do not change the PSNR.

(3) The appropriate smoothing factor differs between images. Searching for it iteratively makes the improved guided filtering computationally expensive.
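For reference, the plain guided filter of He et al. with the guidance image equal to the input, as in this experiment, is sketched below, together with a PSNR-driven grid search. Assumptions: the total-variation improvement and \({\lambda }_{TV}\) are not reproduced; the guided filter's regularization `eps` is swept instead as a stand-in, to show the kind of per-image search that conclusion (3) refers to. The radius `r = 4` and the candidate values are hypothetical.

```python
import numpy as np


def box_mean(x: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1)x(2r+1) window; the window shrinks at the image borders."""
    s = np.pad(x, ((1, 0), (1, 0))).cumsum(0).cumsum(1)  # integral image
    h, w = x.shape
    i0, i1 = np.clip(np.arange(h) - r, 0, h), np.clip(np.arange(h) + r + 1, 0, h)
    j0, j1 = np.clip(np.arange(w) - r, 0, w), np.clip(np.arange(w) + r + 1, 0, w)
    total = s[i1][:, j1] - s[i0][:, j1] - s[i1][:, j0] + s[i0][:, j0]
    count = (i1 - i0)[:, None] * (j1 - j0)[None, :]
    return total / count


def guided_filter(p: np.ndarray, guide: np.ndarray, r: int = 4,
                  eps: float = 100.0) -> np.ndarray:
    """Gray-scale guided filter; with guide == p it smooths flat areas, keeps edges."""
    I, p = guide.astype(np.float64), p.astype(np.float64)
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)  # local linear model q = a * I + b
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)


def psnr(ref: np.ndarray, est: np.ndarray, peak: float = 255.0) -> float:
    mse = np.mean((ref.astype(np.float64) - est) ** 2)
    return float(10 * np.log10(peak ** 2 / mse))


def sweep_eps(noisy, clean, candidates, r=4):
    """Grid search by PSNR; ties keep the first candidate, as max() does."""
    scores = {eps: psnr(clean, guided_filter(noisy, noisy, r, eps)) for eps in candidates}
    return max(scores, key=scores.get), scores
```

As conclusion (2) notes, several neighbouring values can give nearly identical PSNR, so the search result depends on the candidate grid, and every candidate costs one full filtering pass.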

Results of the proposed method

In this section, the proposed image denoising method is compared with several representative denoising methods: NCSR11, MCWNNM36, TWSC13, quantum mechanics-based (QM-based)37 and NLH38. These methods are important in image denoising research and serve as useful references for evaluating denoising performance. The experimental results are shown in Table 6 and Fig. 10; in each row, the highest PSNR is shown in bold. The running times of the methods are listed in Table 7, where each value is the median over multiple runs.
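Reporting the median of repeated runs, as in Table 7, makes timings robust to occasional slow runs. A minimal timing helper is sketched below. Assumptions: the number of repeats, the single untimed warm-up run and the helper name `median_runtime` are illustrative choices, since the section does not state how many runs were used.

```python
import statistics
import time
from typing import Callable


def median_runtime(fn: Callable[[], object], repeats: int = 5, warmup: int = 1) -> float:
    """Median wall-clock time in seconds over several calls of fn()."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fn()  # untimed warm-up run (caches, lazy initialisation)
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    # The median ignores outliers caused by other processes or I/O stalls.
    return statistics.median(times)


print(median_runtime(lambda: sum(range(100_000)), repeats=7))
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short intervals.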

The experimental results show that, in most cases, the proposed method denoises better than the other methods and has lower computational complexity. The visual quality of a denoised image depends on both noise removal and detail preservation. Compared with the other methods, the proposed method reduces artifacts, does not cause over-smoothing, and strikes a better balance between denoising and detail preservation. However, it causes some image blurring, which is worth further study.

Conclusion

In this paper, we propose a blind-noise image denoising method consisting of noise variance estimation, block-matching domain transform filtering and improved guided filtering. First, we improve the PCA-based noise variance estimation method with multilayer wavelet decomposition to obtain a more accurate noise variance estimate. Then, building on an analysis of BM3D, we propose a compressed-sensing-based block-matching domain transform filter to reduce noise. Finally, we improve the denoising effect of the guided filtering by optimizing the relationship model between the input image and the guidance image. Experimental results show that the proposed method is competitive with many benchmark and representative image denoising methods. However, the method still has shortcomings, which we will address in future work.

Data availability

The datasets generated and/or analyzed during the current study are available in the USC-SIPI Image Database repository, https://sipi.usc.edu/database/database.php.

References

Mallat, S. A Wavelet Tour of Signal Processing (China Machine Press, 2009).

Ramchandran, K. et al. Wavelets, subband coding, and best bases. Proc. IEEE 84(4), 541–560 (1996).

Taswell, C. The what, how, and why of wavelet shrinkage denoising. Comput. Sci. Eng. 2(3), 12–19 (2000).

Donoho, D. L. & Johnstone, I. M. Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3), 425–455 (1994).

Donoho, D. L. Wedgelets: Nearly minimax estimation of edges. Ann. Stat. 27(3), 859–897 (1999).

Candès, E. J. & Donoho, D. L. New tight frames of curvelets and optimal representations of objects with piecewise C² singularities. Commun. Pure Appl. Math. 57(2), 219–266 (2004).

Le Pennec, E. & Mallat, S. Bandelet representations for image compression. In International Conference on Image Processing. IEEE (2001).

Unser, M. & Aldroubi, A. Shift-orthogonal wavelet bases using splines. IEEE Signal Process. Lett. 3(3), 85–88 (2002).

Dabov, K. et al. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2082–2094 (2007).

Zhang, L. et al. Two-stage image denoising by principal component analysis with local pixel grouping. Pattern Recogn. 43(4), 1531–1549 (2010).

Dong, W., Zhang, L. & Shi, G. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 22(4), 1620–1630 (2013).

Zoran, D. & Weiss, Y. From learning models of natural image patches to whole image restoration. In International Conference on Computer Vision 479–486 (2011).

Xu, J., Zhang, L. & Zhang, D. A trilateral weighted sparse coding scheme for real-world image denoising. In European Conference on Computer Vision (2018).

Zhong, H., Ma, K. & Zhou, Y. Modified BM3D algorithm for image denoising using nonlocal centralization prior. Signal Process. 106, 342–347 (2015).

Feng, Q. et al. BM3D-GT&AD: An improved BM3D denoising algorithm based on Gaussian threshold and angular distance. IET Image Process. 14(3), 431–441 (2019).

Wen, Y. et al. Hybrid BM3D and PDE filtering for non-parametric single image denoising. Signal Process. 11, 108049 (2021).

Danielyan, A., Katkovnik, V. & Egiazarian, K. BM3D frames and variational image deblurring. IEEE Trans. Image Process. 21(4), 1715–1728 (2012).

Abubakar, A. et al. A block-matching and 3D filtering algorithm for Gaussian noise in DoFP polarization images. IEEE Sens. J. 18(18), 7429–7435 (2018).

Huang, S. et al. Image speckle noise denoising by a multi-layer fusion enhancement method based on block matching and 3D filtering. Imaging Sci. J. 67(3–4), 224–235 (2020).

Donoho, D. L. Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006).

Candès, E. J., Romberg, J. & Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006).

Chen, S. S., Donoho, D. L. & Saunders, M. A. Atomic decomposition by basis pursuit. SIAM Rev. 43(1), 129–159 (2001).

He, K., Sun, J. & Tang, X. Guided image filtering. In European Conference on Computer Vision (Berlin, Heidelberg, 2010).

Zhao, W. et al. Texture variation adaptive image denoising with nonlocal PCA. IEEE Trans. Image Process. 28(11), 5537–5551 (2019).

Pyatykh, S., Hesser, J. & Zheng, L. Image noise level estimation by principal component analysis. IEEE Trans. Image Process. 22(2), 687–699 (2013).

Abramov, S. K. et al. Segmentation-based method for blind evaluation of noise variance in images. J. Appl. Remote Sens. https://doi.org/10.1117/1.2977788 (2008).

Uss, M. et al. Image informative maps for estimating noise standard deviation and texture parameters. EURASIP J. Adv. Signal Process. 2011(1), 1–12 (2011).

Candès, E. J. The restricted isometry property and its implications for compressed sensing. Comptes Rendus Math. 346(9–10), 589–592 (2008).

Baraniuk, R. G. Compressive sensing. IEEE Signal Process. Mag. 24(4), 118–121 (2007).

Dey, N. et al. Richardson-Lucy algorithm with total variation regularization for 3D confocal microscope deconvolution. Microsc. Res. Tech. 69(4), 260–266 (2006).

Donoho, D. L. De-noising by soft-thresholding. IEEE Trans. Inf. Theory 41(3), 613–627 (1995).

Fang, Z. & Yi, X. A novel natural image noise level estimation based on flat patches and local statistics. Multimed. Tools Appl. 78(13), 1–22 (2019).

Pyatykh, S., Hesser, J. & Zheng, L. Image noise level estimation by principal component analysis. IEEE Trans. Image Process. 22(2), 687–699 (2013).

Immerkær, J. Fast noise variance estimation. Comput. Vis. Image Underst. 64(2), 300–302 (1996).

Liu, X., Tanaka, M. & Okutomi, M. Noise level estimation using weak textured patches of a single noisy image. In IEEE International Conference on Image Processing. IEEE (2013).

Xu, J., Zhang, L., Zhang, D. et al. Multi-channel weighted nuclear norm minimization for real color image denoising. In IEEE International Conference on Computer Vision (ICCV) (2017).

Dutta, S., Basarab, A., Georgeot, B. & Kouamé, D. Quantum mechanics-based signal and image representation: Application to denoising. IEEE Open J. Signal Process. 2, 190–206 (2021).

Hou, Y. et al. NLH: A blind pixel-level non-local method for real-world image denoising. IEEE Trans. Image Process. 29, 5121–5135 (2020).

Acknowledgements

This work was supported by National Natural Science Foundation of China (No.61976124).

Author information

Contributions

H.J. wrote the main manuscript text; Q.Y. and M.L. gave advice. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jia, H., Yin, Q. & Lu, M. Blind-noise image denoising with block-matching domain transformation filtering and improved guided filtering. Sci Rep 12, 16195 (2022). https://doi.org/10.1038/s41598-022-20578-w

DOI: https://doi.org/10.1038/s41598-022-20578-w
