Abstract

Phishing is an attack characterized by attempted fraud against users. The attacker develops a malicious page that is a trusted environment, inducing its victims to submit sensitive data. There are several platforms, such as PhishTank and OpenPhish, that maintain databases on malicious pages to support anti-phishing solutions, such as, for example, block lists and machine learning. A problem with this scenario is that many of these databases are disorganized, inconsistent, and have some limitations regarding integrity and balance. In addition, because phishing is so volatile, considerable effort is put into preserving temporal information from each malicious page. To contribute, this article built a phishing database with consistent and balanced data, temporal information, and a significant number of occurrences, totaling 942,471 records over the 5 years between 2016 and 2021. Of these records, 135,542 preserve the page’s source code, 258,416 have the attack target brand detected, 70,597 have the hosting service identified, and 15,008 have the shortener service discovered. Additionally, 123,285 records store WHOIS information of the domain registered in 2021. The data is available on the website https://piracema.io/repository.

Similar content being viewed by others

Background and summary

Phishing is a type of social engineering attack where the attacker develops a fake page that presents itself as a trusted environment, inducing its victims to submit sensitive data, such as, for example, access credentials to a certain genuine service1. The word “phishing” first emerged in the year 1996, when criminals stole passwords from American Online (AOL) users2. When analyzing the timeline of phishing attacks, one can see an evolution from generic fraud attempts without defined targets to exploitation based on trends, facts, and opportunities. In other words, attackers have realized that the more valuable the target, the more resources (mostly money) they raise in an attack campaign3.

According to Kaspersky report4, in 2019, attacks of this type caused monetary losses close to 1.7 billion dollars. In 2020, the number of phishing attacks doubled during the first month of the quarantine5, reflecting the need to digitize companies and the mass migration of small businesses to e-commerce at the time. In 2021, the number of attacks continued to increase, with a 50% increase in attacks over the previous year6.

In the fight against phishing attacks, numerous solutions have been proposed3,7,8. Currently, those that adopt machine learning techniques (Machine Learning) have grown in number and importance9,10,11. However, a noticeable problem in machine learning solutions in many areas is a need (dependence) on datasets to train and test. For example, Tang et al.12 employ an old (built in 2012) and unbalanced dataset, called Drebin, to detect security vulnerabilities in Android applications. Similarly, Qi et al.13 proposed a novel privacy-aware data fusion and prediction approach for the smart city industrial environment tested in a dataset built in 2012. Already Ma et al.14 create a dataset to identify cybersecurity entities in unstructured text. However, how collected the data and why were chosen are not described. These aspects difficult the reproducibility in other works.

According to Allix et al.15, machine learning models learn from the input data, and the impact of their performance (i.e., predictive capacity) is related to the datasets (set data) used for training. Therefore, an “adequated” dataset must-have features such as complete data, actuality, and diversity. Li et al.16 state that reduced datasets (manually verified) are generally used to evaluate anti-phishing mechanisms. This is due to the various inconsistencies that can appear in large repositories, such as PhishTank, which does not have a cleaning mechanism to remove invalid and offline URLs, resulting in an incorrect database.

The problem is how to create this “adequated” dataset? A dataset is defined by an expert analyst who designs more specific structured information. These informations are from a repository values the volume of evidence, that is, with a more general purpose, thus enabling the building of distinct datasets from the same repository.

Analyzing many of the phishing datasets available (someones discussed in related work section), it notes that almost then obtained their data from phishing platforms like PhishTank, OpenPhish, and PhishStats. Although academic and commercially recognized, these platforms present problems such as, for example, data disorganization (lack of format), data inconsistency, and lacking information (null or absent values). For these reasons, before building a dataset, it is necessary to pre-treat the database information to ensure that the analysis results are consistent and unbiased. To address this problem, this article presents a public phishing database, organized and consistent, to help studies that need to build a dataset. The base was used previously in the study by Silva et al.17,18, analyzing static and dynamic aspects of phishing, which justifies its relevance for data reuse. By storing information such as WHOIS and page content, the base includes details regarding temporal aspects, i.e., phishing behaviors that can be loosed over time due to their volatile nature, which is why this base receives the term snapshot. The database records phishing incidents from 2016 to 2021, totaling 942,471 records.

Related works

Works like Roy et al.19, Shantanu et al.20, Al-Ahmadi et al.21, Alkawaz et al.22, Al-Ahmadi23, Orunsolu et al.24, and others, propose new methods of detecting phishing pages using machine learning methods (Random Forest—RF, Recurrent Neural Networks—RNN, Support Vector Machine—SVM, K-Nearest Neighbours—KNN, and Multilayer Perceptron—MLP). Although they use different classifiers, these works tipically train your models in your own datasets created from PhishTank, OpenPhish, and other platforms.

The problem with these works is the lack of information about the process and steps of building these datasets. The same can be said when comparisons are made between these datasets and pages of the repositories. In addition to the lack of information regarding the construction, which makes it impossible to reproduce the datasets, the non-disclosure of the instances (samples) collected and used also makes a more realistic comparison impossible. For example, is fair compar a dataset built with phishing pages released in 2022 with existing pages in a repository released in 2012? Are there the same characteristics in both of the data?

Based on the above, it is possible to observe that these studies needed to create or seek a knowledge base to evaluate their proposals. The purpose of Piracema is to offer a repository with a volume of information capable of showing patterns in phishing attacks. We believe that many researchers, who aim to mitigate phishing attacks, will have well-structured, information-rich, and integrated data to build their datasets for their intelligent solutions. Given this, we believe that our proposal is an excellent contribution to the Open Source Intelligence (OSINT) scenario for the academic community that wants to combat Web fraud.

Methods

The database defined by this study, called Piracema, contains records of fraudulent pages extracted from 3 whistleblowing platforms that make their records available for free: PhishTank (https://phishtank.org/), OpenPhish (https://openphish.com/), and PhishStats (https://phishstats.info/). We note that each page on these platforms was reported by the community, analyzed, and received a verdict, judging whether it is phishing or a legitimate page. In addition, the pages in Piracema, dating from 2016 to 2021, were collected and organized by the reported year. Figure 1 illustrates the extraction process in each repository as well as the average number of records collected each period.

The building process of Piracema is detailed in the following sections: Data Source, where phishing reporting platforms are explained, from the suspicion of fraud to its confirmation. Data extraction, which aims to describe the data collection process on the whistleblower platforms. Moving on to Data Treatment, the improvements applied to the data will be exposed to maintain the base with consistent information. In Features are defined the extracted features from the data, their collection processes, improvements, and statistical data. Subfeatures exemplifies some implicitly features on the base, the result of a decomposition of the features presented in the previous section. Finally, Threats aims to present the found obstacles during the previous steps or possible limitations for the data use contained in the database.

Data source

There are several platforms available that serve as support for phishing detection. In this study we used PhishTank, OpenPhish, and PhishStats, due to the greater diversity of records, availability and processed data.

PhishTank is a free community platform where anyone can submit, verify, track and share phishing data (https://bit.ly/39qG5bj). It also provides an open API to share your anti-phishing data with free third-party applications. It is important to point out that the PhishTank team does not consider the platform as a protective measure (https://bit.ly/3HpuYw0). For them, PhishTank information serve a subsidy for incident response mechanisms in various organizations (https://bit.ly/3MKJHCt), such as Yahoo!, McAfee, APWG, Mozilla, Kaspersky, Opera and Avira.

PhishTank is described as a community because it supports a large number of users who collaborate on phishing data on the Web. Its collaborative nature refers to the fact that all registered users have the possibility to feed the phishing database through voluntary reports.

Regarding confirmation, PhishTank allows a user to submit a suspicious URL and for other users to carry out a voting system to determine the verdict on the report, that is, to consider the phishing as valid or invalid. As for availability, the platform observes whether the phishing is online or offline. It is important to note that unavailable phishing means that the request returned HTTP code 400 or 500, that is, inaccessible, assuming the status “offline”. The lifecycle between phishing, the platform and its users is divided into 5 stages as illustrated in Fig. 2.

In step 1, the attacker publishes their malicious page on a web server, made available for propagation across the web. Step 2 forwards the discovery of the malicious URL by a user. Subsequently, they access PhishTank and report the URL, thus performing step 3. Step 4 describes the moment when the platform waits for community votes on the newly reported URL. Finally, step 5 occurs when the voting system receives a satisfactory amount to consider the URL malicious or not. It is worth noting that the “sufficient” amount of votes is not explained, the platform declares that it may vary according to the history of complaints (https://bit.ly/3tXnCub). In addition to this, due to its high dependence on the community, there is a delay on the part of the community regarding the confirmation of the reported complaint, between steps 3 and 5 (the difference between confirmation time and submission time), resulting in a temporal vulnerability window.

It is worth mentioning that the same process occurs analogously on the platforms OpenPhish and PhishStats.

Data extraction

The records extraction, which was carried out by Silva et al.18 in their work, started in August 2018 and ended in January 2019, with data from 2016 to 2018. Records from 2019 to 2021 were obtained between 2020 and 2022, completing this database.

Some platforms, such as PhishTank, establish a voting criterion made by the community to confirm the reports they receive; in this way, it is possible to avoid the occurrence of false positives. However, as these platforms do not define a deadline for the voting verdict, it was necessary to adopt an interval margin for the extraction process. The collection was carried out following the metric of 1 month before the current month, i.e., the pool of January was closed until the last day of February. The unique requirement was the records had the submission date belonging to January. The extraction process followed successively for the other months.

To build Piracema, it was necessary to obtain a significant amount of phishing categorized as “valid”, whether online or offline. Taking PhishTank as an example, the platform provides a web service that provides a JSON file (https://bit.ly/3OfqTwi). It is updated every 1 h, containing approximately 15,000 records. In addition to the URL, status, confirmation, and publication date, the confirmation date and target are also available. The confirmation date refers to when the verdict appears (phishing or legitimate) for a URL. Since several organizations make simultaneous requests to the API, a system is adopted to avoid overloading the platform’s servers. Each request to the file is made with a key to identify the user. Then, this key is informed in the HTTP header with the limits and intervals of requests to be performed periodically (https://bit.ly/3aXnvrR). Currently, the platform does not provide registration for new keys.

However, the process had some obstacles. About 90% of the URLs were kept in the other subsequent files. Considering that each compressed JSON had 9MB, it overloaded the platform, giving a 509 bandwidth limit that exceeded errors, indicating that the key in the request had been banned. To circumvent this problem, previous registrations of several keys were performed to be replaced each time a key was banned, as shown in the flowchart on the left in Fig. 3.

Despite being rich in information, JSON has many limitations. One of these limitations is the option, on the part of the entity issuing the file, to keep only “valid” and “online” phishing records, thus disregarding temporal aspects that may impact the data. For example, this impact can be seen in the file downloaded on January 15, 2019, where the months of January and February 2018 had 358 and 617 records. However, the same file has more recent months, such as November and December of the same year, with 1524 and 1791 entries.

As an option to circumvent the limitations imposed by the JSON file, the platform offers the phish archive function (https://bit.ly/3Hqb2c7). Unfortunately, this functionality only allows the observation of information and not its download for storage and application in other activities. Therefore, several requests were made for access to the platform’s registry collection, but all attempts ended with no response from the PhishTank team. Therefore, with the lack of response from the platform, it was decided to develop a Web Crawler capable of collecting and storing the information available in the phish archive function. This process can be seen in Fig. 3.

On the other hand, JSON has a lot of useful information. For instanece, the “valid records” option allows to keep only phishing and “online” records, thus disregarding the temporal aspects that can impact data. This impact can be seen, for example, in the file downloaded on 01/15/2019, where the months of January and February 2018 had 358 and 617 records. However, the same file has more recent months, such as December of the same year, with 1524 and 1791 records for November.

As a service option provided as defined by the JSON file, a platform with phish file function (https://phishtank.org/developer_info.php). This feature of viewing, chronologically and through filters, of all URLs reported on the platform, that is, you can observe the platform records over time, still filtering as occurrences based on their validation status, “valid” or “invalid”, or by their availability of access, “online” or “offline”. This functionality only allows the observation of information, not its download for storage and application in other activities. Therefore, several requests for access to the platform collection were broadcast, but no attempt got response by PhishTank team. Thus, with the lack of response from the platform, it was decided to develop a Web Crawler capable of collecting and storing the information available in the phish archive function. This process can be seen in Fig. 3.

More efficient than JSON files, the data collection system through the Web crawler also faces some difficulties, such as the record list page, which limits the display of the long URL to the first 70 characters, abstracting the rest. Of the address and replacing it with “...”. As a workaround for this limitation, it was necessary to access the record’s detail page, where the web crawler could find the full URL version. At this point, it is essential to highlight that some entries were inaccessible on the details page, so, as a slight anomaly confined to several entries, it was decided to discard this data.

With the application of the web page collection mechanism, it was possible to extract the following information: record ID, URL, submission time, verification time (“valid” or “invalid”), and its availability (online or offline). This way, 942,471 records were obtained, spread over 6 years, from 2016 to 2021.

Data processing

The proposed consistent basis for its data refinement processes includes removing duplicates, removing false positives, and correcting inappropriate data. In this way, a more balanced basis is possible. During this process, as illustrated in Fig. 4, a refinement was obtained in the registered records, removing pages with an invalid host, motivated by the use of URLs with invalid syntax. The figure of the data performed, since information after an example cannot be previously or non-process obtained from the request, since information after a model cannot be obtained from the requirement of the process page has a registered domain, among other situations.

An analysis of the potential brands present in the sample was carried out to investigate the brands involved in the process. The PhishTank JSON has a “target” field. However, it was possible to observe that it has several records assigned as “Other”. In addition, it was also found that many values in this field did not match the target brand in question. In addition, the solution was to extract the marks involved through textual and visual search. Based on Table 10, it was possible to observe the most exploited brands in phishing attacks between the years 2016 to 2021.

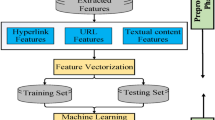

Features

For the Piracema composition, each piece of information collected underwent individual pre-processing, which varied according to the nature of the data. In this process, several manipulations were performed, providing a refinement of the worked features. The description, relevance, and improvement applied to each feature are described in the following items:

-

URL: This is the URL of the fraudulent page. This feature is the starting point for the other. We suggest the idea of analyzing the sub-features arising from it, such as the most explored TLD, URL size, number of subdomains, domain size, URL path analysis, if the port is different from 80, if the IP is exposed or not, among others, according to the anatomy illustrated in Fig. 5. This data was extracted from phishing repositories and invalid, duplicate, and notoriously false positive URLs were removed.

-

Report Time: This field is the date the phishing was confirmed as a fraud by the repository. According to the time of year, it is possible to notice an increase in the number of cases of phishing attacks, motivated, for example, by the approaching a commemorative date, such as Christmas, or even promotional events, such as Black Friday, or even the pandemic caused by the Coronavirus. From the phishing Report Time, it is possible to analyze the seasonality and volatility of attacks (assuming that the date in question begins the phishing period). This information could be extracted from phishing repositories, where it did not need treatment, as illustrated in Fig. 6.

-

Status Code: is the HTTP code of the page, which allows us to know which pages have a code of the family 200, 300, 400, or 500, with the response code 400 being the most present among the records since phishing pages tend to be highly volatile, with a very small period of activity. The extraction of this feature was performed via a Python script and did not need any specific treatment. The codes most found during the analysis of fraudulent pages are described in Tables 1 and 2, where the Status Code number is available, followed by the number of occurrences each year (2016-2021), the total sum of occurrences over the analyzed period and finally the percentage that this value represents about the total records. For more easily, this information can also be visualized in Fig. 7 and the Tables 1 and 2.

-

Response content: As illustrated in Fig. 8, this field refers to the content of the page body (HTML, CSS and JS source code). The source code can help in analyzing the behavior of a malicious page, resulting in subfeatures, as it happens when analyzing the URL. In case the content of the source code is significant, you can analyze features such as cross-domain forwarding and clickjacking attempts and fake user errors25. This last feature is present in attacks aimed at mobile devices, where the fraud is developed according to the devices resolution and if the user tries to open the link through a desktop browser, an HTTP error is displayed that was simulated by the attacker18.

-

Hosting service: Defines whether the page in question is hosted on a hosting service. This kind of information is important because pages of this nature do not have a registered domain, therefore, it will not be possible to detect their age through WHOIS. In addition, it is possible to analyze the hosting services most exploited for crime. This type of service can generate a series of benefits for the attacker, enhancing the publication of fraud. We can cite as an example 000webhost, which offers free and easy-to-use hosting, reason that leads it to be among the most used hosting services in phishing attacks, as seen in Fig. 9 and in more detail in Table 3. It can also be seen in Table 3 the presence of hostings with the name of Google services and Microsoft services; these items refer to services made available by Google, such as: blogspot.be, blogspot.com, docs.google.com, drive.google.com, firebaseapp.com, forms.gle, googleapis.com, sites.google.com and web.app. The term Microsoft services is the grouping of services as: myspace, office.com, onedrive.live.com and sharepoint.com. Therefore, all these services were considered as a single item associated with the company to which it belongs. The data was extracted via Python script, with NLP and Regex resources. Prior to the extraction, APWG reports were consulted to identify the most exploited hosting services in phishing attacks between 2016 and 2021. Next, the data were used as a knowledge base to carry out the detection.

-

Target brand: It is the target brand identified in the fraud; its identification can be an important premise in preventing the phishing attack, especially in targeted attacks, which are usually very sensitive to aspects of the visual identity of a particular brand. It is possible to consider that certain elements offer greater trustworthiness to the attack, increasing its effectiveness. Given this, through social engineering, the attacker observes visual aspects of the content, context and URL of the page. The whole motivation for this effort, on the part of the attacker, is to create a greater susceptibility of the end user to phishing attacks18. It was possible to extract this feature using a Python script, supported by NLP and Regex. Before starting to extract the feature, we used APWG reports, identifying the most exploited brands in phishing attacks between 2016 and 2021. Then, we created a list with these marks, using as a base of previous knowledge for the detection algorithm. To start the brand detection, the hosting service detection was previously performed, that is, if the page in question was hosted on a hosting service. For example, in the link https://sites.google.com/s/paypal-secure-access, as it was previously detected that it is a google hosting service, the detection mechanism discards the keyword check on the domain, focusing only on the subdomain and URL path, avoiding false positives in relation to the target brand. The most common marks in phishing attacks are illustrated in Fig. 10 and have their detailed information in the Table 4.

-

Shortener Service: Defines if the page has the use of a URL shortener service, where a website has its URL converted into a short URL code. This type of service is widely used in an attempt to hide features of the fraudulent URL, leading the end user to access the page in question, since it is not possible to analyze in advance aspects of the URL such as the domain name. The most commonly used URL shortening services in phishing attacks are shown in Fig. 11 and detailed in Table 5. The data was extracted via Python script, using NLP and Regex techniques. A survey was done previously, looking for the most used shorteners on the web, followed by APWG reports to filter the most exploited shorteners in phishing attacks between 2016 and 2021. In order to cover as many records as possible, services with lesser popularity were added, searching for domains with a length of less than 5 characters, as well as domains whose host and domain were the same and there was no subdomain. Finally, we used all occurrences as a knowledge base to carry out the detection.

-

WHOIS: It is the creation date of the domain, important to know the age of the domain that was registered; this information is useful for analyzing phishing volatility patterns. It was extracted via a python script, looking only for records where there is no page on hosting services, thus avoiding data coming from a domain registered by the owner of the hosting service and not something registered by the malicious person for their fraudulent page.

Subfeatures

From the analysis of the features presented in the base, it is possible to observe a significant variety of secondary features, such as domain size and number of subdomains, which can be extracted from the URLs, or the time of activity of the fraudulent pages, derived from the relationship between the date of registration of the domain and the date of its identification. Such features, created from the decomposition of larger features, are called subfeatures. These features can be extremely valuable for application in ML models, since they can help in the detection of behavioral patterns, capable of identifying the perversity of fraudulent pages.

Additionally, it is possible to link these subfeatures to certain phishing behaviors, whose some aspects are debated in the literature, such as spread and volatility. Based on studies by Silva et al.17,18, see the nomenclatures and definitions adopted by this study to explain the behaviors commonly observed in phishing:

-

Trustworthiness, describes the high richness of fraud details compared to the genuine page. In view of this, the attacker extracts profiles of each target involved, which translate into a set of behaviors that serve as a subsidy for the elaboration of the malicious page26. In theory, the higher the quality of the profile, the greater the trustworthiness. Also, the attacker can also carry out other activities, such as registering or hijacking a domain, in order to assign arbitrary combinations through keywords. This behavior is also sensitive in the detection of brands involved in the process, distinguishing conventional phishing from targeted phishing18.

-

Obfuscation, which describes the fraudster’s attempts to hide information that could be visible to the end user, but due to high or low amount of characters, some details may not be observed. It is not uncommon for malicious actors to apply techniques that forge behavior through JavaScript, simulating errors or restrictions in order to target their attacks to a particular region or device.

-

Propagation, which describes some behaviors that aim to increase the reach of frauds on a large number of users, such as bypassing techniques in blocking lists. In the same vein, the exploitation of services on the Web, such as hosting and domain registration services, end up driving the spread of fraud.

-

Seasonality, which describes the sensitivity of phishing to annual calendar events. Interestingly, in Fig. 19, about the Data Records section, an apparently stable pattern of occurrences between the months of August and October is noticeable, as well as a peak pattern in the months of November in the last 6 years. On the other hand, in this same annual window, it is possible to observe outliers, one higher case in July 2020 and two lower cases in the same month in 2016 and 2018, such facts can be justified by some seasonal event. It is important to analyze these outliers, as data that differs drastically from all others can cause anomalies in systems that analyze patterns of behavior.

-

Volatility, which refers to the short lifespan, showing that the fraud is quickly abandoned by its creator. Volatility can be an obstacle in the studies of phishing behavior, since much evidence of fraud can be analyzed in the source code, a resource of which ends up being available for a very imminent time.

In Tables 6 and 7, some subfeatures are named and briefly defined, followed by the behaviors to which they can be related, the type of the variable and from which main feature they can be collected. These subfeatures are implicitly at the base, explained in this section because they came from our analysis, which made it possible to glimpse them. But the number of possible subfeatures is not restricted to the ones mentioned above, since analyzes by other authors may result in new subfeatures.

Threats

This section describes the threats and barriers to be considered by the study.

Regarding the sample collection process, it was not uncommon to find duplicate occurrences between the 3 reporting platforms, so the final amount of fraudulent pages to be analyzed dropped significantly. Although 8 features considered relevant were gathered, a significantly large amount for a single database, some important features may have been left out.

When capturing the Report Time of a phishing page, there may be cases where the phishing activity did not necessarily start in the informed period (the same may have acted much earlier, as there may be a delay in the community report).

Since the extractions started between 2019 and 2020, when we gathered the Status Code of the pages, the predecessor years end by having fewer records showing code 200 errors or similar, due to the volatility of phishing. To do so, the process was extracted via a python script, then content that offered an error page from the hosting service was removed, where the page had been removed and only a standard redirect warning was displayed. The entire process was supported by the Status Code information, as can be seen in Table 8. Similarly, the Response feature ends up being affected by the volatility of phishing, so that in recent years, the number of pages with the source code ends up being higher.

Hosting services and URL shorteners most used in attacks were detected. However, there may be other services that are not on our prior knowledge list that could be used to host malicious pages. The high volatility of the phishing scenario causes hosting service maintainers to have a considerable delay in identifying the use of their services for fraudulent purposes (that is, when they do), causing the malicious user to keep migrating from service each time it is banned. Such a delay can also end up making the conduct policy of these services unfeasible, motivating fraudsters to increasingly explore a particular service. Similarly, the target brand is also detected by a list of prior knowledge generated from the latest reports from the APWG, then the detection engine may experience the same overfitting mentioned earlier.

Finally, in the WHOIS capture process, the limitation refers to the fact that the information base of domain registrars is is limited to .COM, .NET, .EDU. Another point worth mentioning are the cases of phishing attacks that hijack legitimate domains, such as cases when a malicious person manages to inject a malicious page through exploitation via upload on a legitimate domain with a registered domain. In these cases, for the most part, they were removed by the refinement process because they were assumed to be false positives, considering that, once the server maintainer removes the malicious file, your domain will no longer be dangerous.

However, in some situations, such as the case of overfitting due to absence from the previous list, the WHOIS may end up resulting in a very old activity date (because it is a hijacked legitimate domain), which would bias the results, however, we believe that there are few cases of this nature, since we performed a screening in search of significant outliers.

Data records

This section describes the contents present in each database file. As shown in Table 9, the base keeps files divided by year, individually, with phishing page data from 2016 to 2021, followed by the number of occurrences for each year. In Table 10 it is possible to observe the extracted features and their descriptions.

Technical validation

The main contributions proposed by the Piracema database are highlighted in Table 11, including comparisons of some features present in the PhishTank, OpenPhish, and PhishStats repositories. Note that some items in Table are marked with “*” to represent some reservations about how the content is obtained or presented in the base to which it is related.

Initially, the platforms have a common way of obtaining new records, where PhishTank and PhishStats allow users to submit and view phishing URLs that are updated daily on the websites. In OpenPhish, the submission of new complaints is via e-mail. Piracema does not allow the community to report malicious URL because its registry base essentially comes from other platforms. However, we do intend to implement this functionality in the future.

Another point is the number of features and aspects analyzed in each platform. For example, PhishTank and PhishStats have a “date” field that tells you the date and time when phishing occurred. However, OpenPhish has the “time” field that provides only the time of the occurrence, which leads to the assumption that the day of the occurrence would be the date the URL was published on the platform. As a differential, Piracema offers additional information compared to other databases, such as page_content, whois_creation_time, and other fields exposed in the “Data offered” column of the Table 11.

Regarding the pre-processing carried out in the repositories, PhishTank is the only one that performs some data analysis. For example, it describes which pages are “online” or “offline”, as well as pages that have been confirmed as threatening or benign. The problem is that, due to the volatility of phishing, it is not possible to track this information in real-time. So it’s not uncommon to find pages marked “online” but no longer available.

Another problem, specific to PhishTank, is the inconsistency in cataloging the target of phishing attacks, where the “target” field had a generic value (“Other”) or a tag that was not the true target of the attack. For these cases, our proposal circumvented the situation through NLP techniques, making the field reliable and consistent. More details on the applied NLP technique are available in Silva et al.18 study.

Duplicate records were also found, whether caused by the same URL in different repositories or even two or more URLs registered in the same repository. For this reason, during the construction of the Piracema database, care was taken to identify these duplicates and remove them from the data through an analysis based on hash collision.

Finally, the Table 11 also exposes the presence or absence of an API to query the platform records. It is possible to observe that the OpenPhish or PhishStats platforms do not have this feature, at least for free and without limitations. In PhishTank, there is only a limitation on the limit of bandwidth per api_key. The Piracema platform has an API to check records, including to detect whether a given URL is malicious or not, through a classification model based on machine learning. Queries can be carried out on the website itself or through an extension for Google Chrome and Mozilla Firefox. More details about the classification model can be found in Silva et al.18 study.

From the phishing behaviors that are described in the work by Silva et al.18 and observing the data and observing the data and its structure described in the Tables 9 and 10, it is possible to analyze aspects of phishing such as:

-

Trustworthiness: Textual and visual identity of a brand. Examples: logos, template and keywords.

-

Obfuscation: Concealing details or subterfuge of information. Examples: behavior simulations via JavaScript.

-

Propagation: Multiplicity and cloning. Examples: (content hash collision, variables that modify the URL to bypass, hosting and more exploited tld (among other information that can be extracted from the URL).

-

Seasonality: Calendar events. Examples: planned events, celebrations, emergency situations.

-

Volatility: Reputation based on Lifecycle. Examples: analysis of activity via the WHOIS protocol (via the URL).

As for the static aspects, such as the URL, hosting service, target brand, among others, such information provides a relevant data set for the detection of targeted phishing, that is, with high trustworthiness. Another point is the sample diversity present in the database, which favors support for studies aimed at proposals for new solutions for phishing prediction based on static behaviors, as in the study by Silva et al.17, which did logistic regression to observe behavior patterns in the URL.

Regarding the dynamic aspects, although only 14.38% (135,542) of the records provide the source code of the page, it is still a satisfactory amount for researchers to observe patterns of dynamic behaviors such as homographic attempts, explorations by seasonality and behavior techniques forged. In addition, the data obtained by WHOIS present dynamic features of phishing related to the time it has been in operation, according to a study by Silva et al.18 who did logistic regression to look at patterns of phishing lifecycle behavior.

A partir das contribuições mencionadas acima, ainda é possível afirmar que apesar da base Piracema ser uma abordagem diferente e apresentar informações adicionais sobre o conteúdo das páginas maliciosas, a mesma não invalida a existência de outros repositórios, uma vez que o Piracema é constituído a partir de ocorrências das outras 3 fontes de registros phishing mencionadas neste trabalho. Dito isso, a base de dados proposta será atualizada futuramente e irá recorrer às mesmas plataformas apresentadas e, possivelmente outras, e dará continuidade aos mesmos aprimoramentos apresentados e novos que possam surgir. Dessa forma, a proposta se mostra relevante para a literatura por ser uma opção rica em pré-processamento de informações, podendo servir diretamente como auxílio para propostas que visam construir datasets com intuito de mitigar ataques de phishing, como é o caso da pesquisa de Orunsolu et al.24, que analisa as features de ocorrências originadas do PhishTank para dar suporte ao seu modelo preditivo, bem como os trabalhos de Tang et al.28, He et al.29, Qi et al.30 e Ma et al.31, que utilizam técnicas de machine learning para aprimorar a detecção de ameaças virtuais.

Code availability

All information about the phishing records present in the base is available on our website (https://piracema.io/repository). On the website, it is also possible to navigate between records, observing fraud elements such as URL, registration time and other information in a more visual and interactive way. All files with content extracted from malicious pages are available for download from https://bit.ly/piracema-raw and currently, the files are kept in Zip file format. ***The password for zip file: ScientificReports2022@#$***.

References

Mohammad, R. M., Thabtah, F. & McCluskey, L. Tutorial and critical analysis of phishing websites methods. Comput. Sci. Rev. 17, 1–24 (2015).

Watson, D., Holz, T. & Mueller, S. Know your enemy: Phishing. In Know your Enemy: Phishing, https://honeynet.onofri.org/papers/phishing/ (2005).

AlEroud, A. & Zhou, L. Phishing environments, techniques, and countermeasures: A survey. Comput. Secur. 68, 160–197 (2017).

Kaspersky. How to deal with bec attacks. Kaspersky oficial blog, https://www.kaspersky.com.br/blog/what-is-bec-attack/14811/ (2020).

Kaspersky. Coronavirus-related cyberattacks surge in brazil. Kaspersky oficial blog, https://www.zdnet.com/article/coronavirus-related-cyberattacks-surge-in-brazil/ (2020).

Apps, S. C. Cyberattacks 2021: Phishing, ransomware & data breach statistics from the last year. In Cyberattacks 2021: Phishing, Ransomware & Data Breach Statistics From the Last Year, https://spanning.com/blog/cyberattacks-2021-phishing-ransomware-data-breach-statistics/ (2022).

Vayansky, I. & Kumar, S. Phishing—Challenges and solutions. Comput. Fraud Secur. 2018, 15–20 (2018).

Sheng, S. et al. An empirical analysis of phishing blacklists. In CEAS 2009—Sixth Conference on Email and Anti-Spam (2009).

Nguyen, L. A. T., To, B. L., Nguyen, H. K. & Nguyen, M. H. A novel approach for phishing detection using url-based heuristic. In 2014 International Conference on Computing, Management and Telecommunications (ComManTel), 298–303 (IEEE, 2014).

Carta, S., Fenu, G., Recupero, D. R. & Saia, R. Fraud detection for e-commerce transactions by employing a prudential multiple consensus model. J. Inf. Secur. Appl. 46, 13–22 (2019).

Marchal, S. et al. Off-the-hook: An efficient and usable client-side phishing prevention application. IEEE Trans. Comput. 66, 1717–1733 (2017).

Tang, J., Li, R., Wang, K., Gu, X. & Xu, Z. A novel hybrid method to analyze security vulnerabilities in android applications. Tsinghua Sci. Technol. 25, 589–603. https://doi.org/10.26599/TST.2019.9010067 (2020).

Qi, L. et al. Privacy-aware data fusion and prediction with spatial–temporal context for smart city industrial environment. IEEE Trans. Ind. Inform. 17, 4159–4167. https://doi.org/10.1109/TII.2020.3012157 (2021).

Ma, P., Jiang, B., Lu, Z., Li, N. & Jiang, Z. Cybersecurity named entity recognition using bidirectional long short-term memory with conditional random fields. Tsinghua Sci. Technol. 26, 259–265. https://doi.org/10.26599/TST.2019.9010033 (2021).

Allix, K., Bissyandé, T. F., Klein, J. & Le Traon, Y. Are your training datasets yet relevant? In International Symposium on Engineering Secure Software and Systems, 51–67 (Springer, 2015).

Li, J.-H. & Wang, S.-D. Phishbox: An approach for phishing validation and detection. In 2017 IEEE 15th International Conference on Dependable, Autonomic and Secure Computing, 15th International Conference on Pervasive Intelligence and Computing, 3rd International Conference on Big Data Intelligence and Computing and Cyber Science and Technology Congress (DASC/PiCom/DataCom/CyberSciTech), 557–564 (IEEE, 2017).

Silva, C. M. R., Feitosa, E. L. & Garcia, V. C. Heuristic-based strategy for phishing prediction: A survey of URL-based approach. Comput. Secur. 88, 101613 (2019).

da Silva, C. M. R., Fernandes, B. J. T., Feitosa, E. L. & Garcia, V. C. Piracema.io: A rules-based tree model for phishing prediction. Expert Syst. Appl. 191, 116239. https://doi.org/10.1016/j.eswa.2021.116239 (2022).

Roy, S. S., Karanjit, U. & Nilizadeh, S. Evaluating the effectiveness of phishing reports on twitter. In 2021 APWG Symposium on Electronic Crime Research (eCrime), 1–13 (IEEE, 2021).

Janet, B., Kumar, R. J. A. et al. Malicious url detection: a comparative study. In 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), 1147–1151 (IEEE, 2021).

Al-Ahmadi, S., Alotaibi, A. & Alsaleh, O. PDGAN: Phishing detection with generative adversarial networks. IEEE Access 10, 42459–42468. https://doi.org/10.1109/ACCESS.2022.3168235 (2022).

Alkawaz, M. H., Steven, S. J. & Hajamydeen, A. I. Detecting phishing website using machine learning. In 2020 16th IEEE International Colloquium on Signal Processing & Its Applications (CSPA), 111–114. https://doi.org/10.1109/CSPA48992.2020.9068728 (2020).

Al-Ahmadi, S. PDMLP: Phishing detection using multilayer perceptron. Int. J. Netw. Secur. Appl. (IJNSA) 12, 1–14 (2020).

Orunsolu, A. A., Sodiya, A. S. & Akinwale, A. A predictive model for phishing detection. J. King Saud Univ. Comput. Inf. Sci. 34, 232–247 (2019).

Akhawe, D., He, W., Li, Z., Moazzezi, R. & Song, D. Clickjacking revisited: A perceptual view of UI security. In 8th USENIX Workshop on Offensive Technologies (WOOT 14) (USENIX Association, 2014).

Khonji, M., Iraqi, Y. & Jones, A. Phishing detection: A literature survey. IEEE Commun. Surv. Tutor. 15, 2091–2121 (2013).

Liu, D. et al. Don’t let one rotten apple spoil the whole barrel: Towards automated detection of shadowed domains. In Proceedings of the 2017 ACM SIGSAC, CCS ’17, 537–552 (Association for Computing Machinery, 2017).

Tang, J., Li, R., Wang, K., Gu, X. & Xu, Z. A novel hybrid method to analyze security vulnerabilities in android applications. Tsinghua Sci. Technol. 25, 589–603 (2020).

He, Z. & Zhou, J. Inference attacks on genomic data based on probabilistic graphical models. Big Data Min. Anal. 3, 225–233 (2020).

Qi, L. et al. Privacy-aware data fusion and prediction with spatial–temporal context for smart city industrial environment. IEEE Trans. Ind. Inform. 17, 4159–4167 (2020).

Ma, P., Jiang, B., Lu, Z., Li, N. & Jiang, Z. Cybersecurity named entity recognition using bidirectional long short-term memory with conditional random fields. Tsinghua Sci. Technol. 26, 259–265 (2020).

Acknowledgements

This work was partially funded by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) and Fundação de Amparo a Ciência e Tecnologia do Estado de Pernambuco (FACEPE).

Author information

Authors and Affiliations

Contributions

J.C.G.B.: article writing, data review and article review. C.M.R.S.: data collection, data validation, structuring database, article writing, article review. L.C.T.: article writing, data review and article review. B.J.T.F.: article review. J.F.L.O.: article review. E.L.F.: article review. W.P.S.: article review, H.F.A.: article review. V.C.G.: article review.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gomes de Barros, J.C., Revoredo da Silva, C.M., Candeia Teixeira, L. et al. Piracema: a Phishing snapshot database for building dataset features. Sci Rep 12, 15149 (2022). https://doi.org/10.1038/s41598-022-19442-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-19442-8

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.