Abstract

Phase amplitude coupling (PAC) is thought to play a fundamental role in the dynamic coordination of brain circuits and systems. There are however growing concerns that existing methods for PAC analysis are prone to error and misinterpretation. Improper frequency band selection can render true PAC undetectable, while non-linearities or abrupt changes in the signal can produce spurious PAC. Current methods require large amounts of data and lack formal statistical inference tools. We describe here a novel approach for PAC analysis that substantially addresses these problems. We use a state space model to estimate the component oscillations, avoiding problems with frequency band selection, nonlinearities, and sharp signal transitions. We represent cross-frequency coupling in parametric and time-varying forms to further improve statistical efficiency and estimate the posterior distribution of the coupling parameters to derive their credible intervals. We demonstrate the method using simulated data, rat local field potentials (LFP) data, and human EEG data.

Similar content being viewed by others

Introduction

Neural oscillations are thought to play a fundamental role in the dynamic coordination of brain circuits and systems1. At individual frequencies, oscillations reflect the temporal coordination of activity across populations of neurons, and can be observed experimentally in neuronal spiking time series, multi-unit activity, local field potentials (LFP), and even non-invasively using magnetoencephalogram or electroencephalogram (EEG) recordings. In the past decade, a major advance has been the realization that oscillating neural activity can have higher-order interactions in which oscillations at different frequencies interact2,3,4. This cross-frequency coupling (CFC) appears to be nearly as ubiquitous as oscillations themselves, occurring during learning and memory, varying across different states of arousal and unconsciousness, and changing in relation to neurological and psychiatric disorders2,4,5,6,7,8,9,10,11,12,13,14,15. If distinct oscillations stem from specific neural circuit architectures and time constants16, it seems plausible that cross-frequency coupling could serve as a way of coordinating activity among otherwise disparate circuits and systems2. Amplitude–Amplitude17 and Phase-Phase coupling3,18 have been reported, but phase-amplitude coupling (PAC), in which the phase of a slower wave modulates the amplitude of a faster one, remains the most frequently described phenomenon.

The explosion of interest in CFC has led to the growing concern that existing methods for analysis may be prone to error and misinterpretation. In a recent article, Aru and colleagues19 point out that existing cross-frequency coupling analyses are very sensitive to frequency band selection, noise, sharp signal transitions, and signal nonlinearities. Depending on the scenario, true underlying CFC can be missed, or spurious coupling can be detected. For example, temporal signals with sharp transitions, such as square or triangular waves, cannot be represented by a single sinusoidal component. Their Fourier decompositions include multiple phase locked harmonics that are not independent oscillations. Methods have been proposed to handle such non-sinusoidal EEG signals but they rely on multi-region comparison20 or multiple band pass filters, whose parameters can be difficult to establish, to target and remove harmonic content21. In addition, cross-frequency coupling methods tend to be statistically inefficient, requiring substantial amounts of data, making them unsuitable for time-varying scenarios or real-time applications. Finally, in the absence of an appropriate statistical model, analysts typically employ surrogate data methods for statistical inference on cross-frequency coupling, making it difficult to properly answer even basic questions about the nature of the coupling, such as the size of the effect or its confidence or credible interval.

We describe here a novel method to estimate PAC that addresses these problems. A major source of error in existing methods stems from their reliance on traditional bandpass filtering. These filters can remove meaningful oscillatory coupling components (i.e., sidebands), and introduce spurious transients that resemble cross-frequency coupling. In our approach, we use a state space oscillator model to separate out the different oscillations of interest. These models can preserve the relevant coupling terms in the signal and are resilient to noise and sharp signal transitions. We choose a particular model formulation, ingeniously proposed by Matsuda and Komaki22, that makes it straightforward to estimate both the phase and amplitude of oscillatory components. To further improve statistical efficiency, we introduce a parametric representation of the cross-frequency coupling relationship. A constrained linear regression estimates modulation parameters which can in addition be incorporated into a second state space model representing time-varying changes in the modulation parameters. Finally, we combine these statistical models to compute credible intervals for the observed coupling via resampling from the estimated posterior distributions. We demonstrate the efficacy of this method using simulated data, rat LFP data, and human EEG data.

We show that our method accurately estimates the parameters describing the oscillatory and modulation dynamics, provides improved temporal resolution, statistical efficiency, and inference compared to existing methods. Furthermore, we show that it overcomes the common problems with existing PAC methods described earlier, namely, band selection and spurious coupling introduced by sharp signal transitions and nonlinearities. The improved performance and robustness to artifacts should help improve the efficiency and reliability of PAC methods, and could enable novel experimental studies of PAC as well as novel medical applications.

Results

Overview of the state-space PAC (SSP) method

In the conventional approach to phase and amplitude estimation, the signal is bandpass filtered to estimate the slow and fast components. The Hilbert transform is then applied to synthesize their imaginary counterparts. Finally, the slow component phase and the fast component amplitude are computed and used to calculate a Phase Amplitude Coupling (PAC) metric. In our approach, we use a state space model to estimate the oscillatory components of the signal, using the oscillation decomposition framework described by Matsuda and Komaki22. We assume, for the moment, that the observed signal \(y_t \in \mathbb {R}\) is a linear combination of latent states representing a slow and a fast component \(x^{\text {s}}_t\) and \(x^{\text {f}}_t \in \mathbb {R}^{2}\). Note that we introduce our method with two latents but provide a general derivation for an arbitrary number of oscillations (and their harmonics) as well as model selection tools and illustrative experiments below and in Supplementary Materials (see for example S1). Each of the 2 dimensional latent states are assumed to be independent and their evolution over a fixed step size is modeled as a scaled and noisy rotation. For \(j= \text {s}, \text {f}\)

where

is a 2-dimensional rotation of angle \(\omega _j\) (the radial frequency), \(a_j\) is a scaling parameter and \(\sigma _j^2\) the process noise variance. An example of this state space oscillation decomposition is shown in Fig. 1a–d. This approach eliminates the need for traditional bandpass filtering since the slow and fast components are directly estimated under the model. Perhaps more importantly, the oscillations’ respective components can be regarded as the real and imaginary terms of a phasor or analytic signal. As a result, the Hilbert transform is no longer needed. Thus the latent vector’s polar coordinates provide a direct representation of the slow instantaneous phase \(\phi ^{\text {s}}_t\) and fast oscillation amplitude \(A^{\text {f}}_t\) (Fig. 1f–g). We note \(x_t= [x^{\text {s} \intercal }_{t} x^{\text {f} \intercal }_t]^\intercal\) and obtain a canonical state space representation23

where \(\Phi \in \mathbb {R}^{4 \times 4}\) is a block diagonal matrix composed of the rotations described earlier, Q the total process noise covariance, R the observation noise covariance and \(M \in \mathbb {R}^{1 \times 4}\) links the observation with the oscillation first coordinate. We estimate \((\Phi , Q, R)\) using a Expectation-Maximization (EM) algorithm whose general formulation (multiple oscillations and harmonics) is derived in the Supplementary Materials S1.

The oscillation decomposition for an EEG time-series from a human subject during anesthesia-induced unconsciousness using propofol. From the raw EEG trace (a), we extract a 6 s window (b) and decompose it into a slow (c) and a fast (d) oscillation using our state space model (e). We then deduce the slow oscillation phase (f) and the fast oscillation amplitude (g). Finally, we use a linear model (e) to regress the alpha amplitude (j) and to the estimate modulation parameters (h,j) and their distributions. Here, we used \(200 \times 200\) resampled series (dark grey) to compute the 95\(\%\) CI.

The standard approach for PAC analysis uses binned histograms to quantify the relationship between phase and amplitude24 which is a major source of statistical inefficiency. Instead, we introduce a parametric representation of PAC based on a simple amplitude modulation model used in radio communications. To do so, we consider a linear regression problem of the form

where \(X(\phi ^{\text {s}}_t) = \left[ \begin{matrix} 1&\cos (\phi ^{\text {s}}_t)&\sin (\phi ^{\text {s}}_t) \end{matrix} \right]\). We term \(\beta \in \mathbb {R}^3\) the modulation vector and impose the additional constraint \((\beta _1^2 + \beta _2^2) < \beta _0^2\) on its component. Defining \(K^{\text {mod}} = \sqrt{\beta _1^2+\beta _2^2} / \beta _0\), \(\phi ^{\text {mod}} = \tan ^{-1}(\beta _2 / \beta _1)\) and \(A_0 = \beta _0\). Equation (4) becomes

\(K^{\text {mod}}\) controls the strength of the modulation while \(\phi ^{\text {mod}}\) is the preferred phase around which the amplitude of the fast oscillation \(x^{\text {f}}_t\) is maximal (Fig. 1h–j). For example, if \(K^{\text {mod}}= 1\) and \(\phi ^{\text {mod}}=0\), the fast oscillation is strongest at the peak of the slow oscillation. On the other hand, if \(\phi ^{\text {mod}}=\pi\), the fast oscillation is strongest at the trough or nadir of the slow oscillation.

Finally, instead of relying on surrogate data19 to determine statistical significance, which decreases efficiency even further, our model formulation allows us to estimate the posterior distribution of the modulation parameters \(p(K^{\text {mod}},\phi ^{\text {mod}} | \{ y_t \}_t)\) and to deduce the associated credible intervals (CI) (Fig. 1f–j).

We refer to our approach as the State-Space PAC (SSP) method. Because physiological systems are time varying, we apply it over multiple non-overlapping windows. In a variation of our method, we model the temporal continuity on the modulation parameters across windows. To do so, we fit an autoregressive (AR) model with noisy observations to the modulation vector \(\beta\) by solving and optimizing Yule-Walker type equations numerically (see Supplementary Materials S1), yielding what we term the double State Space PAC estimate (dSSP). In other words, given \(Q_{\beta }\) and \(R_{\beta }\) process and observation covariances, and, for a time-window T, we model

where \(\beta _{_{T}}^{\text {{\tiny SSP}}}\) represents the modulation vector estimated in (4) in time window T and \(\beta _{_{T}}^{\text {{\tiny dSSP}}}\) represents the smoothed modulation vector.

Human EEG data

Propofol-induced unconsciousness in a human subject monitored with EEG. Increasing target effect-site concentrations of propofol were infused (a) while loss and recovery of consciousness were monitored behaviorally with an auditory task from which a probability of response was estimated (b). \(R^2\) value of our modulation regression (c). dSSP was used to estimate the parametric spectrogram (d), the phase amplitude modulogram (e) and the modulation parameters (f) \(K^{\text {mod}}\) and \(\phi ^{\text {mod}}\) alongside their CI computed with \(200 \times 200\) samples.

To demonstrate the performance of our methods we first analyzed EEG data from a human volunteer receiving propofol to induce sedation and unconsciousness (Fig. 2). As expected, as the concentration of propofol increases, the subject’s probability of response to auditory stimuli decreases. The power spectral density changes during this time, developing beta (12.5–25 Hz) oscillations as the probability of response begins to decrease, followed by slow (0.1–1 Hz) and alpha (8–12 Hz) oscillations when the probability of response is zero (Fig. 2d) as in25. Here, the spectrogram is estimated using our model parameters (and Eq. (44) from Supplementary Materials S1) but we compare it to multitaper spectral estimation38 in Fig. S2. For a window T, we estimate the modulation strength \(K^{\text {mod}}_{T}\) and phase \(\phi ^{\text {mod}}_{T}\) (and CI) with dSSP (Fig. 2f) and we gather those estimates in the Phase Amplitude Modulogam: \(\text {PAM}(T,\psi )\) (Fig. 2e). For a given window T, \(\text {PAM}(T,.)\) is a probability density function (pdf) having support \([- \pi , \pi ]\). It assesses how the amplitude of the fast oscillation is distributed with respect to the phase of the slow oscillation. When the probability of response is zero, we observe a strong ”peak-max” (\(K^{\text {mod}}_T \approx 0.8\), \(\phi ^{\text {mod}}_T \approx 0\)) pattern in which the fast oscillation amplitude is largest at the peaks of the slow oscillation. During the transitions to and from unresponsiveness, we observe a ”trough-max” pattern of weaker strength (\(K^{\text {mod}}_T \approx 0.25\) , \(\phi ^{\text {mod}}_T = \pm \pi\)) in which the fast oscillation amplitude is largest at the troughs of the slow oscillation. Note that the coefficient of determination \(R^2\) for the modulation relationship mirrors the coupling strength \(K^{\text {mod}}\) since \(A_t^{\text {f}}\) is better predicted by our model when the coupling is strong.

The phase amplitude coupling profile of a subject infused with increasing target effect site concentrations of propofol. Left: response probability curves (a) aligned with modulograms (c) (distribution of alpha amplitude with respect to slow phase) computed with standard (top) and state-space parametric (bottom) methods. Right: propofol infusion target concentration (b) aligned with corresponding modulation indices (d). Standard technique significance was assessed using 200 random permutations and CI where estimated using \(200\times 200\) samples.

When averaged over long, continuous and stationary time windows, conventional methods provide good qualitative assessments of PAC. However, in many cases, analyses over shorter windows of time may be necessary if the experimental conditions or clinical situation changes rapidly. In previous work25, we analyzed PAC using conventional methods with relatively long \(\delta t = 120\) s windows, appropriate in this case because propofol was administered at fixed rates over \(\sim\) 14 min intervals. The increased statistical efficiency of the SSP and dSSP methods makes it possible to analyze much shorter time windows of \(\delta t = 6\) s, which we illustrate in two subjects, one with strong coupling (Fig. 3) and another with weak coupling (Fig. S3). To do so, we compare SSP, dSSP and standard methods used with \(\delta t = 120\) s or \(\delta t = 6\) s based on the modulogram and on the Modulation Index (MI) estimates. The latter assesses the strength of the modulation by measuring, for any window T how different \(\text {PAM}(T,.)\) is from the uniform distribution. The Kullback-Leibler Divergence is typically used for this purpose. Thus, any random fluctuations in the estimated PAM will increase MI, introducing a bias. Our model parametrization is used to derive PAM, MI and associated CI but standard non-parametric analysis typically rely on binned histogram. As a results they estimate statistical significance by constructing surrogate datasets and reporting p-values19.

Both subjects exhibit the typical phase amplitude modulation profile previously described when they transition in and out of unconsciousness. Nevertheless, since SSP more efficiently estimates phase and amplitude22 and produces smooth PAM estimates even on short windows, MI estimates derived from SSP show less bias that the standard approach. For the same reasons, \(\phi ^{\text {mod}}\) estimates show less variance than the standard approach. The dSSP algorithm provides a temporal continuity constraint on the PAM, making it possible to track time-varying changes in PAC while further reducing the variance of the PAM estimates. Finally, our parametric strategy provides posterior distributions for \(K^{\text {mod}}\), \(\phi ^{\text {mod}}\) and MI, making it possible to estimate CI for each variable and assesses significance without resorting to surrogate data methods.

Rat LFP data

To illustrate the performance of our approach in a different scenario representative of invasive recordings in animal models, we analyzed rat LFP during a learning task hypothesized to involve theta (6–10 Hz) and low gamma (25–60 Hz) oscillations. We applied dSSP on 2 s windows (Fig. 4) and confirmed that theta-gamma coupling in the CA3 region of the hippocampus increases as the rat learned the discrimination task, as originally reported in Tort et al.4. In our analysis using dSSP, we did not pre-select the frequencies of interest, nor did we specify bandpass filtering cutoff frequencies. Rather, the EM algorithm was able to estimate the specific underlying oscillatory frequencies for phase and amplitude from the data, given an initial starting point in the theta and gamma ranges. Thus we illustrate that our method can be applied effectively to analyze LFP data, and that it can identify the underlying oscillatory structure without having to specify fixed frequencies or frequency ranges.

Rats show increased theta-gamma coupling when learning a discrimination task in hypocampus CA3 region. Top row: Standard4 processing for phase-amplitude modulogram (left), multitaper spectrogram38 (middle), and standard modulation index (right). Bottom row: dSSP applied on 2 s windows (left), parametric spectrogram (middle), modulation index based on dSSP (right).

Simulation studies

To test our algorithms in a more systematic way as a function of different modulation features and signal to noise levels, we analyzed multiple simulated data sets. By design, these simulated data were constructed using generative processes or models different than the state space oscillator model; i.e., the simulated data generating processes were outside the ”model class” used in our methods. Here, we focus on slow and alpha components to reproduce our main experimental data cases. In doing so, our intent is not to provide an exhaustive characterization of the precision and accuracy of our algorithm, since this would strongly depend on the signal to noise ratio, the signal shape, etc. Instead, we aim to illustrate how and why our algorithm outperforms standard analyses in the case of short and noisy time-varying data sets.

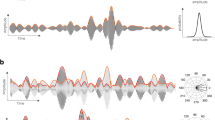

We first compare the resolution and robustness of dSSP with conventional techniques on broadband signals with modulation parameters varying on multiple time scales. Results are reported for different generative parameters (See Methods, \(\Delta f^{\text {gen}}_{\text {s}}=\Delta f^{\text {gen}}_{\text {f}}\), \(\sigma _\text {s}\) and \(\sigma _\text {f}\)) in Fig. 5 and Fig. S4 and associated signal traces are illustrated Fig. S5. Although robust when averaged on long windows with stationary coupling parameters, standard techniques become ineffective when the modulation parameters vary rapidly across windows. The modulation cannot be resolved when long windows are used. However if we reduce the window size to compensate, the variance of the estimates increases significantly. A trade-off has to be found empirically. On the other hand, we see that, applied on 6-s windows, (d)SSP can track the rapid changes in amplitude modulation even in the case of a low signal to noise ratio. The dSSP algorithm also provides estimates of the posterior distribution of the modulation parameters, making it straightforward to construct CI and perform statistical inference. By comparison, the surrogate data approach becomes infeasible as there are fewer and fewer data segments to shuffle.

Comparison of the modulation estimates using standard methods and our new dSSP method. Slow and fast oscillations were generated by filtering white noise around \(f_{\text {s}}\) = 1 Hz and \(f_{\text {s}}\) 10 Hz with \(\Delta f_{\text {s}}^{\text {gen}}\) = 3 Hz and normalized to standard deviation \(\sigma _{\text {s}}\) = 0.5 and \(\sigma _{\text {f}}\) = 2. The time scale over which \(K^{\text {mod}}\) and \(\phi ^{\text {mod}}\) changed varied between 20–5 and 2 min. See Fig. S5 for typical signal traces.

In a recent paper, Dupré la Tour et al.26 designed an elegant nonlinear PAC formulation, described as a driven autoregressive (DAR) process, where the modulated signal is a polynomial function of the slow oscillation. The latter, referred to as the driver, is filtered out from the observation around a preset frequency and used to estimate DAR coefficients. The signal parametric spectral density is subsequently derived as a function of the slow oscillation. The modulation is then represented in terms of the phase around which the fast oscillation power is preferentially distributed. A gridsearch is performed on the driver, yielding modulograms for each slow central frequency over a range of fast frequencies. The frequencies associated with the highest likelihood and/or strongest coupling relationship are then selected as the final coupling estimate.

This parametric representation improves efficiency, especially in the case of short signal windows, but because it relies on an initial filtering step, it also shares some of the limitations of conventional techniques. As we will see, spurious CFC can emerge from abruptly varying signals or nonlinearities. Additionally, this initial filtering step might contaminate PAC estimates from short data segments with wideband slow oscillations.

To compare our methods with standard techniques and the DAR method, we generated modulated signals with the scheme described in Dupré la Tour et al.26 (Eq. (13), \(\lambda =3\), and \(\phi ^{\text {mod}}=-\pi /3\)) using different frequencies of interest (\(f_{\text {s}}\) and \(f_{\text {f}}\)) spectral widths (\(\Delta f_{\text {s}}^{\text {gen}}\)) and Signal to Noise Ratios (SNR). Typical signal traces for those generating parameters are reported in Figs. S7 and S8. We then compare how well these methods recover the slow oscillation and the fast oscillation (Figs. S7 and S8) or the modulation phase (Fig. 6 and Fig. S6). Contrary to the other methods presented here, SSP does not compute the full comodulograms to select frequencies of interest but rather identifies them by fitting the state space oscillator model. Coupling is only estimated in a second step. Although we used tangible prior knowledge in previous sections to initialize the algorithm, we adapt an initialization procedure from27 (See Supplementary Materials S1) to provide a fair comparison. For each condition, we generated 400 six-second windows. When necessary, the driver was extracted using \(\Delta f_{\text {s}}^{\text {filt}} = \Delta f_{\text {s}}^{\text {gen}}\).

Modulation phase \(\phi ^{\text {mod}}\) estimation and comparisons with standard methods (black), DAR (pink) and SSP (blue). 400 windows of 6 s were generated with: a slow oscillation (filtered from white noise around \(f_{\text {s}}= 1\) Hz with bandwidth \(\Delta f_{\text {s}}^{\text {gen}}\), normalized to standard deviation \(\sigma _{\text {s}}\)) and a modulated fast oscillation (\(\phi ^{\text {mod}}=-\pi /3\), modeled with a sinusoid \(f_{\text {s}}=10\) Hz and normalized to \(\sigma _{\text {f}}\)). We added unit normalized Gaussian noise and we used 3 Signal To Noise Ratio (SNR) conditions (\((\sigma _{\text {s}},\sigma _{\text {s}})=(2,1.5)\), (1, 0.6) and (0.7, 0.3)). We show typical signal traces for these different conditions Fig. S7.

We find that our algorithm better retrieves fast frequencies in each case (Figs. S7 and S8) especially when the slow oscillation is wider-band. It also outperforms the other methods when estimating modulation phase (Fig. 6 and Fig. S6): our algorithm is stable in the case of broadband (\(\Delta f_{\text {s}}^{\text {gen}}= 3\) Hz) or weak (\((\sigma _{\text {s}},\sigma _{\text {s}})=(0.7,0.3)\)) slow oscillations and \(\phi ^{\text {mod}}\) is estimated accurately with very few outliers and a smaller standard deviation in virtually all cases considered.

Overcoming key limitations of CFC analysis: sharp transitions, nonlinearities, and frequency band selection

Despite the central role that CFC likely plays in coordinating neural systems, standard methods of CFC analysis are subject to many caveats that are a source of ongoing concern19. Spurious coupling can arise when the underlying signals have sharp transitions or nonlinearities. On the other hand, true underlying coupling can be missed if the frequency band for bandpass filtering is not selected properly. Here we illustrate how our SSP method is robust to all of these limitations. We also show how our method is able, counterintuitively, to extract nonlinear features of a signal using a linear model.

Signals with abrupt changes and/or harmonics

Oscillatory neural waveforms may have features such as abrupt changes or asymmetries that are not confined to narrow bands28. In such cases, truncating their spectral content with standard bandpass filters can distort the shape of the signal and can introduce artefactual components that may be incorrectly interpreted as coupling.

The state space oscillator model provides an alternative to bandpass filtering that can accommodate non sinusoidal wave-shapes. In this section, we extend the model to explicitly represent the slow oscillatory signal’s harmonics, thus allowing the model to better represent oscillations that have sharp transitions and those that may be generated by nonlinear systems. To do so, we optimize h oscillations with respect to the same fundamental frequency \(f_{\text {s}}\) (see Supplementary Materials S1). We further combine this model with information criteria (Akaike Information Criteria -AIC-29 or Bayesian Information Criteria -BIC-30) to determine (i) the number of slow harmonics h and (ii) the presence or the absence of a fast oscillation. We select the best model by minimizing \(\Delta \text {IC} = \text {IC} - \text {min}(\text {IC})\). We only report AIC-based PAC estimation here although both AIC and BIC perform similarly. When multiple slow harmonics are favored, we use the phase of the fundamental oscillation to estimate PAC.

We first simulated a non-symmetric abruptly varying signal using a Van der Pol oscillator (Eq. (14), \(\varepsilon =5 \text {, } \omega =5s^{-1}\)) to which we added observation noise (\(v_t \sim \mathscr {N}(0,R)\), \(\sqrt{R}=0.15\)). We then considered two scenarios: one with a modulated fast sinusoidal wave (Fig. 7a, \(A_t^{\text {f}} = A_0 \left( 1+\cos \phi ^{\text {s}}_t \right)\), \(A_0 = 2\sqrt{R}\) and \(f_\text {f} = 10 \text {Hz}\)), and one without (Fig. 7b). Because our model is able to fit the sharp transitions, both AIC and BIC (not shown) identify the correct number of independent components (Fig. 7a–c-4 : the minimum is reached for the correct number of components). As a consequence, when no clear fast oscillation is detected, no PAC is calculated (Fig. 7a-6). On the other hand, when no fast oscillation is present, standard techniques extract a fast component stemming from the abruptly changing slow oscillation, leading to the detection of spurious coupling (Fig. 7a-3).

Nonlinear inputs arising from signal transduction harmonics are a similar hurdle in CFC analysis. If we consider a slow oscillation \(x_t^{\text {s}}=\cos (\omega _{s}t)\) non-linearly transduced as \(y_t = g(x_t^{\text {s}})\), we can write a second order approximation

If \(\omega _s/(2\pi ) = 1\) Hz, bandpass filtering \(y_t\) around 0.9–3.1 Hz to extract an oscillation peaking at \(f_\text {f}=2\) Hz would yield19

In such a case, standard CFC analysis infers significant coupling (Fig. 7c-3) while oscillation decomposition correctly identifies a harmonic decomposition without CFC (Fig. 7c-6).

This model selection strategy does not guarantee that the correct model will always be selected. Furthermore, the oscillation decomposition itself is often a non convex optimization problem. However, we observe that the (extended) state-space oscillator is better suited to model physiological signals than narrow band components. In addition, the model selection paradigm combined with prior knowledge of the signal content (e.g., propofol anesthesia slow-alpha or rodent hippocampal theta-gamma oscillations) allows us to study PAC in a more principled way.

PAC analysis of 6-s signals with harmonic content using standard methods and SSP. The signal was either generated using a Van der Pol oscillator alone (a-1), a Van der Pol oscillator with a modulated alpha oscillation (b-1), or with a nonlinearity according to Eq. (7) (c-1). The standard method use conventional filters to extract the oscillation (0.1–1 Hz and 6–14 Hz (ab-2) and 0.6–1.2 Hz and 0.9–3.1 Hz (c-2)). SSP was combined with an Akaike Information Criteria (AIC, abc-4) to select the optimal number of independent oscillations (one, two or three) and the number of slow harmonics (abc-5). PAC is reported as the distribution of the fast amplitude with respect to the slow phase (abc-3,abc-6). For SSP 100 samples were drawn from the posterior to generate CI (b-6).

Frequency band selection

If bandpass filters with an excessively narrow bandwidth are applied to a modulated signal, the modulation structure can be obliterated. Let us consider the following signal:

Developing \(y_t\) yields 4 frequency peaks: the slow and fast frequencies \(\omega _{\text {s}}\) and \(\omega _{\text {f}}\) and two sidelobes centered around \(\omega _{\text {f}}-w_{\text {s}}\) and \(\omega _{\text {f}}+\omega _{\text {s}}\)

Decomposition (a,d), power spectral density (b,e) and modulogram (c,f). The top row shows the result of applying a narrow bandpass filter that removes the modulation side lobes. The bottom row shows the result of applying the oscillation decomposition used in SSP and dSSP, which preserves the modulation structure. (\(K^{\text {mod}}=0.6 \text {, } \phi ^{\text {mod}}=-\pi /3\), \(R=4\), \(A^{\text {s}}_t = 4\) and \(A^{\text {f}}_t = 1\)).

As a consequence, if the fast oscillation is extracted without its side lobes, no modulation is detected, as illustrated Fig. 8a–c. Our SSP algorithm uses a state-space oscillator decomposition which does not explicitly model the structural relationship giving rise to the modulation side lobes (Eq. (9)). Yet, we see that the modulation is successfully extracted, as observed in the fitted time series (Fig. 1) and in the spectra (Fig. 8d–f). The model is able to achieve this by making the frequency response of the fast component wide enough to encompass the side lobes. The algorithm does this by inflating the noises covariances R and \(\sigma ^2_\text {f}\) and \(\sigma ^2_{\text {f}}\) and deflating \(a_{\text {f}}\). In theory it might be possible to use a higher order model like an ARMA(4,2) (which would represent the product of 2 oscillations whose poles are in \(a_{\text {s}} a_{\text {f}} e^{\pm i (w_{\text {f}} \pm w_{\text {s}})}\)), or to directly model coupling through a nonlinear observation. However, in both cases, we found that such models were difficult to fit to the data, and quickly became underconstrained when applied to noisy, non-stationary, non-sinusoidal physiological signals. Instead, we found that our simpler model was able to extract the modulated high-frequency component robustly.

In summary, the first stage of our algorithm can extract nonlinearities stemming from the modulation before fitting them with a regression model in the second stage. The main consequence of this approach is to inflate the variance in the fast component estimation. See for example the wide CI in the fast oscillation estimate in Fig. 1g. In turn, we resample the fast oscillation amplitudes from a wider distribution than is actually the case. Although this does not affect the estimates of \(\phi ^{\text {mod}}\), it does produce a conservative estimate when resampling \(K^{\text {mod}}\), i.e., the credible intervals are wider than they might be otherwise. Even so, we find that our approach still performs better than previous methods (Figs. 3, 5 and 6).

Discussion

We have presented a novel method that integrates a state space model of oscillations with a parametric formulation of phase amplitude coupling (PAC). Under this state space model we represent each oscillation as an analytic signal22 to directly estimate the phase or amplitude. We then characterize the PAC relationship using a parametric model with a constrained linear regression. The regression coefficients, which efficiently represent the coupling relationship in only a few parameters, can be incorporated into a second state space model to track time-varying changes in the PAC. We demonstrated the efficacy of this method by analyzing neural time series data from a number of applications, and illustrated its improved statistical efficiency compared to standard techniques using simulation studies based on different generative models. Finally, we showed how our method is robust to many of the limitations associated with standard phase amplitude coupling analysis methods.

The efficacy of our method stems from a number of factors. First, the state-space analytic signal model provides direct access to the phase and amplitude of the oscillations being analyzed. This linear model also has the remarkable ability to extract a nonlinear feature (the modulation) by imposing ”soft” frequency band limits which are estimated from the data. The oscillation decomposition is thus well-suited to analyze physiological signals that are not confined to strict band limits. We also proposed a harmonic extension that can represent nonlinear oscillations (e.g., Van der Pol, Fig. 7), making it possible to better differentiate between true and spurious PAC resulting from bandpass filtering artifacts. The parametric representation of the coupling relationship can accommodate different modulation shapes and increases the model efficiency even further.

Overall, we addressed a majority of the significant limitations associated with current methods for PAC analysis. The neural time series are processed more efficiently (Fig. 3), frequency bands of interest are automatically selected (Fig. 4), extracted (Fig. 8d–e), and more realistically modeled (Fig. 7). Contrary to standard methods, we do not need to average PAC-related quantities across time, reducing the amount of contiguous time series data required. Moreover, the posterior distributions of the signals of interest are well-defined under our proposed model. Sampling from them bypasses the need to build surrogate data, which can obscure non-stationary structure in the data and underestimate the false positive rate19. Conversely, because SSP estimates the modulation parameters’ posterior distribution, we report CI and provide information on the statistical significance of our results as well as the strength and direction of the modulation. Our dynamic estimation of PAC hence makes it possible to base inference on much shorter windows—as short as 6 s for slow 0.1–1 Hz signals. Other novel models have been proposed to represent PAC, including driven autoregressive models (DAR)26 and generalized linear models (GLM)31. As we saw earlier, SSP performs better than the DAR and standard approaches, particularly when the signal to noise is low. The GLM represents the modulation relationship parametrically as we do, but in a more general form, and provides confidence intervals using the bootstrap31. Both the DAR and GLM approaches remain reliant on traditional bandpass filtering for signal extraction, and thus remain vulnerable to the crucial problems introduced by these filters19. Our method is the first to use state space models combined with a parametric model of the modulation, the latter of which could be generalized in the manner described by31.

Our methods could significantly improve the analysis of future studies involving CFC, and could enable medical applications requiring near real-time tracking of CFC. One such application could be EEG-based monitoring of anesthesia-induced unconsciousness. During propofol induced anesthesia, frequency bands are not only very well defined, but the PAC signatures strongly discriminates deep unresponsiveness (peak-max) from transition states (through-max), most likely reflecting underlying changes in the polarization level of the thalamus32. Thus, PAC could provide a sensitive and physiologically-plausible marker of anesthesia-induced brain states, offering more information than spectral features alone. Accordingly, a recent study33 reported cases in which spectral features could not perfectly predict unconsciousness in patients receiving general anesthesia. In this same data, CFC measures (peak-max) could more accurately indicate a fully unconscious state from which patients cannot not be aroused34. In the operating room, drugs can be administered rapidly through bolus injection, drug infusion rates can change abruptly, and patients may be aroused by surgical stimuli, leading to corresponding changes in patients’ brain states over a time scale of seconds35,36. These rapid transitions in state can blur modulation patterns estimated using conventional methods. Faster and more reliable modulation analysis could therefore lead to tremendous improvement in managing general anesthesia. Conventional methods are impractical since they require minutes of data to produce one estimate; in contrast our method can estimate CFC on a time-scale compatible with such applications.

Since CFC analysis methods were first introduced into neuroscience, there has been a wealth of data suggesting that CFC is a fundamental mechanism for brain coordination in both health and disease15,37. Our method addresses many of the challenging problems encountered with existing techniques for estimating CFC, while also significantly improving statistical efficiency and temporal resolution. This improved performance could pave the way for important new discoveries that have been limited by inefficient analysis methods, and could enhance the reliability and efficiency of PAC analysis to enable their use in medical applications.

Methods

All methods were carried out in accordance with relevant guidelines and regulations4,25.

Data sets

Experimental design and procedure

(a) Human EEG

We analyzed human EEG data during loss and recovery of consciousness during administration of the anesthetic drug propofol. The experimental design and EEG preprocessing have been extensively described in25. Briefly, 10 healthy volunteers (18–36 years old) were infused increasing amounts of propofol spanning 6 target effect site concentrations (0, 1, 2, 3, 4, and 5 \(\upmu\)g L\(^{-1}\)). Infusion was computer controlled and each concentration was maintained for 14 min. To monitor loss and recovery of consciousness behaviorally, subjects were presented with an audio stimulus (a click or a verbal command - only the latter is reported here) every 4 s and had to respond by pressing a button. The probability of response and associated 95\(\%\) credible intervals were estimated using Monte-Carlo methods48 to fit a state space model to these data. Finally, EEG data were pre-processed using an anti-aliasing filter and downsampled to 250 Hz.

We computed spectrograms of the EEG using the parametric expression associated with oscillation decomposition (derived in Supplementary Materials S1). Standard techniques for PAC analysis were applied on 6 and 120 s windows for which alpha and slow component were assumed to be known and extracted using bandpass filters around 0.1–1 Hz and 9–12 Hz. Significance for the standard PAC method was assessed using 200 random permutations.

(b) Rat LFP

Rat LFP dataset was generously shared by Tort et al.4. Data were recorded from the CA3 region of the dorsal hippocampus of rats as they learned a spatial recognition task. The signal was sampled at 1000 Hz, bandpassed from 1 to 300 Hz and binned into non-overlapping 2 s time windows. The standard PAC analysis was performed using 6-10 Hz and 30–55 Hz filters to extract theta and gamma components, respectively. To replicate the original results, modulation indices were averaged over 20 trials.

Simulations

We tested our algorithm on simulated datasets generated by different models. We constructed each simulated signal by combining unit variance Gaussian noise, a slow oscillation centered at \(f_{\text {s}}\) (= 1 Hz unless stated otherwise), and a modulated fast oscillation centered at \(f_{\text {f}}\) (= 10 Hz unless stated otherwise). It is important to note that we chose to generate these simulated signals using a method or “model class” that was different from the state space oscillator model we use to analyze the data. For standard processing, significance was assessed with 200 random permutations, \(f_{\text {s}}\) and \(f_{\text {f}}\) were assumed to be known, and components were extracted with bandpass filters with pass bands set to 0.1–1 Hz for the slow component and 8–12 Hz for the fast component.

(a) Simulating the slow oscillation

Neural oscillations are not perfect sinusoids and instead have a broad band character. Using the approach described in26, we simulated a broad band slow oscillation by convolving (filtering) independent identically distributed Gaussian noise with the following impulse response function

where \(\omega _{\text {s}} =2 \pi f_{\text {s}}\), \(c_0\) is a Blackman window of order \(2 \left\lfloor 1.65 f_s / \Delta f_{\text {s}}^{\text {gen}}\right\rfloor +1\). The smaller the slow frequency bandwidth \(\Delta f_{\text {s}}^{\text {gen}}\), the closer the signal is to a sinusoid. When necessary, we additionally use a \(\pi /2\) phase-shifted filter: \(\tilde{c}(t) = c_0(t) \sin (\omega _\text {s}t)\) to model an analytic slow oscillation \(x_t^{\text {s}}\) from which we deduce the phase \(\phi ^{\text {s}}_t\). The resulting series is finally normalized such that its standard deviation is set to \(\sigma _\text {s}\).

(b) Simulating the modulation

To assess the temporal resolution of our method and the standard method, we generated simulated data sets with different rates of time-varying modulation. First, to construct the modulated fast oscillation, we constructed a fast oscillation centered at \(\omega _{\text {f}} =2 \pi f_{\text {f}}\) and normalized to \(\sigma _\text {f}\) as described above and modulated it by

Here, \(K^{\text {mod}}_t\) and phase \(\phi ^{\text {mod}}_t\) are time varying and follow the dynamics illustrated in Fig. 5 (and in Fig. S4). Representative simulated EEG signal traces for different generative parameters are illustrated in Fig. S5.

We also generated simulated data using an alternative modulation function (Fig. 6 and Figs. S6, S7 and S8)

where \(u_{\phi ^{\text {mod}}}^\intercal = \left[ \begin{matrix} \cos (\phi ^{\text {mod}})&-\sin (\phi ^{\text {mod}}) \end{matrix} \right]\), described previously in described in26.

(c) Simulated signals with abrupt changes

Signals with abrupt or sharp transitions can lead to artefactual phase-amplitude modulation19. To assess the robustness of our state-space PAC method under such conditions, we used a Van Der Pol oscillator to generate a signal with abrupt changes. Here, the oscillation x is governed by the differential equation:

Equation (14) was solved using Euler method with fixed time steps.

State-space oscillator model

For a time series of length N sampled at \(F_s\) (unless stated otherwise \(F_s=250\) Hz and \(N/F_s=6\) s), we consider a time window \(\{ y_t \}_{t=1}^{N} \in \mathbb {R}^{N}\), and we assume, in this section, that \(y_t\) is the sum of observation noise and components from two latent states \(x^{\text {s}}_t\) and \(x^{\text {f}}_t \in \mathbb {R}^{2N}\) which account for a slow and a fast component. We use the oscillation decomposition model described by Matsuda and Komaki22. For \(j= \text {s}, \text {f}\) and \(t=2\ldots N\), each component follows the process Eq.

where \(a_j \in (0,1)\) and \(\mathscr {R}(\omega _{j})\) is a rotation matrix with angle \(\omega _{j}= 2 \pi f_j /F_s\)

and

As previously stated, the phase \(\phi _t^j\) and amplitude \(A_t^j\) of each oscillation are obtained using the latent vector polar coordinates:

Each oscillation has a broad-band power spectral density (PSD) with a peak at frequency \(f_j\). The parametric expression for this PSD is derived in the Supplementary Materials S1.

Setting \(M=\left[ \begin{matrix} 1&0&1&0 \end{matrix} \right]\), \(x_t= [x^{\text {s} \intercal }_{t} x^{\text {f} \intercal }_t]^\intercal\), and Q and \(\Phi\) to be block diagonal matrices whose blocks are \(Q_j\) and \(a_j \mathscr {R}( \omega _j)\), respectively, we find the canonical state space of Eq. (3).

Given the observed signal \(y_t\), we aim to estimate both the hidden oscillations \(x_t\) and their generating parameters \((\Phi , Q, R)\). We do so using an Expectation-Maximization (EM) algorithm (see Supplementary Materials S1 for a more general derivation). The hidden oscillations \(x_t\) are estimated in the E-step of the EM algorithm using the Kalman filter and fixed-interval smoother51, while the generating parameters are estimated in each iteration of the M-step.

Phase amplitude coupling model

Standard processing using bandpass filters and the Hilbert transform

Standard approaches for PAC analysis follow a procedure described in Tort, et al.24, which we briefly summarize here. The raw signal \(y_t\) is first bandpass filtered to isolate slow and fast oscillations. A Hilbert transform is then applied to estimate the instantaneous phase of the slow oscillation \(\phi ^{\text {s}}_t\), and instantaneous amplitude of the fast oscillation \(A^{\text {f}}_t\). At time t, the alpha amplitude \(A^{\text {f}}_t\) is assigned to one of (usually 18) equally spaced phase bins of length \(\delta \psi\) based on the instantaneous value of the slow oscillation phase: \(\phi ^{\text {s}}_t\). The histogram is constructed over some time window T of observations, for instance a \(\sim\) 2 min epoch, which yields the phase amplitude modulogram (PAM)40:

For a given window T, PAM(T,.) is a probability distribution function which assesses how the fast oscillation amplitude is distributed with respect to the slow oscillation phase. The strength of the modulation is then usually measured with the Kullback–Leibler divergence with a uniform distribution. It yields the Modulation Index (MI):

Finally, under this standard approach, surrogate data such as random permutations are used to assess the statistical significance of the observed MI. Random time shifts \(\Delta t\) are drawn from a uniform distribution whose interval depends on the problem dynamics40 and phase amplitude coupling is estimated using the shifted fast amplitudes \(A^{\text {f}}_{t-\Delta t}\) and the original slow phase \(\phi ^{\text {s}}_t\). The MI is then calculated for this permuted time series, and the process is repeated to construct a null distribution for the MI. The original MI is deemed significant if it is bigger that 95% of the permuted values. Overall, this method requires that the underlying process remains stationary for sufficiently long so that the modulogram can be estimated reasonably well and so that enough comparable data segments can be permuted in order to assess significance.

Parametric phase amplitude coupling

To improve statistical efficiency, we introduce a parametric representation of PAC. For a given window, we consider the following (constrained) linear regression problem:

where \(\beta = [ \begin{matrix} \beta _0&\beta _1&\beta _2 \end{matrix} ]^\intercal\), \(X(\phi ^{\text {s}}_t) = \left[ \begin{matrix} 1&\cos (\phi ^{\text {s}}_t)&\sin (\phi ^{\text {s}}_t) \end{matrix} \right]\) and \(W(\overline{K}) = \{ \beta \in \mathbb {R}^3 | \sqrt{\beta _1^2 + \beta _2^2} < \beta _0 \overline{K}\}\). If we define

we see that Eq. (21) is equivalent to:

Setting \(\overline{K}=1\) ensures that the model is consistent, i.e., that the modulation envelope cannot exceed the amplitude of the carrier signal. But this can be a computationally expensive constraint to impose. If the data have a high signal to noise ratio so that \(K^{\text {mod}}\) is unlikely to be greater than 1 by chance, we could also choose to solve the unconstrained problem (\(\overline{K}= + \infty\)). Under the constrained solution, the posterior distribution for \(\beta\) is a truncated multivariate t-distribution47:

The likelihood, conjugate prior, posterior parameters \(\overline{\beta }, V, b, \nu\), and the normalizing constant Z are justified and derived in Supplementary Material S1. We refer to this estimate as State Space PAC (SSP) and we note

Posterior sampling

The standard approach relies on surrogate data to determine statistical significance, which decreases its efficiency even further. Instead, we estimate the posterior distribution \(p(\beta | \{ y_t \}_t)\) from which we obtain the credible intervals (CI) of the modulation parameters \(K^{\text {mod}}\) and \(\phi ^{\text {mod}}\). To estimate the posterior distribution, we sample from the posterior distributions given by (i) the state space oscillator model and (ii) the parametric PAC model.

(i) The Kalman Filter used in the \(r{th}\) E-Step (see Supplementary Materials S1) of the EM algorithm provides the following moments, for \(t,t'=1\ldots N\):

Therefore, we can sample \(l_1\) times series: \(\mathscr {X} = \{ \mathscr {X}_{t} \}_{t=1}^N\) using

where P is a \(4N \times 4N\) matrix whose block entries are given by \((\mathcal {{\textbf {P}}})_{tt'}= P_{t,t'}^N\).

(ii) For each \(\mathscr {X}\), we use Eq. (18) to compute the resampled slow oscillation’s phase \(\varphi\) and fast oscillation’s amplitude \(\mathscr {A}\). We then use Eq. (24) to draw \(l_2\) samples from \(p \left( \beta | \mathscr {A},\varphi \right)\). As a result, we produce \(l_1 \times l_2\) samples to estimate:

We finally construct CI around \(\beta ^{\text {{\tiny SSP}}}\) using an \(L_2\) norm and in turn derive the CI of \(K^{\text {mod}}\) and \(\phi ^{\text {mod}}\) (Fig. 1h,i).

A second state-space model to represent time-varying PAC

We segment the time series into multiple non-overlapping windows of length N to which we apply the previously described analysis. We hence produce \(\{ \beta _{_T}^{\text {{\tiny SSP}}} \}_{_T}\), a set of vectors in \(\mathbb {R}^3\) accounting for the modulation where T denotes a time window of length N.

A second state-space model can be used to represent the modulation dynamics. Here we fit an autoregressive (AR) model of order p with observation noise to the modulation vectors \(\beta _{_T}^{\text {{\tiny SSP}}}\) across time windows. It yields the double State Space PAC estimate (dSSP):

We proceed by solving and optimizing Yule-Walker type equations numerically (see Supplementary Materials S1) and we select the order p with Bayesian Information Criterion30. Finally, we can use the fitted parameters to filter the \(l_1 \times l_2\) resampled parameters to construct a CI for \(\{ \beta _{_T}^{\text {{\tiny SSP}}} \}_{_T}\) when necessary.

Equivalence

To better compare standard techniques with the SSP, we derive an approximate expression for the PAM under our parametric model (Supplementary Materials S1). For a window T:

Initialization of the expectation maximization (EM) algorithm

Although EM ensures convergence, the log likelihood which is to be maximized is not always concave22. To address this issue, Matsuda and Komaki initialize a signal composed of d oscillations with the parameters of the best autoregressive (AR) process of order \(p \in [|d, 2d|]\). Nevertheless, because of the electrophysiological signal’s aperiodic component, such procedure might bias the initialization. Indeed, the aperiodic components are usually described by a \(1/f^{\chi }\) power-law function45,46 which might be regressed by the AR process. In such cases, the initialization could fail to account for an actual underlying oscillation.

To help mitigate this potential problem, we adapt Haller, Donoghue and Peterson’s FOOOF algorithm27 to the state space oscillation framework. Our initialization algorithm aims to disentangle the oscillatory components from the aperiodic one before fitting the resulting spectra with the parametric PSD of the oscillation (Eq. 44). All fits in this initialization procedure use interior point methods to minimize \(L_2\) norms.

The power spectral density (PSD) for the observed data signal \(y_t\) is estimated using the multitaper method38. We set the frequency resolution \(r_f\) (typically to 1 Hz) which yields the time bandwidth product \(\text {TW} = \frac{r_{f}}{2} \frac{N}{F_s}\). The number of taper K is then chosen such that \(K<< \left\lfloor 2\text {TW}\right\rfloor -1\).

First of all, we estimate the observation noise \(R_0\) (used to initialized R) using:

and we remove this offset from the PSD.

Regressing out the non oscillatory component

The aperiodic signal PSD in dB, at frequencies f is then modeled by:

\(\chi\) controls the slope of the aperiodic signal, \(g_0\) the offset and \(f_0\) the ”knee” frequency. A first pass fit is applied to identify the frequencies corresponding to non oscillatory components: only \(f_0\) is fitted while \(\chi\) and \(g_0\) are respectively set to \(\chi = 2\) and \(g_0 = \text {PSD}(f=0)\) (Fig. 9a). We fix a threshold (typically 0.8 quantile of the residual) to identify frequencies associated to the aperiodic signals (Fig. 9b).

A second pass fit is then applied only on those frequencies from which we deduce \(g_0\), \(f_0\) and \(\chi\) (Fig. 9c). We remove g(f) from the raw PSD in dB and use it for the second step of the algorithm (Fig. 9d).

Steps for the initialization procedure. A first pass fit is applied to the raw multitaper power spectral density (PSD) estimate (a). We remove this fit from the raw PSD and fix a threshold to identify non-oscillatory components (b). A second pass fit is applied (c) which yields a redressed PSD (d). We then fit the parametric expression of the PSD for a fixed number of oscillations (d). The fitted parameters are then used to initialize the EM algorithm.

Oscillation initialization

From the redressed PSD, we fit a given number \(d_0\) (e.g \(d_0 =4\)) of independent oscillations using the theoretical PSD given (Eq. 44). To do so, we identify PSD peaks of sufficient width (wider than \(r_f/2\)) before fitting an oscillation theoretical spectra in a neighborhood of width \(2r_f\) around this peak. For oscillation j, we deduce \((f_j)_0\), \((a_j)_0\) and \((\tilde{\sigma }^2_j)_0\). Since \((\tilde{\sigma }^2_j)_0\) represents the offset of a given oscillation after removing the aperiodic component, we adjust it to estimate \(\sigma ^2_j\):

The resulting spectra \(\text {PSD}_j\) are then subtracted and the process is repeated until all oscillations are estimated (Fig. 9e, blue). We finally estimate the power P\(_j\) of an an oscillation j in the neighborhood of \((f_j)_0\) and estimate its contribution to the total power P\(_0\) by \(- 10 \log _{10} \left( 1- \frac{\text {P}_j}{\text {P}_0 + 2r_fR_0/F_s} \right)\).

Oscillation are sorted and the resulting parameters are used to initialize the EM algorithm with the \(d \in [|1, d_0|]\) first oscillations.

Code availability

Code will be made public after publication.

References

Buzsáki, G., Anastassiou, C. A. & Koch, C. The origin of extracellular fields and currents: EEG, ECoG, LFP and spikes. Nat. Rev. Neurosci. 13(6), 407–420 (2012).

Canolty, R. T. et al. Oscillatory phase coupling coordinates anatomically dispersed functional cell assemblies. Proc. Natl. Acad. Sci. 107(40), 17356–17361 (2010).

Tort, A. B. L., Kramer, M. A., Thorn, C., Gibson, D.J., Kubota, Y., Graybiel, A. M., & Kopell, N.J. Dynamic cross-frequency couplings of local field potential oscillations in rat striatum and hippocampus during performance of a T-maze task. Proc. Natl. Acad. Sci., 0810524105 (2008).

Tort, A. B. L., Komorowski, R. W., Manns, J. R., Kopell, N. J., Eichenbaum, H. Theta–gamma coupling increases during the learning of item–context associations. Proc. Natl. Acad. Sci. 106(49), 20942–20947 (2009).

Axmacher, N. et al. Cross-frequency coupling supports multi-item working memory in the human hippocampus. PNAS 107(7), 3228–3233 (2010).

Lisman, J. E. & Idiart, M. A. Storage of 7 +/- 2 short-term memories in oscillatory subcycles. Science 267(5203), 1512–1515 (1995).

Lisman, J. E. & Jensen, O. The theta-gamma neural code. Neuron 77(6), 1002–1016 (2013).

López-Azcárate, J. et al. Coupling between beta and high-frequency activity in the human subthalamic nucleus may be a pathophysiological mechanism in Parkinson’s disease. J. Neurosci. 30(19), 6667–6677 (2010).

Shimamoto, S. A. et al. Subthalamic nucleus neurons are synchronized to primary motor cortex local field potentials in Parkinson’s disease. J. Neurosci. 33(17), 7220–7233 (2013).

de Hemptinne, C. et al. Exaggerated phase-amplitude coupling in the primary motor cortex in Parkinson disease. PNAS 110(12), 4780–4785 (2013).

Moran, L. V., & Elliot Hong, L. High vs low frequency neural oscillations in Schizophrenia. Schizophr. Bull. 37(4), 659–663 (2011).

Kirihara, K., Rissling, A. J., Swerdlow, N. R., Braff, D. L. & Light, G. A. Hierarchical organization of gamma and theta oscillatory dynamics in Schizophrenia. Biol. Psychiat. 71(10), 873–880 (2012).

Miskovic, V. et al. Changes in EEG cross-frequency coupling during cognitive behavioral therapy for social anxiety disorder. Psychol. Sci. 22(4), 507–516 (2011).

Allen, E. A. et al. Components of cross-frequency modulation in health and disease. Front. Syst. Neurosci. 5, 59 (2011).

Fiebelkorn, I. C. & Kastner, S. A Rhythmic Theory of Attention. Trends Cogn. Sci. 23(2), 87–101 (2019).

Börgers, C., Epstein, S., & Kopell, N. J. Gamma oscillations mediate stimulus competition and attentional selection in a cortical network model. Proc. Natl. Acad. Sci.. pnas-0809511105 (2008).

Shirvalkar, P. R., Rapp, P. R. & Shapiro, M. L. Bidirectional changes to hippocampal theta-gamma comodulation predict memory for recent spatial episodes. Proc. Natl. Acad. Sci. 107(15), 7054–7059 (2010).

Palva, J. M., Monto, S., Kulashekhar, S., & Palva, S. Neuronal synchrony reveals working memory networks and predicts individual memory capacity. PNAS 107(16), 7580–7585 (2010).

Aru, J. et al. Untangling cross-frequency coupling in neuroscience. Curr. Opin. Neurobiol. 31, 51–61 (2015).

Siebenhühner, F. et al. Genuine cross-frequency coupling networks in human resting-state electrophysiological recordings. PLoS Biol. 18(5), e3000685 (2020).

Idaji, M. J., Zhang, J., Stephani, T., Nolte, G., Müller, K.-R., Villringer, A., & Nikulin, V. V. H. A method for eliminating spurious interactions due to the harmonic components in neuronal data. NeuroImage, 252, 119053 (2022).

Matsuda, T. & Komaki, F. Multivariate time series decomposition into oscillation components. Neural Comput. 29(8), 2055–2075 (2017).

Shumway, R. H. & Stoffer, D. S. An approach to time series smoothing and forecasting using the Em algorithm. J. Time Ser. Anal. 3(4), 253–264 (1982).

Tort, A. B. L., Komorowski, R., Eichenbaum, H., & Kopell, N. Measuring phase-amplitude coupling between neuronal oscillations of different frequencies. J. Neurophysiol. 104(2), 1195–1210 (2010).

Purdon, P. L., Pierce, E. T., Mukamel, E. A., Prerau, M. J., Walsh, J. L., Wong, K. F. K., Salazar-Gomez, A. F., Harrell, P. G., Sampson, A. L., Cimenser, A., Ching, S., Kopell, N. J., Tavares-Stoeckel, C., Habeeb, K., Merhar, R., & Brown, E. N. Electroencephalogram signatures of loss and recovery of consciousness from propofol. Proc. Natl. Acad. Sci. USA110(12), E1142–E1151 (2013).

Dupré la Tour, T., Tallot, L., Grabot, L., Doyère, V., van Wassenhove, V., Grenier, Y., & Gramfort, A. Non-linear auto-regressive models for cross-frequency coupling in neural time series. PLoS Comput. Biol.13(12) (2017).

Haller, M., Donoghue, T., Peterson, E., Varma, P., Sebastian, P., Gao, R., Noto, T., Knight, R. T., Shestyuk, A., & Voytek, B. Parameterizing neural power spectra. bioRxiv, page 299859 (2018).

Cole, S. R. & Voytek, B. Brain oscillations and the importance of waveform shape. Trends Cogn. Sci. 21(2), 137–149 (2017).

Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 19(6), 716–723 (1974).

Schwarz, G. Estimating the dimension of a model. Ann. Stat. 6(2), 461–464 (1978).

Kramer, M. A. & Eden, U. T. Assessment of cross-frequency coupling with confidence using generalized linear models. J. Neurosci. Methods 220(1), 64–74 (2013).

Soplata, A. E. et al. Thalamocortical control of propofol phase-amplitude coupling. PLoS Comput. Biol. 13(12), e1005879 (2017).

Gaskell, A. L., Hight, D. F., Winders, J., Tran, G., Defresne, A., Bonhomme, V., Raz, A., Sleigh, J. W., Sanders, R. D., & Hemmings, H. C. Frontal alpha-delta EEG does not preclude volitional response during anaesthesia: Prospective cohort study of the isolated forearm technique. Br. J. Anaesth. 119(4), 664–673 (2017).

Brown, E. N., Purdon, P. L., Akeju, O. & An, J. Using EEG markers to make inferences about anaesthetic-induced altered states of arousal. Br. J. Anaesth. 121(1), 325–327 (2018).

Brown, E. N., Purdon, P. L. & Van Dort, C. J. General anesthesia and altered states of arousal: A systems neuroscience analysis. Annu. Rev. Neurosci. 34, 601–628 (2011).

Purdon, P. L., Sampson, A., Pavone, K. J. & Brown, E. N. Clinical Electroencephalography for Anesthesiologists: Part I: Background and Basic Signatures. Anesthesiology 123(4), 937–960 (2015).

Canolty, R. T. & Knight, R. T. The functional role of cross-frequency coupling. Trends Cogn. Sci. 14(11), 506–515 (2010).

Babadi, B. & Brown, E. N. A review of multitaper spectral analysis. IEEE Trans. Biomed. Eng. 61(5), 1555–1564 (2014).

Brown, E. N., Lydic, R. & Schiff, N. D. General anesthesia, sleep, and coma. N. Engl. J. Med. 363(27), 2638–2650 (2010).

Mukamel, E. A., Wong, K. F., Prerau, M. J., Brown, E. N., & Purdon, P. L. Phase-based measures of cross-frequency coupling in brain electrical dynamics under general anesthesia. Conf. Proc. IEEE Eng. Med. Biol. Soc. 1981–1984 (2011).

Canolty, R. T. et al. High gamma power is phase-locked to theta oscillations in human neocortex. Science 313(5793), 1626–1628 (2006).

Gregoriou, G. G., Gotts, S. J., Zhou, H. & Desimone, R. High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science 324(5931), 1207–1210 (2009).

van Elswijk, G. et al. Corticospinal beta-band synchronization entails rhythmic gain modulation. J. Neurosci. 30(12), 4481–4488 (2010).

Neske, G. T. The slow oscillation in cortical and thalamic networks: Mechanisms and functions. Front. Neural Circ.9 (2016).

Bêdard, C. & Destexhe, A. Macroscopic models of local field potentials and the apparent 1/f noise in brain activity. Biophys. J. 96(7), 2589–2603 (2009).

Pritchard, W. S. The brain in fractal time: 1/f-like power spectrum scaling of the human electroencephalogram. Int. J. Neurosci. 66(1–2), 119–129 (1992).

Davis, W. W. Bayesian analysis of the linear model subject to linear inequality constraints. J. Am. Stat. Assoc. 73(363), 573–579 (1978).

Smith, A. C., Stefani, M. R., Moghaddam, B. & Brown, E. N. Analysis and design of behavioral experiments to characterize population learning. J. Neurophysiol. 93(3), 1776–1792 (2005).

Beck, A. M., Stephen, E. P. & Purdon, P. L. State Space Oscillator Models for Neural Data Analysis. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2018, 4740–4743 (2018).

De Jong, P. & Mackinnon, M. J. Covariances for smoothed estimates in state space models. Biometrika 75(3), 601–602 (1988).

Kitagawa, G., & Gersch, W. Smoothness priors analysis of time series. Number 116 in Lecture notes in statistics. Springer, New York (1996).

Mukamel, E. A., Pirondini, E., Babadi, B., Wong, K. F., Pierce, E. T., Harrell, P.G., Walsh, J. L., Salazar-Gomez, A.F., Cash, S. S., Eskandar, E. N., Weiner, V. S., Brown, E. N., & Purdon, P. L. A transition in brain state during propofol-induced unconsciousness. J. Neurosci.34(3):839–845 (2014).

Acknowledgements

This work was generously supported by the Bertarelli Foundation (fellowship for H.S.), the National Science Foundation (predoctoral fellowship for A.M.B.), and grants from the National Institutes of Health (R01AG056015, R01AG054081, R21DA048323 to P.L.P.). Acknowledgements. We thank Adriano Tort and colleagues for sharing their rat LFP dataset.

Author information

Authors and Affiliations

Contributions

Conceptualization: H.S., P.L.P. Methodology: H.S., E.P.S, A.M.B., P.L.P. Software H.S., E.P.S, A.M.B. Formal Analysis: H.S., P.L.P. Writing: H.S., P.L.P. Funding Acquisition: P.L.P.

Corresponding author

Ethics declarations

Competing interests

P.L.P. is also a co-founder of PASCALL Systems, Inc., a start-up company developing closed-loop physiological control for anesthesiology. P.L.P., H.S., E.P.S, and A.M.B. are co-inventors for a patent application employing systems and methods described in part in this manuscript.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Soulat, H., Stephen, E.P., Beck, A.M. et al. State space methods for phase amplitude coupling analysis. Sci Rep 12, 15940 (2022). https://doi.org/10.1038/s41598-022-18475-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-18475-3

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.