Abstract

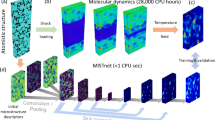

Microstructured materials that can selectively control optical properties are crucial for the development of thermal management systems in aerospace and space applications. However, due to the vast design space available for microstructures with varying material, wavelength, and temperature conditions relevant to thermal radiation, microstructure design optimization becomes a very time-intensive process that yields results only for specific and limited conditions. Here, we develop a deep neural network to emulate the outputs of finite-difference time-domain (FDTD) simulations. The network we show is the foundation of a machine learning based approach to microstructure design optimization for thermal radiation control. Our neural network differentiates materials using discrete inputs derived from the materials’ complex refractive index, enabling the model to build relationships between the microtexture’s geometry, wavelength, and material. Thus, material selection does not constrain our network and it is capable of accurately extrapolating optical properties for microstructures of materials not included in the training process. Our surrogate deep neural network can synthetically simulate over 1,000,000 distinct combinations of geometry, wavelength, temperature, and material in less than a minute, representing a speed increase of over 8 orders of magnitude compared to typical FDTD simulations. This speed enables us to perform sweeping thermal-optical optimizations rapidly to design advanced passive cooling or heating systems. The deep learning-based approach enables complex thermal and optical studies that would be impossible with conventional simulations and our network design can be used to effectively replace optical simulations for other microstructures.

Introduction

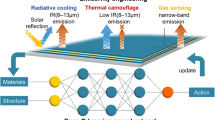

The ability to engineer how materials interact with light is at the core of developing materials that manage surface temperature via thermal radiation. Materials that can selectively emit or absorb thermal radiation can be engineered to passively cool beneath ambient temperatures1,2 or to heat radiatively3,4. Radiative heating and cooling depend on two spectral regions: visible (VIS) to near-infrared (NIR) and the mid-infrared (MIR) respectively1. Thermal absorption for a surface exposed to the sun is defined by the solar/NIR spectrum from λ = 300–2500 nm, whereas thermal emission depends on the temperature of the body5. A wide variety of topologies have been utilized for maximizing thermal absorption such as nano-domes6, corrugated surfaces4, core–shell structures7, and gratings8. Similarly, “passive-cooling structures” (surfaces that have significant thermal emission with limited solar absorption and can cool beneath ambient temperatures9) can be engineered from materials such as polymers2,10,11,12 or corrugated graphene13,14. Unlike many of the solutions to radiative heating and cooling, microscale pyramid-like (“micropyramid”) surface texturing can be used to engineer either radiative cooling or heating materials15. Periodic micropyramid texturing on a surface induces anti-reflective properties as a result of significant light confinement by the geometry16,17, and has been demonstrated to enhance absorption in silicon18,19,20,21,22,23,24,25,26, nickel3,27, and tungsten28, as well as for dielectrics29 and polymers12.

The design and optimization of textures to control light, such as micropyramids, can be a challenge as simulating across the available design space is a computationally demanding process that often requires dedicated numerical simulation software30. To compound this problem, the vast array of available materials means that for a given set of application requirements and constraints there can be a different material that is best suited to fulfill those requirements. A powerful approach that has emerged in the field of nanophotonics is the use of Deep Learning (DL) and Deep Neural Networks (DNNs) to fill the design space and to circumvent the necessity of large time investments in simulations. Inspired by the biology and architecture of the human brain, the DL methodology is capable of high levels of non-linear abstraction from datasets31. DL and Machine Learning (ML) have been used, in a broad setting, to solve complex problems ranging from machine vision for self-driving vehicles32 to automatic speech recognition33 and spacecraft system optimization34,35,36,37. In the field of optics, DL has been used recently to predict and model plasmonic behavior31,38,39,40,41,42, grating structures43,44, ceramic metasurfaces45,46, chiral materials47,48, particles and nanostructures49,50,51, and to perform inverse design31,41,50,51,52,53,54. DL has also been used extensively in the field of heat transfer for applications such as predicting thermal conductivity55,56 and thermal boundary resistance57, studying transport phenomena58, optimizing integrated circuits59, modelling boiling heat transfer60, predicting thermal-optical properties44,61,62, and addressing thermal radiation problems63,64,65,66.

Spectrally selective surface designs are heavily dependent upon material selection. The interaction of light with a surface is a process regulated by the complex refractive index of the material(s) involved67,68, and material selection is fundamental to a microstructure’s performance. Different material classes such as metals, ceramics, polymers, and dielectrics interact differently with light, and the influence of geometry and microstructure can vary significantly even for small changes in the constituent material’s complex refractive index. A polymer, for example, has a strong mid-infrared response as a function of its complex refractive index, but because its extinction coefficient is ~ 0 in the VIS–NIR, it is optically transparent at those wavelengths. To manipulate the optical properties in those wavelengths, another material needs to be included in the polymer matrix10,69. To provide a comprehensive thermal optimization, we need to be able to exhaustively search across a material catalog to find which material and geometry combination is best suited for the thermal design requirements.

In this paper we propose a methodology based on a DNN to predict the optical properties of micropyramids across a wide design space of geometries, wavelengths, and, most importantly, materials. As opposed to many other studies that provide a deep learning approach to a structure with a single material38,52, a geometry with fixed materials44,47,51, or a material input defined by one-hot encoding with a random forest50, our DNN is designed to predict the optical properties of a vast array of materials and is not constrained by material input. While there are many available machine learning methods42,50,61,70,71,72,73,74, we choose to utilize the deep neural network approach due to the method’s input flexibility, scalability, and the ability to extrapolate outputs from unseen inputs.

The model we present can predict the transmissivity, reflectivity, and emissivity of micropyramids across a diverse library of materials. Our model emulates finite-difference time-domain (FDTD) simulation outputs by predicting spectral properties for a combination of the plane-wave source wavelength, geometric properties of the texture, and material. The model differentiates materials by taking discrete material inputs derived from the complex refractive index and subsequently builds relationships between the material inputs and the geometry and wavelength to predict the transmissivity and reflectivity. From the predicted optical data, we make thermal predictions for the texture’s thermal emission and absorption performance. For a given material, there is a vast optical property design space afforded by a microstructure. By using the network to search across a library of materials, we can identify material and geometry combinations that best optimize a set of thermal conditions. We can rapidly perform exhaustive searches across a material database and geometric design space to find optimum combinations, a process that would be too computationally expensive with previous micropyramid optimization approaches. While we apply our methodology to micropyramid structures, it has wide applicability for neural network designs that can replicate and effectively replace optical simulations for metasurface and microstructured surface optimization.

Results

Model training and design

Solutions using the FDTD method, while accurate, are time consuming. Optimizing the spectral properties of a microstructure can be a challenge due to the number of simulations required. We employ a deep neural network architecture that can estimate the simulation outcome to predict optical properties rapidly and accurately. We design a network that can predict across the geometric design space of a micropyramid for a given minimum and maximum wavelength (λmin and λmax) and is capable of modeling and predicting the behavior of micropyramids constructed of an array of materials. Once the model is trained, the prediction phase is nearly instantaneous. Thus, if the model’s predictions are accurate, we can perform accurate optimizations in the span of seconds and mitigate the necessity of additional computationally expensive simulations.

Our model is trained, validated, and tested on a dataset compiled from 35,500 different simulations run in Lumerical’s commercially available 2D/3D FDTD solver. The simulation framework provides exact solutions for Maxwell’s equations across a finite element mesh and we can extract the dispersion and absorption from the results75,76. For this work, all simulations are calculated in 2D to minimize simulation time and generate large datasets for each material. 2D FDTD simulations deliver accurate results for micropyramid geometries, but slightly overestimate the emissivity when compared to a more realistic 3D simulation15. We chose to simulate our periodic micropyramid structures using FDTD instead of a semi-analytical approach such as RCWA77,78 due to the accuracy of the FDTD method as well as the scalability and applicability of FDTD beyond the geometrically simple structures shown in this work. The simulations are based upon the geometry shown in Fig. 1, with three key independent geometric parameters: the triangle base span (xspan), height (zspan), and substrate thickness (tsub). We utilize periodic boundary conditions for our simulations: the structure shown in Fig. 1 occupies the entire unit cell. Additionally, as micropyramids have been shown to demonstrate omnidirectional optical properties3,15,18,26, we do not vary the source angle of incidence or polarization. For this work we assume that Kirchhoff’s law is valid and the emissivity we calculate from the simulations is derived from α = ε = 1 – R – T, where reflectivity (R) and transmissivity (T) are calculated from power monitors above and below the domain respectively and where absorptivity (α) is synonymous with emissivity (ε). For each material, we generate a uniformly distributed random matrix of xspan, zspan, and tsub variables and run the simulation with a plane-wave injection source that ranges from λmin to λmax. Details on the dataset distributions can be found in the supplementary materials. The size of the randomly generated geometric property matrix corresponds to the number of simulations, with the randomness generally ensuring that simulations in a dataset have a unique combination of the three geometric variables. While the xspan and zspan coordinates are randomly generated with values ranging from 0 to 10 µm, the ranges of tsub and of λmin to λmax are selected based on the material. A detailed description of the simulation domain and setup is in the Methods section. Additionally, our simulations assume that there is no surface roughness or additional hierarchy to maintain simulation simplicity.
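As an illustration of the dataset construction described above, the following is a minimal sketch and not the authors' code. It draws uniformly distributed geometry triples and applies the Kirchhoff relation α = ε = 1 – R – T. The function names, the seed, and the substrate thickness range are hypothetical placeholders; the text only states that the tsub range is chosen per material.

```python
import random

def emissivity(reflectivity, transmissivity):
    """Kirchhoff's law as used in the text: alpha = epsilon = 1 - R - T."""
    return 1.0 - reflectivity - transmissivity

def sample_geometries(n_sims, tsub_range, seed=0):
    """Draw uniformly distributed (xspan, zspan, tsub) triples in micrometres.

    xspan and zspan use the 0-10 um range stated in the text.
    tsub_range is material dependent; the value passed below is a placeholder.
    """
    rng = random.Random(seed)
    return [
        (rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0), rng.uniform(*tsub_range))
        for _ in range(n_sims)
    ]

# Five hypothetical simulations for one material.
geometries = sample_geometries(n_sims=5, tsub_range=(1.0, 100.0))

# R and T would come from the FDTD power monitors; these values are made up.
eps = emissivity(reflectivity=0.12, transmissivity=0.03)
```

Because the three variables are drawn from continuous distributions, repeated combinations are very unlikely, which matches the remark that randomness generally ensures unique geometries.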

The geometric input parameters used in simulations and input into the DNN are the independent parameters xspan, zspan, tsub. We assume periodic boundary conditions for a unit cell that contains a single micropyramid with the specified geometric parameters. We divide the wavelength spectrum used in simulation into a set of single inputs. Each wavelength point has a set of λ-dependent n, k, εreal, and εim. The material properties are used as inputs to one multi-layer perceptron (MLP) and the geometric properties/wavelength are grouped as inputs for another MLP. The MLPs concatenate and connect to a larger DNN structure. The outputs of the DNN are a reflectivity and transmissivity point corresponding to λ.

The architecture of the neural network shown in Fig. 1 is designed to emulate the critical simulation inputs that influence the computed optical properties. In total, our network employs 8 inputs: xspan, zspan, tsub, λ, n, k, εreal, εim. These inputs follow three classifications: geometric parameters, wavelength, and material data. The geometric parameters are xspan, zspan, and thickness of the substrate under the surface texture (tsub). We include the substrate thickness to capture the optical property behavior with respect to the thickness so that our model can more accurately interpret and predict the behavior of transmissive materials. The second input classification is the injection wavelength (λ). The wavelength is the fundamental determining factor that links the output and material data together. In an FDTD simulation, each frequency/wavelength point we solve at has a corresponding set of optical properties (ε, R, T), so to emulate that behavior we utilize a single wavelength point as a network input. The solution to Maxwell’s equations is not sequentially dependent, meaning that we can separate a large, simulated wavelength spectrum into smaller groupings of inputs for the neural network. Previous network designs employed the full simulation wavelength spectra and the corresponding wavelength dependent material properties, but we found that dividing the full-spectrum simulations into single wavelength inputs yields much more accurate results. Details on our design iteration can be found in the supplementary materials.
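The splitting of one full-spectrum simulation into single-wavelength network inputs can be sketched as follows. This is a hedged illustration, not the authors' pipeline: the function names and the optical constants are made up, input normalization is omitted, and the permittivity is computed from the standard relation ε = (n + ik)², which assumes a non-magnetic material.

```python
def permittivity(n, k):
    """Real and imaginary permittivity from the complex refractive index.

    Uses (n + ik)^2 = (n^2 - k^2) + i(2nk), valid for non-magnetic materials.
    """
    return n * n - k * k, 2.0 * n * k

def build_inputs(xspan, zspan, tsub, spectrum):
    """Turn one simulation into one 8-element input row per wavelength point.

    spectrum is a list of (wavelength, n, k) tuples for the chosen material.
    """
    rows = []
    for wavelength, n, k in spectrum:
        eps_real, eps_im = permittivity(n, k)
        rows.append([xspan, zspan, tsub, wavelength, n, k, eps_real, eps_im])
    return rows

# Made-up optical constants at three wavelengths, for illustration only.
spectrum = [(0.5, 2.0, 3.0), (1.0, 2.5, 4.0), (2.0, 3.0, 5.0)]
rows = build_inputs(xspan=2.0, zspan=4.0, tsub=10.0, spectrum=spectrum)
```

Each row is independent of the others, which reflects the point that the solution at one wavelength does not depend on the neighbouring wavelengths.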

Correspondingly, the FDTD method uses the complex refractive index to differentiate between materials. At each wavelength point of the solution, there is a matching refractive index value (n) and extinction coefficient (k). The third grouping of the neural network’s input parameters, the material properties, enables the DNN to differentiate materials in a similar way to how an FDTD simulation would. To strengthen the connection between material properties and the output, we include two correlated parameters, the real (εreal) and imaginary (εim) permittivity, shown in Fig. 1. Compared to using only normalized n and k inputs to differentiate materials, the inclusion of the correlated parameters strengthens the connections between the material input and the output optical properties, enabling higher prediction accuracy for materials not included in the training of the model. The output of the neural network is the reflectivity and transmissivity that correspond to the wavelength input and material/geometric properties. This design emulates the output of the power monitors used in the FDTD simulations. Predicting all three optical properties is unnecessary because, assuming Kirchhoff’s law is applicable, we calculate the emissivity from the other two properties. To further enhance the connection between input and output, we utilize two smaller multi-layer perceptron (MLP) architectures that allow the model to build connections with the geometry/wavelength and wavelength/material properties respectively. The uncoupled MLP structures are implemented to increase the connections between the inputs and to develop separate non-linear relationships between the key independent parameter (λ) and the geometric information and the material information. The concatenated output of the MLPs is fed as an input to the larger, fully connected sequential DNN structure. In our design process, we have found that this methodology leads to increased accuracy in extrapolating optical properties for new materials.
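The two-branch layout described above can be sketched as a plain-Python forward pass. This is only a structural illustration under stated assumptions: the layer widths, the ReLU and sigmoid activations, and the random weights are all placeholders chosen here, not the architecture or hyperparameters reported in the Methods section.

```python
import math
import random

def dense(inputs, weights, biases, activation):
    """Fully connected layer: activation(W x + b) for a single input vector."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_layer(n_in, n_out, rng):
    """Random placeholder weights; a trained model would learn these."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
geom_layer = init_layer(4, 8, rng)     # xspan, zspan, tsub, wavelength
mat_layer = init_layer(5, 8, rng)      # wavelength, n, k, eps_real, eps_im
trunk_layer = init_layer(16, 16, rng)  # receives the concatenated branches
out_layer = init_layer(16, 2, rng)     # reflectivity, transmissivity

def predict(row):
    """row = [xspan, zspan, tsub, wavelength, n, k, eps_real, eps_im]."""
    geom_in = row[:4]
    mat_in = row[3:]  # the wavelength is shared by both branches
    merged = dense(geom_in, *geom_layer, relu) + dense(mat_in, *mat_layer, relu)
    hidden = dense(merged, *trunk_layer, relu)
    return dense(hidden, *out_layer, sigmoid)

reflectivity, transmissivity = predict([2.0, 4.0, 10.0, 0.5, 2.0, 3.0, -5.0, 12.0])
```

The sigmoid output is one assumed way to keep each prediction between 0 and 1; it does not by itself guarantee that R + T stays below 1.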

The DNN is trained using the FDTD-generated datasets, allowing it to learn and predict the non-linear relationships between the input geometry, wavelength, material properties, and the output spectra. The simulation data is divided into three separate subgroups: training, validation, and testing, which carry a 70/20/10 split respectively. We use the training and validation data in the model generation process. The test dataset, unseen during training, is used to evaluate the performance and accuracy of the network in interpolating optical properties for new geometric and wavelength combinations. The training/test datasets encompass simulations from 14 different materials of widely varying complex refractive index, including metals (Ni/Ag/Al/Cr/Fe/Sn)79,80,81, refractory metals (Ta/W)79,82, a phase-change material (VO2 Metallic/Insulating)83, a polymer (PDMS)84, a semiconductor (SiC)85, a ceramic (SiO2)79, and a material with a near zero extinction coefficient across a wide spectrum (Diamond)86. The network predictions vs the simulation results for the test dataset are shown in Fig. 2a,b. The diverse set of materials enables the network to interpret a wide range of n and k inputs, including extreme values, during the training process. The values of the complex refractive index are plotted in Fig. 2c to highlight the differences between the materials in the training/validation/test datasets.

(a) Neural network predictions of the optical properties compared to the properties obtained from FDTD simulations, plotted for the test dataset. (b) Average mean absolute error (MAE) by material for reflectivity (orange) and transmissivity (blue), shown separately for the test dataset. No error exceeds 0.01, and the overall test dataset has an MAE of 0.0034. (c) The extinction coefficient (k) vs the refractive index (n) for all of the materials included in the test dataset, highlighting the differences between the materials used in the training of the network.

The test dataset does not contain new material data, but it includes geometric combinations that the model has not seen in training. Our model predicts, with high accuracy, the optical properties of new geometric/wavelength combinations for materials included in the training process. The error between the prediction and simulation values is plotted in Fig. 2a and broken down by material in Fig. 2b. The mean absolute errors (MAE) for the training and validation sets are 0.0034 and 0.0035 respectively. These correspond to mean squared errors (MSE) for the training/validation datasets of 1.22e−4 and 1.34e−4 respectively. The test dataset has an MAE and MSE of 0.0034 and 1.53e−4 respectively. While some outlier predictions do exist, as visualized in Fig. 2a, the error by material in Fig. 2b validates that our model is interpolating the optical properties for “seen” materials with high efficacy. An apparent relationship from Fig. 2b is that transmissive materials show a larger error in the predicted transmission, and metallic materials show an increased error in reflection. This is a manifestation of the role of the extinction coefficient, with a high extinction coefficient leading to reflection dominated optical properties and a low extinction coefficient leading to transmission dominated optical properties. For some materials with a low extinction coefficient (k << 1), geometry has little to no influence on the reflection and tsub is the only geometric parameter that determines the optical properties. This relationship necessitates that the design of the network correctly connects the material properties, wavelength, and geometry to make accurate predictions for any arbitrary material not included in training.
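For reference, the two error metrics quoted above can be computed per output as in the short sketch below. The reflectivity values are made up for illustration and are not taken from the datasets in this work.

```python
def mae(pred, true):
    """Mean absolute error between predictions and reference values."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)

def mse(pred, true):
    """Mean squared error between predictions and reference values."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)

# Made-up reflectivity values: FDTD reference vs network prediction.
r_fdtd = [0.10, 0.20, 0.30, 0.40]
r_dnn = [0.11, 0.19, 0.30, 0.42]

r_mae = mae(r_dnn, r_fdtd)  # about 0.01
r_mse = mse(r_dnn, r_fdtd)  # about 1.5e-4
```

Because MSE squares each deviation, a handful of outliers raises it more than it raises MAE, which is one reason to report both.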

The small differences in error between the test dataset and the training/validation datasets verify that our network can predict the optical properties for inputs within the design limits with a high degree of accuracy. Additionally, this minimal difference allows us to conclude with a high degree of certainty that our model is not overfitting during training. We validate this assumption by examining the overlap in geometric parameters between the test and training/validation datasets, shown in the supplementary materials. We discuss the precise architecture, hyperparameter optimization, network parameters, and architecture optimization process of the model used to achieve these results in the Methods section.

Optical predictions for select materials unseen in training

The network’s input design, with distinct material inputs, wavelength, and geometric parameters, enables our network to dynamically predict the optical spectra of micropyramids made of materials that are not included in the training process. We first test our network’s capacity to predict the optical properties of new materials with two new datasets: a metal (Titanium)79 and a ceramic (Alumina, Al2O3)87 dataset comprised of 1500 simulations each. These materials are not used in the training or validation process, and they were explicitly chosen because the complex refractive index values of Ti and Al2O3 differ significantly from those of the materials used in training. Comparisons of the refractive indices used in training to those used to predict the titanium and alumina datasets are shown in the supplementary materials. These datasets were generated with the same methodology as before and each simulation has a unique combination of tsub, xspan, and zspan. After making predictions with a trained neural network that does not include any titanium or alumina data in training, we generate a different model that includes 10 randomly selected simulations from each of the alumina and titanium datasets (< 1% of the simulations) to compare the prediction accuracy when a small amount of data is included in the training process.

Figure 3a,b plots the optical properties computed by FDTD simulation vs the neural network predictions. The MAE between prediction and simulation for the alumina and titanium datasets is 0.0175 and 0.0131 respectively. Broken down by individual output, the reflectivity MAE is (0.026, 0.0063) and the transmissivity MAE is (6.01e−5, 0.028) for titanium and alumina respectively. The error in the reflectivity and transmission mirrors the results in Fig. 2b: metallic materials have reflection driven optical properties that vary with geometry and show a very low error in transmission. Conversely, the relationship between the substrate thickness and extinction coefficient of alumina leads to non-zero transmission, with geometry playing a reduced role in determining the reflection and transmission properties. In Fig. 3c,d we compare the absolute difference between the neural network and FDTD predicted emissivity for the alumina and titanium datasets. Across both the geometric and wavelength space, we observe a high degree of accuracy in the neural network predictions. The exception to this is a significant deviation in the titanium dataset (Fig. 3d) that occurs in a region with high material/geometry specific resonance. Similarly, the network slightly underpredicts the role of transmission in Al2O3, leading to the observed prediction differences. Despite these differences, the model is clearly able to differentiate materials in a meaningful way and extrapolate accurately beyond the dataset used in training.

Neural-network predictions for two materials (Ti/Al2O3) that are not used in the training process. (a, b) The predicted optical properties vs. the FDTD computed properties, with and without 10 simulations included in training for alumina and titanium. Surface plots of the absolute error between prediction and simulation with no simulations included (c, d) and with simulations included in training (e, f). The wavelength is on the x-axis and the geometric information is visualized with the aspect ratio on the y-axis. Including 10 simulations (< 1% of the dataset) dramatically reduces the error for alumina to a near zero value across all wavelengths and aspect ratios. For Ti, the resonance driven peaks in the low-aspect ratio structures are reduced and the error in all other sections becomes approximately zero.

To improve the prediction accuracy, we examine what occurs when we include a seemingly trivial amount of simulation data from the “unseen” materials in the training process. We select 10 simulations at random from each of the Ti and Al2O3 datasets (< 1%) and include them in the training/validation/test datasets. Figure 3a,b and Fig. 3e,f underscore that the small inclusion of data has a large impact on the prediction accuracy. The overall MAE becomes (0.0073, 0.0049), while the reflectivity MAE improves to (0.014, 0.004) and the transmissivity MAE improves to (7.69e−5, 0.0058) for titanium and alumina when 10 simulations of each are included in the training dataset. These error values are close to those shown in Fig. 2b for the materials in the test dataset, indicating that only a small amount of simulation data is required to calibrate the model for a new material. Figure 3e,f demonstrates that even this small amount of data, while not enough to completely remove error, effectively reduces prediction error throughout, even in the highly erroneous resonant region of Ti. While the prediction accuracy for completely unseen data is already high, including a small number of simulations aligns the accuracy of the “unseen” materials with the accuracy of the much larger datasets included in training.

Optical predictions for a library of materials unseen in training

To further demonstrate the capability of our model to provide accurate optical predictions for microstructures made of materials outside of the scope of the model’s training, we compare the network’s predictions to simulation results for 23 additional materials that were not seen in the training process. As many of these materials require much more time to simulate each geometric combination, we only perform 100 simulations for each material, for a total of 2300 additional simulations. The materials included in this library range dramatically in material properties, with the complete material list and compilation of prediction accuracy shown in Table 1.

The error between the predicted optical properties and simulated optical properties for the unseen material library is plotted in Fig. 4a, with the errors shown in more detail in Table 1. The only material with an MAE > 0.1 is TiO2, with a transmission prediction error of 0.2003. Low extinction coefficient materials generally exhibit more error in transmission and high extinction coefficient materials exhibit a larger error in reflection. The results indicate that while the neural network does not perfectly replicate the physics of the FDTD simulations, it is nevertheless accurate in making predictions for materials that vary significantly from those used in training: the overall mean absolute error across all 23 materials is 0.0279.

MAE for the transmission and reflection predictions compared to FDTD simulations for the 23 unseen library materials. (a) Plotted error when the materials are completely “unseen” and (b) after 5 simulations for each material are included in the training/testing/validation process. The log-scaled and then linearly normalized average extinction coefficient is shown on the z-axis, pointing to the role of the material in predicting where the error will occur. The error’s (x,y) distance from an MAE of zero is shown with the color bar. Including 5 simulations systematically reduces the prediction error for the rest of the dataset, indicating that very little data is needed to calibrate the model for new materials and obtain accurate predictions.

We can improve this accuracy and calibrate the model by including a small number of simulations in the training/validation/test datasets. Here, we choose 5 random simulations from each of the 100 to include in the training/validation/test datasets. After training the model on this data, we show the improvement to the predictions in Fig. 4b. Despite using only 5% of the simulations contained in these materials’ datasets, the small calibration data has removed much of the error on every material. The combined MAE score after 5 simulations are included in the training process is 0.0118. The increase in accuracy provides further validation that our model has connected the inputs to the outputs via the simulation physics well enough that it only requires a small amount of calibration data to produce extremely accurate results across the rest of a material’s latent design space.
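The calibration step amounts to moving a few randomly chosen simulations of a new material into the training pool and evaluating on the remainder. The sketch below illustrates that selection for one material with 100 simulations; the function name, the integer simulation identifiers, and the seed are hypothetical.

```python
import random

def split_calibration(sim_ids, n_calibration, seed=0):
    """Pick n_calibration simulations at random; the rest stay held out."""
    rng = random.Random(seed)
    calibration = set(rng.sample(sim_ids, n_calibration))
    held_out = [s for s in sim_ids if s not in calibration]
    return sorted(calibration), held_out

# 100 simulations for one unseen material, identified by index.
sim_ids = list(range(100))
calibration_ids, held_out_ids = split_calibration(sim_ids, n_calibration=5)
```

Keeping the held-out list disjoint from the calibration list is what allows the error reduction to be attributed to generalization across the material's remaining geometries.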

Material selection algorithm and thermal optimization

We apply the strength of our network architecture by using it to make optical predictions for a library of materials. We use the resulting optical predictions from the neural network to perform thermal optimization and search for the material and geometry that best optimize our selected thermal conditions. In total, we pass along 41 materials into the trained neural network. The materials cross a wide spectrum and include all the materials that were included in training, titanium/alumina, and the 23 other materials that are unseen by the network during training.

To fully demonstrate the speed of our network and how comprehensive we can be in searching the latent design space, we generate a grid of coordinates (xspan, zspan) that span from 0 to 10 µm across both axes in increments of 0.1 µm, for a total of 10,000 geometric coordinate pairs for each material. Over all 41 materials, this corresponds to a total of 410,000 synthetic optical simulations. For each of these simulations, there are 100 wavelength points, for a total of 41 million sets of inputs to the network. The network requires approximately 25–40 s to predict the optical properties across all 1,000,000 synthetic simulation input sets for each material. In total, the network requires 15 to 20 min to make predictions for all 41 materials. Each individual DNN approximated simulation requires anywhere from 30 to 40 ms on our computer. The output encompasses a total of 82 million datapoints for all 41 materials. The remarkable speed of prediction underscores our motivation to use a neural network to mostly supplant FDTD simulations, as the trained network can comprehensively predict the optical spectra of a library of materials in minutes.
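The input counts quoted above follow from simple multiplication, as the sketch below shows. It assumes each axis runs from 0.1 to 10 µm in 0.1 µm steps (100 points per axis), which is what the stated total of 10,000 coordinate pairs implies; the variable names are illustrative.

```python
n_materials = 41
n_wavelengths = 100

# 0.1, 0.2, ..., 10.0 um per axis; integer steps avoid floating-point drift.
axis = [i / 10 for i in range(1, 101)]
grid = [(xspan, zspan) for xspan in axis for zspan in axis]

n_geometries = len(grid)                             # 10,000 per material
inputs_per_material = n_geometries * n_wavelengths   # 1,000,000
total_inputs = inputs_per_material * n_materials     # 41,000,000
total_outputs = total_inputs * 2                     # R and T per input

# Per-input time implied by 25-40 s per material, in microseconds.
us_per_input = [s / inputs_per_material * 1e6 for s in (25, 40)]
```

The per-input figure that results is on the order of tens of microseconds.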

We then use the DNN spectral predictions to perform a material search process to identify which materials and geometries best optimize a set of imposed thermal optimization equations. The selection of the thermal optimization equation is application specific. For the sake of demonstration, the optimization we present is for high-temperature cooling. Additional optimizations using different optimization conditions are presented in the supplementary materials. The equations and thermal optimization are discussed in the Methods section. We process the thermal optimization equations for each wavelength dependent spectral property matrix to generate a figure of merit (FOM), a task that requires a significantly larger amount of computational time than the neural network’s optical predictions. Figure 5 shows the materials and Table 2 shows the geometries identified by the search process that best optimize the cooling thermal balance defined by Eqs. (2–3) at three different surface temperatures: 300, 500, and 1000 K. The definition of Eq. (3) leads to Au being the most optimal material for a single material cooling microstructure at 300 K, a result that hinges upon the role of transmission in the equation. Despite being a transmissive material, SiO2 is identified by the search algorithm as the most optimal material at 500 K. This is a result of the balance between the transmission of solar radiation and the large thermal emission at that temperature. It should be noted that we did not change the material data inputs into the network to account for temperature variation. The design of the network material inputs allows us to adapt our material data for different temperatures if a significant difference in the material properties is expected.
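The search itself is an exhaustive maximization of the figure of merit over every predicted material and geometry combination. The sketch below shows that pattern only. The figure of merit used here, a plain spectral mean of emissivity, is a stand-in chosen for illustration and is not the thermal balance from the Methods section; the material labels and spectra are made up.

```python
def search_best(candidates, figure_of_merit):
    """Return (material, xspan, zspan, FOM) for the highest-scoring candidate.

    candidates: iterable of (material, xspan, zspan, emissivity_spectrum).
    """
    best = max(candidates, key=lambda c: figure_of_merit(c[3]))
    return best[0], best[1], best[2], figure_of_merit(best[3])

def mean_emissivity(spectrum):
    """Placeholder FOM: unweighted mean emissivity over the spectrum."""
    return sum(spectrum) / len(spectrum)

# Made-up predictions for two hypothetical materials.
candidates = [
    ("material_A", 1.0, 2.0, [0.2, 0.4, 0.6]),
    ("material_A", 3.0, 6.0, [0.5, 0.7, 0.9]),
    ("material_B", 2.0, 2.0, [0.6, 0.6, 0.6]),
]
best = search_best(candidates, mean_emissivity)
```

Passing the figure of merit as a function keeps the search loop unchanged when the optimization target changes, for example between cooling and heating conditions.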

Material search algorithm identifying the optimal microstructures for cooling at surface temperatures of 300, 500, and 1000 K based on the figure of merit defined by Eqs. (5–6). Due to the role of transmission in Eq. (5), the optimal microstructure for cooling at room temperature is Au (FOM = 0.772), as typical cooling materials such as PDMS and SiO2 transmit radiation in the visible wavelengths, negating cooling for a surface below. At 500 K, we identify SiO2 micropyramids as optimal (FOM = 0.852). At 1000 K, the algorithm identifies VO2 as the best-performing micropyramid structure (FOM = 0.982) among all 41 materials that were predicted by the network. All materials are predicted assuming a minimum wavelength of 0.3 μm and a maximum wavelength of 16 μm to capture both thermal emission and solar absorption optical properties.

Discussion

In contrast to FDTD simulations, which can take anywhere from minutes to hours to run, each DNN prediction takes approximately 30–40 µs/input to predict the spectra of a geometry/material/wavelength combination. By comparison, we estimate that simulating the same 10,000-point parametric grid of geometries in FDTD on our simulation computers would require on average 1–3 months for each material. Accordingly, we estimate the neural network approach to be 6–8 orders of magnitude faster than traditional simulation methodologies. This estimate changes with the available computational resources, but a major benefit of a neural network driven approach is that an already trained model requires a minuscule quantity of resources to operate and make predictions. Our methodology is also scalable beyond the 2D micropyramid simulations we used in this work. We utilized 2D simulations so that we could more rapidly generate large training datasets, but our approach can be applied to replace or significantly reduce the reliance on simulations of more complex geometries or designs of other microstructures, increasing throughput by orders of magnitude.

While our approach cannot completely replace simulations, we dramatically reduce the necessity of computationally expensive optical simulations. The use of material information and wavelength enables the model to build connections between the inputs and the physics, providing accurate predictions for a wide variety of materials that are highly dissimilar from those used in training the model. The model we show can be used to make generally accurate predictions for a material, with an overall MAE of 0.0279 for the library of completely unseen materials. Despite this accuracy, we can make more confident predictions by including a small amount of calibration data from simulations to tune the model to new physics, resonant behavior, etc., that may be present in the new material as a function of material properties or geometry. Including merely 5 simulations in training (500 datapoints) reduces our error on the remainder of the dataset to 0.0118. This indicates that our model is not merely interpolating existing material/geometry results and is making reliable predictions for materials that vary dramatically from those used to train the network.

The speed of the network combined with the ability to predict materials unused in training facilitates explorations of the design space in ways that would be impossible with traditional simulations. Figure 5 shows several specific instances of material and/or geometry combinations that suit several generalized thermal balance equations, but there are nearly limitless combinations of temperature and environmental conditions. Our methodology allows us to define a set of thermal conditions and search hundreds of thousands of material/geometry combinations in seconds to determine which combination yields the best result.

The network can perform thermal optimization in minutes, whereas generating a similarly sized dataset to search through using FDTD would take years. The capability to explore the comprehensive material and geometric latent space of our problem empowers us to solve complex problems both rapidly and comprehensively. One example is using the network to identify optimal fabrication designs within particular constraints: if the aspect ratio needs to be limited, we can identify in seconds the material and geometric combination that provides the best expected results under the constraints. We provide an example of this in the supplementary materials. The network can also be utilized to quantify expected fabrication and experimental uncertainty by exploring the effects of nanoscale changes in the geometric parameters on the optical properties.
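
A constrained search of this kind reduces to filtering the predicted candidates and taking the best remaining figure of merit. The sketch below is illustrative only: the `Candidate` record and `best_candidate` helper are hypothetical names, the aspect ratio is assumed to be zspan/xspan, and the demo values are placeholders rather than results from this work.

```python
from typing import NamedTuple, Optional

class Candidate(NamedTuple):
    material: str
    xspan_um: float
    zspan_um: float
    fom: float  # figure of merit, higher is better

def best_candidate(candidates, max_aspect_ratio: Optional[float] = None):
    """Return the highest-FOM candidate whose zspan/xspan ratio is allowed."""
    feasible = [
        c for c in candidates
        if max_aspect_ratio is None or c.zspan_um / c.xspan_um <= max_aspect_ratio
    ]
    return max(feasible, key=lambda c: c.fom) if feasible else None

# Placeholder values for illustration; not predictions from the network.
demo = [
    Candidate("A", 1.0, 8.0, 0.90),  # tall, narrow pyramid
    Candidate("A", 2.0, 2.0, 0.70),
    Candidate("B", 4.0, 2.0, 0.80),  # shallow pyramid
]
unconstrained = best_candidate(demo)
constrained = best_candidate(demo, max_aspect_ratio=1.0)
print(unconstrained.material, constrained.material)
```

Because the spectra for every grid point are already predicted, changing the constraint only requires re-running the filter, not new optical predictions.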

Ultimately, a fundamental problem facing surrogate models is diminishing returns: to provide accurate results, more data is required, to the point where the design space has already been thoroughly explored in order to generate the neural network model. The test dataset exemplifies this. While we can still explore minutiae and small variations in geometry, a large amount of computational time was invested in generating the combined training dataset, to the point where the necessity of the neural network is diminished for these materials. Where our network differs from others that merely interpolate geometric or existing results is in the prediction of materials that have drastically different relationships between the incident wavelength, material properties, and geometry. We desire a network that can accurately extrapolate optical properties from any input material, without needing distinct and/or limiting classification methods or a large amount of new data. A particular challenge in developing the model to this end was overcoming errors in the prediction of transmissive materials. Whereas reflection is primarily a material/geometry dependent phenomenon, transmission depends on more parameters. The inclusion of separate MLPs, the permittivity inputs, and different normalization methods were all designed to improve the prediction accuracy of the model for both reflective and transmissive materials, ultimately improving the model’s connection to the relevant physics.

The ability of the model to take different material inputs and predict outside of its original training scope—not bound to classification—unlocks many possibilities. This includes predicting optical changes at different temperatures, enabling much more complex temperature dependent optimizations. While we do not demonstrate a reversible network in this work, the network shown could also serve as a basis for a reverse-network structure. Multiple problems—such as multiple material solutions for the same desired optical output—will need to be overcome to implement a successful reverse network capable of predicting across a wide array of materials. These insights will inform the next generation of models that move to more complex microstructures with more material, geometric, and thermal parameters.

Conclusion

We have demonstrated a deep neural network that can emulate finite-difference time-domain simulation outputs and can be used for the rapid thermal and optical optimization of microstructured surfaces. The network can make accurate predictions for micropyramids across a wide array of materials and can accurately extrapolate optical properties from input data that is outside of the scope of training. Further, the network design allows us to accommodate and train on any number of materials and to make predictions for the optical properties of micropyramids made of materials the model has not been trained on. We have demonstrated how our model can be used as the basis for a material search algorithm that can identify materials and geometries that best optimize a thermal environment and set of constraints. The neural network driven predictions occur at a rate 6–8 orders of magnitude faster than the simulations that were used to train the model. The network predicts the optical spectra of over 1 million simulations per minute regardless of material choice, generating output datasets in seconds that would take years to simulate in FDTD. The material search process demonstrated in this work can identify the optimal material/geometry combination across a vast latent space nearly instantaneously. Furthermore, the methodology can be translated to other geometries beyond micropyramids, enabling DL-based models that can significantly reduce the need for computationally expensive simulations for a variety of microstructure surface textures. Our methodology effectively replaces FDTD simulations for micropyramids, decreases the time required to optimize surface conditions, and allows for more complex and comprehensive studies to explore the latent space of the problem.

Methods

Data and code availability

The datasets generated and/or analyzed during the current study are available in the Optical-Prediction-Neural-Network repository, [https://github.com/jmsulliv/Optical-Prediction-Neural-Network.git].

FDTD simulations

We perform FDTD simulations in Lumerical/ANSYS’s commercially available FDTD simulation software. The unit cell shown in Fig. 1 replicates the major variables simulated: xspan, zspan, and tsub. A plane wave source with normal incidence is placed in the z-direction. For this work we do not consider the angular dependence of the optical properties or their dependence on the polarization angle. The injection wavelength spans a linearly spaced vector of 100 wavelength points that begins with λmin and ends with λmax. Perfectly matched layers (PML) are applied in the direction of the injection source to prevent boundary reflection at both the top and bottom of the domain, and periodic boundary conditions are placed perpendicular to the wave source. Frequency-domain field and power monitors are placed above and below the PML boundary layers to monitor reflection and transmission, respectively. Emissivity is computed using Kirchhoff’s law, α = ε = 1 − R − T. The monitors are solved at every frequency/wavelength point, leading to a one-to-one matching of the simulation output to the wave source.
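
As a minimal sketch of the last post-processing step, the emissivity at each wavelength point follows directly from the monitored reflectance R and transmittance T. The function name and the clamping of small numerical overshoot are assumptions made for illustration, not part of the simulation software.

```python
def emissivity(reflectance, transmittance):
    """Spectral emissivity from Kirchhoff's law: eps = alpha = 1 - R - T."""
    if len(reflectance) != len(transmittance):
        raise ValueError("R and T must be sampled at the same wavelengths")
    eps = []
    for r, t in zip(reflectance, transmittance):
        # Assumption: clamp numerical overshoot so eps stays within [0, 1].
        eps.append(min(1.0, max(0.0, 1.0 - r - t)))
    return eps

# Example spectra at three wavelength points (illustrative values only).
R = [0.10, 0.25, 0.60]
T = [0.00, 0.05, 0.40]
eps = emissivity(R, T)
print(eps)
```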

For each material dataset we generate, we specify a different λmin and λmax. The selection of these values depends on knowledge of the material data. For materials that are transmissive in the UV–VIS (PDMS, SiO2), λmin/λmax are set to 2 μm/16 μm. Most metals (Ni, Al, Ag, W, Sn, Fe) are simulated with λmin/λmax of 0.3 μm/10 μm. All other materials and some metals (VO2, Cr, Ta) are simulated with λmin/λmax of 0.3 μm/16 μm. The unseen materials (Ti, Al2O3) have λmin/λmax of 0.3 μm/16 μm and 2 μm/16 μm, respectively. Vanadium dioxide is divided into two separate materials: an insulating phase (ceramic behavior) and a metallic phase (metallic behavior)83.
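
The per-material wavelength vectors described above can be generated as in the following sketch. The dictionary lists only a subset of the materials, with ranges copied from the text; the names `WAVELENGTH_RANGE_UM` and `wavelength_vector` are hypothetical.

```python
# Ranges in micrometres, copied from the text (a subset of the materials).
WAVELENGTH_RANGE_UM = {
    "PDMS": (2.0, 16.0),
    "SiO2": (2.0, 16.0),
    "Ni": (0.3, 10.0),
    "W": (0.3, 10.0),
    "Cr": (0.3, 16.0),
    "Ti": (0.3, 16.0),     # unseen material
    "Al2O3": (2.0, 16.0),  # unseen material
}

def wavelength_vector(lam_min_um, lam_max_um, n_points=100):
    """Linearly spaced wavelengths from lam_min_um to lam_max_um, inclusive."""
    step = (lam_max_um - lam_min_um) / (n_points - 1)
    return [lam_min_um + i * step for i in range(n_points)]

vectors = {
    name: wavelength_vector(lo, hi)
    for name, (lo, hi) in WAVELENGTH_RANGE_UM.items()
}
print(len(vectors["Ni"]), vectors["Ni"][0], vectors["Ni"][-1])
```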

The value of tsub also depends on the material selection. For metals (Ni, Al, Ag, W, Sn, Fe, Ta, Cr, Ti) and SiC we simulate over a range of random tsub values confined by a minimum value of 1 μm and a maximum of 3 μm. For transmissive materials with a wide range of substrate dependent performance (VO2, SiO2, PDMS, Al2O3) we choose the minimum thickness to be 1 μm and the maximum to be 100 μm. More information on the simulation output’s variation vs. the substrate thickness for these materials is in the supplemental section.

Network Architecture and optimization

We use a deep neural network with fully connected dense layers as shown in Fig. 1. Our deep learning approach is built upon the open source Keras library in Python99. Our optimized DNN uses 8 fully connected dense layers with 400 neurons per layer, and both MLPs have 4 layers of 50 neurons each. Optimization of the hyperparameters is performed with the built-in Hyperband optimization method100. We also utilize manual cross-fold validation for limited hyperparameter optimization. For training, we utilize an MSE loss function and validate/evaluate using an MAE score based on Eqs. (1, 2) respectively, where \(Y_{i}\) is the predicted value.
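
For reference, the two metrics take their standard forms, written out below in plain Python. This is a sketch of the textbook definitions rather than the training code itself; the argument names are illustrative.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean absolute error: average of absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Illustrative emissivity values at three wavelength points.
simulated = [0.0, 0.5, 1.0]
predicted = [0.1, 0.5, 0.8]
print(mse(simulated, predicted), mae(simulated, predicted))
```

MSE penalizes large deviations more strongly, which suits training, while MAE reads directly as the average error in the predicted optical property.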

Adam is the optimization engine used for the network training. To minimize overfitting, we utilize L2 regularization in the training and validation process, in addition to utilizing early-stopping, checkpoint save, and reduce learning rate on plateau callbacks.

Datasets and normalization

All datasets used by the neural network are derived directly from FDTD simulation inputs and outputs. For each material in the training/validation/test dataset, we simulate a minimum of 1,000 individual combinations of xspan, zspan, and tsub. We generate a uniformly distributed random matrix for each of the geometric properties to use as inputs for the simulation. The simulation wavelength and n and k values are taken from each simulation and split into sets of input data, spanning a total of 8 neural inputs (n and k are converted into εreal and εim). The simulation output is 100 emissivity and 100 reflectivity points that match the simulation wavelength vector one-to-one and are divided into pairs for each λ. For this work we utilize several normalization methods depending on the input dataset. X, Z, and λ are considered uniform, and a simple linear normalization is applied to each separately using Eq. (1). For the refractive index (n) we use a log-linear normalization, using Eq. (4) with α = 0 and then Eq. (3) to bring the values between 0 and 1.
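
The conversion and the two simpler normalizations can be sketched as follows. The permittivity relation is the standard identity ε = (n + ik)²; the forms assumed here for the linear and log-linear normalizations (min-max scaling and log(x + α) followed by min-max scaling) are our reading of the description, and the function names are hypothetical.

```python
import math

def permittivity(n, k):
    """Complex permittivity from (n + ik)^2: real = n^2 - k^2, imag = 2nk."""
    return n * n - k * k, 2.0 * n * k

def minmax(values):
    """Linear normalization of a list of values onto [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def log_minmax(values, alpha=0.0):
    """Log-linear normalization: log(x + alpha), then min-max scaling.

    The log base cancels in the min-max step, so log10 is used for readability.
    """
    return minmax([math.log10(v + alpha) for v in values])

eps_dielectric = permittivity(1.5, 0.0)  # lossless dielectric
eps_metal = permittivity(0.5, 3.0)       # metal-like: k > n gives eps_real < 0
n_scaled = log_minmax([1.0, 10.0, 100.0])
print(eps_dielectric, eps_metal, n_scaled)
```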

The distributions of k, tsub, εreal, and εim pose a more significant normalization challenge. The εreal permittivity value is of particular concern due to the negative values induced by −k². The dataset distribution before and after normalization for each input is shown in the supplementary materials. A fundamental problem is that the difference between k = 1e−4 and 1e−3 is not numerically large, but it does have a large impact on the transmission behavior through the substrate. Thus, the data is grouped near 0, but we need to differentiate values in a meaningful way to distinguish the physical behavior of each material. Log normalization reduces the severity of this clustering but does not solve it. Thus, for these variables, we turn to more complex normalizations. For this work, we utilize quantile normalization with scikit-learn’s built-in quantile transformer to generate a uniform distribution of inputs for k, tsub, εreal, and εim. To ensure our values for all materials stay between 0 and 1 on all inputs, we normalize all of the simulations together. This is done to have consistent normalization, and the complete dataset (all simulations: unseen, library, and train/val/test) is included with our GitHub repository before and after normalization.
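
The effect of a quantile transform on clustered values can be seen with a small standard-library stand-in. This is not scikit-learn's implementation (which interpolates between stored quantile landmarks); it is a simplified rank-based mapping, under a hypothetical function name, meant only to show why values such as k = 1e−4 and 1e−3 become well separated.

```python
from bisect import bisect_left, bisect_right

def quantile_uniform(values):
    """Map each value to its empirical-CDF position in [0, 1]; ties share a rank."""
    ordered = sorted(values)
    n = len(ordered)
    if n < 2:
        return [0.0 for _ in values]
    out = []
    for v in values:
        first = bisect_left(ordered, v)       # index of first equal value
        last = bisect_right(ordered, v) - 1   # index of last equal value
        out.append(0.5 * (first + last) / (n - 1))
    return out

k_values = [1e-4, 1e-3, 1e-2, 1.0, 5.0]
k_quantile = quantile_uniform(k_values)

# Plain linear scaling for comparison: small k values collapse toward 0.
k_linear = [(v - min(k_values)) / (max(k_values) - min(k_values)) for v in k_values]
print(k_quantile)
print(k_linear)
```

Under linear scaling the first two entries differ by less than 0.001, whereas the rank-based mapping places them a quarter of the range apart.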

We combine 35,500 FDTD simulations for micropyramids made of 14 different materials to form our training, validation, and test dataset. We follow a 70/20/10 percentage split respectively. The test dataset is used to evaluate the performance and overfitting of the model and it is not seen by the network in the training process. The prediction accuracy of the optimized network architecture is shown for all 13 materials in the test dataset in Fig. 2. We shuffle the complete dataset every time the model is run or generated such that the training, validation, and test datasets are never identical from iteration to iteration. We utilize several other datasets in grading our model and predicting its performance. The prediction performance of the model for unseen materials is evaluated with normalized datasets constructed of 1500 titanium and alumina FDTD simulations, and we have 100 simulations for each material in the 23 unseen material library. In total, our combined dataset used for normalization contains 40,300 2-D FDTD simulations in 41 different materials. Our thermal predictions shown in Fig. 4 are generated from “gridpoint” neural inputs where the only variation between the inputs for each material is in the xspan, zspan, and tsub neurons, which follow a meshgrid of coordinates. We take the outputs for each synthetic combination of xspan, zspan, and tsub and use them to predict geometry-dependent thermal performance for each material’s generated input grid.
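
A shuffled 70/20/10 split of this kind can be sketched as below. Splitting at the level of whole simulations (rather than individual wavelength points) is an assumption made for the example, and `shuffle_split` is a hypothetical helper.

```python
import random

def shuffle_split(items, fractions=(0.7, 0.2, 0.1), seed=None):
    """Shuffle items and split them into train/validation/test lists."""
    rng = random.Random(seed)  # seed=None gives a different shuffle on every run
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_train = int(round(fractions[0] * len(shuffled)))
    n_val = int(round(fractions[1] * len(shuffled)))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, roughly fractions[2]
    return train, val, test

# Assumption: indices stand in for the 35,500 combined simulations.
train, val, test = shuffle_split(range(35500))
print(len(train), len(val), len(test))
```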

Thermal optimization

While we can choose to define thermal optimization equations for specific applications such as radiative cooling or heating, high temperature cooling, etc., for this work we use a simple relation for easy comparison in the unseen material predictions. The cost function used in this work neglects solar absorption and focuses only on maximizing thermal emission. We define the objective function with the heat transfer balance,

where Pmax,rad is the maximum amount of blackbody radiation that can be emitted by the surface, Prad is the emitted radiation, Pabs is the amount of absorbed solar radiation, Ptrans is the amount of transmitted power through the surface, and Psolar is the amount of power available to absorb from the sun. Pabs and Ptrans cannot be larger than Psolar, as defined by the integrals in Eq. (4). We do not include the effects of atmospheric emission in the heat balance equation to maintain a simple relationship between the maximum emission and achieved emission by the surface in the optimization process. The heat transfer equation shown in Eq. (5) is a cost-function equation that prioritizes cooling performance when subjected to solar radiation. Ideally, the surface should reflect all incident radiation while maximizing thermal emission. As we are only considering a single material system, we include a term that accounts for transmitted power. Some materials (such as PDMS or SiO2) are good emitters but would allow solar radiation to pass through, leading to deceptive performance unless a term that accounts for transmission is included. In our search process utilizing these equations, we are attempting to minimize the cost function.
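
The emission terms can be illustrated with the textbook Planck relation. The sketch below is not the cost function itself; it only shows, under stated assumptions, how Prad and Pmax,rad could be obtained by integrating the spectral emissivity against the blackbody emissive power over the sampled band. The function names and the trapezoidal integration are illustrative choices.

```python
import math

H = 6.62607015e-34      # Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
KB = 1.380649e-23       # Boltzmann constant, J/K
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def blackbody_emissive_power(lam_m, temp_k):
    """Hemispherical spectral emissive power of a blackbody, W m^-2 per metre."""
    x = H * C / (lam_m * KB * temp_k)
    return 2.0 * math.pi * H * C ** 2 / (lam_m ** 5 * math.expm1(x))

def radiated_power(lams_um, emissivity, temp_k):
    """Trapezoidal integral of eps(lam) * E_b(lam, T) over the sampled band."""
    lam_m = [lam * 1e-6 for lam in lams_um]
    f = [e * blackbody_emissive_power(lam, temp_k) for lam, e in zip(lam_m, emissivity)]
    return sum(
        0.5 * (f[i] + f[i + 1]) * (lam_m[i + 1] - lam_m[i])
        for i in range(len(f) - 1)
    )

# Assumption: 500 linearly spaced points from 0.3 to 16 um at a 1000 K surface.
lams = [0.3 + i * (16.0 - 0.3) / 499 for i in range(500)]
p_max_rad = radiated_power(lams, [1.0] * len(lams), 1000.0)  # ideal emitter
p_rad = radiated_power(lams, [0.5] * len(lams), 1000.0)      # grey surface, eps = 0.5
print(p_max_rad, p_rad, SIGMA * 1000.0 ** 4)
```

With unit emissivity the band integral approaches, but stays below, the Stefan-Boltzmann total σT⁴, since a small part of the emission lies beyond 16 μm.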

For this work, we present the results in terms of the figure of merit, as seen in the coordinate grid contour plots of Fig. 5a–c, which is defined by Eq. (7) as,

References

Raman, A. P., Anoma, M. A., Zhu, L., Rephaeli, E. & Fan, S. Passive radiative cooling below ambient air temperature under direct sunlight. Nature 515, 540–544 (2014).

Zhai, Y. et al. Scalable-manufactured randomized glass-polymer hybrid metamaterial for daytime radiative cooling. Science 355, 1062–1066 (2017).

Li, P. et al. Large-scale nanophotonic solar selective absorbers for high-efficiency solar thermal energy conversion. Adv. Mater. 27, 4585–4591 (2015).

Kumar, R. & Rosen, M. A. Thermal performance of integrated collector storage solar water heater with corrugated absorber surface. Appl. Therm. Eng. 30, 1764–1768 (2010).

Planck, M. The Theory of Heat Radiation (P. Blakiston’s Son & Co., 1914).

Zhu, J., Hsu, C. M., Yu, Z., Fan, S. & Cui, Y. Nanodome solar cells with efficient light management and self-cleaning. Nano Lett. 10, 1979–1984 (2010).

Zhou, L., Yu, X. & Zhu, J. Metal-core/semiconductor-shell nanocones for broadband solar absorption enhancement. Nano Lett. 14, 1093–1098 (2014).

Lee, B. J., Chen, Y. B., Han, S., Chiu, F. C. & Lee, H. J. Wavelength-selective solar thermal absorber with two-dimensional nickel gratings. J. Heat Transfer 136, 1–7 (2014).

Yin, X., Yang, R., Tan, G. & Fan, S. Terrestrial radiative cooling: Using the cold universe as a renewable and sustainable energy source. Science 370, 786–791 (2020).

Nie, X. et al. Cool white polymer coatings based on glass bubbles for buildings. Sci. Rep. 10, 1–10 (2020).

Mandal, J. et al. Hierarchically porous polymer coatings for highly efficient passive daytime radiative cooling. Science 362, 315–319 (2018).

Zhang, H. et al. Biologically inspired flexible photonic films for efficient passive radiative cooling. Proc. Natl. Acad. Sci. 117, 202001802 (2020).

Krishna, A. et al. Ultraviolet to mid-infrared emissivity control by mechanically reconfigurable graphene. Nano Lett. 19, 5086–5092 (2019).

Sala-Casanovas, M., Krishna, A., Yu, Z. & Lee, J. Bio-inspired stretchable selective emitters based on corrugated nickel for personal thermal management. Nanoscale Microscale Thermophys. Eng. 23, 173–187 (2019).

Sullivan, J., Yu, Z. & Lee, J. Optical analysis and optimization of micropyramid texture for thermal radiation control. Nanoscale Microscale Thermophys. Eng. https://doi.org/10.1080/15567265.2021.1958960 (2021).

Campbell, P. & Green, M. A. Light trapping properties of pyramidally textured surfaces. J. Appl. Phys. 62, 243–249 (1987).

Leon, J. J. D., Hiszpanski, A. M., Bond, T. C. & Kuntz, J. D. Design rules for tailoring antireflection properties of hierarchical optical structures. Adv. Opt. Mater. 5, 1–8 (2017).

Zhang, T. et al. Black silicon with self-cleaning surface prepared by wetting processes. Nanoscale Res. Lett. 8, 1–5 (2013).

Liu, Y. et al. Hierarchical robust textured structures for large scale self-cleaning black silicon solar cells. Nano Energy 3, 127–133 (2014).

Dimitrov, D. Z. & Du, C. H. Crystalline silicon solar cells with micro/nano texture. Appl. Surf. Sci. 266, 1–4 (2013).

Peter Amalathas, A. & Alkaisi, M. M. Efficient light trapping nanopyramid structures for solar cells patterned using UV nanoimprint lithography. Mater. Sci. Semicond. Process. 57, 54–58 (2017).

Mavrokefalos, A., Han, S. E., Yerci, S., Branham, M. S. & Chen, G. Efficient light trapping in inverted nanopyramid thin crystalline silicon membranes for solar cell applications. Nano Lett. 12, 2792–2796 (2012).

Rahman, T., Navarro-Cía, M. & Fobelets, K. High density micro-pyramids with silicon nanowire array for photovoltaic applications. Nanotechnology 25, 485202 (2014).

Singh, P. et al. Fabrication of vertical silicon nanowire arrays on three-dimensional micro-pyramid-based silicon substrate. J. Mater. Sci. 50, 6631–6641 (2015).

Zhu, J. et al. Optical absorption enhancement in amorphous silicon nanowire and nanocone arrays. Nano Lett. 9, 279–282 (2009).

Wei, W. R. et al. Above-11%-efficiency organic-inorganic hybrid solar cells with omnidirectional harvesting characteristics by employing hierarchical photon-trapping structures. Nano Lett. 13, 3658–3663 (2013).

Peng, Y. J., Huang, H. X. & Xie, H. Rapid fabrication of antireflective pyramid structure on polystyrene film used as protective layer of solar cell. Sol. Energy Mater. Sol. Cells 171, 98–105 (2017).

Sai, H., Yugami, H., Kanamori, Y. & Hane, K. Solar selective absorbers based on two-dimensional W surface gratings with submicron periods for high-temperature photothermal conversion. Sol. Energy Mater. Sol. Cells 79, 35–49 (2003).

Deinega, A., Valuev, I., Potapkin, B. & Lozovik, Y. Minimizing light reflection from dielectric textured surfaces. J. Opt. Soc. Am. A 28, 770 (2011).

Shore, K. A. Numerical methods in photonics, by Andrei V. Lavrinenko, Jesper Laegsgaard, Niles Gregersen, Frank Schmidt, and Thomas Sondergaard. Contemporary Physics vol. 57 (2016).

Malkiel, I. et al. Plasmonic nanostructure design and characterization via deep learning. Light Sci. Appl. 7, 1–8 (2018).

Bojarski, M. et al. End to End Learning for Self-Driving Cars. 1–9 (2016).

Hinton, G. et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 29, 16–17 (2012).

Spantideas, S. T., Giannopoulos, A. E., Kapsalis, N. C. & Capsalis, C. N. A deep learning method for modeling the magnetic signature of spacecraft equipment using multiple magnetic dipoles. IEEE Magn. Lett. 12, 1–5 (2021).

Xiong, Y., Guo, L., Tian, D., Zhang, Y. & Liu, C. Intelligent optimization strategy based on statistical machine learning for spacecraft thermal design. IEEE Access 8, 204268–204282 (2020).

Zhang, C. A Statistical Machine Learning Based Modeling and Exploration Framework for Run-Time Cross-Stack Energy Optimization (University of North Carolina at Charlotte, 2013).

Zhu, W. et al. Optimization of the thermophysical properties of the thermal barrier coating materials based on GA-SVR machine learning method: Illustrated with ZrO2-doped DyTaO4 system. Mater. Res. Express 8, 125503 (2021).

Zhang, T. et al. Machine learning and evolutionary algorithm studies of graphene metamaterials for optimized plasmon-induced transparency. Opt. Express 28, 18899 (2020).

Li, X., Shu, J., Gu, W. & Gao, L. Deep neural network for plasmonic sensor modeling. Opt. Mater. Express 9, 3857 (2019).

Baxter, J. et al. Plasmonic colours predicted by deep learning. Sci. Rep. 9, 1–19 (2019).

He, J., He, C., Zheng, C., Wang, Q. & Ye, J. Plasmonic nanoparticle simulations and inverse design using machine learning. Nanoscale 11, 17444–17459 (2019).

Sajedian, I., Kim, J. & Rho, J. Finding the optical properties of plasmonic structures by image processing using a combination of convolutional neural networks and recurrent neural networks. Microsyst. Nanoeng. 5, 1–8 (2019).

Han, S., Shin, J. H., Jung, P. H., Lee, H. & Lee, B. J. Broadband solar thermal absorber based on optical metamaterials for high-temperature applications. Adv. Opt. Mater. 4, 1265–1273 (2016).

Seo, J. et al. Design of a broadband solar thermal absorber using a deep neural network and experimental demonstration of its performance. Sci. Rep. 9, 1–9 (2019).

Nadell, C. C., Huang, B., Malof, J. M. & Padilla, W. J. Deep learning for accelerated all-dielectric metasurface design. Opt. Express 27, 27523 (2019).

Deppe, T. & Munday, J. Nighttime photovoltaic cells: Electrical power generation by optically coupling with deep space. ACS Photon. https://doi.org/10.1021/acsphotonics.9b00679 (2019).

Ma, W., Cheng, F. & Liu, Y. Deep-learning-enabled on-demand design of chiral metamaterials. ACS Nano 12, 6326–6334 (2018).

Li, Y. et al. Self-learning perfect optical chirality via a deep neural network. Phys. Rev. Lett. 123, 1–6 (2019).

Balin, I., Garmider, V., Long, Y. & Abdulhalim, I. Training artificial neural network for optimization of nanostructured VO2-based smart window performance. Opt. Express 27, A1030 (2019).

Elzouka, M., Yang, C., Albert, A., Prasher, R. S. & Lubner, S. D. Interpretable forward and inverse design of particle spectral emissivity using common machine-learning models. Cell Rep. Phys. Sci. 1, 100259 (2020).

Peurifoy, J. et al. Nanophotonic particle simulation and inverse design using artificial neural networks. arXiv 1–8 (2017). https://doi.org/10.1117/12.2289195.

An, S. et al. A Deep learning approach for objective-driven all-dielectric metasurface design. ACS Photon. 6, 3196–3207 (2019).

Gao, L., Li, X., Liu, D., Wang, L. & Yu, Z. A bidirectional deep neural network for accurate silicon color design. Adv. Mater. 31, 1–7 (2019).

Wiecha, P. R., Arbouet, A., Girard, C. & Muskens, O. L. Deep learning in nano-photonics: Inverse design and beyond. arXiv 9, 182–200 (2020).

Wu, S. et al. Machine-learning-assisted discovery of polymers with high thermal conductivity using a molecular design algorithm. npj Comput. Mater. 5, 1–11 (2019).

Wei, H., Zhao, S., Rong, Q. & Bao, H. Predicting the effective thermal conductivities of composite materials and porous media by machine learning methods. Int. J. Heat Mass Transf. 127, 908–916 (2018).

Zhan, T., Fang, L. & Xu, Y. Prediction of thermal boundary resistance by the machine learning method. Sci. Rep. 7, 1–2 (2017).

Farimani, A. B., Gomes, J. & Pande, V. S. Deep Learning the Physics of Transport Phenomena. 94305 (2017).

Park, S. J., Bae, B., Kim, J. & Swaminathan, M. Application of machine learning for optimization of 3-D integrated circuits and systems. IEEE Trans. Very Large Scale Integr. Syst. 25, 1856–1865 (2017).

Liu, Y., Dinh, N., Sato, Y. & Niceno, B. Data-driven modeling for boiling heat transfer: Using deep neural networks and high-fidelity simulation results. Appl. Therm. Eng. 144, 305–320 (2018).

Zhang, W., Wang, B. & Zhao, C. Selective thermophotovoltaic emitter with aperiodic multilayer structures designed by machine learning. ACS Appl. Energy Mater. 4, 2004–2013 (2021).

Kudyshev, Z. A., Kildishev, A. V., Shalaev, V. M. & Boltasseva, A. Machine-learning-assisted metasurface design for high-efficiency thermal emitter optimization. Appl. Phys. Rev. 7, 021407 (2020).

Karras, T. et al. Analyzing and improving the image quality of StyleGAN. arXiv 8110–8119 (2019).

García-Esteban, J. J., Bravo-Abad, J. & Cuevas, J. C. Deep learning for the modeling and inverse design of radiative heat transfer. Phys. Rev. Appl. 16, 1 (2021).

Tausendschön, J. & Radl, S. Deep neural network-based heat radiation modelling between particles and between walls and particles. Int. J. Heat Mass Transf. 177, 121557 (2021).

Kang, H. H., Kaya, M. & Hajimirza, S. A data driven artificial neural network model for predicting radiative properties of metallic packed beds. J. Quant. Spectrosc. Radiat. Transf. 226, 66–72 (2019).

Macleod, H. A. Thin-film optical filters. Thin-Film Opt. Filters https://doi.org/10.1887/0750306882 (1986).

Chattopadhyay, S. et al. Anti-reflecting and photonic nanostructures. Mater. Sci. Eng. R. Rep. 69, 1–35 (2010).

Yu, Z., Nie, X., Yuksel, A. & Lee, J. Reflectivity of solid and hollow microsphere composites and the effects of uniform and varying diameters. J. Appl. Phys. 128, 0531103 (2020).

Lecun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Zuo, C. et al. Deep Learning in Optical Metrology: A Review. Light: Science and Applications Vol. 11 (Springer, 2022).

Ma, T., Guo, Z., Lin, M. & Wang, Q. Recent trends on nanofluid heat transfer machine learning research applied to renewable energy. Renew. Sustain. Energy Rev. 138, 110494 (2021).

Song, Y., Liang, J., Lu, J. & Zhao, X. An efficient instance selection algorithm for k nearest neighbor regression. Neurocomputing 251, 26–34 (2017).

Balabin, R. M. & Lomakina, E. I. Support vector machine regression (LS-SVM): an alternative to artificial neural networks (ANNs) for the analysis of quantum chemistry data. Phys. Chem. Chem. Phys. 13, 11710–11718 (2011).

Wang, H. & Wang, L. Perfect selective metamaterial solar absorbers. Opt. Express 21, A1078 (2013).

Chan, D. L. C., Soljačić, M. & Joannopoulos, J. D. Thermal emission and design in one-dimensional periodic metallic photonic crystal slabs. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 74, 206–214 (2006).

Sai, H., Yugami, H., Akiyama, Y., Kanamori, Y. & Hane, K. Spectral control of thermal emission by periodic microstructured surfaces in the near-infrared region. J. Opt. Soc. Am. A 18, 1471 (2001).

Krishna, A. & Lee, J. Morphology-driven emissivity of microscale tree-like structures for radiative thermal management. Nanoscale Microscale Thermophys. Eng. 22, 124–136 (2018).

Palik, E. D. Handbook of Optical Constants of Solids (Academic Press, 1985).

Yang, H. U. et al. Optical dielectric function of silver. Phys. Rev. B Condens. Matter Mater. Phys. 91, 1–11 (2015).

Weber, M. J. Handbook of Optical Materials Vol. 3 (CRC Press, 2003).

Ordal, M. A., Bell, R. J., Alexander, R. W., Newquist, L. A. & Querry, M. R. Optical properties of Al, Fe, Ti, Ta, W, and Mo at submillimeter wavelengths. Appl. Opt. 27, 1203 (1988).

Wan, C. et al. On the optical properties of thin-film vanadium dioxide from the visible to the far infrared. Ann. Phys. 1900188, 1900188 (2019).

Srinivasan, A., Czapla, B., Mayo, J. & Narayanaswamy, A. Infrared dielectric function of polydimethylsiloxane and selective emission behavior. Appl. Phys. Lett. 109, 061905 (2016).

Larruquert, J. I. et al. Self-consistent optical constants of SiC thin films. J. Opt. Soc. Am. A 28, 2340 (2011).

Phillip, H. R. & Taft, E. A. Kramers-Kronig analysis of reflectance data for diamond. Phys. Rev. 136, 1445 (1964).

Kischkat, J. et al. Mid-infrared optical properties of thin films of aluminum oxide, titanium dioxide, silicon dioxide, aluminum nitride, and silicon nitride. Appl. Opt. 51, 6789–6798 (2012).

Babar, S. & Weaver, J. H. Optical constants of Cu, Ag, and Au revisited. Appl. Opt. 54, 477 (2015).

Larruquert, J. I. et al. Self-consistent optical constants of sputter-deposited B4C thin films. J. Opt. Soc. Am. A 29, 117–123 (2012).

Querry, M. R. From the Millimeter To the Ultraviolet. (1987).

Rakić, A. D., Djurišić, A. B., Elazar, J. M. & Majewski, M. L. Optical properties of metallic films for vertical-cavity optoelectronic devices. Appl. Opt. 37, 5271 (1998).

Querry, M. R. Optical Constants, Report No. AD-A158 623. Crdc CR-85034, 1–413 (1985).

Koyama, R. Y., Smith, N. V. & Spicer, W. E. Optical properties of indium. Phys. Rev. B 8, 2426–2432 (1973).

Adachi, S. Optical dispersion relations for GaP, GaAs, GaSb, InP, InAs, InSb, AlxGa1−xAs, and In1−xGaxAsyP1−y. J. Appl. Phys. 66, 6030 (1989).

Rasigni, M. & Rasigni, G. Optical constants of lithium deposits as determined from the Kramers-Kronig analysis. J. Opt. Soc. Am. 67, 54 (1977).

Hagemann, H. J., Gudat, W. & Kunz, C. Optical constants from the far infrared to the X-ray region: Mg, Al, Cu, Ag, Au, Bi, C, and Al2O3. J. Opt. Soc. Am. 65, 742–744 (1975).

Golovashkin, A. I., Leksina, I. E., Motulevich, G. P. & Shubin, A. A. The optical properties of niobium. Sov. Phys. JETP 29, 27–34 (1969).

Nemoshkalenko, V. V., Antonov, V. N., Kirillova, M. M., Krasovskii, A. E. & Nomerovannaya, L. V. The structure and energy bands and optical absorption in osmium. Sov. Phys. JETP 63, 115 (1986).

Chollet, F. Keras. https://github.com/fchollet/keras (2015).

Li, L., Jamieson, K., DeSalvo, G., Rostamizadeh, A. & Talwalkar, A. Hyperband: A novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res. 18, 1–52 (2018).

Acknowledgements

This work was funded by the Aeronautics Research Mission Directorate (ARMD) of the National Aeronautics and Space Administration (NASA) through the NASA Fellowship Activity, under contract 80NSSC19K1671. Dr. Vikram Shyam of NASA’s John H. Glenn Research Center is the technical advisor for this contract. J.S. and J.L. also acknowledge support from the National Science Foundation (No. ECCS-1935843).

Author information

Contributions

J.S., A.M., and J.L. conceived the idea. J.S. contributed to the generation of the deep-learning models, optimization, and dataset preparation; the FDTD simulations; the thermal analysis and optimization; and the generation of the material search algorithm. All authors discussed the results and revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sullivan, J., Mirhashemi, A. & Lee, J. Deep learning based analysis of microstructured materials for thermal radiation control. Sci Rep 12, 9785 (2022). https://doi.org/10.1038/s41598-022-13832-8

DOI: https://doi.org/10.1038/s41598-022-13832-8
